1. Environment preparation

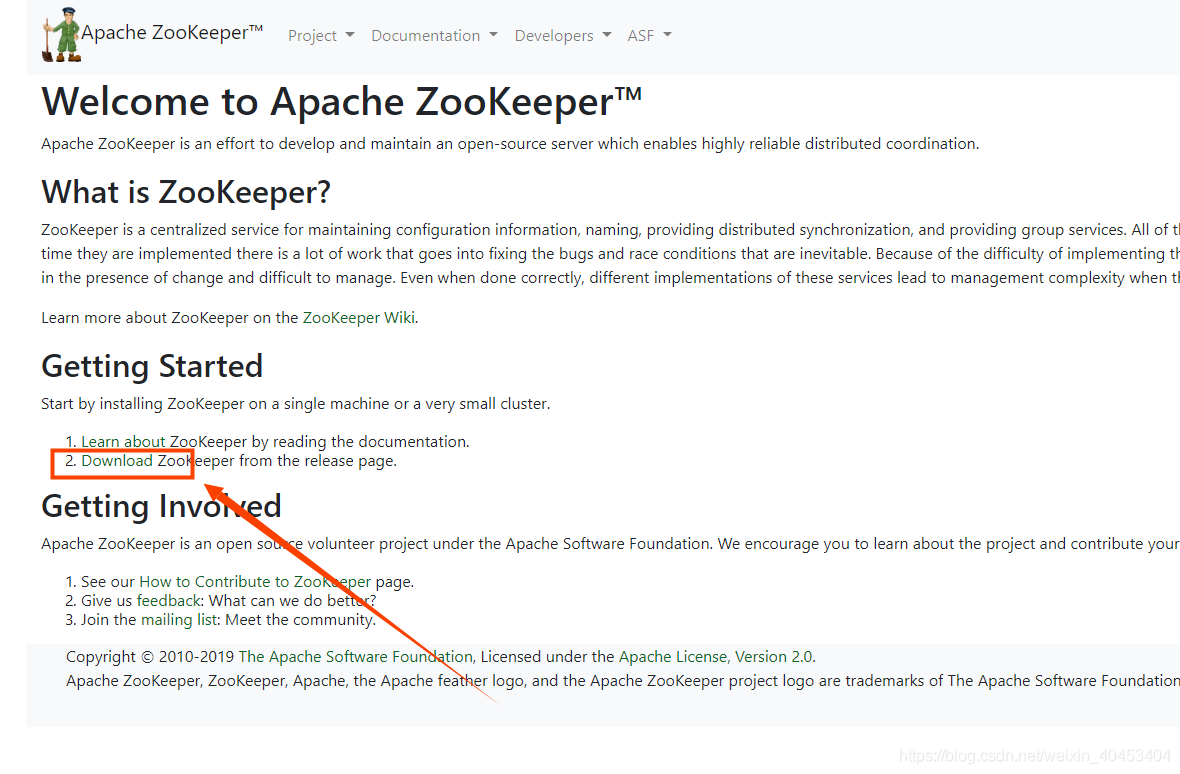

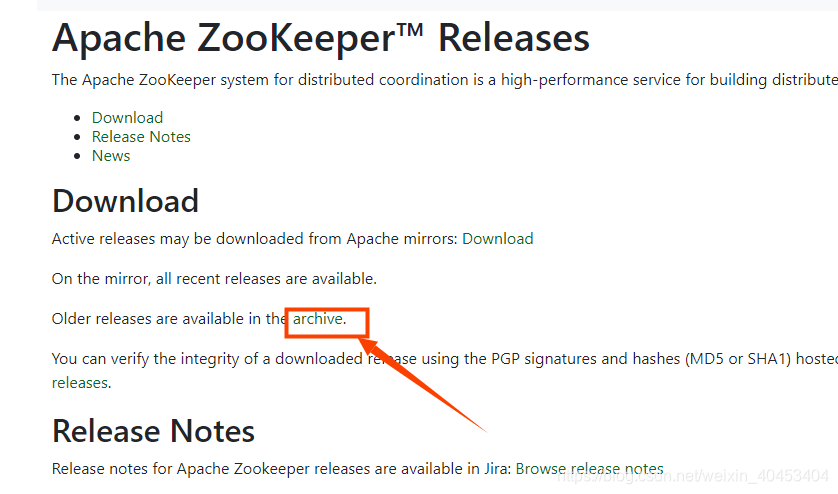

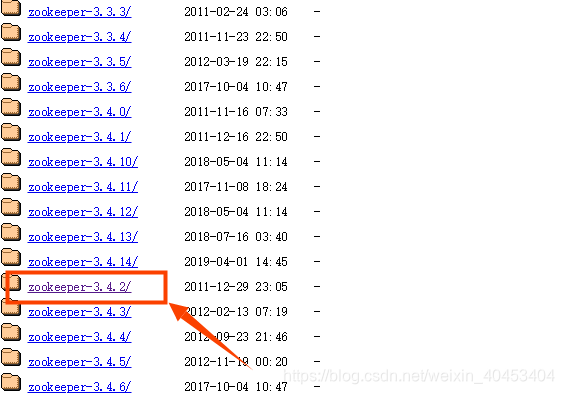

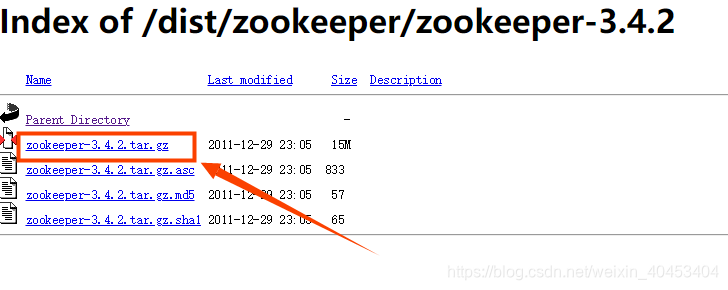

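1.1 Download ZooKeeper

According to the Hadoop 2.7.3 documentation, its high-availability setup uses zookeeper-3.4.2, so we follow the Hadoop site and install zookeeper-3.4.2 as well. Download ZooKeeper 3.4.2 from the official site.

Official site: https://zookeeper.apache.org/

Click Download.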

1.3 Install ZooKeeper

#1. Extract the ZooKeeper archive into /opt/bigdata/ (press Enter after typing the command)

[root@node1 ~]# tar -xzvf zookeeper-3.4.2.tar.gz -C /opt/bigdata/

#2. Switch to the bigdata directory

[root@node1 ~]# cd /opt/bigdata/

#3. As with the Hadoop installation, change the owner and group of the ZooKeeper install directory to hadoop:hadoop

[root@node1 bigdata]# chown -R hadoop:hadoop zookeeper-3.4.2/

#4. Set the permissions of the ZooKeeper install directory to 755

[root@node1 bigdata]# chmod -R 755 zookeeper-3.4.2/
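The extract, chown, and chmod steps above can be wrapped in one function so they run the same way on every node. This is only a sketch: `install_archive` is a hypothetical helper name, not something shipped with ZooKeeper, and it assumes the archive has a single top-level directory such as `zookeeper-3.4.2`.

```shell
#!/usr/bin/env bash
# Sketch: unpack an archive into a target directory and normalise permissions.
# install_archive is a hypothetical helper, not part of ZooKeeper.
install_archive() {
    local archive="$1" dest="$2" owner="${3:-}"
    mkdir -p "$dest" || return 1
    # Name of the top-level directory inside the archive, e.g. zookeeper-3.4.2
    local top
    top="$(tar -tzf "$archive" | head -n 1 | cut -d/ -f1)" || return 1
    tar -xzf "$archive" -C "$dest" || return 1
    # Change ownership only when an owner was passed (this needs root)
    if [ -n "$owner" ]; then
        chown -R "$owner" "$dest/$top" || return 1
    fi
    chmod -R 755 "$dest/$top" || return 1
    # Print the resulting install directory
    echo "$dest/$top"
}
```

Run as root, a call such as `install_archive zookeeper-3.4.2.tar.gz /opt/bigdata hadoop:hadoop` would correspond to steps #1 to #4.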

1.4 Configure the ZooKeeper environment variables

#1. Switch to the hadoop user

[root@node1 bigdata]# su - hadoop

Last login: Thu Jul 18 16:07:39 CST 2019 on pts/0

[hadoop@node1 ~]$ cd /opt/bigdata/zookeeper-3.4.2/

[hadoop@node1 zookeeper-3.4.2]$ cd ..

[hadoop@node1 bigdata]$ cd ~

#2. Edit the hadoop user's environment file

[hadoop@node1 ~]$ vi .bash_profile

# Get the aliases and functions

if [ -f ~/.bashrc ]; then

. ~/.bashrc

fi

# User specific environment and startup programs

JAVA_HOME=/usr/java/jdk1.8.0_211-amd64

HADOOP_HOME=/opt/bigdata/hadoop-2.7.3

SPARK_HOME=/opt/spark-2.4.3-bin-hadoop2.7

M2_HOME=/opt/apache-maven-3.0.5

#3. Add the ZooKeeper environment variable ZOOKEEPER_HOME

ZOOKEEPER_HOME=/opt/bigdata/zookeeper-3.4.2/

PATH=$PATH:$HOME/bin:$JAVA_HOME/bin:$HADOOP_HOME/bin:$HADOOP_HOME/sbin:$M2_HOME/bin

#4. Add $ZOOKEEPER_HOME/bin to PATH

PATH=$PATH:$SPARK_HOME/bin:$SPARK_HOME/sbin:$ZOOKEEPER_HOME/bin

export JAVA_HOME

export HADOOP_HOME

export M2_HOME

export SPARK_HOME

export HADOOP_CONF_DIR=$HADOOP_HOME/etc/hadoop

export HDFS_CONF_DIR=$HADOOP_HOME/etc/hadoop

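#5. Export the ZooKeeper environment variable

export ZOOKEEPER_HOME

#6. Save the changes

:wq! # then press Enter

#7. Reload the environment variables

[hadoop@node1 ~]$ source .bash_profile

#8. Type zk and press the Tab key

[hadoop@node1 ~]$ zk

# Completions like the following show that the ZooKeeper configuration is in place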

zkCleanup.sh zkCli.cmd zkCli.sh zkEnv.cmd zkEnv.sh zkServer.cmd zkServer.sh

[hadoop@node1 ~]$ zk
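Editing `.bash_profile` by hand on three machines is easy to get wrong, so the same change can be scripted. The sketch below appends the ZooKeeper variables only if they are not already there; `add_zk_env` is a hypothetical helper name, and the three lines it writes are a condensed form of the edits shown above, not a copy of the author's file.

```shell
#!/usr/bin/env bash
# Sketch: append ZOOKEEPER_HOME to a profile file, at most once.
# add_zk_env is a hypothetical helper, not part of ZooKeeper.
add_zk_env() {
    local profile="$1" zk_home="$2"
    # Do nothing if the profile already defines ZOOKEEPER_HOME
    if grep -q '^ZOOKEEPER_HOME=' "$profile" 2>/dev/null; then
        return 0
    fi
    {
        echo "ZOOKEEPER_HOME=$zk_home"
        # Single quotes keep the variables unexpanded in the profile
        echo 'PATH=$PATH:$ZOOKEEPER_HOME/bin'
        echo 'export ZOOKEEPER_HOME PATH'
    } >> "$profile"
}
```

For example, `add_zk_env ~/.bash_profile /opt/bigdata/zookeeper-3.4.2` followed by `source ~/.bash_profile` should make the `zk` Tab completion work as described.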

1.5 Edit the ZooKeeper cluster configuration file

Change to the conf directory under the ZooKeeper install directory and copy zoo_sample.cfg to zoo.cfg.

[hadoop@node1 ~]$ cd /opt/bigdata/zookeeper-3.4.2/conf/

[hadoop@node1 conf]$ ll

total 12

-rwxr-xr-x 1 hadoop hadoop 535 Dec 22 2011 configuration.xsl

-rwxr-xr-x 1 hadoop hadoop 2161 Dec 22 2011 log4j.properties

-rwxr-xr-x 1 hadoop hadoop 808 Dec 22 2011 zoo_sample.cfg

#1. Copy the template zoo_sample.cfg to the real configuration file zoo.cfg

[hadoop@node1 conf]$ cp zoo_sample.cfg zoo.cfg

[hadoop@node1 conf]$ ll

total 16

-rwxr-xr-x 1 hadoop hadoop 535 Dec 22 2011 configuration.xsl

-rwxr-xr-x 1 hadoop hadoop 2161 Dec 22 2011 log4j.properties

-rwxr-xr-x 1 hadoop hadoop 808 Jul 19 11:20 zoo.cfg

-rwxr-xr-x 1 hadoop hadoop 808 Dec 22 2011 zoo_sample.cfg

[hadoop@node1 conf]$

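In zoo.cfg, set dataDir to dataDir=/var/lib/zookeeper and append the following lines to the end of the file:

server.1=node1:2888:3888

server.2=node2:2888:3888

server.3=node3:2888:3888

Remember to save the file after editing. The complete zoo.cfg then looks like this: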

# The number of milliseconds of each tick

tickTime=2000

# The number of ticks that the initial

# synchronization phase can take

initLimit=10

# The number of ticks that can pass between

# sending a request and getting an acknowledgement

syncLimit=5

# the directory where the snapshot is stored.

# do not use /tmp for storage, /tmp here is just

# example sakes.

dataDir=/var/lib/zookeeper

# the port at which the clients will connect

clientPort=2181

# the maximum number of client connections.

# increase this if you need to handle more clients

#maxClientCnxns=60

#

# Be sure to read the maintenance section of the

# administrator guide before turning on autopurge.

#

# http://zookeeper.apache.org/doc/current/zookeeperAdmin.html#sc_maintenance

#

# The number of snapshots to retain in dataDir

#autopurge.snapRetainCount=3

# Purge task interval in hours

# Set to "0" to disable auto purge feature

#autopurge.purgeInterval=1

server.1=node1:2888:3888

server.2=node2:2888:3888

server.3=node3:2888:3888

# Remember to save the file after editing
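The two changes to zoo.cfg (a new dataDir and one server.N line per node) can also be generated instead of typed. The following is a sketch under the assumptions of this article: `make_zoo_cfg` is a hypothetical helper, hosts are numbered in the order given, and 2888 and 3888 are the two ports used in the server lines above (in ZooKeeper's `server.N=host:port:port` format the first is for follower-to-leader traffic and the second for leader election).

```shell
#!/usr/bin/env bash
# Sketch: build zoo.cfg from zoo_sample.cfg.
# make_zoo_cfg is a hypothetical helper, not part of ZooKeeper.
make_zoo_cfg() {
    local sample="$1" out="$2" data_dir="$3"
    shift 3
    # Rewrite the dataDir line and keep everything else from the sample
    sed "s|^dataDir=.*|dataDir=$data_dir|" "$sample" > "$out" || return 1
    # Append one server.N line per host, numbered from 1 in argument order
    local id=1 host
    for host in "$@"; do
        echo "server.$id=$host:2888:3888" >> "$out"
        id=$((id + 1))
    done
}
```

Calling `make_zoo_cfg zoo_sample.cfg zoo.cfg /var/lib/zookeeper node1 node2 node3` in the conf directory should produce a file equivalent to the listing above.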

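1.6 Create the myid file

On node1, node2, and node3, create a myid file in /var/lib/zookeeper (the directory configured as dataDir). The files contain 1, 2, and 3 respectively; each number must match the N of that host's server.N line in zoo.cfg. The hadoop user has no write permission on /var/lib, so switch to the root user (which has full privileges) to create the zookeeper directory.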

[hadoop@node1 conf]$ vi zoo.cfg

#1. Switch to the root user

[hadoop@node1 conf]$ su - root

Password:

Last login: Fri Jul 19 10:53:59 CST 2019 from 192.168.200.1 on pts/0

#2. Create the zookeeper directory

[root@node1 ~]# mkdir -p /var/lib/zookeeper

#3. Enter /var/lib/zookeeper/

[root@node1 ~]# cd /var/lib/zookeeper/

You have new mail in /var/spool/mail/root

#4. Create the myid file

[root@node1 zookeeper]# touch myid

#5. Edit myid and enter 1. This is the myid for node1; on node2 the content is 2 and on node3 it is 3

[root@node1 zookeeper]# vi myid

You have new mail in /var/spool/mail/root

#6. Check that myid contains 1

[root@node1 zookeeper]# cat myid

1
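Because the myid value has to agree with the server.N lines in zoo.cfg, it can be looked up from that file rather than typed on each node. A minimal sketch, assuming the host names in zoo.cfg are plain names like node1 (no characters that are special in a regular expression); `myid_for_host` is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# Sketch: find the id that zoo.cfg assigns to a host.
# myid_for_host is a hypothetical helper, not part of ZooKeeper.
myid_for_host() {
    local cfg="$1" host="$2"
    # For a line like server.2=node2:2888:3888 print just the 2
    sed -n "s/^server\.\([0-9][0-9]*\)=$host:.*/\1/p" "$cfg" | head -n 1
}
```

On a node whose `hostname` output matches its entry in zoo.cfg, something like `myid_for_host /opt/bigdata/zookeeper-3.4.2/conf/zoo.cfg "$(hostname)"` prints the value to store in /var/lib/zookeeper/myid. If it prints nothing, the host is not listed and the file should not be written.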

You have new mail in /var/spool/mail/root

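1.7 Change the ownership and permissions of the myid directory

#1. After the configuration, remember to change the owner, group, and permissions of the zookeeper directory

[root@node1 zookeeper]# cd ..

You have new mail in /var/spool/mail/root

#2. Change the owner and group of the zookeeper directory

[root@node1 lib]# chown -R hadoop:hadoop zookeeper/

#3. Set the permissions of the zookeeper directory to 755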

[root@node1 lib]# chmod -R 755 zookeeper/

[root@node1 lib]#

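2. Copy ZooKeeper to node2 and node3

#1. Copy the zookeeper directory under /var/lib to /var/lib on node2 and node3 (the copied myid still contains 1; it is corrected in section 4)

[root@node1 lib]# scp -r zookeeper node2:$PWD

[root@node1 lib]# scp -r zookeeper node3:$PWD

#2. Copy the ZooKeeper install directory to /opt/bigdata on node2 and node3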

[root@node1 lib]# scp -r /opt/bigdata/zookeeper-3.4.2/ node2:/opt/bigdata/

[root@node1 lib]# scp -r /opt/bigdata/zookeeper-3.4.2/ node3:/opt/bigdata/
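With more than a couple of nodes, the repeated scp commands are usually written as a loop. The sketch below does that and adds a dry-run mode that only prints the commands; `copy_to_nodes` and the `DRY_RUN` variable are hypothetical names introduced for illustration.

```shell
#!/usr/bin/env bash
# Sketch: copy a directory to the same path on several nodes.
# copy_to_nodes and DRY_RUN are hypothetical, not part of ZooKeeper or scp.
copy_to_nodes() {
    local src="$1" dest="$2"
    shift 2
    local node
    for node in "$@"; do
        if [ "${DRY_RUN:-0}" = "1" ]; then
            # Dry run: show the command without contacting the node
            echo "scp -r $src $node:$dest"
        else
            scp -r "$src" "$node:$dest" || return 1
        fi
    done
}
```

Running `DRY_RUN=1 copy_to_nodes /opt/bigdata/zookeeper-3.4.2/ /opt/bigdata/ node2 node3` first is a cheap way to check the paths before anything is copied.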

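3. Fix the permissions of the ZooKeeper directories on node2 and node3

Fix the ZooKeeper directory permissions on node2

#1. Change the owner, group, and permissions of the directory that holds myid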

[root@node2 lib]# cd ~

[root@node2 ~]# chown -R hadoop:hadoop /var/lib/zookeeper

[root@node2 ~]# chmod -R 755 /var/lib/zookeeper

#2. Change the owner, group, and permissions of the ZooKeeper install directory

[root@node2 ~]# chown -R hadoop:hadoop /opt/bigdata/zookeeper-3.4.2/

You have new mail in /var/spool/mail/root

[root@node2 ~]# chmod -R 755 /opt/bigdata/zookeeper-3.4.2/

[root@node2 ~]#

Fix the ZooKeeper directory permissions on node3

#1. Change the owner, group, and permissions of the directory that holds myid

[root@node3 bigdata]# cd ~

You have new mail in /var/spool/mail/root

[root@node3 ~]# chown -R hadoop:hadoop /var/lib/zookeeper

[root@node3 ~]# chmod -R 755 /var/lib/zookeeper

#2. Change the owner, group, and permissions of the ZooKeeper install directory

[root@node3 ~]# chown -R hadoop:hadoop /opt/bigdata/zookeeper-3.4.2/

You have new mail in /var/spool/mail/root

[root@node3 ~]# chmod -R 755 /opt/bigdata/zookeeper-3.4.2/

[root@node3 ~]#

4. Edit the myid file on node2 and node3

On node2, change the content of myid to 2:

[root@node2 ~]# vi /var/lib/zookeeper/myid

You have new mail in /var/spool/mail/root

[root@node2 ~]# cat /var/lib/zookeeper/myid

2

[root@node2 ~]#

On node3, change the content of myid to 3:

[root@node3 ~]# vi /var/lib/zookeeper/myid

You have new mail in /var/spool/mail/root

[root@node3 ~]# cat /var/lib/zookeeper/myid

3

[root@node3 ~]#

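5. Configure the ZooKeeper environment variables on node2 and node3

From node1, copy the hadoop user's environment file directly to the hadoop user's home directory on node2 and node3.

#1. If you are logged in as root, switch to the hadoop user first. If you are already the hadoop user, change to its home directory before copying the environment file.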

[root@node1 lib]# su - hadoop

Last login: Fri Jul 19 11:08:44 CST 2019 on pts/0

[hadoop@node1 ~]$ scp .bash_profile node2:$PWD

.bash_profile 100% 681 64.8KB/s 00:00

[hadoop@node1 ~]$ scp .bash_profile node3:$PWD

.bash_profile 100% 681 156.8KB/s 00:00

[hadoop@node1 ~]$

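5.1 Apply the environment variables on node2 and node3

Apply the hadoop user's environment variables on node2

# Note: switch to the hadoop user first

#1. Reload the environment variables

[hadoop@node2 ~]$ source .bash_profile

#2. Type zk and press the Tab key

[hadoop@node2 ~]$ zk

#3. Completions like the following commands and shell scripts show that the ZooKeeper environment variables are configured correctly.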

zkCleanup.sh zkCli.sh zkEnv.sh zkServer.sh

zkCli.cmd zkEnv.cmd zkServer.cmd

[hadoop@node2 ~]$ zk

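Apply the hadoop user's environment variables on node3

# Note: switch to the hadoop user first

[root@node3 bigdata]# su - hadoop

Last login: Thu Jul 18 15:37:50 CST 2019 on :0

#1. Reload the environment variables

[hadoop@node3 ~]$ source .bash_profile

#2. Type zk and press the Tab key

[hadoop@node3 ~]$ zk

#3. Completions like the following commands and shell scripts show that the ZooKeeper environment variables are configured correctly.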

zkCleanup.sh zkCli.sh zkEnv.sh zkServer.sh

zkCli.cmd zkEnv.cmd zkServer.cmd

[hadoop@node3 ~]$ zk

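6. Start the ZooKeeper cluster

6.1 Start the ZooKeeper cluster

ZooKeeper has to be started by hand on each of the three machines in turn. Before starting, switch to the hadoop user on all three machines.

Start ZooKeeper on node1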

[hadoop@node1 ~]$ zkServer.sh start

JMX enabled by default

Using config: /opt/bigdata/zookeeper-3.4.2/bin/../conf/zoo.cfg

Starting zookeeper ... STARTED

[hadoop@node1 ~]$

Start ZooKeeper on node2

[hadoop@node2 ~]$ zkServer.sh start

JMX enabled by default

Using config: /opt/bigdata/zookeeper-3.4.2/bin/../conf/zoo.cfg

Starting zookeeper ... STARTED

[hadoop@node2 ~]$

Start ZooKeeper on node3

[hadoop@node3 ~]$ zkServer.sh start

JMX enabled by default

Using config: /opt/bigdata/zookeeper-3.4.2/bin/../conf/zoo.cfg

Starting zookeeper ... STARTED

[hadoop@node3 ~]$

6.2 Check the ZooKeeper cluster status

Run zkServer.sh status on each of the three nodes to check its state.

On node1:

[hadoop@node1 bin]$ zkServer.sh status

JMX enabled by default

Using config: /opt/bigdata/zookeeper-3.4.2/bin/../conf/zoo.cfg

Mode: follower

[hadoop@node1 bin]$

On node2:

[hadoop@node2 bin]$ zkServer.sh status

JMX enabled by default

Using config: /opt/bigdata/zookeeper-3.4.2/bin/../conf/zoo.cfg

Mode: follower

[hadoop@node2 bin]$

On node3:

[hadoop@node3 bin]$ zkServer.sh status

JMX enabled by default

Using config: /opt/bigdata/zookeeper-3.4.2/bin/../conf/zoo.cfg

Mode: leader

[hadoop@node3 bin]$
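In the output above, two nodes report `Mode: follower` and one reports `Mode: leader`, which is what a working three-node ensemble looks like. That check can be automated by counting the Mode lines. A minimal sketch, where `check_quorum` is a hypothetical helper that reads the collected status output on standard input:

```shell
#!/usr/bin/env bash
# Sketch: count leaders and followers in collected "zkServer.sh status" output.
# check_quorum is a hypothetical helper, not part of ZooKeeper.
check_quorum() {
    local leaders=0 followers=0 line
    while IFS= read -r line; do
        case "$line" in
            "Mode: leader")   leaders=$((leaders + 1)) ;;
            "Mode: follower") followers=$((followers + 1)) ;;
        esac
    done
    echo "leaders=$leaders followers=$followers"
    # Succeed only when exactly one node reports itself as leader
    [ "$leaders" -eq 1 ]
}
```

One way to feed it is to save the three status outputs to a file and run `check_quorum < status.txt`; a non-zero exit status then signals that no single leader was found.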

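This completes the ZooKeeper cluster installation.

6.3 Problems encountered while installing ZooKeeper

We used zookeeper-3.4.2 because the official Hadoop 2.7.3 documentation does, but that release is fairly old. After starting ZooKeeper, jps or ps -ef|grep zookeeper shows the main ZooKeeper process running normally, yet zkServer.sh status reports an error, and it does so no matter how many times ZooKeeper is restarted.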

[hadoop@node1 bin]$ zkServer.sh status

JMX enabled by default

Using config: /opt/bigdata/zookeeper-3.4.2/bin/../conf/zoo.cfg

Error contacting service. It is probably not running.

[hadoop@node2 bin]$ zkServer.sh status

JMX enabled by default

Using config: /opt/bigdata/zookeeper-3.4.2/bin/../conf/zoo.cfg

Error contacting service. It is probably not running.

[hadoop@node3 bin]$ zkServer.sh status

JMX enabled by default

Using config: /opt/bigdata/zookeeper-3.4.2/bin/../conf/zoo.cfg

Error contacting service. It is probably not running.

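Analysis points to two main causes:

1. The nc tool is not installed on CentOS 7.

2. The nc command in the ZooKeeper startup script uses an option that is invalid on some Linux distributions, so the status query fails or returns an empty status.

Fix:

1. Install nc on each of the three nodes with yum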

yum install nc -y

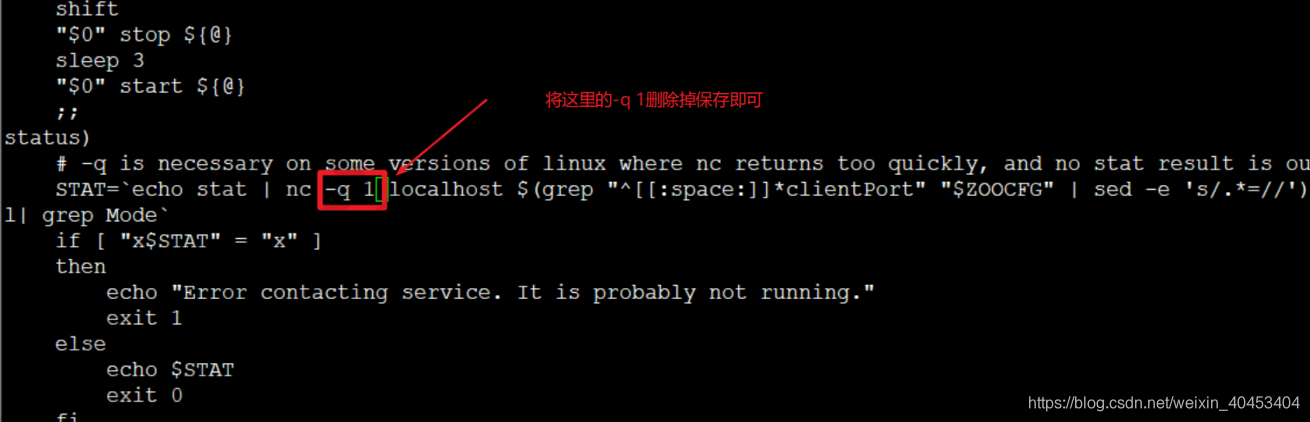

2. Edit the zkServer.sh script in the bin directory under the ZooKeeper install directory

After the change, zkServer.sh status shows the ZooKeeper status.
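To tell a problem with nc apart from a problem with the server, the server can be queried directly. ZooKeeper answers the four-letter command `stat` on its client port (2181 in this setup) with text that includes a `Mode:` line. The sketch below sends that command with nc and extracts the mode; `mode_from_stat` and `zk_mode` are hypothetical helper names, and whether zkServer.sh in this release performs the same query internally is an assumption, not something taken from the script.

```shell
#!/usr/bin/env bash
# Sketch: ask a ZooKeeper server for its role without going through zkServer.sh.
# mode_from_stat and zk_mode are hypothetical helpers, not part of ZooKeeper.

# Extract the role (leader, follower, standalone) from "stat" output on stdin
mode_from_stat() {
    sed -n 's/^Mode: *//p' | head -n 1
}

# Send the four-letter command "stat" to host:port; requires nc on this machine
zk_mode() {
    local host="${1:-localhost}" port="${2:-2181}"
    if ! command -v nc >/dev/null 2>&1; then
        echo "nc not found; install it first" >&2
        return 127
    fi
    echo stat | nc "$host" "$port" 2>/dev/null | mode_from_stat
}
```

If `zk_mode node1` prints `follower` or `leader` while zkServer.sh status still reports an error, the server is fine and the status script is the likely culprit. Some nc variants behave differently when standard input closes, so an empty result here is not conclusive on its own.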