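Anyone learning web scraping has probably worked through the scraping tutorial by Cui (崔大佬). In Chapter 6, the example that uses Ajax to scrape street-snap (街拍) images from Toutiao no longer runs as written, because the site has changed since the book came out. As a scraping beginner, I spent about two hours working through this part on my own, adjusted the code, and finally **got it to scrape successfully**. Here I share the pitfalls I fell into along the way.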

First, import the modules:

```python
import os
import re
from hashlib import md5
from time import sleep
from urllib import parse
from urllib.parse import urlencode

import requests
```

A scraper works in three steps: fetch the page, parse the page, store the data.
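As a rough sketch of how those three steps fit together, the control flow looks like this. The names `run_pipeline`, `fetch`, `parse_page` and `store` are hypothetical and not part of the original code; the demo feeds in fake pages instead of real requests.

```python
from typing import Callable, Iterable, List, Optional


def run_pipeline(
    pages: Iterable[int],
    fetch: Callable[[int], Optional[str]],
    parse_page: Callable[[str], List[str]],
    store: Callable[[str], None],
) -> int:
    """Fetch each page, parse items out of it, and store each item.

    Pages whose fetch returns None are skipped. Returns the number of items stored.
    """
    stored = 0
    for page in pages:
        html = fetch(page)              # step 1: fetch the page
        if html is None:
            continue                    # request failed; move on to the next page
        for item in parse_page(html):   # step 2: parse the page
            store(item)                 # step 3: store the data
            stored += 1
    return stored


# Demo with fake pages: page 1 "fails" because the dict has no key 1
saved = []
fake_pages = {0: "a,b", 2: "c"}
n = run_pipeline(range(3), fake_pages.get, lambda html: html.split(","), saved.append)
print(n, saved)  # 3 ['a', 'b', 'c']
```

In the real scraper, `get_page`, `parse_one_page` and `save_image` play the three roles.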

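First, set up the request headers used by the page-fetching functions. Note that the headers must include a Cookie; I won't go into how to obtain it here. `headers` is used for the search page on so.toutiao.com, and `headers2` for article pages on www.toutiao.com.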

```python
# Headers for the search-results page (so.toutiao.com).
# Use the Cookie from your own browser session.
headers = {
    'Host': 'so.toutiao.com',
    'Referer': 'https://so.toutiao.com/search?keyword=%20%E8%A1%97%E6%8B%8D&pd=synthesis&source=input&dvpf=pc&aid=4916&page_num=0',
    'Cookie': 'ttwid=1|KviVmcSjms80bH3CAgjoWLkug459q7mO4n8oe79jffQ|1634094110|72c4e7c5de9eddb603ee7144203a64762a6e383f21d66b619e50cb9a4740e7c6',
    'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64; rv:93.0) Gecko/20100101 Firefox/93.0'
}

# Headers for the article pages (www.toutiao.com)
headers2 = {
    'Host': 'www.toutiao.com',
    'Cookie': 'ttwid=1|KviVmcSjms80bH3CAgjoWLkug459q7mO4n8oe79jffQ|1634096506|eaa9c570e34a6c383c184a4b0855b9d13833c4414ded5a4f82227b3f8bc3f8ea',
    'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64; rv:93.0) Gecko/20100101 Firefox/93.0'
}
```
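The two header dicts share the same User-Agent and differ only in Host, Cookie and (for the search page) Referer. If you want to avoid duplicating them, a small helper can build both. `make_headers` is a hypothetical name, and the cookie strings below are placeholders to be replaced with your own values.

```python
from typing import Dict, Optional

USER_AGENT = ('Mozilla/5.0 (Windows NT 10.0; Win64; x64; rv:93.0) '
              'Gecko/20100101 Firefox/93.0')


def make_headers(host: str, cookie: str, referer: Optional[str] = None) -> Dict[str, str]:
    """Build request headers for one Toutiao host (hypothetical helper)."""
    result = {'Host': host, 'Cookie': cookie, 'User-Agent': USER_AGENT}
    if referer is not None:
        result['Referer'] = referer  # only the search page sends a Referer
    return result


# Placeholder cookies: paste the ttwid value from your own browser session
search_headers = make_headers('so.toutiao.com', 'ttwid=<your ttwid>',
                              referer='https://so.toutiao.com/')
article_headers = make_headers('www.toutiao.com', 'ttwid=<your ttwid>')
```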

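Next, search for 街拍 (street snaps), just as if you had typed it into the search box. The 2-second `sleep` after each request is there to avoid getting blocked by the site.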

```python
def get_page(page):
    """Search Toutiao for 街拍 and return the HTML of results page `page`."""
    params = {
        'keyword': '街拍',
        'pd': 'synthesis',
        'source': 'input',
        'dvpf': 'pc',
        'aid': '4916',
        'page_num': page,
    }
    url = 'https://so.toutiao.com/search?' + urlencode(params)
    try:
        response = requests.get(url, headers=headers, timeout=10)
        sleep(2)  # pause between requests to avoid getting blocked
        if response.status_code == 200:
            return response.text
    except requests.RequestException as e:
        print('Error', e.args)
    return None  # request failed or returned a non-200 status
```
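`urlencode` percent-encodes the Chinese keyword as UTF-8, which is why 街拍 appears as `%E8%A1%97%E6%8B%8D` in the Referer header. Pulling the URL construction into its own function (the name `build_search_url` is hypothetical) lets you check it without sending any request:

```python
from urllib.parse import parse_qs, urlencode, urlparse


def build_search_url(page: int, keyword: str = '街拍') -> str:
    """Return the so.toutiao.com search URL that get_page() requests."""
    params = {
        'keyword': keyword,
        'pd': 'synthesis',
        'source': 'input',
        'dvpf': 'pc',
        'aid': '4916',
        'page_num': page,
    }
    return 'https://so.toutiao.com/search?' + urlencode(params)


url = build_search_url(0)
print(url)
# https://so.toutiao.com/search?keyword=%E8%A1%97%E6%8B%8D&pd=synthesis&source=input&dvpf=pc&aid=4916&page_num=0

# parse_qs decodes the query string back, confirming the round trip
print(parse_qs(urlparse(url).query)['keyword'])  # ['街拍']
```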

Then, extract all the article links from one search-results page:

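```python
def parse_one_page(html):
    """Extract every article link from one search-results page."""
    if not html:
        return []  # get_page() failed, so there is nothing to parse
    pattern = re.compile(r'"title":.*?"article_url":"(.*?)"', re.S)
    return pattern.findall(html)
```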
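To see what the non-greedy pattern captures, you can run it against a made-up snippet (the JSON-like text below is hypothetical, not real Toutiao output). `.*?` stops at the first `"article_url":"` after each `"title":`, and `(.*?)"` stops at the next quote. The `re.S` flag lets `.` match newlines; without it, a title and URL split across lines are missed.

```python
import re

pattern = re.compile(r'"title":.*?"article_url":"(.*?)"', re.S)

# Hypothetical fragment of a search-results page; note the newline in the first record
sample = (
    '{"title":"Street snap 1","source":"x",\n'
    '"article_url":"http://toutiao.com/group/111/"},'
    '{"title":"Street snap 2","article_url":"http://toutiao.com/group/222/"}'
)

links = pattern.findall(sample)
print(links)  # ['http://toutiao.com/group/111/', 'http://toutiao.com/group/222/']

# Without re.S the first record cannot match across the newline
print(re.findall(r'"title":.*?"article_url":"(.*?)"', sample))
# ['http://toutiao.com/group/222/']
```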

Next, open one of those article links and fetch the article page:

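```python
def in_article(url):
    """Open one article page and return its HTML."""
    try:
        response = requests.get(url, headers=headers2, timeout=10)
        sleep(2)  # pause between requests to avoid getting blocked
        if response.status_code == 200:
            return response.text
    except requests.RequestException as e:
        print('Error', e.args)
    return None  # request failed or returned a non-200 status
```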
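`in_article` gives up after a single failed request. If you hit intermittent failures, one option is a retry loop with a growing delay. This is a swapped-in technique, not part of the original code, and `fetch_with_retry` is a hypothetical name. It takes the fetch function as a parameter, so it can wrap `in_article` or `get_page` unchanged:

```python
import time
from typing import Callable, Optional


def fetch_with_retry(fetch: Callable[[str], Optional[str]], url: str,
                     attempts: int = 3, base_delay: float = 2.0) -> Optional[str]:
    """Call fetch(url) up to `attempts` times, doubling the wait after each failure.

    A result of None counts as a failure, matching what in_article returns on error.
    """
    delay = base_delay
    for attempt in range(1, attempts + 1):
        result = fetch(url)
        if result is not None:
            return result
        if attempt < attempts:
            time.sleep(delay)  # back off before the next try
            delay *= 2
    return None
```

In the main loop, `article_html = fetch_with_retry(in_article, true_url)` would replace the direct call. Note that `in_article` already sleeps 2 seconds per request, so the total wait grows quickly.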

After that, extract the image captions and image URLs from the article:

```python
def get_image_url(html):
    """Extract the image captions and download URLs from an article page."""
    if not html:
        print('No match found!!!!!!')
        return []
    # alt text that precedes an image block; save_image() uses the first one
    # as the folder name
    pattern1 = re.compile(r'alt="(.*?)" inline="0".*?<div class="pgc-img">.*?src=', re.S)
    titles = pattern1.findall(html)
    # src attribute of every image inside a <div class="pgc-img">
    pattern2 = re.compile(r'<div class="pgc-img">.*?src="(.*?)"', re.S)
    image_urls = pattern2.findall(html)
    return [{'title': titles, 'image_url': image_urls}]
```
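Here is how the two patterns behave on a hypothetical fragment of article HTML. The markup below is invented to mirror the patterns, not copied from a real Toutiao page. `pattern1` captures the `alt` text that precedes a `pgc-img` block, and `pattern2` captures the `src` of each image:

```python
import re

pattern1 = re.compile(r'alt="(.*?)" inline="0".*?<div class="pgc-img">.*?src=', re.S)
pattern2 = re.compile(r'<div class="pgc-img">.*?src="(.*?)"', re.S)

# Hypothetical article markup, invented for illustration
html = (
    '<img alt="Street snap title" inline="0">\n'
    '<div class="pgc-img"><img src="https://img.example.com/a.jpg"></div>\n'
    '<div class="pgc-img"><img src="https://img.example.com/b.jpg"></div>'
)

result = [{'title': pattern1.findall(html), 'image_url': pattern2.findall(html)}]
print(result)
```

Here `title` holds one caption and `image_url` both image addresses; `save_image` then uses `title[0]` as the folder name.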

Finally, save the images:

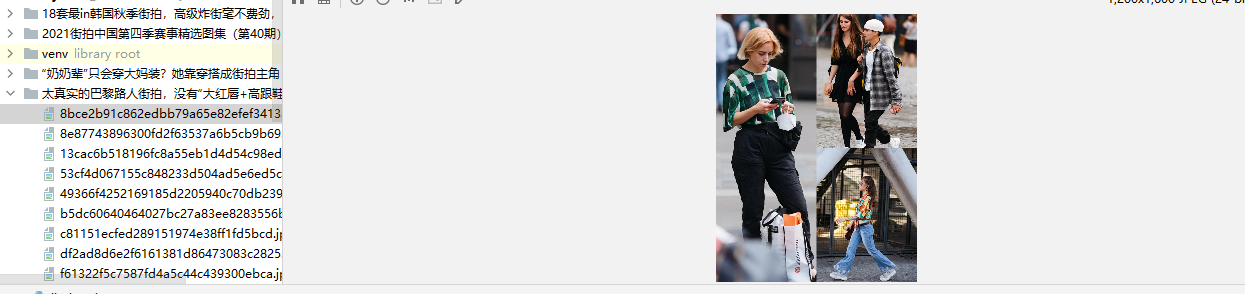

```python
def save_image(item):
    """Download every image URL in `item` into a folder named after the article."""
    if not item or not item[0].get('title') or not item[0].get('image_url'):
        print('No Data!!!')
        return
    # Use the first captured title as the folder name, replacing characters
    # that are not allowed in Windows/Unix folder names
    folder = re.sub(r'[\\/:*?"<>|]', '_', item[0]['title'][0]).strip() or 'untitled'
    os.makedirs(folder, exist_ok=True)
    for url in item[0]['image_url']:  # image URLs found in this article
        try:
            response = requests.get(url, timeout=10)
            sleep(2)
        except requests.RequestException:
            print('Failed to Save Image')
            continue
        if response.status_code != 200:
            continue
        # Name each image by the MD5 of its content so duplicates are skipped
        file_path = os.path.join(folder, '{0}.jpg'.format(md5(response.content).hexdigest()))
        if os.path.exists(file_path):
            print('Already Downloaded', file_path)
            continue
        with open(file_path, 'wb') as f:
            f.write(response.content)
        print('{0}.........downloaded successfully!!!'.format(folder))
```
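Naming each file after the MD5 digest of its bytes means the same image downloaded twice maps to the same path, so the second copy is skipped. Here is a network-free sketch of just that step, writing into a temporary folder; `save_bytes` is a hypothetical helper, not part of the original code.

```python
import os
import tempfile
from hashlib import md5


def save_bytes(folder: str, content: bytes, ext: str = 'jpg') -> bool:
    """Write content to <folder>/<md5>.<ext>; return False if that file already exists."""
    os.makedirs(folder, exist_ok=True)
    file_path = os.path.join(folder, '{0}.{1}'.format(md5(content).hexdigest(), ext))
    if os.path.exists(file_path):
        return False  # identical content was saved before
    with open(file_path, 'wb') as f:
        f.write(content)
    return True


with tempfile.TemporaryDirectory() as tmp:
    print(save_bytes(tmp, b'fake image bytes'))  # True: new file written
    print(save_bytes(tmp, b'fake image bytes'))  # False: same digest, skipped
    print(len(os.listdir(tmp)))                  # 1
```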

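The main loop ties the steps together:

```python
if __name__ == '__main__':
    # Request search-result pages page_num = 0 to 17
    for i in range(0, 18):
        # Fetch search-results page i
        data1 = get_page(i)
        # Extract the article links on that page (empty if the request failed)
        links = parse_one_page(data1)
        for link in links:
            # The search results link to a /group/<id> path; rewrite it to
            # https://www.toutiao.com/a<id>, which is the page we request
            fail_url = parse.urlparse(link)
            path = fail_url.path.split('/group/')
            new_path = 'a' + path[-1]
            true_url = parse.urljoin('https://www.toutiao.com', new_path)
            article_html = in_article(true_url)
            image_info = get_image_url(article_html)
            print(image_info)
            # Save the images, using the article title as the folder name
            save_image(image_info)
```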
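The trickiest change compared with the book is the link rewrite in the main loop: the search results point to a `/group/<id>` path, while the code requests `https://www.toutiao.com/a<id>` instead. Pulling that logic into a function (the name `to_article_url` is hypothetical) makes it easy to test; the sample links below are made up.

```python
from urllib import parse


def to_article_url(link: str) -> str:
    """Rewrite a .../group/<id> link into https://www.toutiao.com/a<id>."""
    path = parse.urlparse(link).path        # e.g. '/group/123/'
    article_id = path.split('/group/')[-1]  # e.g. '123/'
    return parse.urljoin('https://www.toutiao.com', 'a' + article_id)


print(to_article_url('http://toutiao.com/group/6500000000000000000/'))
# https://www.toutiao.com/a6500000000000000000/
```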