With CrawlSpider you no longer handle URLs by hand: it automatically extracts every link in the response that matches the configured rules.

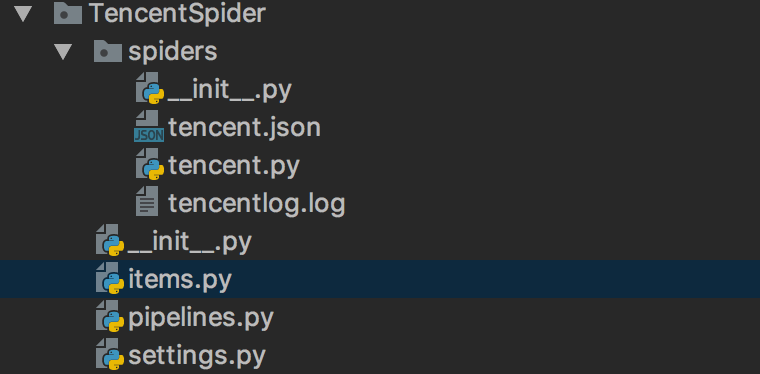
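To make rule-based link matching concrete, here is a minimal standard-library sketch of the idea: collect every link on a page, resolve it against the page URL, and keep only those matching a pattern. It is not how Scrapy implements link extraction; the `HrefCollector` class, the `matching_links` helper, and the sample HTML are assumptions made up for illustration.

```python
import re
from html.parser import HTMLParser
from urllib.parse import urljoin


class HrefCollector(HTMLParser):
    """Collect the href attribute of every <a> tag (hypothetical helper)."""

    def __init__(self):
        super().__init__()
        self.hrefs = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            for name, value in attrs:
                if name == "href" and value:
                    self.hrefs.append(value)


def matching_links(html, base_url, pattern):
    """Return absolute, de-duplicated links whose URL matches `pattern`."""
    collector = HrefCollector()
    collector.feed(html)
    regex = re.compile(pattern)
    seen, result = set(), []
    for href in collector.hrefs:
        url = urljoin(base_url, href)
        # Unanchored search: the pattern may match anywhere in the URL
        if regex.search(url) and url not in seen:
            seen.add(url)
            result.append(url)
    return result


# Made-up page fragment, not real data from the site.
SAMPLE_HTML = """
<a href="position.php?start=10#a">2</a>
<a href="position.php?start=20#a">3</a>
<a href="about.php">About</a>
<a href="position.php?start=10#a">next</a>
"""

links = matching_links(
    SAMPLE_HTML,
    "http://hr.tencent.com/position.php?&start=0#a",
    r"start=\d+",
)
for link in links:
    print(link)
```

The duplicate "next" link and the unrelated `about.php` link are both dropped, which is roughly the behaviour a crawl rule relies on: only pagination links survive, each one once.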

Create the project

    scrapy startproject TencentSpider

items.py

    import scrapy


    class TencentItem(scrapy.Item):
        # Job title
        positionname = scrapy.Field()
        # Link to the detail page
        positionlink = scrapy.Field()
        # Job category
        positionType = scrapy.Field()
        # Number of openings
        peopleNum = scrapy.Field()
        # Work location
        workLocation = scrapy.Field()
        # Publication date
        publishTime = scrapy.Field()

Create the CrawlSpider using the crawl template

    scrapy genspider -t crawl tencent tencent.com

tencent.py

    # Import the CrawlSpider class and Rule
    from scrapy.spiders import CrawlSpider, Rule
    # Import the link extractor, which pulls out the links that match a rule
    from scrapy.linkextractors import LinkExtractor
    from TencentSpider.items import TencentItem


    class TencentSpider(CrawlSpider):
        name = "tencent"
        # The attribute is allowed_domains; a misspelled name is silently ignored
        allowed_domains = ["hr.tencent.com"]
        start_urls = ["http://hr.tencent.com/position.php?&start=0#a"]

        # Extraction rule for links in each response: keeps the links whose
        # URL matches the regex (raw string, so \d means "digit")
        pagelink = LinkExtractor(allow=r"start=\d+")

        rules = [
            # Request every extracted link in turn, pass each response to the
            # named callback, and keep following links from those pages
            Rule(pagelink, callback="parseTencent", follow=True)
        ]

        # The callback named in the rule above
        def parseTencent(self, response):
            for each in response.xpath("//tr[@class='even'] | //tr[@class='odd']"):
                item = TencentItem()
                # extract_first() returns None for an empty cell, whereas
                # extract()[0] would raise IndexError
                # Job title
                item['positionname'] = each.xpath("./td[1]/a/text()").extract_first()
                # Link to the detail page
                item['positionlink'] = each.xpath("./td[1]/a/@href").extract_first()
                # Job category
                item['positionType'] = each.xpath("./td[2]/text()").extract_first()
                # Number of openings
                item['peopleNum'] = each.xpath("./td[3]/text()").extract_first()
                # Work location
                item['workLocation'] = each.xpath("./td[4]/text()").extract_first()
                # Publication date
                item['publishTime'] = each.xpath("./td[5]/text()").extract_first()

                yield item
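The `allow` argument is a regular expression, so "one or more digits" has to be written as `\d+`; without the backslash, `d+` matches the literal letter `d`. The check below uses only the standard `re` module on made-up URLs to show the difference, assuming the pattern is applied as an unanchored search against each URL. The third URL is hypothetical and stands in for any non-pagination link.

```python
import re

# Hypothetical URLs in the shape of the listing's pagination links.
urls = [
    "http://hr.tencent.com/position.php?&start=10#a",
    "http://hr.tencent.com/position.php?&start=20#a",
    "http://hr.tencent.com/position_detail.php?id=1",
]

broken = re.compile("start=d+")    # literal "d": matches none of the URLs
fixed = re.compile(r"start=\d+")   # one or more digits after "start="

broken_hits = [u for u in urls if broken.search(u)]
fixed_hits = [u for u in urls if fixed.search(u)]

print(len(broken_hits), len(fixed_hits))  # prints: 0 2
```

A pattern that matches nothing does not raise an error; the spider would simply fetch the start page and stop, so this kind of mistake is easy to miss.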

pipelines.py

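    import json


    class TencentPipeline(object):
        def __init__(self):
            # Text mode with an explicit encoding, so non-ASCII text is
            # written as UTF-8 (Python 3)
            self.file = open("tencent.json", "w", encoding="utf-8")

        def process_item(self, item, spider):
            # ensure_ascii=False keeps Chinese text readable in the output;
            # one record per line, each followed by a comma
            text = json.dumps(dict(item), ensure_ascii=False) + ",\n"
            # Write the str directly: encoding it to bytes first would raise
            # TypeError on a text-mode file in Python 3
            self.file.write(text)
            return item

        def close_spider(self, spider):
            self.file.close()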
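The write step of the pipeline can be tried without Scrapy. The sketch below is a stand-alone variant, not the pipeline itself: the `write_items` helper and the sample records are assumptions for illustration, and it deliberately swaps the comma-separated output for one JSON object per line (JSON Lines) so that every line can be parsed back on its own.

```python
import json
import os
import tempfile


def write_items(items, path):
    """Write each item as one UTF-8 JSON object per line (JSON Lines)."""
    with open(path, "w", encoding="utf-8") as f:
        for item in items:
            # ensure_ascii=False keeps non-ASCII text readable in the file
            f.write(json.dumps(dict(item), ensure_ascii=False) + "\n")


# Made-up records using two of the item's field names.
sample_items = [
    {"positionname": "后台开发工程师", "workLocation": "深圳"},
    {"positionname": "Product Manager", "workLocation": "北京"},
]

path = os.path.join(tempfile.mkdtemp(), "tencent.jsonl")
write_items(sample_items, path)

# Read the file back line by line to confirm the round trip.
with open(path, encoding="utf-8") as f:
    loaded = [json.loads(line) for line in f]

print(loaded == sample_items)
```

Using a `with` block closes the file even if serialization fails partway through, which a long-lived pipeline achieves instead through `close_spider`.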

settings.py

    BOT_NAME = 'TencentSpider'

    SPIDER_MODULES = ['TencentSpider.spiders']
    NEWSPIDER_MODULE = 'TencentSpider.spiders'

    # File that log output is written to
    LOG_FILE = "tencentlog.log"
    # Minimum log level: messages at this severity or higher are recorded.
    # DEBUG is the lowest level, so everything is logged.
    LOG_LEVEL = "DEBUG"

    ITEM_PIPELINES = {
        'TencentSpider.pipelines.TencentPipeline': 300,
    }
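Scrapy's logging is built on Python's standard `logging` module, so the meaning of a log level can be illustrated with the standard library alone. The sketch below is not Scrapy code; the `ListHandler` class, the `levels_logged` helper, and the logger names are made up for the demonstration. It shows that a level acts as a minimum severity: messages at that level or above pass, lower ones are dropped.

```python
import logging


class ListHandler(logging.Handler):
    """Hypothetical handler that records the level name of each message."""

    def __init__(self):
        super().__init__()
        self.seen = []

    def emit(self, record):
        self.seen.append(record.levelname)


def levels_logged(threshold):
    """Return the level names that pass a logger set to `threshold`."""
    logger = logging.getLogger("demo." + threshold)
    logger.propagate = False      # keep the demo output off the root logger
    logger.setLevel(threshold)
    handler = ListHandler()
    logger.addHandler(handler)
    logger.debug("d")
    logger.info("i")
    logger.warning("w")
    logger.error("e")
    logger.removeHandler(handler)
    return handler.seen


print(levels_logged("DEBUG"))    # ['DEBUG', 'INFO', 'WARNING', 'ERROR']
print(levels_logged("WARNING"))  # ['WARNING', 'ERROR']
```

With `"DEBUG"` the log file receives everything, which is useful while developing a spider; raising the threshold to `"WARNING"` or higher keeps the file small on long crawls.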

Run the spider

    scrapy crawl tencent