Using XPath

    import json

    import requests
    from lxml import etree

    url = 'https://www.zhihu.com/explore'
    headers = {
        'User-Agent': 'Mozilla/5.0 (Macintosh; Intel Mac OS X 10_12_3) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/58.0.3029.110 Safari/537.36'
    }
    html = requests.get(url, headers=headers).text
    # The response body is a string; parse it into an HTML DOM tree
    text = etree.HTML(html)
    # Select every feed-item node
    node_list = text.xpath('//div[@class="explore-feed feed-item"]')

    for node in node_list:
        # xpath() returns a list; each query here matches one element, so take index 0
        # Question
        question = node.xpath('.//h2/a')[0].text.replace("\n", "")
        # Author
        author = node.xpath('.//*[@class="author-link-line"]/*')[0].text
        # author = "".join(node.xpath('.//*[@class="author-link-line"]//text()')).replace("\n", "")
        # Answer
        answer = node.xpath('.//*[@class="content"]')[0].text
        # answer = "".join(node.xpath('.//*[@class="content"]/text()')).strip()
        # answer = str(node.xpath('.//*[@class="content"]/text()'))[1:-1]

        items = {
            "question": question,
            "author": author,
            "answer": answer,
        }

        # Append one JSON object per line; ensure_ascii=False keeps the Chinese text readable
        with open("explore.json", "a", encoding="utf-8") as f:
            f.write(json.dumps(items, ensure_ascii=False) + "\n")
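Because the script appends one object at a time, explore.json is not a single JSON document and json.load() on the whole file would fail. A minimal sketch of writing and reading it back, assuming every record sits on its own line (the JSON Lines layout); the helper names append_record and read_records are made up for this illustration:

```python
import json


def append_record(path, record):
    """Append one record to the file as a single JSON line."""
    with open(path, "a", encoding="utf-8") as f:
        f.write(json.dumps(record, ensure_ascii=False) + "\n")


def read_records(path):
    """Parse the file line by line, skipping blank lines."""
    with open(path, "r", encoding="utf-8") as f:
        return [json.loads(line) for line in f if line.strip()]
```

Calling read_records("explore.json") then returns a list of dicts with the question, author and answer keys.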

Saving as TXT

    import requests
    from lxml import etree

    url = 'https://www.zhihu.com/explore'
    headers = {
        'User-Agent': 'Mozilla/5.0 (Macintosh; Intel Mac OS X 10_12_3) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/58.0.3029.110 Safari/537.36'
    }
    html = requests.get(url, headers=headers).text
    # The response body is a string; parse it into an HTML DOM tree
    text = etree.HTML(html)
    # Select every feed-item node
    node_list = text.xpath('//div[@class="explore-feed feed-item"]')

    for node in node_list:
        # xpath() returns a list; each query here matches one element, so take index 0
        # Question
        question = node.xpath('.//h2/a')[0].text.replace("\n", "")
        # Author
        author = node.xpath('.//*[@class="author-link-line"]/*')[0].text
        # author = "".join(node.xpath('.//*[@class="author-link-line"]//text()')).replace("\n", "")
        # Answer
        answer = node.xpath('.//*[@class="content"]')[0].text
        # answer = "".join(node.xpath('.//*[@class="content"]/text()')).strip()
        # answer = str(node.xpath('.//*[@class="content"]/text()'))[1:-1]

        # Append the three fields, one per line, followed by a separator row
        with open('explore.txt', 'a', encoding='utf-8') as file:
            file.write('\n'.join([question, author, answer]))
            file.write('\n' + '=' * 50 + '\n')

Saving as CSV

    import csv

    import requests
    from lxml import etree

    url = 'https://www.zhihu.com/explore'
    headers = {
        'User-Agent': 'Mozilla/5.0 (Macintosh; Intel Mac OS X 10_12_3) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/58.0.3029.110 Safari/537.36'
    }
    html = requests.get(url, headers=headers).text
    # The response body is a string; parse it into an HTML DOM tree
    text = etree.HTML(html)
    # Select every feed-item node
    node_list = text.xpath('//div[@class="explore-feed feed-item"]')

    # Open the file once; newline='' stops the csv module from adding blank rows on Windows
    with open('explore.csv', 'w', encoding='utf-8', newline='') as csvfile:
        fieldnames = ['question', 'author', 'answer']
        writer = csv.DictWriter(csvfile, fieldnames=fieldnames)
        # Write the header a single time, before any data rows
        writer.writeheader()

        for node in node_list:
            # xpath() returns a list; each query here matches one element, so take index 0
            # Question
            question = node.xpath('.//h2/a')[0].text.replace("\n", "")
            # Author
            author = node.xpath('.//*[@class="author-link-line"]/*')[0].text
            # author = "".join(node.xpath('.//*[@class="author-link-line"]//text()')).replace("\n", "")
            # Answer; only the first 10 characters are kept so the output stays readable
            answer = node.xpath('.//*[@class="content"]')[0].text[:10]
            # answer = node.xpath('.//*[@class="content"]')[0].text
            # answer = "".join(node.xpath('.//*[@class="content"]/text()')).strip()
            # answer = str(node.xpath('.//*[@class="content"]/text()'))[1:-1]

            writer.writerow({'question': question, 'author': author, 'answer': answer})

Reading the CSV

    import csv

    with open('explore.csv', 'r', encoding='utf-8') as csvfile:
        reader = csv.reader(csvfile)
        for row in reader:
            print(row)

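If Excel shows garbled text when opening the CSV, see "excel打开csv文件显示乱码的处理方法" (how to fix garbled text when Excel opens a CSV file) on Baidu Jingyan.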
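A common alternative to fixing the file by hand is to write it with a byte order mark, which Excel generally uses to recognise UTF-8. The sketch below relies on Python's standard utf-8-sig codec; the function name write_csv_for_excel is invented for this example, and whether a particular Excel version honours the BOM is an assumption worth checking locally:

```python
import csv


def write_csv_for_excel(path, rows, fieldnames):
    """Write dict rows as CSV with a UTF-8 BOM at the start of the file."""
    # utf-8-sig prepends the BOM bytes EF BB BF; newline='' avoids blank rows on Windows
    with open(path, "w", encoding="utf-8-sig", newline="") as csvfile:
        writer = csv.DictWriter(csvfile, fieldnames=fieldnames)
        writer.writeheader()
        writer.writerows(rows)
```

When reading such a file back in Python, open it with encoding='utf-8-sig' as well so the BOM is not attached to the first header name.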

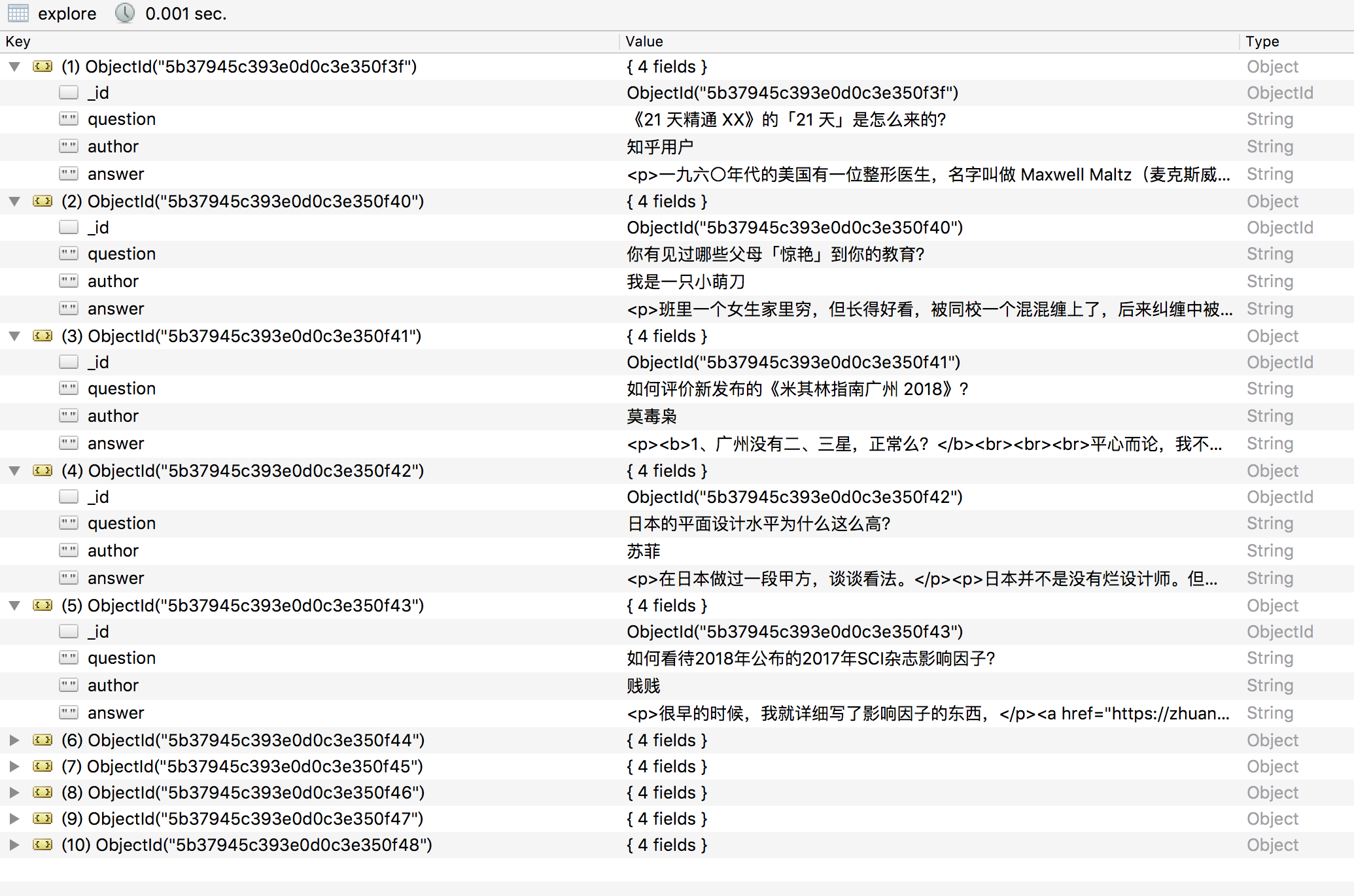

Saving to MongoDB

    import requests
    from lxml import etree
    from pymongo import MongoClient

    client = MongoClient()
    db = client['explore']
    collection = db['explore']

    url = 'https://www.zhihu.com/explore'
    headers = {
        'User-Agent': 'Mozilla/5.0 (Macintosh; Intel Mac OS X 10_12_3) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/58.0.3029.110 Safari/537.36'
    }
    html = requests.get(url, headers=headers).text
    # The response body is a string; parse it into an HTML DOM tree
    text = etree.HTML(html)
    # Select every feed-item node
    node_list = text.xpath('//div[@class="explore-feed feed-item"]')

    for node in node_list:
        # xpath() returns a list; each query here matches one element, so take index 0
        # Question
        question = node.xpath('.//h2/a')[0].text.replace("\n", "")
        # Author
        author = node.xpath('.//*[@class="author-link-line"]/*')[0].text
        # author = "".join(node.xpath('.//*[@class="author-link-line"]//text()')).replace("\n", "")
        # Answer
        answer = node.xpath('.//*[@class="content"]')[0].text
        # answer = "".join(node.xpath('.//*[@class="content"]/text()')).strip()
        # answer = str(node.xpath('.//*[@class="content"]/text()'))[1:-1]

        # A new dict is built on every iteration
        items = {
            "question": question,
            "author": author,
            "answer": answer,
        }

        # insert() was removed in PyMongo 4; insert_one() replaces it
        result = collection.insert_one(items)
        if result.acknowledged:
            print('Saved to Mongo')

A slight modification

    import requests
    from lxml import etree
    from pymongo import MongoClient

    client = MongoClient()
    db = client['explore']
    collection = db['explore']

    url = 'https://www.zhihu.com/explore'
    headers = {
        'User-Agent': 'Mozilla/5.0 (Macintosh; Intel Mac OS X 10_12_3) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/58.0.3029.110 Safari/537.36'
    }
    html = requests.get(url, headers=headers).text
    # The response body is a string; parse it into an HTML DOM tree
    text = etree.HTML(html)
    # Select every feed-item node
    node_list = text.xpath('//div[@class="explore-feed feed-item"]')
    # The modification: one dict is created here and reused for every node
    explore = {}
    for node in node_list:
        # xpath() returns a list; each query here matches one element, so take index 0
        # Question
        explore['question'] = node.xpath('.//h2/a')[0].text.replace("\n", "")
        # Author
        explore['author'] = node.xpath('.//*[@class="author-link-line"]/*')[0].text
        # author = "".join(node.xpath('.//*[@class="author-link-line"]//text()')).replace("\n", "")
        # Answer
        explore['answer'] = node.xpath('.//*[@class="content"]')[0].text
        # answer = "".join(node.xpath('.//*[@class="content"]/text()')).strip()
        # answer = str(node.xpath('.//*[@class="content"]/text()'))[1:-1]

        # insert() was removed in PyMongo 4; insert_one() replaces it
        if collection.insert_one(explore).acknowledged:
            print('Saved to Mongo')

This raises an exception:

raise DuplicateKeyError(error.get("errmsg"), 11000, error)

pymongo.errors.DuplicateKeyError: E11000 duplicate key error collection: explore.explore index: _id_ dup key: { : ObjectId('5b3792ae393e0d0c38123bbc') }

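The _id field uniquely identifies a document. Every MongoDB document has this primary key, and it is a special and very important type: if a document is inserted without an _id, an ObjectId is generated for it automatically.

MongoDB creates a unique index on _id by default.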
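The duplicate key arises because PyMongo writes the generated _id back into the dict that was passed in, so a dict reused across iterations already carries an _id on the second insert. The sketch below reproduces that mechanism without a database. FakeCollection and this DuplicateKeyError are hypothetical stand-ins written for the illustration, not PyMongo classes, and they imitate only the _id handling:

```python
import itertools


class DuplicateKeyError(Exception):
    """Stand-in for pymongo.errors.DuplicateKeyError (illustration only)."""


class FakeCollection:
    """Hypothetical in-memory collection that mimics only the _id handling."""

    def __init__(self):
        self._docs = {}
        self._ids = itertools.count(1)

    def insert_one(self, document):
        # Like PyMongo, add an _id to the caller's dict when it has none
        if "_id" not in document:
            document["_id"] = next(self._ids)
        # Like the unique index on _id, reject a value that is already stored
        if document["_id"] in self._docs:
            raise DuplicateKeyError(f"E11000 duplicate key: _id={document['_id']!r}")
        self._docs[document["_id"]] = dict(document)


def insert_reusing_one_dict(collection, questions):
    """Insert every question through one shared dict; return how many succeeded."""
    explore = {}
    inserted = 0
    for question in questions:
        explore["question"] = question
        try:
            collection.insert_one(explore)
            inserted += 1
        except DuplicateKeyError:
            pass  # the shared dict still holds the first document's _id
    return inserted
```

With three questions, insert_reusing_one_dict(FakeCollection(), ["a", "b", "c"]) stores only the first one, which matches the E11000 error shown above.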

    import requests
    from lxml import etree
    from pymongo import MongoClient
    from pymongo.errors import DuplicateKeyError

    client = MongoClient()
    db = client['explore']
    collection = db['explore']

    url = 'https://www.zhihu.com/explore'
    headers = {
        'User-Agent': 'Mozilla/5.0 (Macintosh; Intel Mac OS X 10_12_3) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/58.0.3029.110 Safari/537.36'
    }
    html = requests.get(url, headers=headers).text
    # The response body is a string; parse it into an HTML DOM tree
    text = etree.HTML(html)
    # Select every feed-item node
    node_list = text.xpath('//div[@class="explore-feed feed-item"]')
    # Still one shared dict for every node
    explore = {}
    for node in node_list:
        # xpath() returns a list; each query here matches one element, so take index 0
        # Question
        explore['question'] = node.xpath('.//h2/a')[0].text.replace("\n", "")
        # Author
        explore['author'] = node.xpath('.//*[@class="author-link-line"]/*')[0].text
        # author = "".join(node.xpath('.//*[@class="author-link-line"]//text()')).replace("\n", "")
        # Answer
        explore['answer'] = node.xpath('.//*[@class="content"]')[0].text
        # answer = "".join(node.xpath('.//*[@class="content"]/text()')).strip()
        # answer = str(node.xpath('.//*[@class="content"]/text()'))[1:-1]
        try:
            # insert() was removed in PyMongo 4; insert_one() replaces it
            if collection.insert_one(explore).acknowledged:
                print('Saved to Mongo')
        except DuplicateKeyError:
            # Inserting a value that already exists in a unique field makes
            # pymongo raise this error, and the insert fails
            print("Duplicate insert")

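The data still cannot be inserted. Catching the exception only suppresses the error: the insert call adds the generated _id to the dict it receives, and because the same explore dict is reused on every iteration, every insert after the first carries the first document's _id and is rejected by the unique index.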
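One way to get every record stored is to build a new dict for each node, as the first MongoDB script did, so that each document receives its own _id. A minimal sketch, assuming collection is any object with a PyMongo-style insert_one method and records is an iterable of (question, author, answer) tuples; the function name save_records is invented for this example:

```python
def save_records(collection, records):
    """Insert each record as its own new dict and return the number saved."""
    saved = 0
    for question, author, answer in records:
        # A fresh dict per record: no _id is carried over from a previous insert
        document = {
            "question": question,
            "author": author,
            "answer": answer,
        }
        collection.insert_one(document)
        saved += 1
    return saved
```

With the scripts above, this would be called as save_records(collection, rows), where rows is collected inside the for node in node_list loop. Removing the stale key with explore.pop('_id', None) before each insert is another option if the shared dict has to stay.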