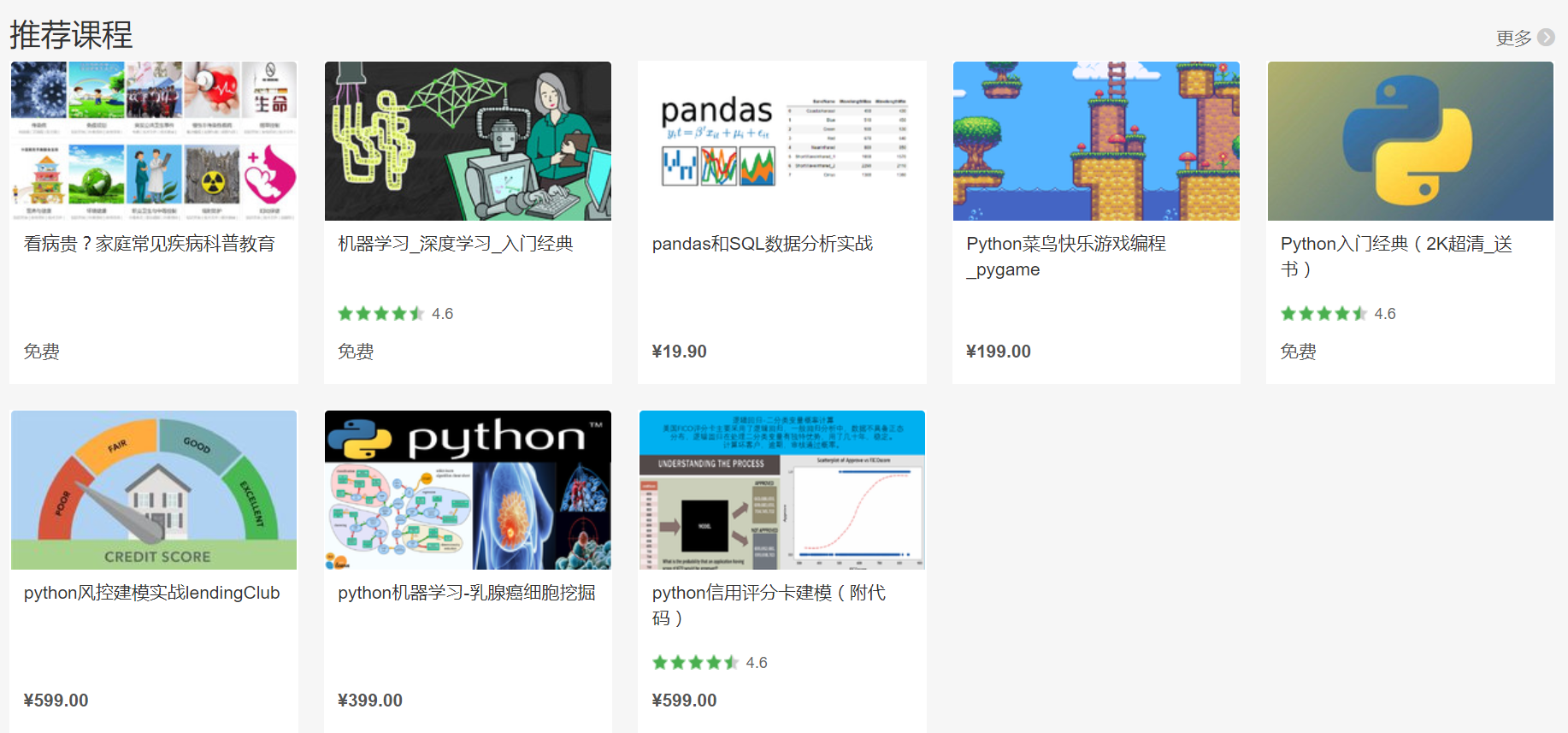


pickle is used to persist data. Building a classifier takes a lot of time, so saving it avoids paying that cost again later.

import pickle

# Save the classifier
save_classifier = open("naivebayes.pickle", "wb")
pickle.dump(classifier, save_classifier)
save_classifier.close()

# Load the classifier
classifier_f = open("naivebayes.pickle", "rb")
classifier = pickle.load(classifier_f)
classifier_f.close()

Training classifiers and machine learning algorithms can take a very long time, especially if you're training against a larger data set. Ours is actually pretty small. Can you imagine having to train the classifier every time you wanted to fire it up and use it? What horror! Instead, what we can do is use the Pickle module to go ahead and serialize our classifier object, so that all we need to do is load that file in real quick.

So, how do we do this? The first step is to save the object. Import pickle at the top of your script; then, after you have trained the classifier with .train(), run the save lines shown at the top of this post: open(), pickle.dump(), and close().

This opens a pickle file, ready to write bytes. Then we use pickle.dump() to dump the data. The first parameter to pickle.dump() is what you are dumping; the second is where you are dumping it.

After that, we close the file as we're supposed to, and that is that: we now have a pickled, or serialized, object saved in the current working directory (usually our script's directory)!
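As a minimal sketch of the save step just described, the snippet below calls pickle.dump() inside a `with` block, which closes the file automatically. So that it runs without NLTK, a plain dictionary named `classifier` stands in for the trained classifier, and the file goes to a temporary directory; both are assumptions for illustration and not part of the original tutorial.

```python
import os
import pickle
import tempfile

# Stand-in for a trained classifier (assumption: any picklable object is saved the same way).
classifier = {"labels": ["pos", "neg"], "note": "placeholder for a trained model"}

# Write to a temporary directory so the example leaves nothing in the script's folder.
save_dir = tempfile.mkdtemp()
pickle_path = os.path.join(save_dir, "naivebayes.pickle")

# "wb" opens the file for writing bytes; the with block closes it for us.
with open(pickle_path, "wb") as save_classifier:
    # First argument: what to dump. Second argument: where to dump it.
    pickle.dump(classifier, save_classifier)

saved_size = os.path.getsize(pickle_path)
print("saved", saved_size, "bytes to", pickle_path)
```

With a real NLTK classifier, the only change should be that `classifier` is the object returned by .train().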

Next, how would we go about opening and using this classifier? The .pickle file is a serialized object; all we need to do now is read it into memory, which will be about as quick as reading any other ordinary file. To do this, run the load lines shown at the top of this post: open(), pickle.load(), and close().

Here, we do a very similar process. We open the file to read as bytes. Then, we use pickle.load() to load the file, and we save the data to the classifier variable. Then we close the file, and that is that. We now have the same classifier object as before!
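The load step can be sketched as a round trip: save an object, read it back, and compare. As before, a dictionary (here called `original`) is a hypothetical stand-in for the trained classifier, and the temporary path is an assumption made so the example is self-contained.

```python
import os
import pickle
import tempfile

# Stand-in for the trained classifier (hypothetical data, used only for this demo).
original = {"labels": ["pos", "neg"], "feature_count": 5000}

pickle_path = os.path.join(tempfile.mkdtemp(), "naivebayes.pickle")
with open(pickle_path, "wb") as f:
    pickle.dump(original, f)

# "rb" opens the file for reading bytes; pickle.load() rebuilds the object.
with open(pickle_path, "rb") as classifier_f:
    classifier = pickle.load(classifier_f)

# The loaded object is an equal copy, not the very same object in memory.
print(classifier == original)  # True
print(classifier is original)  # False
```

Note that pickle.load() can run arbitrary code while rebuilding an object, so it is best used only on files you created yourself or otherwise trust.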

Now we can use this object, and we no longer need to train our classifier every time we want to use it to classify.

While this is all fine and dandy, we're probably not too content with the 60-75% accuracy we're getting. What about other classifiers? Turns out, there are many classifiers, but we need the scikit-learn (sklearn) module. Luckily for us, the people at NLTK recognized the value of incorporating the sklearn module into NLTK, and they have built us a little API to do it. That's what we'll be doing in the next tutorial.

Save the three objects documents, word_features, and classifier, so that the time-consuming work does not have to be repeated later.

# -*- coding: utf-8 -*-
"""
Created on Thu Jan 12 10:44:19 2017

@author: Administrator

For analysing short reviews -- Twitter
After saving, "positive.txt" and "negative.txt" need to be converted to UTF-8.
Online conversion tool:
http://www.esk365.com/tools/GB2312-UTF8.asp

features=5000: accuracy above 60%
features=10000: accuracy above __%
Running time can be as long as an hour.
"""

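import nltk
import random
import pickle
import time
from nltk.tokenize import word_tokenize

time1 = time.time()

# Both files are expected to be UTF-8 encoded
with open("positive.txt", "r", encoding="utf-8") as f:
    short_pos = f.read()
with open("negative.txt", "r", encoding="utf-8") as f:
    short_neg = f.read()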

# Labelled reviews and candidate feature words.
# Both lists are filled by the loops below; appending the reviews here as well
# would store every review twice and leak training examples into the test set.
documents = []
all_words = []

# J is adjective, R is adverb, and V is verb
# allowed_word_types = ["J", "R", "V"]  # allowed word types
allowed_word_types = ["J"]

for p in short_pos.split('\n'):
    documents.append((p, "pos"))
    words = word_tokenize(p)
    pos = nltk.pos_tag(words)
    for w in pos:
        # w is a (word, tag) pair; the first letter of the tag gives the word class
        if w[1][0] in allowed_word_types:
            all_words.append(w[0].lower())

for p in short_neg.split('\n'):
    documents.append((p, "neg"))
    words = word_tokenize(p)
    pos = nltk.pos_tag(words)
    for w in pos:
        if w[1][0] in allowed_word_types:
            all_words.append(w[0].lower())

# Save the documents
save_documents = open("documents.pickle", "wb")
pickle.dump(documents, save_documents)
save_documents.close()

# Timing check
time2 = time.time()
print("time1 consuming:", time2 - time1)

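# Save the features
all_words = nltk.FreqDist(all_words)

# Better to raise this to 20,000 or more.
# most_common() returns words sorted by frequency; slicing keys() would not.
word_features = [w for w, _ in all_words.most_common(5000)]

save_word_features = open("word_features5k.pickle", "wb")
pickle.dump(word_features, save_word_features)
save_word_features.close()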

def find_features(document):
    # A set makes the membership test below fast
    words = set(word_tokenize(document))
    features = {}
    for w in word_features:
        features[w] = (w in words)
    return features

featuresets = [(find_features(rev), category) for (rev, category) in documents]
random.shuffle(featuresets)
print(len(featuresets))

# First 10,000 examples for training, the rest for testing
training_set = featuresets[:10000]
testing_set = featuresets[10000:]

classifier = nltk.NaiveBayesClassifier.train(training_set)
print("Original Naive Bayes Algo accuracy percent:", (nltk.classify.accuracy(classifier, testing_set))*100)
classifier.show_most_informative_features(15)

# Save the classifier
save_classifier = open("originalnaivebayes5k.pickle", "wb")
pickle.dump(classifier, save_classifier)
save_classifier.close()

time3 = time.time()
print("time2 consuming:", time3 - time2)
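The script above writes documents, word_features, and classifier to pickle files. One way to benefit from them on later runs is a small load-or-build helper, sketched below. The names `load_or_build` and `build_word_features` are hypothetical, the word list is made-up sample data, and the temporary path is an assumption so the example runs on its own without NLTK.

```python
import os
import pickle
import tempfile


def load_or_build(path, build):
    """Return the object pickled at path; if the file is missing, call build() and save the result."""
    if os.path.exists(path):
        with open(path, "rb") as f:
            return pickle.load(f)
    obj = build()
    with open(path, "wb") as f:
        pickle.dump(obj, f)
    return obj


calls = []  # records how many times the expensive step actually ran


def build_word_features():
    # Placeholder for a slow step such as tagging, counting, or training.
    calls.append(1)
    return ["good", "bad", "great"]


cache_path = os.path.join(tempfile.mkdtemp(), "word_features5k.pickle")
first = load_or_build(cache_path, build_word_features)   # builds and saves
second = load_or_build(cache_path, build_word_features)  # loads from disk
print(first == second, len(calls))
```

The second call skips the build function entirely, which is the saving the pickle files are meant to provide. If the training data or feature count changes, the old pickle files need to be deleted so they are rebuilt.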
