在之前的文章中我们简单了解了一下Scrapy 框架和安装及目录的介绍,本章我们将根据 scrapy 框架实现博客园首页博客的爬取及数据处理。

我们先在自定义的目录中通过命令行来构建一个 scrapy 项目目录

scrapy startproject scrapyCnblogs

生成一下目录:

然后在终端命令行中输入

scrapy genspider cnblogs cnblogs.com

在 scrapCnblogs/spiders 下就会生成一个 cnblogs.py 的文件,代码如下:

1 # -*- coding: utf-8 -*- 2 import scrapy 3 4 5 class CnblogsSpider(scrapy.Spider): 6 name = 'cnblogs' 7 allowed_domains = ['cnblogs.com'] 8 start_urls = ['http://cnblogs.com/'] 9 10 def parse(self, response): 11 pass

在上面的代码中 allowed_domains 将限制爬虫的作用范围,start_urls 是爬虫的起始 url,爬取的结果将在 parse 方法中进行数据处理。

我们要做的案例是爬取博客园首页的博客列表,链接为 https://www.cnblogs.com/,内容如下:

本次我们就只爬取网页中间的博客列表中的:博客名称,链接和作者 这三个信息,分别定义为 title,link,author。

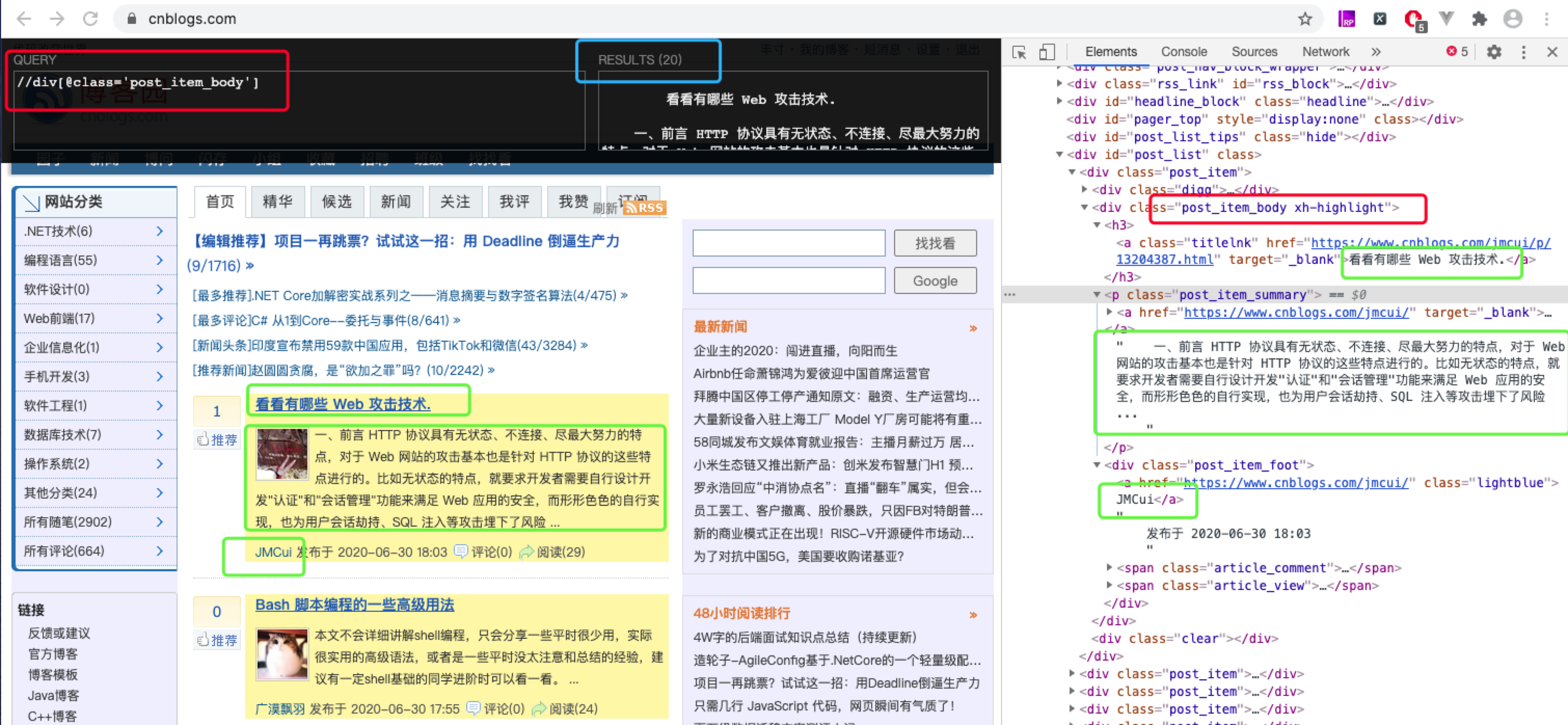

在页面筛选信息时我用的是我比较习惯用的 xpath,scrapy 框架集成了该模块,使用起来也非常方便。xpath 的使用规则:https://www.cnblogs.com/weijiutao/p/10879871.html

我们先通过控制台来查找到我们要获取的字段信息:

我们根据xpath获取到的信息将上面的 cnblogs.py 文件改为如下:

1 # -*- coding: utf-8 -*- 2 import scrapy 3 4 5 # 创建一个爬虫类 6 class CnblogsSpider(scrapy.Spider): 7 # 爬虫名 8 name = 'cnblogs' 9 # 允许爬虫作用的范围 10 allowed_domains = ['cnblogs.com'] 11 # 爬虫起始的url 12 start_urls = ['https://www.cnblogs.com'] 13 14 def parse(self, response): 15 # 通过 scrapy 自带的xpath匹配出所有博客的根结点列表集合 16 post_list = response.xpath("//div[@class='post_item_body']") 17 # 遍历根节点集合 18 for post in post_list: 19 # extract() 将匹配的对象结果转换为Unicode字符串,不加 extract() 结果为xpath匹配对象 20 # title 21 title = post.xpath("./h3/a[@class='titlelnk']/text()").extract()[0] 22 # link 23 link = post.xpath("./h3/a[@class='titlelnk']/@href").extract()[0] 24 # author 25 author = post.xpath("./div[@class='post_item_foot']/a/text()").extract()[0] 26 print(title + link + author)

上面的代码中,我们只需要定义 allowed_domains 和 start_urls 这两个字段,scrapy 就会自动帮我们去进行内容爬取来,并且通过 parse() 方法返回 response 的结果,然后我们再通过 scrapy 提供的 xpath 模块过滤我们想要的信息就可以了。

在终端输出:

scrapy crawl cnblogs

其中 cnblogs 使我们在上面的代码中定义的爬虫名 name 的值,意思是启动该爬虫,然后我们就可以在控制台查看我们的打印结果了:

上面的代码已经大大简化了我们很久之前写的爬虫的文章,接下来我们再来将 scrapy 其他的文件串联起来。

在 scrapyCnblogs/items.py 中写入一下代码:

1 # -*- coding: utf-8 -*- 2 3 # Define here the models for your scraped items 4 # 5 # See documentation in: 6 # https://docs.scrapy.org/en/latest/topics/items.html 7 8 import scrapy 9 10 11 class ScrapycnblogsItem(scrapy.Item): 12 # define the fields for your item here like: 13 # 标题 14 title = scrapy.Field() 15 # 链接 16 link = scrapy.Field() 17 # 作者 18 author = scrapy.Field()

该代码是将我们想要过滤的信息进行定义,我们在此文件中定义了一个 ScrapycnblogsItem 的类,里面定义了 title,link 和 author 三个字段。

接下来将刚才写的 cnblogs.py 改为如下代码:

1 # -*- coding: utf-8 -*- 2 import scrapy 3 # 引入 ScrapycnblogsItem 类 4 from scrapyCnblogs.items import ScrapycnblogsItem 5 6 7 # 创建一个爬虫类 8 class CnblogsSpider(scrapy.Spider): 9 # 爬虫名 10 name = 'cnblogs' 11 # 允许爬虫作用的范围 12 allowed_domains = ['cnblogs.com'] 13 # 爬虫起始的url 14 start_urls = ['https://www.cnblogs.com'] 15 16 def parse(self, response): 17 # 通过 scrapy 自带的xpath匹配出所有博客的根结点列表集合 18 post_list = response.xpath("//div[@class='post_item_body']") 19 # 遍历根节点集合 20 for post in post_list: 21 # extract() 将匹配的对象结果转换为Unicode字符串,不加 extract() 结果为xpath匹配对象 22 # title 23 title = post.xpath("./h3/a[@class='titlelnk']/text()").extract()[0] 24 # link 25 link = post.xpath("./h3/a[@class='titlelnk']/@href").extract()[0] 26 # author 27 author = post.xpath("./div[@class='post_item_foot']/a/text()").extract()[0] 28 29 # 将我们得到的数据封装到一个 `ScrapycnblogsItem` 对象 30 item = ScrapycnblogsItem() 31 item['title'] = title 32 item['link'] = link 33 item['author'] = author 34 35 # 将获取的数据交给pipelines 36 yield item

在上面的代码中,我们引入了刚刚定义的 ScrapycnblogsItem 类,然后将爬取过滤的信息复制给 item ,最后 yield 出去,这里所做的操作会将我们的信息交给 scrapyCnblogs/pipelines.py 文件,接下来我们就只需要在 pipelines.py 文件中对我们的数据进行操作就可以了。

pipelines.py 代码如下:

1 # -*- coding: utf-8 -*- 2 3 # Define your item pipelines here 4 # 5 # Don't forget to add your pipeline to the ITEM_PIPELINES setting 6 # See: https://docs.scrapy.org/en/latest/topics/item-pipeline.html 7 8 import json 9 10 11 class ScrapycnblogsPipeline(object): 12 # __init__ 方法是可选的,作为类的初始化方法 13 def __init__(self): 14 self.filename = open('cnblogs.json', 'w') 15 16 # process_item 方法是必须写的,用来处理item数据 17 def process_item(self, item, spider): 18 text = json.dumps(dict(item), ensure_ascii=False) + ', ' 19 self.filename.write(text.encode('utf-8')) 20 return item 21 22 # close_spider 方法是可选的,结束时调用这个方法 23 def close_spider(self, spider): 24 self.filename.close()

在上面的代码中 ScrapycnblogsPipeline 类中的 process_item() 方法就会接收到 cnblogs.py 所返回的 item 信息,我们在 process_item() 方法中将所获取的 item 写入到了一个 cnblogs.json 的文件中。

最后还需要做的一步就是去 scrapyCnblogs/settings.py 文件中放开我们定义的这个管道文件了。

settings.py 代码如下:

1 # -*- coding: utf-8 -*- 2 3 # Scrapy settings for scrapyCnblogs project 4 # 5 # For simplicity, this file contains only settings considered important or 6 # commonly used. You can find more settings consulting the documentation: 7 # 8 # https://docs.scrapy.org/en/latest/topics/settings.html 9 # https://docs.scrapy.org/en/latest/topics/downloader-middleware.html 10 # https://docs.scrapy.org/en/latest/topics/spider-middleware.html 11 12 BOT_NAME = 'scrapyCnblogs' 13 14 SPIDER_MODULES = ['scrapyCnblogs.spiders'] 15 NEWSPIDER_MODULE = 'scrapyCnblogs.spiders' 16 17 # Crawl responsibly by identifying yourself (and your website) on the user-agent 18 # USER_AGENT = 'scrapyCnblogs (+http://www.yourdomain.com)' 19 20 # Obey robots.txt rules 21 ROBOTSTXT_OBEY = True 22 23 # Configure maximum concurrent requests performed by Scrapy (default: 16) 24 # CONCURRENT_REQUESTS = 32 25 26 # Configure a delay for requests for the same website (default: 0) 27 # See https://docs.scrapy.org/en/latest/topics/settings.html#download-delay 28 # See also autothrottle settings and docs 29 # 延迟 3 秒获取信息 30 DOWNLOAD_DELAY = 3 31 # The download delay setting will honor only one of: 32 # CONCURRENT_REQUESTS_PER_DOMAIN = 16 33 # CONCURRENT_REQUESTS_PER_IP = 16 34 35 # Disable cookies (enabled by default) 36 # COOKIES_ENABLED = False 37 38 # Disable Telnet Console (enabled by default) 39 # TELNETCONSOLE_ENABLED = False 40 41 # Override the default request headers: 42 # 定义报头信息 43 DEFAULT_REQUEST_HEADERS = { 44 'User-Agent': 'Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_5) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/83.0.4103.116 Safari/537.36', 45 'Accept': 'text/html,application/xhtml+xml,application/xml;q=0.9,*/*;q=0.8', 46 'Accept-Language': 'en', 47 } 48 49 # Enable or disable spider middlewares 50 # See https://docs.scrapy.org/en/latest/topics/spider-middleware.html 51 # SPIDER_MIDDLEWARES = { 52 # 'scrapyCnblogs.middlewares.ScrapycnblogsSpiderMiddleware': 543, 53 # } 54 55 # Enable or disable downloader middlewares 56 # See https://docs.scrapy.org/en/latest/topics/downloader-middleware.html 57 # DOWNLOADER_MIDDLEWARES = { 58 # 'scrapyCnblogs.middlewares.ScrapycnblogsDownloaderMiddleware': 543, 59 # } 60 61 # Enable or disable extensions 62 # See https://docs.scrapy.org/en/latest/topics/extensions.html 63 # EXTENSIONS = { 64 # 'scrapy.extensions.telnet.TelnetConsole': None, 65 # } 66 67 # Configure item pipelines 68 # See https://docs.scrapy.org/en/latest/topics/item-pipeline.html 69 # 管道文件 70 ITEM_PIPELINES = { 71 'scrapyCnblogs.pipelines.ScrapycnblogsPipeline': 300, # 优先级,越小优先级越高 72 } 73 74 # Enable and configure the AutoThrottle extension (disabled by default) 75 # See https://docs.scrapy.org/en/latest/topics/autothrottle.html 76 # AUTOTHROTTLE_ENABLED = True 77 # The initial download delay 78 # AUTOTHROTTLE_START_DELAY = 5 79 # The maximum download delay to be set in case of high latencies 80 # AUTOTHROTTLE_MAX_DELAY = 60 81 # The average number of requests Scrapy should be sending in parallel to 82 # each remote server 83 # AUTOTHROTTLE_TARGET_CONCURRENCY = 1.0 84 # Enable showing throttling stats for every response received: 85 # AUTOTHROTTLE_DEBUG = False 86 87 # Enable and configure HTTP caching (disabled by default) 88 # See https://docs.scrapy.org/en/latest/topics/downloader-middleware.html#httpcache-middleware-settings 89 # HTTPCACHE_ENABLED = True 90 # HTTPCACHE_EXPIRATION_SECS = 0 91 # HTTPCACHE_DIR = 'httpcache' 92 # HTTPCACHE_IGNORE_HTTP_CODES = [] 93 # HTTPCACHE_STORAGE = 'scrapy.extensions.httpcache.FilesystemCacheStorage'

在上面的代码第 70 行,我们就设置了 ScrapycnblogsPipelines 类的管道,同时还设置了一下延迟时间和报头信息,延迟时间如果不设置的话经常访问可能会被对方发现察觉而封IP,在爬取多页面信息的时候也有助于上次信息处理成功后再处理下次请求,避免数据不完整,报头信息是模拟浏览器的信息,都是为了增加我们的信息爬取成功率。

最后我们在终端输入:

scrapy crawl cnblogs

在我们的目录下就会生成一个 cnblogs.json 的文件,如下:

至此我们就完成了一个相对完整的基于 scrapy 框架爬取博客园首页博客列表的爬虫了!