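'''
Created on November 15, 2017

@author: weizhen
'''
import os

import numpy as np
import pandas as pd
import tensorflow as tf  # TensorFlow 1.x graph-mode API (placeholder, Session)

input_x_size = 80      # number of input features (n)
field_size = 8         # number of fields (f)
vector_dimension = 3   # length of each latent vector (k)
total_plan_train_steps = 1000
MODEL_SAVE_PATH = "TFModel"
MODEL_NAME = "FFM"
BATCH_SIZE = 1


def createTwoDimensionWeight(input_x_size, field_size, vector_dimension):
    """Latent vectors of the pairwise term: one k-dim vector per (feature, field) pair."""
    # inference() only indexes rows 0..input_x_size-1, so n rows are enough
    weights = tf.truncated_normal([input_x_size, field_size, vector_dimension])
    tf_weights = tf.Variable(weights)
    return tf_weights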

def createOneDimensionWeight(input_x_size):
    """Weights of the linear (first-order) term, one per feature."""
    weights = tf.truncated_normal([input_x_size])
    tf_weights = tf.Variable(weights)
    return tf_weights

def createZeroDimensionWeight():
    """Bias (zero-order) term."""
    weights = tf.truncated_normal([1])
    tf_weights = tf.Variable(weights)
    return tf_weights
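In the standard FFM formulation the model has three groups of parameters: a bias, one weight per feature, and one k-dimensional latent vector for every (feature, field) pair. The NumPy sketch below mirrors the three helpers with the listing's sizes to show the resulting shapes and parameter count. It is an illustration only: it draws from an untruncated normal distribution, and the `latent` helper is hypothetical, not part of the original code.

```python
import numpy as np

# Same sizes as the constants at the top of the listing
input_x_size, field_size, vector_dimension = 80, 8, 3

rng = np.random.default_rng(0)
# Untruncated normal draws (tf.truncated_normal re-draws values more than
# 2 standard deviations from the mean; this sketch does not)
w0 = rng.standard_normal(1)                   # bias: shape (1,)
w = rng.standard_normal(input_x_size)         # linear weights: shape (n,)
V = rng.standard_normal((input_x_size, field_size, vector_dimension))  # shape (n, f, k)


def latent(V, feature, field):
    """Hypothetical helper: vector that `feature` uses against a feature from `field`."""
    return V[feature, field]


n_params = w0.size + w.size + V.size
print(n_params)  # 2001 = 1 + 80 + 80 * 8 * 3
```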

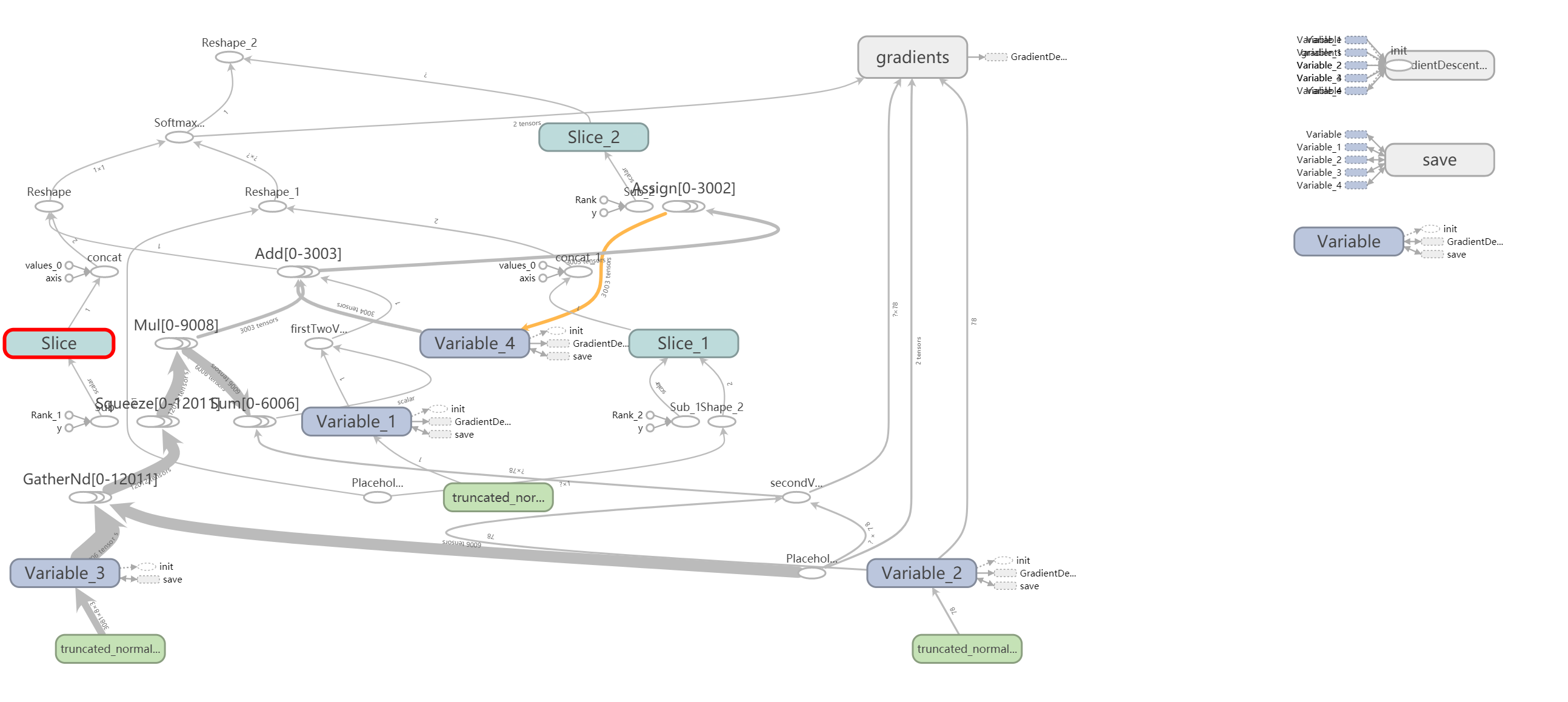
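For reference, the standard field-aware factorization machine (FFM) prediction for one sample with $n$ features, where $f_j$ denotes the field of feature $j$ and $\mathbf{v}_{i,f} \in \mathbb{R}^k$ is the latent vector feature $i$ uses against field $f$, is:

$$
\hat{y}(\mathbf{x}) = w_0 + \sum_{i=1}^{n} w_i x_i + \sum_{i=1}^{n-1} \sum_{j=i+1}^{n} \langle \mathbf{v}_{i,f_j}, \mathbf{v}_{j,f_i} \rangle \, x_i x_j
$$

Under this reading, in `inference()` `zeroWeights` corresponds to $w_0$, `oneDimWeights` to the $w_i$, and `thirdWeight[i, f]` to $\mathbf{v}_{i,f}$, with `input_x_field[j]` supplying $f_j$.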

def inference(input_x, input_x_field):
    """Compute the output of the FFM regression model, shape [batch, 1]."""
    zeroWeights = createZeroDimensionWeight()                # randomly initialized bias weight
    oneDimWeights = createOneDimensionWeight(input_x_size)   # randomly initialized linear weights
    # Linear term: per-sample dot product of the first-order weights with x -> [batch, 1]
    secondValue = tf.matmul(input_x, tf.expand_dims(oneDimWeights, 1), name="secondValue")
    firstTwoValue = tf.add(zeroWeights, secondValue, name="firstTwoValue")  # bias + linear term

    thirdWeight = createTwoDimensionWeight(input_x_size,     # latent vectors of the pairwise term
                                           field_size,
                                           vector_dimension)
    thirdValue = tf.zeros_like(secondValue)                  # running sum of the pairwise term
    input_shape = input_x_size                               # number of input features
    for i in range(input_shape):
        featureIndex1 = i                                    # index of the first feature
        fieldIndex1 = int(input_x_field[i])                  # field of the first feature
        for j in range(i + 1, input_shape):
            featureIndex2 = j                                # index of the second feature
            fieldIndex2 = int(input_x_field[j])              # field of the second feature
            # <v_{i, f_j}, v_{j, f_i}>: each feature uses its vector for the other's field
            weightLeft = thirdWeight[featureIndex1, fieldIndex2]   # shape [vector_dimension]
            weightRight = thirdWeight[featureIndex2, fieldIndex1]  # shape [vector_dimension]
            tempValue = tf.reduce_sum(tf.multiply(weightLeft, weightRight))
            # x_i * x_j for every sample: slice feature columns, not batch rows
            xi = input_x[:, featureIndex1:featureIndex1 + 1]
            xj = input_x[:, featureIndex2:featureIndex2 + 1]
            secondItemVal = tf.multiply(tempValue, tf.multiply(xi, xj))
            # Accumulate as a tensor so the pairwise term is part of the graph
            thirdValue = tf.add(thirdValue, secondItemVal)
    forwardY = tf.add(firstTwoValue, thirdValue)
    return forwardY
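The nested loop in `inference()` adds one small set of ops per feature pair, 80 · 79 / 2 = 3160 pairs here. As a sanity check on the indexing, the NumPy sketch below computes the FFM prediction for a single sample twice: once with the same double loop and once vectorized with `np.einsum`. Both function names are hypothetical and not part of the original code.

```python
import numpy as np


def ffm_predict_loop(x, field, w0, w, V):
    """FFM prediction for one sample, pairwise term as an explicit double loop."""
    n = x.shape[0]
    y = w0 + np.dot(w, x)
    for i in range(n):
        for j in range(i + 1, n):
            # feature i uses its vector for j's field, and vice versa
            y += np.dot(V[i, field[j]], V[j, field[i]]) * x[i] * x[j]
    return y


def ffm_predict_vectorized(x, field, w0, w, V):
    """Same prediction without Python loops."""
    A = V[:, field, :]                       # A[i, j] = V[i, field[j]], shape (n, n, k)
    P = np.einsum("ijk,jik->ij", A, A)       # P[i, j] = <V[i, field[j]], V[j, field[i]]>
    pair = np.triu(P * np.outer(x, x), k=1)  # keep only pairs with i < j
    return w0 + np.dot(w, x) + pair.sum()


rng = np.random.default_rng(42)
n, f, k = 6, 3, 2
x = rng.standard_normal(n)
field = rng.integers(0, f, size=n)
w0, w, V = 0.5, rng.standard_normal(n), rng.standard_normal((n, f, k))

print(np.isclose(ffm_predict_loop(x, field, w0, w, V),
                 ffm_predict_vectorized(x, field, w0, w, V)))  # True
```

The vectorized form materializes an n × n × k tensor, which is cheap at n = 80 but grows quadratically with the number of features.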

# Feature columns of train_sales_data.csv, in the order they are fed to the model (80 in total)
FEATURE_COLUMNS = (
    ['ITEM_NBR', 'STORE_NBR',
     'CITY_GUAYAQUIL', 'CITY_BABAHOY', 'CITY_PLAYAS', 'CITY_LOJA', 'CITY_EL_CARMEN',
     'CITY_LATACUNGA', 'CITY_GUARAN', 'CITY_CUENC', 'CITY_ESMERALDAS', 'CITY_QUITO',
     'CITY_CAYAMBE', 'CITY_SALINAS', 'CITY_RIOBAMBA', 'CITY_SANTO_DOMINGO', 'CITY_DAULE',
     'CITY_MACHALA', 'CITY_MACHALA_1', 'CITY_QUEVEDO',
     'STATE_AZUAY', 'STATE_BOLIVAR', 'STATE_CHIMBORAZO', 'STATE_COTOPAXI', 'STATE_EL_ORO',
     'STATE_ESMERALDAS', 'STATE_GUAYAS', 'STATE_IMBABURA', 'STATE_LOJA', 'STATE_LOS_RIOS',
     'STATE_MANABI', 'STATE_PICHINCHA', 'STATE_SANTA_ELENA', 'STATE_SANTO_DOMINGO_DE_LOS',
     'STATE_TUNGURAHUA']
    + ['N_CLUSTER_%d' % k for k in range(1, 18)]   # N_CLUSTER_1 .. N_CLUSTER_17
    + ['FAMILY_CLEANING', 'FAMILY_BREAD_BAKERY', 'FAMILY_LIQUOR_WINE_BEER',
       'FAMILY_PREPARED_FOODS', 'FAMILY_MEATS', 'FAMILY_BEAUTY', 'FAMILY_HARDWARE',
       'FAMILY_BEVERAGES', 'FAMILY_DAIRY', 'FAMILY_GROCERY_II', 'FAMILY_POULTRY',
       'FAMILY_SEAFOOD', 'FAMILY_LAWN_AND_GARDEN', 'FAMILY_EGGS', 'FAMILY_DELI',
       'FAMILY_LINGERIE', 'FAMILY_FROZEN_FOODS', 'FAMILY_AUTOMOTIVE', 'FAMILY_GROCERY_I',
       'FAMILY_PERSONAL_CARE',
       'PERISHABLE_TRUE', 'TYPE_HOLIDAY', 'TYPE_WORK_DAY',
       'LOCALE_NATIONAL', 'LOCALE_NAME_ECUADOR', 'LOCALE_PRIMER_DIA_DEL_ANO',
       'LOCALE_RECUPERO_PUENTE_NAVIDAD', 'LOCALE_RECUPERO_PUENTE']
)


def read_csv():
    """Load features, targets and per-feature field indices from train_sales_data.csv."""
    df = pd.read_csv('train_sales_data.csv')
    # One list of 80 feature values per sample
    train_x = df[FEATURE_COLUMNS].values.tolist()
    # Target UNIT_SALES as a one-element list per sample, matching input_y's [None, 1] shape
    train_y = df[['UNIT_SALES']].values.tolist()
    # The first 80 rows of FIELD_CATEGORY hold the field index of each feature
    train_x_field = df['FIELD_CATEGORY'].values[:len(FEATURE_COLUMNS)].tolist()
    return (train_x, train_y, train_x_field)
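`read_csv()` turns the column-oriented CSV into row-major Python lists: one list of feature values per sample, a one-element list per target, and a field index per feature taken from the first rows of `FIELD_CATEGORY`. A toy sketch of that layout, using a hypothetical three-feature frame built in memory instead of `train_sales_data.csv`:

```python
import pandas as pd

# Hypothetical toy data: three feature columns, the target, and FIELD_CATEGORY,
# whose first len(feature_columns) rows give the field of each feature
df = pd.DataFrame({
    "ITEM_NBR": [101, 102, 103],
    "STORE_NBR": [1, 2, 3],
    "PERISHABLE_TRUE": [0, 1, 0],
    "FIELD_CATEGORY": [0, 1, 2],
    "UNIT_SALES": [4.0, 7.5, 1.0],
})
feature_columns = ["ITEM_NBR", "STORE_NBR", "PERISHABLE_TRUE"]

train_x = df[feature_columns].values.tolist()       # one row per sample
train_y = df[["UNIT_SALES"]].values.tolist()        # [[y0], [y1], ...]
train_x_field = df["FIELD_CATEGORY"].values[:len(feature_columns)].tolist()

print(train_x)        # [[101, 1, 0], [102, 2, 1], [103, 3, 0]]
print(train_y)        # [[4.0], [7.5], [1.0]]
print(train_x_field)  # [0, 1, 2]
```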

if __name__ == "__main__":
    global_step = tf.Variable(0, trainable=False)
    (train_x, train_y, train_x_field) = read_csv()
    input_x = tf.placeholder(tf.float32, [None, input_x_size])
    input_y = tf.placeholder(tf.float32, [None, 1])
    y_ = inference(input_x, train_x_field)
    # UNIT_SALES is a real-valued target, so minimize mean squared error;
    # softmax cross-entropy over a single output column is always zero
    loss = tf.reduce_mean(tf.square(y_ - input_y))
    train_step = tf.train.GradientDescentOptimizer(0.001, name="GradientDescentOptimizer").minimize(
        loss, global_step=global_step)

    saver = tf.train.Saver()
    os.makedirs(MODEL_SAVE_PATH, exist_ok=True)  # Saver.save needs the parent directory to exist
    with tf.Session() as sess:
        tf.global_variables_initializer().run()
        for i in range(total_plan_train_steps):
            start = (i * BATCH_SIZE) % len(train_x)  # wrap around if there are fewer rows than steps
            input_x_batch = train_x[start:start + BATCH_SIZE]
            input_y_batch = train_y[start:start + BATCH_SIZE]

            # train_step itself returns None, so fetch the loss tensor explicitly
            _, predict_loss, steps = sess.run([train_step, loss, global_step],
                                              feed_dict={input_x: input_x_batch, input_y: input_y_batch})
            if (i + 1) % 2 == 0:
                print("After {step} training step(s), loss on training batch is {predict_loss}"
                      .format(step=steps, predict_loss=predict_loss))
                saver.save(sess, os.path.join(MODEL_SAVE_PATH, MODEL_NAME), global_step=steps)
        writer = tf.summary.FileWriter(os.path.join(MODEL_SAVE_PATH, MODEL_NAME), tf.get_default_graph())
        writer.close()
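Softmax cross-entropy is a poor fit for this model: the network emits a single real-valued output per sample, and a softmax over one column is always 1, so the loss value is identically zero and says nothing about how far the prediction is from `UNIT_SALES`. The NumPy sketch below re-implements the textbook softmax cross-entropy formula (not TensorFlow's code) to show this, next to mean squared error, a common choice for a real-valued regression target. The function names are hypothetical.

```python
import numpy as np


def softmax_cross_entropy(logits, labels):
    """Row-wise softmax cross-entropy, written out from the formula."""
    shifted = logits - logits.max(axis=1, keepdims=True)   # numerical stability
    log_softmax = shifted - np.log(np.exp(shifted).sum(axis=1, keepdims=True))
    return -(labels * log_softmax).sum(axis=1)


def mean_squared_error(pred, target):
    """Average squared difference between predictions and targets."""
    return np.mean((pred - target) ** 2)


pred = np.array([[3.0], [-1.0]])     # one output column, as in the FFM model
target = np.array([[5.0], [2.0]])    # real-valued targets such as unit sales

print(softmax_cross_entropy(pred, target))   # zero for every row, whatever the values
print(mean_squared_error(pred, target))      # 6.5
```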