好久不见,最近很多小伙伴说Azure的监控不大灵光,所以就去回去好好看了一下目前Azure上服务的监控支持情况。整理来看AZURE目前GLOBAL从运维监控角度的支持好于国内AZURE,无论是基础组件的日志支持能力还是平台级的运维监控PaaS工具都是比较完整的,对应到国内AZURE应该只是时间的问题。今天的博客以NSG网络安全组日志监控分析为例,为大家抛砖引玉,希望对大家有所启发。以NSG网络安全组分析功能为例,我们首先从两个维度来分析一下方案的可行性,第一,NSG网络安全组可以提供哪些日志,第二,日志监控分析工具目前有哪些解决方案(比如AZURE平台是否有直接可用的平台工具,如果没有有哪些开源工具可以借鉴)。

NSG网络安全组日志支持情况

目前AZURE在GLOBAL已经提供了完整的网络安全组日志,比较有帮助的是flow logs,它可以帮助我们记录所有flow的信息,帮助运维人员了解应用的基线数据(如某应用的服务端口的连接数),帮助安全管理员发现安全实践(比如日志中出现大量Hit拒绝访问策略的flow)。以下面的日志为例,其中的flowTuples字段包含了完整的flow信息,可以帮助用户来做Telemetry分析。"1505789728,10.0.3.4,191.238.64.200,46072,443,T,O,A"这条日志代表在UNIX时间1505789728,有一条出方向(字母O表示)TCP(字母T表示)连接,源IP是10.0.3.4,目地IP是191.238.64.200,源端口为46072,目的端口为443,匹配策略DefaultRule_AllowInternetOutBound后被放行(字母A表示allow)。

{

"records":

[

{

"time": "2017-09-19T02:56:29.1800000Z",

"systemId": "c8a12ed8-7a83-42b1-bdf2-b66716c51de6",

"category": "NetworkSecurityGroupFlowEvent",

"resourceId": "/SUBSCRIPTIONS/4507938F-A0AC-4571-978E-7CC741A60AF8/RESOURCEGROUPS/SHADOWSOCKS/PROVIDERS/MICROSOFT.NETWORK/NETWORKSECURITYGROUPS/NSGDEMOA",

"operationName": "NetworkSecurityGroupFlowEvents",

"properties": {"Version":1,"flows":[{"rule":"DefaultRule_AllowInternetOutBound","flows":[{"mac":"000D3AA2802C","flowTuples":["1505789728,10.0.3.4,191.238.64.200,46072,443,T,O,A","1505789728,10.0.3.4,191.238.64.200,46074,443,T,O,A","1505789731,10.0.3.4,191.238.64.200,46078,443,T,O,A","1505789731,10.0.3.4,191.238.64.200,46080,443,T,O,A","1505789734,10.0.3.4,191.238.64.200,46084,443,T,O,A","1505789734,10.0.3.4,191.238.64.200,46086,443,T,O,A","1505789738,10.0.3.4,191.238.64.200,46090,443,T,O,A","1505789738,10.0.3.4,191.238.64.200,46092,443,T,O,A","1505789741,10.0.3.4,191.238.64.200,46096,443,T,O,A","1505789741,10.0.3.4,191.238.64.200,46098,443,T,O,A","1505789744,10.0.3.4,191.238.64.200,46102,443,T,O,A","1505789744,10.0.3.4,191.238.64.200,46104,443,T,O,A","1505789748,10.0.3.4,191.238.64.200,46108,443,T,O,A","1505789749,10.0.3.4,191.238.64.200,46110,443,T,O,A","1505789752,10.0.3.4,191.238.64.200,46114,443,T,O,A","1505789752,10.0.3.4,191.238.64.200,46116,443,T,O,A","1505789752,10.0.3.4,172.104.34.215,57374,123,U,O,A","1505789753,10.0.3.4,118.189.177.157,40489,123,U,O,A","1505789754,10.0.3.4,202.156.0.34,42260,123,U,O,A","1505789755,10.0.3.4,191.238.64.200,46120,443,T,O,A","1505789755,10.0.3.4,191.238.64.200,46122,443,T,O,A","1505789755,10.0.3.4,202.73.57.107,50910,123,U,O,A","1505789759,10.0.3.4,191.238.64.200,46126,443,T,O,A","1505789759,10.0.3.4,191.238.64.200,46128,443,T,O,A","1505789762,10.0.3.4,191.238.64.200,46132,443,T,O,A","1505789762,10.0.3.4,191.238.64.200,46134,443,T,O,A","1505789765,10.0.3.4,191.238.64.200,46138,443,T,O,A","1505789765,10.0.3.4,191.238.64.200,46140,443,T,O,A","1505789769,10.0.3.4,191.238.64.200,46144,443,T,O,A","1505789769,10.0.3.4,191.238.64.200,46146,443,T,O,A","1505789772,10.0.3.4,191.238.64.200,46150,443,T,O,A","1505789772,10.0.3.4,191.238.64.200,46152,443,T,O,A","1505789776,10.0.3.4,191.238.64.200,46156,443,T,O,A","1505789776,10.0.3.4,191.238.64.200,46158,443,T,O,A","1505789780,10.0.3.4,191.238.64.200,46162,443,T,O,A","1505789780,10.0.3.4,191.238.64.200,46164,443,T,O,A","1505789783,10.0.3.4,191.238.64.200,46168,443,T,O,A","1505789783,10.0.3.4,191.238.64.200,46170,443,T,O,A","1505789786,10.0.3.4,191.238.64.200,46174,443,T,O,A","1505789786,10.0.3.4,191.238.64.200,46176,443,T,O,A"]}]},{"rule":"DefaultRule_DenyAllInBound","flows":[{"mac":"000D3AA2802C","flowTuples":["1505789756,163.172.147.216,10.0.3.4,5104,5060,U,I,D"]}]},{"rule":"UserRule_default-allow-ssh","flows":[]}]}

}]}

日志监控分析工具

在AZURE平台原生的工具中,Log Analytics和PowerBI都可以做很好的运维监控视图,由于是平台级原生服务所以在日志数据ETL处理方面适配接口已经就绪,无需额外的开发工作量。另外在OSS的世界里面也有很多很好的工具,如ELK,Zabbix等,AZURE平台的开放性的一个优势的地方就是与OSS世界的开源工具有很好的集成和整合。本文以ELK为例,AZURE已经为LogStash提供了与AZURE Blob存储对接的input plug-in (https://github.com/Azure/azure-diagnostics-tools/tree/master/Logstash/logstash-input-azureblob),在数据导入方面可以做到即插即用。除此之外AZURE平台在Marketplace中也放入了预装好的ELK实例帮助大家做环境的快速部署。另外小伙伴想自己通过VM搭建与AZURE IaaS平台的ELK最佳时间AZURE也帮大家Cook好了(https://docs.microsoft.com/en-us/azure/architecture/elasticsearch/)

部署过程

ELK安装

本文采用VM实例自己安装ELK的方法,ELK全家桶的安装非常简单,将Elastic的Repo加入后直接通过yum或者apt-get即可安装完成,本文以CentOS为例。

安装ELK

1. 下载可信签名证书

$ rpm --import https://artifacts.elastic.co/GPG-KEY-elasticsearch

2. 创建Repo

进入/etc/yum.repo目录,创建文件elasticsearch.repo

[elasticsearch-5.x]

name=Elasticsearch repository for 5.x packages

baseurl=https://artifacts.elastic.co/packages/5.x/yum

gpgcheck=1

gpgkey=https://artifacts.elastic.co/GPG-KEY-elasticsearch

enabled=1

autorefresh=1

type=rpm-md

3. 安装Elasticsearch

$ yum install elasticsearch.service #安装

$ systemctl enable elasticsearch.service #设置开机自启动

$ systemctl start elasticsearch.service #启动

4. 安装Logstash

$ yum install logstash.service #安装

$ systemctl enable logstash.service #设置开机自启动

$ systemctl start logstash.service #启动

5. 安装Logstash azure blob插件

$ /usr/share/logstash/bin/logstash-plugin install logstash-input-azureblob

6. 配置Logstash数据处理配置文件

进入/etc/logstash/conf.d/目录

创建logstash.conf文件

input {

azureblob

{

storage_account_name => "mystorageaccount" #此处更改为NSG flow log使用的存储账户

storage_access_key => "VGhpcyBpcyBhIGZha2Uga2V5Lg==" #此处更改为NSG flow log使用存储账户的访问密钥

container => "insights-logs-networksecuritygroupflowevent"

codec => "json"

# Refer https://docs.microsoft.com/en-us/azure/network-watcher/network-watcher-read-nsg-flow-logs

# Typical numbers could be 21/9 or 12/2 depends on the nsg log file types

file_head_bytes => 21

file_tail_bytes => 9

# Enable / tweak these settings when event is too big for codec to handle.

# break_json_down_policy => "with_head_tail"

# break_json_batch_count => 2

}

}

filter {

split { field => "[records]" }

split { field => "[records][properties][flows]"}

split { field => "[records][properties][flows][flows]"}

split { field => "[records][properties][flows][flows][flowTuples]"}

mutate{

split => { "[records][resourceId]" => "/"}

add_field => {"Subscription" => "%{[records][resourceId][2]}"

"ResourceGroup" => "%{[records][resourceId][4]}"

"NetworkSecurityGroup" => "%{[records][resourceId][8]}"}

convert => {"Subscription" => "string"}

convert => {"ResourceGroup" => "string"}

convert => {"NetworkSecurityGroup" => "string"}

split => { "[records][properties][flows][flows][flowTuples]" => ","}

add_field => {

"unixtimestamp" => "%{[records][properties][flows][flows][flowTuples][0]}"

"srcIp" => "%{[records][properties][flows][flows][flowTuples][1]}"

"destIp" => "%{[records][properties][flows][flows][flowTuples][2]}"

"srcPort" => "%{[records][properties][flows][flows][flowTuples][3]}"

"destPort" => "%{[records][properties][flows][flows][flowTuples][4]}"

"protocol" => "%{[records][properties][flows][flows][flowTuples][5]}"

"trafficflow" => "%{[records][properties][flows][flows][flowTuples][6]}"

"traffic" => "%{[records][properties][flows][flows][flowTuples][7]}"

}

convert => {"unixtimestamp" => "integer"}

convert => {"srcPort" => "integer"}

convert => {"destPort" => "integer"}

}

date{

match => ["unixtimestamp" , "UNIX"]

}

}

output {

stdout { codec => rubydebug }

elasticsearch {

hosts => "localhost"

index => "nsg-flow-logs"

}

}

$ systemctl restart logstash #生效配置

7. 安装Kibana

$ yum install kibana.service #安装

$ systemctl enable kibana.service #设置开机自启动

$ systemctl start kibana.service #启动

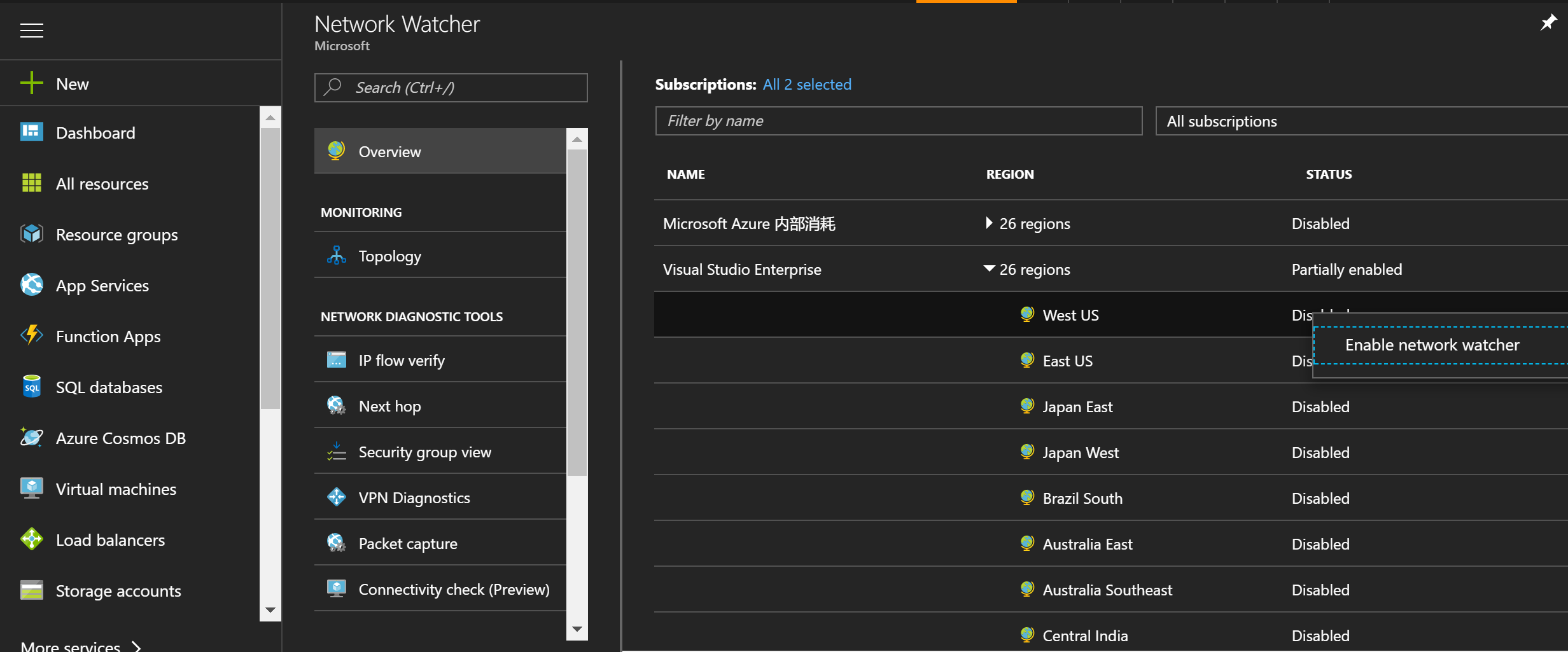

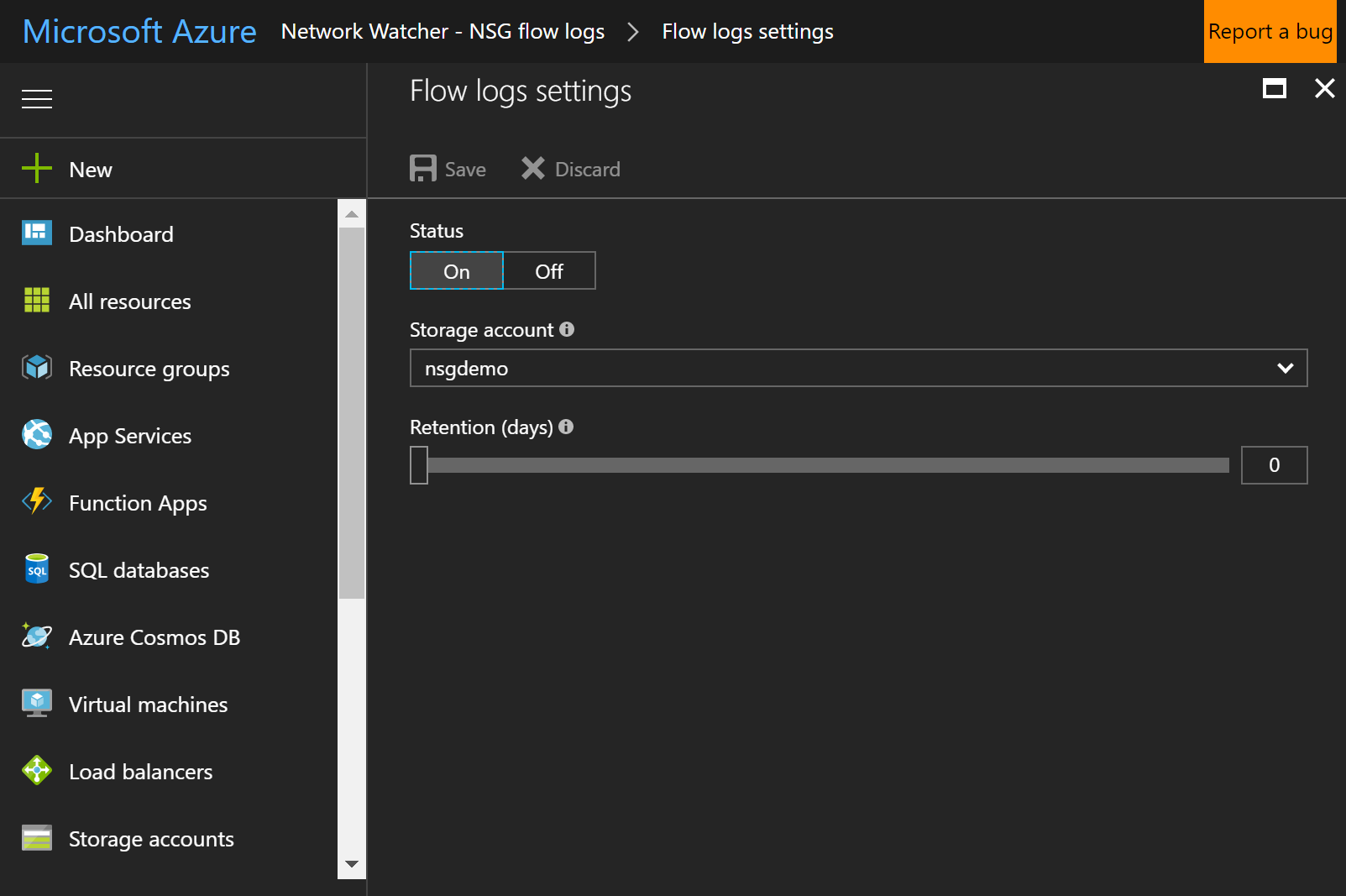

开启NSG flow log

1. 创建NetworkWatcher

2. 对NSG启用flow log

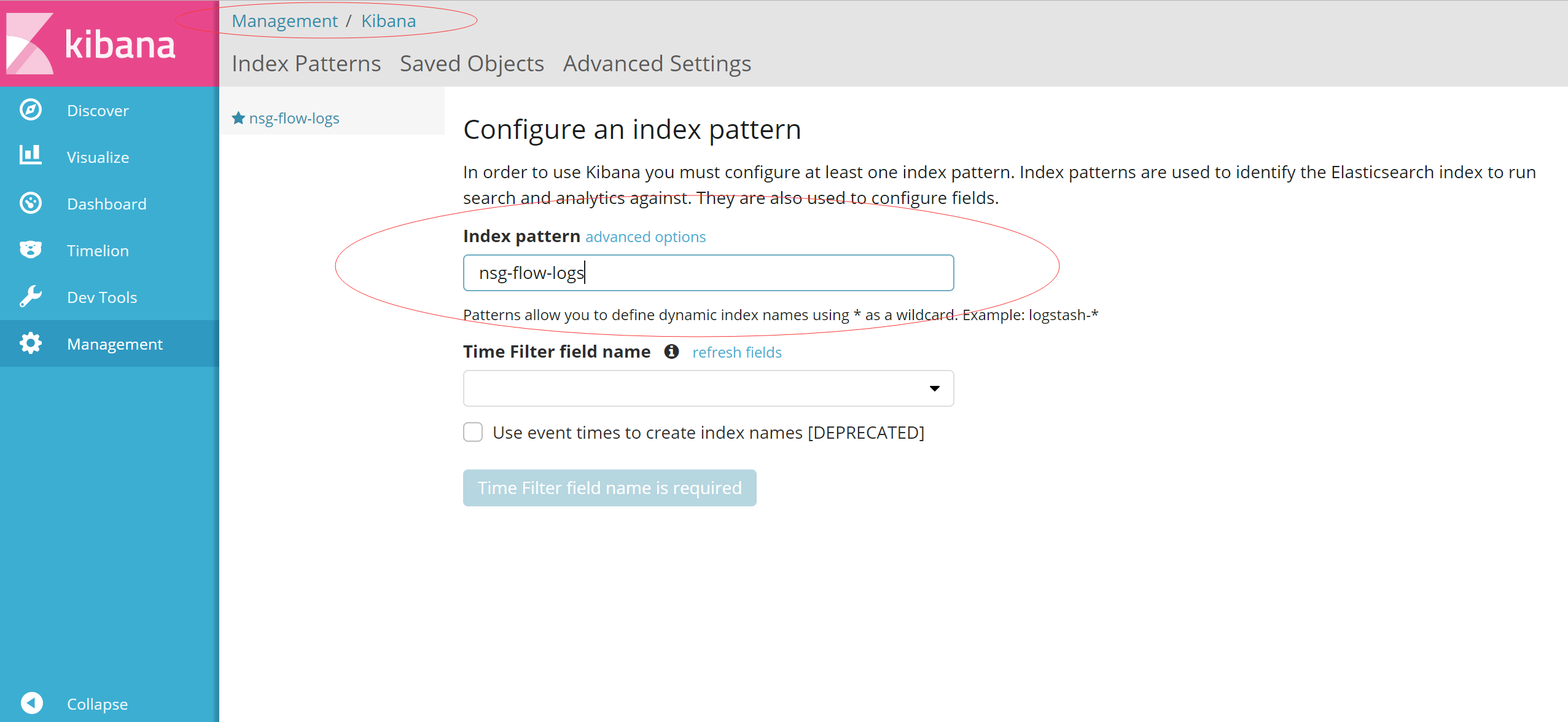

访问Kibana创建运维监控视图

Kibana其实是Elasticsearch的搜索和视图报表前端,进入Kibana后我们首先需要引入NSG flow log在elasticsearch中创建的Index。

1. 导入Elasticsearch Index

在前面的logstash配置文件中可以看到index的命名是nsg-flow-logs

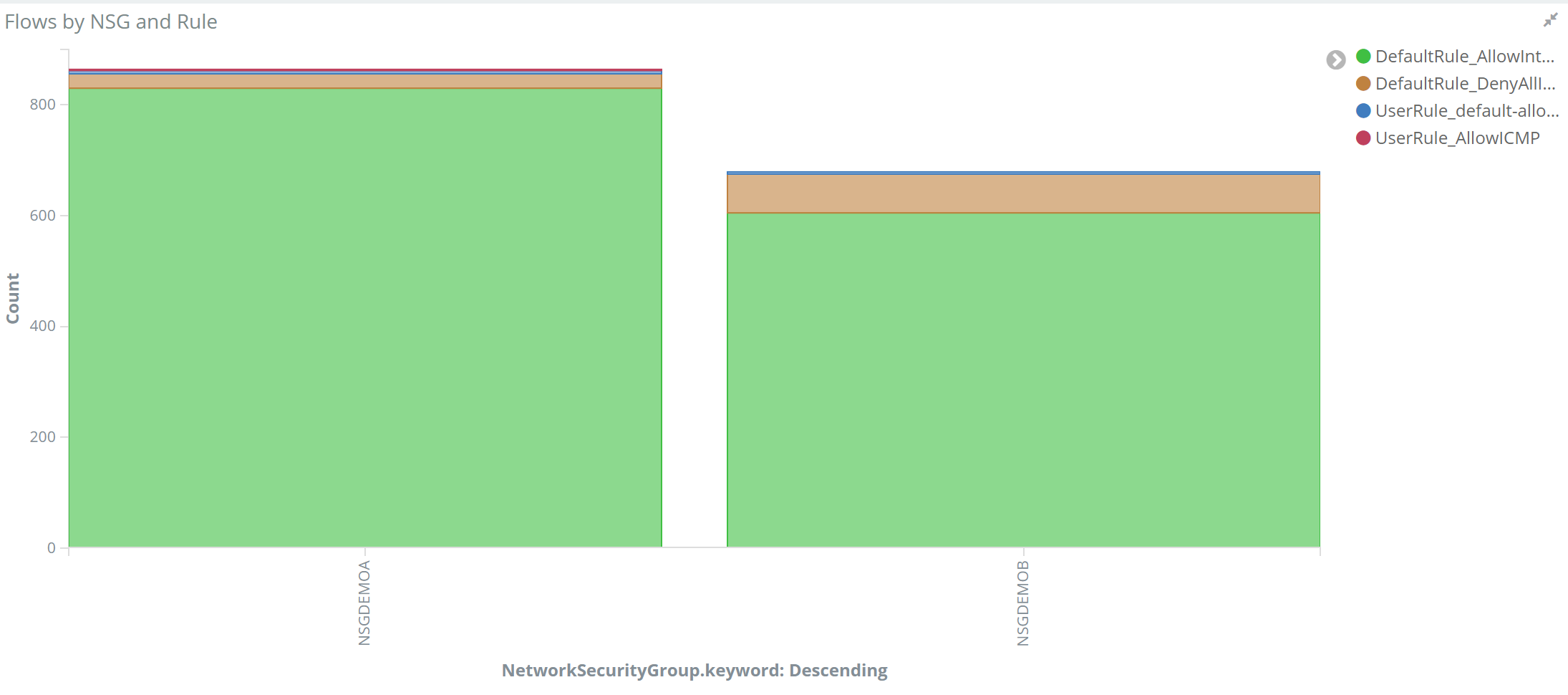

2. 创建报表

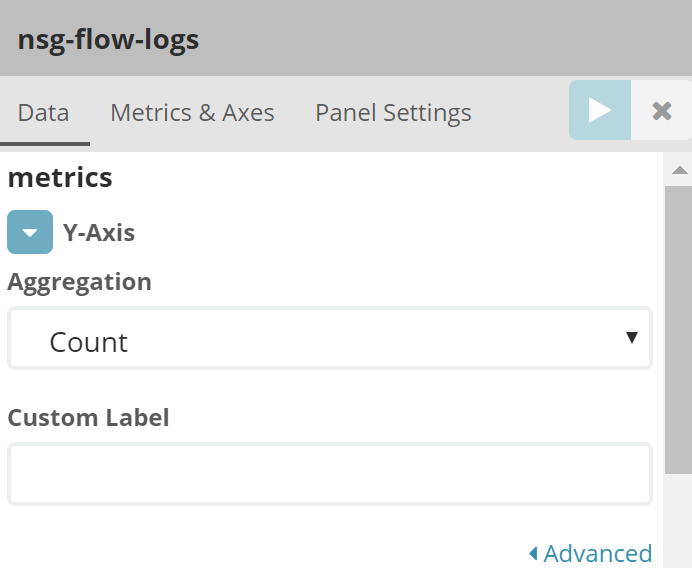

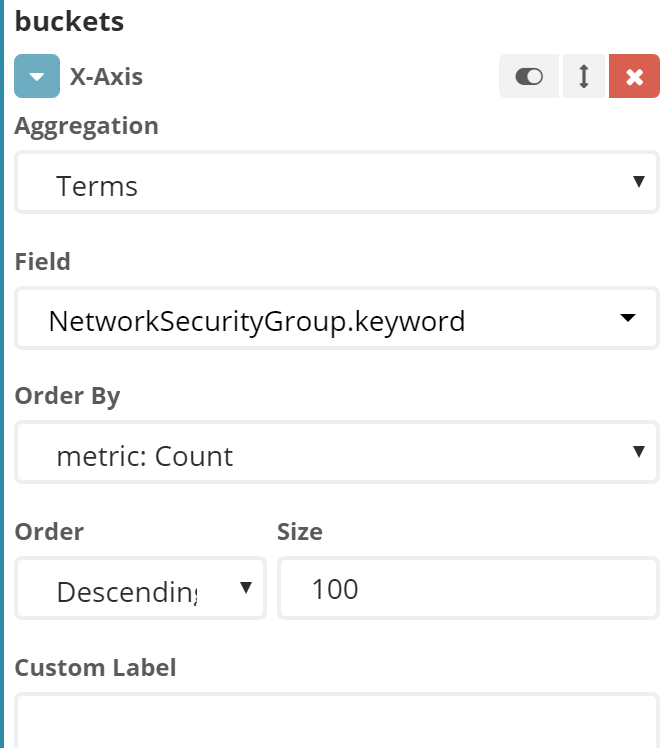

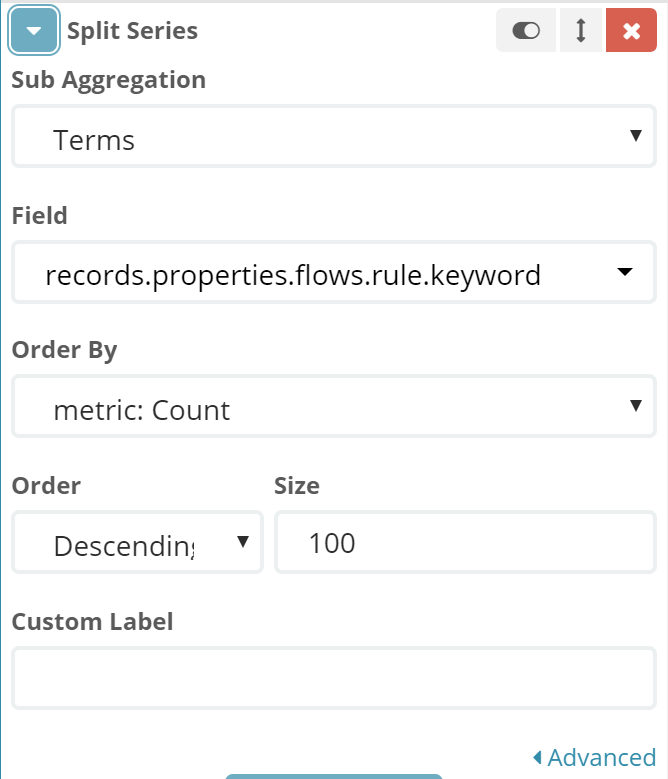

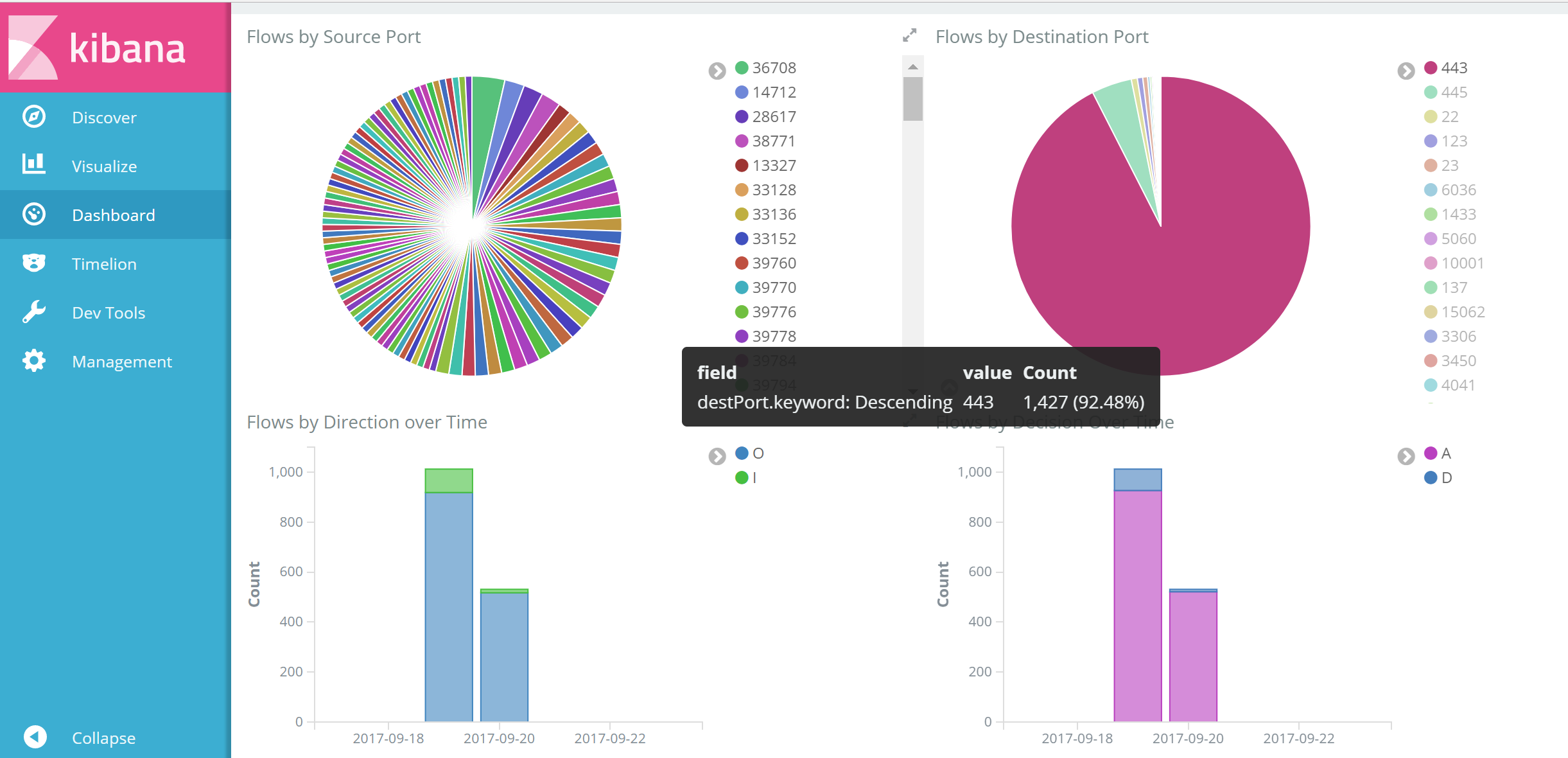

在elasticsearch index中存储的每个documents中对应了nsg flow log中每个flow的完整信息,我们可以通过kibana提供的聚合分类能力实现如查看目的端口分布,查看NSG安全策略命中统计等,此部分以NSG安全策略命中统计为例。创建一个柱状监控试图,X轴按照NSG组名称分类,Y轴表示命中策略总数,并且对每一个NSG Rule进行分类统计

再来看一些有趣的视图

ELK有很强的搜索聚合报表能力,小伙伴们可以发挥想象,这里我就不做深入赘述了。老规矩资源都给大家,大家自行Deepdive吧。下次再来给大家说说Azure Log Analytics。

资源汇总:

NSG Flow Log说明:https://docs.microsoft.com/en-us/azure/network-watcher/network-watcher-nsg-flow-logging-overview

AZURE Service Log Support Matrix:https://docs.microsoft.com/en-us/azure/monitoring-and-diagnostics/monitoring-diagnostic-logs-schema AZURE哪些服务支持什么log,schema什么样子不知道看这里

Azure Logstash Plug-In:https://github.com/Azure/azure-diagnostics-tools

ELK快速入门:https://www.safaribooksonline.com/library/view/learning-elasticsearch/9781787128453/ 耐着性子静心开完立刻开车上路