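InputFormat Data Input

1. Splits and How MapTask Parallelism Is Determined

1.1 Motivation

The parallelism of MapTasks determines how concurrently the map phase runs, which in turn affects the processing speed of the whole Job.

For 1 GB of data, starting eight MapTasks can improve the cluster's concurrent processing capacity. But would starting eight MapTasks for 1 KB of data improve cluster performance? Is more MapTask parallelism always better? Which factors determine MapTask parallelism?

1.2 How MapTask parallelism is determined

Data block: a block is the unit into which HDFS physically divides data.

Data split: a split divides the input only logically; it does not break the data into pieces on disk.

Data splits and MapTask parallelism

(1) The map-phase parallelism of a Job is determined by the number of splits the client computes when it submits the Job.

(2) Each split is assigned to one MapTask instance, and these instances run in parallel.

(3) By default, split size = block size.

(4) Splitting does not consider the data set as a whole; each file is split individually.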

2. Job Submission Source Code and Split Source Code in Detail

2.1 Job submission source code

2.1.1 Start from the waitForCompletion function

public boolean waitForCompletion(boolean verbose) throws IOException, InterruptedException, ClassNotFoundException {

// Check the state: the job can be submitted only while state is DEFINE, in which case submit() is called

if (this.state == Job.JobState.DEFINE) {

this.submit();

}

if (verbose) {

this.monitorAndPrintJob();

} else {

int completionPollIntervalMillis = getCompletionPollInterval(this.cluster.getConf());

while(!this.isComplete()) {

try {

Thread.sleep((long)completionPollIntervalMillis);

} catch (InterruptedException var4) {

}

}

}

return this.isSuccessful();

}

2.1.2 Step into the submit() method

public void submit() throws IOException, InterruptedException, ClassNotFoundException {

this.ensureState(Job.JobState.DEFINE); // confirm that the job is in the submittable DEFINE state; otherwise it cannot be submitted

this.setUseNewAPI(); // select the new API

this.connect(); // step into connect(): a MapReduce job connects to the cluster through the connect() method of the Job class

final JobSubmitter submitter = this.getJobSubmitter(this.cluster.getFileSystem(), this.cluster.getClient()); // once connect() has finished, create the submitter

this.status = (JobStatus)this.ugi.doAs(new PrivilegedExceptionAction<JobStatus>() {

public JobStatus run() throws IOException, InterruptedException, ClassNotFoundException {

return submitter.submitJobInternal(Job.this, Job.this.cluster); // the core method is submitJobInternal(), the internal submission method that implements all of the job submission logic

}

});

this.state = Job.JobState.RUNNING; // the state changes after submission

LOG.info("The url to track the job: " + this.getTrackingURL());

}

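2.1.3 Step into the connect() method

When a MapReduce job is submitted, the connection to the cluster is made by Job's connect() method, which in effect builds the Cluster instance cluster.

Cluster is a utility for connecting to a MapReduce cluster and provides methods for obtaining information about it.

Inside Cluster there is client, an instance of the client communication protocol ClientProtocol that is used to talk to the cluster. It is constructed by the create() method of a ClientProtocolProvider.

Inside create(), Hadoop 2.x provides two kinds of ClientProtocol: YARNRunner for YARN mode and LocalJobRunner for local mode. Cluster relies on one of them to communicate with the cluster.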

private synchronized void connect() throws IOException, InterruptedException, ClassNotFoundException {

if (this.cluster == null) { // Cluster provides methods for obtaining MapReduce cluster information

this.cluster = (Cluster)this.ugi.doAs(new PrivilegedExceptionAction<Cluster>() {

public Cluster run() throws IOException, InterruptedException, ClassNotFoundException {

return new Cluster(Job.this.getConfiguration());

}

});

}

}

2.1.4 Step into the Cluster() constructor

public Cluster(Configuration conf) throws IOException { // the one-argument constructor is called first and delegates to the two-argument constructor

this((InetSocketAddress)null, conf);

}

public Cluster(InetSocketAddress jobTrackAddr, Configuration conf) throws IOException {

this.fs = null;

this.sysDir = null;

this.stagingAreaDir = null;

this.jobHistoryDir = null;

this.conf = conf;

this.ugi = UserGroupInformation.getCurrentUser();

this.initialize(jobTrackAddr, conf); // the most important call: initialize()

}

// The two Cluster fields to watch are the protocol provider clientProtocolProvider (a ClientProtocolProvider) and the ClientProtocol instance client

private void initialize(InetSocketAddress jobTrackAddr, Configuration conf) throws IOException {

synchronized(frameworkLoader) {

Iterator var4 = frameworkLoader.iterator();

while(var4.hasNext()) {

ClientProtocolProvider provider = (ClientProtocolProvider)var4.next();

LOG.debug("Trying ClientProtocolProvider : " + provider.getClass().getName());

ClientProtocol clientProtocol = null;

try {

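if (jobTrackAddr == null) { // each provider checks the configuration: if YARN is not configured, a LocalJobRunner is built and the MR job runs locally; if YARN is configured, a YARNRunner is built and the MR job runs on the YARN cluster

clientProtocol = provider.create(conf);

} else {

clientProtocol = provider.create(jobTrackAddr, conf); // the ClientProtocol client is obtained by calling the create() method of a ClientProtocolProvider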

}

if (clientProtocol == null) {

LOG.debug("Cannot pick " + provider.getClass().getName() + " as the ClientProtocolProvider - returned null protocol");

} else {

this.clientProtocolProvider = provider;

this.client = clientProtocol;

LOG.debug("Picked " + provider.getClass().getName() + " as the ClientProtocolProvider");

break;

}

} catch (Exception var9) {

LOG.info("Failed to use " + provider.getClass().getName() + " due to error: ", var9);

}

}

}

if (null == this.clientProtocolProvider || null == this.client) {

throw new IOException("Cannot initialize Cluster. Please check your configuration for mapreduce.framework.name and the correspond server addresses.");

}

}

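2.1.5 Step into the submitJobInternal() method

This is the job's internal submission method; it submits the job to the cluster.

JobStatus submitJobInternal(Job job, Cluster cluster) throws ClassNotFoundException, InterruptedException, IOException {

this.checkSpecs(job); // check the output specification: an exception is thrown if the output path already exists

Configuration conf = job.getConfiguration(); // conf holds the settings from the cluster's XML configuration files

addMRFrameworkToDistributedCache(conf); // add the MR framework to the distributed cache

Path jobStagingArea = JobSubmissionFiles.getStagingDir(cluster, conf); // get the staging directory where the resources needed for submission are stored temporarily

InetAddress ip = InetAddress.getLocalHost();

if (ip != null) { // record the IP address and host name of the submitting host and store them in conf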

this.submitHostAddress = ip.getHostAddress();

this.submitHostName = ip.getHostName();

conf.set("mapreduce.job.submithostname", this.submitHostName);

conf.set("mapreduce.job.submithostaddress", this.submitHostAddress);

}

JobID jobId = this.submitClient.getNewJobID(); // obtain a new JobID

job.setJobID(jobId); // set the JobID on the job

Path submitJobDir = new Path(jobStagingArea, jobId.toString()); // the job submission directory; the two arguments are joined into one path

JobStatus status = null; // job status

JobStatus var24;

try {

conf.set("mapreduce.job.user.name", UserGroupInformation.getCurrentUser().getShortUserName());

conf.set("hadoop.http.filter.initializers", "org.apache.hadoop.yarn.server.webproxy.amfilter.AmFilterInitializer");

conf.set("mapreduce.job.dir", submitJobDir.toString());

LOG.debug("Configuring job " + jobId + " with " + submitJobDir + " as the submit dir");

TokenCache.obtainTokensForNamenodes(job.getCredentials(), new Path[]{submitJobDir}, conf);

this.populateTokenCache(conf, job.getCredentials());

if (TokenCache.getShuffleSecretKey(job.getCredentials()) == null) {

KeyGenerator keyGen;

try {

keyGen = KeyGenerator.getInstance("HmacSHA1");

keyGen.init(64);

} catch (NoSuchAlgorithmException var19) {

throw new IOException("Error generating shuffle secret key", var19);

}

SecretKey shuffleKey = keyGen.generateKey();

TokenCache.setShuffleSecretKey(shuffleKey.getEncoded(), job.getCredentials());

}

if (CryptoUtils.isEncryptedSpillEnabled(conf)) {

conf.setInt("mapreduce.am.max-attempts", 1);

LOG.warn("Max job attempts set to 1 since encrypted intermediatedata spill is enabled");

}

this.copyAndConfigureFiles(job, submitJobDir); // copy the jar to the cluster

Path submitJobFile = JobSubmissionFiles.getJobConfPath(submitJobDir);

LOG.debug("Creating splits at " + this.jtFs.makeQualified(submitJobDir));

int maps = this.writeSplits(job, submitJobDir); // compute the splits and generate the split plan files

conf.setInt("mapreduce.job.maps", maps);

LOG.info("number of splits:" + maps);

String queue = conf.get("mapreduce.job.queuename", "default");

AccessControlList acl = this.submitClient.getQueueAdmins(queue);

conf.set(QueueManager.toFullPropertyName(queue, QueueACL.ADMINISTER_JOBS.getAclName()), acl.getAclString());

TokenCache.cleanUpTokenReferral(conf);

if (conf.getBoolean("mapreduce.job.token.tracking.ids.enabled", false)) {

ArrayList<String> trackingIds = new ArrayList();

Iterator var14 = job.getCredentials().getAllTokens().iterator();

while(var14.hasNext()) {

Token<? extends TokenIdentifier> t = (Token)var14.next();

trackingIds.add(t.decodeIdentifier().getTrackingId());

}

conf.setStrings("mapreduce.job.token.tracking.ids", (String[])trackingIds.toArray(new String[trackingIds.size()]));

}

ReservationId reservationId = job.getReservationId();

if (reservationId != null) {

conf.set("mapreduce.job.reservation.id", reservationId.toString());

}

this.writeConf(conf, submitJobFile);

this.printTokens(jobId, job.getCredentials()); // the actual job submission starts here

status = this.submitClient.submitJob(jobId, submitJobDir.toString(), job.getCredentials());

if (status == null) {

throw new IOException("Could not launch job");

}

var24 = status;

} finally {

if (status == null) {

LOG.info("Cleaning up the staging area " + submitJobDir);

if (this.jtFs != null && submitJobDir != null) {

this.jtFs.delete(submitJobDir, true);

}

}

}

return var24;

}

2.2 Splits

2.2.1 Step into writeSplits(job, submitJobDir), which computes the splits and generates the split plan files

private int writeSplits(JobContext job, Path jobSubmitDir) throws IOException, InterruptedException, ClassNotFoundException {

JobConf jConf = (JobConf)job.getConfiguration();

int maps;

if (jConf.getUseNewMapper()) {

maps = this.writeNewSplits(job, jobSubmitDir);

} else {

maps = this.writeOldSplits(jConf, jobSubmitDir);

}

return maps;

}

When the new API is in use, this calls writeNewSplits(job, jobSubmitDir).

Inside writeNewSplits(job, jobSubmitDir), an instance input of type InputFormat is created:

private <T extends InputSplit> int writeNewSplits(JobContext job, Path jobSubmitDir) throws IOException, InterruptedException, ClassNotFoundException {

Configuration conf = job.getConfiguration();

InputFormat<?, ?> input = (InputFormat)ReflectionUtils.newInstance(job.getInputFormatClass(), conf);

List<InputSplit> splits = input.getSplits(job);

T[] array = (InputSplit[])((InputSplit[])splits.toArray(new InputSplit[splits.size()]));

Arrays.sort(array, new JobSubmitter.SplitComparator());

JobSplitWriter.createSplitFiles(jobSubmitDir, conf, jobSubmitDir.getFileSystem(conf), array);

return array.length;

}

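The main roles of InputFormat:

Validate the input specification of the Job.

Split the input files into multiple logical InputSplits (splits), each of which corresponds to one map task (MapTask).

Provide a RecordReader that turns the data of a split into key/value pairs according to the format's rules.

input calls the getSplits() method:

List<InputSplit> splits = input.getSplits(job);

2.2.2 Step into getSplits(job) in the FileInputFormat class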

public List<InputSplit> getSplits(JobContext job) throws IOException {

StopWatch sw = (new StopWatch()).start();

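long minSize = Math.max(this.getFormatMinSplitSize(), getMinSplitSize(job)); // getFormatMinSplitSize() returns 1 in FileInputFormat; getMinSplitSize(job) returns the configured mapreduce.input.fileinputformat.split.minsize, so minSize is 1 by default

long maxSize = getMaxSplitSize(job); // returns the configured mapreduce.input.fileinputformat.split.maxsize, which defaults to Long.MAX_VALUE

List<InputSplit> splits = new ArrayList(); // collects the generated splits

List<FileStatus> files = this.listStatus(job);

Iterator var9 = files.iterator();

// iterate over all input files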

while(true) {

while(true) {

while(var9.hasNext()) {

FileStatus file = (FileStatus)var9.next();

Path path = file.getPath(); // get the file path

long length = file.getLen(); // get the file size

if (length != 0L) {

BlockLocation[] blkLocations;

if (file instanceof LocatedFileStatus) {

blkLocations = ((LocatedFileStatus)file).getBlockLocations();

} else {

FileSystem fs = path.getFileSystem(job.getConfiguration());

blkLocations = fs.getFileBlockLocations(file, 0L, length);

}

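if (this.isSplitable(job, path)) { // check whether the file can be split

long blockSize = file.getBlockSize(); // get the block size: 32 MB by default in the local environment; on a YARN cluster 128 MB in newer Hadoop 2.x versions and 64 MB in older versions

long splitSize = this.computeSplitSize(blockSize, minSize, maxSize); // compute the logical split size, which defaults to the block size

long bytesRemaining; // bytes of the file not yet assigned to a split

int blkIndex;

for(bytesRemaining = length; (double)bytesRemaining / (double)splitSize > 1.1D; bytesRemaining -= splitSize) { // before each cut, check whether the remainder is more than SPLIT_SLOP (1.1) times the split size; if not, stop cutting and keep the remainder as a single split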

blkIndex = this.getBlockIndex(blkLocations, length - bytesRemaining);

splits.add(this.makeSplit(path, length - bytesRemaining, splitSize, blkLocations[blkIndex].getHosts(), blkLocations[blkIndex].getCachedHosts()));

}

if (bytesRemaining != 0L) {

blkIndex = this.getBlockIndex(blkLocations, length - bytesRemaining);

splits.add(this.makeSplit(path, length - bytesRemaining, bytesRemaining, blkLocations[blkIndex].getHosts(), blkLocations[blkIndex].getCachedHosts()));

}

} else {

splits.add(this.makeSplit(path, 0L, length, blkLocations[0].getHosts(), blkLocations[0].getCachedHosts()));

}

} else {

splits.add(this.makeSplit(path, 0L, length, new String[0]));

}

}

job.getConfiguration().setLong("mapreduce.input.fileinputformat.numinputfiles", (long)files.size());

sw.stop();

if (LOG.isDebugEnabled()) {

LOG.debug("Total # of splits generated by getSplits: " + splits.size() + ", TimeTaken: " + sw.now(TimeUnit.MILLISECONDS));

}

return splits;

}

}

}

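(1) The program first locates the directory where the data is stored.

(2) It then iterates over every file in the directory and plans its splits.

(3) Take the first file, 1.txt (300 MB in this example):

(3.1) Get the file size, written here as the pseudocode fs.sizeOf(1.txt); the source above uses file.getLen().

(3.2) Compute the split size:

computeSplitSize(blockSize, minSize, maxSize) = Math.max(minSize, Math.min(maxSize, blockSize)) = blockSize = 128 MB

(3.3) By default, split size = block size.

(3.4) Start splitting: the first split is 1.txt 0 to 128 MB, the second is 1.txt 128 to 256 MB, and the third is 1.txt 256 to 300 MB.

Before each cut, check whether the remainder is greater than 1.1 times the split size; if it is not, the remainder becomes a single split.

(3.5) Write the split information to a split plan file.

(3.6) The core of the splitting process is carried out in the getSplits() method.

(3.7) An InputSplit only records the metadata of a split, such as the start position, the length, and the list of nodes that hold the data.

(4) The split plan file is submitted to YARN, where MRAppMaster uses it to work out how many MapTasks to start.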
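The split-size formula in step (3.2) can be tried out in isolation. The following is a minimal sketch in plain Java, not the Hadoop class itself; the class name SplitSizeSketch and the sample values are assumptions made for illustration.

```java
// Minimal sketch of the split-size formula; SplitSizeSketch is a hypothetical name.
class SplitSizeSketch {
    static final long MB = 1024L * 1024L;

    // Mirrors the formula quoted above: max(minSize, min(maxSize, blockSize)).
    static long computeSplitSize(long blockSize, long minSize, long maxSize) {
        return Math.max(minSize, Math.min(maxSize, blockSize));
    }

    public static void main(String[] args) {
        long block = 128 * MB;
        // Default-like values: the split size equals the block size.
        System.out.println(computeSplitSize(block, 1L, Long.MAX_VALUE) / MB);
        // A maxSize below the block size shrinks the split.
        System.out.println(computeSplitSize(block, 1L, 64 * MB) / MB);
        // A minSize above the block size enlarges the split.
        System.out.println(computeSplitSize(block, 256 * MB, Long.MAX_VALUE) / MB);
    }
}
```

In other words, lowering maxSize below the block size makes splits smaller, and raising minSize above the block size makes them larger.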

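3. FileInputFormat Split Mechanism

3.1 Split mechanism

(1) Files are split simply according to their content length.

(2) The split size defaults to the block size.

(3) Splitting does not consider the data set as a whole; each file is split individually.

3.2 Example

Two input files:

1.txt 320M

2.txt 10M

After FileInputFormat's split mechanism has run, the resulting split information is:

1.txt.split1-- 0~128

1.txt.split2-- 128~256

1.txt.split3-- 256~320

2.txt.split1-- 0~10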
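The example above can be reproduced with a small simulation of the splitting loop. This is a sketch under simplifying assumptions: sizes are plain numbers in MB, block locations are ignored, only non-empty files are handled, and the class name SplitPlanSketch is hypothetical.

```java
import java.util.ArrayList;
import java.util.List;

// Simplified simulation of the per-file splitting loop; not the Hadoop implementation.
class SplitPlanSketch {
    static final double SPLIT_SLOP = 1.1; // same slop factor as in getSplits()

    // Returns "name start~end" ranges for one non-empty file.
    static List<String> planSplits(String name, long length, long splitSize) {
        List<String> splits = new ArrayList<>();
        long remaining = length;
        // Keep cutting full-size splits while the remainder exceeds 1.1 x splitSize.
        while ((double) remaining / splitSize > SPLIT_SLOP) {
            long start = length - remaining;
            splits.add(name + " " + start + "~" + (start + splitSize));
            remaining -= splitSize;
        }
        // Whatever is left becomes the final split.
        if (remaining != 0) {
            splits.add(name + " " + (length - remaining) + "~" + length);
        }
        return splits;
    }

    public static void main(String[] args) {
        List<String> all = new ArrayList<>();
        all.addAll(planSplits("1.txt", 320, 128));
        all.addAll(planSplits("2.txt", 10, 128));
        all.forEach(System.out::println);
        System.out.println("MapTasks: " + all.size());
    }
}
```

Because of the 1.1 slop factor, a 129 MB file would stay a single split in this sketch instead of being cut into 128 MB and 1 MB.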

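4. CombineTextInputFormat Split Mechanism

The framework's default TextInputFormat plans splits per file: no matter how small a file is, it becomes a separate split that is handed to its own MapTask. With a large number of small files this produces a large number of MapTasks, and processing becomes extremely inefficient.

4.1 Use case

CombineTextInputFormat is meant for scenarios with many small files. It can logically plan multiple small files into a single split, so that multiple small files can be handled by one MapTask.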

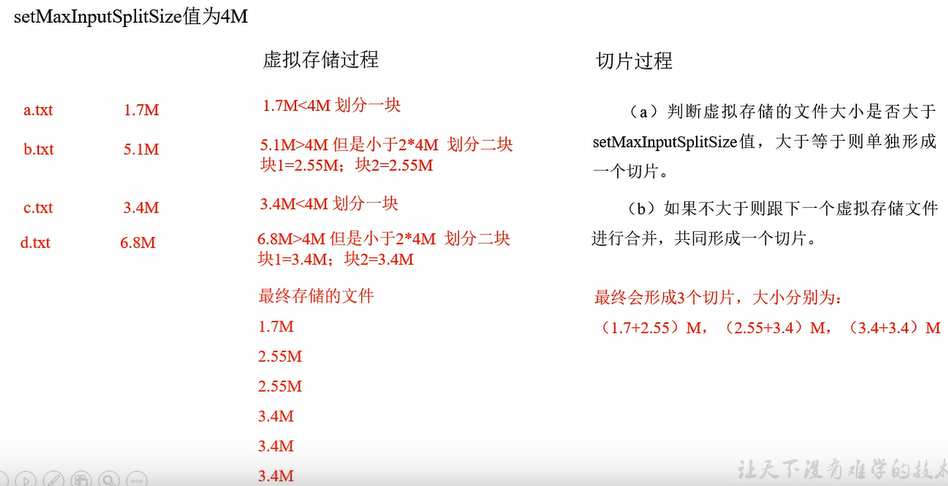

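4.2 Setting the maximum virtual-storage split size

CombineTextInputFormat.setMaxInputSplitSize(job, 4194304); // 4 MB

Note: it is best to choose the maximum virtual-storage split size based on the actual sizes of the small files.

4.3 Split mechanism

Generating splits consists of two parts: the virtual storage phase and the split phase.

(1) Check whether the size of a virtual storage file is greater than or equal to the setMaxInputSplitSize value; if so, it forms a split on its own.

(2) If not, it is merged with the next virtual storage file, and together they form one split.

The split logic is custom, and records are read into key/value pairs by CombineFileRecordReader.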
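The split phase described in (1) and (2) can be sketched as a greedy merge. This is only an illustration of the simplified description above, not the actual CombineFileInputFormat code: it assumes merging continues until the accumulated size reaches the maximum, and the class name CombineMergeSketch and the chunk sizes (in KB) are hypothetical.

```java
import java.util.ArrayList;
import java.util.List;

// Illustration of the simplified split phase; not the Hadoop implementation.
class CombineMergeSketch {
    // Greedily merges virtual storage chunks into splits of at least maxSize.
    static List<List<Long>> merge(long[] chunks, long maxSize) {
        List<List<Long>> splits = new ArrayList<>();
        List<Long> current = new ArrayList<>();
        long currentSize = 0;
        for (long chunk : chunks) {
            current.add(chunk);
            currentSize += chunk;
            if (currentSize >= maxSize) { // large enough: close this split
                splits.add(current);
                current = new ArrayList<>();
                currentSize = 0;
            }
        }
        if (!current.isEmpty()) { // leftover chunks form the last split
            splits.add(current);
        }
        return splits;
    }

    public static void main(String[] args) {
        long[] chunks = {1700, 2550, 2550, 3400, 3400, 3400}; // hypothetical sizes in KB
        System.out.println(merge(chunks, 4096)); // maximum of 4 MB expressed in KB
    }
}
```

With these hypothetical chunk sizes and a 4 MB maximum, the six chunks are grouped pairwise into three splits.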

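5. CombineTextInputFormat Hands-On Example

5.1 Without any changes, run the wordcount example program and observe that the number of splits is 4.

5.2 Add the code below to wordcountDriver, run the program, and observe that the number of splits is 3.

5.3 The code to add:

job.setInputFormatClass(CombineTextInputFormat.class);

CombineTextInputFormat.setMaxInputSplitSize(job, 4194304); // 4 MB

5.4 The run produces three splits.

5.5 Add the code below to wordcountDriver, run the program, and observe that the number of splits is 1.

5.6 The code to add:

// If no InputFormat is set, TextInputFormat.class is used by default

job.setInputFormatClass(CombineTextInputFormat.class);

// Set the maximum virtual-storage split size to 20 MB

CombineTextInputFormat.setMaxInputSplitSize(job, 20971520); // 20 MB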

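6. FileInputFormat Implementation Classes

6.1 TextInputFormat

TextInputFormat is the default FileInputFormat implementation. It reads records line by line. The key is the byte offset of the start of the line within the whole file, of type LongWritable; the value is the content of the line without any line terminators (newline or carriage return), of type Text.

For example, a split contains the following four records:

Rich learning form

Intelligent learning engine

Learning more convenient

Form the real demand for more close to the enterprise

Each record is represented as one of the following key/value pairs:

(0,Rich learning form)

(19,Intelligent learning engine)

(47,Learning more convenient)

(72,Form the real demand for more close to the enterprise)

Key/value reading: uses LineRecordReader.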
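The keys in the example are simply running byte offsets. The following minimal sketch recomputes them in plain Java, assuming each line ends with a single '\n' and the text is UTF-8; the class name LineOffsetSketch is hypothetical and no Hadoop classes are involved.

```java
import java.nio.charset.StandardCharsets;
import java.util.ArrayList;
import java.util.List;

// Recomputes the starting byte offset of each line, as used for TextInputFormat keys.
class LineOffsetSketch {
    static List<Long> lineOffsets(String text) {
        List<Long> offsets = new ArrayList<>();
        long offset = 0;
        for (String line : text.split("\n")) {
            offsets.add(offset);
            // Advance by the line's byte length plus one byte for the '\n' terminator.
            offset += line.getBytes(StandardCharsets.UTF_8).length + 1;
        }
        return offsets;
    }

    public static void main(String[] args) {
        String text = "Rich learning form\n"
                + "Intelligent learning engine\n"
                + "Learning more convenient\n"
                + "Form the real demand for more close to the enterprise\n";
        System.out.println(lineOffsets(text)); // prints [0, 19, 47, 72]
    }
}
```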

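6.2 KeyValueTextInputFormat

Each line is one record, divided by a separator into a key and a value. The separator can be set in the driver class with conf.set(KeyValueLineRecordReader.KEY_VALUE_SEPERATOR, "\t"); which sets it to the tab character (\t).

For example, the input is a split containing four records, where --> denotes a (horizontal) tab:

line1-->Rich learning form

line2-->Intelligent learning engine

line3-->Learning more convenient

line4-->Form the real demand for more close to the enterprise

Each record is represented as one of the following key/value pairs:

(line1,Rich learning form)

(line2,Intelligent learning engine)

(line3,Learning more convenient)

(line4,Form the real demand for more close to the enterprise)

Here the key is the Text sequence that precedes the tab on each line.

Key/value reading: uses KeyValueLineRecordReader.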
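The key/value division described in this section can be illustrated without Hadoop. The sketch below splits a line at the first separator character; treating a line without a separator as a key with an empty value is an assumption of this sketch, and the class name KeyValueSplitSketch is hypothetical.

```java
// Illustration of splitting a line into key and value at the first separator.
class KeyValueSplitSketch {
    static String[] splitKeyValue(String line, char separator) {
        int pos = line.indexOf(separator);
        if (pos < 0) {
            // Assumption: with no separator, the whole line is the key and the value is empty.
            return new String[] {line, ""};
        }
        return new String[] {line.substring(0, pos), line.substring(pos + 1)};
    }

    public static void main(String[] args) {
        String[] kv = splitKeyValue("line1\tRich learning form", '\t');
        System.out.println("(" + kv[0] + "," + kv[1] + ")"); // prints (line1,Rich learning form)
    }
}
```

Only the first separator divides the line, so any later tabs remain part of the value.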

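6.3 NLineInputFormat

With NLineInputFormat, the InputSplit handled by each map process is no longer divided by block but by the number of lines N specified for NLineInputFormat. That is, number of splits = total number of lines in the input file / N; if the division is not exact, number of splits = quotient + 1.

For example, given the following four records:

Rich learning form

Intelligent learning engine

Learning more convenient

Form the real demand for more close to the enterprise

If N is 2, each input split contains two lines and two MapTasks are started. The first MapTask, for example, receives:

(0,Rich learning form)

(19,Intelligent learning engine)

The keys and values here are the same as those produced by TextInputFormat; both use LineRecordReader.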
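The split-count rule above (quotient, plus one if there is a remainder) is a ceiling division. A minimal sketch in plain Java, with the hypothetical class name NLineSplitCountSketch:

```java
// Number of splits for a file with totalLines lines when each split holds n lines.
class NLineSplitCountSketch {
    static int splitCount(int totalLines, int n) {
        if (n <= 0) {
            throw new IllegalArgumentException("n must be positive");
        }
        // Ceiling division: quotient, plus one if there is a remainder.
        return (totalLines + n - 1) / n;
    }

    public static void main(String[] args) {
        System.out.println(splitCount(4, 2)); // prints 2
        System.out.println(splitCount(5, 2)); // prints 3
    }
}
```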

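7. Custom InputFormat

In enterprise development, the InputFormat types that ship with the Hadoop framework cannot cover every use case, so a custom InputFormat is sometimes needed to solve the actual problem.

The steps for a custom InputFormat are:

(1) Define a class that extends FileInputFormat.

(2) Rewrite the RecordReader so that it reads one complete file at a time and wraps it as a key/value pair.

(3) On output, use SequenceFileOutputFormat to write the merged file.

8. Custom InputFormat Hands-On Example

Both HDFS and MapReduce are very inefficient at handling small files, yet scenarios with large numbers of small files are hard to avoid, so a solution is needed. A custom InputFormat can be used to merge small files. (Externally there is one whole file, internally the original small files are still there, and MapTasks are saved.)

8.1 Requirements:

Merge multiple small files into one SequenceFile (SequenceFile is Hadoop's file format for storing key-value pairs in binary form). The SequenceFile stores multiple files, with the file path plus name as the key and the file content as the value.

(1) Input data

(2) Expected output file format

8.2 Steps:

(1) Define a class that extends FileInputFormat

Override isSplitable() to return false so that files are not split.

Override createRecordReader() to create and initialize the custom RecordReader object.

(2) Rewrite the RecordReader to read one complete file at a time and wrap it as a key/value pair

Use an IO stream to read one whole file into the value; because splitting is disabled, the entire content of the file ends up in the value.

Get the file path plus name and set it as the key.

(3) Set up the Driver

Set the input inputFormat

Set the output outputFormat

8.3 Example

8.3.1 Writing MyInputFromat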

package com.wn.inputformat;

import org.apache.hadoop.fs.Path;

import org.apache.hadoop.io.BytesWritable;

import org.apache.hadoop.io.Text;

import org.apache.hadoop.mapreduce.InputSplit;

import org.apache.hadoop.mapreduce.JobContext;

import org.apache.hadoop.mapreduce.RecordReader;

import org.apache.hadoop.mapreduce.TaskAttemptContext;

import org.apache.hadoop.mapreduce.lib.input.FileInputFormat;

import java.io.IOException;

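//Storage layout: the file path plus name is the key and the file content is the value; whole files are read through a stream as bytes

//The input key type is Text and the input value type is BytesWritable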

public class MyInputFromat extends FileInputFormat<Text, BytesWritable> {

@Override

protected boolean isSplitable(JobContext context, Path filename) {

return false; //a single file must not be split any further

}

@Override

public RecordReader<Text, BytesWritable> createRecordReader(InputSplit inputSplit, TaskAttemptContext taskAttemptContext) throws IOException, InterruptedException {

MyRecordReader myRecordReader = new MyRecordReader();

myRecordReader.initialize(inputSplit,taskAttemptContext);

return myRecordReader;

}

}

8.3.2 Writing MyRecordReader

package com.wn.inputformat;

import org.apache.hadoop.conf.Configuration;

import org.apache.hadoop.fs.FSDataInputStream;

import org.apache.hadoop.fs.FileSystem;

import org.apache.hadoop.fs.Path;

import org.apache.hadoop.io.BytesWritable;

import org.apache.hadoop.io.IOUtils;

import org.apache.hadoop.io.Text;

import org.apache.hadoop.mapreduce.InputSplit;

import org.apache.hadoop.mapreduce.RecordReader;

import org.apache.hadoop.mapreduce.TaskAttemptContext;

import org.apache.hadoop.mapreduce.lib.input.FileSplit;

import java.io.IOException;

public class MyRecordReader extends RecordReader<Text, BytesWritable> {

//Fields are added here as the methods below need them

FileSplit split;

Configuration configuration;

Text k=new Text();

BytesWritable v=new BytesWritable();

//Flag: true until the single record for this file has been emitted

boolean isProgress=true;

@Override

public void initialize(InputSplit inputSplit, TaskAttemptContext taskAttemptContext) throws IOException, InterruptedException {

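//Initialization: keep the split passed in as inputSplit (the original cast the still-null field to itself)

this.split=(FileSplit) inputSplit;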

configuration = taskAttemptContext.getConfiguration();

}

@Override

public boolean nextKeyValue() throws IOException, InterruptedException {

//Core logic: wrap the file into the key and the value

if (isProgress){

byte[] buf =new byte[(int) split.getLength()];

//Get the FileSystem object

Path path = split.getPath();

FileSystem fs = path.getFileSystem(configuration);

//Open the input stream

FSDataInputStream fis = fs.open(path);

//Copy the whole file into the buffer

IOUtils.readFully(fis,buf,0,buf.length);

//Wrap the bytes as the value

v.set(buf,0,buf.length);

//Set the key: path.toString() contains both the path and the file name

k.set(path.toString());

//Release resources

IOUtils.closeStream(fis);

isProgress=false; //this file has now been read completely

return true;

}

return false;

}

@Override

public Text getCurrentKey() throws IOException, InterruptedException {

return k;

}

@Override

public BytesWritable getCurrentValue() throws IOException, InterruptedException {

return v;

}

@Override

public float getProgress() throws IOException, InterruptedException {

return 0;

}

@Override

public void close() throws IOException {

}

}

8.3.3 Writing MySequenceFileMapper

package com.wn.inputformat;

import org.apache.hadoop.io.BytesWritable;

import org.apache.hadoop.io.Text;

import org.apache.hadoop.mapreduce.Mapper;

import java.io.IOException;

public class MySequenceFileMapper extends Mapper<Text, BytesWritable,Text,BytesWritable> {

@Override

protected void map(Text key, BytesWritable value, Context context) throws IOException, InterruptedException {

//Each call receives a whole file, not a single line

context.write(key,value);

}

}

8.3.4 Writing MySequenceFileReaducer

package com.wn.inputformat;

import org.apache.hadoop.io.BytesWritable;

import org.apache.hadoop.io.Text;

import org.apache.hadoop.mapreduce.Reducer;

import java.io.IOException;

public class MySequenceFileReaducer extends Reducer<Text, BytesWritable,Text,BytesWritable> {

@Override

protected void reduce(Text key, Iterable<BytesWritable> values, Context context) throws IOException, InterruptedException {

//Write out in a loop; each value is the full content of one file

for (BytesWritable value:values)

context.write(key,value);

}

}

8.3.5 Writing MySequenceFileDriver

package com.wn.inputformat;

import org.apache.hadoop.conf.Configuration;

import org.apache.hadoop.fs.Path;

import org.apache.hadoop.io.BytesWritable;

import org.apache.hadoop.io.Text;

import org.apache.hadoop.mapreduce.Job;

import org.apache.hadoop.mapreduce.lib.input.FileInputFormat;

import org.apache.hadoop.mapreduce.lib.output.FileOutputFormat;

import org.apache.hadoop.mapreduce.lib.output.SequenceFileOutputFormat;

import java.io.IOException;

public class MySequenceFileDriver {

public static void main(String[] args) throws IOException, ClassNotFoundException, InterruptedException {

//Set the input and output paths to match the actual paths on your own machine

args=new String[]{

"E:\\123","E:\\123\\output123"

};

//Get the job object

Configuration conf = new Configuration();

Job job = Job.getInstance(conf);

//Set the jar location and register the custom mapper and reducer

job.setJarByClass(MySequenceFileDriver.class);

job.setMapperClass(MySequenceFileMapper.class);

job.setReducerClass(MySequenceFileReaducer.class);

//Set the input InputFormat

job.setInputFormatClass(MyInputFromat.class);

//Set the output OutputFormat (the default is TextOutputFormat)

job.setOutputFormatClass(SequenceFileOutputFormat.class);

//Set the key/value types of the map output

job.setMapOutputKeyClass(Text.class);

job.setMapOutputValueClass(BytesWritable.class);

//Set the key/value types of the final output

job.setOutputKeyClass(Text.class);

job.setOutputValueClass(BytesWritable.class);

//Set the input and output paths

FileInputFormat.setInputPaths(job,new Path(args[0]));

FileOutputFormat.setOutputPath(job,new Path(args[1]));

//Submit the job

job.waitForCompletion(true);

}

}