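Abstract: With Hadoop installed, the next step, as when learning any new language, is to write a "hello world!" program. After reading some learning materials, I wrote one by imitation. For a C# programmer, writing a Java program that calls the Hadoop libraries and runs on Linux is a new experience.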

Hadoop NCDC weather data:

http://down.51cto.com/data/1127100

Data description:

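Characters 15-19 hold the year.

Characters 45-50 hold the temperature: a leading + means above zero and a leading - means below zero. A value of 9999 is not a real temperature; it marks an invalid (missing) reading.

The quality code at position 50 must be one of the digits 0, 1, 4, 5, or 9.

Note that the mapper code in this post reads these fields at different zero-based offsets: the year from 15-19, the sign and temperature from 87-92, and the quality code from 92.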
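The field layout can be tried out without Hadoop. The sketch below assumes the zero-based offsets used by the mapper later in this post (year at 15-19, signed temperature at 87-92, quality code at 92). The class name `NcdcRecordParser`, the helper `sampleLine`, and the synthetic records are hypothetical and exist only for illustration; real records carry many more fields.

```java
import java.util.Arrays;

class NcdcRecordParser {
    // Sentinel used in the data set for a missing temperature.
    static final int MISSING = 9999;

    /** Returns {year, temperature}, or null if the reading is missing or has a rejected quality code. */
    static int[] parse(String line) {
        int year = Integer.parseInt(line.substring(15, 19));
        // Skip a leading '+', mirroring the mapper; a leading '-' is parsed as part of the number.
        int start = line.charAt(87) == '+' ? 88 : 87;
        int temperature = Integer.parseInt(line.substring(start, 92));
        String quality = line.substring(92, 93);
        if (temperature == MISSING || !quality.matches("[01459]")) {
            return null;
        }
        return new int[] {year, temperature};
    }

    /** Builds a synthetic 93-character record (assumption: all other positions are filled with '0'). */
    static String sampleLine(String year, String signedTemp, char quality) {
        char[] chars = new char[93];
        Arrays.fill(chars, '0');
        year.getChars(0, 4, chars, 15);        // 4-digit year at offsets 15..18
        signedTemp.getChars(0, 5, chars, 87);  // sign plus 4 digits at offsets 87..91
        chars[92] = quality;                   // quality code at offset 92
        return new String(chars);
    }

    public static void main(String[] args) {
        int[] reading = parse(sampleLine("1950", "-0011", '1'));
        System.out.println(reading[0] + " " + reading[1]);
        // A 9999 reading is rejected, so parse returns null here.
        System.out.println(parse(sampleLine("1950", "+9999", '1')) == null);
    }
}
```

Returning null for rejected records keeps the sketch short; the mapper achieves the same effect by simply not writing an output pair.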

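1. Writing the code

Create a new project named MaxTemperature, add a lib folder, and copy the Hadoop jar files into it (one option is to export all the jars from the directory obtained by extracting hadoop-1.2.1-1.x86_64.rpm). Select all the jars under lib, right-click, choose Build Path, and add them to the project.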

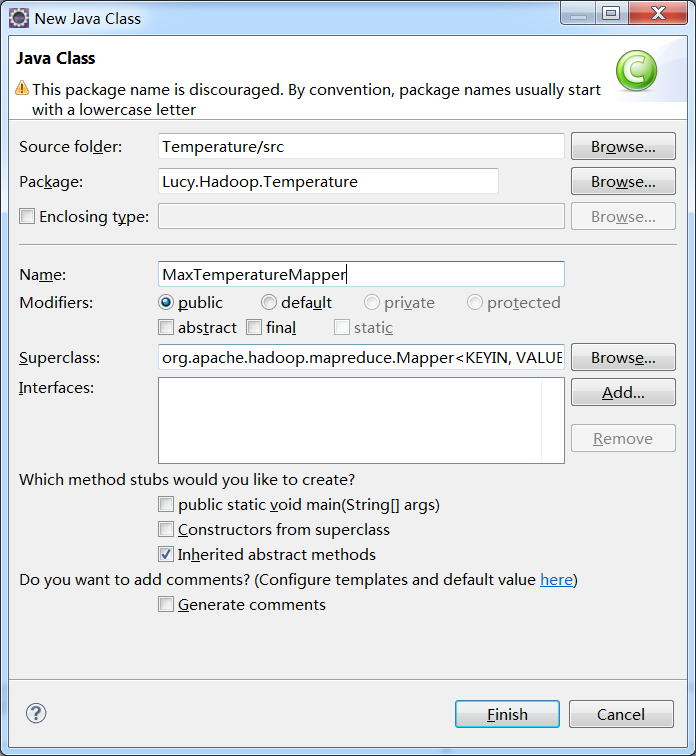

src -> New -> Class: create a Mapper class that extends Hadoop's Mapper class:

Code:

    package Lucy.Hadoop.Temperature;

    import java.io.IOException;

    import org.apache.hadoop.io.IntWritable;
    import org.apache.hadoop.io.LongWritable;
    import org.apache.hadoop.io.Text;
    import org.apache.hadoop.mapreduce.Mapper;

    public class MaxTemperatureMapper extends Mapper<LongWritable, Text, Text, IntWritable> {

        // Sentinel value that marks a missing temperature reading.
        private static final int MISSING = 9999;

        @Override
        protected void map(LongWritable key, Text value, Context context)
                throws IOException, InterruptedException {
            String line = value.toString();
            String year = line.substring(15, 19);

            // Skip a leading '+' before parsing; a leading '-' is kept as part of the number.
            int airTemperature;
            if (line.charAt(87) == '+') {
                airTemperature = Integer.parseInt(line.substring(88, 92));
            } else {
                airTemperature = Integer.parseInt(line.substring(87, 92));
            }

            // Emit (year, temperature) only for present readings with an accepted quality code.
            String quality = line.substring(92, 93);
            if (airTemperature != MISSING && quality.matches("[01459]")) {
                context.write(new Text(year), new IntWritable(airTemperature));
            }
        }
    }

src -> New -> Class: create a Reducer class that extends Hadoop's Reducer class:

    package Lucy.Hadoop.Temperature;

    import java.io.IOException;

    import org.apache.hadoop.io.IntWritable;
    import org.apache.hadoop.io.Text;
    import org.apache.hadoop.mapreduce.Reducer;

    public class MaxTemperatureReducer extends Reducer<Text, IntWritable, Text, IntWritable> {

        @Override
        protected void reduce(Text keyin, Iterable<IntWritable> values, Context context)
                throws IOException, InterruptedException {
            // Start below every possible reading and keep the largest value seen for this year.
            int maxValue = Integer.MIN_VALUE;
            for (IntWritable value : values) {
                maxValue = Math.max(maxValue, value.get());
            }
            context.write(keyin, new IntWritable(maxValue));
        }
    }
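To see how the mapper output reaches the reducer, the following Hadoop-free sketch imitates the flow in memory: (year, temperature) pairs are grouped by year, roughly as the framework does between the two phases, and each group is reduced to its maximum. The class `LocalMaxTemperature`, its `shuffle` helper, and the sample pairs are made up for illustration and are not part of the Hadoop API.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

class LocalMaxTemperature {
    /** Groups (year, temperature) pairs by year, sorted by key, as a stand-in for the shuffle step. */
    static Map<String, List<Integer>> shuffle(String[][] mapOutput) {
        Map<String, List<Integer>> grouped = new TreeMap<>();
        for (String[] pair : mapOutput) {
            grouped.computeIfAbsent(pair[0], k -> new ArrayList<>())
                   .add(Integer.parseInt(pair[1]));
        }
        return grouped;
    }

    /** Same logic as the reducer: start from Integer.MIN_VALUE and keep the maximum. */
    static int reduce(Iterable<Integer> values) {
        int maxValue = Integer.MIN_VALUE;
        for (int value : values) {
            maxValue = Math.max(maxValue, value);
        }
        return maxValue;
    }

    public static void main(String[] args) {
        // Hypothetical mapper output: one {year, temperature} pair per valid record.
        String[][] mapOutput = {
            {"1949", "111"}, {"1950", "0"}, {"1949", "78"}, {"1950", "22"}, {"1950", "-11"}
        };
        for (Map.Entry<String, List<Integer>> entry : shuffle(mapOutput).entrySet()) {
            System.out.println(entry.getKey() + "\t" + reduce(entry.getValue()));
        }
    }
}
```

With these sample pairs the program prints 1949 with 111 and 1950 with 22, one tab-separated pair per line.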

src -> New -> Class: create the MaxTemperature class as the main program:

    package Lucy.Hadoop.Temperature;

    import org.apache.hadoop.conf.Configuration;
    import org.apache.hadoop.fs.Path;
    import org.apache.hadoop.io.IntWritable;
    import org.apache.hadoop.io.Text;
    import org.apache.hadoop.mapreduce.Job;
    import org.apache.hadoop.mapreduce.lib.input.FileInputFormat;
    import org.apache.hadoop.mapreduce.lib.output.FileOutputFormat;

    public class MaxTemperature {

        public static void main(String[] args) throws Exception {
            if (args.length != 2) {
                System.err.println("Usage: MaxTemperature <input path> <output path>");
                System.exit(-1);
            }

            Configuration conf = new Configuration();
            conf.set("mapred.jar", "./MaxTemperature.jar");
            conf.set("hadoop.job.user", "hadoop");
            //conf.addResource("classpath:/hadoop/core");

            Job job = new Job(conf, "calc Temperature");
            job.setJarByClass(MaxTemperature.class);
            //job.setJobName("Max Temperature");

            // Input file (or directory) and output directory come from the command line.
            FileInputFormat.addInputPath(job, new Path(args[0]));
            FileOutputFormat.setOutputPath(job, new Path(args[1]));

            job.setMapperClass(MaxTemperatureMapper.class);
            job.setReducerClass(MaxTemperatureReducer.class);

            // Types of the (year, max temperature) pairs written by the reducer.
            job.setOutputKeyClass(Text.class);
            job.setOutputValueClass(IntWritable.class);

            // Block until the job finishes; exit code 0 on success, 1 on failure.
            System.exit(job.waitForCompletion(true) ? 0 : 1);
        }
    }

2. Build

Right-click the project, choose Export, select JAR file, set the destination path, and export the jar.

3. Run

Syntax: hadoop jar <jar> [mainClass] args…

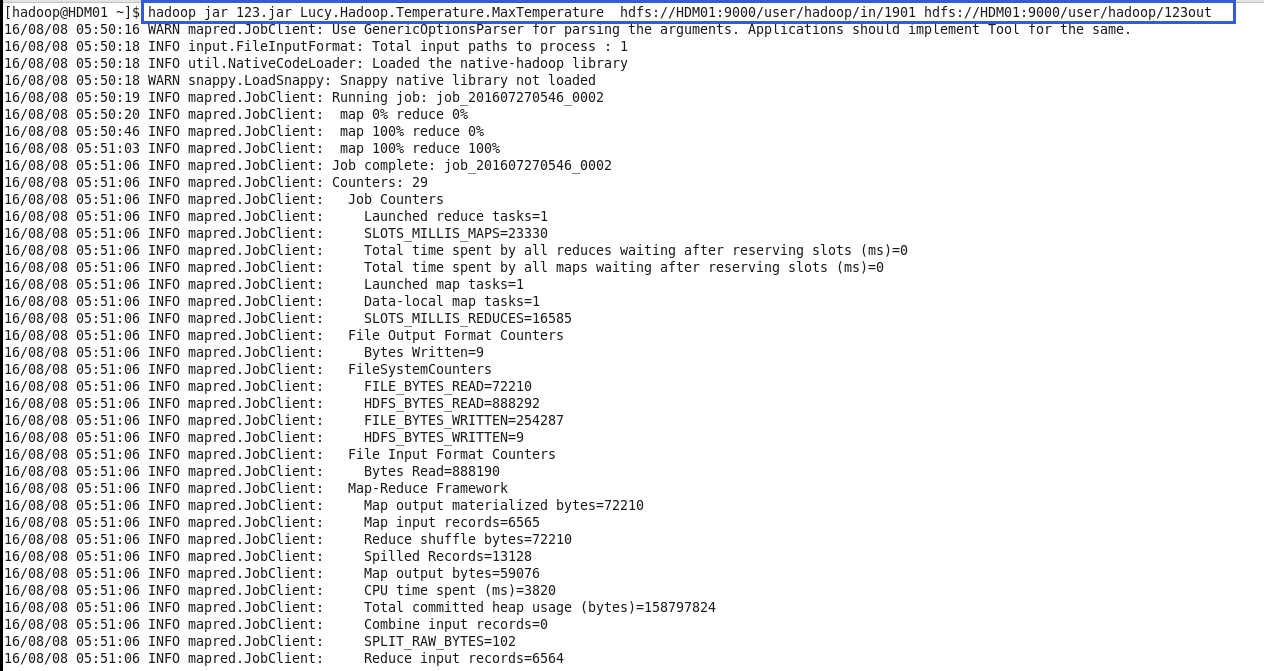

Run on the Linux system:

hadoop jar 123.jar Lucy.Hadoop.Temperature.MaxTemperature hdfs://HDM01:9000/usr/hadoop/in/sample.txt hdfs://HDM01:9000/usr/hadoop/123out
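The two path arguments in this command are full HDFS URIs. As a rough illustration of their parts, the sketch below splits one with the standard java.net.URI class: the scheme selects the file system, the host and port are presumably the NameNode address of this cluster, and the rest is the path inside HDFS. The class name `HdfsPathParts` is hypothetical.

```java
import java.net.URI;

class HdfsPathParts {
    /** Splits a location into "scheme | host:port | path" for display. */
    static String describe(String location) {
        URI uri = URI.create(location);
        return uri.getScheme() + " | " + uri.getHost() + ":" + uri.getPort() + " | " + uri.getPath();
    }

    public static void main(String[] args) {
        // Input path taken from the command in this post.
        System.out.println(describe("hdfs://HDM01:9000/usr/hadoop/in/sample.txt"));
    }
}
```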

4. View the results

5. HDFS file notes

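Use the hadoop fs command to add the input file to HDFS. This has to be done before running the job, because the job reads /usr/hadoop/in/sample.txt: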

hadoop fs -copyFromLocal sample.txt /usr/hadoop/in