During training, solver.prototxt points to train_val.prototxt as the network definition:

./build/tools/caffe train -solver ./models/bvlc_reference_caffenet/solver.prototxt

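To extract features with the network trained above, use deploy.prototxt as the network definition. Besides the two arguments shown here, extract_features also expects the blob name(s) to extract, the output database path(s), the number of mini-batches and the database type:

./build/tools/extract_features.bin models/bvlc_reference_caffenet.caffemodel models/bvlc_reference_caffenet/deploy.prototxt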

Caffe finetune

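1. Prepare the fine-tuning data

Put the images used for fine-tuning in the image folder.

train.txt lists the absolute path of each training image, followed by its class label.

test.txt lists the absolute path of each test image, followed by its class label.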

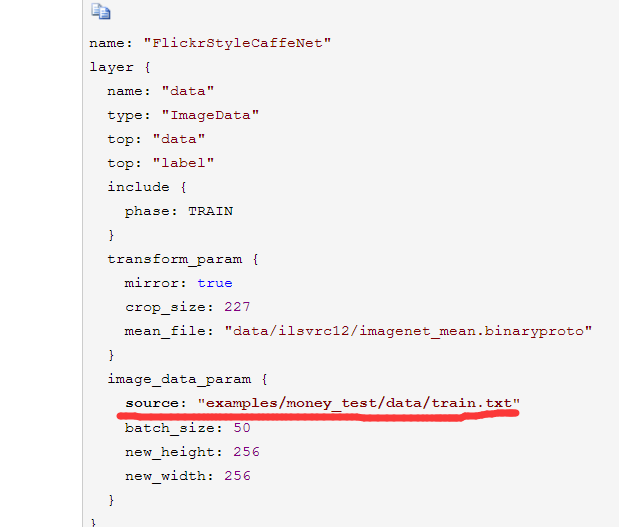
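The two list files can be generated with a short script. The following is a minimal sketch, assuming the image folder contains one subdirectory per class (this layout, the helper names `build_listing` and `split_and_write`, and the 80/20 split are all assumptions, not something the steps above prescribe). Each output line has the form `<absolute path> <integer label>`.

```python
import os
import random

IMAGE_EXTS = {".jpg", ".jpeg", ".png", ".bmp"}


def build_listing(image_root):
    """Return (lines, class_names); each line is '<absolute path> <label>'.

    Assumes image_root/<class_name>/<image files> (hypothetical layout).
    Labels are assigned 0, 1, 2, ... in alphabetical order of class names.
    """
    class_names = sorted(
        d for d in os.listdir(image_root)
        if os.path.isdir(os.path.join(image_root, d))
    )
    lines = []
    for label, name in enumerate(class_names):
        class_dir = os.path.join(image_root, name)
        for fname in sorted(os.listdir(class_dir)):
            if os.path.splitext(fname)[1].lower() in IMAGE_EXTS:
                path = os.path.abspath(os.path.join(class_dir, fname))
                lines.append(f"{path} {label}")
    return lines, class_names


def split_and_write(lines, out_dir, test_fraction=0.2, seed=0):
    """Shuffle the lines, then write train.txt and test.txt into out_dir."""
    lines = list(lines)
    random.Random(seed).shuffle(lines)
    n_test = int(len(lines) * test_fraction)
    test, train = lines[:n_test], lines[n_test:]
    for name, subset in (("train.txt", train), ("test.txt", test)):
        with open(os.path.join(out_dir, name), "w") as f:
            f.write("\n".join(subset) + ("\n" if subset else ""))
    return len(train), len(test)
```

Typical use would be `lines, names = build_listing("image")` followed by `split_and_write(lines, ".")`.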

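2. Modify train_val.prototxt

Change the last layer:

a) Change the number of outputs to match the number of classes in the new dataset.

b) Increase the learning rate of the last layer, for example by raising the bias lr_mult from 2 to 20.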
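As an illustration of the two changes to the last layer, the sketch below renders a prototxt fragment for a replacement classifier layer. The layer name `fc8_flickr`, the bottom blob `fc7`, the 20 outputs, the weight multiplier of 10 and the Gaussian filler are assumptions chosen for the example, not values taken from these notes. Giving the layer a new name matters because Caffe copies pretrained weights by layer name, so a renamed layer starts from fresh initialisation.

```python
def finetune_last_layer(name, bottom, num_output,
                        weight_lr_mult=10, bias_lr_mult=20):
    """Render a prototxt InnerProduct layer for the new classifier."""
    return f"""layer {{
  name: "{name}"
  type: "InnerProduct"
  bottom: "{bottom}"
  top: "{name}"
  # weights: larger lr_mult so the freshly initialised layer learns faster
  param {{ lr_mult: {weight_lr_mult} decay_mult: 1 }}
  # biases: twice the weight multiplier, no weight decay
  param {{ lr_mult: {bias_lr_mult} decay_mult: 0 }}
  inner_product_param {{
    num_output: {num_output}
    weight_filler {{ type: "gaussian" std: 0.01 }}
  }}
}}"""


# Hypothetical values: a 20-class dataset on top of CaffeNet's fc7.
print(finetune_last_layer("fc8_flickr", "fc7", num_output=20))
```

The printed text can be pasted over the original last layer in train_val.prototxt; any layers that consume its output (loss, accuracy) then need their bottom updated to the new name.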

3. Modify solver.prototxt

a) Reduce stepsize from 100000 to 20000

b) Reduce max_iter from 450000 to 50000

c) Reduce base_lr from 0.01 to 0.001

d) Reduce test_iter from 1000 to 100
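To see what these solver values imply, the sketch below evaluates Caffe's "step" learning-rate policy, lr = base_lr * gamma^floor(iter / stepsize). It assumes lr_policy is "step" and gamma is 0.1; neither is stated in the list above, so treat both as assumptions.

```python
def step_lr(iteration, base_lr=0.001, gamma=0.1, stepsize=20000):
    """Learning rate under the 'step' policy at a given iteration."""
    return base_lr * gamma ** (iteration // stepsize)


# With max_iter = 50000 the rate drops twice during fine-tuning.
for it in (0, 19999, 20000, 40000, 49999):
    print(it, step_lr(it))
```

With the smaller stepsize the learning rate decays earlier, which suits a short fine-tuning run that starts from already useful weights.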

4. Run the fine-tuning command

caffe % ./build/tools/caffe train -solver models/finetune_flickr_style/solver.prototxt -weights models/bvlc_reference_caffenet/bvlc_reference_caffenet.caffemodel -gpu 0

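Note: each layer has two learning rates, one for the weights and one for the biases. The bias learning rate is usually twice the weight learning rate.

weight_decay is the weight-decay coefficient: it adds a regularization term to the loss to prevent overfitting.

The global weight_decay is multiplied by the parameter-specific decay_mult.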
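The way the global solver values combine with the per-parameter multipliers can be sketched as follows. The function name `effective_rates` and the example numbers (base_lr 0.001, weight_decay 0.0005, bias decay_mult 0) are assumptions for illustration.

```python
def effective_rates(base_lr, weight_decay, lr_mult, decay_mult):
    """Return (learning rate, weight decay) actually applied to one parameter blob."""
    return base_lr * lr_mult, weight_decay * decay_mult


# Weights: lr_mult 1, decay_mult 1. Biases: lr_mult 2, decay_mult 0.
weights = effective_rates(0.001, 0.0005, lr_mult=1, decay_mult=1)
biases = effective_rates(0.001, 0.0005, lr_mult=2, decay_mult=0)
print("weights:", weights)
print("biases: ", biases)
```

Setting lr_mult to 0 for a layer freezes it, since its effective learning rate becomes zero.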

Example solver.prototxt settings:

rmsprop:

    net: "examples/mnist/lenet_train_test.prototxt"
    test_iter: 100
    test_interval: 500
    # The base learning rate, momentum and the weight decay of the network.
    base_lr: 0.01
    momentum: 0.0
    weight_decay: 0.0005
    # The learning rate policy
    lr_policy: "inv"
    gamma: 0.0001
    power: 0.75
    display: 100
    max_iter: 10000
    snapshot: 5000
    snapshot_prefix: "examples/mnist/lenet_rmsprop"
    solver_mode: GPU
    type: "RMSProp"
    rms_decay: 0.98

Adam:

    net: "examples/mnist/lenet_train_test.prototxt"
    test_iter: 100
    test_interval: 500
    # All parameters are from the cited paper above
    base_lr: 0.001
    momentum: 0.9
    momentum2: 0.999
    # Since Adam dynamically changes the learning rate, we set the base learning
    # rate to a fixed value
    lr_policy: "fixed"
    display: 100
    # The maximum number of iterations
    max_iter: 10000
    snapshot: 5000
    snapshot_prefix: "examples/mnist/lenet"
    type: "Adam"
    solver_mode: GPU

multistep:

    net: "examples/mnist/lenet_train_test.prototxt"
    test_iter: 100
    test_interval: 500
    # The base learning rate, momentum and the weight decay of the network.
    base_lr: 0.01
    momentum: 0.9
    weight_decay: 0.0005
    # The learning rate policy
    lr_policy: "multistep"
    gamma: 0.9
    stepvalue: 5000
    stepvalue: 7000
    stepvalue: 8000
    stepvalue: 9000
    stepvalue: 9500
    # Display every 100 iterations
    display: 100
    # The maximum number of iterations
    max_iter: 10000
    # Snapshot intermediate results
    snapshot: 5000
    snapshot_prefix: "examples/mnist/lenet_multistep"
    # Solver mode: CPU or GPU
    solver_mode: GPU
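The "inv" and "multistep" policies used in these solvers can be evaluated by hand. The sketch below follows Caffe's documented formulas: "inv" returns base_lr * (1 + gamma * iter)^(-power), and "multistep" multiplies by gamma once for every stepvalue that has been reached. The function names are hypothetical; the default arguments are the values from the solver settings above.

```python
from bisect import bisect_right


def inv_lr(iteration, base_lr=0.01, gamma=0.0001, power=0.75):
    """'inv' policy: smooth polynomial decay of the learning rate."""
    return base_lr * (1.0 + gamma * iteration) ** (-power)


def multistep_lr(iteration, base_lr=0.01, gamma=0.9,
                 stepvalues=(5000, 7000, 8000, 9000, 9500)):
    """'multistep' policy: decay by gamma at each listed iteration."""
    steps_reached = bisect_right(stepvalues, iteration)
    return base_lr * gamma ** steps_reached


for it in (0, 5000, 9500, 10000):
    print(it, inv_lr(it), multistep_lr(it))
```

The "fixed" policy in the Adam solver simply keeps base_lr unchanged, since Adam adapts the per-parameter step size itself.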

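The group parameter of a convolution layer splits the input and output channels into groups, which makes channel-wise convolution possible.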
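To make the effect of group concrete, the sketch below computes the weight blob shape of a grouped convolution and lists which input channels each output channel reads. The helper `group_conv_layout` is hypothetical; it only mirrors the bookkeeping (both channel counts must be divisible by group, and each filter spans in_channels / group channels) and performs no actual convolution.

```python
def group_conv_layout(in_channels, num_output, kernel_h, kernel_w, group=1):
    """Return (weight_shape, connections) for a grouped convolution.

    connections maps each output channel to the input channels it reads.
    """
    if in_channels % group or num_output % group:
        raise ValueError("in_channels and num_output must be divisible by group")
    in_per_group = in_channels // group
    out_per_group = num_output // group
    weight_shape = (num_output, in_per_group, kernel_h, kernel_w)
    connections = {}
    for out_ch in range(num_output):
        g = out_ch // out_per_group  # group this output channel belongs to
        connections[out_ch] = list(range(g * in_per_group, (g + 1) * in_per_group))
    return weight_shape, connections


# group == in_channels == num_output gives a channel-wise convolution:
# every output channel reads exactly one input channel.
shape, conn = group_conv_layout(4, 4, 3, 3, group=4)
print(shape, conn)
```

With group set to 1 every output channel reads all input channels, which is the ordinary convolution.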