I. Requirements

data: merge the records that share the same name into one record and compute the average score for each name.

```
tom 12
小明 23
jerry 45
哈2 34
tom 45
tom 65
小明 34
```
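To make the expected result concrete before bringing in Hadoop, here is a minimal plain-Java sketch of the same computation. The class name `AverageSketch` and the method `averageByName` are hypothetical and are not part of the project; the sketch assumes whitespace-separated `name score` records and uses truncating integer division, as the reducer in this post does.

```java
import java.util.Map;
import java.util.TreeMap;

public class AverageSketch {

    // Hypothetical helper: groups "name score" lines by name and
    // returns the truncated integer average for each name.
    public static Map<String, Integer> averageByName(String[] lines) {
        Map<String, int[]> totals = new TreeMap<>(); // name -> {sum, count}
        for (String line : lines) {
            String[] parts = line.trim().split("\\s+");
            if (parts.length != 2) {
                continue; // skip blank or malformed lines
            }
            int[] t = totals.computeIfAbsent(parts[0], k -> new int[2]);
            t[0] += Integer.parseInt(parts[1]);
            t[1]++;
        }
        Map<String, Integer> averages = new TreeMap<>();
        for (Map.Entry<String, int[]> e : totals.entrySet()) {
            averages.put(e.getKey(), e.getValue()[0] / e.getValue()[1]);
        }
        return averages;
    }

    public static void main(String[] args) {
        String[] data = {
            "tom 12", "小明 23", "jerry 45", "哈2 34", "tom 45", "tom 65", "小明 34"
        };
        System.out.println(averageByName(data));
    }
}
```

For the sample data, tom averages (12 + 45 + 65) / 3 = 40 and 小明 averages (23 + 34) / 2 = 28 after truncation, which matches the job output shown later.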

II. Implementation

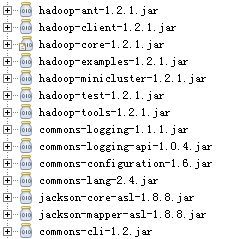

1. Import the jar packages

2. Write the code

2.1 Writing the Map class

```java
package com.wzy.studentscore;

import java.io.IOException;
import java.util.StringTokenizer;

import org.apache.hadoop.io.IntWritable;
import org.apache.hadoop.io.LongWritable;
import org.apache.hadoop.io.Text;
import org.apache.hadoop.mapreduce.Mapper;

/**
 * @author:吴兆跃
 * @version Created: 2018-06-05 17:58:55
 * Class description: emits one (name, score) pair per input record.
 */
public class ScoreMap extends Mapper<LongWritable, Text, Text, IntWritable> {

    @Override
    public void map(LongWritable key, Text value, Context context)
            throws IOException, InterruptedException {
        String line = value.toString(); // one line of input data
        // Split on newlines so that each "name score" record stays in one token.
        // (Splitting on " " here would break the record apart and make the
        // second nextToken() below throw NoSuchElementException.)
        StringTokenizer tokenizerArticle = new StringTokenizer(line, "\n");

        System.out.println("key: " + key);
        System.out.println("value-line: " + line);
        System.out.println("count: " + tokenizerArticle.countTokens());

        while (tokenizerArticle.hasMoreTokens()) {
            String token = tokenizerArticle.nextToken();
            System.out.println("token: " + token);

            // The default delimiters (space, tab, \n, \r, \f) separate name and score.
            StringTokenizer tokenizerLine = new StringTokenizer(token);
            String strName = tokenizerLine.nextToken();  // the name
            String strScore = tokenizerLine.nextToken(); // the score

            Text name = new Text(strName);
            int scoreInt = Integer.parseInt(strScore);
            context.write(name, new IntWritable(scoreInt));
        }
        System.out.println("context: " + context.toString());
    }
}
```
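The per-line parsing done in `map` can be tried outside Hadoop. The following is a minimal sketch, assuming each record is a name and a score separated by whitespace; `ParseSketch` and `parseRecord` are hypothetical names, and returning `null` for a malformed line is an illustrative choice, not what the mapper above does (it would throw on such a line).

```java
import java.util.StringTokenizer;

public class ParseSketch {

    // Hypothetical helper: returns {name, score}, or null when the line
    // does not contain exactly two whitespace-separated tokens.
    public static String[] parseRecord(String line) {
        // With no delimiter argument, StringTokenizer splits on
        // space, tab, newline, carriage return and form feed.
        StringTokenizer tokens = new StringTokenizer(line);
        if (tokens.countTokens() != 2) {
            return null;
        }
        return new String[] { tokens.nextToken(), tokens.nextToken() };
    }

    public static void main(String[] args) {
        String[] record = parseRecord("tom 12");
        System.out.println(record[0] + " -> " + Integer.parseInt(record[1]));
        System.out.println(parseRecord("") == null); // blank line is rejected
    }
}
```

Checking `countTokens()` first avoids a `NoSuchElementException` on blank or incomplete lines, which may matter if the input file ends with an empty line.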

2.2 Writing the Reduce class

```java
package com.wzy.studentscore;

import java.io.IOException;
import java.util.Iterator;

import org.apache.hadoop.io.IntWritable;
import org.apache.hadoop.io.Text;
import org.apache.hadoop.mapreduce.Reducer;

/**
 * @author:吴兆跃
 * @version Created: 2018-06-05 18:50:28
 * Class description: averages all scores received for one name.
 */
public class ScoreReduce extends Reducer<Text, IntWritable, Text, IntWritable> {

    @Override
    public void reduce(Text key, Iterable<IntWritable> values, Context context)
            throws IOException, InterruptedException {
        int sum = 0;
        int count = 0;
        Iterator<IntWritable> iterator = values.iterator();
        while (iterator.hasNext()) {
            sum += iterator.next().get(); // accumulate the sum
            count++;
        }
        // Integer division: the average is truncated, e.g. 122 / 3 = 40.
        int average = sum / count;
        context.write(key, new IntWritable(average));
    }
}
```

2.3 Writing the driver class

```java
package com.wzy.studentscore;

import org.apache.hadoop.conf.Configured;
import org.apache.hadoop.fs.Path;
import org.apache.hadoop.io.IntWritable;
import org.apache.hadoop.io.Text;
import org.apache.hadoop.mapreduce.Job;
import org.apache.hadoop.mapreduce.lib.input.FileInputFormat;
import org.apache.hadoop.mapreduce.lib.input.TextInputFormat;
import org.apache.hadoop.mapreduce.lib.output.FileOutputFormat;
import org.apache.hadoop.mapreduce.lib.output.TextOutputFormat;
import org.apache.hadoop.util.Tool;
import org.apache.hadoop.util.ToolRunner;

/**
 * @author:吴兆跃
 * @version Created: 2018-06-05 18:59:29
 * Class description: configures and submits the score-averaging job.
 */
public class ScoreProcess extends Configured implements Tool {

    public static void main(String[] args) throws Exception {
        // The input and output paths are hard-coded; command-line args are ignored.
        int ret = ToolRunner.run(new ScoreProcess(), new String[]{"input", "output"});
        System.exit(ret);
    }

    @Override
    public int run(String[] args) throws Exception {
        Job job = new Job(getConf());
        job.setJarByClass(ScoreProcess.class);
        job.setJobName("score_process");

        job.setOutputKeyClass(Text.class);
        job.setOutputValueClass(IntWritable.class);

        job.setMapperClass(ScoreMap.class);
        // Caution: reusing the averaging reducer as a combiner is only safe when
        // every score for a name is combined in one pass (as with this small,
        // single-split input); in general an average of averages is not the average.
        job.setCombinerClass(ScoreReduce.class);
        job.setReducerClass(ScoreReduce.class);

        job.setInputFormatClass(TextInputFormat.class);
        job.setOutputFormatClass(TextOutputFormat.class);

        FileInputFormat.setInputPaths(job, new Path(args[0]));
        FileOutputFormat.setOutputPath(job, new Path(args[1]));

        boolean success = job.waitForCompletion(true);
        return success ? 0 : 1;
    }
}
```
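One point about `job.setCombinerClass(ScoreReduce.class)` deserves a closer look. A combiner may run on only part of the values for a key, and averaging is not associative, so averaging partial averages can give a different answer than averaging all the scores at once. The plain-Java sketch below (no Hadoop involved) illustrates this with tom's three scores; the class and method names are hypothetical, and the split of the scores into two partial groups is an invented scenario, not something observed in the runs in this post.

```java
public class CombinerSketch {

    // Truncated integer average, mirroring what ScoreReduce computes.
    public static int average(int... values) {
        int sum = 0;
        for (int v : values) {
            sum += v;
        }
        return sum / values.length;
    }

    // Combiner-safe partial result: carries {sum, count} instead of an average.
    public static int[] partial(int... values) {
        int sum = 0;
        for (int v : values) {
            sum += v;
        }
        return new int[] { sum, values.length };
    }

    // Final step: merges all partial {sum, count} pairs, then divides once.
    public static int mergeAndAverage(int[]... partials) {
        int sum = 0;
        int count = 0;
        for (int[] p : partials) {
            sum += p[0];
            count += p[1];
        }
        return sum / count;
    }

    public static void main(String[] args) {
        // All of tom's scores averaged in one pass: 122 / 3 = 40
        System.out.println(average(12, 45, 65));
        // Hypothetical split {12} and {45, 65}: average(12, 55) = 67 / 2 = 33
        System.out.println(average(average(12), average(45, 65)));
        // Sum/count pairs merged first, divided once: 122 / 3 = 40
        System.out.println(mergeAndAverage(partial(12), partial(45, 65)));
    }
}
```

With the small sample file used here there is a single map task and all records for a name are combined together, so the job still produces the right averages. For larger inputs, the simplest remedy is probably to drop the `setCombinerClass` call; carrying a sum and a count through the combiner would require a value type that can hold both.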

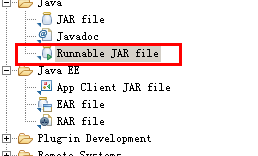

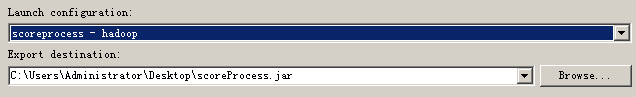

3. Package the jar

III. Debugging

1. Running locally with java

```
root@master:/home/wzy/software/hadoop-0.20.2/testfile/ScoreProcess# ls
input  part  scoreProcess.jar
root@master:/home/wzy/software/hadoop-0.20.2/testfile/ScoreProcess# java -jar scoreProcess.jar
Jun 06, 2018 5:28:26 AM org.apache.hadoop.util.NativeCodeLoader <clinit>
WARNING: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable
Jun 06, 2018 5:28:26 AM org.apache.hadoop.mapreduce.lib.input.FileInputFormat listStatus
INFO: Total input paths to process : 1
Jun 06, 2018 5:28:26 AM org.apache.hadoop.io.compress.snappy.LoadSnappy <clinit>
WARNING: Snappy native library not loaded
Jun 06, 2018 5:28:27 AM org.apache.hadoop.mapred.JobClient monitorAndPrintJob
INFO: Running job: job_local1903623691_0001
Jun 06, 2018 5:28:27 AM org.apache.hadoop.mapred.LocalJobRunner$Job$MapTaskRunnable run
INFO: Starting task: attempt_local1903623691_0001_m_000000_0
Jun 06, 2018 5:28:27 AM org.apache.hadoop.mapred.LocalJobRunner$Job run
INFO: Waiting for map tasks
Jun 06, 2018 5:28:27 AM org.apache.hadoop.util.ProcessTree isSetsidSupported
INFO: setsid exited with exit code 0
Jun 06, 2018 5:28:27 AM org.apache.hadoop.mapred.Task initialize
INFO: Using ResourceCalculatorPlugin : org.apache.hadoop.util.LinuxResourceCalculatorPlugin@5ddf714a
Jun 06, 2018 5:28:27 AM org.apache.hadoop.mapred.MapTask runNewMapper
INFO: Processing split: file:/home/wzy/software/hadoop-0.20.2/testfile/ScoreProcess/input/data:0+72
Jun 06, 2018 5:28:27 AM org.apache.hadoop.mapred.MapTask$MapOutputBuffer <init>
INFO: io.sort.mb = 100
Jun 06, 2018 5:28:27 AM org.apache.hadoop.mapred.MapTask$MapOutputBuffer <init>
INFO: data buffer = 79691776/99614720
Jun 06, 2018 5:28:27 AM org.apache.hadoop.mapred.MapTask$MapOutputBuffer <init>
INFO: record buffer = 262144/327680
key: 0
value-line: tom 12
count: 1
token: tom 12
context: org.apache.hadoop.mapreduce.Mapper$Context@41b9bff9
key: 8
value-line: 小明 23
count: 1
token: 小明 23
context: org.apache.hadoop.mapreduce.Mapper$Context@41b9bff9
key: 20
value-line: jerry 45
count: 1
token: jerry 45
context: org.apache.hadoop.mapreduce.Mapper$Context@41b9bff9
key: 31
value-line: 哈2 34
count: 1
token: 哈2 34
context: org.apache.hadoop.mapreduce.Mapper$Context@41b9bff9
key: 41
value-line: tom 45
count: 1
token: tom 45
context: org.apache.hadoop.mapreduce.Mapper$Context@41b9bff9
key: 50
value-line: tom 65
count: 1
token: tom 65
context: org.apache.hadoop.mapreduce.Mapper$Context@41b9bff9
key: 60
value-line: 小明 34
count: 1
token: 小明 34
context: org.apache.hadoop.mapreduce.Mapper$Context@41b9bff9
```

```
root@master:/home/wzy/software/hadoop-0.20.2/testfile/ScoreProcess# ls
input  output  part  scoreProcess.jar
root@master:/home/wzy/software/hadoop-0.20.2/testfile/ScoreProcess# cd output/
root@master:/home/wzy/software/hadoop-0.20.2/testfile/ScoreProcess/output# ls
part-r-00000  _SUCCESS
root@master:/home/wzy/software/hadoop-0.20.2/testfile/ScoreProcess/output# cat part-r-00000
jerry 45
tom 40
哈2 34
小明 28
```

2. Running on Hadoop HDFS

2.1 Upload the data file to HDFS

```
root@master:/home/wzy/software/hadoop-0.20.2/testfile/ScoreProcess# ../../bin/hadoop fs -mkdir /user
root@master:/home/wzy/software/hadoop-0.20.2/testfile/ScoreProcess# ../../bin/hadoop fs -mkdir /user/root
root@master:/home/wzy/software/hadoop-0.20.2/testfile/ScoreProcess# ../../bin/hadoop fs -mkdir /user/root/input
root@master:/home/wzy/software/hadoop-0.20.2/testfile/ScoreProcess# ../../bin/hadoop fs -put input/data /user/root/input
root@master:/home/wzy/software/hadoop-0.20.2/testfile/ScoreProcess# ../../bin/hadoop fs -ls /user/root/input
Found 1 items
-rw-r--r--   1 root supergroup         72 2018-06-06 04:00 /user/root/input/data
```

2.2 Run the job

```
root@master:/home/wzy/software/hadoop-0.20.2/testfile/ScoreProcess# ../../bin/hadoop jar scoreProcess.jar
18/06/06 04:00:52 INFO input.FileInputFormat: Total input paths to process : 1
18/06/06 04:00:53 INFO mapred.JobClient: Running job: job_201806060358_0002
18/06/06 04:00:54 INFO mapred.JobClient:  map 0% reduce 0%
18/06/06 04:01:02 INFO mapred.JobClient:  map 100% reduce 0%
18/06/06 04:01:14 INFO mapred.JobClient:  map 100% reduce 100%
18/06/06 04:01:16 INFO mapred.JobClient: Job complete: job_201806060358_0002
18/06/06 04:01:16 INFO mapred.JobClient: Counters: 17
18/06/06 04:01:16 INFO mapred.JobClient:   Map-Reduce Framework
18/06/06 04:01:16 INFO mapred.JobClient:     Combine output records=4
18/06/06 04:01:16 INFO mapred.JobClient:     Spilled Records=8
18/06/06 04:01:16 INFO mapred.JobClient:     Reduce input records=4
18/06/06 04:01:16 INFO mapred.JobClient:     Reduce output records=4
18/06/06 04:01:16 INFO mapred.JobClient:     Map input records=7
18/06/06 04:01:16 INFO mapred.JobClient:     Map output records=7
18/06/06 04:01:16 INFO mapred.JobClient:     Map output bytes=65
18/06/06 04:01:16 INFO mapred.JobClient:     Reduce shuffle bytes=52
18/06/06 04:01:16 INFO mapred.JobClient:     Combine input records=7
18/06/06 04:01:16 INFO mapred.JobClient:     Reduce input groups=4
18/06/06 04:01:16 INFO mapred.JobClient:   FileSystemCounters
18/06/06 04:01:16 INFO mapred.JobClient:     HDFS_BYTES_READ=72
18/06/06 04:01:16 INFO mapred.JobClient:     FILE_BYTES_WRITTEN=136
18/06/06 04:01:16 INFO mapred.JobClient:     FILE_BYTES_READ=52
18/06/06 04:01:16 INFO mapred.JobClient:     HDFS_BYTES_WRITTEN=34
18/06/06 04:01:16 INFO mapred.JobClient:   Job Counters
18/06/06 04:01:16 INFO mapred.JobClient:     Launched map tasks=1
18/06/06 04:01:16 INFO mapred.JobClient:     Launched reduce tasks=1
18/06/06 04:01:16 INFO mapred.JobClient:     Data-local map tasks=1
```

2.3 View the result

```
root@master:/home/wzy/software/hadoop-0.20.2/testfile/ScoreProcess# ../../bin/hadoop fs -ls /user/root/output/
Found 2 items
drwxr-xr-x   - root supergroup          0 2018-06-06 04:00 /user/root/output/_logs
-rw-r--r--   1 root supergroup         34 2018-06-06 04:01 /user/root/output/part-r-00000
```

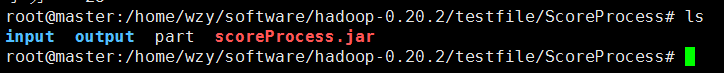

```
root@master:/home/wzy/software/hadoop-0.20.2/testfile/ScoreProcess# ../../bin/hadoop fs -get /user/root/output/part-r-00000 part
root@master:/home/wzy/software/hadoop-0.20.2/testfile/ScoreProcess# ls
input  output  part  scoreProcess.jar
root@master:/home/wzy/software/hadoop-0.20.2/testfile/ScoreProcess# cat part
jerry 45
tom 40
哈2 34
小明 28
```