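The functional requirements are:

1. Data collection: periodically crawl the web for hot words in the information technology field.

2. Data cleaning: clean the collected hot words, then use automatic classification and counting to build a categorized directory of IT hot words.

3. Hot-word explanation: automatically attach a Chinese explanation to each hot word (taken from Baidu Baike or Wikipedia).

4. Hot-word references: mark recent articles or news items that mention each hot word and build a directory of hyperlinks that users can click to visit.

5. Data visualization: ① show the hot words as a word cloud or hot-word chart; ② use a relationship graph to show how closely hot words are related.

6. Data report: export the full hot-word directory with its explanations as a Word report.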
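The counting part of requirement 2 can be looked at in isolation. The following is a minimal sketch, not the project's actual implementation: `top_hot_words` and its parameters are hypothetical names, and the token list is assumed to come from a word segmenter such as jieba.

```python
from collections import Counter


def top_hot_words(tokens, stopwords, n=2):
    """Return the n most frequent tokens that survive filtering.

    Hypothetical helper: `tokens` is any iterable of strings (for example
    the output of a word segmenter), `stopwords` is a set of words to skip.
    """
    counts = Counter(
        token for token in tokens
        # Skip stop words and single-character tokens.
        if token not in stopwords and len(token.strip()) > 1
    )
    return counts.most_common(n)


sample = ["数据", "的", "数据", "安全", "云", "安全", "数据"]
print(top_hot_words(sample, stopwords={"的"}))
```

Keeping the filter and the counter in one small function makes it easy to change the number of words kept per category without touching the crawler.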

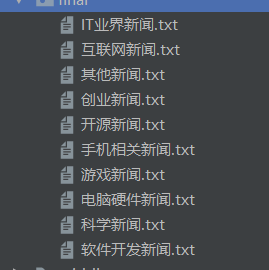

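This installment implements retrieving the hot words and their explanations for each category. The categories follow the news categories on cnblogs (博客园).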
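To make the category mapping concrete, here is a small sketch that lists the cnblogs category pages the script visits. The IDs are read off the loop in `loopNewsType` in the script; `CATEGORY_IDS` and `category_url` are hypothetical names that do not appear in the original code.

```python
# Category IDs as produced by the script's loop: 1101-1103, 1109-1114, 1199.
CATEGORY_IDS = [1101, 1102, 1103, *range(1109, 1115), 1199]


def category_url(category_id, page):
    """Build the listing URL for one page of a news category."""
    return f"https://news.cnblogs.com/n/c{category_id}?page={page}"


for cid in CATEGORY_IDS:
    print(category_url(cid, 1))
```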

The code is as follows:

import datetime
import json
import os
import re
import time
import urllib
from collections import Counter

import jieba
import pandas as pd
import pymysql
import requests
from lxml import etree

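def getKeyWords(filehandle, indexname):
    """Count word frequencies in the collected titles and save the top words."""
    print("getKeyWords")
    mystr = filehandle.read()
    filehandle.close()
    seg_list = jieba.cut(mystr)  # precise mode is the default
    # Load the stop-word list, one word per line
    # (assumes stopwords.txt is UTF-8 encoded).
    with open('stopwords.txt', encoding='utf-8') as stop_file:
        stopwords = {line.rstrip() for line in stop_file}
    c = Counter()
    for x in seg_list:
        # Skip stop words, single characters, and line breaks.
        if x not in stopwords and len(x) > 1 and x != '\r\n':
            c[x] += 1
    print('\nWord frequency results:')
    # Append the hot words to this category's file.
    with open("final/" + indexname + ".txt", "a+", encoding='utf-8') as f:
        for k, v in c.most_common(2):  # the two most frequent words
            print("%s:%d" % (k, v))
            f.write(k + '\n')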

def getDetail(href, title):
    # Placeholder: nothing is done with the article link yet.
    pass

def getEachPage(page, i, news_type):
    """Fetch one listing page of category i and append its titles to a file."""
    url = "https://news.cnblogs.com/n/c" + str(i) + "?page=" + str(page)
    r = requests.get(url)
    html = r.content.decode("utf-8")
    html1 = etree.HTML(html)
    href = html1.xpath('//h2[@class="news_entry"]/a/@href')
    title = html1.xpath('//h2[@class="news_entry"]/a/text()')
    indexname1 = html1.xpath('//div[@id = "guide"]/h3/text()')
    indexname = indexname1[0].replace(' ', '').replace('/', '')
    print(indexname)
    print(len(href))
    # Collect the titles of this page in the category's intermediate file.
    with open("middle/" + indexname + ".txt", "a+", encoding='utf-8') as file:
        # The original loop read 18 entries per page; slicing keeps that cap
        # without raising IndexError on shorter pages.
        for link, headline in zip(href[:18], title[:18]):
            print(link, headline)
            # Title and link of one news item.
            getDetail(link, headline)
            file.write(headline + '\n')
    print("Page: " + str(page))
    # After the last page (page 4), extract the hot words for this category.
    # Both labels ('一般' = general, '其他' = other) are handled the same way.
    if news_type in ('一般', '其他') and page == 4:
        file = open("middle/" + indexname + ".txt", "r", encoding='utf-8')
        getKeyWords(file, indexname)

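def getDiffPage(i):
    """Crawl pages 0-4 of one news category."""
    # Category 1199 has 86 pages of data and the others have 100, but only
    # pages 0-4 are crawled here. The label is passed on to getEachPage.
    news_type = '其他' if i == 1199 else '一般'  # 'other' vs. 'general'
    for page in range(0, 5):
        # Fetch the entries on each page.
        getEachPage(page, i, news_type)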

def loopNewsType():
    """Visit every news category by its numeric URL suffix."""
    for i in range(1101, 1111):
        if 1104 <= i <= 1109:
            i = i + 5  # maps 1104-1109 to category IDs 1109-1114
        elif i == 1110:
            i = 1199
        # Crawl the pages of this category.
        getDiffPage(i)

# Crawl the explanation of a hot word
def climingExplain(line):
    line1 = line.replace('\n', '')
    print(line1)
    url = "https://baike.baidu.com/item/" + str(line1)
    head = {
        'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/79.0.3945.130 Safari/537.36',
        'cookie':'BAIDUID=AB4524A16BFAFC491C2D9D7D4CAE56D0:FG=1; BIDUPSID=AB4524A16BFAFC491C2D9D7D4CAE56D0; PSTM=1563684388; MCITY=-253%3A; BDUSS=jZnQkVhbnBIZkNuZXdYd21jMG9VcjdoanlRfmFaTjJ-T1lKVTVYREkxVWp2V2RlSVFBQUFBJCQAAAAAAAAAAAEAAACTSbM~Z3JlYXTL3tGpwOTS9AAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAACMwQF4jMEBed; pcrightad9384=showed; H_PS_PSSID=1454_21120; delPer=0; PSINO=3; BDORZ=B490B5EBF6F3CD402E515D22BCDA1598; __yjsv5_shitong=1.0_7_a3331e3bd00d7cbd253c9e353f581eb2494f_300_1581332649909_58.243.250.219_d03e4deb; yjs_js_security_passport=069e28a2b81f7392e2f39969d08f61c07150cc18_1581332656_js; Hm_lvt_55b574651fcae74b0a9f1cf9c8d7c93a=1580800784,1581160267,1581268654,1581333414; BK_SEARCHLOG=%7B%22key%22%3A%5B%22%E7%96%AB%E6%83%85%22%2C%22%E6%95%B0%E6%8D%AE%22%2C%22%E9%9D%9E%E6%AD%A3%E5%BC%8F%E6%B2%9F%E9%80%9A%22%2C%22mapper%22%5D%7D; Hm_lpvt_55b574651fcae74b0a9f1cf9c8d7c93a=1581334123'
    }
    r = requests.get(url, headers=head)
    html = r.content.decode("utf-8")
    html1 = etree.HTML(html)
    content1 = html1.xpath('//div[@class="lemma-summary"]')
    if len(content1) == 0:
        # No summary block on the page: fall back to the text of the
        # "custom_dot para-list list-paddingleft-1" list.
        content1 = html1.xpath('string(//ul[@class="custom_dot para-list list-paddingleft-1"])')
        print(content1)
        if len(content1) == 0:
            print('No explanation found')
            content1 = 'No explanation found'
        return content1
    else:
        # Flatten the summary block to plain text without spaces or newlines.
        content2 = content1[0].xpath('string(.)').replace(' ', '').replace('\n', '')
        print(content2)
        return content2

def getExpalin(filename):
    """Append an explanation to every hot word in final/<filename>."""
    lines = []
    with open("final/" + filename, encoding='utf-8') as f:
        for line in f:
            explain = climingExplain(line)
            line = (line + " " + explain).replace("\n", "")
            print(explain)
            print("line:" + line)
            lines.append(line)
    # Rewrite the file with one "word explanation" pair per line.
    with open("final/" + filename, 'w+', encoding='utf-8') as f:
        for entry in lines:
            f.write(entry + "\n")

def wordsExplain():
    """Add explanations to every hot-word file under final/."""
    for root, dirs, files in os.walk("final"):
        print(files)  # all non-directory entries in the current folder
        print(len(files))
        for name in files:
            print(name)
            getExpalin(name)

if __name__ == '__main__':
    # Loop over the news categories by the numeric suffix of their URLs.
    # loopNewsType()
    # Add explanations to the hot words.
    wordsExplain()
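One detail worth isolating: the hot words are mostly Chinese, so the Baidu Baike URL contains non-ASCII characters. `requests` generally percent-encodes these on its own, but encoding explicitly makes the URL predictable and also copes with terms that contain `/` or spaces. The following is a minimal sketch under that assumption; `baike_url` is a hypothetical helper, not part of the script above.

```python
from urllib.parse import quote


def baike_url(term):
    """Return the Baidu Baike entry URL for a hot word (hypothetical helper)."""
    # Strip the trailing newline left over from reading the word file,
    # then percent-encode everything, including '/'.
    return "https://baike.baidu.com/item/" + quote(term.strip(), safe="")


print(baike_url("数据\n"))
```

A helper like this could replace the string concatenation at the top of `climingExplain`, which would also remove the need to strip the newline there.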

The output is as follows: