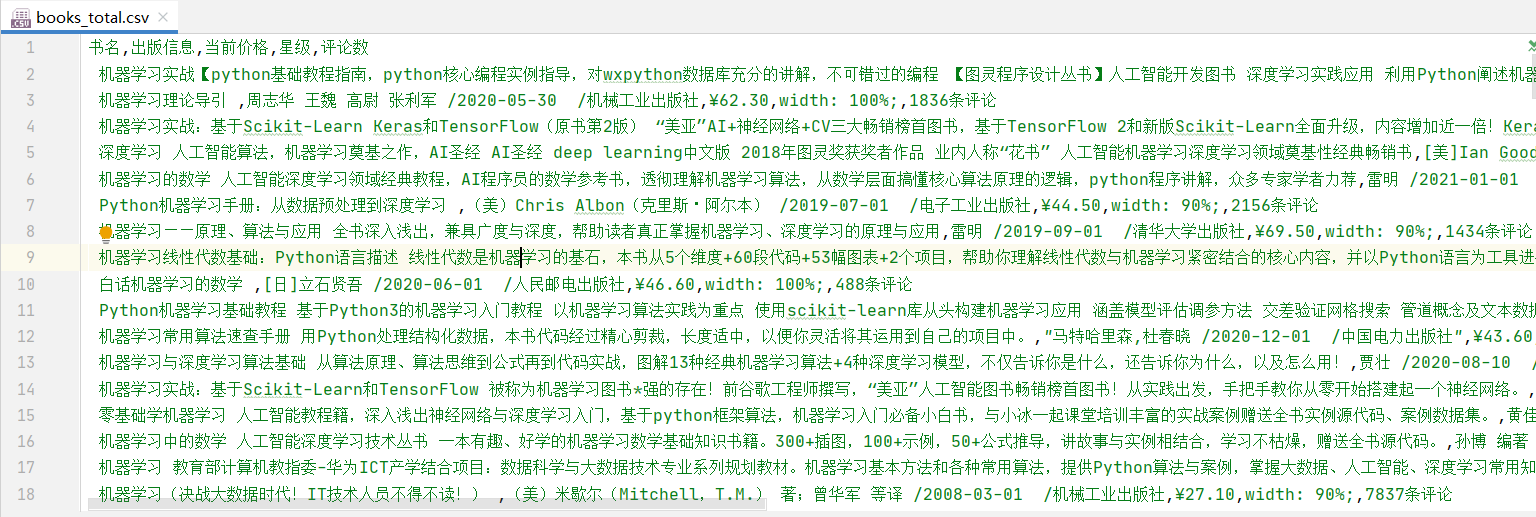

爬取当当网前十页,关键字为机器学习的数据,并保存为csv格式

import requests import pandas as pd import time from lxml import etree key_word = "机器学习" max_page = 10 books_total = [] def extract_books_from_content(content_page): books = [] page = etree.HTML(content_page) book_name = page.xpath("//li/p/a[@name='itemlist-title']/@title") pub_info = page.xpath("//li/p[@class='search_book_author']") pub_info = [book_pub.xpath('string(.)') for book_pub in pub_info] price_now = page.xpath("//li/p/span[@class='search_now_price']/text()") stars = page.xpath("//li/p/span[@class='search_star_black']/span/@style") comment_num = page.xpath("//li/p/a[@class='search_comment_num']/text()") for book in zip(book_name,pub_info,price_now,stars,comment_num): books.append(list(book)) return books for page in range(1,max_page+1): url = 'http://search.dangdang.com/?key=' + key_word + "&page_index=" + str(page) page_content = requests.get(url).text books = extract_books_from_content(page_content) books_total.extend(books) print("page " + str(page) + ", " + str(len(books)) + " books downloaded.") time.sleep(5) # 停顿5秒再下载下一页 books_total_df = pd.DataFrame(data=books_total,columns=["书名","出版信息","当前价格","星级","评论数"]) books_total_df.to_csv("./input/books_total.csv",index=None)

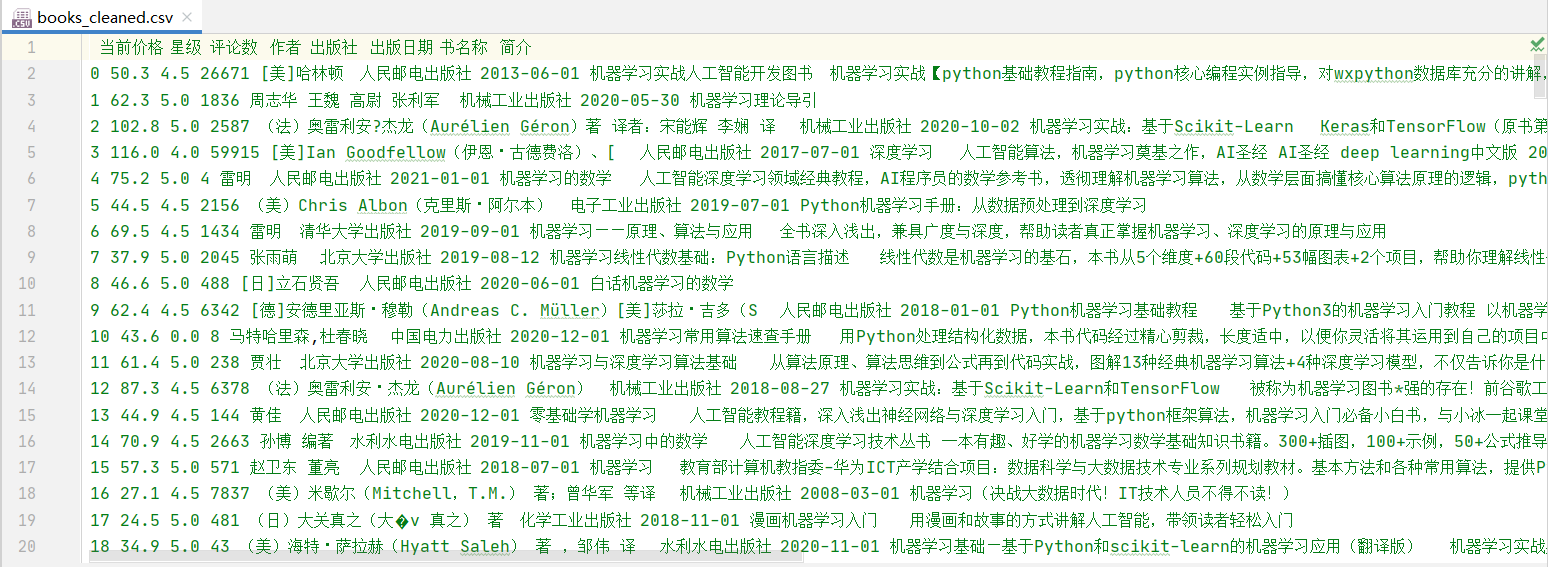

对数据进行清洗

import pandas as pd import re data = pd.read_csv('./input/books_total.csv') #获取数字 def get_numers(x): regex_num = "d{1,4}.{0,1}d{0,2}" return float(re.search(regex_num,x)[0]) # 提取出版社 def get_publisher(x): regex_pub = "/(S{1,10}出版社)" pub_match_result = re.search(regex_pub,x) if pub_match_result !=None: return pub_match_result[1].strip() else: return "" #提取出版日期 def get_pubdate(x): regex_date = "/(d{4}-d{2}-d{2})" pubdate_match_result = re.search(regex_date,x) if pubdate_match_result !=None: return pubdate_match_result[1].strip() else: return "" #提取书名 def get_book_name(x): x = x.strip() x = re.sub("【.*?】", "", x) x = re.sub("[.*?]", "", x) return x.split(" ")[0] data['当前价格'] = data['当前价格'].map(get_numers) data['评论数'] = data['评论数'].map(get_numers) data['评论数'] = data['评论数'].astype(int)#float转为int data['星级'] = data['星级'].map(get_numers)/20 data['作者'] = data['出版信息'].map(lambda x:x.split('/')[0]) data['出版社'] = data['出版信息'].map(get_publisher) data['出版日期'] = pd.to_datetime(data['出版信息'].map(get_pubdate)) del data["出版信息"] data['书名称'] = data['书名'].map(get_book_name) data['简介'] = data['书名'].map(lambda x:x.replace(get_book_name(x),"")) del data['书名'] data.to_csv("./input/books_cleaned.csv",index="None",sep=" ",encoding="utf8")