Code:

```python
# -*- coding: utf-8 -*-
"""
Created on Fri Jul 13 16:13:52 2018

@author: a
"""
import time

import requests
from selenium import webdriver
from selenium.webdriver.common.by import By
from selenium.webdriver.support import expected_conditions as EC
from selenium.webdriver.support.wait import WebDriverWait

chrome_options = webdriver.ChromeOptions()
chrome_options.add_argument('--headless')  # headless mode (no browser window)

# Wrong usage: executable_path expects the path to chromedriver, not to chrome.exe
# (newer Selenium versions take the driver path through a Service object instead)
# browser = webdriver.Chrome(options=chrome_options, executable_path=r"C:\Program Files (x86)\Google\Chrome\Application\chrome.exe")

# Headless mode
browser = webdriver.Chrome(options=chrome_options)
# browser = webdriver.Chrome()

print("xiaojie")
url = "https://passport.csdn.net/account/login"
cookies = []  # stays empty if the login below fails
try:
    browser.get(url)
    data = browser.page_source
    print(len(data))

    # Switch to the account/password login form
    target = browser.find_element(By.XPATH, '/html/body/div[3]/div/div/div[2]/div/h3/a')
    print("target:", target)
    target.click()

    # Wait up to 20 s, polling every 0.5 s, for the username field to appear
    locator = (By.ID, 'username')
    WebDriverWait(browser, 20, 0.5).until(EC.presence_of_element_located(locator))
    username = browser.find_element(By.ID, 'username')
    print("username:", username)
    time.sleep(3)
    username.clear()
    username.send_keys('183247166@qq.com')

    password = browser.find_element(By.ID, 'password')
    print("password:", password)
    password.clear()
    password.send_keys('xxx')

    submit = browser.find_element(By.XPATH, '//*[@id="fm1"]/input[8]')
    print("submit:", submit)
    submit.click()
    # time.sleep(10)  # no need to wait for the page to refresh; this step is unnecessary

    # Save the cookies
    cookies = browser.get_cookies()
except Exception as e:
    print("driver exception")
    print(e)
finally:
    browser.quit()  # end the WebDriver session (close() only closes the window)
    print("over")

# Copy the cookies obtained by the Selenium login into a requests session
s = requests.Session()
c = requests.cookies.RequestsCookieJar()
for item in cookies:
    c.set(item["name"], item["value"])
s.cookies.update(c)

headers = {
    "User-Agent": "Mozilla/5.0 (Windows NT 10.0; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/63.0.3239.26 Safari/537.36 Core/1.63.5193.400 QQBrowser/10.0.1066.400"
}
url2 = "https://download.csdn.net/my"  # personal space, only reachable after logging in
try:
    print("start crawling the page")
    response = s.get(url2, headers=headers)
    data = response.text
    print(len(data))
    print(data)
    # Open this file offline to check that the cookies worked
    with open("./cookie_check_open_offline.html", "w", encoding='utf-8') as fhandle:
        fhandle.write(data)
    print("cookie_check_open_offline.html generated successfully")
except Exception as e:
    print(e)
```

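Here, the browser is closed as soon as the cookies have been extracted. requests.session is then used to visit the personal-center page with those cookies, and the personal-center page is reached in the logged-in state!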
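The loop above copies only each cookie's name and value, so every cookie lands in the jar without a domain. A minimal sketch of a variant that keeps the domain, path and expiry, so each cookie is only attached to the hosts it belongs to. It assumes the dicts returned by `get_cookies()` carry `domain`, `path`, `secure` and optionally `expiry` keys; `selenium_cookies_to_session` is a hypothetical helper name and the sample cookies are made up.

```python
import requests


def selenium_cookies_to_session(selenium_cookies):
    """Build a requests.Session whose cookie jar mirrors Selenium-style cookie dicts.

    Hypothetical helper: each dict is assumed to look like an item of
    WebDriver.get_cookies(): name, value, and optionally domain, path,
    secure, expiry.
    """
    session = requests.Session()
    for item in selenium_cookies:
        session.cookies.set(
            item["name"],
            item["value"],
            domain=item.get("domain", ""),
            path=item.get("path", "/"),
            secure=item.get("secure", False),
            expires=item.get("expiry"),  # None means a session cookie
        )
    return session


# Made-up sample data in the shape returned by browser.get_cookies()
sample = [
    {"name": "UserName", "value": "demo", "domain": ".csdn.net", "path": "/", "secure": False},
    {"name": "passport_only", "value": "x", "domain": "passport.csdn.net", "path": "/", "secure": False},
]
s = selenium_cookies_to_session(sample)

# Prepare (but do not send) a request to see which cookies would be attached
prepared = s.prepare_request(requests.Request("GET", "https://download.csdn.net/my"))
# Expected: UserName=demo (the passport.csdn.net cookie does not match this host)
print(prepared.headers.get("Cookie"))
```

`s.get(url2, headers=headers)` would then send only the matching cookies, which makes it easier to see which ones the personal-center page actually depends on.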

I also ran a different experiment, with the following code:

```python
# -*- coding: utf-8 -*-
"""
Created on Fri Jul 13 16:13:52 2018

@author: a
"""
import time
import urllib.request
from urllib.error import URLError, HTTPError

import requests
from selenium import webdriver
from selenium.webdriver.common.by import By
from selenium.webdriver.support import expected_conditions as EC
from selenium.webdriver.support.wait import WebDriverWait

chrome_options = webdriver.ChromeOptions()
chrome_options.add_argument('--headless')  # headless mode (no browser window)

# Wrong usage: executable_path expects the path to chromedriver, not to chrome.exe
# (newer Selenium versions take the driver path through a Service object instead)
# browser = webdriver.Chrome(options=chrome_options, executable_path=r"C:\Program Files (x86)\Google\Chrome\Application\chrome.exe")

# Headless mode
# browser = webdriver.Chrome(options=chrome_options)
browser = webdriver.Chrome()

print("xiaojie")
url = "https://passport.csdn.net/account/login"
try:
    browser.get(url)
    data = browser.page_source
    print(len(data))
    curpage_url = browser.current_url
    print(curpage_url)

    # Switch to the account/password login form
    target = browser.find_element(By.XPATH, '/html/body/div[3]/div/div/div[2]/div/h3/a')
    print("target:", target)
    target.click()

    # Wait up to 20 s, polling every 0.5 s, for the username field to appear
    locator = (By.ID, 'username')
    WebDriverWait(browser, 20, 0.5).until(EC.presence_of_element_located(locator))
    username = browser.find_element(By.ID, 'username')
    print("username:", username)
    time.sleep(3)
    username.clear()
    username.send_keys('183247166@qq.com')

    password = browser.find_element(By.ID, 'password')
    print("password:", password)
    password.clear()
    password.send_keys('xxx')

    submit = browser.find_element(By.XPATH, '//*[@id="fm1"]/input[8]')
    print("submit:", submit)
    submit.click()
    curpage_url = browser.current_url
    print(curpage_url)
    # time.sleep(10)  # no need to wait for the page to refresh; this step is unnecessary

    # Save the cookies as a name -> value dict and turn it into a CookieJar
    cook_map = {item["name"]: item["value"] for item in browser.get_cookies()}
    print(cook_map)
    cookies = requests.utils.cookiejar_from_dict(cook_map, cookiejar=None, overwrite=True)

    # Crawl the page with urllib (in my test, this did not keep the login state)
    headers = ("User-Agent", "Mozilla/5.0 (Windows NT 10.0; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/63.0.3239.26 Safari/537.36 Core/1.63.5193.400 QQBrowser/10.0.1066.400")
    opener = urllib.request.build_opener(urllib.request.HTTPCookieProcessor(cookies))
    opener.addheaders = [headers]
    url2 = "https://download.csdn.net/my"  # personal space, only reachable after logging in
    try:
        urllib.request.install_opener(opener)
        response = urllib.request.urlopen(url2)
        print("start crawling the page")
        # Save the body of the urllib response (`data` above still holds the login page source)
        data = response.read()
        with open("./cookie_check_open_offline.html", "wb") as fhandle:
            fhandle.write(data)
        print("cookie_check_open_offline.html generated successfully")
    except HTTPError as e:
        print("HTTP error")
        print(e.code)
        print(e.reason)
    except URLError as e:
        print("URL error")
        print(e.reason)
except Exception as e:
    print("driver exception")
    print(e)
finally:
    browser.quit()  # end the WebDriver session (close() only closes the window)
    print("over")
```

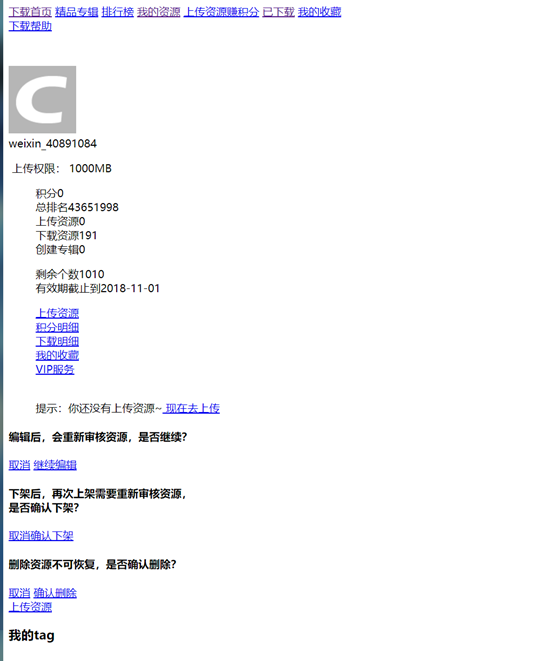

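The code I wrote above has a problem: the saved page (shown above) indicates that the login state was not kept. One thing worth checking: the file should contain the body of `response`, whereas the variable `data` assigned earlier holds `browser.page_source`, i.e. the login page itself.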

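If I keep using urllib.request.urlopen and convert the cookies from the Selenium login into a CookieJar, the page cannot be accessed in the logged-in state. The book 精通Python网络爬虫 (Mastering Python Web Crawlers) instead creates the CookieJar object and a global opener first, and only then logs in; the cookies are stored in the global opener, so the login state is kept and urllib can continue to be used. Here, however, I log in with Selenium first, and only afterwards build a CookieJar from the cookies obtained and then use urllib. Why doesn't that work?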
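One plausible difference between the two orders: when the jar exists before the login, `HTTPCookieProcessor` fills it from the server's `Set-Cookie` responses, so each cookie keeps its domain, path and expiry, while a jar rebuilt afterwards only holds what we copy into it. A minimal standard-library sketch that carries those attributes over from Selenium-style cookie dicts (assumed keys: `name`, `value`, `domain`, `path`, `secure`, optional `expiry`). `jar_from_selenium` is a hypothetical helper, the sample values are made up, and it has not been verified that this alone makes the urllib request above succeed.

```python
import http.cookiejar
import urllib.request


def jar_from_selenium(selenium_cookies):
    """Return a CookieJar holding Selenium-style cookie dicts with their domain and path."""
    jar = http.cookiejar.CookieJar()
    for item in selenium_cookies:
        domain = item.get("domain", "")
        is_domain_cookie = domain.startswith(".")  # leading dot: valid for subdomains too
        cookie = http.cookiejar.Cookie(
            version=0,
            name=item["name"],
            value=item["value"],
            port=None,
            port_specified=False,
            domain=domain,
            domain_specified=is_domain_cookie,
            domain_initial_dot=is_domain_cookie,
            path=item.get("path", "/"),
            path_specified=True,
            secure=item.get("secure", False),
            expires=item.get("expiry"),  # None means a session cookie
            discard=item.get("expiry") is None,
            comment=None,
            comment_url=None,
            rest={},
        )
        jar.set_cookie(cookie)
    return jar


sample = [
    {"name": "UserName", "value": "demo", "domain": ".csdn.net", "path": "/", "secure": False},
    {"name": "passport_only", "value": "x", "domain": "passport.csdn.net", "path": "/", "secure": False},
]
jar = jar_from_selenium(sample)
# opener.open("https://download.csdn.net/my") would send the matching cookies
opener = urllib.request.build_opener(urllib.request.HTTPCookieProcessor(jar))

# Offline check: ask the jar which cookies it would attach to this request
req = urllib.request.Request("https://download.csdn.net/my")
jar.add_cookie_header(req)
print(req.get_header("Cookie"))  # UserName=demo
```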

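See the following blog post:

https://blog.csdn.net/warrior_zhang/article/details/50198699

python: using Selenium to simulate logging in to a site that requires account verification and get its cookies

It includes these two steps: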

6. Get the session cookie of the site currently being visited through the driver object's method:

   ```python
   #get the session cookie
   cookie = [item["name"] + "=" + item["value"] for item in sel.get_cookies()]
   #print cookie
   cookiestr = ';'.join(item for item in cookie)
   print cookiestr
   ```

7. Once you have the cookie, you can use urllib2 to access the corresponding site and crawl its pages:

   ```python
   import urllib2
   print '%%%using the urllib2 !!'
   homeurl = sel.current_url
   print 'homeurl: %s' % homeurl
   headers = {'cookie':cookiestr}
   req = urllib2.Request(homeurl, headers = headers)
   try:
       response = urllib2.urlopen(req)
       text = response.read()
       fd = open('homepage', 'w')
       fd.write(text)
       fd.close()
       print '###get home page html success!!'
   except:
       print '### get home page html error!!'
   ```
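For reference, the same header-based idea in Python 3 with `urllib.request` might look like the sketch below. It assumes cookie dicts shaped like the output of `get_cookies()`, and joins them as `name=value` pairs separated by `; `, which is the form a `Cookie` request header takes (RFC 6265). `cookie_header` and the sample values are hypothetical.

```python
import urllib.request


def cookie_header(selenium_cookies):
    """Join Selenium-style cookie dicts into a Cookie header value such as 'a=1; b=2'."""
    return "; ".join(item["name"] + "=" + item["value"] for item in selenium_cookies)


sample = [{"name": "UserName", "value": "demo"}, {"name": "UserToken", "value": "abc"}]
cookiestr = cookie_header(sample)

headers = {
    "User-Agent": "Mozilla/5.0",
    "Cookie": cookiestr,
}
# Building the Request does not contact the server; urlopen(req) would send it
req = urllib.request.Request("https://download.csdn.net/my", headers=headers)
print(req.get_header("Cookie"))  # UserName=demo; UserToken=abc
```

Unlike a CookieJar, a hand-built header sends every cookie regardless of its domain, so the list may need filtering for the target host.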

It puts the cookie into the request headers and then uses urllib. Let's test that.

Code:

```python
# -*- coding: utf-8 -*-
"""
Created on Fri Jul 13 16:13:52 2018

@author: a
"""
import time
import urllib.request
from urllib.error import URLError, HTTPError

from selenium import webdriver
from selenium.webdriver.common.by import By
from selenium.webdriver.support import expected_conditions as EC
from selenium.webdriver.support.wait import WebDriverWait

chrome_options = webdriver.ChromeOptions()
chrome_options.add_argument('--headless')  # headless mode (no browser window)

# Wrong usage: executable_path expects the path to chromedriver, not to chrome.exe
# (newer Selenium versions take the driver path through a Service object instead)
# browser = webdriver.Chrome(options=chrome_options, executable_path=r"C:\Program Files (x86)\Google\Chrome\Application\chrome.exe")

# Headless mode
browser = webdriver.Chrome(options=chrome_options)
# browser = webdriver.Chrome()

print("xiaojie")
url = "https://passport.csdn.net/account/login"
try:
    browser.get(url)
    data = browser.page_source
    print(len(data))
    curpage_url = browser.current_url
    print(curpage_url)

    # Switch to the account/password login form
    target = browser.find_element(By.XPATH, '/html/body/div[3]/div/div/div[2]/div/h3/a')
    print("target:", target)
    target.click()

    # Wait up to 20 s, polling every 0.5 s, for the username field to appear
    locator = (By.ID, 'username')
    WebDriverWait(browser, 20, 0.5).until(EC.presence_of_element_located(locator))
    username = browser.find_element(By.ID, 'username')
    print("username:", username)
    time.sleep(3)
    username.clear()
    username.send_keys('183247166@qq.com')

    password = browser.find_element(By.ID, 'password')
    print("password:", password)
    password.clear()
    password.send_keys('xxx')

    submit = browser.find_element(By.XPATH, '//*[@id="fm1"]/input[8]')
    print("submit:", submit)
    submit.click()
    curpage_url = browser.current_url
    print(curpage_url)
    # time.sleep(10)  # no need to wait for the page to refresh; this step is unnecessary

    # Save the cookies as a Cookie header value: name=value pairs separated by "; "
    cookie = [item["name"] + "=" + item["value"] for item in browser.get_cookies()]
    print(cookie)
    cookiestr = '; '.join(cookie)

    # Crawl the page with urllib, passing the cookies in the request headers
    # (in my test, this did not return the logged-in page)
    headers = {
        "User-Agent": "Mozilla/5.0 (Windows NT 10.0; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/63.0.3239.26 Safari/537.36 Core/1.63.5193.400 QQBrowser/10.0.1066.400",
        "Cookie": cookiestr,
    }
    url2 = "https://download.csdn.net/my"  # personal space, only reachable after logging in
    try:
        req = urllib.request.Request(url2, headers=headers)
        response = urllib.request.urlopen(req)
        data = response.read()
        print("start crawling the page")
        # Open this file offline to check whether the cookies worked
        with open("./cookie_check_open_offline.html", "wb") as fhandle:
            fhandle.write(data)
        print("cookie_check_open_offline.html generated successfully")
    except HTTPError as e:
        print("HTTP error")
        print(e.code)
        print(e.reason)
    except URLError as e:
        print("URL error")
        print(e.reason)
except Exception as e:
    print("driver exception")
    print(e)
finally:
    browser.quit()  # end the WebDriver session (close() only closes the window)
    print("over")
```

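Still no luck; the exact cause remains to be investigated. One thing worth checking first: a `Cookie` request header is a list of `name=value` pairs separated by `; ` (the referenced blog also builds its pairs with `=`), so pairs written as `name:value` would not be parsed as cookies.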
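A quick offline way to sanity-check a hand-built cookie string is to parse it with the standard library's `http.cookies.SimpleCookie`. In this sketch (made-up cookie names; `parsed_names` is a hypothetical helper), a `name=value; name=value` string parses into separate cookies, while `name:value` pairs are rejected and leave the object empty. This only checks the string's syntax, not whether CSDN accepts the cookies.

```python
from http.cookies import SimpleCookie


def parsed_names(cookie_string):
    """Return the cookie names SimpleCookie can read from a Cookie header string."""
    jar = SimpleCookie()
    jar.load(cookie_string)
    return sorted(jar.keys())


print(parsed_names("UserName=demo; UserToken=abc"))  # ['UserName', 'UserToken']
print(parsed_names("UserName:demo;UserToken:abc"))   # []
```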