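This script uses ssh to check whether the relevant service instances on each node of a big data cluster are running properly. It covers: zk, hadoop-ha, hdfs, yarn, hbase, hive-metastore, hiveserver2, and kafka.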

Note: change the node names and the Kafka version to match your own cluster.

```bash
#!/bin/bash

# Hostname prefix
HN_PREFIX="hadoop.test"
KFK_NAME="kafka_2.12-1.1.0"

# Nodes for each service
# zk nodes
ZK_NODES="$HN_PREFIX.n1 $HN_PREFIX.n2 $HN_PREFIX.n3"
# hadoop HA DFSZKFailoverController nodes
DFC_NODES="$HN_PREFIX.m1 $HN_PREFIX.m2"
# hadoop HA journalnode nodes
JOURNAL_NODES="$HN_PREFIX.n1 $HN_PREFIX.n2 $HN_PREFIX.n3 $HN_PREFIX.n4 $HN_PREFIX.n5"
# DFS namenode nodes
NAME_NODES="$HN_PREFIX.m1 $HN_PREFIX.m2"
# DFS datanode nodes
DATA_NODES="$HN_PREFIX.n1 $HN_PREFIX.n2 $HN_PREFIX.n3 $HN_PREFIX.n4 $HN_PREFIX.n5"
# YARN resourcemanager nodes
RM_NODES="$HN_PREFIX.m1 $HN_PREFIX.m2"
# YARN nodemanager nodes
NM_NODES="$HN_PREFIX.n1 $HN_PREFIX.n2 $HN_PREFIX.n3 $HN_PREFIX.n4 $HN_PREFIX.n5"
# hadoop jobhistoryserver nodes
JOBHIS_NODES="$HN_PREFIX.m1"
# hbase hmaster nodes
HMASTER_NODES="$HN_PREFIX.m1 $HN_PREFIX.m2"
# hbase hregionserver nodes
HREGION_NODES="$HN_PREFIX.n1 $HN_PREFIX.n2 $HN_PREFIX.n3 $HN_PREFIX.n4 $HN_PREFIX.n5"
# hive metastore nodes
META_NODES="$HN_PREFIX.n1"
# hive hiveserver2 nodes
HIVE2_NODES="$HN_PREFIX.n1"
# kafka nodes
KAFKA_NODES="$HN_PREFIX.n1 $HN_PREFIX.n2 $HN_PREFIX.n3"

# Check zookeeper
echo "-------------------------------------------------------"
echo "zk - QuorumPeerMain :"
for ip in $ZK_NODES
do
    ssh hadoop@$ip "ps -fe|grep QuorumPeerMain |grep -v grep >/dev/null 2>&1"
    if [ $? -ne 0 ]
    then
        echo -e "$ip: [\033[31mnot running\033[0m]"
    else
        echo -e "$ip: [\033[32mOK\033[0m]"
    fi
done

# Check hadoop HA DFSZKFailoverController
echo "-------------------------------------------------------"
echo "hadoop HA - DFSZKFailoverController :"
for ip in $DFC_NODES
do
    ssh hadoop@$ip "ps -fe|grep DFSZKFailoverController |grep -v grep >/dev/null 2>&1"
    if [ $? -ne 0 ]
    then
        echo -e "$ip: [\033[31mnot running\033[0m]"
    else
        echo -e "$ip: [\033[32mOK\033[0m]"
    fi
done

# Check hadoop HA JournalNode
echo "-------------------------------------------------------"
echo "hadoop HA - JournalNode :"
for ip in $JOURNAL_NODES
do
    ssh hadoop@$ip "ps -fe|grep JournalNode |grep -v grep >/dev/null 2>&1"
    if [ $? -ne 0 ]
    then
        echo -e "$ip: [\033[31mnot running\033[0m]"
    else
        echo -e "$ip: [\033[32mOK\033[0m]"
    fi
done

# Check hadoop HDFS NameNode
echo "-------------------------------------------------------"
echo "hadoop HDFS - NameNode :"
for ip in $NAME_NODES
do
    ssh hadoop@$ip "ps -fe|grep NameNode |grep -v grep >/dev/null 2>&1"
    if [ $? -ne 0 ]
    then
        echo -e "$ip: [\033[31mnot running\033[0m]"
    else
        echo -e "$ip: [\033[32mOK\033[0m]"
    fi
done

# Check hadoop HDFS DataNode
echo "-------------------------------------------------------"
echo "hadoop HDFS - DataNode :"
for ip in $DATA_NODES
do
    ssh hadoop@$ip "ps -fe|grep DataNode |grep -v grep >/dev/null 2>&1"
    if [ $? -ne 0 ]
    then
        echo -e "$ip: [\033[31mnot running\033[0m]"
    else
        echo -e "$ip: [\033[32mOK\033[0m]"
    fi
done

# Check hadoop YARN ResourceManager
echo "-------------------------------------------------------"
echo "hadoop YARN - ResourceManager :"
for ip in $RM_NODES
do
    ssh hadoop@$ip "ps -fe|grep ResourceManager |grep -v grep >/dev/null 2>&1"
    if [ $? -ne 0 ]
    then
        echo -e "$ip: [\033[31mnot running\033[0m]"
    else
        echo -e "$ip: [\033[32mOK\033[0m]"
    fi
done

# Check hadoop YARN NodeManager
echo "-------------------------------------------------------"
echo "hadoop YARN - NodeManager :"
for ip in $NM_NODES
do
    ssh hadoop@$ip "ps -fe|grep NodeManager |grep -v grep >/dev/null 2>&1"
    if [ $? -ne 0 ]
    then
        echo -e "$ip: [\033[31mnot running\033[0m]"
    else
        echo -e "$ip: [\033[32mOK\033[0m]"
    fi
done

# Check hadoop JobHistoryServer
echo "-------------------------------------------------------"
echo "hadoop - JobHistoryServer :"
for ip in $JOBHIS_NODES
do
    ssh hadoop@$ip "ps -fe|grep JobHistoryServer |grep -v grep >/dev/null 2>&1"
    if [ $? -ne 0 ]
    then
        echo -e "$ip: [\033[31mnot running\033[0m]"
    else
        echo -e "$ip: [\033[32mOK\033[0m]"
    fi
done

# Check hbase HMaster
echo "-------------------------------------------------------"
echo "hbase - HMaster :"
for ip in $HMASTER_NODES
do
    ssh hadoop@$ip "ps -fe|grep HMaster |grep -v grep >/dev/null 2>&1"
    if [ $? -ne 0 ]
    then
        echo -e "$ip: [\033[31mnot running\033[0m]"
    else
        echo -e "$ip: [\033[32mOK\033[0m]"
    fi
done

# Check hbase HRegionServer
echo "-------------------------------------------------------"
echo "hbase - HRegionServer :"
for ip in $HREGION_NODES
do
    ssh hadoop@$ip "ps -fe|grep HRegionServer |grep -v grep >/dev/null 2>&1"
    if [ $? -ne 0 ]
    then
        echo -e "$ip: [\033[31mnot running\033[0m]"
    else
        echo -e "$ip: [\033[32mOK\033[0m]"
    fi
done

# Check hive metastore
echo "-------------------------------------------------------"
echo "hive - HiveMetaStore :"
for ip in $META_NODES
do
    ssh hadoop@$ip "ps -fe|grep HiveMetaStore |grep -v grep >/dev/null 2>&1"
    if [ $? -ne 0 ]
    then
        echo -e "$ip: [\033[31mnot running\033[0m]"
    else
        echo -e "$ip: [\033[32mOK\033[0m]"
    fi
done

# Check hive hiveserver2
echo "-------------------------------------------------------"
echo "hive - HiveServer2 :"
for ip in $HIVE2_NODES
do
    ssh hadoop@$ip "ps -fe|grep HiveServer2 |grep -v grep >/dev/null 2>&1"
    if [ $? -ne 0 ]
    then
        echo -e "$ip: [\033[31mnot running\033[0m]"
    else
        echo -e "$ip: [\033[32mOK\033[0m]"
    fi
done

# Check Kafka
echo "-------------------------------------------------------"
echo "Kafka - $KFK_NAME :"
for ip in $KAFKA_NODES
do
    ssh hadoop@$ip "ps -fe|grep $KFK_NAME |grep -v grep >/dev/null 2>&1"
    if [ $? -ne 0 ]
    then
        echo -e "$ip: [\033[31mnot running\033[0m]"
    else
        echo -e "$ip: [\033[32mOK\033[0m]"
    fi
done
```
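Every check in the script follows the same pattern, so the loops could be folded into one helper. The following is only a sketch of that idea, not part of the original script: the function names `remote_run`, `status_label`, and `check_service` are hypothetical, and so is the extra "SSH FAILED" state. That state relies on the fact that ssh exits with status 255 when the connection itself fails, while the remote `grep` pipeline exits with 1 when no matching process is found. The original script reports both cases as not running.

```bash
#!/bin/bash

# Run a command on a host as the hadoop user (same login as the original script).
# Kept as a separate function so it can be replaced, e.g. for a dry run.
remote_run() {
    ssh "hadoop@$1" "$2"
}

# Turn an exit status into a colored label.
# 0 = process found, 1 = no match from grep, 255 = ssh itself failed.
status_label() {
    case "$1" in
        0)   printf '\033[32mOK\033[0m' ;;
        1)   printf '\033[31mNOT RUNNING\033[0m' ;;
        255) printf '\033[33mSSH FAILED\033[0m' ;;
        *)   printf '\033[33mERROR (%s)\033[0m' "$1" ;;
    esac
}

# check_service TITLE PATTERN HOST...
# Prints a header, then one status line per host.
check_service() {
    local title=$1 pattern=$2
    shift 2
    local host rc
    echo "-------------------------------------------------------"
    echo "$title :"
    for host in "$@"
    do
        remote_run "$host" "ps -fe | grep '$pattern' | grep -v grep >/dev/null 2>&1"
        rc=$?
        printf '%s: [%s]\n' "$host" "$(status_label "$rc")"
    done
}
```

With these definitions and the node variables from the script above, the zookeeper check would become `check_service "zk - QuorumPeerMain" QuorumPeerMain $ZK_NODES`, and the Kafka check `check_service "Kafka - $KFK_NAME" "$KFK_NAME" $KAFKA_NODES`. The node variables are left unquoted on purpose so that each hostname becomes its own argument.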

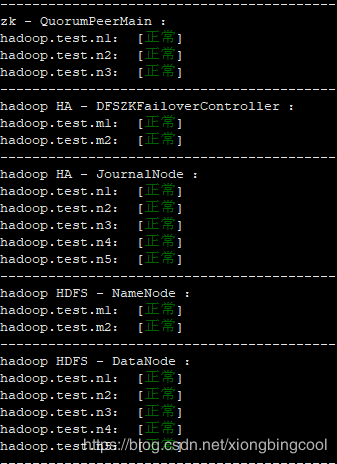

Output when run: