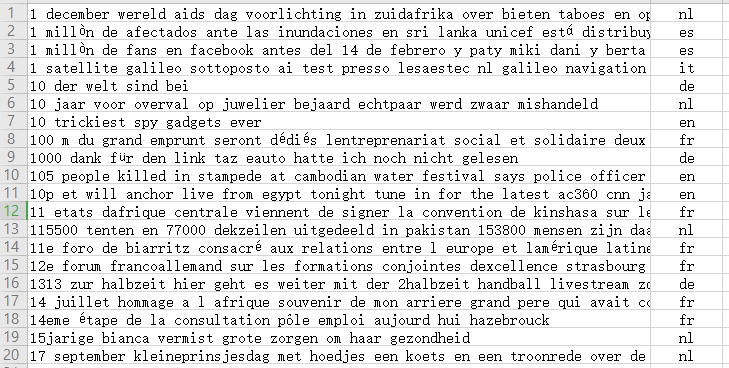

首先看一下数据集:

基本上每行就是一句话,所属类别,这里包含English, French, German, Spanish, Italian 和 Dutch 6种语言)

先导入相应的包:

import os from sklearn.model_selection import train_test_split from sklearn.feature_extraction.text import CountVectorizer from sklearn.naive_bayes import MultinomialNB import re

首先读取数据集:

def get_train_test_data(): #获取当前文件的绝对目录 path_dir=os.path.dirname(os.path.abspath(__file__)) #获取数据集 data_path = path_dir + "\Database\data.csv" #存放数据 data = [] #存放标签 label= [] with open(data_path,'r') as fp: lines=fp.readlines() for line in lines: line=line.split(",") data.append(line[0]) label.append(line[1].strip()) #切分数据集 x_train,x_test,y_train,y_test = train_test_split(data,label,random_state=1) return x_train,x_test,y_train,y_test

然后是过滤掉一些噪声:

w是匹配包括下划线的任意字符,S是匹配任何非空字符,+号表示匹配一个或多个字符

def remove_noise(document): noise_pattern = re.compile("|".join(["httpS+", "@w+", "#w+"])) clean_text = re.sub(noise_pattern, "", document) return clean_text.strip()

下一步,再降噪数据上抽取出有用的特征,抽取1-gram和2-gram的统计特征

vec = CountVectorizer( lowercase=True, # lowercase the text analyzer='char_wb', # tokenise by character ngrams ngram_range=(1,2), # use ngrams of size 1 and 2 max_features=1000, # keep the most common 1000 ngrams preprocessor=remove_noise ) vec.fit(x_train) def get_features(x): vec.transform(x)

最后就是进行分类:

classifier = MultinomialNB()

classifier.fit(vec.transform(x_train), y_train)

classifier.score(vec.transform(x_test), y_test)

将以上代码整合成一个类:

import os from sklearn.model_selection import train_test_split from sklearn.feature_extraction.text import CountVectorizer from sklearn.naive_bayes import MultinomialNB import re def get_train_test_data(): #获取当前文件的绝对目录 path_dir=os.path.dirname(os.path.abspath(__file__)) #获取数据集 data_path = path_dir + "\Database\data.csv" #存放数据 data = [] #存放标签 label= [] with open(data_path,'r') as fp: lines=fp.readlines() for line in lines: line=line.split(",") data.append(line[0]) label.append(line[1].strip()) #切分数据集 x_train,x_test,y_train,y_test = train_test_split(data,label,random_state=1) return x_train,x_test,y_train,y_test class LanguageDetector(): def __init__(self,classifier=MultinomialNB()): self.classifier=classifier self.vectorizer=CountVectorizer( lowercase=True, analyzer='char_wb', ngram_range=(1, 2), max_features=1000, preprocessor=self._remove_noise, ) def _remove_noise(self, document): noise_pattern = re.compile("|".join(["httpS+", "@w+", "#w+"])) clean_text = re.sub(noise_pattern, "", document) return clean_text def features(self, X): return self.vectorizer.transform(X) def fit(self, X, y): self.vectorizer.fit(X) self.classifier.fit(self.features(X), y) def predict(self, x): return self.classifier.predict(self.features([x])) def score(self, X, y): return self.classifier.score(self.features(X), y) language_detector = LanguageDetector() x_train,x_test,y_train,y_test = get_train_test_data() language_detector.fit(x_train, y_train) print(language_detector.predict('This is an English sentence')) print(language_detector.score(x_test, y_test))

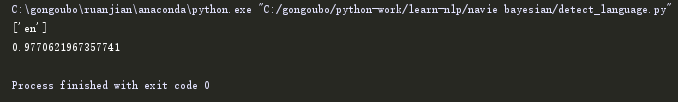

最终结果:

相关数据及代码:链接: https://pan.baidu.com/s/1tjHcnZuEdGpDb9vtCHYRWA 提取码: aqfs