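What this does: two phones on the same LAN can watch each other's camera feed. If both are reachable over the public network, they can of course watch each other remotely too. It is much like a video chat, just without sound.

I found the source code online (I have forgotten the exact address; if this infringes on anything, please let me know) and have tested that it works. At first I wanted to drop it straight into the project I am working on now, but after adding it to my current app and debugging for a while I realized I had been too optimistic. Fate never lets things come that easily...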


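I only have one phone on hand, so I sent the stream to myself. I had meant to walk through everything in detail, as I do in my other posts, but after a look at the clock I decided to skip that. Yesterday I added the program to my own app, then modified and tested it until 1 a.m.; at work this afternoon I had a headache and was so sleepy that I napped for an hour. Young people should not keep staying up late. It is bad for your health.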

The upper image is my own camera preview; the lower one is the image received over TCP.

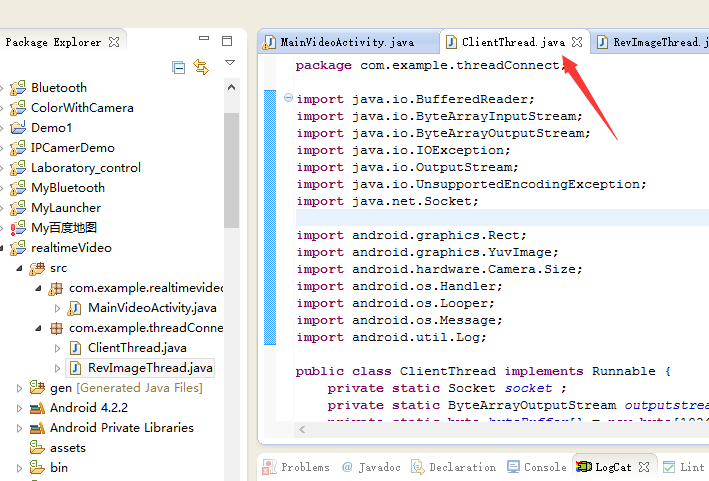

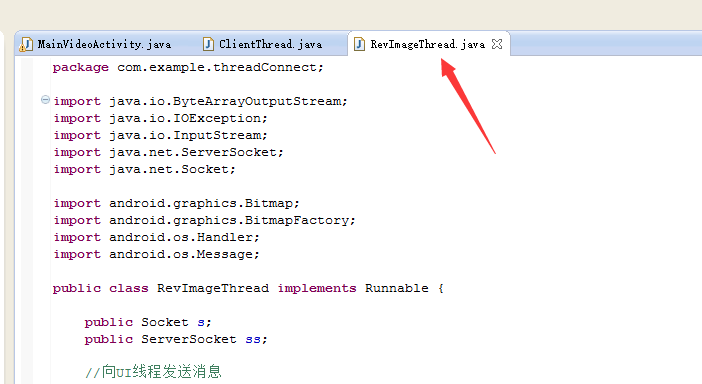

The source code follows.

    package com.example.realtimevideo;

    import java.io.ByteArrayOutputStream;

    import com.example.threadConnect.ClientThread;
    import com.example.threadConnect.RevImageThread;

    import android.graphics.Bitmap;
    import android.graphics.ImageFormat;
    import android.graphics.YuvImage;
    import android.hardware.Camera;
    import android.hardware.Camera.PreviewCallback;
    import android.hardware.Camera.Size;
    import android.os.Bundle;
    import android.os.Handler;
    import android.os.Message;
    import android.app.Activity;
    import android.util.DisplayMetrics;
    import android.util.Log;
    import android.view.Menu;
    import android.view.SurfaceHolder;
    import android.view.Window;
    import android.view.WindowManager;
    import android.view.SurfaceHolder.Callback;
    import android.view.SurfaceView;
    import android.widget.ImageView;
    import android.widget.RelativeLayout;

    public class MainVideoActivity extends Activity {
        RevImageThread revImageThread;
        public static ImageView image;
        private static Bitmap bitmap;
        private static final int COMPLETED = 0x222;
        MyHandler handler;
        ClientThread clientThread;
        ByteArrayOutputStream outstream;
        SurfaceView surfaceView;
        SurfaceHolder sfh;
        Camera camera;
        boolean isPreview = false; // whether the preview is running
        static int screenWidth = 300;
        static int screenHeight = 300;

        @Override
        protected void onCreate(Bundle savedInstanceState) {
            super.onCreate(savedInstanceState);
            // Go full screen
            requestWindowFeature(Window.FEATURE_NO_TITLE);
            getWindow().setFlags(WindowManager.LayoutParams.FLAG_FULLSCREEN,
                    WindowManager.LayoutParams.FLAG_FULLSCREEN);
            setContentView(R.layout.activity_main_video);

            surfaceView = (SurfaceView) findViewById(R.id.surfaceView);
            image = (ImageView) findViewById(R.id.imageView1);

            // Start the sender and receiver threads
            handler = new MyHandler();
            clientThread = new ClientThread();
            new Thread(clientThread).start();
            revImageThread = new RevImageThread(handler);
            new Thread(revImageThread).start();

            DisplayMetrics dm = new DisplayMetrics();
            getWindowManager().getDefaultDisplay().getMetrics(dm);
            screenWidth = dm.widthPixels;   // screen width in pixels
            screenHeight = dm.heightPixels; // screen height in pixels
            image.setLayoutParams(new RelativeLayout.LayoutParams(screenWidth, screenHeight));
            //image.setMaxHeight(screenHeight);
            //image.setMaxWidth(screenWidth);
            image.setMaxHeight(screenHeight / 2);

            sfh = surfaceView.getHolder();
            sfh.setFixedSize(screenWidth, screenHeight / 2);
            sfh.addCallback(new Callback() {
                @Override
                public void surfaceChanged(SurfaceHolder arg0, int arg1, int arg2, int arg3) {
                }

                @Override
                public void surfaceCreated(SurfaceHolder arg0) {
                    initCamera();
                }

                @Override
                public void surfaceDestroyed(SurfaceHolder arg0) {
                    if (camera != null) {
                        if (isPreview)
                            camera.stopPreview();
                        camera.release();
                        camera = null;
                    }
                }
            });
        }

        @Override
        public boolean onCreateOptionsMenu(Menu menu) {
            // Inflate the menu; this adds items to the action bar if it is present.
            getMenuInflater().inflate(R.menu.main_video, menu);
            return true;
        }

        @SuppressWarnings("deprecation")
        private void initCamera() {
            if (!isPreview) {
                int k = 0;
                if ((k = FindBackCamera()) != -1) {
                    camera = Camera.open(k);
                } else {
                    camera = Camera.open();
                }
                ClientThread.size = camera.getParameters().getPreviewSize();
            }
            if (camera != null && !isPreview) {
                try {
                    camera.setPreviewDisplay(sfh); // show the viewfinder image in the SurfaceView
                    Camera.Parameters parameters = camera.getParameters();
                    parameters.setPreviewSize(screenWidth, screenHeight / 4 * 3);
                    // capture 5 frames per second from the camera
                    parameters.setPreviewFrameRate(5);
                    parameters.setPictureFormat(ImageFormat.NV21); // set the picture format
                    parameters.setPictureSize(screenWidth, screenHeight / 4 * 3); // set the picture size
                    // Note: camera.setParameters(parameters) is never called, so the settings
                    // above are not applied and the camera keeps its defaults.
                    camera.setDisplayOrientation(90);
                    camera.setPreviewCallback(new PreviewCallback() {
                        @Override
                        public void onPreviewFrame(byte[] data, Camera c) {
                            Size size = camera.getParameters().getPreviewSize();
                            try {
                                // Wrap the NV21 preview data in a YuvImage; ClientThread
                                // later calls compressToJpeg() to turn it into a JPEG
                                YuvImage image = new YuvImage(data, ImageFormat.NV21,
                                        size.width, size.height, null);
                                if (image != null) {
                                    Message msg = clientThread.revHandler.obtainMessage();
                                    msg.what = 0x111;
                                    msg.obj = image;
                                    clientThread.revHandler.sendMessage(msg);
                                }
                            } catch (Exception ex) {
                                Log.e("Sys", "Error:" + ex.getMessage());
                            }
                        }
                    });
                    camera.startPreview();  // start the preview
                    camera.autoFocus(null); // autofocus
                } catch (Exception e) {
                    e.printStackTrace();
                }
                isPreview = true;
            }
        }

        static class MyHandler extends Handler {
            @Override
            public void handleMessage(Message msg) {
                if (msg.what == COMPLETED) {
                    bitmap = (Bitmap) msg.obj;
                    //image.setPivotX(image.getWidth()/2);
                    //image.setPivotX(image.getHeight()/2);
                    //image.setImageBitmap(bitmap);
                    image.setLayoutParams(new RelativeLayout.LayoutParams(screenWidth, screenHeight));
                    image.setImageBitmap(bitmap);
                    image.setRotation(90);
                    super.handleMessage(msg);
                }
            }
        }

        // Find the front-facing camera
        private int FindFrontCamera() {
            int cameraCount = 0;
            Camera.CameraInfo cameraInfo = new Camera.CameraInfo();
            cameraCount = Camera.getNumberOfCameras(); // get cameras number
            for (int camIdx = 0; camIdx < cameraCount; camIdx++) {
                Camera.getCameraInfo(camIdx, cameraInfo); // get camerainfo
                // facing is the direction of the camera; the two defined values are
                // CAMERA_FACING_FRONT (front) and CAMERA_FACING_BACK (back)
                if (cameraInfo.facing == Camera.CameraInfo.CAMERA_FACING_FRONT) {
                    return camIdx;
                }
            }
            return -1;
        }

        // Find the back-facing camera
        private int FindBackCamera() {
            int cameraCount = 0;
            Camera.CameraInfo cameraInfo = new Camera.CameraInfo();
            cameraCount = Camera.getNumberOfCameras(); // get cameras number
            for (int camIdx = 0; camIdx < cameraCount; camIdx++) {
                Camera.getCameraInfo(camIdx, cameraInfo); // get camerainfo
                // facing is the direction of the camera; the two defined values are
                // CAMERA_FACING_FRONT (front) and CAMERA_FACING_BACK (back)
                if (cameraInfo.facing == Camera.CameraInfo.CAMERA_FACING_BACK) {
                    return camIdx;
                }
            }
            return -1;
        }
    }

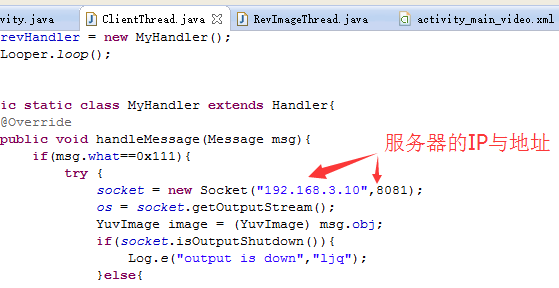

    package com.example.threadConnect;

    import java.io.BufferedReader;
    import java.io.ByteArrayInputStream;
    import java.io.ByteArrayOutputStream;
    import java.io.IOException;
    import java.io.OutputStream;
    import java.io.UnsupportedEncodingException;
    import java.net.Socket;

    import android.graphics.Rect;
    import android.graphics.YuvImage;
    import android.hardware.Camera.Size;
    import android.os.Handler;
    import android.os.Looper;
    import android.os.Message;
    import android.util.Log;

    public class ClientThread implements Runnable {
        private static Socket socket;
        private static ByteArrayOutputStream outputstream;
        private static byte byteBuffer[] = new byte[1024];
        public static Size size;

        // Receives messages from the UI thread
        public MyHandler revHandler;

        BufferedReader br = null;
        static OutputStream os = null;

        @Override
        public void run() {
            Looper.prepare();
            // Handler bound to this thread's Looper; receives the frames sent by the UI
            revHandler = new MyHandler();
            Looper.loop();
        }

        public static class MyHandler extends Handler {
            @Override
            public void handleMessage(Message msg) {
                if (msg.what == 0x111) {
                    try {
                        // A new connection is opened for every frame. The address is
                        // hard-coded; note that RevImageThread listens on port 8080.
                        socket = new Socket("192.168.3.10", 8081);
                        os = socket.getOutputStream();
                        YuvImage image = (YuvImage) msg.obj;
                        if (socket.isOutputShutdown()) {
                            Log.e("output is down", "ljq");
                        } else {
                            os = socket.getOutputStream();
                            outputstream = new ByteArrayOutputStream();
                            // Compress the YUV frame to JPEG at quality 80
                            image.compressToJpeg(new Rect(0, 0, size.width, size.height), 80, outputstream);
                            ByteArrayInputStream inputstream =
                                    new ByteArrayInputStream(outputstream.toByteArray());
                            int amount;
                            while ((amount = inputstream.read(byteBuffer)) != -1) {
                                os.write(byteBuffer, 0, amount);
                            }
                            os.write(" ".getBytes());
                            outputstream.flush();
                            outputstream.close();
                            os.flush();
                            os.close();
                            socket.close();
                        }
                    } catch (UnsupportedEncodingException e) {
                        e.printStackTrace();
                    } catch (IOException e) {
                        e.printStackTrace();
                    }
                }
            }
        }
    }

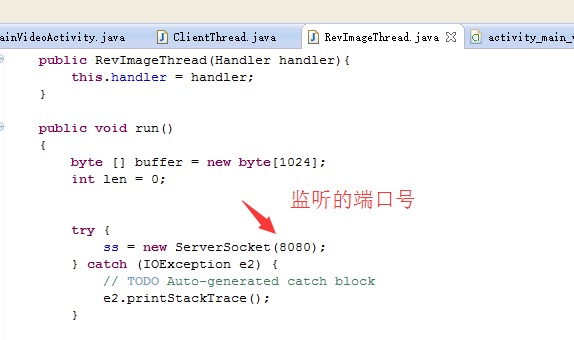

    package com.example.threadConnect;

    import java.io.ByteArrayOutputStream;
    import java.io.IOException;
    import java.io.InputStream;
    import java.net.ServerSocket;
    import java.net.Socket;

    import android.graphics.Bitmap;
    import android.graphics.BitmapFactory;
    import android.os.Handler;
    import android.os.Message;

    public class RevImageThread implements Runnable {
        public Socket s;
        public ServerSocket ss;

        // Sends messages to the UI thread
        private Handler handler;

        private Bitmap bitmap;
        private static final int COMPLETED = 0x222;

        public RevImageThread(Handler handler) {
            this.handler = handler;
        }

        public void run() {
            byte[] buffer = new byte[1024];
            int len = 0;
            try {
                ss = new ServerSocket(8080);
            } catch (IOException e2) {
                e2.printStackTrace();
            }
            InputStream ins = null;
            while (true) {
                try {
                    // One connection carries exactly one frame: read until the sender closes it
                    s = ss.accept();
                    ins = s.getInputStream();
                    ByteArrayOutputStream outStream = new ByteArrayOutputStream();
                    while ((len = ins.read(buffer)) != -1) {
                        outStream.write(buffer, 0, len);
                    }
                    ins.close();
                    byte data[] = outStream.toByteArray();
                    bitmap = BitmapFactory.decodeByteArray(data, 0, data.length);

                    // Hand the decoded frame to the UI thread
                    Message msg = handler.obtainMessage();
                    msg.what = COMPLETED;
                    msg.obj = bitmap;
                    handler.sendMessage(msg);

                    outStream.flush();
                    outStream.close();
                    if (!s.isClosed()) {
                        s.close();
                    }
                } catch (IOException e) {
                    e.printStackTrace();
                }
                //Bitmap bitmap = BitmapFactory.decodeStream(ins);
            }
        }
    }
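
Stripped of the Android parts, the transfer scheme used by `ClientThread` and `RevImageThread` is one TCP connection per frame: the sender connects, writes the JPEG bytes and closes, and the receiver reads until end of stream. Below is a minimal plain-Java sketch of that scheme over the loopback interface. It is not the original code: the class name `PerFrameConnectionDemo`, its method names, the 5-second accept timeout and the OS-assigned port are all assumptions made for illustration.

```java
import java.io.ByteArrayOutputStream;
import java.io.IOException;
import java.io.InputStream;
import java.io.OutputStream;
import java.net.InetAddress;
import java.net.ServerSocket;
import java.net.Socket;

/** Hypothetical demo class; it only mirrors the connect-write-close pattern of the post. */
final class PerFrameConnectionDemo {
    private PerFrameConnectionDemo() {}

    /** Sender side: connect, write one frame, close. Closing marks the end of the frame. */
    static void sendFrame(InetAddress host, int port, byte[] frame) throws IOException {
        try (Socket socket = new Socket(host, port);
             OutputStream out = socket.getOutputStream()) {
            out.write(frame);
            out.flush();
        }
    }

    /** Receiver side: accept one connection and read until the sender closes it. */
    static byte[] receiveFrame(ServerSocket server) throws IOException {
        try (Socket socket = server.accept();
             InputStream in = socket.getInputStream()) {
            ByteArrayOutputStream collected = new ByteArrayOutputStream();
            byte[] buffer = new byte[1024];
            int len;
            while ((len = in.read(buffer)) != -1) {
                collected.write(buffer, 0, len);
            }
            return collected.toByteArray();
        }
    }

    /** Sends one frame to a receiver on the loopback interface and returns what arrived. */
    static byte[] loopback(byte[] frame) throws IOException, InterruptedException {
        InetAddress local = InetAddress.getLoopbackAddress();
        // Port 0 asks the OS for any free port (an assumption; the post hard-codes its ports).
        try (ServerSocket server = new ServerSocket(0, 1, local)) {
            server.setSoTimeout(5000); // assumed timeout so accept() cannot block forever
            int port = server.getLocalPort();
            // The sender runs on its own thread, as it does in the app.
            Thread sender = new Thread(() -> {
                try {
                    sendFrame(local, port, frame);
                } catch (IOException e) {
                    e.printStackTrace();
                }
            });
            sender.start();
            byte[] received = receiveFrame(server);
            sender.join();
            return received;
        }
    }
}
```

Calling `PerFrameConnectionDemo.loopback(bytes)` should hand back a copy of `bytes`. Since the end of the connection is the only frame delimiter in this scheme, every frame costs a fresh TCP handshake.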

Layout

    <RelativeLayout xmlns:android="http://schemas.android.com/apk/res/android"
        xmlns:tools="http://schemas.android.com/tools"
        android:layout_width="match_parent"
        android:layout_height="match_parent"
        android:paddingBottom="@dimen/activity_vertical_margin"
        android:paddingLeft="@dimen/activity_horizontal_margin"
        android:paddingRight="@dimen/activity_horizontal_margin"
        android:paddingTop="@dimen/activity_vertical_margin"
        android:orientation="vertical"
        tools:context=".MainVideoActivity" >

        <SurfaceView
            android:id="@+id/surfaceView"
            android:layout_width="fill_parent"
            android:layout_height="fill_parent"
            android:scaleType="fitCenter" />

        <ImageView
            android:id="@+id/imageView1"
            android:layout_width="fill_parent"
            android:layout_height="fill_parent"
            android:src="@drawable/ic_launcher"/>

    </RelativeLayout>

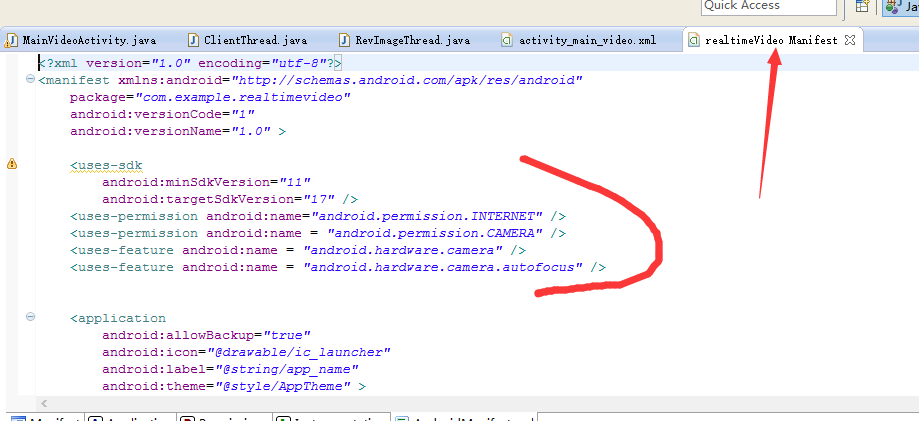

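Permissions: the code above uses the camera and network sockets, so the manifest needs at least `android.permission.CAMERA` and `android.permission.INTERNET`.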

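Oh, and about how to use it:

This program puts sending images and receiving images into a single app.

Anyone who knows TCP communication should be able to use it. Actually, that is not quite right: the original program has no input box for the address. I added one later, but I now feel it needs reworking, because the original program keeps opening and releasing the connection over and over, once for every frame.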
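
One possible way to stop opening and closing a socket for every frame is to keep a single connection open and prefix each JPEG with its length, so the receiver knows where one frame ends and the next begins. The following is only a sketch of that idea, not the finished rework: `FrameCodec`, `writeFrame`, `readFrame` and the 4 MiB `MAX_FRAME_BYTES` limit are hypothetical names and values that do not appear in the original source.

```java
import java.io.DataInputStream;
import java.io.DataOutputStream;
import java.io.EOFException;
import java.io.IOException;
import java.io.InputStream;
import java.io.OutputStream;

/** Hypothetical helper for length-prefixed frames; not part of the original source. */
final class FrameCodec {
    // Assumed upper bound on one frame, used to reject corrupt length headers.
    static final int MAX_FRAME_BYTES = 4 * 1024 * 1024;

    private FrameCodec() {}

    /** Writes a 4-byte big-endian length followed by the JPEG bytes. */
    static void writeFrame(OutputStream out, byte[] jpeg) throws IOException {
        DataOutputStream data = new DataOutputStream(out);
        data.writeInt(jpeg.length);
        data.write(jpeg);
        data.flush();
    }

    /** Reads one frame; returns null if the stream ends before a full length header arrives. */
    static byte[] readFrame(InputStream in) throws IOException {
        DataInputStream data = new DataInputStream(in);
        int length;
        try {
            length = data.readInt();
        } catch (EOFException endOfStream) {
            return null;
        }
        if (length < 0 || length > MAX_FRAME_BYTES) {
            throw new IOException("Invalid frame length: " + length);
        }
        byte[] jpeg = new byte[length];
        data.readFully(jpeg); // blocks until the whole frame has arrived
        return jpeg;
    }
}
```

On the sending side, `writeFrame(socket.getOutputStream(), jpegBytes)` would be called once per preview frame on the same socket; the receiving loop would call `readFrame` on the accepted socket's input stream until it returns `null`.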

To be continued. Once it is fixed, I will post my improved code with a detailed explanation, written out step by step.
