Spark 1.5.2 Spark Streaming 学习笔记和编程练习

Overview 概述

Spark Streaming is an extension of the core Spark API that enables scalable, high-throughput, fault-tolerant stream processing of live data streams. Data can be ingested from many sources like Kafka, Flume, Twitter, ZeroMQ, Kinesis, or TCP sockets, and can be processed using complex algorithms expressed with high-level functions like map, reduce, join and window. Finally, processed data can be pushed out to filesystems, databases, and live dashboards. In fact, you can apply Spark’s machine learning and graph processing algorithms on data streams.

Spark Streaming 是核心Spark API的一个扩展,其处理实时流数据具有可扩展性、高吞吐量,容错性。数据可以通过多种源加载进来,如Kafka, Flume, Twitter, ZeroMQ, Kinesis, or TCP sockets;并且能够使用像map, reduce, join and window这样高级别的复杂算法处理。数据处理后可以输出到文件系统,如databases, and live dashboards。你也可以使用spark的机器学习,图处理算法在数据流上。

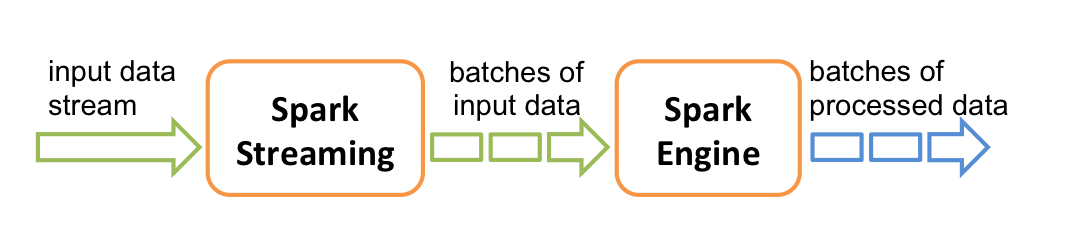

Internally, it works as follows. Spark Streaming receives live input data streams and divides the data into batches, which are then processed by the Spark engine to generate the final stream of results in batches.

Spark Streaming provides a high-level abstraction called discretized stream or DStream, which represents a continuous stream of data. DStreams can be created either from input data streams from sources such as Kafka, Flume, and Kinesis, or by applying high-level operations on other DStreams. Internally, a DStream is represented as a sequence of RDDs.

Spark Streaming提出了一个高度抽象的概念叫做离散流或者DStream,来表达一个连续的流数据。一个Dstream可以看作一系列RDD。

Java编程练习:

一个spark streaming从socket获取数据进行单词统计的例子:(pom文件要添加spark相关依赖)

socket代码:

说明:启动一个socket服务端,等待连接,连接之后,重复输出一个字符串到连接的socket中。socket地址为本机,9999端口。

1 import java.io.*; 2 import java.net.ServerSocket; 3 import java.net.Socket; 4 import java.util.Date; 5 6 /** 7 * socket服务端简单实现,主要作用往socket客户端发送数据 8 */ 9 public class SocketServerPut { 10 public static void main(String[] args) { 11 try { 12 ServerSocket serverSocket = new ServerSocket(9999); 13 Socket socket=null; 14 while(true) { 15 socket = serverSocket.accept(); 16 while(socket.isConnected()) { 17 // 向服务器端发送数据 18 OutputStream os = socket.getOutputStream(); 19 DataOutputStream bos = new DataOutputStream(os); 20 //每隔20ms发送一次数据 21 String str="Connect 123 test spark streaming abc xyz hik "; 22 while(true){ 23 bos.writeUTF(str); 24 bos.flush(); 25 System.out.println(str); 26 //20ms发送一次数据 27 try { 28 Thread.sleep(500L); 29 } catch (InterruptedException e) { 30 e.printStackTrace(); 31 } 32 } 33 } 34 //10ms检测一次连接 35 try { 36 Thread.sleep(10L); 37 } catch (InterruptedException e) { 38 e.printStackTrace(); 39 } 40 } 41 } catch (IOException e) { 42 e.printStackTrace(); 43 } 44 } 45 }

Spark Streaming 处理代码:

1 import org.apache.spark.SparkConf; 2 import org.apache.spark.api.java.function.FlatMapFunction; 3 import org.apache.spark.api.java.function.Function2; 4 import org.apache.spark.api.java.function.PairFunction; 5 import org.apache.spark.streaming.Durations; 6 import org.apache.spark.streaming.api.java.JavaDStream; 7 import org.apache.spark.streaming.api.java.JavaPairDStream; 8 import org.apache.spark.streaming.api.java.JavaReceiverInputDStream; 9 import org.apache.spark.streaming.api.java.JavaStreamingContext; 10 import scala.Tuple2; 11 12 import java.util.Arrays; 13 14 /** 15 * streaming从socket获取数据处理 16 */ 17 public class StreamingFromSocket { 18 public static void main(String[] args) { 19 //设置运行模式local 设置appname 20 SparkConf conf=new SparkConf().setMaster("local[2]").setAppName("StreamingFromSocketTest"); 21 //初始化,设置窗口大小为2s 22 JavaStreamingContext jssc=new JavaStreamingContext(conf, Durations.seconds(2L)); 23 //从本地Socket的9999端口读取数据 24 JavaReceiverInputDStream<String> lines= jssc.socketTextStream("localhost", 9999); 25 //把一行数据转化成单个单次 以空格分隔 26 JavaDStream<String> words = lines.flatMap(new FlatMapFunction<String,String>(){ 27 @Override 28 public Iterable<String> call(String x){ 29 return Arrays.asList(x.split(" ")); 30 } 31 }); 32 //计算每一个单次在一个batch里出现的个数 33 JavaPairDStream<String, Integer> pairs= words.mapToPair(new PairFunction<String, String, Integer>() { 34 @Override 35 public Tuple2<String, Integer> call(String s) throws Exception { 36 return new Tuple2<String, Integer>(s,1); 37 } 38 }); 39 JavaPairDStream<String,Integer> wordCounts=pairs.reduceByKey(new Function2<Integer, Integer, Integer>() { 40 @Override 41 public Integer call(Integer integer, Integer integer2) throws Exception { 42 return integer+integer2; 43 } 44 }); 45 //输出统计结果 46 wordCounts.print(); 47 jssc.start(); 48 //20s后结束 49 jssc.awaitTerminationOrTimeout(20*1000L); 50 51 } 52 }

输出结果:

-------------------------------------------

Time: 1470385522000 ms

-------------------------------------------

(hik,4)

(123,4)

(streaming,4)

(abc,4)

(test,4)

初始化streamingContext

1、方式一:使用sparkconf初始化

import org.apache.spark.*; import org.apache.spark.streaming.api.java.*; SparkConf conf = new SparkConf().setAppName(appName).setMaster(master); JavaStreamingContext ssc = new JavaStreamingContext(conf, Duration(1000));

2、由已存在的sparkcontext初始化

import org.apache.spark.streaming.api.java.*; JavaSparkContext sc = ... //existing JavaSparkContext JavaStreamingContext ssc = new JavaStreamingContext(sc, Durations.seconds(1));

After a context is defined, you have to do the following.

- Define the input sources by creating input DStreams.

- Define the streaming computations by applying transformation and output operations to DStreams.

- Start receiving data and processing it using

streamingContext.start(). - Wait for the processing to be stopped (manually or due to any error) using

streamingContext.awaitTermination(). - The processing can be manually stopped using

streamingContext.stop().

Points to remember:

- Once a context has been started, no new streaming computations can be set up or added to it.

- Once a context has been stopped, it cannot be restarted.

- Only one StreamingContext can be active in a JVM at the same time.

- stop() on StreamingContext also stops the SparkContext. To stop only the StreamingContext, set the optional parameter of

stop()calledstopSparkContextto false. - A SparkContext can be re-used to create multiple StreamingContexts, as long as the previous StreamingContext is stopped (without stopping the SparkContext) before the next StreamingContext is created.

初始化Context后,需要做如下几件事情,才能完成一个job。

1)定义一个输入源,从而产生DStreams;

2)定义streaming计算通过对DStreams应用转换和输出操作;

3)使用streamingContext.start()语句开始接受数据并进行处理;

4)使用streamingContext.awaitTermination().让程序等待job完成;程序异常也可导致停止job;

5)使用streamingContext.stop()可以停止job;

注意项:

1)当context开始后,新的streaming computation不能被设置和添加进来;

2)context停止后,不能重启;

3)同一时间JVM(java虚拟机)中只允许一个StreamingContext存在;

4)停止StreamingContext后,sparkcontext也会停止;如果你只想停止StreamingContext,你可以在stop的参数中设置stopSparkContext为false;

5)一个SparkContext可以被重复使用去创建StreamingContext,但新的StreamingContext被创建前,前一个StreamingContext要停止。

未完待续