Hadoop生态圈-flume日志收集工具完全分布式部署

作者:尹正杰

版权声明:原创作品,谢绝转载!否则将追究法律责任。

目前为止,Hadoop的一个主流应用就是对于大规模web日志的分析和处理,因此想要把web服务的日志导入到Hadoop来进行分析就得借助日志收集工具了。目前主流的Hadoop日志收集工具能够跟Hadoop进行交接的有三个工具,即flume,scribe和chukwa。本篇博客的主角是Apache的flume。

一.主流日志收集工具简介

二.Flume简介

1>.访问Flume官网(http://flume.apache.org/)

2>.flume核心组件介绍

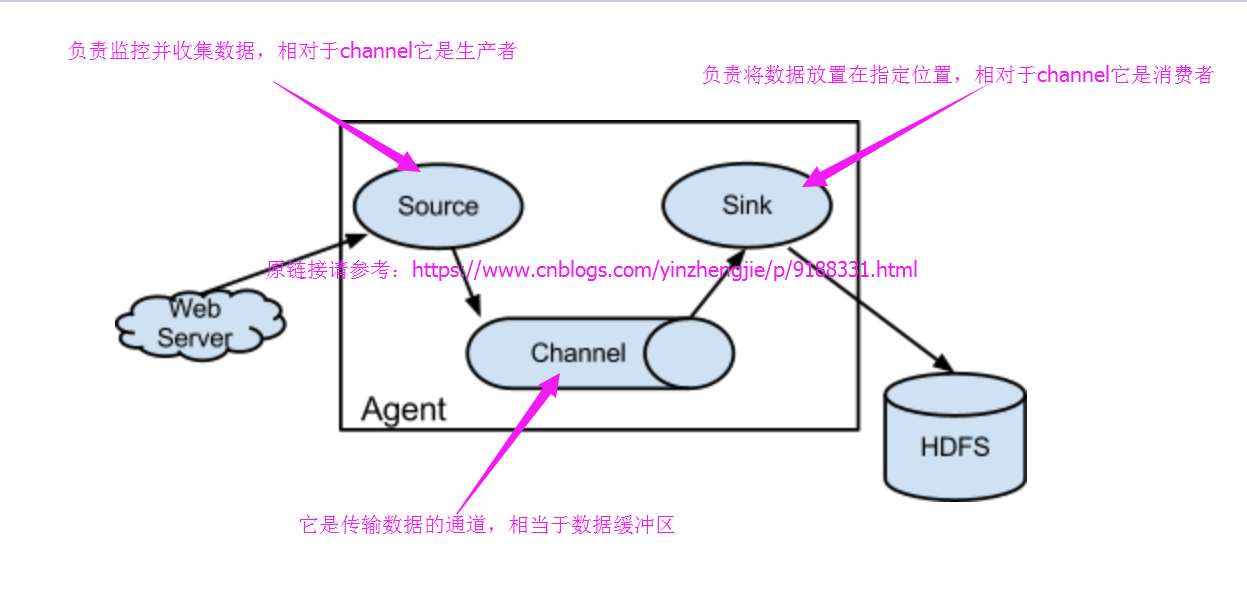

Flume的客户端时Agent,从官网查看的Flume架构图可以明显的看出它有三个组件,即source,channel以及sink。这三个组件的作用如下:

a>.souce:生产源数据,负责监控并收集数据,相对于channel是生产者;

b>.channel:它是传输数据的通道,相当于数据缓冲区;

c>.sink:它是沉槽,负责将数据放置在指定位置,相对于channel是消费者;

三.Flume的完全分布式部署方式

最新版本的部署方式请参考:http://flume.apache.org/FlumeUserGuide.html,以下是我部署Flume的详细笔记。

1>.解压并创建符号链接

[yinzhengjie@s101 data]$ tar zxf apache-flume-1.8.0-bin.tar.gz -C /soft/ [yinzhengjie@s101 data]$ ln -s /soft/apache-flume-1.8.0-bin/ /soft/flume [yinzhengjie@s101 data]$

2>.修改环境变量并使之生效

[yinzhengjie@s101 data]$ sudo vi /etc/profile [sudo] password for yinzhengjie: [yinzhengjie@s101 data]$ [yinzhengjie@s101 data]$ [yinzhengjie@s101 data]$ tail -3 /etc/profile #ADD Flume PATH export FLUME_HOME=/soft/flume PATH=$PATH:$FLUME_HOME/bin [yinzhengjie@s101 data]$ [yinzhengjie@s101 data]$ source /etc/profile [yinzhengjie@s101 data]$

3>.查看flume版本

[yinzhengjie@s101 data]$ flume-ng version Flume 1.8.0 Source code repository: https://git-wip-us.apache.org/repos/asf/flume.git Revision: 99f591994468633fc6f8701c5fc53e0214b6da4f Compiled by denes on Fri Sep 15 14:58:00 CEST 2017 From source with checksum fbb44c8c8fb63a49be0a59e27316833d [yinzhengjie@s101 data]$

4>.创建flume环境变量文件(flume-env.sh)

[yinzhengjie@s101 data]$ cp /soft/flume/conf/flume-env.sh.template /soft/flume/conf/flume-env.sh [yinzhengjie@s101 data]$ [yinzhengjie@s101 data]$ [yinzhengjie@s101 data]$ sed -i 's@# export JAVA_HOME=/usr/lib/jvm/java-8-oracle@export JAVA_HOME=/soft/jdk@g' /soft/flume/conf/flume-env.sh [yinzhengjie@s101 data]$ [yinzhengjie@s101 data]$ more /soft/flume/conf/flume-env.sh | grep "JAVA_HOME" export JAVA_HOME=/soft/jdk [yinzhengjie@s101 data]$

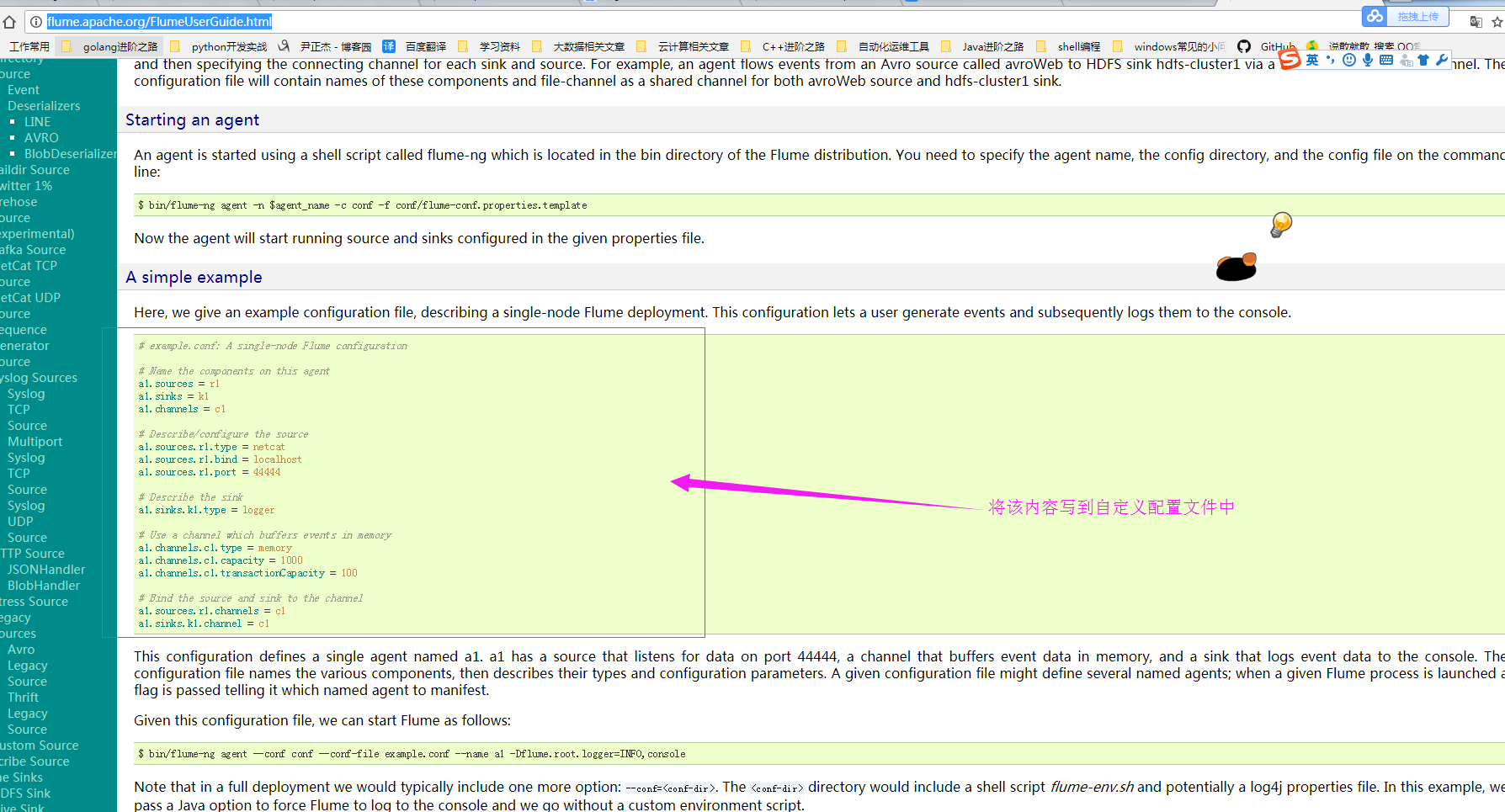

5>.生产一个自定义配置文件(yinzhengjie.conf)

[yinzhengjie@s101 data]$ more /soft/flume/conf/yinzhengjie.conf # example.conf: A single-node Flume configuration # Name the components on this agent a1.sources = r1 a1.sinks = k1 a1.channels = c1 # Describe/configure the source a1.sources.r1.type = netcat a1.sources.r1.bind = localhost a1.sources.r1.port = 44444 # Describe the sink a1.sinks.k1.type = logger # Use a channel which buffers events in memory a1.channels.c1.type = memory a1.channels.c1.capacity = 1000 a1.channels.c1.transactionCapacity = 100 # Bind the source and sink to the channel a1.sources.r1.channels = c1 a1.sinks.k1.channel = c1 [yinzhengjie@s101 data]$

6>.启动flume(flume-ng agent -f /soft/flume/conf/yinzhengjie.conf -n a1)

[yinzhengjie@s101 data]$ flume-ng agent -f /soft/flume/conf/yinzhengjie.conf -n a1 Warning: No configuration directory set! Use --conf <dir> to override. Warning: JAVA_HOME is not set! Info: Including Hadoop libraries found via (/soft/hadoop/bin/hadoop) for HDFS access Info: Including HBASE libraries found via (/soft/hbase/bin/hbase) for HBASE access Info: Including Hive libraries found via () for Hive access + exec /soft/jdk/bin/java -Xmx20m -cp '/soft/flume/lib/*:/soft/hadoop-2.7.3/etc/hadoop:/soft/hadoop-2.7.3/share/hadoop/common/lib/*:/soft/hadoop-2.7.3/share/hadoop/common/*:/soft/hadoop-2.7.3/share/hadoop/hdfs:/soft/hadoop-2.7.3/share/hadoop/hdfs/lib/*:/soft/hadoop-2.7.3/share/hadoop/hdfs/*:/soft/hadoop-2.7.3/share/hadoop/yarn/lib/*:/soft/hadoop-2.7.3/share/hadoop/yarn/*:/soft/hadoop-2.7.3/share/hadoop/mapreduce/lib/*:/soft/hadoop-2.7.3/share/hadoop/mapreduce/*:/contrib/capacity-scheduler/*.jar:/soft/hbase/bin/../conf:/soft/jdk//lib/tools.jar:/soft/hbase/bin/..:/soft/hbase/bin/../lib/activation-1.1.jar:/soft/hbase/bin/../lib/aopalliance-1.0.jar:/soft/hbase/bin/../lib/apacheds-i18n-2.0.0-M15.jar:/soft/hbase/bin/../lib/apacheds-kerberos-codec-2.0.0-M15.jar:/soft/hbase/bin/../lib/api-asn1-api-1.0.0-M20.jar:/soft/hbase/bin/../lib/api-util-1.0.0-M20.jar:/soft/hbase/bin/../lib/asm-3.1.jar:/soft/hbase/bin/../lib/avro-1.7.4.jar:/soft/hbase/bin/../lib/commons-beanutils-1.7.0.jar:/soft/hbase/bin/../lib/commons-beanutils-core-1.8.0.jar:/soft/hbase/bin/../lib/commons-cli-1.2.jar:/soft/hbase/bin/../lib/commons-codec-1.9.jar:/soft/hbase/bin/../lib/commons-collections-3.2.2.jar:/soft/hbase/bin/../lib/commons-compress-1.4.1.jar:/soft/hbase/bin/../lib/commons-configuration-1.6.jar:/soft/hbase/bin/../lib/commons-daemon-1.0.13.jar:/soft/hbase/bin/../lib/commons-digester-1.8.jar:/soft/hbase/bin/../lib/commons-el-1.0.jar:/soft/hbase/bin/../lib/commons-httpclient-3.1.jar:/soft/hbase/bin/../lib/commons-io-2.4.jar:/soft/hbase/bin/../lib/commons-lang-2.6.jar:/soft/hbase/bin/../lib/commons-logging-1.2.jar:/soft/hbase/bin/../lib/commons-math-2.2.jar:/soft/hbase/bin/../lib/commons-math3-3.1.1.jar:/soft/hbase/bin/../lib/commons-net-3.1.jar:/soft/hbase/bin/../lib/disruptor-3.3.0.jar:/soft/hbase/bin/../lib/findbugs-annotations-1.3.9-1.jar:/soft/hbase/bin/../lib/guava-12.0.1.jar:/soft/hbase/bin/../lib/guice-3.0.jar:/soft/hbase/bin/../lib/guice-servlet-3.0.jar:/soft/hbase/bin/../lib/hadoop-annotations-2.5.1.jar:/soft/hbase/bin/../lib/hadoop-auth-2.5.1.jar:/soft/hbase/bin/../lib/hadoop-client-2.5.1.jar:/soft/hbase/bin/../lib/hadoop-common-2.5.1.jar:/soft/hbase/bin/../lib/hadoop-hdfs-2.5.1.jar:/soft/hbase/bin/../lib/hadoop-mapreduce-client-app-2.5.1.jar:/soft/hbase/bin/../lib/hadoop-mapreduce-client-common-2.5.1.jar:/soft/hbase/bin/../lib/hadoop-mapreduce-client-core-2.5.1.jar:/soft/hbase/bin/../lib/hadoop-mapreduce-client-jobclient-2.5.1.jar:/soft/hbase/bin/../lib/hadoop-mapreduce-client-shuffle-2.5.1.jar:/soft/hbase/bin/../lib/hadoop-yarn-api-2.5.1.jar:/soft/hbase/bin/../lib/hadoop-yarn-client-2.5.1.jar:/soft/hbase/bin/../lib/hadoop-yarn-common-2.5.1.jar:/soft/hbase/bin/../lib/hadoop-yarn-server-common-2.5.1.jar:/soft/hbase/bin/../lib/hbase-annotations-1.2.6.jar:/soft/hbase/bin/../lib/hbase-annotations-1.2.6-tests.jar:/soft/hbase/bin/../lib/hbase-client-1.2.6.jar:/soft/hbase/bin/../lib/hbase-common-1.2.6.jar:/soft/hbase/bin/../lib/hbase-common-1.2.6-tests.jar:/soft/hbase/bin/../lib/hbase-examples-1.2.6.jar:/soft/hbase/bin/../lib/hbase-external-blockcache-1.2.6.jar:/soft/hbase/bin/../lib/hbase-hadoop2-compat-1.2.6.jar:/soft/hbase/bin/../lib/hbase-hadoop-compat-1.2.6.jar:/soft/hbase/bin/../lib/hbase-it-1.2.6.jar:/soft/hbase/bin/../lib/hbase-it-1.2.6-tests.jar:/soft/hbase/bin/../lib/hbase-prefix-tree-1.2.6.jar:/soft/hbase/bin/../lib/hbase-procedure-1.2.6.jar:/soft/hbase/bin/../lib/hbase-protocol-1.2.6.jar:/soft/hbase/bin/../lib/hbase-resource-bundle-1.2.6.jar:/soft/hbase/bin/../lib/hbase-rest-1.2.6.jar:/soft/hbase/bin/../lib/hbase-server-1.2.6.jar:/soft/hbase/bin/../lib/hbase-server-1.2.6-tests.jar:/soft/hbase/bin/../lib/hbase-shell-1.2.6.jar:/soft/hbase/bin/../lib/hbase-thrift-1.2.6.jar:/soft/hbase/bin/../lib/htrace-core-3.1.0-incubating.jar:/soft/hbase/bin/../lib/httpclient-4.2.5.jar:/soft/hbase/bin/../lib/httpcore-4.4.1.jar:/soft/hbase/bin/../lib/jackson-core-asl-1.9.13.jar:/soft/hbase/bin/../lib/jackson-jaxrs-1.9.13.jar:/soft/hbase/bin/../lib/jackson-mapper-asl-1.9.13.jar:/soft/hbase/bin/../lib/jackson-xc-1.9.13.jar:/soft/hbase/bin/../lib/jamon-runtime-2.4.1.jar:/soft/hbase/bin/../lib/jasper-compiler-5.5.23.jar:/soft/hbase/bin/../lib/jasper-runtime-5.5.23.jar:/soft/hbase/bin/../lib/javax.inject-1.jar:/soft/hbase/bin/../lib/java-xmlbuilder-0.4.jar:/soft/hbase/bin/../lib/jaxb-api-2.2.2.jar:/soft/hbase/bin/../lib/jaxb-impl-2.2.3-1.jar:/soft/hbase/bin/../lib/jcodings-1.0.8.jar:/soft/hbase/bin/../lib/jersey-client-1.9.jar:/soft/hbase/bin/../lib/jersey-core-1.9.jar:/soft/hbase/bin/../lib/jersey-guice-1.9.jar:/soft/hbase/bin/../lib/jersey-json-1.9.jar:/soft/hbase/bin/../lib/jersey-server-1.9.jar:/soft/hbase/bin/../lib/jets3t-0.9.0.jar:/soft/hbase/bin/../lib/jettison-1.3.3.jar:/soft/hbase/bin/../lib/jetty-6.1.26.jar:/soft/hbase/bin/../lib/jetty-sslengine-6.1.26.jar:/soft/hbase/bin/../lib/jetty-util-6.1.26.jar:/soft/hbase/bin/../lib/joni-2.1.2.jar:/soft/hbase/bin/../lib/jruby-complete-1.6.8.jar:/soft/hbase/bin/../lib/jsch-0.1.42.jar:/soft/hbase/bin/../lib/jsp-2.1-6.1.14.jar:/soft/hbase/bin/../lib/jsp-api-2.1-6.1.14.jar:/soft/hbase/bin/../lib/junit-4.12.jar:/soft/hbase/bin/../lib/leveldbjni-all-1.8.jar:/soft/hbase/bin/../lib/libthrift-0.9.3.jar:/soft/hbase/bin/../lib/log4j-1.2.17.jar:/soft/hbase/bin/../lib/metrics-core-2.2.0.jar:/soft/hbase/bin/../lib/MyHbase-1.0-SNAPSHOT.jar:/soft/hbase/bin/../lib/netty-all-4.0.23.Final.jar:/soft/hbase/bin/../lib/paranamer-2.3.jar:/soft/hbase/bin/../lib/phoenix-4.10.0-HBase-1.2-client.jar:/soft/hbase/bin/../lib/protobuf-java-2.5.0.jar:/soft/hbase/bin/../lib/servlet-api-2.5-6.1.14.jar:/soft/hbase/bin/../lib/servlet-api-2.5.jar:/soft/hbase/bin/../lib/slf4j-api-1.7.7.jar:/soft/hbase/bin/../lib/slf4j-log4j12-1.7.5.jar:/soft/hbase/bin/../lib/snappy-java-1.0.4.1.jar:/soft/hbase/bin/../lib/spymemcached-2.11.6.jar:/soft/hbase/bin/../lib/xmlenc-0.52.jar:/soft/hbase/bin/../lib/xz-1.0.jar:/soft/hbase/bin/../lib/zookeeper-3.4.6.jar:/soft/hadoop-2.7.3/etc/hadoop:/soft/hadoop-2.7.3/share/hadoop/common/lib/*:/soft/hadoop-2.7.3/share/hadoop/common/*:/soft/hadoop-2.7.3/share/hadoop/hdfs:/soft/hadoop-2.7.3/share/hadoop/hdfs/lib/*:/soft/hadoop-2.7.3/share/hadoop/hdfs/*:/soft/hadoop-2.7.3/share/hadoop/yarn/lib/*:/soft/hadoop-2.7.3/share/hadoop/yarn/*:/soft/hadoop-2.7.3/share/hadoop/mapreduce/lib/*:/soft/hadoop-2.7.3/share/hadoop/mapreduce/*::/soft/hive/lib/*:/soft/hive/lib/*:/contrib/capacity-scheduler/*.jar:/conf:/lib/*' -Djava.library.path=:/soft/hadoop-2.7.3/lib/native:/soft/hadoop-2.7.3/lib/native org.apache.flume.node.Application -f /soft/flume/conf/yinzhengjie.conf -n a1 SLF4J: Class path contains multiple SLF4J bindings. SLF4J: Found binding in [jar:file:/soft/apache-flume-1.8.0-bin/lib/slf4j-log4j12-1.6.1.jar!/org/slf4j/impl/StaticLoggerBinder.class] SLF4J: Found binding in [jar:file:/soft/hadoop-2.7.3/share/hadoop/common/lib/slf4j-log4j12-1.7.10.jar!/org/slf4j/impl/StaticLoggerBinder.class] SLF4J: Found binding in [jar:file:/soft/hbase-1.2.6/lib/phoenix-4.10.0-HBase-1.2-client.jar!/org/slf4j/impl/StaticLoggerBinder.class] SLF4J: Found binding in [jar:file:/soft/hbase-1.2.6/lib/slf4j-log4j12-1.7.5.jar!/org/slf4j/impl/StaticLoggerBinder.class] SLF4J: Found binding in [jar:file:/soft/apache-hive-2.1.1-bin/lib/log4j-slf4j-impl-2.4.1.jar!/org/slf4j/impl/StaticLoggerBinder.class] SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation. 18/06/14 16:28:36 INFO node.PollingPropertiesFileConfigurationProvider: Configuration provider starting 18/06/14 16:28:36 INFO node.PollingPropertiesFileConfigurationProvider: Reloading configuration file:/soft/flume/conf/yinzhengjie.conf 18/06/14 16:28:36 INFO conf.FlumeConfiguration: Added sinks: k1 Agent: a1 18/06/14 16:28:36 INFO conf.FlumeConfiguration: Processing:k1 18/06/14 16:28:36 INFO conf.FlumeConfiguration: Processing:k1 18/06/14 16:28:36 INFO conf.FlumeConfiguration: Post-validation flume configuration contains configuration for agents: [a1] 18/06/14 16:28:36 INFO node.AbstractConfigurationProvider: Creating channels 18/06/14 16:28:36 INFO channel.DefaultChannelFactory: Creating instance of channel c1 type memory 18/06/14 16:28:36 INFO node.AbstractConfigurationProvider: Created channel c1 18/06/14 16:28:36 INFO source.DefaultSourceFactory: Creating instance of source r1, type netcat 18/06/14 16:28:36 INFO sink.DefaultSinkFactory: Creating instance of sink: k1, type: logger 18/06/14 16:28:36 INFO node.AbstractConfigurationProvider: Channel c1 connected to [r1, k1] 18/06/14 16:28:36 INFO node.Application: Starting new configuration:{ sourceRunners:{r1=EventDrivenSourceRunner: { source:org.apache.flume.source.NetcatSource{name:r1,state:IDLE} }} sinkRunners:{k1=SinkRunner: { policy:org.apache.flume.sink.DefaultSinkProcessor@68970aaa counterGroup:{ name:null counters:{} } }} channels:{c1=org.apache.flume.channel.MemoryChannel{name: c1}} } 18/06/14 16:28:36 INFO node.Application: Starting Channel c1 18/06/14 16:28:37 INFO instrumentation.MonitoredCounterGroup: Monitored counter group for type: CHANNEL, name: c1: Successfully registered new MBean. 18/06/14 16:28:37 INFO instrumentation.MonitoredCounterGroup: Component type: CHANNEL, name: c1 started 18/06/14 16:28:37 INFO node.Application: Starting Sink k1 18/06/14 16:28:37 INFO node.Application: Starting Source r1 18/06/14 16:28:37 INFO source.NetcatSource: Source starting 18/06/14 16:28:37 INFO source.NetcatSource: Created serverSocket:sun.nio.ch.ServerSocketChannelImpl[/127.0.0.1:44444]

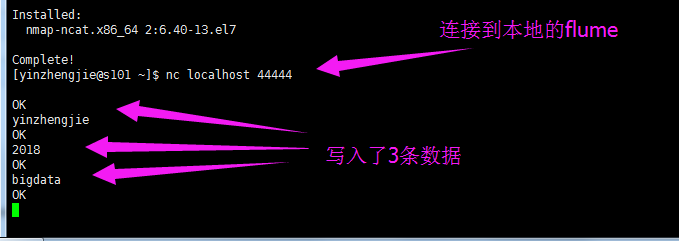

7>.开启另一会话,启动netcat

[yinzhengjie@s101 ~]$ sudo yum -y install nc Loaded plugins: fastestmirror Loading mirror speeds from cached hostfile * base: mirrors.tuna.tsinghua.edu.cn * epel: mirrors.tuna.tsinghua.edu.cn * extras: mirrors.tuna.tsinghua.edu.cn * updates: mirrors.nwsuaf.edu.cn Resolving Dependencies --> Running transaction check ---> Package nmap-ncat.x86_64 2:6.40-13.el7 will be installed --> Finished Dependency Resolution Dependencies Resolved ================================================================================================================================================================================================================ Package Arch Version Repository Size ================================================================================================================================================================================================================ Installing: nmap-ncat x86_64 2:6.40-13.el7 base 205 k Transaction Summary ================================================================================================================================================================================================================ Install 1 Package Total download size: 205 k Installed size: 423 k Downloading packages: nmap-ncat-6.40-13.el7.x86_64.rpm | 205 kB 00:00:00 Running transaction check Running transaction test Transaction test succeeded Running transaction Installing : 2:nmap-ncat-6.40-13.el7.x86_64 1/1 Verifying : 2:nmap-ncat-6.40-13.el7.x86_64 1/1 Installed: nmap-ncat.x86_64 2:6.40-13.el7 Complete! [yinzhengjie@s101 ~]$

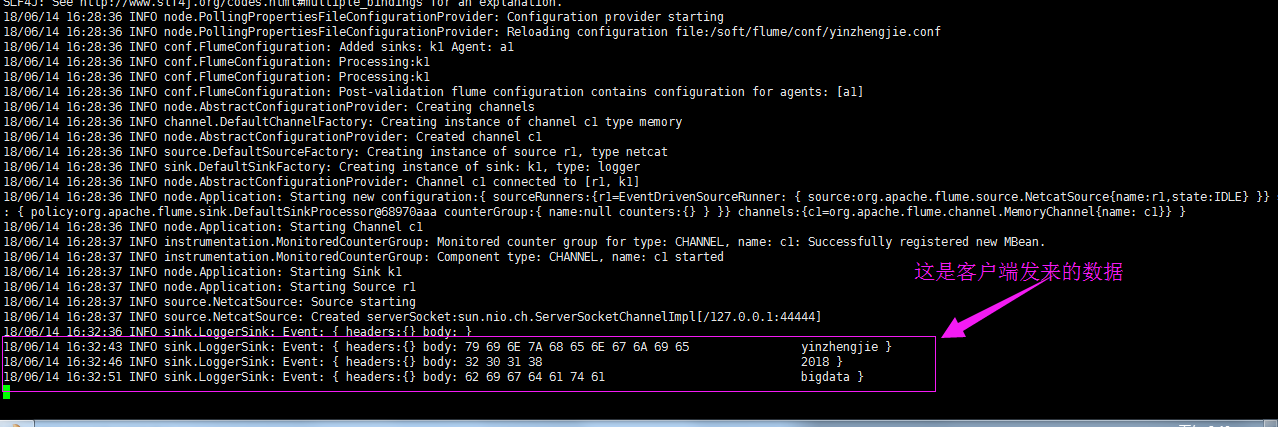

8>.在nc输入数据。flume会接收到数据(如果出现下图试验结果说明你flume部署成功啦!)

[yinzhengjie@s101 data]$ flume-ng agent -f /soft/flume/conf/yinzhengjie.conf -n a1 Warning: No configuration directory set! Use --conf <dir> to override. Warning: JAVA_HOME is not set! Info: Including Hadoop libraries found via (/soft/hadoop/bin/hadoop) for HDFS access Info: Including HBASE libraries found via (/soft/hbase/bin/hbase) for HBASE access Info: Including Hive libraries found via () for Hive access + exec /soft/jdk/bin/java -Xmx20m -cp '/soft/flume/lib/*:/soft/hadoop-2.7.3/etc/hadoop:/soft/hadoop-2.7.3/share/hadoop/common/lib/*:/soft/hadoop-2.7.3/share/hadoop/common/*:/soft/hadoop-2.7.3/share/hadoop/hdfs:/soft/hadoop-2.7.3/share/hadoop/hdfs/lib/*:/soft/hadoop-2.7.3/share/hadoop/hdfs/*:/soft/hadoop-2.7.3/share/hadoop/yarn/lib/*:/soft/hadoop-2.7.3/share/hadoop/yarn/*:/soft/hadoop-2.7.3/share/hadoop/mapreduce/lib/*:/soft/hadoop-2.7.3/share/hadoop/mapreduce/*:/contrib/capacity-scheduler/*.jar:/soft/hbase/bin/../conf:/soft/jdk//lib/tools.jar:/soft/hbase/bin/..:/soft/hbase/bin/../lib/activation-1.1.jar:/soft/hbase/bin/../lib/aopalliance-1.0.jar:/soft/hbase/bin/../lib/apacheds-i18n-2.0.0-M15.jar:/soft/hbase/bin/../lib/apacheds-kerberos-codec-2.0.0-M15.jar:/soft/hbase/bin/../lib/api-asn1-api-1.0.0-M20.jar:/soft/hbase/bin/../lib/api-util-1.0.0-M20.jar:/soft/hbase/bin/../lib/asm-3.1.jar:/soft/hbase/bin/../lib/avro-1.7.4.jar:/soft/hbase/bin/../lib/commons-beanutils-1.7.0.jar:/soft/hbase/bin/../lib/commons-beanutils-core-1.8.0.jar:/soft/hbase/bin/../lib/commons-cli-1.2.jar:/soft/hbase/bin/../lib/commons-codec-1.9.jar:/soft/hbase/bin/../lib/commons-collections-3.2.2.jar:/soft/hbase/bin/../lib/commons-compress-1.4.1.jar:/soft/hbase/bin/../lib/commons-configuration-1.6.jar:/soft/hbase/bin/../lib/commons-daemon-1.0.13.jar:/soft/hbase/bin/../lib/commons-digester-1.8.jar:/soft/hbase/bin/../lib/commons-el-1.0.jar:/soft/hbase/bin/../lib/commons-httpclient-3.1.jar:/soft/hbase/bin/../lib/commons-io-2.4.jar:/soft/hbase/bin/../lib/commons-lang-2.6.jar:/soft/hbase/bin/../lib/commons-logging-1.2.jar:/soft/hbase/bin/../lib/commons-math-2.2.jar:/soft/hbase/bin/../lib/commons-math3-3.1.1.jar:/soft/hbase/bin/../lib/commons-net-3.1.jar:/soft/hbase/bin/../lib/disruptor-3.3.0.jar:/soft/hbase/bin/../lib/findbugs-annotations-1.3.9-1.jar:/soft/hbase/bin/../lib/guava-12.0.1.jar:/soft/hbase/bin/../lib/guice-3.0.jar:/soft/hbase/bin/../lib/guice-servlet-3.0.jar:/soft/hbase/bin/../lib/hadoop-annotations-2.5.1.jar:/soft/hbase/bin/../lib/hadoop-auth-2.5.1.jar:/soft/hbase/bin/../lib/hadoop-client-2.5.1.jar:/soft/hbase/bin/../lib/hadoop-common-2.5.1.jar:/soft/hbase/bin/../lib/hadoop-hdfs-2.5.1.jar:/soft/hbase/bin/../lib/hadoop-mapreduce-client-app-2.5.1.jar:/soft/hbase/bin/../lib/hadoop-mapreduce-client-common-2.5.1.jar:/soft/hbase/bin/../lib/hadoop-mapreduce-client-core-2.5.1.jar:/soft/hbase/bin/../lib/hadoop-mapreduce-client-jobclient-2.5.1.jar:/soft/hbase/bin/../lib/hadoop-mapreduce-client-shuffle-2.5.1.jar:/soft/hbase/bin/../lib/hadoop-yarn-api-2.5.1.jar:/soft/hbase/bin/../lib/hadoop-yarn-client-2.5.1.jar:/soft/hbase/bin/../lib/hadoop-yarn-common-2.5.1.jar:/soft/hbase/bin/../lib/hadoop-yarn-server-common-2.5.1.jar:/soft/hbase/bin/../lib/hbase-annotations-1.2.6.jar:/soft/hbase/bin/../lib/hbase-annotations-1.2.6-tests.jar:/soft/hbase/bin/../lib/hbase-client-1.2.6.jar:/soft/hbase/bin/../lib/hbase-common-1.2.6.jar:/soft/hbase/bin/../lib/hbase-common-1.2.6-tests.jar:/soft/hbase/bin/../lib/hbase-examples-1.2.6.jar:/soft/hbase/bin/../lib/hbase-external-blockcache-1.2.6.jar:/soft/hbase/bin/../lib/hbase-hadoop2-compat-1.2.6.jar:/soft/hbase/bin/../lib/hbase-hadoop-compat-1.2.6.jar:/soft/hbase/bin/../lib/hbase-it-1.2.6.jar:/soft/hbase/bin/../lib/hbase-it-1.2.6-tests.jar:/soft/hbase/bin/../lib/hbase-prefix-tree-1.2.6.jar:/soft/hbase/bin/../lib/hbase-procedure-1.2.6.jar:/soft/hbase/bin/../lib/hbase-protocol-1.2.6.jar:/soft/hbase/bin/../lib/hbase-resource-bundle-1.2.6.jar:/soft/hbase/bin/../lib/hbase-rest-1.2.6.jar:/soft/hbase/bin/../lib/hbase-server-1.2.6.jar:/soft/hbase/bin/../lib/hbase-server-1.2.6-tests.jar:/soft/hbase/bin/../lib/hbase-shell-1.2.6.jar:/soft/hbase/bin/../lib/hbase-thrift-1.2.6.jar:/soft/hbase/bin/../lib/htrace-core-3.1.0-incubating.jar:/soft/hbase/bin/../lib/httpclient-4.2.5.jar:/soft/hbase/bin/../lib/httpcore-4.4.1.jar:/soft/hbase/bin/../lib/jackson-core-asl-1.9.13.jar:/soft/hbase/bin/../lib/jackson-jaxrs-1.9.13.jar:/soft/hbase/bin/../lib/jackson-mapper-asl-1.9.13.jar:/soft/hbase/bin/../lib/jackson-xc-1.9.13.jar:/soft/hbase/bin/../lib/jamon-runtime-2.4.1.jar:/soft/hbase/bin/../lib/jasper-compiler-5.5.23.jar:/soft/hbase/bin/../lib/jasper-runtime-5.5.23.jar:/soft/hbase/bin/../lib/javax.inject-1.jar:/soft/hbase/bin/../lib/java-xmlbuilder-0.4.jar:/soft/hbase/bin/../lib/jaxb-api-2.2.2.jar:/soft/hbase/bin/../lib/jaxb-impl-2.2.3-1.jar:/soft/hbase/bin/../lib/jcodings-1.0.8.jar:/soft/hbase/bin/../lib/jersey-client-1.9.jar:/soft/hbase/bin/../lib/jersey-core-1.9.jar:/soft/hbase/bin/../lib/jersey-guice-1.9.jar:/soft/hbase/bin/../lib/jersey-json-1.9.jar:/soft/hbase/bin/../lib/jersey-server-1.9.jar:/soft/hbase/bin/../lib/jets3t-0.9.0.jar:/soft/hbase/bin/../lib/jettison-1.3.3.jar:/soft/hbase/bin/../lib/jetty-6.1.26.jar:/soft/hbase/bin/../lib/jetty-sslengine-6.1.26.jar:/soft/hbase/bin/../lib/jetty-util-6.1.26.jar:/soft/hbase/bin/../lib/joni-2.1.2.jar:/soft/hbase/bin/../lib/jruby-complete-1.6.8.jar:/soft/hbase/bin/../lib/jsch-0.1.42.jar:/soft/hbase/bin/../lib/jsp-2.1-6.1.14.jar:/soft/hbase/bin/../lib/jsp-api-2.1-6.1.14.jar:/soft/hbase/bin/../lib/junit-4.12.jar:/soft/hbase/bin/../lib/leveldbjni-all-1.8.jar:/soft/hbase/bin/../lib/libthrift-0.9.3.jar:/soft/hbase/bin/../lib/log4j-1.2.17.jar:/soft/hbase/bin/../lib/metrics-core-2.2.0.jar:/soft/hbase/bin/../lib/MyHbase-1.0-SNAPSHOT.jar:/soft/hbase/bin/../lib/netty-all-4.0.23.Final.jar:/soft/hbase/bin/../lib/paranamer-2.3.jar:/soft/hbase/bin/../lib/phoenix-4.10.0-HBase-1.2-client.jar:/soft/hbase/bin/../lib/protobuf-java-2.5.0.jar:/soft/hbase/bin/../lib/servlet-api-2.5-6.1.14.jar:/soft/hbase/bin/../lib/servlet-api-2.5.jar:/soft/hbase/bin/../lib/slf4j-api-1.7.7.jar:/soft/hbase/bin/../lib/slf4j-log4j12-1.7.5.jar:/soft/hbase/bin/../lib/snappy-java-1.0.4.1.jar:/soft/hbase/bin/../lib/spymemcached-2.11.6.jar:/soft/hbase/bin/../lib/xmlenc-0.52.jar:/soft/hbase/bin/../lib/xz-1.0.jar:/soft/hbase/bin/../lib/zookeeper-3.4.6.jar:/soft/hadoop-2.7.3/etc/hadoop:/soft/hadoop-2.7.3/share/hadoop/common/lib/*:/soft/hadoop-2.7.3/share/hadoop/common/*:/soft/hadoop-2.7.3/share/hadoop/hdfs:/soft/hadoop-2.7.3/share/hadoop/hdfs/lib/*:/soft/hadoop-2.7.3/share/hadoop/hdfs/*:/soft/hadoop-2.7.3/share/hadoop/yarn/lib/*:/soft/hadoop-2.7.3/share/hadoop/yarn/*:/soft/hadoop-2.7.3/share/hadoop/mapreduce/lib/*:/soft/hadoop-2.7.3/share/hadoop/mapreduce/*::/soft/hive/lib/*:/soft/hive/lib/*:/contrib/capacity-scheduler/*.jar:/conf:/lib/*' -Djava.library.path=:/soft/hadoop-2.7.3/lib/native:/soft/hadoop-2.7.3/lib/native org.apache.flume.node.Application -f /soft/flume/conf/yinzhengjie.conf -n a1 SLF4J: Class path contains multiple SLF4J bindings. SLF4J: Found binding in [jar:file:/soft/apache-flume-1.8.0-bin/lib/slf4j-log4j12-1.6.1.jar!/org/slf4j/impl/StaticLoggerBinder.class] SLF4J: Found binding in [jar:file:/soft/hadoop-2.7.3/share/hadoop/common/lib/slf4j-log4j12-1.7.10.jar!/org/slf4j/impl/StaticLoggerBinder.class] SLF4J: Found binding in [jar:file:/soft/hbase-1.2.6/lib/phoenix-4.10.0-HBase-1.2-client.jar!/org/slf4j/impl/StaticLoggerBinder.class] SLF4J: Found binding in [jar:file:/soft/hbase-1.2.6/lib/slf4j-log4j12-1.7.5.jar!/org/slf4j/impl/StaticLoggerBinder.class] SLF4J: Found binding in [jar:file:/soft/apache-hive-2.1.1-bin/lib/log4j-slf4j-impl-2.4.1.jar!/org/slf4j/impl/StaticLoggerBinder.class] SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation. 18/06/14 16:28:36 INFO node.PollingPropertiesFileConfigurationProvider: Configuration provider starting 18/06/14 16:28:36 INFO node.PollingPropertiesFileConfigurationProvider: Reloading configuration file:/soft/flume/conf/yinzhengjie.conf 18/06/14 16:28:36 INFO conf.FlumeConfiguration: Added sinks: k1 Agent: a1 18/06/14 16:28:36 INFO conf.FlumeConfiguration: Processing:k1 18/06/14 16:28:36 INFO conf.FlumeConfiguration: Processing:k1 18/06/14 16:28:36 INFO conf.FlumeConfiguration: Post-validation flume configuration contains configuration for agents: [a1] 18/06/14 16:28:36 INFO node.AbstractConfigurationProvider: Creating channels 18/06/14 16:28:36 INFO channel.DefaultChannelFactory: Creating instance of channel c1 type memory 18/06/14 16:28:36 INFO node.AbstractConfigurationProvider: Created channel c1 18/06/14 16:28:36 INFO source.DefaultSourceFactory: Creating instance of source r1, type netcat 18/06/14 16:28:36 INFO sink.DefaultSinkFactory: Creating instance of sink: k1, type: logger 18/06/14 16:28:36 INFO node.AbstractConfigurationProvider: Channel c1 connected to [r1, k1] 18/06/14 16:28:36 INFO node.Application: Starting new configuration:{ sourceRunners:{r1=EventDrivenSourceRunner: { source:org.apache.flume.source.NetcatSource{name:r1,state:IDLE} }} sinkRunners:{k1=SinkRunner: { policy:org.apache.flume.sink.DefaultSinkProcessor@68970aaa counterGroup:{ name:null counters:{} } }} channels:{c1=org.apache.flume.channel.MemoryChannel{name: c1}} } 18/06/14 16:28:36 INFO node.Application: Starting Channel c1 18/06/14 16:28:37 INFO instrumentation.MonitoredCounterGroup: Monitored counter group for type: CHANNEL, name: c1: Successfully registered new MBean. 18/06/14 16:28:37 INFO instrumentation.MonitoredCounterGroup: Component type: CHANNEL, name: c1 started 18/06/14 16:28:37 INFO node.Application: Starting Sink k1 18/06/14 16:28:37 INFO node.Application: Starting Source r1 18/06/14 16:28:37 INFO source.NetcatSource: Source starting 18/06/14 16:28:37 INFO source.NetcatSource: Created serverSocket:sun.nio.ch.ServerSocketChannelImpl[/127.0.0.1:44444] 18/06/14 16:32:36 INFO sink.LoggerSink: Event: { headers:{} body: } 18/06/14 16:32:43 INFO sink.LoggerSink: Event: { headers:{} body: 79 69 6E 7A 68 65 6E 67 6A 69 65 yinzhengjie } 18/06/14 16:32:46 INFO sink.LoggerSink: Event: { headers:{} body: 32 30 31 38 2018 } 18/06/14 16:32:51 INFO sink.LoggerSink: Event: { headers:{} body: 62 69 67 64 61 74 61 bigdata }

9>.将flume分发到其他节点(被分发的机器需要手动“source /etc/profile”文件方能正常使用!如果能重启的话就不用手动source)

[yinzhengjie@s101 ~]$ more `which xrsync.sh` #!/bin/bash #@author :yinzhengjie #blog:http://www.cnblogs.com/yinzhengjie #EMAIL:y1053419035@qq.com #判断用户是否传参 if [ $# -lt 1 ];then echo "请输入参数"; exit fi #获取文件路径 file=$@ #获取子路径 filename=`basename $file` #获取父路径 dirpath=`dirname $file` #获取完整路径 cd $dirpath fullpath=`pwd -P` #同步文件到DataNode for (( i=102;i<=105;i++ )) do #使终端变绿色 tput setaf 2 echo =========== s$i %file =========== #使终端变回原来的颜色,即白灰色 tput setaf 7 #远程执行命令 rsync -lr $filename `whoami`@s$i:$fullpath #判断命令是否执行成功 if [ $? == 0 ];then echo "命令执行成功" fi done [yinzhengjie@s101 ~]$ [yinzhengjie@s101 ~]$ xrsync.sh /soft/flume/ =========== s102 %file =========== 命令执行成功 =========== s103 %file =========== 命令执行成功 =========== s104 %file =========== 命令执行成功 =========== s105 %file =========== 命令执行成功 [yinzhengjie@s101 ~]$ [yinzhengjie@s101 ~]$ xrsync.sh /soft/apache-flume-1.8.0-bin/ =========== s102 %file =========== 命令执行成功 =========== s103 %file =========== 命令执行成功 =========== s104 %file =========== 命令执行成功 =========== s105 %file =========== 命令执行成功 [yinzhengjie@s101 ~]$ [yinzhengjie@s101 ~]$ su root Password: [root@s101 yinzhengjie]# [root@s101 yinzhengjie]# xrsync.sh /etc/profile =========== s102 %file =========== 命令执行成功 =========== s103 %file =========== 命令执行成功 =========== s104 %file =========== 命令执行成功 =========== s105 %file =========== 命令执行成功 [root@s101 yinzhengjie]# exit exit [yinzhengjie@s101 ~]$ [yinzhengjie@s101 ~]$