1. Download the two installation packages on the host: hadoop-2.8.5.tar.gz and jdk-8u131-linux-x64.tar.gz

2. Pull the image from the Docker registry:

docker pull centos

3. Create the container:

docker run -i -t -d --name centos_hdp centos:latest

4. Copy the two packages, hadoop-2.8.5.tar.gz and jdk-8u131-linux-x64.tar.gz, from the host into the container:

docker cp jdk-8u131-linux-x64.tar.gz centos_hdp:/usr/local

docker cp hadoop-2.8.5.tar.gz centos_hdp:/opt/

5. Enter the container running in the background:

docker exec -it centos_hdp bash

tar -zxvf /usr/local/jdk-8u131-linux-x64.tar.gz -C /usr/local/

tar -zxvf /opt/hadoop-2.8.5.tar.gz -C /opt/

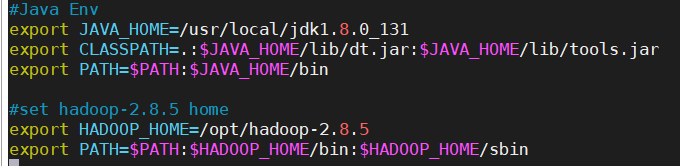

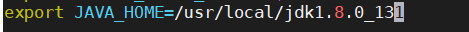

vim /etc/profile

source /etc/profile
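A minimal sketch of the lines that /etc/profile would need for the later steps. The paths are assumptions derived from the tar commands above (the JDK 8u131 archive unpacks to jdk1.8.0_131); adjust them if your layout differs.

```shell
# Assumed install locations, based on the extraction targets used above.
export JAVA_HOME=/usr/local/jdk1.8.0_131
export HADOOP_HOME=/opt/hadoop-2.8.5
# Put the JDK tools and the Hadoop launchers (sbin holds start-all.sh) on the PATH.
export PATH="$PATH:$JAVA_HOME/bin:$HADOOP_HOME/bin:$HADOOP_HOME/sbin"
```

After `source /etc/profile`, running `hadoop version` is a quick way to confirm that both variables resolve.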

vim /opt/hadoop-2.8.5/etc/hadoop/core-site.xml

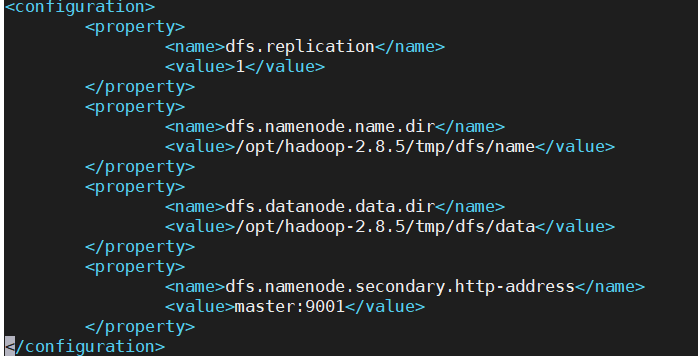
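As a rough illustration of what core-site.xml needs for this cluster, the sketch below writes a minimal file. The helper name `write_core_site` is hypothetical and the values are assumptions: the NameNode address reuses the hadoop-master hostname and port 9000 from the docker run command in step 11, and the hadoop.tmp.dir path is only an example.

```shell
# Hypothetical helper: write a minimal core-site.xml into the given config directory.
write_core_site() {
  conf_dir="$1"
  cat > "$conf_dir/core-site.xml" <<'EOF'
<?xml version="1.0" encoding="UTF-8"?>
<configuration>
  <!-- NameNode address (assumed: hostname and port taken from step 11) -->
  <property>
    <name>fs.defaultFS</name>
    <value>hdfs://hadoop-master:9000</value>
  </property>
  <!-- Base directory for Hadoop working files (assumed example path) -->
  <property>
    <name>hadoop.tmp.dir</name>
    <value>/opt/hadoop-2.8.5/tmp</value>
  </property>
</configuration>
EOF
}
```

Inside the container it could be called as `write_core_site /opt/hadoop-2.8.5/etc/hadoop`.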

vim /opt/hadoop-2.8.5/etc/hadoop/hdfs-site.xml

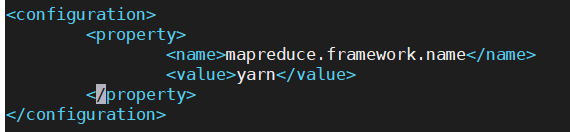
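For hdfs-site.xml, a plausible minimal configuration sets the replication factor and the storage directories. This is a sketch under assumptions: `write_hdfs_site` is a hypothetical helper, a replication factor of 2 is chosen only because the cluster has two slave nodes, and the directory paths are examples.

```shell
# Hypothetical helper: write a minimal hdfs-site.xml into the given config directory.
write_hdfs_site() {
  conf_dir="$1"
  cat > "$conf_dir/hdfs-site.xml" <<'EOF'
<?xml version="1.0" encoding="UTF-8"?>
<configuration>
  <!-- Number of block replicas (assumed: one per slave node) -->
  <property>
    <name>dfs.replication</name>
    <value>2</value>
  </property>
  <!-- Where the NameNode keeps its metadata (assumed example path) -->
  <property>
    <name>dfs.namenode.name.dir</name>
    <value>file:///opt/hadoop-2.8.5/hdfs/name</value>
  </property>
  <!-- Where each DataNode stores blocks (assumed example path) -->
  <property>
    <name>dfs.datanode.data.dir</name>
    <value>file:///opt/hadoop-2.8.5/hdfs/data</value>
  </property>
</configuration>
EOF
}
```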

vim /opt/hadoop-2.8.5/etc/hadoop/mapred-site.xml

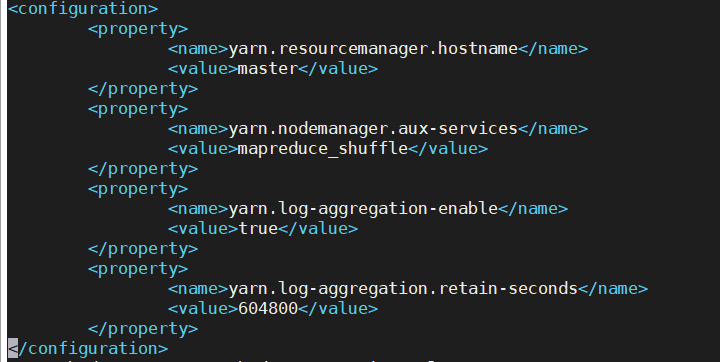
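Hadoop 2.x distributions usually ship this file only as mapred-site.xml.template, so it may need to be created first. The sketch below, using a hypothetical helper `write_mapred_site`, writes the one setting that is typically required to run MapReduce jobs on YARN; whether the original configuration contained more is not known.

```shell
# Hypothetical helper: write a minimal mapred-site.xml into the given config directory.
write_mapred_site() {
  conf_dir="$1"
  cat > "$conf_dir/mapred-site.xml" <<'EOF'
<?xml version="1.0" encoding="UTF-8"?>
<configuration>
  <!-- Run MapReduce jobs on YARN instead of the local runner -->
  <property>
    <name>mapreduce.framework.name</name>
    <value>yarn</value>
  </property>
</configuration>
EOF
}
```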

vim /opt/hadoop-2.8.5/etc/hadoop/yarn-site.xml
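A minimal yarn-site.xml might look like the sketch below. The helper name `write_yarn_site` is hypothetical, and the ResourceManager hostname is an assumption based on the hadoop-master container name used in step 11.

```shell
# Hypothetical helper: write a minimal yarn-site.xml into the given config directory.
write_yarn_site() {
  conf_dir="$1"
  cat > "$conf_dir/yarn-site.xml" <<'EOF'
<?xml version="1.0" encoding="UTF-8"?>
<configuration>
  <!-- Host that runs the ResourceManager (assumed: the master container) -->
  <property>
    <name>yarn.resourcemanager.hostname</name>
    <value>hadoop-master</value>
  </property>
  <!-- Shuffle service that MapReduce needs on every NodeManager -->
  <property>
    <name>yarn.nodemanager.aux-services</name>
    <value>mapreduce_shuffle</value>
  </property>
</configuration>
EOF
}
```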

vim /opt/hadoop-2.8.5/etc/hadoop/hadoop-env.sh

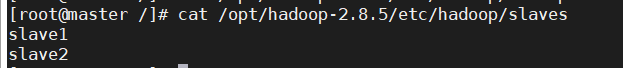
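The usual change in hadoop-env.sh is to hard-code JAVA_HOME, because daemons started over SSH do not always inherit it from /etc/profile. A small sketch of doing that without an editor follows; `set_java_home` is a hypothetical helper, and the JDK path in the usage line is the assumed extraction directory.

```shell
# Hypothetical helper: point the "export JAVA_HOME=" line of an env file at a fixed JDK path.
set_java_home() {
  env_file="$1"
  java_home="$2"
  # Rewrite via a temporary file, then copy back so the original file keeps its permissions.
  sed "s|^export JAVA_HOME=.*|export JAVA_HOME=$java_home|" "$env_file" > "$env_file.tmp" &&
    cat "$env_file.tmp" > "$env_file" &&
    rm -f "$env_file.tmp"
}
```

Usage: `set_java_home /opt/hadoop-2.8.5/etc/hadoop/hadoop-env.sh /usr/local/jdk1.8.0_131`.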

cat /opt/hadoop-2.8.5/etc/hadoop/slaves
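The slaves file lists one worker hostname per line. Assuming the two worker containers keep the hostnames given to them in step 11 (hadoop-slave1 and hadoop-slave2), it could be written like this; `write_slaves` is a hypothetical helper.

```shell
# Hypothetical helper: list the worker hostnames, one per line, in the slaves file.
write_slaves() {
  conf_dir="$1"
  cat > "$conf_dir/slaves" <<'EOF'
hadoop-slave1
hadoop-slave2
EOF
}
```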

6. Exit the container and commit the current container as an image:

docker commit -a "mayunzhen" -m "hadoop base images" centos_hdp centos_hdp:2.8.5

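7. Export the image to an archive file (docker save writes a tar archive, even though the file is named .jar here):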

docker save centos_hdp:2.8.5 -o centos_hdp.jar

8. Copy the centos_hdp.jar image archive to the other hosts:

scp centos_hdp.jar root@192.168.130.166:/

scp centos_hdp.jar root@192.168.130.167:/

scp centos_hdp.jar root@192.168.130.168:/

9. Import the image on each of the three hosts (192.168.130.166, 192.168.130.167, 192.168.130.168):

docker load -i centos_hdp.jar

10. On each host, configure Weave for Docker so that containers on different hosts can communicate with each other.

For details, see: https://www.cnblogs.com/kevingrace/p/6859173.html

11. Commands to run the Hadoop containers:

On host 192.168.130.166:

docker run -itd -h hadoop-master --name hadoop-master --net=hadoop -v /etc/localtime:/etc/localtime:ro -p 50070:50070 -p 8088:8088 -p 9000:9000 iammayunzhen/hadoop:2.8.52

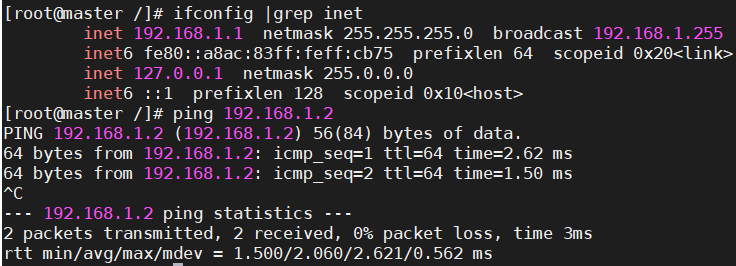

weave attach 192.168.1.11/24 hadoop-master

On host 192.168.130.167:

docker run -itd -h hadoop-slave1 --name hadoop-slave1 --net=hadoop -v /etc/localtime:/etc/localtime:ro iammayunzhen/hadoop:2.8.52

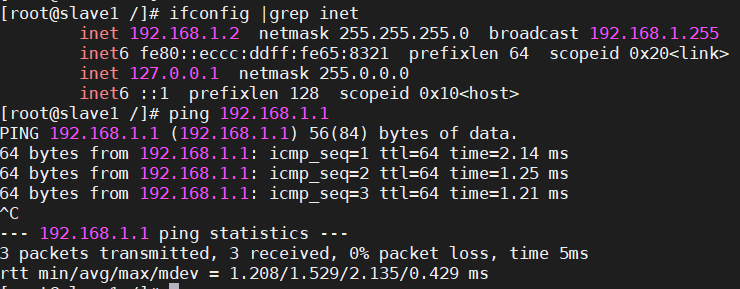

weave attach 192.168.1.12/24 hadoop-slave1

On host 192.168.130.168:

docker run -itd -h hadoop-slave2 --name hadoop-slave2 --net=hadoop -v /etc/localtime:/etc/localtime:ro iammayunzhen/hadoop:2.8.52

weave attach 192.168.1.13/24 hadoop-slave2

Test that the three containers (192.168.1.1, 192.168.1.2, 192.168.1.3) can reach one another:

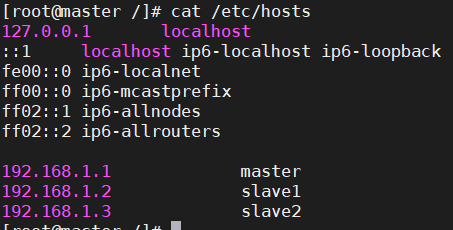

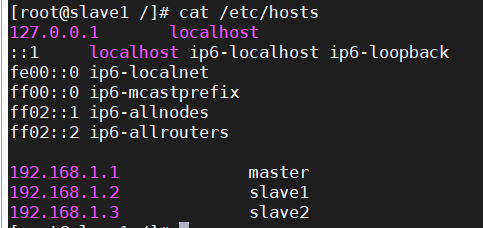

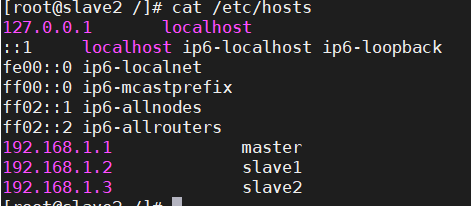

12. Configure /etc/hosts on each of the three containers (192.168.1.1, 192.168.1.2, 192.168.1.3).
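The /etc/hosts entries map each container hostname to its Weave address. The sketch below uses the addresses from the weave attach commands in step 11 as an assumption; substitute whatever addresses your containers actually received. `append_cluster_hosts` is a hypothetical helper.

```shell
# Hypothetical helper: append the cluster's name-to-address mappings to a hosts file.
append_cluster_hosts() {
  hosts_file="$1"
  # Addresses are assumed from the weave attach commands; replace them if yours differ.
  cat >> "$hosts_file" <<'EOF'
192.168.1.11 hadoop-master
192.168.1.12 hadoop-slave1
192.168.1.13 hadoop-slave2
EOF
}
```

Run `append_cluster_hosts /etc/hosts` once in every container so that all three nodes resolve the same names.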

13. Configure passwordless SSH login on each of the three containers (192.168.1.1, 192.168.1.2, 192.168.1.3).

Details: https://www.cnblogs.com/shuochen/p/10441455.html

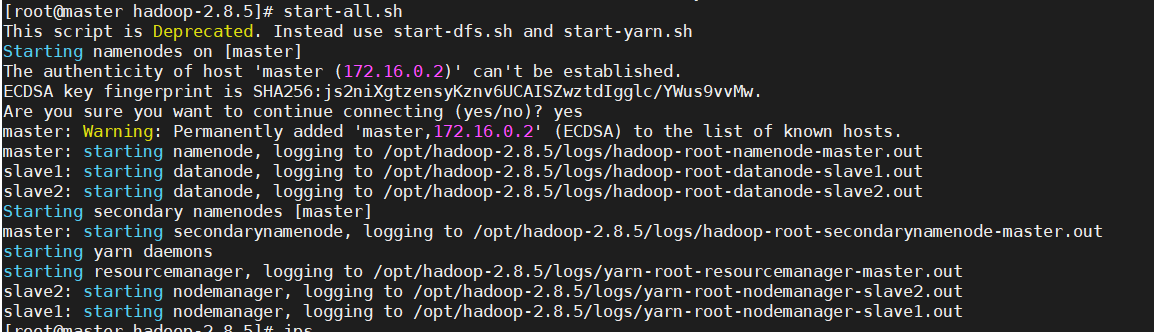

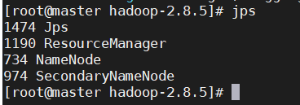
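The passwordless login setup generally comes down to generating a key pair and installing the public key on every node. A minimal sketch, assuming sshd is already running in each container, the root account has a password, and the hostnames resolve; `setup_passwordless_ssh` is a hypothetical helper.

```shell
# Hypothetical helper: create a key pair if needed and install it on each host given as an argument.
setup_passwordless_ssh() {
  key="$HOME/.ssh/id_rsa"
  mkdir -p "$HOME/.ssh" && chmod 700 "$HOME/.ssh"
  # Generate an RSA key with an empty passphrase, but only if none exists yet.
  [ -f "$key" ] || ssh-keygen -t rsa -N "" -f "$key"
  # Copy the public key to every node; each host prompts for the root password once.
  for host in "$@"; do
    ssh-copy-id -i "$key.pub" "root@$host"
  done
}
```

Run `setup_passwordless_ssh hadoop-master hadoop-slave1 hadoop-slave2` in each container, then confirm with `ssh hadoop-slave1 hostname`.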

14. Start Hadoop from inside the master container:

source /etc/profile

start-all.sh

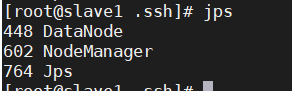

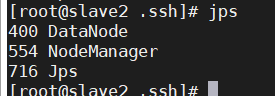

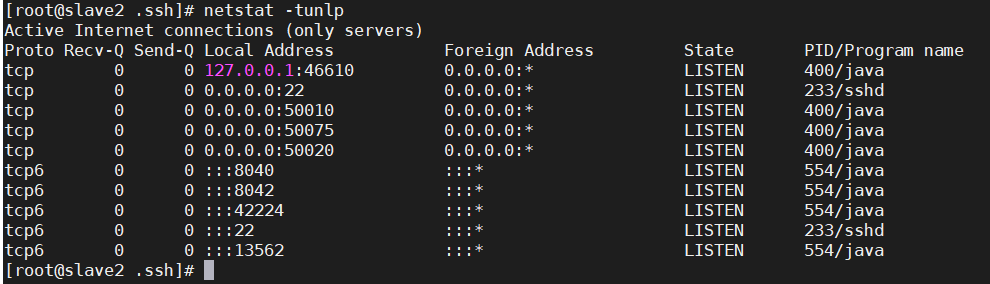

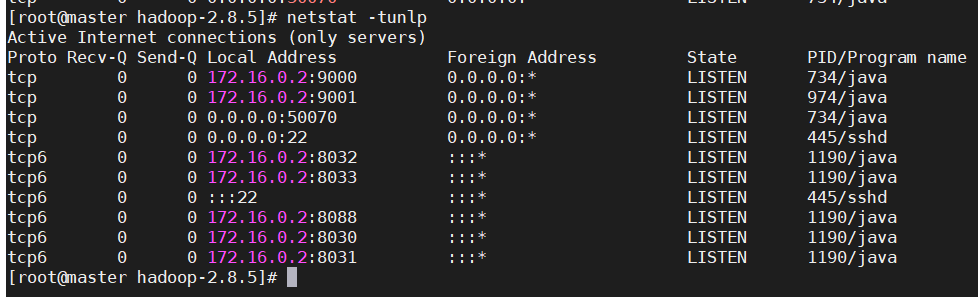
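On a brand-new cluster the NameNode normally has to be formatted once before the first start, and in Hadoop 2.x start-all.sh simply delegates to start-dfs.sh and start-yarn.sh. The sketch below spells that sequence out; `start_cluster` and its `--format` flag are hypothetical names introduced here.

```shell
# Hypothetical helper: optionally format HDFS, then start the HDFS and YARN daemons.
start_cluster() {
  # Format only on the very first start; re-formatting discards existing HDFS metadata.
  if [ "${1:-}" = "--format" ]; then
    hdfs namenode -format || return 1
  fi
  # Start HDFS, then YARN, then list the Java daemons running on this node.
  start-dfs.sh && start-yarn.sh && jps
}
```

Call `start_cluster --format` the first time and plain `start_cluster` afterwards.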

15. Check the cluster status:
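A few standard commands give a quick picture of the cluster from inside the master container. This is a sketch rather than the exact check used originally; `check_cluster` is a hypothetical wrapper name.

```shell
# Hypothetical helper: print a quick health summary of the running cluster.
check_cluster() {
  # Java daemons on this node (NameNode and ResourceManager are expected on the master).
  jps
  # HDFS capacity and the list of live DataNodes.
  hdfs dfsadmin -report
  # NodeManagers currently registered with YARN.
  yarn node -list
}
```

Because step 11 publishes ports 50070 and 8088 on the master's host, the NameNode and ResourceManager web pages should also be reachable at http://192.168.130.166:50070 and http://192.168.130.166:8088, assuming the default web UI ports were not changed.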

The finished Docker image for Hadoop 2.8.5 has been uploaded to https://hub.docker.com/repository/docker/iammayunzhen/centos_hdp (or run docker pull iammayunzhen/centos_hdp:2.8.5 to download it). Feel free to download it and use it as a reference.

References:

Building a Docker Hadoop image: https://www.jianshu.com/p/bf76dfedef2f

Synchronizing a Docker container's time with the host: https://www.cnblogs.com/kevingrace/p/5570597.html

Communication between containers on multiple hosts: https://www.cnblogs.com/kevingrace/p/6859173.html

Unable to SSH into a Docker container by IP: http://blog.chinaunix.net/uid-26168435-id-5732463.html

Install the netstat command: yum install net-tools

Check port usage: netstat -tulnp | grep 22

Install the passwd command: yum install -y passwd

Passwordless login between containers by IP: https://www.cnblogs.com/shuochen/p/10441455.html

Install the SSH client with yum: yum -y install openssh-clients https://www.cnblogs.com/nulige/articles/9324564.html