I. Scrapy workflow

(Adapted from this blog post: https://www.cnblogs.com/hewanli/p/12090042.html)

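1) The Spider's initial Requests are obtained by calling start_requests(). start_requests() reads the URLs in start_urls and generates a Request for each one, with parse as the callback.

2) The Request objects enter the Scheduler, where they are queued according to some algorithm. The Scheduler later dequeues them one by one and sends them to the Downloader.

3) The Downloader sends an HTTP request to the web server for the URL in the Request object, downloads the resource, and wraps it in a Response.

4) The Response object is eventually delivered to the Spider's page-parsing function (the callback) for processing.

5) If the callback parses out an Item, the Item is handed to the Item Pipeline for further processing. Items returned by the Spider are stored in a database (by some Item Pipeline) or written to a file using Feed exports.

6) If the callback parses out a link (URL), the URL is wrapped in a new Request and handed to the Scheduler to wait for crawling.

That is how the Scrapy framework runs, in other words its working principle. If the framework were a body, Request and Response objects would be the blood circulating through it, and Items would be the product of its metabolism.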
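
The six steps above can be sketched as a toy crawl loop in plain Python. This is only an illustration of the data flow, not Scrapy's actual engine: the FAKE_SITE dictionary, the download, parse and crawl functions, and the example.com URLs are all hypothetical stand-ins, and no network access is involved.

```python
from collections import deque

# Hypothetical stand-in for the web: URL -> (quotes on the page, links on the page)
FAKE_SITE = {
    "http://example.com/page/1": (["quote A", "quote B"], ["http://example.com/page/2"]),
    "http://example.com/page/2": (["quote C"], []),
}


def download(url):
    """Downloader (step 3): turn a request (here just a URL) into a response."""
    return {"url": url, "body": FAKE_SITE[url]}


def parse(response):
    """Spider callback (step 4): yield items and follow-up requests."""
    quotes, links = response["body"]
    for text in quotes:
        yield {"type": "item", "text": text}
    for link in links:
        yield {"type": "request", "url": link}


def crawl(start_urls):
    scheduler = deque(start_urls)  # steps 1-2: initial requests are queued
    seen = set(start_urls)         # avoid scheduling the same URL twice
    stored = []                    # stands in for the item pipeline's storage
    while scheduler:
        response = download(scheduler.popleft())
        for result in parse(response):
            if result["type"] == "item":
                stored.append(result["text"])    # step 5: item goes to the pipeline
            elif result["url"] not in seen:
                seen.add(result["url"])
                scheduler.append(result["url"])  # step 6: link goes back to the scheduler
    return stored


print(crawl(["http://example.com/page/1"]))
```

In real Scrapy the engine does this asynchronously and the scheduler, downloader and pipelines are separate components, but the direction in which requests, responses and items travel is the same.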

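(1) Install:

pip install scrapy

(2) Create a project

2.1 Use cd to enter the directory in which the crawler project should be created

2.2 Create the project: scrapy startproject scrapystudy (scrapystudy is the project name)

(3) Create a spider: scrapy genspider xxx xxx.com (the first xxx is the spider name; xxx.com is the domain to crawl. Give the domain only, without a leading http:// or https://)

(4) Run the spider: scrapy crawl xxx (xxx is the spider name)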

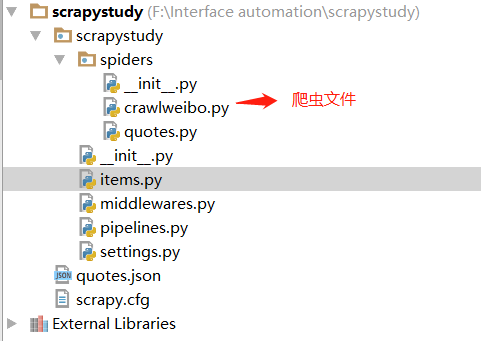

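(5) Project directory

Overview:

items: the data structure used to hold the scraped data

middlewares: middlewares defined for the crawl process; they can process requests and responses

pipelines: the project pipelines, which receive the items the spider extracts from web pages and are mainly responsible for cleaning and validating the data and storing it in a database

settings: configuration for the project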

II. A simple application

Example site to crawl: http://quotes.toscrape.com/

2.1 items.py defines the item fields (the fields to be scraped), as follows:

    # Define here the models for your scraped items
    # See documentation in:
    # https://docs.scrapy.org/en/latest/topics/items.html

    import scrapy

    class QuotesItem(scrapy.Item):
        # define the fields for your item here like:
        # Fields that store the scraped content
        text = scrapy.Field()
        author = scrapy.Field()
        tags = scrapy.Field()

2.2 The spider file quotes.py processes the page and extracts the results, as follows:

    import scrapy
    from scrapystudy.items import QuotesItem

    class QuotesSpider(scrapy.Spider):
        name = 'quotes'
        allowed_domains = ['quotes.toscrape.com']
        start_urls = ['http://quotes.toscrape.com/']

        def parse(self, response):
            quotes = response.css(".quote")
            for quote in quotes:
                item = QuotesItem()
                # extract_first() returns the first match, extract() returns all matches
                text = quote.css(".text::text").extract_first()
                author = quote.css(".author::text").extract_first()
                tags = quote.css(".tags .tag::text").extract()  # a quote can have several tags
                # Assign the extracted values to the item fields
                item["text"] = text
                item["author"] = author
                item["tags"] = tags
                yield item
            # Handle pagination
            next_page = response.css(".pager .next a::attr(href)").extract_first()
            if next_page is not None:  # the last page has no "next" link
                url = response.urljoin(next_page)  # build an absolute URL
                # The callback is parse itself, so every following page is handled the same way
                yield scrapy.Request(url=url, callback=self.parse)
        # To save the data, run on the command line: scrapy crawl quotes -o quotes.json
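
The pagination step relies on turning the relative href of the "next" link into an absolute URL. As far as I know, response.urljoin resolves the href against the page URL in the same way as the standard library's urllib.parse.urljoin, so the behaviour can be tried without running a crawl. In this sketch next_page_url is a hypothetical helper, and the /page/N/ paths are assumed to match what the example site uses.

```python
from urllib.parse import urljoin


def next_page_url(current_url, href):
    """Resolve the href of the "next" link against the current page URL.

    Returns None when there is no "next" link (href is None), which is
    what the CSS selector yields on the last page.
    """
    if href is None:
        return None
    return urljoin(current_url, href)


print(next_page_url("http://quotes.toscrape.com/", "/page/2/"))
print(next_page_url("http://quotes.toscrape.com/page/2/", "/page/3/"))
print(next_page_url("http://quotes.toscrape.com/page/10/", None))
```

Checking for None before building the next Request keeps the spider from scheduling a pointless request once the last page is reached.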

Saving the data:

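    # Run any of the following on the command line to save the output in a different file format
    scrapy crawl quotes -o quotes.json
    scrapy crawl quotes -o quotes.jl
    scrapy crawl quotes -o quotes.csv
    scrapy crawl quotes -o quotes.xml

    # When saving to a JSON file with scrapy crawl -o file.json, Chinese text is written as
    # Unicode escape sequences (\uXXXX) by default, so the export encoding has to be set to UTF-8

    # Command with the encoding set (crawlboke is the spider name, boke.json the output file):
    scrapy crawl crawlboke -o boke.json -s FEED_EXPORT_ENCODING=UTF-8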
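
The difference between escaped and readable Chinese output can be reproduced with the standard json module. This is an analogy under the assumption that the JSON feed exporter behaves like json.dumps with and without ASCII escaping; the sample item below is made up for illustration.

```python
import json

# Hypothetical item with Chinese text
item = {"text": "你好", "author": "张三"}

# Default: non-ASCII characters are written as \uXXXX escape sequences
escaped = json.dumps(item)

# With ensure_ascii=False the characters are written as-is (UTF-8 once saved to a file)
readable = json.dumps(item, ensure_ascii=False)

print(escaped)
print(readable)
```

Both strings decode to the same data; only the on-disk representation differs, which is why the escaped form is valid JSON but hard to read in a text editor.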

2.3 pipelines.py truncates over-long item text and stores the items in MongoDB, as follows:

    # Define your item pipelines here
    #
    # Don't forget to add your pipeline to the ITEM_PIPELINES setting
    # See: https://docs.scrapy.org/en/latest/topics/item-pipeline.html


    # useful for handling different item types with a single interface
    from itemadapter import ItemAdapter
    from scrapy.exceptions import DropItem
    import pymongo

    class TextPipeline:

        def __init__(self):
            self.limit = 50

        def process_item(self, item, spider):
            """Truncate the item's text to at most self.limit characters."""
            if item['text']:
                if len(item['text']) > self.limit:
                    item['text'] = item['text'][0:self.limit].rstrip() + '...'
                return item
            else:
                # DropItem must be raised, not returned, for Scrapy to discard the item
                raise DropItem('Missing Text')

    class MongoPipeline(object):
        """Store the items in MongoDB."""

        def __init__(self, mongo_url, mongo_db):
            self.mongo_url = mongo_url
            self.mongo_db = mongo_db

        @classmethod
        def from_crawler(cls, crawler):
            # Read the connection settings defined in settings.py
            return cls(
                mongo_url=crawler.settings.get('MONGO_URL'),
                mongo_db=crawler.settings.get('MONGO_DB')
            )

        def open_spider(self, spider):
            self.client = pymongo.MongoClient(self.mongo_url)
            self.db = self.client[self.mongo_db]

        def process_item(self, item, spider):
            # Use the item class name (e.g. QuotesItem) as the collection name
            name = item.__class__.__name__
            # insert() was removed in PyMongo 4; insert_one() is the current call
            self.db[name].insert_one(dict(item))
            return item

        def close_spider(self, spider):
            self.client.close()
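
The truncation rule in TextPipeline can be checked on its own, without Scrapy or MongoDB. The function below is a minimal sketch that mirrors the slicing logic of process_item; truncate_text is a hypothetical name, not part of the project.

```python
def truncate_text(text, limit=50):
    """Cut text to at most `limit` characters, strip trailing whitespace, add '...'."""
    if len(text) > limit:
        return text[:limit].rstrip() + "..."
    return text


short_quote = "To be or not to be."
long_quote = "a" * 49 + " " + "b" * 10  # 60 characters, with a space at position 50

print(truncate_text(short_quote))  # unchanged, it is under the limit
print(truncate_text(long_quote))   # 49 'a' characters followed by '...'
```

The rstrip() call matters when the cut lands right after a space: without it the result would end in a space followed by the dots.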

2.4 settings.py holds the configuration that puts the above into effect:

    # Scrapy settings for scrapystudy project
    #
    # For simplicity, this file contains only settings considered important or
    # commonly used. You can find more settings consulting the documentation:
    #
    #     https://docs.scrapy.org/en/latest/topics/settings.html
    #     https://docs.scrapy.org/en/latest/topics/downloader-middleware.html
    #     https://docs.scrapy.org/en/latest/topics/spider-middleware.html

    BOT_NAME = 'scrapystudy'

    SPIDER_MODULES = ['scrapystudy.spiders']
    NEWSPIDER_MODULE = 'scrapystudy.spiders'

    MONGO_URL = "localhost"
    MONGO_DB = "mydb"


    # Crawl responsibly by identifying yourself (and your website) on the user-agent
    #USER_AGENT = 'scrapystudy (+http://www.yourdomain.com)'

    # Obey robots.txt rules
    ROBOTSTXT_OBEY = False

    # Configure maximum concurrent requests performed by Scrapy (default: 16)
    #CONCURRENT_REQUESTS = 32

    # Configure a delay for requests for the same website (default: 0)
    # See https://docs.scrapy.org/en/latest/topics/settings.html#download-delay
    # See also autothrottle settings and docs
    #DOWNLOAD_DELAY = 3
    # The download delay setting will honor only one of:
    #CONCURRENT_REQUESTS_PER_DOMAIN = 16
    #CONCURRENT_REQUESTS_PER_IP = 16

    # Disable cookies (enabled by default)
    #COOKIES_ENABLED = False

    # Disable Telnet Console (enabled by default)
    #TELNETCONSOLE_ENABLED = False

    # Override the default request headers:
    #DEFAULT_REQUEST_HEADERS = {
    #   'Accept': 'text/html,application/xhtml+xml,application/xml;q=0.9,*/*;q=0.8',
    #   'Accept-Language': 'en',
    #}

    # Enable or disable spider middlewares
    # See https://docs.scrapy.org/en/latest/topics/spider-middleware.html
    #SPIDER_MIDDLEWARES = {
    #    'scrapystudy.middlewares.ScrapystudySpiderMiddleware': 543,
    #}

    # Enable or disable downloader middlewares
    # See https://docs.scrapy.org/en/latest/topics/downloader-middleware.html
    #DOWNLOADER_MIDDLEWARES = {
    #    'scrapystudy.middlewares.ScrapystudyDownloaderMiddleware': 543,
    #}

    # Enable or disable extensions
    # See https://docs.scrapy.org/en/latest/topics/extensions.html
    #EXTENSIONS = {
    #    'scrapy.extensions.telnet.TelnetConsole': None,
    #}

    # Configure item pipelines
    # See https://docs.scrapy.org/en/latest/topics/item-pipeline.html
    ITEM_PIPELINES = {
        'scrapystudy.pipelines.TextPipeline': 300,
        'scrapystudy.pipelines.MongoPipeline': 400,
    }

    # Enable and configure the AutoThrottle extension (disabled by default)
    # See https://docs.scrapy.org/en/latest/topics/autothrottle.html
    #AUTOTHROTTLE_ENABLED = True
    # The initial download delay
    #AUTOTHROTTLE_START_DELAY = 5
    # The maximum download delay to be set in case of high latencies
    #AUTOTHROTTLE_MAX_DELAY = 60
    # The average number of requests Scrapy should be sending in parallel to
    # each remote server
    #AUTOTHROTTLE_TARGET_CONCURRENCY = 1.0
    # Enable showing throttling stats for every response received:
    #AUTOTHROTTLE_DEBUG = False

    # Enable and configure HTTP caching (disabled by default)
    # See https://docs.scrapy.org/en/latest/topics/downloader-middleware.html#httpcache-middleware-settings
    #HTTPCACHE_ENABLED = True
    #HTTPCACHE_EXPIRATION_SECS = 0
    #HTTPCACHE_DIR = 'httpcache'
    #HTTPCACHE_IGNORE_HTTP_CODES = []
    #HTTPCACHE_STORAGE = 'scrapy.extensions.httpcache.FilesystemCacheStorage'
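
The integers in ITEM_PIPELINES decide the order in which items pass through the pipelines: lower values run first. The sketch below only illustrates that ordering rule with a plain sort; pipeline_order is a hypothetical helper, not a Scrapy function.

```python
# Same entries as in settings.py, deliberately written out of order
ITEM_PIPELINES = {
    "scrapystudy.pipelines.MongoPipeline": 400,
    "scrapystudy.pipelines.TextPipeline": 300,
}


def pipeline_order(pipelines):
    """Return the pipeline paths sorted by priority, lowest value first."""
    return [path for path, priority in sorted(pipelines.items(), key=lambda kv: kv[1])]


for path in pipeline_order(ITEM_PIPELINES):
    print(path)
```

With 300 for TextPipeline and 400 for MongoPipeline, the text is truncated before the item is written to MongoDB, so the database holds the shortened version.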