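Preface

This is my first post on the blog, so please excuse the rough formatting.

I have been watching some web-scraping tutorial videos lately, and they got me thinking. Back in university I read novels on pirate sites and figured those sites must make good money, so I wanted to build one myself. Scraping some novels seemed like a good way to find out whether I could put a site together (I know nothing about building websites...).

The site has no complete listing of all its novels, so the only option is to crawl the whole site with a CrawlSpider.

After finishing it, though, I found that Scrapy was not much faster than a multithreaded requests crawler, and saving book by book is awkward: because Scrapy fetches asynchronously, chapters do not arrive in order, so writing each book to a local txt file is hard and I ended up storing everything in MongoDB (facepalm).

Here is the main spider code: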

```python
# -*- coding: utf-8 -*-
import scrapy
from scrapy.linkextractors import LinkExtractor
from scrapy.spiders import CrawlSpider, Rule

from biquge5200.items import Biquge5200Item


class BqgSpider(CrawlSpider):
    name = 'bqg'
    allowed_domains = ['bqg5200.com']
    start_urls = ['https://www.bqg5200.com/']

    rules = (
        Rule(LinkExtractor(allow=r'https://www.bqg5200.com/book/\d+/'), follow=True),
        Rule(LinkExtractor(allow=r'https://www.bqg5200.com/xiaoshuo/\d+/\d+/'), follow=False),
        Rule(LinkExtractor(allow=r'https://www.bqg5200.com/xiaoshuo/\d+/\d+/\d+/'),
             callback='parse_item', follow=False),
    )

    def parse_item(self, response):
        # Book title, author (zuozhe) and category (fenlei) from the book page
        name = response.xpath('//div[@id="smallcons"][1]/h1/text()').get()
        zuozhe = response.xpath('//div[@id="smallcons"][1]/span[1]/text()').get()
        fenlei = response.xpath('//div[@id="smallcons"][1]/span[2]/a/text()').get()
        content_list = response.xpath('//div[@id="readerlist"]/ul/li')
        for li in content_list:
            book_list_url = li.xpath('./a/@href').get()
            book_list_url = response.urljoin(book_list_url)
            # Pass the book info along to the chapter callback
            yield scrapy.Request(book_list_url, callback=self.book_content,
                                 meta={'info': (name, zuozhe, fenlei)})

    def book_content(self, response):
        name, zuozhe, fenlei = response.meta.get('info')
        item = Biquge5200Item(name=name, zuozhe=zuozhe, fenlei=fenlei)
        item['title'] = response.xpath('//div[@class="title"]/h1/text()').get()
        content = response.xpath('//div[@id="content"]//text()').getall()
        # TODO: check whether the first two list entries can simply be dropped with [2:]
        content = list(map(lambda x: x.replace(' ', ''), content))
        content = list(map(lambda x: x.replace('ads_yuedu_txt();', ''), content))
        # Strip non-breaking spaces
        item['content'] = list(map(lambda x: x.replace('\xa0', ''), content))
        item['url'] = response.url
        yield item
```
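The three chained replace calls in book_content could also be folded into one helper. The following is only a sketch: the name clean_content is made up for illustration, and dropping fragments that end up empty is an extra step that the spider above does not perform.

```python
def clean_content(fragments):
    """Strip layout noise from the text nodes of one chapter page."""
    cleaned = []
    for text in fragments:
        # The same three replacements as in book_content, applied in one pass.
        text = text.replace(' ', '').replace('\xa0', '')
        text = text.replace('ads_yuedu_txt();', '')
        # Extra step (an assumption, not in the spider): trim surrounding
        # whitespace and skip fragments that are empty afterwards.
        text = text.strip()
        if text:
            cleaned.append(text)
    return cleaned
```

Inside the spider it would be used as item['content'] = clean_content(content).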

items.py

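```python
import scrapy


class Biquge5200Item(scrapy.Item):
    name = scrapy.Field()     # book title
    zuozhe = scrapy.Field()   # author
    fenlei = scrapy.Field()   # category
    title = scrapy.Field()    # chapter title
    content = scrapy.Field()  # chapter text, as a list of strings
    url = scrapy.Field()      # chapter URL
```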

middlewares.py

```python
import user_agent


class Biquge5200DownloaderMiddleware(object):
    def process_request(self, request, spider):
        # Set a freshly generated User-Agent on every outgoing request
        request.headers['user-agent'] = user_agent.generate_user_agent()
```

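This uses a random User-Agent library I picked up from a tutorial video, though I no longer remember exactly how I got it imported...

The site has no anti-scraping measures, so I only set a User-Agent out of habit. If you need more, write your own User-Agent and IP (proxy) middleware.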
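For anyone who does need both, a User-Agent plus proxy middleware might look roughly like the sketch below. The class name, the User-Agent strings and the proxy addresses are all placeholders invented for illustration; only request.headers and request.meta['proxy'] (the key Scrapy's built-in HttpProxyMiddleware reads) are real Scrapy conventions.

```python
import random

# Hypothetical pools: replace these with values of your own.
USER_AGENTS = [
    'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 '
    '(KHTML, like Gecko) Chrome/120.0.0.0 Safari/537.36',
    'Mozilla/5.0 (X11; Linux x86_64; rv:120.0) Gecko/20100101 Firefox/120.0',
]
PROXIES = [
    'http://127.0.0.1:8888',
    'http://127.0.0.1:8889',
]


class RandomUaProxyMiddleware:
    """Pick a random User-Agent and a random proxy for every request."""

    def process_request(self, request, spider):
        request.headers['User-Agent'] = random.choice(USER_AGENTS)
        # Scrapy's built-in HttpProxyMiddleware routes the request
        # through whatever URL is stored under meta['proxy'].
        request.meta['proxy'] = random.choice(PROXIES)
        return None  # continue through the remaining middlewares
```

It would be registered in DOWNLOADER_MIDDLEWARES in settings.py in the same way as the middleware above.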

pipelines.py

```python
# -*- coding: utf-8 -*-

# Define your item pipelines here
#
# Don't forget to add your pipeline to the ITEM_PIPELINES setting
# See: https://doc.scrapy.org/en/latest/topics/item-pipeline.html
import pymongo


class Biquge5200Pipeline(object):
    def open_spider(self, spider):
        # Connects to the local MongoDB on the default port
        self.client = pymongo.MongoClient()
        self.db = self.client.bqg

    def process_item(self, item, spider):
        name = item['name']
        zuozhe = item['zuozhe']
        fenlei = item['fenlei']
        # One collection per book: "<title> <author> <category>"
        coll = ' '.join([name, zuozhe, fenlei])
        # insert() was removed in PyMongo 4; insert_one() is the current call
        self.db[coll].insert_one({"_id": item['url'],
                                  "title": item['title'],
                                  "content": item['content']})
        return item

    def close_spider(self, spider):
        self.client.close()
```

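The book title, author and category from the item are joined to form the collection name, and _id is replaced with item['url'], so the chapters can later be ordered with find().sort("_id", 1). By default the data is stored in the local MongoDB instance.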
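One caveat: _id is a string, so MongoDB compares it character by character and a URL ending in 10 sorts before one ending in 2. A possible workaround is to sort on the numbers inside the URL after reading the documents back. The sketch below assumes chapter URLs end in a numeric chapter id, which is a guess about the site, and both function names are made up for illustration.

```python
import re


def chapter_sort_key(url):
    """Turn every run of digits in a chapter URL into a tuple of ints."""
    return tuple(int(n) for n in re.findall(r'\d+', url))


def assemble_book(docs):
    """Join chapter documents (shaped like the pipeline's) into one text."""
    parts = []
    for doc in sorted(docs, key=lambda d: chapter_sort_key(d['_id'])):
        parts.append(doc['title'])
        parts.append('\n'.join(doc['content']))
    return '\n\n'.join(parts)
```

Since a pymongo cursor is an iterable of dicts, assemble_book(db[coll].find()) should give the text of one book, which can then be written to a txt file.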

On Windows, start MongoDB with: net start mongodb

I am not sure about Linux; please look it up.

settings.py

```python
BOT_NAME = 'biquge5200'

SPIDER_MODULES = ['biquge5200.spiders']
NEWSPIDER_MODULE = 'biquge5200.spiders'

DEFAULT_REQUEST_HEADERS = {
    'Accept': 'text/html,application/xhtml+xml,application/xml;q=0.9,*/*;q=0.8',
    'Accept-Language': 'en',
}

DOWNLOADER_MIDDLEWARES = {
    'biquge5200.middlewares.Biquge5200DownloaderMiddleware': 543,
}

ITEM_PIPELINES = {
    'biquge5200.pipelines.Biquge5200Pipeline': 300,
}
```

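Done...

If the crawl feels too slow, use scrapy_redis to run it distributed. You can start several workers on the same machine, which suits the case where you only have one computer. I assume scrapy_redis is already installed.

First, add a few parameters to settings.py: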

```python
# Use the Scrapy-Redis scheduler
SCHEDULER = "scrapy_redis.scheduler.Scheduler"
# Deduplicate requests with a Redis set
DUPEFILTER_CLASS = "scrapy_redis.dupefilter.RFPDupeFilter"
# Keep the queue so a crawl can be resumed
SCHEDULER_PERSIST = True
# Use a priority queue
SCHEDULER_QUEUE_CLASS = 'scrapy_redis.queue.SpiderPriorityQueue'
REDIS_HOST = 'localhost'  # ---------> IP of this machine
REDIS_PORT = 6379
```

In the main spider, change the following code

```python
class BqgSpider(CrawlSpider):
    name = 'bqg'
    allowed_domains = ['bqg5200.com']
    start_urls = ['https://www.bqg5200.com/']
```

to

```python
from scrapy_redis.spiders import RedisCrawlSpider  # -----> new import


class BqgSpider(RedisCrawlSpider):  # ------> change the parent class
    name = 'bqg'
    allowed_domains = ['bqg5200.com']
    # start_urls = ['https://www.bqg5200.com/']
    # ------> remember this key, it is needed in the redis terminal;
    #         the usual format is <spider name>:start_urls
    redis_key = 'bqg:start_urls'
```

Start MongoDB

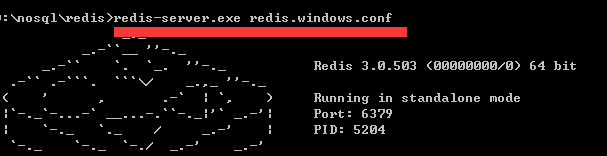

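Start the redis service: go to the redis installation directory and run redis-server.exe redis.windows.conf

Open several cmd windows, change into the directory that contains the spider file, and run scrapy runspider <spider file> in each (runspider takes the .py file, not the spider name). The spiders then sit waiting for a start URL.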

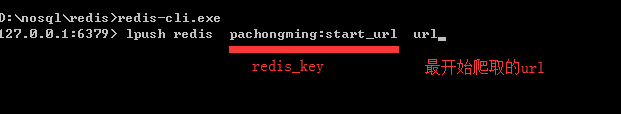

Open the redis terminal: redis-cli.exe

Enter the start key and the URL of the page where the crawl should begin: lpush bqg:start_urls followed by the URL.
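The same push can be done from Python instead of the redis terminal. This is a sketch that assumes the redis-py package is installed; seed_start_url and connect are names invented here, and the key matches the redis_key set in the spider.

```python
def seed_start_url(client, url, key='bqg:start_urls'):
    """Push one start URL onto the list that the waiting spiders read."""
    # lpush returns the length of the list after the push.
    return client.lpush(key, url)


def connect(host='localhost', port=6379):
    """Build a redis-py client for the given redis server."""
    import redis  # third-party package: pip install redis
    return redis.Redis(host=host, port=port)
```

For example, seed_start_url(connect(), 'https://www.bqg5200.com/') has the same effect as the lpush command.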

With that set up, all that is left is to wait for the spiders to crawl.

To crawl from several machines, decide which one acts as the master, then set REDIS_HOST in settings.py on every machine to the master's IP.
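To avoid editing settings.py separately on each machine, the address could be read from the environment instead. This is a sketch only: the environment variable names REDIS_HOST and REDIS_PORT and the helper redis_settings are my own choice, not something scrapy_redis looks up by itself.

```python
import os


def redis_settings(env=os.environ):
    """Read the redis address from the environment, with local defaults."""
    return {
        'REDIS_HOST': env.get('REDIS_HOST', 'localhost'),
        'REDIS_PORT': int(env.get('REDIS_PORT', '6379')),
    }


# In settings.py, Scrapy only picks up the upper-case names.
_redis = redis_settings()
REDIS_HOST = _redis['REDIS_HOST']
REDIS_PORT = _redis['REDIS_PORT']
```

Each worker machine would then set REDIS_HOST to the master's IP before starting the spider.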

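You also need to decide where the scraped data is stored. If each worker simply saves to its own local MongoDB, nothing needs to change. If you want everything collected on one machine,

change the client in pipelines.py to

self.client = pymongo.MongoClient(host="<IP of the machine collecting the data>", port=27017)  # 27017 is MongoDB's default port

Copy the modified files to every worker and start the spiders there. Start MongoDB on the machine that collects the data, start the redis service on the master, then open the redis terminal and enter: lpush <spider name>:start_urls <url>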