部署主机规划

| IP地址 | 主机名 | 角色 |

| 10.0.0.50 | master | Master |

| 10.0.0.51 | node1 | Node |

| 10.0.0.52 | node2 | Node |

| 10.0.0.53 | node3 | Node |

1 环境准备

关闭SELinux

[root@master ~]# getenforce Permissive [root@master ~]# vim /etc/sysconfig/selinux # This file controls the state of SELinux on the system. # SELINUX= can take one of these three values: # enforcing - SELinux security policy is enforced. # permissive - SELinux prints warnings instead of enforcing. # disabled - No SELinux policy is loaded. SELINUX=diabled # SELINUXTYPE= can take one of three two values: # targeted - Targeted processes are protected, # minimum - Modification of targeted policy. Only selected processes are protected. # mls - Multi Level Security protection. SELINUXTYPE=targeted

关闭firewall

[root@master ~]# systemctl status firewalld.service ● firewalld.service - firewalld - dynamic firewall daemon Loaded: loaded (/usr/lib/systemd/system/firewalld.service; disabled; vendor preset: enabled) Active: inactive (dead) Docs: man:firewalld(1)

配置hosts解析

[root@master ~]# cat /etc/hosts 127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4 ::1 localhost localhost.localdomain localhost6 localhost6.localdomain6 10.0.0.50 master 10.0.0.51 node1 10.0.0.52 node2 10.0.0.53 node3

2 安装docker(每节点操作)

2.1 卸载旧版本docker

yum remove docker docker-client docker-client-latest docker-common docker-latest docker-latest-logrotate docker-logrotate docker-selinux docker-engine-selinux docker-engine

2.2 配置yum仓库

rm -rfv /etc/yum.repos.d/* curl -o /etc/yum.repos.d/CentOS-Base.repo http://mirrors.aliyun.com/repo/Centos-7.repo

2.3 安装docker-ce

yum install -y yum-utils device-mapper-persistent-data lvm 配置yum源 yum-config-manager --add-repo https://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo 安装docker-ce yum -y install docker-ce

2.4 添加阿里云docker仓库加速

tee /etc/docker/daemon.json <<-'EOF' { "registry-mirrors": ["https://fl791z1h.mirror.aliyuncs.com"] } EOF systemctl daemon-reload

systemctl enable docker && systemctl start docker

2.5 添加阿里kubernetes源

vim /etc/yum.repos.d/kubernetes.repo [kubernetes] name=Kubernetes Repo baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64/ gpgcheck=0 gpgkey=https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg enabled=1

2.6 关闭swap

不配置的话,启动kubelet不起来

[root@master ~]# kubectl get nodes The connection to the server 10.0.0.50:6443 was refused - did you specify the right host or port?

[root@master ~]# swapoff -a

[root@master ~]# vim /etc/fstab

#

# /etc/fstab

# Created by anaconda on Sat Jun 29 08:50:28 2019

#

# Accessible filesystems, by reference, are maintained under '/dev/disk'

# See man pages fstab(5), findfs(8), mount(8) and/or blkid(8) for more info

#

UUID=0bbd5e50-606c-4c47-8cd7-1ae67f812437 / xfs defaults 0 0

UUID=bba2c917-8540-41c8-97e6-f1d73d9143ba /boot xfs defaults 0 0

#UUID=1c0f8351-49f0-4dd8-9a8b-1aff1d4a77b0 swap swap defaults 0 0

#UUID=6cfbe843-e280-4d85-9ae3-60b0ebcc5909 /data xfs defaults 0 0

2.7 配置防火墙规则

配置内核参数,将桥接的IPv4流量传递到iptables的链

cat > /etc/sysctl.d/k8s.conf <<EOF

net.bridge.bridge-nf-call-ip6tables = 1

net.bridge.bridge-nf-call-iptables = 1

EOF

sysctl --system

Master节点安装kubectl、kubelet、kubeadm

安装设置开机自启动

yum install kubectl kubelet kubeadm

systemctl enable kubelet

初始化k8s集群

kubeadm init --kubernetes-version=1.18.0 --apiserver-advertise-address=10.0.0.50 --image-repository registry.aliyuncs.com/google_containers --service-cidr=10.10.0.0/16 --pod-network-cidr=10.122.0.0/16

POD的网段为: 10.122.0.0/16, api server地址就是master本机IP。

这一步很关键,由于kubeadm 默认从官网k8s.grc.io下载所需镜像,国内无法访问,因此需要通过–image-repository指定阿里云镜像仓库地址。

初始化信息如下

W0519 01:47:26.317891 2272 configset.go:202] WARNING: kubeadm cannot validate component configs for API groups [kubelet.config.k8s.io kubeproxy.config.k8s.io] [init] Using Kubernetes version: v1.18.0 [preflight] Running pre-flight checks [WARNING IsDockerSystemdCheck]: detected "cgroupfs" as the Docker cgroup driver. The recommended driver is "systemd". Please follow the guide at https://kubernetes.io/docs/setup/cri/ [preflight] Pulling images required for setting up a Kubernetes cluster [preflight] This might take a minute or two, depending on the speed of your internet connection [preflight] You can also perform this action in beforehand using 'kubeadm config images pull' [kubelet-start] Writing kubelet environment file with flags to file "/var/lib/kubelet/kubeadm-flags.env" [kubelet-start] Writing kubelet configuration to file "/var/lib/kubelet/config.yaml" [kubelet-start] Starting the kubelet [certs] Using certificateDir folder "/etc/kubernetes/pki" [certs] Generating "ca" certificate and key [certs] Generating "apiserver" certificate and key [certs] apiserver serving cert is signed for DNS names [master kubernetes kubernetes.default kubernetes.default.svc kubernetes.default.svc.cluster.local] and IPs [10.10.0.1 10.0.0.50] [certs] Generating "apiserver-kubelet-client" certificate and key [certs] Generating "front-proxy-ca" certificate and key [certs] Generating "front-proxy-client" certificate and key [certs] Generating "etcd/ca" certificate and key [certs] Generating "etcd/server" certificate and key [certs] etcd/server serving cert is signed for DNS names [master localhost] and IPs [10.0.0.50 127.0.0.1 ::1] [certs] Generating "etcd/peer" certificate and key [certs] etcd/peer serving cert is signed for DNS names [master localhost] and IPs [10.0.0.50 127.0.0.1 ::1] [certs] Generating "etcd/healthcheck-client" certificate and key [certs] Generating "apiserver-etcd-client" certificate and key [certs] Generating "sa" key and public key [kubeconfig] Using kubeconfig folder "/etc/kubernetes" [kubeconfig] Writing "admin.conf" kubeconfig file [kubeconfig] Writing "kubelet.conf" kubeconfig file [kubeconfig] Writing "controller-manager.conf" kubeconfig file [kubeconfig] Writing "scheduler.conf" kubeconfig file [control-plane] Using manifest folder "/etc/kubernetes/manifests" [control-plane] Creating static Pod manifest for "kube-apiserver" [control-plane] Creating static Pod manifest for "kube-controller-manager" W0519 01:50:33.295075 2272 manifests.go:225] the default kube-apiserver authorization-mode is "Node,RBAC"; using "Node,RBAC" [control-plane] Creating static Pod manifest for "kube-scheduler" W0519 01:50:33.296322 2272 manifests.go:225] the default kube-apiserver authorization-mode is "Node,RBAC"; using "Node,RBAC" [etcd] Creating static Pod manifest for local etcd in "/etc/kubernetes/manifests" [wait-control-plane] Waiting for the kubelet to boot up the control plane as static Pods from directory "/etc/kubernetes/manifests". This can take up to 4m0s [apiclient] All control plane components are healthy after 23.005020 seconds [upload-config] Storing the configuration used in ConfigMap "kubeadm-config" in the "kube-system" Namespace [kubelet] Creating a ConfigMap "kubelet-config-1.18" in namespace kube-system with the configuration for the kubelets in the cluster [upload-certs] Skipping phase. Please see --upload-certs [mark-control-plane] Marking the node master as control-plane by adding the label "node-role.kubernetes.io/master=''" [mark-control-plane] Marking the node master as control-plane by adding the taints [node-role.kubernetes.io/master:NoSchedule] [bootstrap-token] Using token: q1k6jx.wnw5mn8qqt0ia3wc [bootstrap-token] Configuring bootstrap tokens, cluster-info ConfigMap, RBAC Roles [bootstrap-token] configured RBAC rules to allow Node Bootstrap tokens to get nodes [bootstrap-token] configured RBAC rules to allow Node Bootstrap tokens to post CSRs in order for nodes to get long term certificate credentials [bootstrap-token] configured RBAC rules to allow the csrapprover controller automatically approve CSRs from a Node Bootstrap Token [bootstrap-token] configured RBAC rules to allow certificate rotation for all node client certificates in the cluster [bootstrap-token] Creating the "cluster-info" ConfigMap in the "kube-public" namespace [kubelet-finalize] Updating "/etc/kubernetes/kubelet.conf" to point to a rotatable kubelet client certificate and key [addons] Applied essential addon: CoreDNS [addons] Applied essential addon: kube-proxy Your Kubernetes control-plane has initialized successfully! To start using your cluster, you need to run the following as a regular user: mkdir -p $HOME/.kube sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config sudo chown $(id -u):$(id -g) $HOME/.kube/config You should now deploy a pod network to the cluster. Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at: https://kubernetes.io/docs/concepts/cluster-administration/addons/ Then you can join any number of worker nodes by running the following on each as root: kubeadm join 10.0.0.50:6443 --token q1k6jx.wnw5mn8qqt0ia3wc --discovery-token-ca-cert-hash sha256:c99d2b8e33ab852ec41e9a0fe816683d5d791d7afa8041a802b986cac8456553

标记部分要记录,在其他节点加入集群时使用

创建kubectl

mkdir -p $HOME/.kube sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config sudo chown $(id -u):$(id -g) $HOME/.kube/config

kubectl自动补全

source <(kubectl completion bash)

查看下载的镜像

[root@master yum.repos.d]# docker images REPOSITORY TAG IMAGE ID CREATED SIZE registry.aliyuncs.com/google_containers/kube-proxy v1.18.0 43940c34f24f 7 weeks ago 117MB registry.aliyuncs.com/google_containers/kube-apiserver v1.18.0 74060cea7f70 7 weeks ago 173MB registry.aliyuncs.com/google_containers/kube-scheduler v1.18.0 a31f78c7c8ce 7 weeks ago 95.3MB registry.aliyuncs.com/google_containers/kube-controller-manager v1.18.0 d3e55153f52f 7 weeks ago 162MB registry.aliyuncs.com/google_containers/pause 3.2 80d28bedfe5d 3 months ago 683kB registry.aliyuncs.com/google_containers/coredns 1.6.7 67da37a9a360 3 months ago 43.8MB registry.aliyuncs.com/google_containers/etcd 3.4.3-0 303ce5db0e90 6 months ago 288MB

查看cluster组件状态信息

[root@master yum.repos.d]# kubectl get cs NAME STATUS MESSAGE ERROR controller-manager Healthy ok scheduler Healthy ok etcd-0 Healthy {"health":"true"} 或者

[root@master yum.repos.d]# kubectl get componentstatuses NAME STATUS MESSAGE ERROR controller-manager Healthy ok scheduler Healthy ok etcd-0 Healthy {"health":"true"}

获取节点信息

[root@master yum.repos.d]# kubectl get nodes NAME STATUS ROLES AGE VERSION master NotReady master 17m v1.18.2

node节点为NotReady,因为corednspod没有启动,缺少pod网络

部署flannel或calico

部署flannel

github地址 :https://github.com/coreos/flannel

文件:https://github.com/coreos/flannel/blob/master/Documentation/kube-flannel.yml

[root@master ~]# cat kube-flannel.yml --- apiVersion: policy/v1beta1 kind: PodSecurityPolicy metadata: name: psp.flannel.unprivileged annotations: seccomp.security.alpha.kubernetes.io/allowedProfileNames: docker/default seccomp.security.alpha.kubernetes.io/defaultProfileName: docker/default apparmor.security.beta.kubernetes.io/allowedProfileNames: runtime/default apparmor.security.beta.kubernetes.io/defaultProfileName: runtime/default spec: privileged: false volumes: - configMap - secret - emptyDir - hostPath allowedHostPaths: - pathPrefix: "/etc/cni/net.d" - pathPrefix: "/etc/kube-flannel" - pathPrefix: "/run/flannel" readOnlyRootFilesystem: false runAsUser: rule: RunAsAny supplementalGroups: rule: RunAsAny fsGroup: rule: RunAsAny allowPrivilegeEscalation: false defaultAllowPrivilegeEscalation: false allowedCapabilities: ['NET_ADMIN'] defaultAddCapabilities: [] requiredDropCapabilities: [] hostPID: false hostIPC: false hostNetwork: true hostPorts: - min: 0 max: 65535 seLinux: rule: 'RunAsAny' --- kind: ClusterRole apiVersion: rbac.authorization.k8s.io/v1beta1 metadata: name: flannel rules: - apiGroups: ['extensions'] resources: ['podsecuritypolicies'] verbs: ['use'] resourceNames: ['psp.flannel.unprivileged'] - apiGroups: - "" resources: - pods verbs: - get - apiGroups: - "" resources: - nodes verbs: - list - watch - apiGroups: - "" resources: - nodes/status verbs: - patch --- kind: ClusterRoleBinding apiVersion: rbac.authorization.k8s.io/v1beta1 metadata: name: flannel roleRef: apiGroup: rbac.authorization.k8s.io kind: ClusterRole name: flannel subjects: - kind: ServiceAccount name: flannel namespace: kube-system --- apiVersion: v1 kind: ServiceAccount metadata: name: flannel namespace: kube-system --- kind: ConfigMap apiVersion: v1 metadata: name: kube-flannel-cfg namespace: kube-system labels: tier: node app: flannel data: cni-conf.json: | { "name": "cbr0", "cniVersion": "0.3.1", "plugins": [ { "type": "flannel", "delegate": { "hairpinMode": true, "isDefaultGateway": true } }, { "type": "portmap", "capabilities": { "portMappings": true } } ] } net-conf.json: | { "Network": "10.244.0.0/16", "Backend": { "Type": "vxlan" } } --- apiVersion: apps/v1 kind: DaemonSet metadata: name: kube-flannel-ds-amd64 namespace: kube-system labels: tier: node app: flannel spec: selector: matchLabels: app: flannel template: metadata: labels: tier: node app: flannel spec: affinity: nodeAffinity: requiredDuringSchedulingIgnoredDuringExecution: nodeSelectorTerms: - matchExpressions: - key: kubernetes.io/os operator: In values: - linux - key: kubernetes.io/arch operator: In values: - amd64 hostNetwork: true tolerations: - operator: Exists effect: NoSchedule serviceAccountName: flannel initContainers: - name: install-cni image: quay.io/coreos/flannel:v0.12.0-amd64 command: - cp args: - -f - /etc/kube-flannel/cni-conf.json - /etc/cni/net.d/10-flannel.conflist volumeMounts: - name: cni mountPath: /etc/cni/net.d - name: flannel-cfg mountPath: /etc/kube-flannel/ containers: - name: kube-flannel image: quay.io/coreos/flannel:v0.12.0-amd64 command: - /opt/bin/flanneld args: - --ip-masq - --kube-subnet-mgr resources: requests: cpu: "100m" memory: "50Mi" limits: cpu: "100m" memory: "50Mi" securityContext: privileged: false capabilities: add: ["NET_ADMIN"] env: - name: POD_NAME valueFrom: fieldRef: fieldPath: metadata.name - name: POD_NAMESPACE valueFrom: fieldRef: fieldPath: metadata.namespace volumeMounts: - name: run mountPath: /run/flannel - name: flannel-cfg mountPath: /etc/kube-flannel/ volumes: - name: run hostPath: path: /run/flannel - name: cni hostPath: path: /etc/cni/net.d - name: flannel-cfg configMap: name: kube-flannel-cfg --- apiVersion: apps/v1 kind: DaemonSet metadata: name: kube-flannel-ds-arm64 namespace: kube-system labels: tier: node app: flannel spec: selector: matchLabels: app: flannel template: metadata: labels: tier: node app: flannel spec: affinity: nodeAffinity: requiredDuringSchedulingIgnoredDuringExecution: nodeSelectorTerms: - matchExpressions: - key: kubernetes.io/os operator: In values: - linux - key: kubernetes.io/arch operator: In values: - arm64 hostNetwork: true tolerations: - operator: Exists effect: NoSchedule serviceAccountName: flannel initContainers: - name: install-cni image: quay.io/coreos/flannel:v0.12.0-arm64 command: - cp args: - -f - /etc/kube-flannel/cni-conf.json - /etc/cni/net.d/10-flannel.conflist volumeMounts: - name: cni mountPath: /etc/cni/net.d - name: flannel-cfg mountPath: /etc/kube-flannel/ containers: - name: kube-flannel image: quay.io/coreos/flannel:v0.12.0-arm64 command: - /opt/bin/flanneld args: - --ip-masq - --kube-subnet-mgr resources: requests: cpu: "100m" memory: "50Mi" limits: cpu: "100m" memory: "50Mi" securityContext: privileged: false capabilities: add: ["NET_ADMIN"] env: - name: POD_NAME valueFrom: fieldRef: fieldPath: metadata.name - name: POD_NAMESPACE valueFrom: fieldRef: fieldPath: metadata.namespace volumeMounts: - name: run mountPath: /run/flannel - name: flannel-cfg mountPath: /etc/kube-flannel/ volumes: - name: run hostPath: path: /run/flannel - name: cni hostPath: path: /etc/cni/net.d - name: flannel-cfg configMap: name: kube-flannel-cfg --- apiVersion: apps/v1 kind: DaemonSet metadata: name: kube-flannel-ds-arm namespace: kube-system labels: tier: node app: flannel spec: selector: matchLabels: app: flannel template: metadata: labels: tier: node app: flannel spec: affinity: nodeAffinity: requiredDuringSchedulingIgnoredDuringExecution: nodeSelectorTerms: - matchExpressions: - key: kubernetes.io/os operator: In values: - linux - key: kubernetes.io/arch operator: In values: - arm hostNetwork: true tolerations: - operator: Exists effect: NoSchedule serviceAccountName: flannel initContainers: - name: install-cni image: quay.io/coreos/flannel:v0.12.0-arm command: - cp args: - -f - /etc/kube-flannel/cni-conf.json - /etc/cni/net.d/10-flannel.conflist volumeMounts: - name: cni mountPath: /etc/cni/net.d - name: flannel-cfg mountPath: /etc/kube-flannel/ containers: - name: kube-flannel image: quay.io/coreos/flannel:v0.12.0-arm command: - /opt/bin/flanneld args: - --ip-masq - --kube-subnet-mgr resources: requests: cpu: "100m" memory: "50Mi" limits: cpu: "100m" memory: "50Mi" securityContext: privileged: false capabilities: add: ["NET_ADMIN"] env: - name: POD_NAME valueFrom: fieldRef: fieldPath: metadata.name - name: POD_NAMESPACE valueFrom: fieldRef: fieldPath: metadata.namespace volumeMounts: - name: run mountPath: /run/flannel - name: flannel-cfg mountPath: /etc/kube-flannel/ volumes: - name: run hostPath: path: /run/flannel - name: cni hostPath: path: /etc/cni/net.d - name: flannel-cfg configMap: name: kube-flannel-cfg --- apiVersion: apps/v1 kind: DaemonSet metadata: name: kube-flannel-ds-ppc64le namespace: kube-system labels: tier: node app: flannel spec: selector: matchLabels: app: flannel template: metadata: labels: tier: node app: flannel spec: affinity: nodeAffinity: requiredDuringSchedulingIgnoredDuringExecution: nodeSelectorTerms: - matchExpressions: - key: kubernetes.io/os operator: In values: - linux - key: kubernetes.io/arch operator: In values: - ppc64le hostNetwork: true tolerations: - operator: Exists effect: NoSchedule serviceAccountName: flannel initContainers: - name: install-cni image: quay.io/coreos/flannel:v0.12.0-ppc64le command: - cp args: - -f - /etc/kube-flannel/cni-conf.json - /etc/cni/net.d/10-flannel.conflist volumeMounts: - name: cni mountPath: /etc/cni/net.d - name: flannel-cfg mountPath: /etc/kube-flannel/ containers: - name: kube-flannel image: quay.io/coreos/flannel:v0.12.0-ppc64le command: - /opt/bin/flanneld args: - --ip-masq - --kube-subnet-mgr resources: requests: cpu: "100m" memory: "50Mi" limits: cpu: "100m" memory: "50Mi" securityContext: privileged: false capabilities: add: ["NET_ADMIN"] env: - name: POD_NAME valueFrom: fieldRef: fieldPath: metadata.name - name: POD_NAMESPACE valueFrom: fieldRef: fieldPath: metadata.namespace volumeMounts: - name: run mountPath: /run/flannel - name: flannel-cfg mountPath: /etc/kube-flannel/ volumes: - name: run hostPath: path: /run/flannel - name: cni hostPath: path: /etc/cni/net.d - name: flannel-cfg configMap: name: kube-flannel-cfg --- apiVersion: apps/v1 kind: DaemonSet metadata: name: kube-flannel-ds-s390x namespace: kube-system labels: tier: node app: flannel spec: selector: matchLabels: app: flannel template: metadata: labels: tier: node app: flannel spec: affinity: nodeAffinity: requiredDuringSchedulingIgnoredDuringExecution: nodeSelectorTerms: - matchExpressions: - key: kubernetes.io/os operator: In values: - linux - key: kubernetes.io/arch operator: In values: - s390x hostNetwork: true tolerations: - operator: Exists effect: NoSchedule serviceAccountName: flannel initContainers: - name: install-cni image: quay.io/coreos/flannel:v0.12.0-s390x command: - cp args: - -f - /etc/kube-flannel/cni-conf.json - /etc/cni/net.d/10-flannel.conflist volumeMounts: - name: cni mountPath: /etc/cni/net.d - name: flannel-cfg mountPath: /etc/kube-flannel/ containers: - name: kube-flannel image: quay.io/coreos/flannel:v0.12.0-s390x command: - /opt/bin/flanneld args: - --ip-masq - --kube-subnet-mgr resources: requests: cpu: "100m" memory: "50Mi" limits: cpu: "100m" memory: "50Mi" securityContext: privileged: false capabilities: add: ["NET_ADMIN"] env: - name: POD_NAME valueFrom: fieldRef: fieldPath: metadata.name - name: POD_NAMESPACE valueFrom: fieldRef: fieldPath: metadata.namespace volumeMounts: - name: run mountPath: /run/flannel - name: flannel-cfg mountPath: /etc/kube-flannel/ volumes: - name: run hostPath: path: /run/flannel - name: cni hostPath: path: /etc/cni/net.d - name: flannel-cfg configMap: name: kube-flannel-cfg

部署calico

# kubectl apply -f https://docs.projectcalico.org/manifests/calico.yaml configmap/calico-config created customresourcedefinition.apiextensions.k8s.io/bgpconfigurations.crd.projectcalico.org created customresourcedefinition.apiextensions.k8s.io/bgppeers.crd.projectcalico.org created customresourcedefinition.apiextensions.k8s.io/blockaffinities.crd.projectcalico.org created customresourcedefinition.apiextensions.k8s.io/clusterinformations.crd.projectcalico.org created customresourcedefinition.apiextensions.k8s.io/felixconfigurations.crd.projectcalico.org created customresourcedefinition.apiextensions.k8s.io/globalnetworkpolicies.crd.projectcalico.org created customresourcedefinition.apiextensions.k8s.io/globalnetworksets.crd.projectcalico.org created customresourcedefinition.apiextensions.k8s.io/hostendpoints.crd.projectcalico.org created customresourcedefinition.apiextensions.k8s.io/ipamblocks.crd.projectcalico.org created customresourcedefinition.apiextensions.k8s.io/ipamconfigs.crd.projectcalico.org created customresourcedefinition.apiextensions.k8s.io/ipamhandles.crd.projectcalico.org created customresourcedefinition.apiextensions.k8s.io/ippools.crd.projectcalico.org created customresourcedefinition.apiextensions.k8s.io/networkpolicies.crd.projectcalico.org created customresourcedefinition.apiextensions.k8s.io/networksets.crd.projectcalico.org created clusterrole.rbac.authorization.k8s.io/calico-kube-controllers created clusterrolebinding.rbac.authorization.k8s.io/calico-kube-controllers created clusterrole.rbac.authorization.k8s.io/calico-node created clusterrolebinding.rbac.authorization.k8s.io/calico-node created daemonset.apps/calico-node created serviceaccount/calico-node created deployment.apps/calico-kube-controllers created serviceaccount/calico-kube-controllers created

再次查看node和pod状态

[root@master ~]# kubectl get nodes NAME STATUS ROLES AGE VERSION master Ready master 35m v1.18.2

[root@master ~]# kubectl get pod -n kube-system NAME READY STATUS RESTARTS AGE coredns-7ff77c879f-d92wp 1/1 Running 0 36m coredns-7ff77c879f-n49kz 1/1 Running 0 36m etcd-master 1/1 Running 0 36m kube-apiserver-master 1/1 Running 0 36m kube-controller-manager-master 1/1 Running 0 36m kube-flannel-ds-amd64-xxnk2 1/1 Running 0 7m12s kube-proxy-cbvsb 1/1 Running 0 36m kube-scheduler-master 1/1 Running 0 36m

查看各组件的监听情况

[root@master kubelet]# ss -lntp LISTEN 0 128 127.0.0.1:10248 *:* users:(("kubelet",pid=41819,fd=27)) LISTEN 0 128 127.0.0.1:10249 *:* users:(("kube-proxy",pid=43458,fd=15)) LISTEN 0 128 127.0.0.1:2379 *:* users:(("etcd",pid=42348,fd=6)) LISTEN 0 128 10.0.0.50:2379 *:* users:(("etcd",pid=42348,fd=5)) LISTEN 0 128 10.0.0.50:2380 *:* users:(("etcd",pid=42348,fd=3)) LISTEN 0 128 127.0.0.1:2381 *:* users:(("etcd",pid=42348,fd=11)) LISTEN 0 128 *:30000 *:* users:(("kube-proxy",pid=43458,fd=8)) LISTEN 0 128 127.0.0.1:10257 *:* users:(("kube-controller",pid=42464,fd=6)) LISTEN 0 128 127.0.0.1:10259 *:* users:(("kube-scheduler",pid=42463,fd=6)) LISTEN 0 128 127.0.0.1:44214 *:* users:(("kubelet",pid=41819,fd=10)) LISTEN 0 128 [::]:10250 [::]:* users:(("kubelet",pid=41819,fd=23)) LISTEN 0 128 [::]:6443 [::]:* users:(("kube-apiserver",pid=42358,fd=5)) LISTEN 0 128 [::]:10251 [::]:* users:(("kube-scheduler",pid=42463,fd=5)) LISTEN 0 128 [::]:10252 [::]:* users:(("kube-controller",pid=42464,fd=5)) LISTEN 0 128 [::]:10256 [::]:* users:(("kube-proxy",pid=43458,fd=16))

部署Node节点

添加阿里kubernetes源

scp /etc/yum.repos.d/kubernetes.repo node1:/etc/yum.repos.d/

scp /etc/yum.repos.d/kubernetes.repo node2:/etc/yum.repos.d/

scp /etc/yum.repos.d/kubernetes.repo node3:/etc/yum.repos.d/

scp /etc/docker/daemon.json node1:/etc/docker/

scp /etc/docker/daemon.json node2:/etc/docker/

scp /etc/docker/daemon.json node3:/etc/docker/

安装kubelet kubeadm docker-ce

[root@node3 ~]# yum install -y kubelet kubeadm docker-ce

启动docker设置开机自启动

[root@node3 ~]# systemctl enable docker kubelet.service

[root@node3 ~]# systemctl start docker

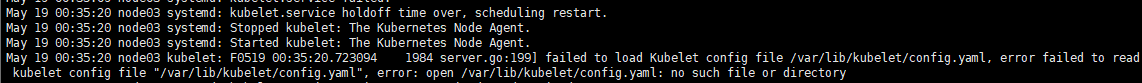

注意,这里不需要启动kubelet,初始化的过程中会自动启动的,如果此时启动了会出现如下报错,忽略即可

节点加入集群

[root@node3 ~]# kubeadm join 10.0.0.50:6443 --token q1k6jx.wnw5mn8qqt0ia3wc > --discovery-token-ca-cert-hash sha256:c99d2b8e33ab852ec41e9a0fe816683d5d791d7afa8041a802b986cac8456553 W0519 00:49:00.139059 2625 join.go:346] [preflight] WARNING: JoinControlPane.controlPlane settings will be ignored when control-plane flag is not set. [preflight] Running pre-flight checks [WARNING IsDockerSystemdCheck]: detected "cgroupfs" as the Docker cgroup driver. The recommended driver is "systemd". Please follow the guide at https://kubernetes.io/docs/setup/cri/ [WARNING Hostname]: hostname "node3" could not be reached [WARNING Hostname]: hostname "node3": lookup node3 on 223.5.5.5:53: no such host [preflight] Reading configuration from the cluster... [preflight] FYI: You can look at this config file with 'kubectl -n kube-system get cm kubeadm-config -oyaml' [kubelet-start] Downloading configuration for the kubelet from the "kubelet-config-1.18" ConfigMap in the kube-system namespace [kubelet-start] Writing kubelet configuration to file "/var/lib/kubelet/config.yaml" [kubelet-start] Writing kubelet environment file with flags to file "/var/lib/kubelet/kubeadm-flags.env" [kubelet-start] Starting the kubelet [kubelet-start] Waiting for the kubelet to perform the TLS Bootstrap... This node has joined the cluster: * Certificate signing request was sent to apiserver and a response was received. * The Kubelet was informed of the new secure connection details. Run 'kubectl get nodes' on the control-plane to see this node join the cluster.

Master节点查看运行状态

[root@master kubelet]# kubectl get nodes -w NAME STATUS ROLES AGE VERSION master Ready master 11h v1.18.2 node1 NotReady <none> 38s v1.18.2 node2 NotReady <none> 54s v1.18.2 node3 Ready <none> 28m v1.18.2 node2 NotReady <none> 66s v1.18.2 master Ready master 11h v1.18.2 node1 Ready <none> 2m52s v1.18.2 node1 Ready <none> 2m52s v1.18.2 node1 Ready <none> 2m53s v1.18.2 node1 Ready <none> 3m2s v1.18.2

------------------------------------------------ 至此搭建完成 ---------------------------------------------------