For the HDFS architecture of Hadoop 3.1, see http://hadoop.apache.org/docs/current/hadoop-project-dist/hadoop-hdfs/HdfsDesign.html

I. Environment Preparation

1- Three Linux machines (virtual machines in this example)

Machine 1 192.168.234.129 master host -- NameNode

Machine 2 192.168.234.130 node1 host -- SecondaryNameNode and DataNode

Machine 3 192.168.234.131 node2 host -- DataNode

Check the operating system, kernel, and distribution version on the master host:

[root@localhost opt]# uname -a

Linux localhost.localdomain 2.6.32-642.3.1.el6.x86_64 #1 SMP Tue Jul 12 18:30:56 UTC 2016 x86_64 x86_64 x86_64 GNU/Linux

[root@localhost opt]# cat /proc/version

Linux version 2.6.32-642.3.1.el6.x86_64 (mockbuild@worker1.bsys.centos.org) (gcc version 4.4.7 20120313 (Red Hat 4.4.7-17) (GCC) ) #1 SMP Tue Jul 12 18:30:56 UTC 2016

[root@localhost opt]# cat /etc/issue

CentOS release 6.5 (Final)

Kernel \r on an \m

Check the operating system, kernel, and distribution version on the slave host node1 (still named localhost at this point):

[oracle@localhost Desktop]$ uname -a

Linux localhost.localdomain 2.6.32-642.3.1.el6.x86_64 #1 SMP Tue Jul 12 18:30:56 UTC 2016 x86_64 x86_64 x86_64 GNU/Linux

[oracle@localhost Desktop]$ cat /proc/version

Linux version 2.6.32-642.3.1.el6.x86_64 (mockbuild@worker1.bsys.centos.org) (gcc version 4.4.7 20120313 (Red Hat 4.4.7-17) (GCC) ) #1 SMP Tue Jul 12 18:30:56 UTC 2016

[oracle@localhost Desktop]$ cat /etc/issue

CentOS release 6.5 (Final)

Kernel \r on an \m

[oracle@localhost Desktop]$

II. Installing Dependencies

1- Change the hostname

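Set the host aliases on master. Each line of /etc/hosts has the format:

IP  domain-name  alias

The domain name is for Internet use; the alias is used within the LAN.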

[root@localhost ~]# vi /etc/hosts

127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4

::1 localhost localhost.localdomain localhost6 localhost6.localdomain6

192.168.234.129 master.hadoop master

192.168.234.130 node1.hadoop node1

192.168.234.131 node2.hadoop node2

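(Note: the IPs and aliases of node1 and node2 are also listed here so that the machines can resolve each other's names quickly when they communicate.)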
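A typo in the hosts file, such as a name listed with the wrong IP, is easy to miss. The small check below can be run on each node to catch it. It is a sketch: the function name check_hosts is hypothetical, and the name=IP pairs passed to it are the ones used in this article.

```bash
#!/usr/bin/env bash
# Sketch: check that each name maps to exactly the expected IP in a hosts file.
# check_hosts FILE NAME=IP...   (hypothetical helper; pairs are caller-supplied)
check_hosts() {
  local file="$1"; shift
  local rc=0 pair name want got
  for pair in "$@"; do
    name="${pair%%=*}"
    want="${pair#*=}"
    # Collect the IP of every non-comment line that lists NAME in any hostname
    # column. A name that appears on several lines with different IPs is an error.
    got=$(awk -v n="$name" '
      /^[ \t]*#/ { next }
      { for (i = 2; i <= NF; i++) if ($i == n) { print $1; break } }
    ' "$file" | sort -u | paste -sd, -)
    if [ "$got" != "$want" ]; then
      echo "MISMATCH $name expected=$want found=${got:-none}"
      rc=1
    fi
  done
  return $rc
}
```

Usage on any node: check_hosts /etc/hosts master=192.168.234.129 node1=192.168.234.130 node2=192.168.234.131 node1.hadoop=192.168.234.130 node2.hadoop=192.168.234.131. If a name appears on lines with different IPs, the check reports all of those IPs.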

[root@localhost ~]# vi /etc/sysconfig/network

NETWORKING=yes

HOSTNAME=master

[root@localhost ~]# hostname

master

[root@localhost ~]# hostname -i

192.168.234.129

[root@localhost ~]#

Reconnect the client:

Last login: Wed Apr 11 16:56:29 2018 from 192.168.234.1

[root@master ~]# hostname

master

Likewise, set the hostname of node1 to node1.

Likewise, set the hostname of node2 to node2.

For more on the hosts file, see https://www.linuxidc.com/Linux/2016-10/135886.htm

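2- SSH configuration (passwordless login from master to the slaves)

The key pair id_rsa/id_rsa.pub already exists in /root/.ssh below. If it does not exist on your machine, create it first with ssh-keygen -t rsa.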

[root@master .ssh]# cd /root/.ssh/

[root@master .ssh]# ll

total 8

-rw-------. 1 root root 1671 Apr 11 18:04 id_rsa

-rw-r--r--. 1 root root 393 Apr 11 18:04 id_rsa.pub

[root@master .ssh]# cat id_rsa.pub >> authorized_keys

[root@master .ssh]# scp authorized_keys node1:/root/.ssh/

The authenticity of host 'node1 (192.168.234.130)' can't be established.

RSA key fingerprint is e7:25:4e:31:d4:e0:f6:e4:ba:76:f3:9b:32:59:b2:3e.

Are you sure you want to continue connecting (yes/no)? yes

Warning: Permanently added 'node1,192.168.234.130' (RSA) to the list of known hosts.

root@node1's password:

authorized_keys 100% 393 0.4KB/s 00:00

[root@master .ssh]# ssh node1

Last login: Wed Apr 11 18:04:51 2018 from 192.168.234.130

[root@node1 ~]# exit

logout

Connection to node1 closed.

[root@master .ssh]# ll

total 16

-rw-r--r--. 1 root root 393 Apr 11 18:09 authorized_keys

-rw-------. 1 root root 1671 Apr 11 18:04 id_rsa

-rw-r--r--. 1 root root 393 Apr 11 18:04 id_rsa.pub

-rw-r--r--. 1 root root 403 Apr 11 18:10 known_hosts

[root@master .ssh]# cat known_hosts

node1,192.168.234.130 ssh-rsa AAAAB3NzaC1yc2EAAAABIwAAAQEAz8eaNcdxAtBXARUY4DAzk+P7x+13ozVxU9N42DBBgnVYLrjNW8b/VXdZte1z+fzyBIaqwbkkU2ekUZt+VjauxiqY8ya5Y3zw6AotAN313bQ78l4g9OpVfinDNKBBafBC+VbOGJorQaFXEi8QkqiYPEuBu04EAi6xDF5F0SJJO7kg4S8/+/JMo3C2OqZ7267JMOYglJ2DTbYvNGvzyC4WtX5FdU78D5GV7dTQWggre44IYr5/9ZBSswQdTiK+apbVWD40xrpRtJCEgvazYyPdJrjNOd5I8w651TyHuBttYcoXjm5ArYXKm9tjhURHCURiBEfEmQ4Q/UGB9PN9cEg++Q==

node2,192.168.234.131 ssh-rsa AAAAB3NzaC1yc2EAAAABIwAAAQEAz8eaNcdxAtBXARUY4DAzk+P7x+13ozVxU9N42DBBgnVYLrjNW8b/VXdZte1z+fzyBIaqwbkkU2ekUZt+VjauxiqY8ya5Y3zw6AotAN313bQ78l4g9OpVfinDNKBBafBC+VbOGJorQaFXEi8QkqiYPEuBu04EAi6xDF5F0SJJO7kg4S8/+/JMo3C2OqZ7267JMOYglJ2DTbYvNGvzyC4WtX5FdU78D5GV7dTQWggre44IYr5/9ZBSswQdTiK+apbVWD40xrpRtJCEgvazYyPdJrjNOd5I8w651TyHuBttYcoXjm5ArYXKm9tjhURHCURiBEfEmQ4Q/UGB9PN9cEg++Q==

[root@master .ssh]#
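The same setup can also be done for all nodes at once. The following is a minimal sketch that makes several assumptions: it runs as root on master, the node names resolve through /etc/hosts, and ssh-copy-id (shipped with OpenSSH) is installed. By default it only prints the commands. The function name ssh_setup and the RUN switch are hypothetical.

```bash
#!/usr/bin/env bash
# Sketch: print (default) or run (RUN=1) the commands that give master
# passwordless SSH access to every listed node.
# ssh_setup KEYFILE NODE...   (hypothetical helper)
ssh_setup() {
  local key="$1"; shift
  local cmds=() n c
  # Generate a key pair without a passphrase only if none exists yet.
  if [ ! -f "$key" ]; then
    cmds+=("ssh-keygen -t rsa -N '' -f $key")
  fi
  # Install the public key on each node, including master itself: the Hadoop 3
  # start scripts also reach the local host over SSH.
  for n in "$@"; do
    cmds+=("ssh-copy-id -i $key.pub root@$n")
  done
  for c in "${cmds[@]}"; do
    if [ "${RUN:-0}" = 1 ]; then
      eval "$c" || return 1
    else
      echo "$c"
    fi
  done
}
```

Usage: ssh_setup /root/.ssh/id_rsa master node1 node2 prints the plan, and RUN=1 ssh_setup /root/.ssh/id_rsa master node1 node2 executes it. Unlike scp of authorized_keys, ssh-copy-id appends the key instead of overwriting the remote file.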

3- Install the Sun (Oracle) JDK

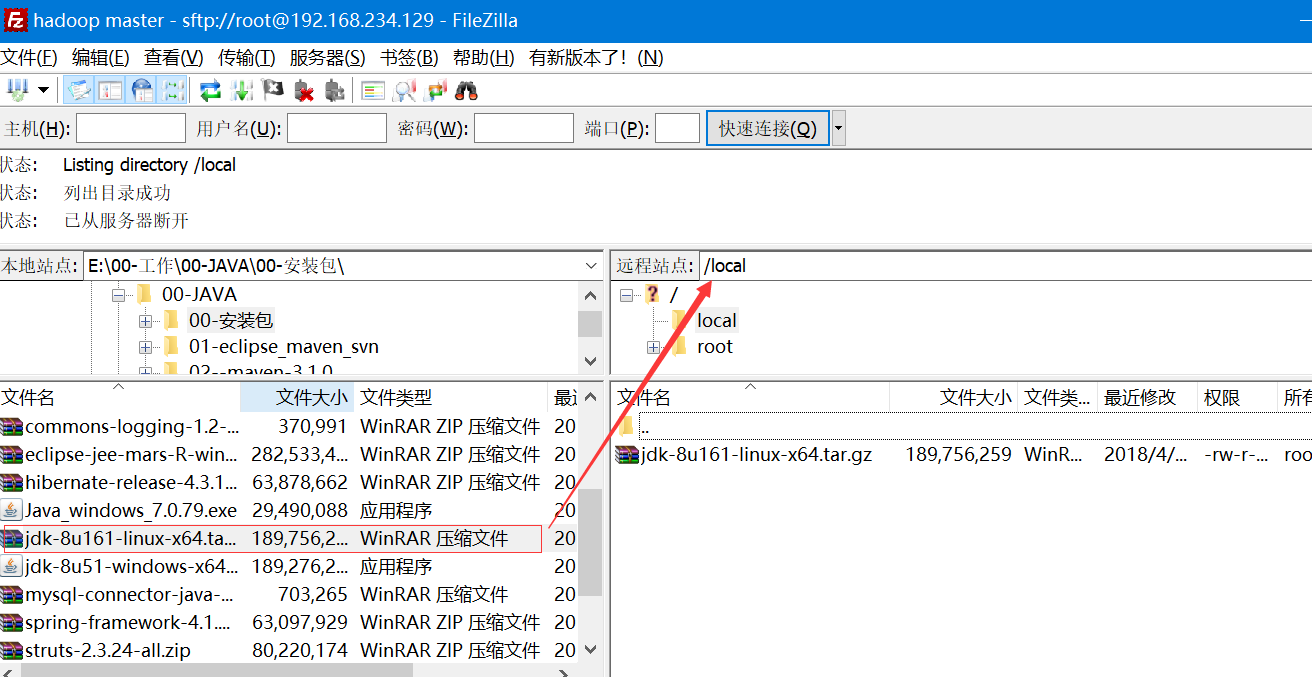

Download JDK 8 for Linux from the Oracle website, then upload it to the Linux server.

[root@master local]# tar -zxvf jdk-8u161-linux-x64.tar.gz

……

[root@master local]# ll

total 185316

drwxr-xr-x. 8 uucp 143 4096 Dec 19 16:24 jdk1.8.0_161

-rw-r--r--. 1 root root 189756259 Apr 11 18:22 jdk-8u161-linux-x64.tar.gz

[root@master local]# mv jdk1.8.0_161/ /opt/jdk

[root@master ~]# cd /opt/jdk/

[root@master jdk]# pwd

/opt/jdk

Set the environment variables:

[root@master bin]# vi /etc/profile

Append the following to the end of the file:

export JAVA_HOME=/opt/jdk

export CLASSPATH=.:$JAVA_HOME/jre/lib/rt.jar:$JAVA_HOME/lib/dt.jar:$JAVA_HOME/lib/tools.jar

export PATH=$JAVA_HOME/bin:$PATH

[root@master bin]# source /etc/profile

[root@master bin]# java -version

java version "1.8.0_161"

Java(TM) SE Runtime Environment (build 1.8.0_161-b12)

Java HotSpot(TM) 64-Bit Server VM (build 25.161-b12, mixed mode)
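If /etc/profile is edited by hand more than once, for example when the setup is repeated, it ends up with duplicate export lines. The minimal helper below appends each line only if an identical line is not already present. The name append_once is hypothetical, and the file path is an argument; /etc/profile is just the file used in this article.

```bash
#!/usr/bin/env bash
# Sketch: append each given line to FILE unless an identical line exists.
# append_once FILE LINE...   (hypothetical helper)
append_once() {
  local file="$1"; shift
  local line
  for line in "$@"; do
    # -q quiet, -x whole-line match, -F fixed string (no regex).
    grep -qxF -- "$line" "$file" 2>/dev/null || printf '%s\n' "$line" >> "$file"
  done
}
```

Usage: append_once /etc/profile 'export JAVA_HOME=/opt/jdk' 'export PATH=$JAVA_HOME/bin:$PATH', then source /etc/profile. Single quotes keep $JAVA_HOME and $PATH literal, so they are expanded when the profile is sourced, not when the line is written.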

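Configure the JDK on node1 and node2. Copy /opt/jdk to each slave (node2 is done the same way as node1), and make sure the slave's /etc/profile contains the same JAVA_HOME, CLASSPATH, and PATH lines. Then log in to the slave and check the Java version: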

[root@master bin]# scp -r /opt/jdk node1:/opt/

[root@node1 ~]# source /etc/profile

[root@node1 ~]# java -version

java version "1.8.0_161"

Java(TM) SE Runtime Environment (build 1.8.0_161-b12)

Java HotSpot(TM) 64-Bit Server VM (build 25.161-b12, mixed mode)

[root@node1 ~]# exit

logout

Connection to node1 closed.

[root@master bin]#
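The per-slave steps above can be written as one loop over both slaves. The sketch below assumes passwordless SSH is already working and that master's /etc/profile may overwrite each slave's copy (as this article does later with scp /etc/profile). It only prints the commands unless RUN=1 is set. The function name push_jdk is hypothetical.

```bash
#!/usr/bin/env bash
# Sketch: copy the JDK and /etc/profile to each slave, then check java there.
# push_jdk NODE...   (hypothetical helper; prints commands unless RUN=1)
push_jdk() {
  local n c
  for n in "$@"; do
    for c in "scp -r /opt/jdk $n:/opt/" \
             "scp /etc/profile $n:/etc/" \
             "ssh $n 'source /etc/profile && java -version'"; do
      if [ "${RUN:-0}" = 1 ]; then
        eval "$c" || return 1   # stop at the first failing step
      else
        echo "$c"
      fi
    done
  done
}
```

Usage: push_jdk node1 node2 to review the commands, then RUN=1 push_jdk node1 node2 to run them.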

III. Hadoop Installation

[root@master opt]# cd /local/

[root@master local]# wget http://mirror.bit.edu.cn/apache/hadoop/common/hadoop-3.1.0/hadoop-3.1.0.tar.gz

--2018-04-11 19:06:06-- http://mirror.bit.edu.cn/apache/hadoop/common/hadoop-3.1.0/hadoop-3.1.0.tar.gz

Resolving mirror.bit.edu.cn... 202.204.80.77, 2001:da8:204:2001:250:56ff:fea1:22

Connecting to mirror.bit.edu.cn|202.204.80.77|:80... connected.

HTTP request sent, awaiting response... 200 OK

Length: 325902823 (311M) [application/octet-stream]

Saving to: “hadoop-3.1.0.tar.gz”

100%[========================================================================================================================================================================>] 325,902,823 878K/s in 6m 44s

2018-04-11 19:12:50 (788 KB/s) - “hadoop-3.1.0.tar.gz” saved [325902823/325902823]

[root@master local]# ll

total 503580

-rw-r--r--. 1 root root 325902823 Apr 5 13:19 hadoop-3.1.0.tar.gz

-rw-r--r--. 1 root root 189756259 Apr 11 18:22 jdk-8u161-linux-x64.tar.gz

[root@master local]# tar -zxvf hadoop-3.1.0.tar.gz

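[root@master local]# mv /local/hadoop-3.1.0/* /opt/hadoop/

(The target directory /opt/hadoop must already exist. If it does not, create it first with mkdir -p /opt/hadoop.)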

[root@master local]# cd /opt/hadoop/

[root@master hadoop]# ls

bin etc include lib libexec LICENSE.txt NOTICE.txt README.txt sbin share

[root@master hadoop]# pwd

/opt/hadoop

Set the environment variables:

[root@master hadoop]# vi /etc/profile

# Append the following two lines to the end of the file

export HADOOP_HOME=/opt/hadoop

export PATH=$PATH:$HADOOP_HOME/bin

[root@master hadoop]# source /etc/profile

Edit the Hadoop configuration files:

Configure hadoop-env.sh

[root@master hadoop]# cd /opt/hadoop/etc/hadoop

[root@master hadoop]# vi hadoop-env.sh

# Append the following two lines to the end of the file

export JAVA_HOME=/opt/jdk

export HADOOP_HOME=/opt/hadoop

Configure core-site.xml

[root@master hadoop]# cd /opt/hadoop/etc/hadoop

[root@master hadoop]# vi core-site.xml

<configuration>

<!-- Address of HDFS (the NameNode) -->

<property>

<name>fs.defaultFS</name>

<value>hdfs://master:9000</value>

</property>

<!-- Directory for files that Hadoop generates at runtime -->

<property>

<name>hadoop.tmp.dir</name>

<value>/opt/hadoop/tmp</value>

</property>

</configuration>
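Instead of editing each *-site.xml by hand, the files can also be generated from name=value pairs. The minimal sketch below does this. The helper name write_site_xml is hypothetical, and values are written verbatim without XML escaping, so they must not contain <, > or &.

```bash
#!/usr/bin/env bash
# Sketch: write a Hadoop *-site.xml file from name=value pairs.
# write_site_xml FILE NAME=VALUE...   (hypothetical helper; no XML escaping)
write_site_xml() {
  local file="$1"; shift
  local pair
  {
    echo '<?xml version="1.0" encoding="UTF-8"?>'
    echo '<configuration>'
    for pair in "$@"; do
      # Split at the first '=', so values may themselves contain '='.
      printf '  <property>\n    <name>%s</name>\n    <value>%s</value>\n  </property>\n' \
        "${pair%%=*}" "${pair#*=}"
    done
    echo '</configuration>'
  } > "$file"
}
```

Usage for the file above: write_site_xml /opt/hadoop/etc/hadoop/core-site.xml fs.defaultFS=hdfs://master:9000 hadoop.tmp.dir=/opt/hadoop/tmp. Note that this overwrites the existing file, including any comments in it.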

Configure hdfs-site.xml

<configuration>

<!-- HTTP address of the NameNode -->

<property>

<name>dfs.namenode.http-address</name>

<value>master:50070</value>

</property>

<!-- HTTP address of the SecondaryNameNode -->

<property>

<name>dfs.namenode.secondary.http-address</name>

<value>node1:50090</value>

</property>

<!-- Directory where the NameNode stores its metadata -->

<property>

<name>dfs.namenode.name.dir</name>

<value>/opt/hadoop/name</value>

</property>

<!-- Number of HDFS replicas; must not exceed the number of DataNodes (this cluster has two) -->

<property>

<name>dfs.replication</name>

<value>2</value>

</property>

<!-- Directory where the DataNodes store block data -->

<property>

<name>dfs.datanode.data.dir</name>

<value>/opt/hadoop/data</value>

</property>

</configuration>

Configure mapred-site.xml

<configuration>

<!-- Run MapReduce on YARN -->

<property>

<name>mapreduce.framework.name</name>

<value>yarn</value>

</property>

</configuration>

Configure yarn-site.xml

<configuration>

<!-- Site specific YARN configuration properties -->

<!-- Host that runs the ResourceManager -->

<property>

<name>yarn.resourcemanager.hostname</name>

<value>master</value>

</property>

<!-- Reducers fetch map output through the mapreduce_shuffle service -->

<property>

<name>yarn.nodemanager.aux-services</name>

<value>mapreduce_shuffle</value>

</property>

<property>

<name>yarn.nodemanager.aux-services.mapreduce_shuffle.class</name>

<value>org.apache.hadoop.mapred.ShuffleHandler</value>

</property>

</configuration>
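The key for a shuffle handler class must embed the exact service name from yarn.nodemanager.aux-services (here mapreduce_shuffle). A key with a different spelling is simply ignored. The check below flags such keys. It is a line-based sketch, not a real XML parser, and it assumes each property's <name> appears before its <value>, as in the files above. The helper names get_prop and check_aux_services are hypothetical.

```bash
#!/usr/bin/env bash
# get_prop FILE NAME: print the <value> of property NAME (line-based sketch).
get_prop() {
  awk -v want="$2" '
    /<name>/  { n = $0; sub(/.*<name>[ \t]*/, "", n); sub(/[ \t]*<\/name>.*/, "", n) }
    /<value>/ { v = $0; sub(/.*<value>[ \t]*/, "", v); sub(/[ \t]*<\/value>.*/, "", v)
                if (n == want) { print v; exit } }
  ' "$1"
}

# check_aux_services FILE: report every yarn.nodemanager.aux-services.<svc>.class
# key whose <svc> is not listed in yarn.nodemanager.aux-services.
check_aux_services() {
  local file="$1" rc=0 services name svc
  services=",$(get_prop "$file" yarn.nodemanager.aux-services | tr -d ' '),"
  for name in $(sed -n 's/.*<name>[[:space:]]*\(yarn\.nodemanager\.aux-services\.[^<]*\.class\)[[:space:]]*<\/name>.*/\1/p' "$file"); do
    svc=${name#yarn.nodemanager.aux-services.}
    svc=${svc%.class}
    case "$services" in
      *",$svc,"*) ;;   # the key matches a configured service
      *) echo "unused key: $name (no service '$svc' in yarn.nodemanager.aux-services)"; rc=1 ;;
    esac
  done
  return $rc
}
```

Usage: check_aux_services /opt/hadoop/etc/hadoop/yarn-site.xml prints nothing and returns 0 when every class key matches a configured service.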

Configure the slaves

Note: since Hadoop 3, the slave hosts are listed in a file named workers. These are the nodes that run DataNodes.

[root@master hadoop]# cat /opt/hadoop/etc/hadoop/workers

node1

node2
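The replication factor in hdfs-site.xml should not exceed the number of DataNodes listed in workers. Otherwise every block stays under-replicated. The sketch below compares the two. It uses a line-based property reader (not a real XML parser) and falls back to Hadoop's default replication of 3 when dfs.replication is not set. The helper names are hypothetical.

```bash
#!/usr/bin/env bash
# get_prop FILE NAME: print the <value> of property NAME (line-based sketch).
get_prop() {
  awk -v want="$2" '
    /<name>/  { n = $0; sub(/.*<name>[ \t]*/, "", n); sub(/[ \t]*<\/name>.*/, "", n) }
    /<value>/ { v = $0; sub(/.*<value>[ \t]*/, "", v); sub(/[ \t]*<\/value>.*/, "", v)
                if (n == want) { print v; exit } }
  ' "$1"
}

# count_workers FILE: number of non-blank, non-comment lines in the workers file.
count_workers() {
  grep -cv -e '^[[:space:]]*$' -e '^[[:space:]]*#' "$1"
}

# check_replication HDFS_SITE WORKERS: fail if dfs.replication > number of workers.
check_replication() {
  local site="$1" workers="$2" rep n
  rep=$(get_prop "$site" dfs.replication)
  rep=${rep:-3}   # dfs.replication defaults to 3 when it is not set
  n=$(count_workers "$workers")
  if [ "$rep" -gt "$n" ]; then
    echo "dfs.replication=$rep but only $n DataNode(s) listed in $workers"
    return 1
  fi
}
```

Usage: check_replication /opt/hadoop/etc/hadoop/hdfs-site.xml /opt/hadoop/etc/hadoop/workers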

Slave configuration:

[root@master hadoop]# scp -r /opt/hadoop/ node1:/opt

[root@master hadoop]# scp -r /opt/hadoop/ node2:/opt

[root@master hadoop]# scp /etc/profile node1:/etc

[root@master hadoop]# scp /etc/profile node2:/etc

Then run source /etc/profile on each slave to reload the environment variables.

Format the NameNode (on master only):

[root@master bin]# pwd

/opt/hadoop/bin

[root@master bin]# hdfs namenode -format

Start HDFS (run from /opt/hadoop):

./sbin/start-dfs.sh

Start YARN:

./sbin/start-yarn.sh

Or start both at once with ./sbin/start-all.sh

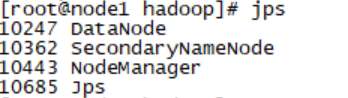

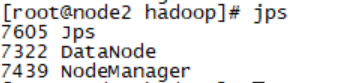

Check the running processes on each node:

master:

slaves:

node1:

node2:

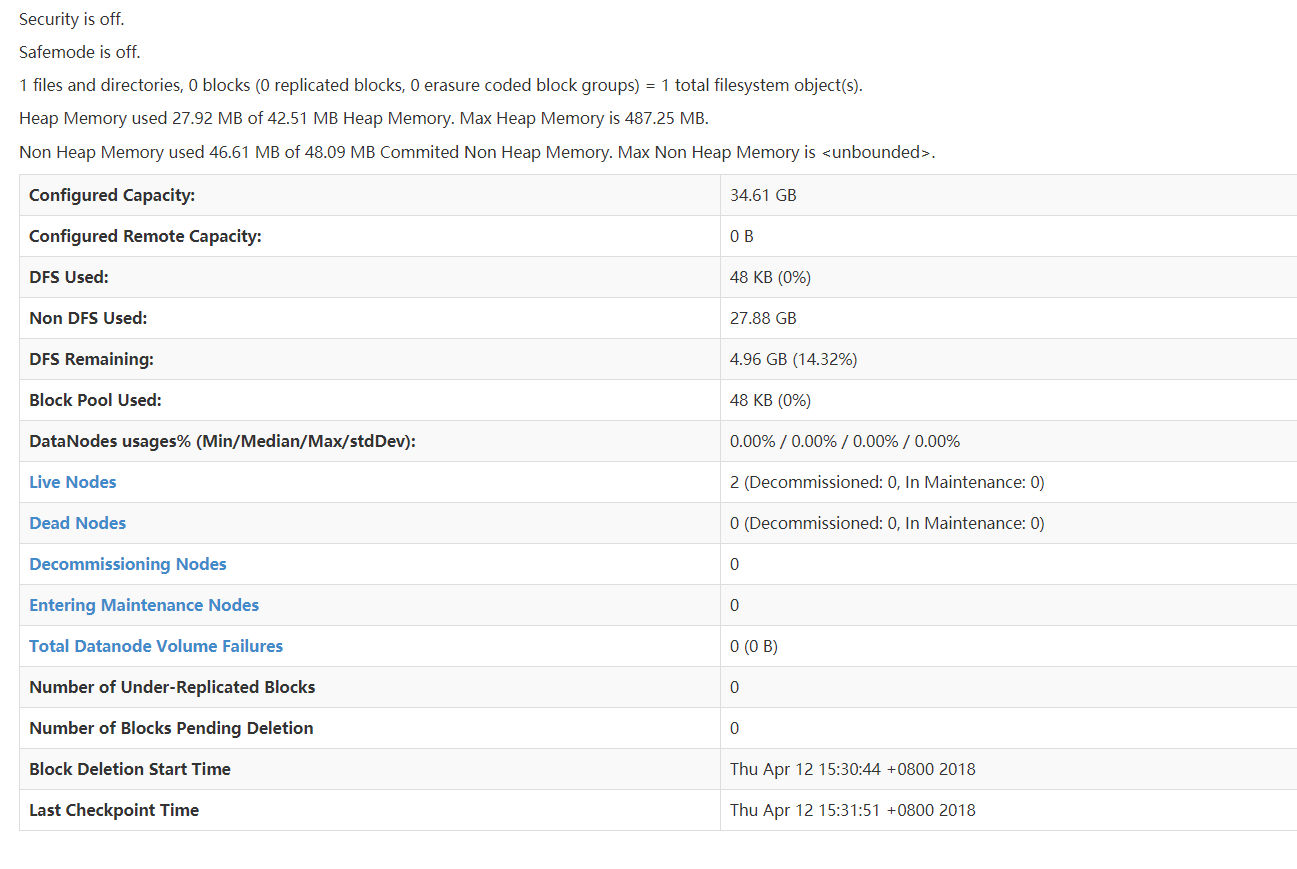

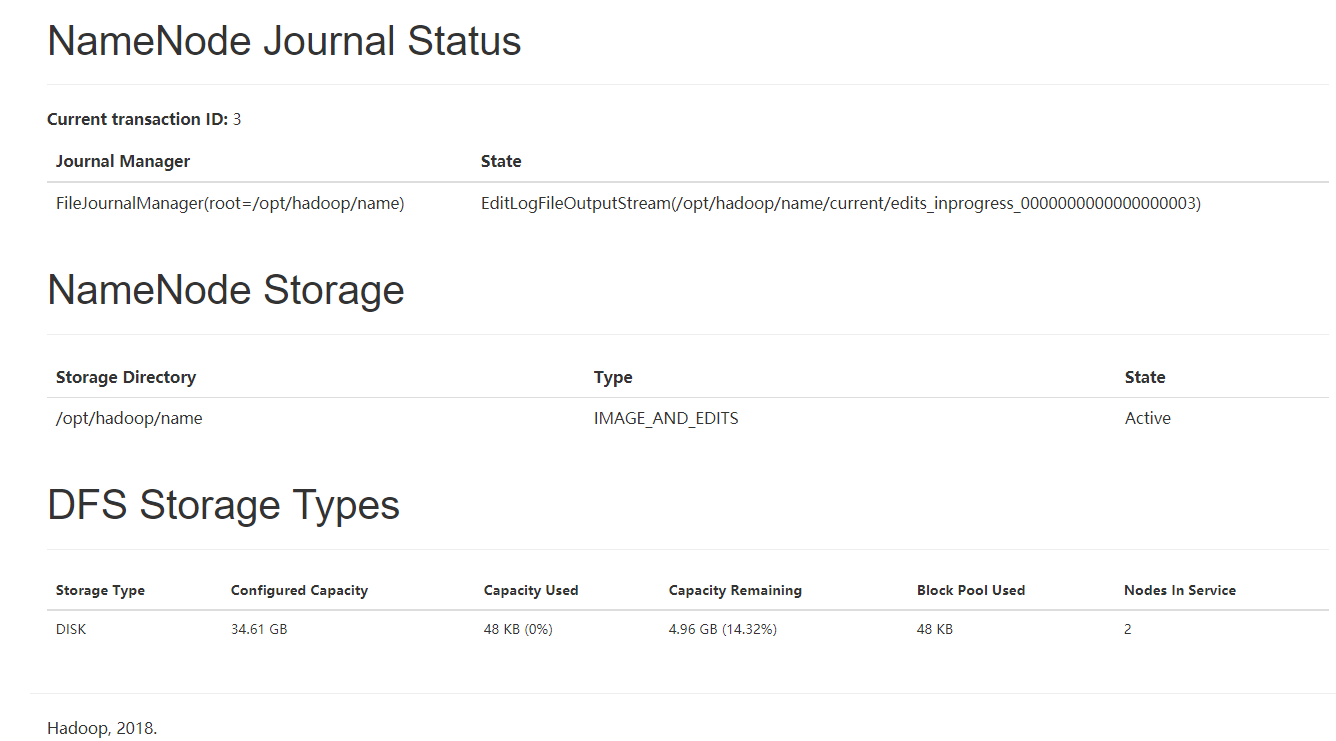

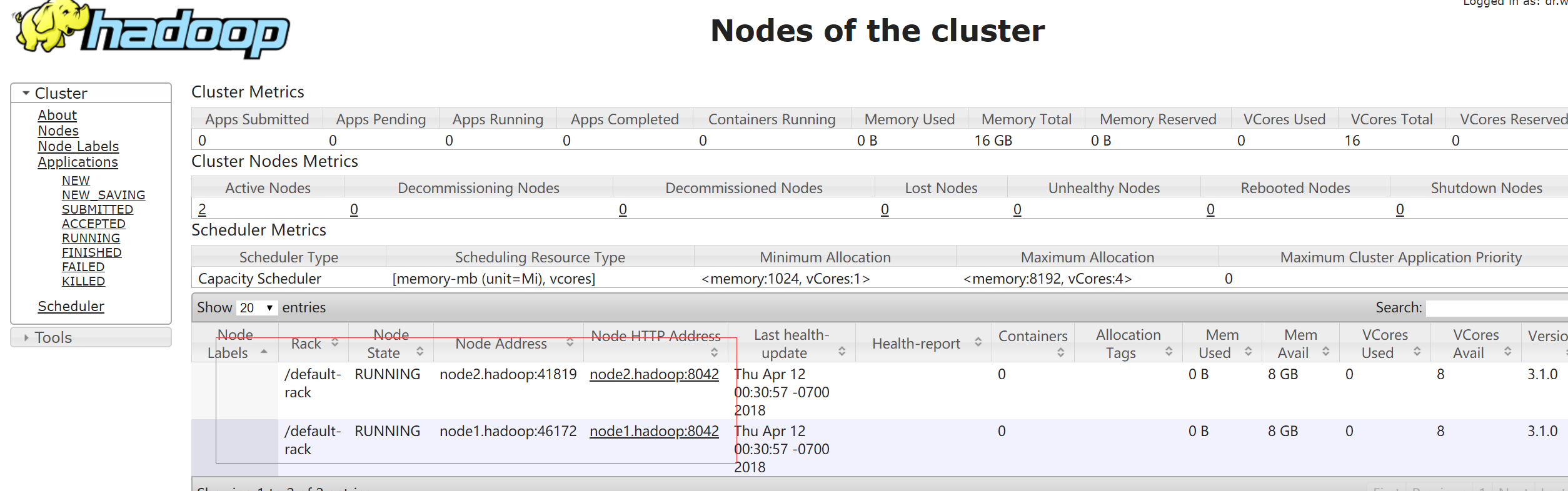
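The same check can be scripted from jps output, which prints one "<pid> <MainClass>" line per JVM. The sketch below assumes the daemon layout configured above. Master should run NameNode and ResourceManager. node1 should run DataNode, SecondaryNameNode, and NodeManager. node2 should run DataNode and NodeManager. The function name missing_daemons is hypothetical.

```bash
#!/usr/bin/env bash
# Sketch: read jps output on stdin and print each expected daemon that is absent.
# missing_daemons DAEMON...   (hypothetical helper; returns 1 if any is missing)
missing_daemons() {
  local jps_out d rc=0
  jps_out=$(cat)
  for d in "$@"; do
    # Column 2 of jps output is the main class name.
    if ! printf '%s\n' "$jps_out" | awk '{print $2}' | grep -qxF "$d"; then
      echo "$d"
      rc=1
    fi
  done
  return $rc
}
```

Usage from master: ssh node1 'source /etc/profile; jps' | missing_daemons DataNode SecondaryNameNode NodeManager. The profile is sourced because a non-interactive SSH command may not have the JDK on its PATH.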

Check in the web UI:

Test HDFS (NameNode web UI):

http://192.168.234.129:50070/dfshealth.html#tab-overview

Test YARN (ResourceManager web UI):

http://192.168.234.129:8088/cluster/nodes

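IV. Summary of Installation Problems

1) In Hadoop 3.1, the slaves configuration file is named workers.

2) Starting or stopping HDFS fails with an error saying that the user is not defined

Solution: add the following definitions at the top of sbin/start-dfs.sh, right after the shebang line: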

[root@master sbin]# head -5 start-dfs.sh

#!/usr/bin/env bash

HDFS_DATANODE_USER=root

HDFS_DATANODE_SECURE_USER=hdfs

HDFS_NAMENODE_USER=root

HDFS_SECONDARYNAMENODE_USER=root

Add the same definitions to stop-dfs.sh.

3) Starting or stopping YARN fails with an error saying that the user is not defined

[root@master sbin]# head -5 start-yarn.sh

#!/usr/bin/env bash

YARN_RESOURCEMANAGER_USER=root

HADOOP_SECURE_DN_USER=yarn

YARN_NODEMANAGER_USER=root

Add the same definitions to stop-yarn.sh.
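The edits in 2) and 3) insert the same variable definitions right after the first line of four start/stop scripts. The sketch below does this without duplicating lines that are already present. It assumes the first line of each script is its shebang. It writes back with cat so the script keeps its executable permission. The function name add_user_vars is hypothetical.

```bash
#!/usr/bin/env bash
# Sketch: insert VAR=VALUE lines right after the first (shebang) line of SCRIPT,
# skipping any definition already present as a whole line.
# add_user_vars SCRIPT VAR=VALUE...   (hypothetical helper)
add_user_vars() {
  local script="$1"; shift
  local kv tmp
  tmp=$(mktemp) || return 1
  {
    head -n 1 "$script"                  # keep "#!/usr/bin/env bash" first
    for kv in "$@"; do
      grep -qxF -- "$kv" "$script" || printf '%s\n' "$kv"
    done
    tail -n +2 "$script"                 # the rest of the original script
  } > "$tmp" && cat "$tmp" > "$script"   # cat keeps the file's permissions
  rm -f "$tmp"
}
```

Usage, with the definitions from 2): for f in start-dfs.sh stop-dfs.sh; do add_user_vars /opt/hadoop/sbin/$f HDFS_DATANODE_USER=root HDFS_DATANODE_SECURE_USER=hdfs HDFS_NAMENODE_USER=root HDFS_SECONDARYNAMENODE_USER=root; done. Apply the definitions from 3) to start-yarn.sh and stop-yarn.sh the same way.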

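4) The DataNode fails to start

Cause: each time the NameNode is formatted it gets new IDs, so formatting it more than once leaves the IDs stored on the DataNodes out of date. Check whether the IDs in data/current/VERSION match those in name/current/VERSION. In Hadoop 2.x/3.x, data/current/VERSION records clusterID, which must equal clusterID in name/current/VERSION; namespaceID is kept per block pool under data/current/BP-*/current/VERSION.

Method 1: delete the data and name directories on every node, then reformat. Note that this erases everything stored in HDFS.

Method 2: on each node whose DataNode did not start, compare data/current/VERSION with name/current/VERSION as described above. If the IDs differ, change the value under data to match the one under name.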
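The comparison in method 2 can be scripted. VERSION files are plain key=value text, so the sketch below reads both files and reports any differing clusterID or namespaceID. It compares a key only when the key appears in both files. The helper names read_version_field and compare_ids are hypothetical.

```bash
#!/usr/bin/env bash
# read_version_field FILE KEY: print the value of KEY=... from a VERSION file.
read_version_field() {
  sed -n "s/^$2=//p" "$1" | head -n 1
}

# compare_ids NAME_VERSION DATA_VERSION: report IDs that differ between the two.
compare_ids() {
  local name_v="$1" data_v="$2" key a b rc=0
  for key in clusterID namespaceID; do
    a=$(read_version_field "$name_v" "$key")
    b=$(read_version_field "$data_v" "$key")
    # Skip keys that are missing from either file.
    if [ -n "$a" ] && [ -n "$b" ] && [ "$a" != "$b" ]; then
      echo "$key differs: name=$a data=$b"
      rc=1
    fi
  done
  return $rc
}
```

Usage on master: first copy the slave's file with scp node1:/opt/hadoop/data/current/VERSION /tmp/node1.VERSION. Then run compare_ids /opt/hadoop/name/current/VERSION /tmp/node1.VERSION.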

Or see: https://blog.csdn.net/hackerwin7/article/details/19973045

5) The Summary section of the HDFS web UI is empty

Solution: make sure /etc/hosts on every node is identical to the one on master.

6) hdfs dfsadmin -report fails with an error

Solution:

Comment out the ::1 line in /etc/hosts:

#::1 localhost localhost.localdomain localhost6 localhost6.localdomain6

Then restart the cluster: run ./sbin/stop-all.sh, then ./sbin/start-all.sh.
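Commenting out the ::1 line has to be done on every node, so a one-line sed edit is convenient. The sketch below prefixes # to any uncommented line that starts with ::1 and keeps a backup as FILE.bak. Running it twice does no harm. The function name is hypothetical.

```bash
#!/usr/bin/env bash
# Sketch: comment out the IPv6 localhost (::1) line of a hosts file.
# comment_out_ipv6_localhost FILE   (hypothetical helper; backup in FILE.bak)
comment_out_ipv6_localhost() {
  # '&' is the matched text, so "#&" prepends '#'. Lines already starting
  # with '#' do not match. -i.bak works with both GNU and BSD sed.
  sed -i.bak 's/^[[:space:]]*::1[[:space:]]/#&/' "$1"
}
```

Usage on each node: comment_out_ipv6_localhost /etc/hosts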

For details, see https://blog.csdn.net/xiaoshunzi111/article/details/52366854

7) WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform...

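Solution: add log4j.logger.org.apache.hadoop.util.NativeCodeLoader=ERROR to etc/hadoop/log4j.properties. This only hides the warning; Hadoop keeps using its built-in Java classes instead of the native library.

For details, see https://blog.csdn.net/l1028386804/article/details/51538611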