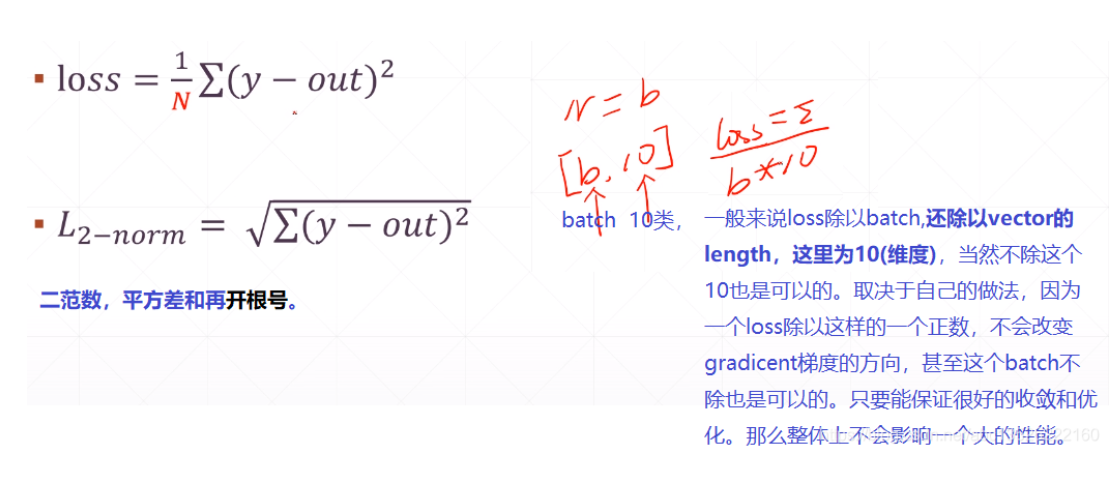

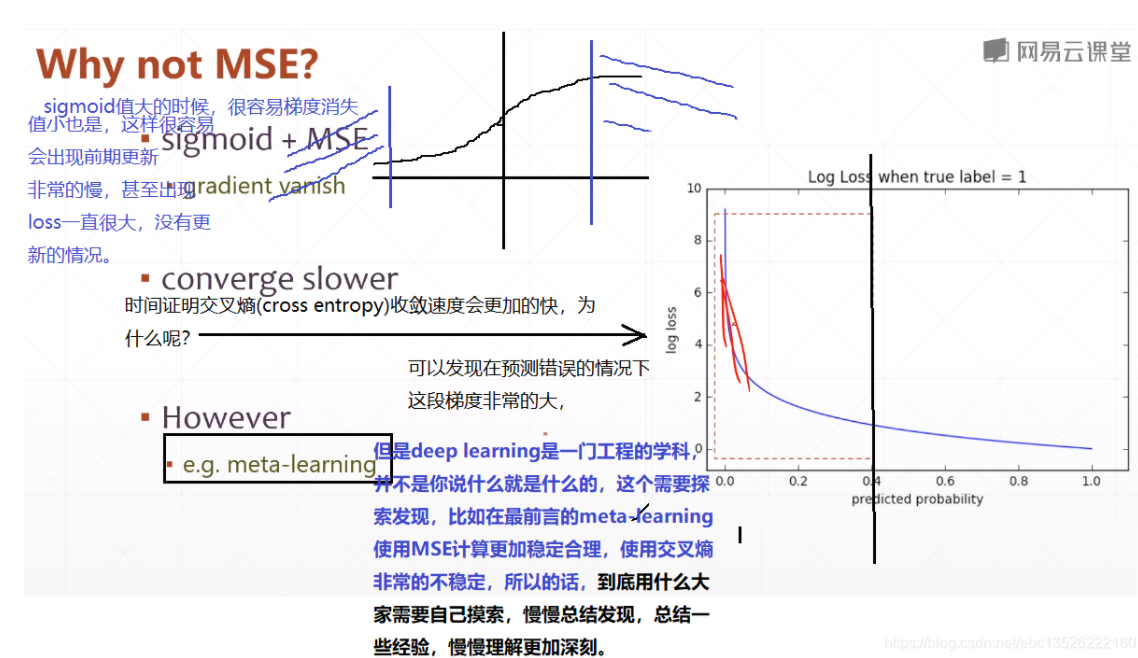

I. Mean Squared Error (MSE)

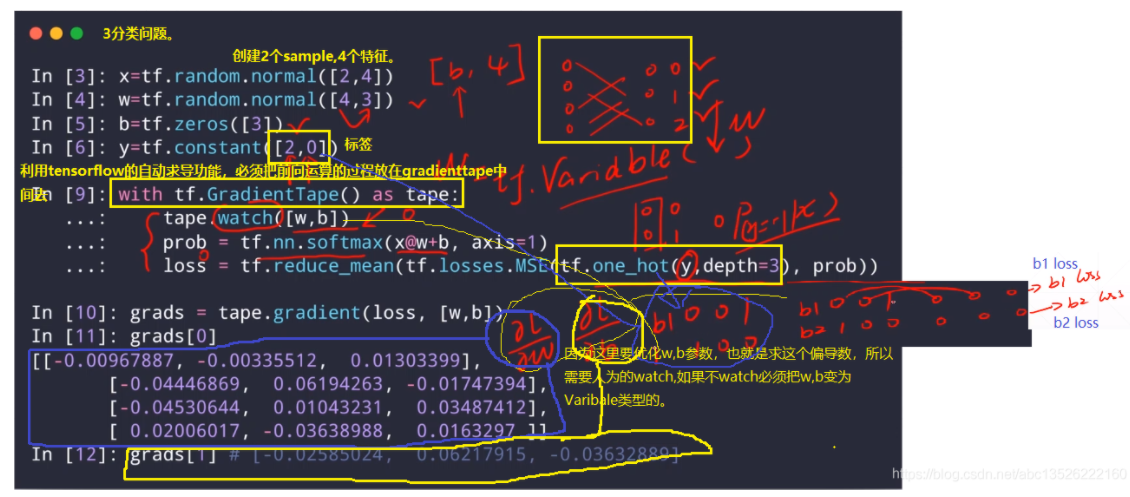

```python
import tensorflow as tf

x = tf.random.normal([2, 4])   # 2 samples, 4 features each
w = tf.random.normal([4, 3])
b = tf.zeros([3])
y = tf.constant([2, 0])        # ground-truth labels

with tf.GradientTape() as tape:
    tape.watch([w, b])         # w and b are plain tensors, so they must be watched explicitly
    prob = tf.nn.softmax(x @ w + b, axis=1)
    loss = tf.reduce_mean(tf.losses.MSE(tf.one_hot(y, depth=3), prob))

grads = tape.gradient(loss, [w, b])
print(grads[0], '\n')
print(grads[1])
```

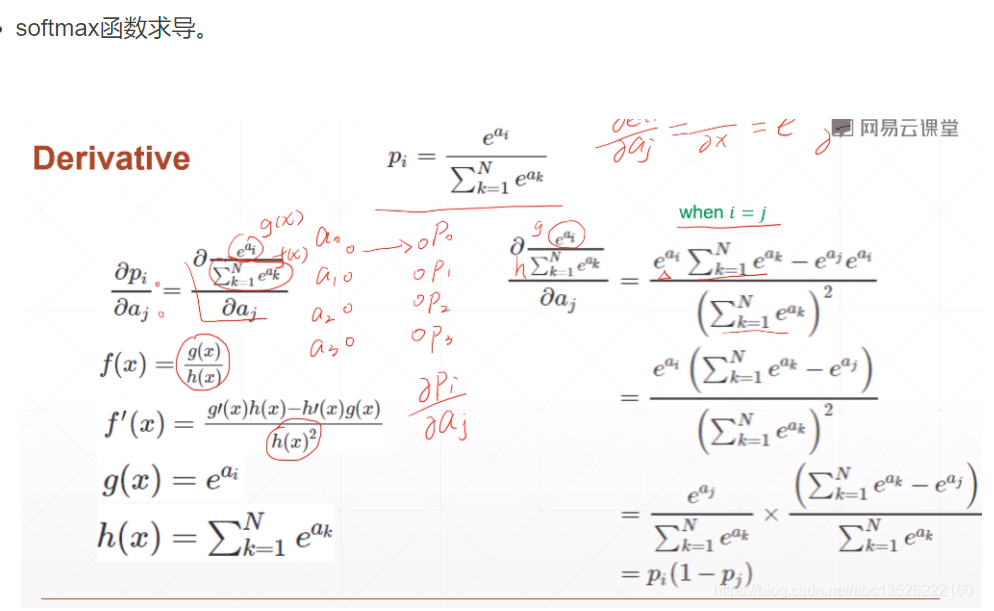

II. Cross-Entropy

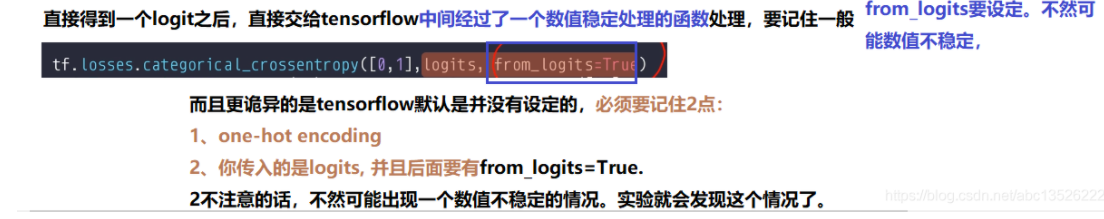

```python
import tensorflow as tf

x = tf.random.normal([2, 4])   # 2 samples, 4 features each
w = tf.random.normal([4, 3])
b = tf.zeros([3])
y = tf.constant([2, 0])        # ground-truth labels for the 2 samples

with tf.GradientTape() as tape:
    tape.watch([w, b])
    logits = x @ w + b
    # from_logits=True: the loss applies softmax internally
    loss = tf.reduce_mean(
        tf.losses.categorical_crossentropy(
            tf.one_hot(y, depth=3), logits, from_logits=True))

grads = tape.gradient(loss, [w, b])
print("Gradient w.r.t. w:\n", grads[0])
print("Gradient w.r.t. b:\n", grads[1])
```

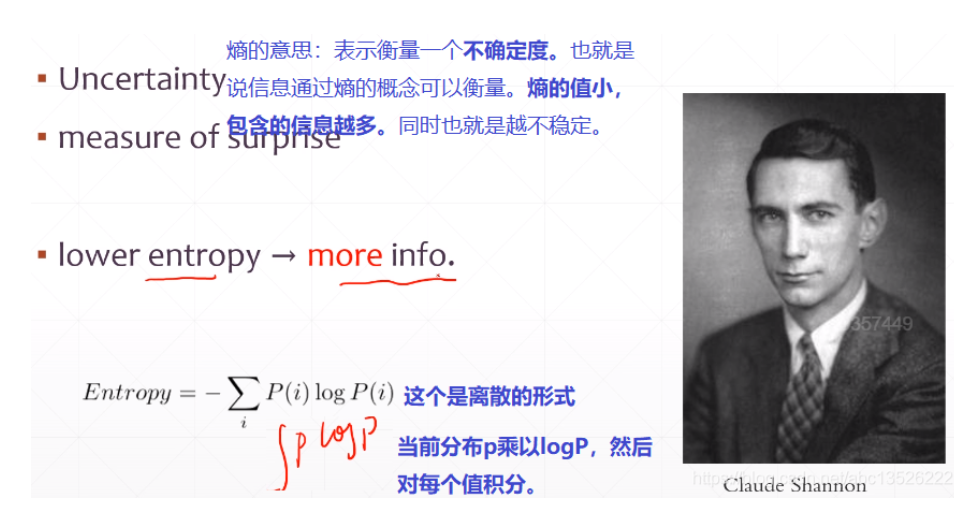
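As a cross-check on the gradients that `tape.gradient` returns above, the following is a minimal pure-Python sketch (no TensorFlow) of the standard result that, for a single sample, the derivative of softmax cross-entropy with respect to the logits is `softmax(logits) - one_hot(label)`. The helper names and the example logits are hypothetical, chosen only for illustration; the analytic gradient is compared against a central finite difference.

```python
import math

def softmax(logits):
    """Numerically stable softmax over a list of floats."""
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]

def cross_entropy(logits, label):
    """Cross-entropy of one sample: -log(softmax(logits)[label])."""
    return -math.log(softmax(logits)[label])

def analytic_grad(logits, label):
    """d(loss)/d(logits) = softmax(logits) - one_hot(label)."""
    p = softmax(logits)
    return [p_i - (1.0 if i == label else 0.0) for i, p_i in enumerate(p)]

def numeric_grad(logits, label, eps=1e-6):
    """Central finite-difference estimate of the same gradient."""
    grad = []
    for i in range(len(logits)):
        up = list(logits)
        down = list(logits)
        up[i] += eps
        down[i] -= eps
        grad.append((cross_entropy(up, label) - cross_entropy(down, label)) / (2 * eps))
    return grad

logits = [1.0, -0.5, 2.0]  # hypothetical logits for one sample
label = 2                  # hypothetical true class index

print("analytic:", analytic_grad(logits, label))
print("numeric: ", numeric_grad(logits, label))
```

In the TensorFlow code above the loss is additionally averaged over the batch with `tf.reduce_mean`, so each sample's contribution is scaled by 1/N before it is propagated back to `w` and `b`.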

III. The Concept of Entropy

```python
import os
os.environ['TF_CPP_MIN_LOG_LEVEL'] = '2'  # set before importing TensorFlow so it takes effect

import tensorflow as tf

# Uniform distribution: maximum uncertainty
a = tf.fill([4], 0.25)
b = tf.math.log(a) / tf.math.log(2.)      # tf.math.log is base e; divide by log(2) for base 2
print(b)
print(-tf.reduce_sum(a * b).numpy())

# One class clearly dominates: lower entropy
a1 = tf.constant([0.1, 0.1, 0.1, 0.7])
b1 = tf.math.log(a1) / tf.math.log(2.)
print(-tf.reduce_sum(a1 * b1).numpy())

# Almost certain outcome: entropy close to 0
a2 = tf.constant([0.01, 0.01, 0.01, 0.97])
b2 = tf.math.log(a2) / tf.math.log(2.)
print(-tf.reduce_sum(a2 * b2).numpy())
```
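The quantity computed in the snippet above is the Shannon entropy in bits, H(p) = -Σ p_i log2(p_i). The following minimal pure-Python sketch evaluates it for the same three distributions; the helper name `entropy_bits` is hypothetical and not part of TensorFlow.

```python
import math

def entropy_bits(probs):
    """Shannon entropy in bits: -sum(p * log2(p)), skipping zero-probability terms."""
    return -sum(p * math.log2(p) for p in probs if p > 0)

uniform = [0.25, 0.25, 0.25, 0.25]
skewed = [0.1, 0.1, 0.1, 0.7]
peaked = [0.01, 0.01, 0.01, 0.97]

print(entropy_bits(uniform))  # 2.0 bits: four equally likely outcomes
print(entropy_bits(skewed))   # roughly 1.36 bits
print(entropy_bits(peaked))   # roughly 0.24 bits
```

The more the probability mass concentrates on a single outcome, the lower the entropy, which is the trend the three TensorFlow printouts illustrate.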

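```python
import os
os.environ['TF_CPP_MIN_LOG_LEVEL'] = '2'  # set before importing TensorFlow so it takes effect

import tensorflow as tf

# Class form (capitalized name): create an instance first, then call it
criteon = tf.losses.BinaryCrossentropy()
loss = criteon([1], [0.1])
print(loss)

# Function form (lowercase name): call it directly
loss1 = tf.losses.binary_crossentropy([1], [0.1])
print(loss1)
```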
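For reference, binary cross-entropy for a single prediction is -[y log(p) + (1 - y) log(1 - p)]. The sketch below is a plain-Python version of that formula, assuming 0 < p < 1; the function is a hypothetical helper and not the Keras implementation, which also clips predictions by a small epsilon, so its result can differ in the last digits.

```python
import math

def binary_crossentropy(y_true, y_pred):
    """Binary cross-entropy for one prediction, assuming 0 < y_pred < 1."""
    return -(y_true * math.log(y_pred) + (1 - y_true) * math.log(1 - y_pred))

# Same inputs as the TensorFlow calls above: true label 1, predicted probability 0.1
print(binary_crossentropy(1, 0.1))  # about 2.3026, i.e. -ln(0.1)

# A confident correct prediction gives a much smaller loss
print(binary_crossentropy(1, 0.9))  # about 0.1054, i.e. -ln(0.9)
```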

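```python
import os
os.environ['TF_CPP_MIN_LOG_LEVEL'] = '2'  # set before importing TensorFlow so it takes effect

import tensorflow as tf

x = tf.random.normal([1, 784])
w = tf.random.normal([784, 2])
b = tf.zeros([2])

logits = x @ w + b
print("Forward pass output:", logits.numpy())

prob = tf.math.softmax(logits, axis=1)
print("After softmax:", prob.numpy())

# Pass the raw logits with from_logits=True instead of the softmax output;
# the one-hot label is given shape [1, 2] to match the logits
loss = tf.losses.categorical_crossentropy([[0., 1.]], logits, from_logits=True)
print("Cross-entropy loss: {0}".format(loss))
```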
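A common motivation for passing raw logits with `from_logits=True`, rather than a separately computed softmax, is numerical stability. The sketch below illustrates the idea in plain Python with the log-sum-exp trick; it is an illustration under that assumption, not TensorFlow's actual implementation, and the function names and logit values are hypothetical.

```python
import math

def log_softmax(logits):
    """Stable log-softmax: subtract the max before exponentiating (log-sum-exp trick)."""
    m = max(logits)
    lse = m + math.log(sum(math.exp(z - m) for z in logits))
    return [z - lse for z in logits]

def crossentropy_from_logits(one_hot, logits):
    """Cross-entropy computed directly from logits: -sum(y * log_softmax(logits))."""
    return -sum(y * lp for y, lp in zip(one_hot, log_softmax(logits)))

# Hypothetical large logits: a naive math.exp(1000.0) would overflow,
# but the shifted computation only ever evaluates exp(0) and exp(-2)
logits = [1000.0, 1002.0]
loss = crossentropy_from_logits([0.0, 1.0], logits)
print(loss)  # about 0.1269, i.e. log(1 + exp(-2))
```

With random inputs of dimension 784, as in the snippet above, the logits can be large in magnitude, which is the kind of case where working in log space helps.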