I. What Kubernetes Is and Its Architecture

1.1 What is Kubernetes?

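Kubernetes is a container orchestration engine open-sourced by Google. It supports automated deployment, large-scale scaling, and the management of containerized applications. When an application is deployed in production, you usually run several instances of it so that requests can be load-balanced across them. In Kubernetes we can create multiple containers, each running one instance of the application, and then use the built-in load-balancing mechanisms to manage, discover, and access this group of instances, without operators having to do complex manual configuration. Kubernetes is abbreviated as k8s: the 8 replaces the eight letters "ubernete". That is enough background; search for the details yourself!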

1.2 Kubernetes architecture and its components

Without further ado, let's start with a diagram (taken from the official site): https://kubernetes.io/zh/docs/concepts/overview/components/

The diagram above shows a Kubernetes cluster with all of its interrelated components. Each one is introduced below.

Control plane components

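Control plane components make global decisions about the cluster (for example, scheduling), and they detect and respond to cluster events (for example, starting a new pod when a Deployment's replicas field is not satisfied). Control plane components can run on any node in the cluster. For simplicity, however, setup scripts typically start all control plane components on the same machine and do not run user containers on it. The control plane mainly consists of the following components: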

- kube-apiserver

- kube-scheduler

- kube-controller-manager

- etcd

kube-apiserver

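The API server is the control plane component that exposes the Kubernetes API; it is the front end of the Kubernetes control plane. The main implementation of a Kubernetes API server is kube-apiserver. kube-apiserver is designed to scale horizontally, which means it scales by deploying more instances. You can run several instances of kube-apiserver and balance traffic between them. Default port: 6443.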

kube-scheduler

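A control plane component that watches for newly created Pods with no assigned node and selects a node for them to run on. Scheduling decisions take into account individual and collective resource requirements, hardware/software/policy constraints, affinity and anti-affinity specifications, data locality, inter-workload interference, and deadlines. Default port: 10251 (the insecure HTTP port; the secure port is 10259).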

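The scheduling steps are as follows:

- The scheduling algorithm picks the most suitable node from the available nodes for each pod in the list of pods waiting to be scheduled.

- The kubelet on that node watches the API server and sees the pod binding produced by the scheduler. It then fetches the pod spec, pulls the image, and starts the containers.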

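Node selection (priority) policies:

- LeastRequestedPriority: prefer the node with the lowest resource consumption

- CalculateNodeLabelPriority: prefer nodes that carry a specified label

- BalancedResourceAllocation: among the candidate nodes, prefer the one whose resource utilization is most balanced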
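This is a minimal sketch of how the legacy scheduler scored the two resource-based policies above, each on a 0-10 scale. The function names are hypothetical. Inputs are integers (CPU in millicores, memory in MiB), and integer division truncates, so treat the results as an illustration rather than the scheduler's exact output.

```shell
# Hypothetical helpers approximating the legacy 0-10 priority scores.
# Inputs are integers: CPU in millicores, memory in MiB. "requested" means
# the requests already on the node plus the pod being scheduled.

# least_requested_one REQUESTED CAPACITY -> (capacity - requested) * 10 / capacity
least_requested_one() {
    req=$1 cap=$2
    if [ "$cap" -le 0 ] || [ "$req" -gt "$cap" ]; then
        echo 0
    else
        echo $(( (cap - req) * 10 / cap ))
    fi
}

# least_requested CPU_REQ CPU_CAP MEM_REQ MEM_CAP -> average of the CPU and memory scores
least_requested() {
    cpu=$(least_requested_one "$1" "$2")
    mem=$(least_requested_one "$3" "$4")
    echo $(( (cpu + mem) / 2 ))
}

# balanced_allocation CPU_REQ CPU_CAP MEM_REQ MEM_CAP
# 10 - |cpuFraction - memFraction| * 10, with fractions kept in per-mille for
# integer math; a node where either fraction reaches 1 scores 0.
balanced_allocation() {
    cpu_pm=$(( $1 * 1000 / $2 ))
    mem_pm=$(( $3 * 1000 / $4 ))
    if [ "$cpu_pm" -ge 1000 ] || [ "$mem_pm" -ge 1000 ]; then
        echo 0
        return
    fi
    diff=$(( cpu_pm - mem_pm ))
    if [ "$diff" -lt 0 ]; then diff=$(( -diff )); fi
    echo $(( (1000 - diff) * 10 / 1000 ))
}
```

For example, a node with 500m of 1000m CPU and 1024 of 4096 MiB memory requested gets 6 from `least_requested 500 1000 1024 4096` and 7 from `balanced_allocation 500 1000 1024 4096`. In Kubernetes 1.21, the version installed later, these policies exist as the NodeResourcesLeastAllocated and NodeResourcesBalancedAllocation score plugins, which use a 0-100 range.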

kube-controller-manager

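It bundles a number of sub-controllers (the replication controller, node controller, namespace controller, service account controller, and so on) and acts as the management and control center inside the cluster. It manages Nodes, Pod replicas, service endpoints, namespaces, service accounts, and resource quotas. When a Node goes down unexpectedly, the controller manager detects it promptly and runs the automatic recovery process, so that the number of pod replicas in the cluster always matches the desired state.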

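Characteristics:

- Node status is checked every 5s (--node-monitor-period).

- If no heartbeat is received from a node, the node is marked unreachable.

- The controller manager waits 40s before marking a node unreachable (--node-monitor-grace-period).

- If the node is still not back 5 minutes after being marked unreachable (--pod-eviction-timeout, default 5m), the controller manager evicts all pods on that node, and their controllers recreate them on other available nodes.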
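This is a simplified model of the timing above, assuming the listed defaults. The hypothetical `node_phase` function takes the number of seconds since a node's last heartbeat and reports what the controller manager would do. The real node lifecycle controller also applies taints, eviction rate limits, and zone health rules, none of which are modelled here. In 1.21, eviction is driven by NoExecute taints whose default toleration is 300 seconds, which gives the same 5-minute window.

```shell
# Defaults from the list above (kube-controller-manager flags); hypothetical model.
# The status check itself runs every 5s (--node-monitor-period).
NODE_MONITOR_GRACE=40        # --node-monitor-grace-period: silence tolerated before Unknown
POD_EVICTION_TIMEOUT=300     # --pod-eviction-timeout: 5 minutes

# node_phase SECONDS_SINCE_LAST_HEARTBEAT
node_phase() {
    age=$1
    if [ "$age" -le "$NODE_MONITOR_GRACE" ]; then
        echo "Ready"
    elif [ "$age" -le $(( NODE_MONITOR_GRACE + POD_EVICTION_TIMEOUT )) ]; then
        echo "Unknown"       # marked unreachable, pods are still bound to the node
    else
        echo "Evicting"      # pods evicted; their controllers recreate them elsewhere
    fi
}
```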

etcd

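etcd is a consistent and highly available key-value store that serves as the backing store for all Kubernetes cluster data. A Kubernetes cluster's etcd usually needs a high-availability setup and a backup plan. In production it can run on dedicated servers, normally with an odd number of members such as 3, 5, or 7.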
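The odd count follows from etcd's commit rule: a write commits only when a majority (quorum) of members agrees, so the cluster stays available while a quorum is alive. The small helpers below (hypothetical names) compute both figures. They show that an even-sized cluster tolerates no more failures than the odd size just below it.

```shell
# Hypothetical helpers for etcd cluster sizing.
# etcd_quorum N: majority of members that must agree for a write to commit
etcd_quorum() {
    echo $(( $1 / 2 + 1 ))
}

# etcd_fault_tolerance N: members that can fail while a quorum still exists
etcd_fault_tolerance() {
    echo $(( $1 - ($1 / 2 + 1) ))
}

for n in 1 2 3 4 5 6 7; do
    printf '%s members: quorum %s, tolerates %s failure(s)\n' \
        "$n" "$(etcd_quorum "$n")" "$(etcd_fault_tolerance "$n")"
done
```

Three members tolerate one failure and five tolerate two, while four members still tolerate only one. The extra member adds cost without adding resilience.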

Worker node components:

kubelet

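An agent that runs on every node in the cluster. It makes sure that containers are running in a Pod.

Its specific functions are:

- reporting the node's status to the master (kube-apiserver)

- accepting instructions and creating the pod's Docker containers

- preparing the data volumes the pod needs

- reporting pod status

- running container health checks on the node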

kube-proxy

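kube-proxy is a network proxy that runs on every node in the cluster and implements part of the Kubernetes Service concept. kube-proxy maintains network rules on the node, and these rules allow network sessions inside or outside the cluster to communicate with Pods. If the operating system provides a packet filtering layer and it is available, kube-proxy uses it to implement the rules; otherwise kube-proxy forwards the traffic itself.

Depending on the version, three proxy modes are supported:

- userspace: used up to Kubernetes 1.1; no longer the default from 1.2 on

- iptables: supported since 1.1, the default since 1.2

- ipvs: introduced in 1.8 (beta in 1.9), GA in 1.11; requires the ipvsadm and ipset packages and the ip_vs kernel modules to be loaded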
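Before switching kube-proxy to ipvs mode, check that the kernel modules are loaded. The sketch below reads `lsmod` output on standard input and prints every required module that is missing. The module list (ip_vs, the rr/wrr/sh schedulers, and nf_conntrack) is a common choice, not an exhaustive requirement. On kernels older than 4.19 the last module is nf_conntrack_ipv4. `missing_ipvs_modules` is a hypothetical helper.

```shell
# Modules commonly needed by kube-proxy in ipvs mode (assumed list; on kernels
# older than 4.19, nf_conntrack_ipv4 replaces nf_conntrack).
IPVS_MODULES="ip_vs ip_vs_rr ip_vs_wrr ip_vs_sh nf_conntrack"

# missing_ipvs_modules < lsmod-output
# Hypothetical helper: prints each required module absent from lsmod's first column.
missing_ipvs_modules() {
    loaded=$(awk 'NR > 1 { print $1 }')     # skip the "Module Size Used by" header
    for m in $IPVS_MODULES; do
        printf '%s\n' "$loaded" | grep -qx "$m" || echo "$m"
    done
}
```

On a node, `lsmod | missing_ipvs_modules` prints nothing when everything is loaded. Any module it lists can be loaded with `modprobe -- <module>`.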

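Add-ons

Add-ons use Kubernetes resources (DaemonSet, Deployment, and so on) to implement cluster features. Because they provide cluster-level features, the namespaced resources of add-ons belong to the kube-system namespace.

A few of the many add-ons are described below. For the complete list, see Addons.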

DNS

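Although the other add-ons are not strictly required, almost every Kubernetes cluster should have cluster DNS, because many examples rely on it. Cluster DNS is a DNS server that works alongside the other DNS servers in your environment and serves DNS records for Kubernetes Services. Containers started by Kubernetes automatically include this DNS server in their DNS searches.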
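Cluster DNS names follow the pattern `<service>.<namespace>.svc.<cluster-domain>`. The default domain is cluster.local, and the cluster built later in this article uses magedu.local. The two hypothetical helpers below build a Service's full name. They also build the search list that a pod with the default ClusterFirst DNS policy gets in /etc/resolv.conf; any search domains inherited from the node follow that list.

```shell
# svc_fqdn SERVICE NAMESPACE [CLUSTER_DOMAIN]   (hypothetical helper)
svc_fqdn() {
    echo "$1.$2.svc.${3:-cluster.local}"
}

# search_domains NAMESPACE [CLUSTER_DOMAIN]      (hypothetical helper)
# The cluster part of a pod's resolv.conf search list.
search_domains() {
    d=${2:-cluster.local}
    echo "$1.svc.$d svc.$d $d"
}
```

Because of that search list, a pod in the default namespace can reach the API server simply as `kubernetes`. The name expands to kubernetes.default.svc.magedu.local in this cluster.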

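Web UI (Dashboard)

Dashboard is a general-purpose, web-based UI for Kubernetes clusters. It lets users manage and troubleshoot both the applications running in the cluster and the cluster itself.

Container resource monitoring

Container resource monitoring records generic time-series metrics about containers in a central database and provides a UI for browsing that data.

Cluster-level logging

A cluster-level logging mechanism saves container logs to a central log store that offers a search and browsing interface.

Finally, here is one more Kubernetes architecture diagram: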

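1.3 How a pod is scheduled when it is created in Kubernetes

A Pod is the most basic unit of deployment and scheduling in Kubernetes. It holds one or more containers and logically represents one instance of an application. For example, suppose a web application consists of a front end, a back end, and a database, each running in its own container. We could then create one pod containing those three containers. This section briefly analyzes the basic processing flow in Kubernetes. Start with the flowchart below: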

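- 1. A client (kubectl, a REST API client, and so on) runs kubectl create for the pod, which sends a POST request to kube-apiserver

- 2. kube-apiserver receives the request:

- 2.1. The request is decoded and passes authentication, authorization, timeout handling, and auditing

- 2.2. The pod request enters the MUX and routing stage. The API server matches it to the definition of the Pod type and converts the request body into an internal-version (the "super version") object

- 2.3. The API server runs admission control (for example, adding labels or injecting sidecar containers) and validates each field

- 2.4. The API server converts the validated object from the internal version back to its versioned form, serializes it, and calls the etcd API to store it. The stored, still unscheduled pod is then picked up by the scheduler

- 3. On the scheduler side:

- 3.1. The scheduler watches the API server and adds the new pod to its scheduling queue

- 3.2. The scheduler tries to schedule the pod: it filters the nodes that fit, scores them, and picks the highest-scoring node

- 3.3. The scheduler binds the pod to that node. The binding is sent to the API server and saved to etcd, which completes scheduling

- 4. On the worker node:

- 4.1. The kubelet watches for pod objects bound to its own node and caches the pod information in memory (the pod cache)

- 4.2. By comparing the pod object with its in-memory state, the kubelet determines that this is a newly scheduled pod

- 4.3. The kubelet starts a pod update worker (a separate goroutine). The worker processes the pod object, generates the corresponding pod status, and checks whether the volumes and network declared by the pod are ready

- 4.4. The kubelet calls the container runtime through the Container Runtime Interface (CRI) to create the containers defined in the pod. For Docker, this goes through the kubelet's built-in dockershim

- 4.5. The container runtime (Docker here) responds to the request and creates the containers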

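That was a lot, so here is a condensed version:

1. The user submits a request to create a Pod through the API server's REST API or with the kubectl command-line tool. Both JSON and YAML are supported.
2. The API server processes the request and stores the Pod data in etcd.
3. Through its watch on the API server, the scheduler sees the new pod and tries to bind it to a Node.
4. Filtering: the scheduler applies a set of rules to filter out unsuitable hosts. For example, if the Pod specifies the resources it needs, hosts without enough free resources are filtered out.
5. Scoring: the hosts that passed filtering are scored. In this phase the scheduler considers overall optimization policies, such as spreading the replicas of a Replication Controller across different hosts or preferring the least-loaded host.
6. Selection: the highest-scoring host is chosen and the binding is performed. The result is stored in etcd.
7. The kubelet creates the Pod according to the scheduling result. The kubelet on each worker node watches the API server for pods bound to its node. When a new one appears, the kubelet calls the container runtime to create and start the pod's containers (with Docker, the equivalent of docker run).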
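To follow this flow end to end, submit a Pod and watch it being scheduled. The hypothetical `write_pod_manifest` helper writes a minimal single-container Pod manifest. The pod name `net-test` and the busybox image pushed to Harbor later in this article are assumptions; any image works.

```shell
# write_pod_manifest NAME IMAGE FILE
# Hypothetical helper: writes a minimal single-container Pod manifest to FILE.
write_pod_manifest() {
    cat > "$3" <<EOF
apiVersion: v1
kind: Pod
metadata:
  name: $1
  labels:
    app: $1
spec:
  containers:
    - name: $1
      image: $2
      # keep the container alive so it can be exec'ed into for network tests
      command: ["sleep", "3600"]
EOF
}
```

After `write_pod_manifest net-test harbor.magedu.net/baseimages/busybox:latest net-test.yaml`, run `kubectl apply -f net-test.yaml`. Then `kubectl get pod net-test -o wide` shows the NODE the scheduler chose. `kubectl get events --field-selector involvedObject.name=net-test` lists the Scheduled, Pulling/Pulled, Created, and Started events that correspond to steps 3 to 7.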

II. Deploying a Cluster with kubeasz

2.1 Hosts used in this lab:

| 主机IP地址 | 角色 | 操作系统 | 备注 |

| 192.168.11.111 | 部署和客户端 | Ubuntu20.04 | |

| 192.168.11.120 | master节点 | Ubuntu20.04 | |

| 192.168.11.130 | node节点 | Ubuntu20.04 | |

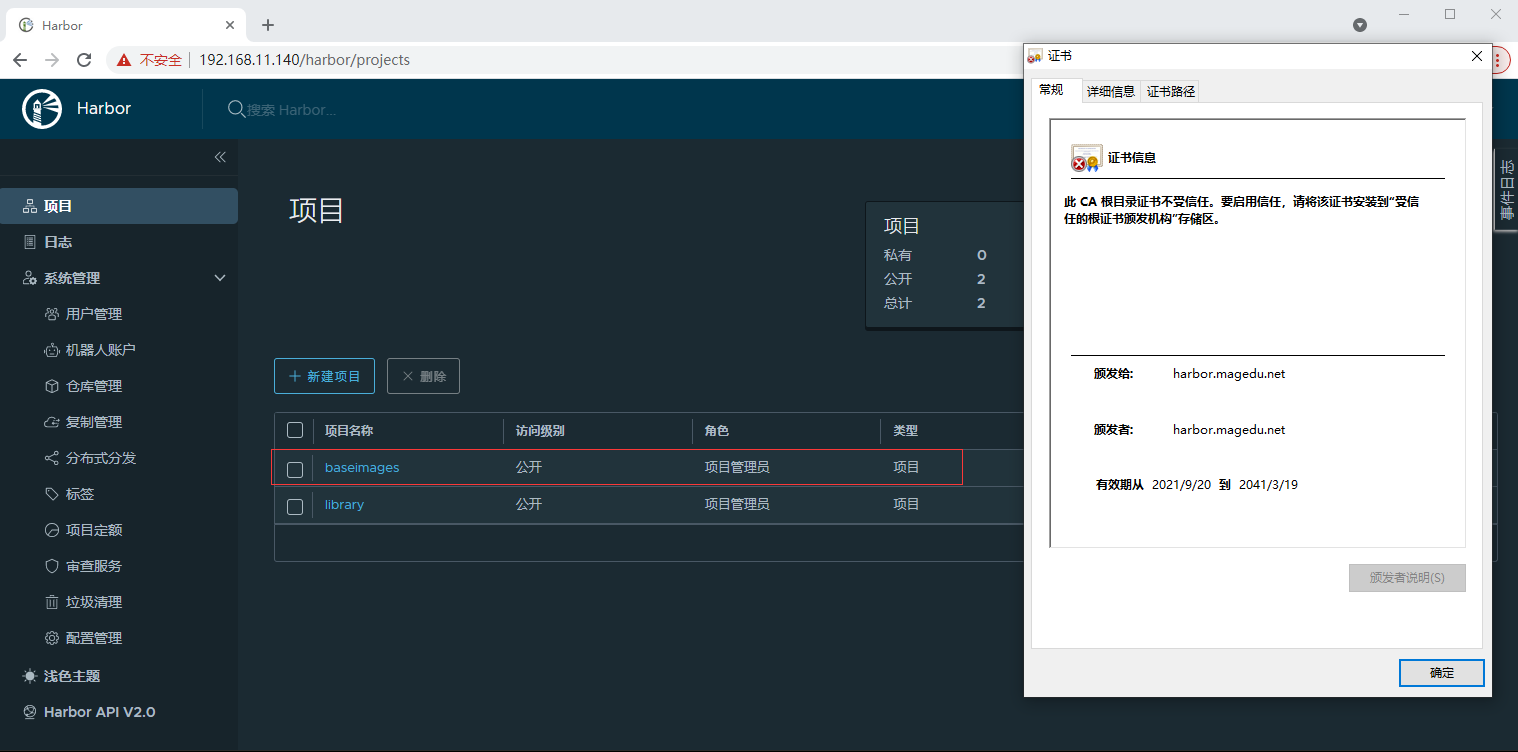

| 192.168.11.140 | Harbor节点 | Ubuntu20.04 | 开启了SSL |

2.2 Install a pinned docker-ce version (19.03.15) on all Ubuntu 20.04 nodes. For the full installation guide, see the official documentation: https://docs.docker.com/engine/install/ubuntu/

    apt update
    sudo apt-get install apt-transport-https ca-certificates curl gnupg lsb-release -y
    # add Docker's GPG key; the repository entry below refers to this keyring
    curl -fsSL https://download.docker.com/linux/ubuntu/gpg | sudo gpg --dearmor -o /usr/share/keyrings/docker-archive-keyring.gpg
    echo "deb [arch=amd64 signed-by=/usr/share/keyrings/docker-archive-keyring.gpg] https://download.docker.com/linux/ubuntu $(lsb_release -cs) stable" | sudo tee /etc/apt/sources.list.d/docker.list > /dev/null
    apt-get update
    apt-cache madison docker-ce    # list the available versions
    apt-get install docker-ce=5:19.03.15~3-0~ubuntu-focal docker-ce-cli=5:19.03.15~3-0~ubuntu-focal containerd.io -y
    docker info    # or: docker version
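The version string passed to apt-get must match the `apt-cache madison` output exactly. This hypothetical helper, `pick_docker_version`, extracts it from that output so you don't have to type it by hand. It assumes madison's usual `package | version | source` layout.

```shell
# pick_docker_version UPSTREAM_VERSION < apt-cache-madison-output
# Hypothetical helper: prints the first full version string that contains
# ":<UPSTREAM_VERSION>~", e.g. 19.03.15 -> 5:19.03.15~3-0~ubuntu-focal
pick_docker_version() {
    awk -F'|' -v want="$1" '
        {
            gsub(/ /, "", $2)                               # trim the version column
            if (index($2, ":" want "~") > 0) { print $2; exit }
        }'
}
```

Usage: `VER=$(apt-cache madison docker-ce | pick_docker_version 19.03.15)`, then `apt-get install -y docker-ce="$VER" docker-ce-cli="$VER" containerd.io`. Running `apt-mark hold docker-ce docker-ce-cli` afterwards keeps a later `apt upgrade` from replacing the pinned version.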

2.3 Enable time synchronization and set the same time zone on all Ubuntu 20.04 nodes

    root@ubuntu:~# apt install chrony -y

    root@ubuntu:~# tzselect
    Please identify a location so that time zone rules can be set correctly.
    Please select a continent, ocean, "coord", or "TZ".
     1) Africa          5) Atlantic Ocean   9) Pacific Ocean
     2) Americas        6) Australia       10) coord - I want to use geographical coordinates.
     3) Antarctica      7) Europe          11) TZ - I want to specify the timezone using the Posix TZ format.
     4) Asia            8) Indian Ocean
    #? 4
    Please select a country whose clocks agree with yours.
     1) Afghanistan  8) Cambodia  15) Indonesia  22) Korea (North)  29) Malaysia  36) Philippines  43) Taiwan  50) Yemen
     2) Armenia  9) China  16) Iran  23) Korea (South)  30) Mongolia  37) Qatar  44) Tajikistan
     3) Azerbaijan  10) Cyprus  17) Iraq  24) Kuwait  31) Myanmar (Burma)  38) Russia  45) Thailand
     4) Bahrain  11) East Timor  18) Israel  25) Kyrgyzstan  32) Nepal  39) Saudi Arabia  46) Turkmenistan
     5) Bangladesh  12) Georgia  19) Japan  26) Laos  33) Oman  40) Singapore  47) United Arab Emirates
     6) Bhutan  13) Hong Kong  20) Jordan  27) Lebanon  34) Pakistan  41) Sri Lanka  48) Uzbekistan
     7) Brunei  14) India  21) Kazakhstan  28) Macau  35) Palestine  42) Syria  49) Vietnam
    #? 9
    Please select one of the following timezones.
    1) Beijing Time
    2) Xinjiang Time
    #? 1

    The following information has been given:

            China
            Beijing Time

    Therefore TZ='Asia/Shanghai' will be used.
    Selected time is now:   Mon Sep 20 16:46:29 CST 2021.
    Universal Time is now:  Mon Sep 20 08:46:29 UTC 2021.
    Is the above information OK?
    1) Yes
    2) No
    #? 1

    You can make this change permanent for yourself by appending the line
            TZ='Asia/Shanghai'; export TZ
    to the file '.profile' in your home directory; then log out and log in again.

    Here is that TZ value again, this time on standard output so that you
    can use the /usr/bin/tzselect command in shell scripts:
    Asia/Shanghai
    root@ubuntu:~#
    root@ubuntu:~# ln -svf /usr/share/zoneinfo/Asia/Shanghai /etc/localtime
    '/etc/localtime' -> '/usr/share/zoneinfo/Asia/Shanghai'
    root@ubuntu:~# date
    Mon 20 Sep 2021 04:47:27 PM CST
    root@ubuntu:~#
    root@ubuntu:~#
    root@ubuntu:~# timedatectl
                   Local time: Mon 2021-09-20 16:48:10 CST
               Universal time: Mon 2021-09-20 08:48:10 UTC
                     RTC time: Mon 2021-09-20 08:48:10
                    Time zone: Asia/Shanghai (CST, +0800)
    System clock synchronized: yes
                  NTP service: active
              RTC in local TZ: no
    root@ubuntu:~# date
    Mon 20 Sep 2021 04:48:23 PM CST
    root@ubuntu:~#

2.4 On the Harbor node (192.168.11.140), install docker-compose, create a certificate for the harbor.magedu.net domain, and install Harbor

Create the certificate:

    openssl genrsa -out harbor-ca.key
    openssl req -x509 -new -nodes -key harbor-ca.key -subj "/CN=harbor.magedu.net" -days 7120 -out harbor-ca.crt
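Go clients built with Go 1.15 or later ignore the CN by default and require the hostname in a subjectAltName. A CN-only certificate like the one above can therefore be rejected by newer Docker releases. This sketch produces the same self-signed certificate with a matching SAN. It assumes OpenSSL 1.1.1 or later for `-addext`, and `make_harbor_cert` is a hypothetical helper.

```shell
# make_harbor_cert DOMAIN OUTDIR
# Hypothetical helper: self-signed cert for DOMAIN with a matching subjectAltName
# (requires OpenSSL >= 1.1.1 for -addext); file names follow the text above.
make_harbor_cert() {
    domain=$1 out=$2
    mkdir -p "$out"
    openssl genrsa -out "$out/harbor-ca.key" 2048 2>/dev/null
    openssl req -x509 -new -nodes -key "$out/harbor-ca.key" \
        -subj "/CN=$domain" \
        -addext "subjectAltName=DNS:$domain" \
        -days 7120 -out "$out/harbor-ca.crt"
}
```

To confirm the SAN is present, run `openssl x509 -in harbor-ca.crt -noout -text`; it should show `DNS:harbor.magedu.net`.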

Install Harbor:

    cd /usr/local/bin/
    mv docker-compose-Linux-x86_64_1.24.1 docker-compose
    chmod +x docker-compose
    docker-compose version
    tar -zxvf harbor-offline-installer-v2.3.2.tgz
    mv harbor /usr/local/
    cd /usr/local/harbor
    cp harbor.yml.tmpl harbor.yml
    vim harbor.yml    # edit the configuration as shown in the figure
    ./install.sh
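The harbor.yml edits are shown only as a figure. This is a hedged, non-interactive alternative to editing in vim. It rewrites the keys that usually need changing, assuming the stock v2.x template key names (`hostname`, and `certificate`/`private_key` under `https`). The certificate paths in the usage line assume the files sit in /usr/local/harbor/cert, the directory the certificate is copied from in 2.5. `set_harbor_tls` is a hypothetical helper and relies on GNU `sed -i`.

```shell
# set_harbor_tls FILE HOSTNAME CERT KEY
# Hypothetical helper: sets hostname and the https certificate/private_key paths
# in harbor.yml, keeping each line's original indentation (GNU sed -i).
set_harbor_tls() {
    sed -i \
        -e "s|^hostname:.*|hostname: $2|" \
        -e "s|^\([[:space:]]*\)certificate:.*|\1certificate: $3|" \
        -e "s|^\([[:space:]]*\)private_key:.*|\1private_key: $4|" \
        "$1"
}
```

Usage: `set_harbor_tls harbor.yml harbor.magedu.net /usr/local/harbor/cert/harbor-ca.crt /usr/local/harbor/cert/harbor-ca.key`, then `./install.sh`.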

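Log in to Harbor to verify the installation, and create a project named baseimages. (If the Harbor server is rebooted, start Harbor again by running docker-compose start in /usr/local/harbor.)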

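2.5 On the deploy client, configure Docker to trust the Harbor certificate and push a busybox test image. Apply the same certificate configuration on every node, so that Docker on all nodes trusts the certificate.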

    mkdir -p /etc/docker/certs.d/harbor.magedu.net
    cd /etc/docker/certs.d/harbor.magedu.net
    scp 192.168.11.140:/usr/local/harbor/cert/harbor-ca.crt /etc/docker/certs.d/harbor.magedu.net    # then restart docker
    # Note: add a hosts entry that resolves the domain to the Harbor IP address
    echo '192.168.11.140 harbor.magedu.net' >> /etc/hosts
    root@deploy:/etc/docker/certs.d/harbor.magedu.net# docker login harbor.magedu.net    # log in and test by pushing an image
    Username: admin
    Password:
    WARNING! Your password will be stored unencrypted in /root/.docker/config.json.
    Configure a credential helper to remove this warning. See
    https://docs.docker.com/engine/reference/commandline/login/#credentials-store

    Login Succeeded
    root@deploy:/etc/docker/certs.d/harbor.magedu.net# docker pull busybox
    Using default tag: latest
    latest: Pulling from library/busybox
    24fb2886d6f6: Pull complete
    Digest: sha256:52f73a0a43a16cf37cd0720c90887ce972fe60ee06a687ee71fb93a7ca601df7
    Status: Downloaded newer image for busybox:latest
    docker.io/library/busybox:latest
    root@deploy:/etc/docker/certs.d/harbor.magedu.net#
    root@deploy:/etc/docker/certs.d/harbor.magedu.net# docker images
    REPOSITORY   TAG       IMAGE ID       CREATED      SIZE
    busybox      latest    16ea53ea7c65   6 days ago   1.24MB
    root@deploy:/etc/docker/certs.d/harbor.magedu.net# docker tag busybox:latest harbor.magedu.net/baseimages/busybox:latest
    root@deploy:/etc/docker/certs.d/harbor.magedu.net# docker images
    REPOSITORY                             TAG       IMAGE ID       CREATED      SIZE
    busybox                                latest    16ea53ea7c65   6 days ago   1.24MB
    harbor.magedu.net/baseimages/busybox   latest    16ea53ea7c65   6 days ago   1.24MB
    root@deploy:/etc/docker/certs.d/harbor.magedu.net# docker push harbor.magedu.net/baseimages/busybox:latest
    The push refers to repository [harbor.magedu.net/baseimages/busybox]
    cfd97936a580: Pushed
    latest: digest: sha256:febcf61cd6e1ac9628f6ac14fa40836d16f3c6ddef3b303ff0321606e55ddd0b size: 527
    root@deploy:/etc/docker/certs.d/harbor.magedu.net#
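Because these steps are repeated on every node, `echo ... >> /etc/hosts` adds a duplicate line each time a step is re-run. A small idempotent alternative, the hypothetical helper `add_host_entry`, appends the mapping only if the name is not mapped yet. For reference, Docker looks for registry CA certificates in /etc/docker/certs.d/<host>[:<port>]/ and treats files ending in .crt as CA roots, which is why harbor-ca.crt works as-is.

```shell
# add_host_entry IP NAME [HOSTS_FILE]
# Hypothetical helper: appends "IP NAME" only when NAME is not already mapped
# (comment lines are ignored), so re-running it on a node is harmless.
add_host_entry() {
    file=${3:-/etc/hosts}
    if ! awk -v n="$2" '
        /^[ \t]*#/ { next }
        { for (i = 2; i <= NF; i++) if ($i == n) found = 1 }
        END { exit !found }' "$file"; then
        printf '%s %s\n' "$1" "$2" >> "$file"
    fi
}
```

Usage on each node: `add_host_entry 192.168.11.140 harbor.magedu.net`.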

2.6 On the deploy machine (192.168.11.111), download the kubeasz script and the related installation files

For what kubeasz is, see its GitHub page: https://github.com/easzlab/kubeasz . Let's get straight to the point:

    export release=3.1.0    # download the ezdown tool script; kubeasz 3.1.0 is used here as an example
    curl -C- -fLO --retry 3 https://github.com/easzlab/kubeasz/releases/download/${release}/ezdown
    chmod +x ezdown

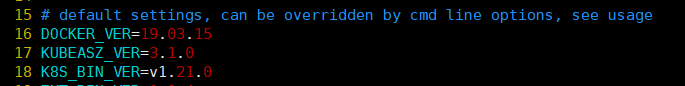

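Edit line 16 of the ezdown script to set the Docker version to use:

Download the project source code, binaries, and offline images with ./ezdown -D. The download log is as follows: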

    root@deploy:~# ./ezdown --help
    ./ezdown: illegal option -- -
    Usage: ezdown [options] [args]
      option: -{DdekSz}
        -C         stop&clean all local containers
        -D         download all into "/etc/kubeasz"
        -P         download system packages for offline installing
        -R         download Registry(harbor) offline installer
        -S         start kubeasz in a container
        -d <ver>   set docker-ce version, default "19.03.15"
        -e <ver>   set kubeasz-ext-bin version, default "0.9.4"
        -k <ver>   set kubeasz-k8s-bin version, default "v1.21.0"
        -m <str>   set docker registry mirrors, default "CN"(used in Mainland,China)
        -p <ver>   set kubeasz-sys-pkg version, default "0.4.1"
        -z <ver>   set kubeasz version, default "3.1.0"
    root@deploy:~# ./ezdown -D
    2021-09-20 17:52:59 INFO Action begin: download_all
    2021-09-20 17:52:59 INFO downloading docker binaries, version 19.03.15
      % Total    % Received % Xferd  Average Speed   Time    Time     Time  Current
                                     Dload  Upload   Total   Spent    Left  Speed
    100 59.5M  100 59.5M    0     0  5408k      0  0:00:11  0:00:11 --:--:-- 5969k
    2021-09-20 17:53:12 WARN docker is already running.
    2021-09-20 17:53:12 INFO downloading kubeasz: 3.1.0
    2021-09-20 17:53:12 DEBUG run a temporary container
    Unable to find image 'easzlab/kubeasz:3.1.0' locally
    3.1.0: Pulling from easzlab/kubeasz
    540db60ca938: Pull complete
    d037ddac5dde: Pull complete
    05d0edf52df4: Pull complete
    54d94e388fb8: Pull complete
    b25964b87dc1: Pull complete
    aedfadb13329: Pull complete
    7f3f0ac4a403: Pull complete
    Digest: sha256:b31e3ed9624fc15a6da18445a17ece1e01e6316b7fdaa096559fcd2f7ab0ae13
    Status: Downloaded newer image for easzlab/kubeasz:3.1.0
    440c7c757c7c8a8ab3473d196621bb8d28796c470fc1153a1098ebae83f7b09e
    2021-09-20 17:53:48 DEBUG cp kubeasz code from the temporary container
    2021-09-20 17:53:48 DEBUG stop&remove temporary container
    temp_easz
    2021-09-20 17:53:48 INFO downloading kubernetes: v1.21.0 binaries
    v1.21.0: Pulling from easzlab/kubeasz-k8s-bin
    532819f3e44c: Pull complete
    02d2aa9e7900: Pull complete
    Digest: sha256:eaa667fa41a407eb547bd639dd70aceb85d39f1cfb55d20aabcd845dea7c4e80
    Status: Downloaded newer image for easzlab/kubeasz-k8s-bin:v1.21.0
    docker.io/easzlab/kubeasz-k8s-bin:v1.21.0
    2021-09-20 17:54:58 DEBUG run a temporary container
    59481d2763c58a49e48f8e8e2a9e986001aabe9abfe5de7e5429e2609691cf54
    2021-09-20 17:54:59 DEBUG cp k8s binaries
    2021-09-20 17:55:01 DEBUG stop&remove temporary container
    temp_k8s_bin
    2021-09-20 17:55:01 INFO downloading extral binaries kubeasz-ext-bin:0.9.4
    0.9.4: Pulling from easzlab/kubeasz-ext-bin
    532819f3e44c: Already exists
    f8df0a3f2b36: Pull complete
    d69f8f462012: Pull complete
    1085086eb00a: Pull complete
    af48509c9825: Pull complete
    Digest: sha256:627a060a42260065d857b64df858f6a0f3b773563bce63bc87f74531ccd701d3
    Status: Downloaded newer image for easzlab/kubeasz-ext-bin:0.9.4
    docker.io/easzlab/kubeasz-ext-bin:0.9.4
    2021-09-20 17:56:27 DEBUG run a temporary container
    26a48514ab1b2fe7360da7f13245fcc3e1e8ac02e6edd0513d38d2352543ec94
    2021-09-20 17:56:28 DEBUG cp extral binaries
    2021-09-20 17:56:29 DEBUG stop&remove temporary container
    temp_ext_bin
    2021-09-20 17:56:30 INFO downloading offline images
    v3.15.3: Pulling from calico/cni
    1ff8efc68ede: Pull complete
    dbf74493f8ac: Pull complete
    6a02335af7ae: Pull complete
    a9d90ecd95cb: Pull complete
    269efe44f16b: Pull complete
    d94997f3700d: Pull complete
    8c7602656f2e: Pull complete
    34fcbf8be9e7: Pull complete
    Digest: sha256:519e5c74c3c801ee337ca49b95b47153e01fd02b7d2797c601aeda48dc6367ff
    Status: Downloaded newer image for calico/cni:v3.15.3
    docker.io/calico/cni:v3.15.3
    v3.15.3: Pulling from calico/pod2daemon-flexvol
    3fb48f9dffa9: Pull complete
    a820112abeeb: Pull complete
    10d8d066ec17: Pull complete
    217b4fd6d612: Pull complete
    06c30d5e085d: Pull complete
    ca0fd3d60e05: Pull complete
    a1c12287b32b: Pull complete
    Digest: sha256:cec7a31b08ab5f9b1ed14053b91fd08be83f58ddba0577e9dabd8b150a51233f
    Status: Downloaded newer image for calico/pod2daemon-flexvol:v3.15.3
    docker.io/calico/pod2daemon-flexvol:v3.15.3
    v3.15.3: Pulling from calico/kube-controllers
    22d9887128f5: Pull complete
    8824e2076f71: Pull complete
    8b26373ef5b7: Pull complete
    Digest: sha256:b88f0923b02090efcd13a2750da781622b6936df72d6c19885fcb2939647247b
    Status: Downloaded newer image for calico/kube-controllers:v3.15.3
    docker.io/calico/kube-controllers:v3.15.3
    v3.15.3: Pulling from calico/node
    0a63a759fe25: Pull complete
    9d6c79b335fa: Pull complete
    0c7b599aaa59: Pull complete
    641ec2b3d585: Pull complete
    682bbf5a5743: Pull complete
    b7275bfed8bc: Pull complete
    f9c5a243b960: Pull complete
    eafb01686242: Pull complete
    3a8a3042bbc5: Pull complete
    e4fa8d582cf2: Pull complete
    6ff16d4df057: Pull complete
    8b0afdee71db: Pull complete
    aa370255d6dd: Pull complete
    Digest: sha256:1d674438fd05bd63162d9c7b732d51ed201ee7f6331458074e3639f4437e34b1
    Status: Downloaded newer image for calico/node:v3.15.3
    docker.io/calico/node:v3.15.3
    1.8.0: Pulling from coredns/coredns
    c6568d217a00: Pull complete
    5984b6d55edf: Pull complete
    Digest: sha256:cc8fb77bc2a0541949d1d9320a641b82fd392b0d3d8145469ca4709ae769980e
    Status: Downloaded newer image for coredns/coredns:1.8.0
    docker.io/coredns/coredns:1.8.0
    1.17.0: Pulling from easzlab/k8s-dns-node-cache
    e5a8c1ed6cf1: Pull complete
    f275df365c13: Pull complete
    6a2802bb94f4: Pull complete
    cb3853c52da4: Pull complete
    db342cbe4b1c: Pull complete
    9a72dd095a53: Pull complete
    a79e969cb748: Pull complete
    02edbb2551d4: Pull complete
    Digest: sha256:4b9288fa72c3ec3e573f61cc871b6fe4dc158b1dc3ea56f9ce528548f4cabe3d
    Status: Downloaded newer image for easzlab/k8s-dns-node-cache:1.17.0
    docker.io/easzlab/k8s-dns-node-cache:1.17.0
    v2.2.0: Pulling from kubernetesui/dashboard
    7cccffac5ec6: Pull complete
    7f06704bb864: Pull complete
    Digest: sha256:148991563e374c83b75e8c51bca75f512d4f006ddc791e96a91f1c7420b60bd9
    Status: Downloaded newer image for kubernetesui/dashboard:v2.2.0
    docker.io/kubernetesui/dashboard:v2.2.0
    v0.13.0-amd64: Pulling from easzlab/flannel
    df20fa9351a1: Pull complete
    0fbfec51320e: Pull complete
    734a6c0a0c59: Pull complete
    41745b624d5f: Pull complete
    feca50c5fe05: Pull complete
    071b96dd834b: Pull complete
    5154c0aa012a: Pull complete
    Digest: sha256:34860ea294a018d392e61936f19a7862d5e92039d196cac9176da14b2bbd0fe3
    Status: Downloaded newer image for easzlab/flannel:v0.13.0-amd64
    docker.io/easzlab/flannel:v0.13.0-amd64
    v1.0.6: Pulling from kubernetesui/metrics-scraper
    47a33a630fb7: Pull complete
    62498b3018cb: Pull complete
    Digest: sha256:1f977343873ed0e2efd4916a6b2f3075f310ff6fe42ee098f54fc58aa7a28ab7
    Status: Downloaded newer image for kubernetesui/metrics-scraper:v1.0.6
    docker.io/kubernetesui/metrics-scraper:v1.0.6
    v0.3.6: Pulling from mirrorgooglecontainers/metrics-server-amd64
    e8d8785a314f: Pull complete
    b2f4b24bed0d: Pull complete
    Digest: sha256:c9c4e95068b51d6b33a9dccc61875df07dc650abbf4ac1a19d58b4628f89288b
    Status: Downloaded newer image for mirrorgooglecontainers/metrics-server-amd64:v0.3.6
    docker.io/mirrorgooglecontainers/metrics-server-amd64:v0.3.6
    3.4.1: Pulling from easzlab/pause-amd64
    fac425775c9d: Pull complete
    Digest: sha256:9ec1e780f5c0196af7b28f135ffc0533eddcb0a54a0ba8b32943303ce76fe70d
    Status: Downloaded newer image for easzlab/pause-amd64:3.4.1
    docker.io/easzlab/pause-amd64:3.4.1
    v4.0.1: Pulling from easzlab/nfs-subdir-external-provisioner
    9e4425256ce4: Pull complete
    f32b919eb4ab: Pull complete
    Digest: sha256:62a05dd29623964c4ff7b591d1ffefda0c2e13cfdc6f1253223c9d718652993f
    Status: Downloaded newer image for easzlab/nfs-subdir-external-provisioner:v4.0.1
    docker.io/easzlab/nfs-subdir-external-provisioner:v4.0.1
    3.1.0: Pulling from easzlab/kubeasz
    Digest: sha256:b31e3ed9624fc15a6da18445a17ece1e01e6316b7fdaa096559fcd2f7ab0ae13
    Status: Image is up to date for easzlab/kubeasz:3.1.0
    docker.io/easzlab/kubeasz:3.1.0
    2021-09-20 18:01:48 INFO Action successed: download_all
    root@deploy:~#

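After the download, the files are in /etc/kubeasz/. One extra step fits in here: set up passwordless SSH from the deploy machine to the cluster nodes, and install Ansible on the deploy machine. The main steps are: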

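    # Install ansible:
    apt install ansible -y
    # or install it this way:
    apt install python3-pip
    pip install ansible
    # Distribute the SSH public key (or use the script the instructor, Jie, provided in class).
    # /root/.ssh/id_rsa.pub must already exist; if key-based login is not set up yet,
    # add -k (--ask-pass, requires sshpass) so ansible can log in with a password.
    ansible -i hosts -m authorized_key -a "user=root state=present key='{{ lookup('file', '/root/.ssh/id_rsa.pub') }}'" all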
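The ansible command above reads an inventory file named hosts, which is not shown. This is a minimal sketch for writing one. The group name k8s_nodes and the helper name are hypothetical; the `all` pattern in the command covers every host regardless of group. Create the key that gets distributed first, for example with `ssh-keygen -t rsa -N '' -f /root/.ssh/id_rsa`.

```shell
# write_inventory FILE IP...
# Hypothetical helper: writes a one-group INI inventory for ansible -i.
write_inventory() {
    file=$1
    shift
    {
        echo "[k8s_nodes]"
        for ip in "$@"; do
            echo "$ip"
        done
    } > "$file"
}
```

Usage: `write_inventory hosts 192.168.11.120 192.168.11.130 192.168.11.140`, then run the ansible command from the same directory.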

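Go to the cluster directory and edit the configuration files. The directory /etc/kubeasz/clusters/k8s-01 is generated by running ./ezctl new k8s-01 in /etc/kubeasz.

Here is the complete /etc/kubeasz/clusters/k8s-01/hosts file: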

    root@deploy:/etc/kubeasz/clusters/k8s-01# cat hosts
    # 'etcd' cluster should have odd member(s) (1,3,5,...)
    [etcd]
    192.168.11.120
    #192.168.1.2
    #192.168.1.3

    # master node(s)
    [kube_master]
    192.168.11.120
    #192.168.1.2

    # work node(s)
    [kube_node]
    192.168.11.130
    #192.168.1.4

    # [optional] harbor server, a private docker registry
    # 'NEW_INSTALL': 'true' to install a harbor server; 'false' to integrate with existed one
    [harbor]
    #192.168.1.8 NEW_INSTALL=false

    # [optional] loadbalance for accessing k8s from outside
    [ex_lb]
    #192.168.1.6 LB_ROLE=backup EX_APISERVER_VIP=192.168.1.250 EX_APISERVER_PORT=8443
    #192.168.1.7 LB_ROLE=master EX_APISERVER_VIP=192.168.1.250 EX_APISERVER_PORT=8443

    # [optional] ntp server for the cluster
    [chrony]
    #192.168.1.1

    [all:vars]
    # --------- Main Variables ---------------
    # Secure port for apiservers
    SECURE_PORT="6443"

    # Cluster container-runtime supported: docker, containerd
    CONTAINER_RUNTIME="docker"

    # Network plugins supported: calico, flannel, kube-router, cilium, kube-ovn
    CLUSTER_NETWORK="calico"

    # Service proxy mode of kube-proxy: 'iptables' or 'ipvs'
    PROXY_MODE="ipvs"

    # K8S Service CIDR, not overlap with node(host) networking
    SERVICE_CIDR="10.100.0.0/16"

    # Cluster CIDR (Pod CIDR), not overlap with node(host) networking
    CLUSTER_CIDR="172.200.0.0/16"

    # NodePort Range
    NODE_PORT_RANGE="30000-32767"

    # Cluster DNS Domain
    CLUSTER_DNS_DOMAIN="magedu.local"

    # -------- Additional Variables (don't change the default value right now) ---
    # Binaries Directory
    bin_dir="/opt/kube/bin"

    # Deploy Directory (kubeasz workspace)
    base_dir="/etc/kubeasz"

    # Directory for a specific cluster
    cluster_dir="{{ base_dir }}/clusters/k8s-01"

    # CA and other components cert/key Directory
    ca_dir="/etc/kubernetes/ssl"
    root@deploy:/etc/kubeasz/clusters/k8s-01#

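The main changes: keep only one etcd node, one master, and one worker, and set the Pod and Service network ranges, the network plugin (calico), and the cluster DNS domain: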

    [etcd]
    192.168.11.120

    [kube_master]
    192.168.11.120

    [kube_node]
    192.168.11.130

    # Network plugins supported: calico, flannel, kube-router, cilium, kube-ovn
    CLUSTER_NETWORK="calico"
    # K8S Service CIDR, not overlap with node(host) networking
    SERVICE_CIDR="10.100.0.0/16"

    # Cluster CIDR (Pod CIDR), not overlap with node(host) networking
    CLUSTER_CIDR="172.200.0.0/16"

    # Cluster DNS Domain    # this does not really need changing; it is just a name
    CLUSTER_DNS_DOMAIN="magedu.local"
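The comments in the hosts file require that SERVICE_CIDR and CLUSTER_CIDR overlap neither the node network nor each other. This is a POSIX-shell sketch of that check using hypothetical helpers. It assumes the node network is 192.168.11.0/24, taken from the host IPs in 2.1; the /24 mask is an assumption.

```shell
# Hypothetical helpers for checking that IPv4 CIDR ranges do not overlap.

# ip2int A.B.C.D -> 32-bit integer
ip2int() {
    old_ifs=$IFS
    IFS=.
    set -- $1
    IFS=$old_ifs
    echo $(( ($1 << 24) + ($2 << 16) + ($3 << 8) + $4 ))
}

# cidr_range NET/LEN -> "first last" addresses as integers
cidr_range() {
    net=${1%/*} len=${1#*/}
    base=$(ip2int "$net")
    size=$(( 1 << (32 - len) ))
    first=$(( base / size * size ))      # align to the network boundary
    echo "$first $(( first + size - 1 ))"
}

# cidr_overlap A B -> exit status 0 if the two ranges share any address
cidr_overlap() {
    set -- $(cidr_range "$1") $(cidr_range "$2")
    [ "$1" -le "$4" ] && [ "$3" -le "$2" ]
}
```

Usage: `cidr_overlap 10.100.0.0/16 172.200.0.0/16 && echo "overlap!"`. Repeat for each pair among the Service CIDR, the Pod CIDR, and the node network.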

Here is the complete /etc/kubeasz/clusters/k8s-01/config.yml file:

    root@deploy:/etc/kubeasz/clusters/k8s-01# cat config.yml
    ############################
    # prepare
    ############################
    # Optional: install system packages offline or online (offline|online)
    INSTALL_SOURCE: "online"

    # Optional: OS security hardening, see github.com/dev-sec/ansible-collection-hardening
    OS_HARDEN: false

    # Time source servers [important: clocks of all machines in the cluster must be synchronized]
    ntp_servers:
      - "ntp1.aliyun.com"
      - "time1.cloud.tencent.com"
      - "0.cn.pool.ntp.org"

    # Network ranges allowed to sync time internally, e.g. "10.0.0.0/8"; all are allowed by default
    local_network: "0.0.0.0/0"


    ############################
    # role:deploy
    ############################
    # default: ca will expire in 100 years
    # default: certs issued by the ca will expire in 50 years
    CA_EXPIRY: "876000h"
    CERT_EXPIRY: "438000h"

    # kubeconfig parameters
    CLUSTER_NAME: "cluster1"
    CONTEXT_NAME: "context-{{ CLUSTER_NAME }}"


    ############################
    # role:etcd
    ############################
    # A separate WAL directory avoids disk I/O contention and improves performance
    ETCD_DATA_DIR: "/var/lib/etcd"
    ETCD_WAL_DIR: ""


    ############################
    # role:runtime [containerd,docker]
    ############################
    # ------------------------------------------- containerd
    # [.] enable registry mirrors
    ENABLE_MIRROR_REGISTRY: false

    # [containerd] sandbox (pause) image
    SANDBOX_IMAGE: "easzlab/pause-amd64:3.4.1"

    # [containerd] persistent storage directory for containers
    CONTAINERD_STORAGE_DIR: "/var/lib/containerd"

    # ------------------------------------------- docker
    # [docker] container storage directory
    DOCKER_STORAGE_DIR: "/var/lib/docker"

    # [docker] enable the RESTful API
    ENABLE_REMOTE_API: false

    # [docker] trusted HTTP registries
    INSECURE_REG: '["127.0.0.1/8"]'


    ############################
    # role:kube-master
    ############################
    # Certificate settings for the k8s master nodes; multiple IPs and domains can be added (e.g. a public IP and domain)
    MASTER_CERT_HOSTS:
      - "10.1.1.1"
      - "k8s.test.io"
      #- "www.test.com"

    # Mask length of the pod subnet on each node (determines the maximum number of pod IPs per node)
    # If flannel uses the --kube-subnet-mgr flag, it reads this setting to assign each node's pod subnet
    # https://github.com/coreos/flannel/issues/847
    NODE_CIDR_LEN: 24


    ############################
    # role:kube-node
    ############################
    # Kubelet root directory
    KUBELET_ROOT_DIR: "/var/lib/kubelet"

    # Maximum number of pods per node
    MAX_PODS: 110

    # Resources reserved for kube components (kubelet, kube-proxy, dockerd, etc.)
    # See templates/kubelet-config.yaml.j2 for the values
    KUBE_RESERVED_ENABLED: "yes"

    # Upstream k8s advises against enabling system-reserved casually, unless long-term monitoring shows how the system uses resources;
    # the reservation should also grow as the system runs longer; see templates/kubelet-config.yaml.j2 for the values
    # The system reservation assumes a 4c/8g VM with a minimal set of system services; increase it on high-performance physical machines
    # Also, apiserver and others briefly use a lot of resources during installation; reserve at least 1g of memory
    SYS_RESERVED_ENABLED: "no"

    # haproxy balance mode
    BALANCE_ALG: "roundrobin"


    ############################
    # role:network [flannel,calico,cilium,kube-ovn,kube-router]
    ############################
    # ------------------------------------------- flannel
    # [flannel] flannel backend: "host-gw", "vxlan", etc.
    FLANNEL_BACKEND: "vxlan"
    DIRECT_ROUTING: false

    # [flannel] flanneld_image: "quay.io/coreos/flannel:v0.10.0-amd64"
    flannelVer: "v0.13.0-amd64"
    flanneld_image: "easzlab/flannel:{{ flannelVer }}"

    # [flannel] offline image tarball
    flannel_offline: "flannel_{{ flannelVer }}.tar"

    # ------------------------------------------- calico
    # [calico] setting CALICO_IPV4POOL_IPIP="off" can improve network performance; see docs/setup/calico.md for the constraints
    CALICO_IPV4POOL_IPIP: "Always"

    # [calico] host IP used by calico-node; BGP peers connect over this address; it can be set manually or auto-detected
    IP_AUTODETECTION_METHOD: "can-reach={{ groups['kube_master'][0] }}"

    # [calico] calico network backend: brid, vxlan, none
    CALICO_NETWORKING_BACKEND: "brid"

    # [calico] supported calico versions: [v3.3.x] [v3.4.x] [v3.8.x] [v3.15.x]
    calico_ver: "v3.15.3"

    # [calico] calico major version
    calico_ver_main: "{{ calico_ver.split('.')[0] }}.{{ calico_ver.split('.')[1] }}"

    # [calico] offline image tarball
    calico_offline: "calico_{{ calico_ver }}.tar"

    # ------------------------------------------- cilium
    # [cilium] number of nodes in the etcd cluster created by CILIUM_ETCD_OPERATOR: 1,3,5,7...
    ETCD_CLUSTER_SIZE: 1

    # [cilium] image version
    cilium_ver: "v1.4.1"

    # [cilium] offline image tarball
    cilium_offline: "cilium_{{ cilium_ver }}.tar"

    # ------------------------------------------- kube-ovn
    # [kube-ovn] node for the OVN DB and OVN Control Plane; defaults to the first master node
    OVN_DB_NODE: "{{ groups['kube_master'][0] }}"

    # [kube-ovn] offline image tarball
    kube_ovn_ver: "v1.5.3"
    kube_ovn_offline: "kube_ovn_{{ kube_ovn_ver }}.tar"

    # ------------------------------------------- kube-router
    # [kube-router] public clouds have restrictions and usually need ipinip always on; in your own environment it can be set to "subnet"
    OVERLAY_TYPE: "full"

    # [kube-router] NetworkPolicy support switch
    FIREWALL_ENABLE: "true"

    # [kube-router] kube-router image version
    kube_router_ver: "v0.3.1"
    busybox_ver: "1.28.4"

    # [kube-router] kube-router offline image tarball
    kuberouter_offline: "kube-router_{{ kube_router_ver }}.tar"
    busybox_offline: "busybox_{{ busybox_ver }}.tar"


    ############################
    # role:cluster-addon
    ############################
    # install coredns automatically
    dns_install: "no"
    corednsVer: "1.8.0"
    ENABLE_LOCAL_DNS_CACHE: false
    dnsNodeCacheVer: "1.17.0"
    # local dns cache address
    LOCAL_DNS_CACHE: "169.254.20.10"

    # install metric server automatically
    metricsserver_install: "no"
    metricsVer: "v0.3.6"

    # install dashboard automatically
    dashboard_install: "no"
    dashboardVer: "v2.2.0"
    dashboardMetricsScraperVer: "v1.0.6"

    # install ingress automatically
    ingress_install: "no"
    ingress_backend: "traefik"
    traefik_chart_ver: "9.12.3"

    # install prometheus automatically
    prom_install: "no"
    prom_namespace: "monitor"
    prom_chart_ver: "12.10.6"

    # install nfs-provisioner automatically
    nfs_provisioner_install: "no"
    nfs_provisioner_namespace: "kube-system"
    nfs_provisioner_ver: "v4.0.1"
    nfs_storage_class: "managed-nfs-storage"
    nfs_server: "192.168.1.10"
    nfs_path: "/data/nfs"

    ############################
    # role:harbor
    ############################
    # harbor version, full version number
    HARBOR_VER: "v2.1.3"
    HARBOR_DOMAIN: "harbor.yourdomain.com"
    HARBOR_TLS_PORT: 8443

    # if set 'false', you need to put certs named harbor.pem and harbor-key.pem in directory 'down'
    HARBOR_SELF_SIGNED_CERT: true

    # install extra component
    HARBOR_WITH_NOTARY: false
    HARBOR_WITH_TRIVY: false
    HARBOR_WITH_CLAIR: false
    HARBOR_WITH_CHARTMUSEUM: true
    root@deploy:/etc/kubeasz/clusters/k8s-01#
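NODE_CIDR_LEN controls how the Pod CIDR is split per node. With CLUSTER_CIDR 172.200.0.0/16 and a /24 per node, the arithmetic below allows 256 nodes with 256 addresses each, comfortably above MAX_PODS 110. This is a sketch with a hypothetical helper. Calico's own IPAM hands out /26 blocks by default and does not strictly follow per-node CIDRs, so the setting matters most for flannel, as the config comment says.

```shell
# pod_cidr_capacity CLUSTER_CIDR_PREFIX NODE_CIDR_LEN
# Hypothetical helper: prints "<max nodes> <addresses per node>" for a
# cluster CIDR of the given prefix length carved into /NODE_CIDR_LEN subnets.
pod_cidr_capacity() {
    echo "$(( 1 << ($2 - $1) )) $(( 1 << (32 - $2) ))"
}
```

For this cluster, `pod_cidr_capacity 16 24` prints `256 256`. A /26 per node would instead give 1024 nodes but only 64 addresses each, which is below the MAX_PODS default.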

The main change: coredns, metrics-server, dashboard, ingress, and prometheus are not installed automatically:

    # install coredns automatically
    dns_install: "no"

    # install metric server automatically
    metricsserver_install: "no"

    # install dashboard automatically
    dashboard_install: "no"

    # install ingress automatically
    ingress_install: "no"

Then go to /etc/kubeasz and run the following commands. Barring major problems, that's it:

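    ./ezctl setup k8s-01 01    # 01 - create certificates and prepare the environment
    ./ezctl setup k8s-01 02    # 02 - install the etcd cluster
    # ./ezctl setup k8s-01 03  # I prefer to install docker by hand (I really dislike having docker's default install path changed), so I skipped this step
    ./ezctl setup k8s-01 04    # 04 - install the master node
    ./ezctl setup k8s-01 05    # 05 - install the worker node
    ./ezctl setup k8s-01 06    # 06 - install the cluster network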
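The same steps can be run in one go, stopping at the first failure. The hypothetical wrapper below reads the ezctl path from an `EZCTL` variable, so setting `EZCTL=echo` turns it into a dry run that only prints the commands.

```shell
# Hypothetical wrapper around the ezctl steps above.
# EZCTL can be overridden (for example EZCTL=echo) to print the commands as a dry run.
EZCTL=${EZCTL:-./ezctl}

# setup_steps CLUSTER STEP...
# Runs each step in order and stops at the first failure.
setup_steps() {
    cluster=$1
    shift
    for step in "$@"; do
        echo ">>> ezctl step $step" >&2
        if ! $EZCTL setup "$cluster" "$step"; then
            echo "step $step failed, stopping" >&2
            return 1
        fi
    done
}
```

Usage: `cd /etc/kubeasz && setup_steps k8s-01 01 02 04 05 06`. Step 03, the container runtime, is skipped here as in the text. When nothing needs skipping, kubeasz can also run every step with `./ezctl setup k8s-01 all`.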

Images pushed to Harbor before and after the installation:

    root@deploy:~# docker tag k8s.gcr.io/coredns/coredns:v1.8.3 harbor.magedu.net/baseimages/coredns:v1.8.3
    root@deploy:~# docker push harbor.magedu.net/baseimages/coredns:v1.8.3
    The push refers to repository [harbor.magedu.net/baseimages/coredns]
    85c53e1bd74e: Pushed
    225df95e717c: Pushed
    v1.8.3: digest: sha256:db4f1c57978d7372b50f416d1058beb60cebff9a0d5b8bee02bfe70302e1cb2f size: 739
    root@deploy:~#

    root@node1:~# docker images
    REPOSITORY                             TAG       IMAGE ID       CREATED         SIZE
    harbor.magedu.net/baseimages/busybox   latest    16ea53ea7c65   6 days ago      1.24MB
    kubernetesui/dashboard                 v2.3.1    e1482a24335a   3 months ago    220MB
    harbor.magedu.net/baseimages/coredns   v1.8.3    3885a5b7f138   6 months ago    43.5MB
    easzlab/pause-amd64                    3.4.1     0f8457a4c2ec   8 months ago    683kB
    kubernetesui/metrics-scraper           v1.0.6    48d79e554db6   11 months ago   34.5MB
    calico/node                            v3.15.3   d45bf977dfbf   12 months ago   262MB
    calico/pod2daemon-flexvol              v3.15.3   963564fb95ed   12 months ago   22.8MB
    calico/cni                             v3.15.3   ca5564c06ea0   12 months ago   110MB
    calico/kube-controllers                v3.15.3   0cb2976cbb7d   12 months ago   52.9MB
    root@node1:~# docker tag kubernetesui/metrics-scraper:v1.0.6 harbor.magedu.net/baseimages/metrics-scraper:v1.0.6
    root@node1:~# docker tag kubernetesui/dashboard:v2.3.1 harbor.magedu.net/baseimages/dashboard:v2.3.1
    root@node1:~# docker push harbor.magedu.net/baseimages/dashboard:v2.3.1
    The push refers to repository [harbor.magedu.net/baseimages/dashboard]
    c94f86b1c637: Pushed
    8ca79a390046: Pushed
    v2.3.1: digest: sha256:e5848489963be532ec39d454ce509f2300ed8d3470bdfb8419be5d3a982bb09a size: 736
    root@node1:~#
    root@node1:~# docker push harbor.magedu.net/baseimages/metrics-scraper:v1.0.6
    The push refers to repository [harbor.magedu.net/baseimages/metrics-scraper]
    a652c34ae13a: Pushed
    6de384dd3099: Pushed
    v1.0.6: digest: sha256:c09adb7f46e1a9b5b0bde058713c5cb47e9e7f647d38a37027cd94ef558f0612 size: 736
    root@node1:~#
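Every re-tag above follows one pattern: keep name:tag and replace the registry and namespace with harbor.magedu.net/baseimages. The hypothetical helpers below capture that pattern. The push helper assumes docker is installed and `docker login harbor.magedu.net` has already succeeded.

```shell
# harbor_target IMAGE [REGISTRY] [PROJECT]
# Hypothetical helper: keeps name:tag and re-homes it under REGISTRY/PROJECT.
harbor_target() {
    echo "${2:-harbor.magedu.net}/${3:-baseimages}/${1##*/}"
}

# push_to_harbor IMAGE...   (needs docker and a prior docker login to the registry)
push_to_harbor() {
    for img in "$@"; do
        target=$(harbor_target "$img")
        docker tag "$img" "$target" || return 1
        docker push "$target" || return 1
    done
}
```

For example, `push_to_harbor kubernetesui/dashboard:v2.3.1 kubernetesui/metrics-scraper:v1.0.6` repeats the two tag/push pairs shown above.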

2.7 Verification after a successful installation:

Verify the calico network on the node:

    root@node1:~# /opt/kube/bin/calicoctl node status
    Calico process is running.

    IPv4 BGP status
    +----------------+-------------------+-------+----------+-------------+
    |  PEER ADDRESS  |     PEER TYPE     | STATE |  SINCE   |    INFO     |
    +----------------+-------------------+-------+----------+-------------+
    | 192.168.11.120 | node-to-node mesh | up    | 11:35:58 | Established |
    +----------------+-------------------+-------+----------+-------------+

    IPv6 BGP status
    No IPv6 peers found.

    root@node1:~#
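With more nodes, the BGP table grows, and scanning it by eye gets error-prone. This hypothetical helper reads `calicoctl node status` output and prints every IPv4 peer whose INFO column is not Established. It assumes the table layout shown above.

```shell
# bgp_peers_down < "calicoctl node status" output
# Hypothetical helper: prints each IPv4 peer whose INFO column is not "Established".
bgp_peers_down() {
    awk -F'|' '
        /^[|] *[0-9]+[.][0-9]+[.][0-9]+[.][0-9]+ / {
            addr = $2; info = $6
            gsub(/ /, "", addr)
            gsub(/ /, "", info)
            if (info != "Established") print addr
        }'
}
```

Usage on a node: `/opt/kube/bin/calicoctl node status | bgp_peers_down`. Empty output means every peer is established.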

Verify etcd and the node status on the master:

```
root@master1:~# ETCDCTL_API=3 /opt/kube/bin/etcdctl --endpoints=https://192.168.11.120:2379 --cacert=/etc/kubernetes/ssl/ca.pem --cert=/etc/kubernetes/ssl/etcd.pem --key=/etc/kubernetes/ssl/etcd-key.pem endpoint status
https://192.168.11.120:2379, 6a03caad54fdc2e7, 3.4.13, 3.2 MB, true, false, 5, 23783, 23783,
root@master1:~# ETCDCTL_API=3 /opt/kube/bin/etcdctl --endpoints=https://192.168.11.120:2379 --cacert=/etc/kubernetes/ssl/ca.pem --cert=/etc/kubernetes/ssl/etcd.pem --key=/etc/kubernetes/ssl/etcd-key.pem endpoint health
https://192.168.11.120:2379 is healthy: successfully committed proposal: took = 5.333748ms
root@master1:~#
root@master1:~# /opt/kube/bin/kubectl get nodes
NAME             STATUS                     ROLES    AGE   VERSION
192.168.11.120   Ready,SchedulingDisabled   master   44h   v1.21.0
192.168.11.130   Ready                      node     44h   v1.21.0
root@master1:~#
```

(The environment variable etcdctl reads is `ETCDCTL_API`; etcdctl 3.4 already defaults to the v3 API, which is why the commands work either way.)

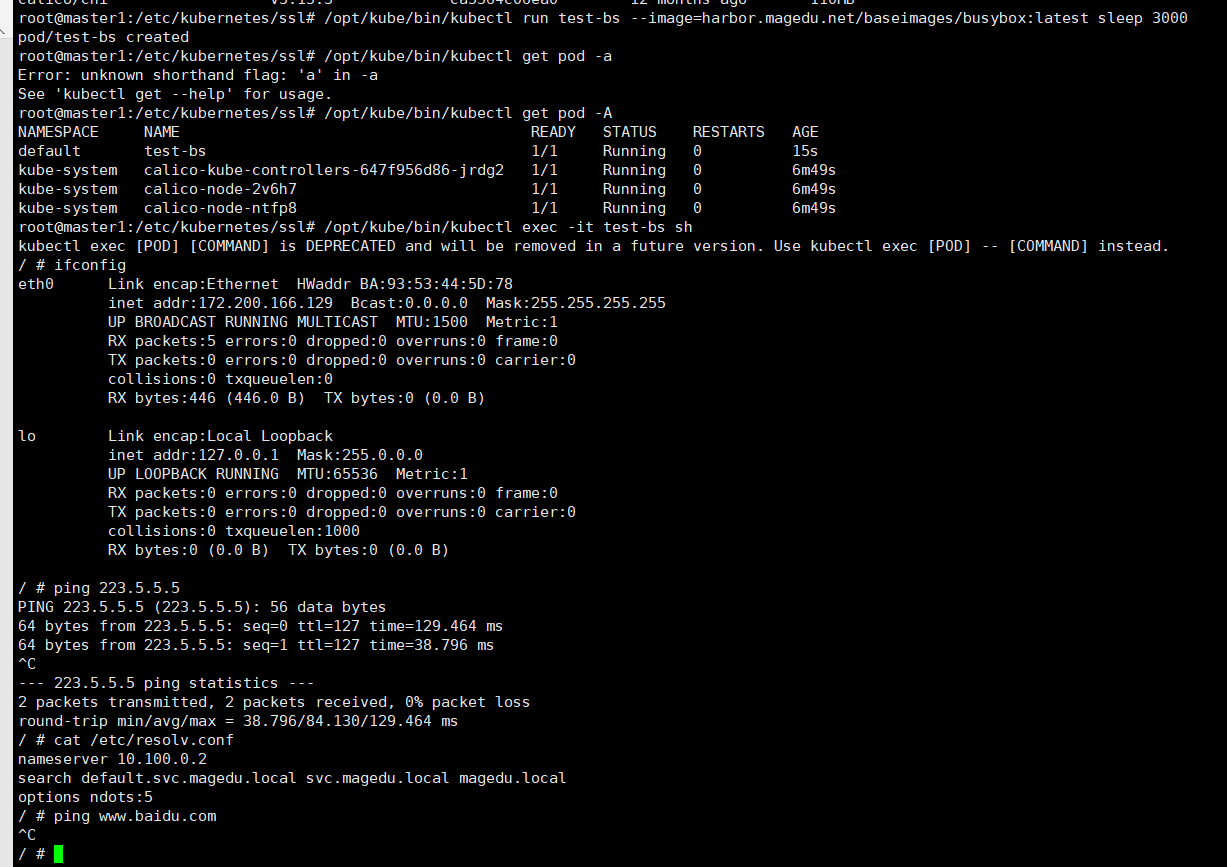
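This lab runs a single etcd member. With the 3, 5 or 7 members used in production, the health check is usually run against each member in turn. A sketch, assuming the same certificate paths as above; `ETCD_NODES`, `ETCDCTL` and `etcd_health_all` are hypothetical names.

```sh
# Hypothetical loop: run `endpoint health` against every etcd member.
# ETCD_NODES is a space-separated list of member IPs; this lab has only one.
ETCD_NODES="${ETCD_NODES:-192.168.11.120}"
# ETCDCTL can be overridden (e.g. ETCDCTL=echo) for a dry run.
ETCDCTL="${ETCDCTL:-/opt/kube/bin/etcdctl}"

etcd_health_all() {
    rc=0
    for ip in $ETCD_NODES; do
        # ETCDCTL_API (not ETCD_API) is the variable etcdctl reads.
        ETCDCTL_API=3 "$ETCDCTL" \
            --endpoints="https://${ip}:2379" \
            --cacert=/etc/kubernetes/ssl/ca.pem \
            --cert=/etc/kubernetes/ssl/etcd.pem \
            --key=/etc/kubernetes/ssl/etcd-key.pem \
            endpoint health || rc=1
    done
    # Non-zero if at least one member was unhealthy.
    return $rc
}
```

etcdctl also accepts a comma-separated list in `--endpoints`, so a single call covering all members works too; the loop just makes it obvious which member failed.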

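Create a pod to test the network: DNS resolution does not work yet (CoreDNS has not been installed), but addresses can be reached directly by IP.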
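The test pod can be created like this. A sketch, assuming the busybox image pushed to Harbor earlier; the function `net_test`, the pod name `net-test1` and the `KUBECTL`/`TEST_IMAGE` variables are hypothetical.

```sh
# Hypothetical helper: start a throwaway busybox pod and ping an address from inside it.
KUBECTL="${KUBECTL:-/opt/kube/bin/kubectl}"
TEST_IMAGE="${TEST_IMAGE:-harbor.magedu.net/baseimages/busybox:latest}"

net_test() {
    pod="$1"; target="$2"
    # sleep keeps the container alive so we can exec into it.
    "$KUBECTL" run "$pod" --image="$TEST_IMAGE" --restart=Never -- sleep 360000 || return 1
    # Wait until the pod is Ready before exec'ing into it.
    "$KUBECTL" wait --for=condition=Ready "pod/$pod" --timeout=120s || return 1
    # An IP target works without DNS; hostnames resolve only after CoreDNS is installed.
    "$KUBECTL" exec "$pod" -- ping -c 3 "$target"
}
```

Usage: `net_test net-test1 192.168.11.130` (the worker node from the output above); remove the pod afterwards with `/opt/kube/bin/kubectl delete pod net-test1`.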

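2.8. Install CoreDNS: use the YAML file the instructor (Jie) provided in class. The cluster domain and the monitoring port type in it have already been adjusted, so it can be applied directly: /opt/kube/bin/kubectl apply -f coredns-n56.yaml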
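Instead of polling until CoreDNS comes up, you can block on the rollout. A sketch, assuming the manifest keeps the upstream names (Deployment `coredns` and Service `kube-dns` in `kube-system`); check coredns-n56.yaml if they differ. `install_coredns` is a hypothetical name.

```sh
# Hypothetical helper: apply the CoreDNS manifest and wait for the rollout to finish.
# Assumes the upstream object names: Deployment "coredns", Service "kube-dns", namespace kube-system.
KUBECTL="${KUBECTL:-/opt/kube/bin/kubectl}"

install_coredns() {
    manifest="${1:-coredns-n56.yaml}"
    "$KUBECTL" apply -f "$manifest" || return 1
    # Returns once all replicas are updated and available, or fails after the timeout.
    "$KUBECTL" rollout status deployment/coredns -n kube-system --timeout=120s || return 1
    # Show the DNS Service (its ClusterIP is what pods use as their nameserver).
    "$KUBECTL" get svc kube-dns -n kube-system
}
```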

Create a pod again and verify name resolution by pinging Baidu:

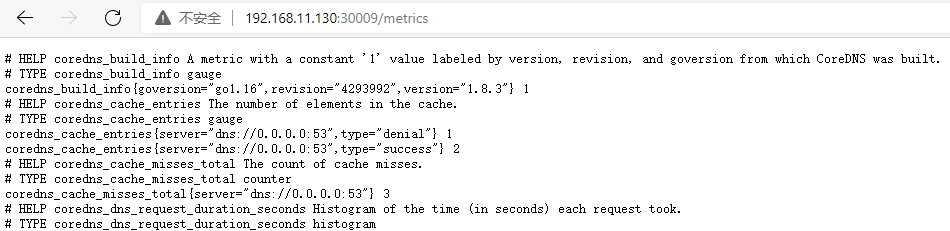
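To check both an in-cluster name and an external one from the test pod, something like the following works. A sketch with assumptions: the pod is the `net-test1` busybox pod, and the cluster domain defaults to `cluster.local`; set `CLUSTER_DOMAIN` to whatever domain coredns-n56.yaml actually uses. `dns_test` is a hypothetical name.

```sh
# Hypothetical helper: verify DNS inside a running pod (defaults to net-test1).
KUBECTL="${KUBECTL:-/opt/kube/bin/kubectl}"
# Assumption: replace with the cluster domain configured in coredns-n56.yaml.
CLUSTER_DOMAIN="${CLUSTER_DOMAIN:-cluster.local}"

dns_test() {
    pod="${1:-net-test1}"
    # In-cluster name: the API server Service in the default namespace.
    "$KUBECTL" exec "$pod" -- nslookup "kubernetes.default.svc.${CLUSTER_DOMAIN}" || return 1
    # External name: CoreDNS typically forwards it to the upstream resolver.
    "$KUBECTL" exec "$pod" -- ping -c 3 www.baidu.com
}
```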

Check the metrics:

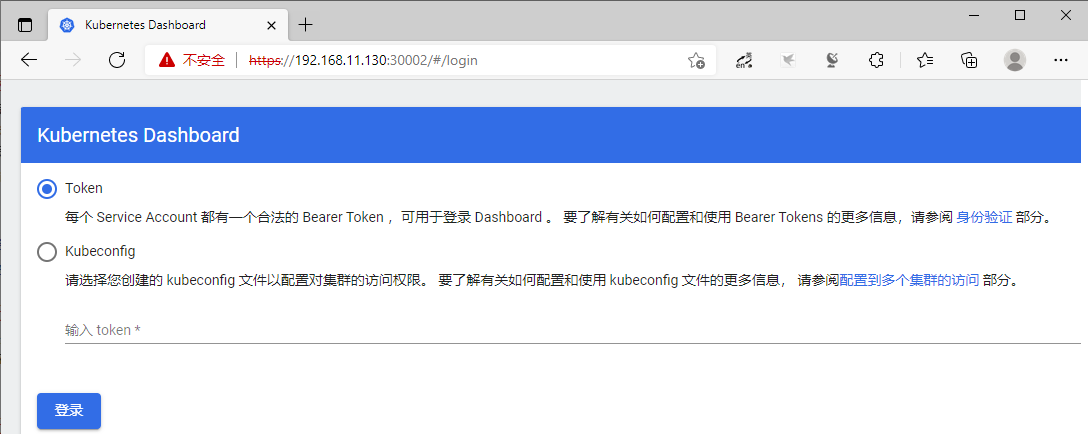

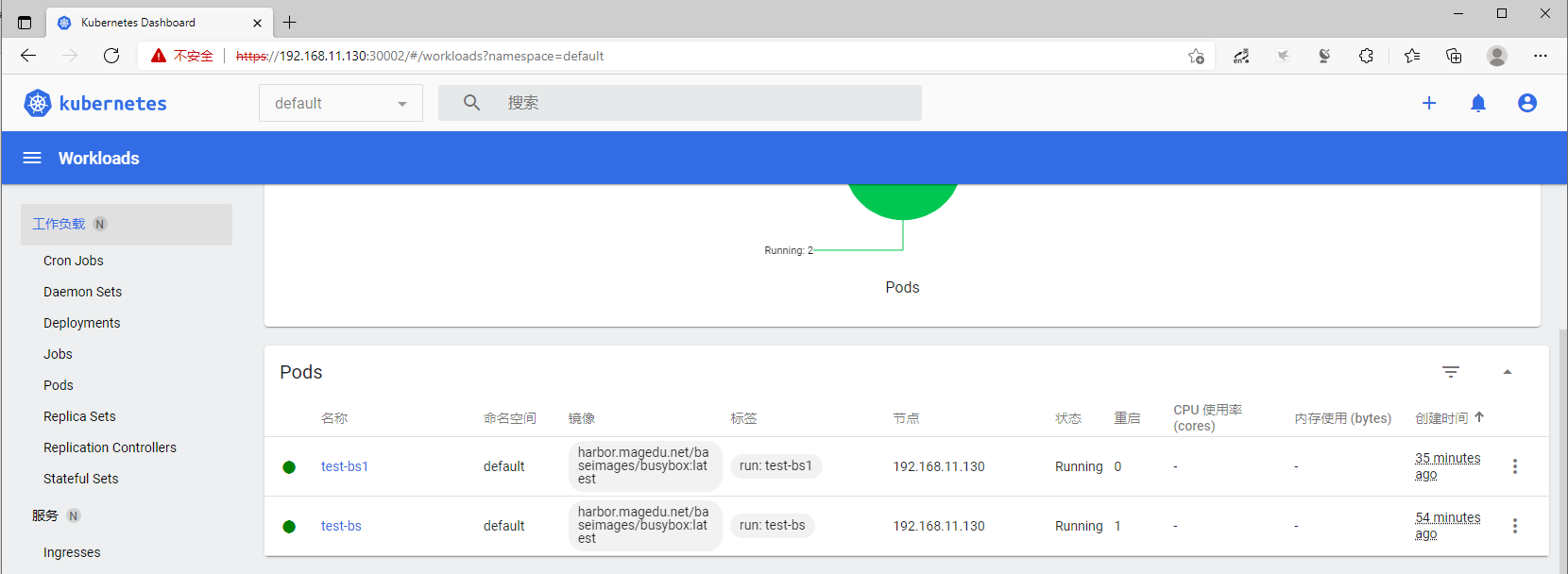

2.9. Install the Dashboard and create an account for token login, again using the YAML files the instructor (Jie) provided in class:

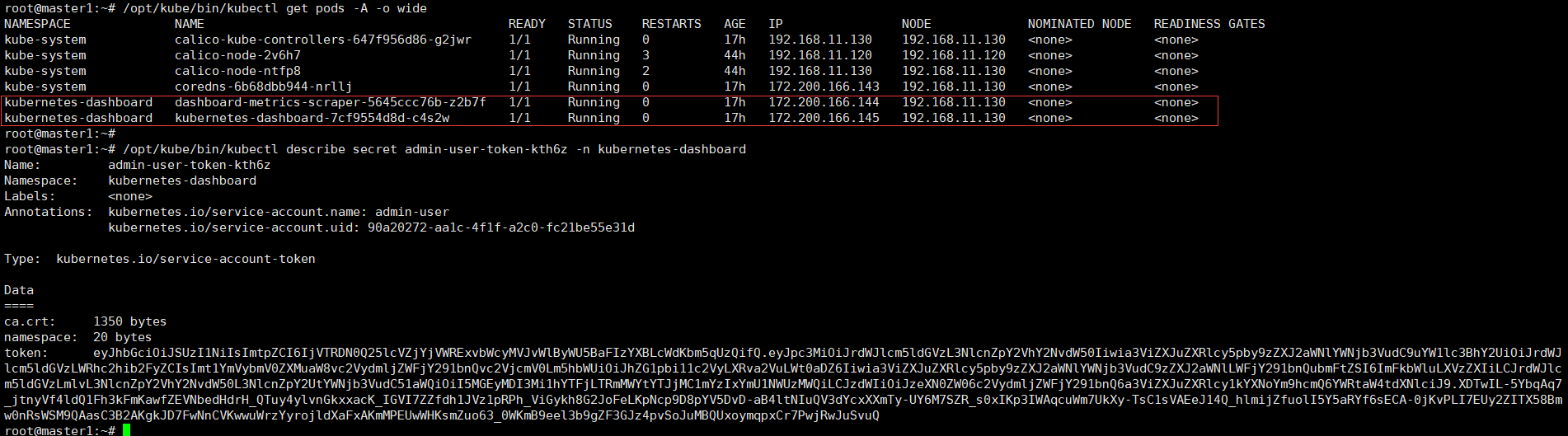

The main steps are as follows:

```
/opt/kube/bin/kubectl apply -f dashboard-v2.3.1.yaml
/opt/kube/bin/kubectl get pods -A -o wide    # confirm the dashboard pods are Running
/opt/kube/bin/kubectl apply -f admin-user.yml
/opt/kube/bin/kubectl get secret -A | grep admin
# the suffix (kth6z) is random; use the secret name printed by the previous command
/opt/kube/bin/kubectl describe secret admin-user-token-kth6z -n kubernetes-dashboard
```
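Rather than re-running `get pods` until everything is Running, `kubectl wait` can block until both Deployments are Available. A sketch; the Deployment names come from dashboard-v2.3.1.yaml, while `wait_dashboard` and `KUBECTL` are hypothetical.

```sh
# Hypothetical helper: block until both Dashboard Deployments report Available.
KUBECTL="${KUBECTL:-/opt/kube/bin/kubectl}"

wait_dashboard() {
    # Names match the two Deployments in dashboard-v2.3.1.yaml.
    "$KUBECTL" wait deployment/kubernetes-dashboard deployment/dashboard-metrics-scraper \
        -n kubernetes-dashboard --for=condition=Available --timeout=180s
}
```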

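Content of the Dashboard YAML file (you can also download it from the official site). Compared with the upstream recommended.yaml, the Service here is changed to type NodePort with nodePort 30002, and both images point to the Harbor registry: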

```
root@master1:~# cat dashboard-v2.3.1.yaml
# Copyright 2017 The Kubernetes Authors.
#
# Licensed under the Apache License, Version 2.0 (the "License");
# you may not use this file except in compliance with the License.
# You may obtain a copy of the License at
#
#     http://www.apache.org/licenses/LICENSE-2.0
#
# Unless required by applicable law or agreed to in writing, software
# distributed under the License is distributed on an "AS IS" BASIS,
# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
# See the License for the specific language governing permissions and
# limitations under the License.

apiVersion: v1
kind: Namespace
metadata:
  name: kubernetes-dashboard

---

apiVersion: v1
kind: ServiceAccount
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard
  namespace: kubernetes-dashboard

---

kind: Service
apiVersion: v1
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard
  namespace: kubernetes-dashboard
spec:
  type: NodePort
  ports:
    - port: 443
      targetPort: 8443
      nodePort: 30002
  selector:
    k8s-app: kubernetes-dashboard

---

apiVersion: v1
kind: Secret
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard-certs
  namespace: kubernetes-dashboard
type: Opaque

---

apiVersion: v1
kind: Secret
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard-csrf
  namespace: kubernetes-dashboard
type: Opaque
data:
  csrf: ""

---

apiVersion: v1
kind: Secret
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard-key-holder
  namespace: kubernetes-dashboard
type: Opaque

---

kind: ConfigMap
apiVersion: v1
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard-settings
  namespace: kubernetes-dashboard

---

kind: Role
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard
  namespace: kubernetes-dashboard
rules:
  # Allow Dashboard to get, update and delete Dashboard exclusive secrets.
  - apiGroups: [""]
    resources: ["secrets"]
    resourceNames: ["kubernetes-dashboard-key-holder", "kubernetes-dashboard-certs", "kubernetes-dashboard-csrf"]
    verbs: ["get", "update", "delete"]
    # Allow Dashboard to get and update 'kubernetes-dashboard-settings' config map.
  - apiGroups: [""]
    resources: ["configmaps"]
    resourceNames: ["kubernetes-dashboard-settings"]
    verbs: ["get", "update"]
    # Allow Dashboard to get metrics.
  - apiGroups: [""]
    resources: ["services"]
    resourceNames: ["heapster", "dashboard-metrics-scraper"]
    verbs: ["proxy"]
  - apiGroups: [""]
    resources: ["services/proxy"]
    resourceNames: ["heapster", "http:heapster:", "https:heapster:", "dashboard-metrics-scraper", "http:dashboard-metrics-scraper"]
    verbs: ["get"]

---

kind: ClusterRole
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard
rules:
  # Allow Metrics Scraper to get metrics from the Metrics server
  - apiGroups: ["metrics.k8s.io"]
    resources: ["pods", "nodes"]
    verbs: ["get", "list", "watch"]

---

apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard
  namespace: kubernetes-dashboard
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: Role
  name: kubernetes-dashboard
subjects:
  - kind: ServiceAccount
    name: kubernetes-dashboard
    namespace: kubernetes-dashboard

---

apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: kubernetes-dashboard
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: kubernetes-dashboard
subjects:
  - kind: ServiceAccount
    name: kubernetes-dashboard
    namespace: kubernetes-dashboard

---

kind: Deployment
apiVersion: apps/v1
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard
  namespace: kubernetes-dashboard
spec:
  replicas: 1
  revisionHistoryLimit: 10
  selector:
    matchLabels:
      k8s-app: kubernetes-dashboard
  template:
    metadata:
      labels:
        k8s-app: kubernetes-dashboard
    spec:
      containers:
        - name: kubernetes-dashboard
          image: harbor.magedu.net/baseimages/dashboard:v2.3.1
          imagePullPolicy: Always
          ports:
            - containerPort: 8443
              protocol: TCP
          args:
            - --auto-generate-certificates
            - --namespace=kubernetes-dashboard
            # Uncomment the following line to manually specify Kubernetes API server Host
            # If not specified, Dashboard will attempt to auto discover the API server and connect
            # to it. Uncomment only if the default does not work.
            # - --apiserver-host=http://my-address:port
          volumeMounts:
            - name: kubernetes-dashboard-certs
              mountPath: /certs
              # Create on-disk volume to store exec logs
            - mountPath: /tmp
              name: tmp-volume
          livenessProbe:
            httpGet:
              scheme: HTTPS
              path: /
              port: 8443
            initialDelaySeconds: 30
            timeoutSeconds: 30
          securityContext:
            allowPrivilegeEscalation: false
            readOnlyRootFilesystem: true
            runAsUser: 1001
            runAsGroup: 2001
      volumes:
        - name: kubernetes-dashboard-certs
          secret:
            secretName: kubernetes-dashboard-certs
        - name: tmp-volume
          emptyDir: {}
      serviceAccountName: kubernetes-dashboard
      nodeSelector:
        "kubernetes.io/os": linux
      # Comment the following tolerations if Dashboard must not be deployed on master
      tolerations:
        - key: node-role.kubernetes.io/master
          effect: NoSchedule

---

kind: Service
apiVersion: v1
metadata:
  labels:
    k8s-app: dashboard-metrics-scraper
  name: dashboard-metrics-scraper
  namespace: kubernetes-dashboard
spec:
  ports:
    - port: 8000
      targetPort: 8000
  selector:
    k8s-app: dashboard-metrics-scraper

---

kind: Deployment
apiVersion: apps/v1
metadata:
  labels:
    k8s-app: dashboard-metrics-scraper
  name: dashboard-metrics-scraper
  namespace: kubernetes-dashboard
spec:
  replicas: 1
  revisionHistoryLimit: 10
  selector:
    matchLabels:
      k8s-app: dashboard-metrics-scraper
  template:
    metadata:
      labels:
        k8s-app: dashboard-metrics-scraper
      annotations:
        seccomp.security.alpha.kubernetes.io/pod: 'runtime/default'
    spec:
      containers:
        - name: dashboard-metrics-scraper
          image: harbor.magedu.net/baseimages/metrics-scraper:v1.0.6
          ports:
            - containerPort: 8000
              protocol: TCP
          livenessProbe:
            httpGet:
              scheme: HTTP
              path: /
              port: 8000
            initialDelaySeconds: 30
            timeoutSeconds: 30
          volumeMounts:
            - mountPath: /tmp
              name: tmp-volume
          securityContext:
            allowPrivilegeEscalation: false
            readOnlyRootFilesystem: true
            runAsUser: 1001
            runAsGroup: 2001
      serviceAccountName: kubernetes-dashboard
      nodeSelector:
        "kubernetes.io/os": linux
      # Comment the following tolerations if Dashboard must not be deployed on master
      tolerations:
        - key: node-role.kubernetes.io/master
          effect: NoSchedule
      volumes:
        - name: tmp-volume
          emptyDir: {}
root@master1:~#
```
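Because the Service above is of type NodePort, the Dashboard is reachable on any node at the nodePort. A sketch that reads the port back from the cluster instead of hard-coding 30002; `dashboard_url` and `KUBECTL` are hypothetical names.

```sh
# Hypothetical helper: build the Dashboard login URL from a node IP and the Service's nodePort.
KUBECTL="${KUBECTL:-/opt/kube/bin/kubectl}"

dashboard_url() {
    node_ip="$1"
    # First (and only) port of the kubernetes-dashboard Service; 30002 in the YAML above.
    port=$("$KUBECTL" get svc kubernetes-dashboard -n kubernetes-dashboard \
        -o jsonpath='{.spec.ports[0].nodePort}') || return 1
    # HTTPS: the Dashboard serves a self-signed certificate (--auto-generate-certificates).
    echo "https://${node_ip}:${port}"
}
```

Usage: `dashboard_url 192.168.11.130`. Expect a browser warning about the self-signed certificate.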

Content of admin-user.yml:

```
root@master1:~# cat admin-user.yml
apiVersion: v1
kind: ServiceAccount
metadata:
  name: admin-user
  namespace: kubernetes-dashboard

---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: admin-user
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: cluster-admin
subjects:
- kind: ServiceAccount
  name: admin-user
  namespace: kubernetes-dashboard

root@master1:~#
```
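The ClusterRoleBinding grants admin-user the built-in cluster-admin role, that is, full control of the cluster. `kubectl auth can-i` with impersonation is one way to confirm the binding took effect. A sketch; `check_admin_user` and `KUBECTL` are hypothetical names.

```sh
# Hypothetical check: can the admin-user ServiceAccount do everything?
KUBECTL="${KUBECTL:-/opt/kube/bin/kubectl}"

check_admin_user() {
    # '*' '*' means any verb on any resource; --as impersonates the ServiceAccount.
    answer=$("$KUBECTL" auth can-i '*' '*' \
        --as=system:serviceaccount:kubernetes-dashboard:admin-user)
    # kubectl prints "yes" or "no"; anything other than "yes" counts as failure.
    [ "$answer" = "yes" ]
}
```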

Get the token and log in:
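The token can also be printed directly, without copying the random secret name by hand. A sketch that relies on Kubernetes v1.21 behaviour, where every ServiceAccount gets an auto-created token Secret listed under `.secrets`; from v1.24 that Secret is no longer created, and `kubectl create token admin-user -n kubernetes-dashboard` is the replacement. `dashboard_token` and `KUBECTL` are hypothetical names.

```sh
# Hypothetical helper: print the admin-user login token (Kubernetes <= v1.23 behaviour).
KUBECTL="${KUBECTL:-/opt/kube/bin/kubectl}"

dashboard_token() {
    ns=kubernetes-dashboard
    # Name of the auto-created token Secret, e.g. admin-user-token-kth6z.
    secret=$("$KUBECTL" get sa admin-user -n "$ns" -o jsonpath='{.secrets[0].name}') || return 1
    # Secret data is base64-encoded; decode it to get the bearer token.
    "$KUBECTL" get secret "$secret" -n "$ns" -o jsonpath='{.data.token}' | base64 -d
}
```

Paste the printed token into the "Token" field of the Dashboard login page.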

That's all for now. Adding nodes and the remaining topics will be covered in a later post, so stay tuned.