Setup: small 64*64*3 images (12 KB each), batchSize=128, 1 million training samples.

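Loading everything into memory at once is more than the machine can handle, and a single full load takes the better part of a day.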
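A quick back-of-the-envelope check of the figures above. It assumes one byte per channel value (uint8), which matches the stated 12 KB per image; the variable names are only for illustration.

```python
# Rough memory estimate, assuming uint8 pixels (1 byte per channel value).
height, width, channels = 64, 64, 3
num_samples = 1000000
batch_size = 128

bytes_per_image = height * width * channels      # 12288 bytes = 12 KB
total_bytes = bytes_per_image * num_samples
total_gib = total_bytes / float(2 ** 30)

# How many complete batches fit, and how many samples remain after them.
full_batches = num_samples // batch_size
leftover = num_samples % batch_size

print("bytes per image: %d" % bytes_per_image)
print("raw pixel data: %.1f GiB" % total_gib)
print("full batches: %d, leftover samples: %d" % (full_batches, leftover))
```

The raw pixels alone come to roughly 11.4 GiB before any labels or Python object overhead are counted.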

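Reading one batch at a time during training instead: on an Aliyun (Alibaba Cloud) server, each batch takes anywhere from 1.9 to 3.2 s to read, while one training iteration takes a little over 2 s.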

Because each sample has multiple labels, Caffe's built-in LMDB conversion can't be used. The input is a Python layer I wrote myself, so I tried pickle:

```python
# Python 2 script: pack images and their ROI labels into one pickle file per batch.
# Usage: python <script> <InputSize> <BatchSize>
import os, sys
import cv2
import numpy as np
import numpy.random as npr
import cPickle as pickle

wk_dir = "/Users/xxx/wkspace/caffe_space/detection/caffe/data/1103reg64/"
InputSize = int(sys.argv[1])
BatchSize = int(sys.argv[2])
trainfile = "train.txt"
testfile = "test.txt"
print "gen imdb for net input:", InputSize, "batchSize:", BatchSize

with open(wk_dir+trainfile, 'r') as f:
    trainlines = f.readlines()
with open(wk_dir+testfile, 'r') as f:
    testlines = f.readlines()

#######################################
# we separate train data by batchsize #
#######################################
to_dir = wk_dir + "/trainIMDB/"
if not os.path.isdir(to_dir):
    os.makedirs(to_dir)

train_list = []
cur_ = 0
sum_ = len(trainlines)
for line in trainlines:
    cur_ += 1
    words = line.split()
    image_file_name = words[0]
    im = cv2.imread(wk_dir + image_file_name)
    h,w,ch = im.shape
    if h!=InputSize or w!=InputSize:
        im = cv2.resize(im,(InputSize,InputSize))
    # columns 2..5 of each line hold the four ROI values
    roi = [float(words[2]),float(words[3]),float(words[4]),float(words[5])]
    train_list.append([im, roi])
    # flush one file per full batch, then reuse the list
    if (cur_ % BatchSize == 0):
        print "write batch:" , cur_/BatchSize
        fid = open(to_dir +'train'+ str(BatchSize) + '_'+str(cur_/BatchSize),'wb')
        pickle.dump(train_list, fid)
        fid.close()
        train_list[:] = []
# samples left over after the last full batch are not written
print len(train_list), "train data generated "

###########################
# tests                   #
###########################
to_dir = wk_dir + "/testIMDB/"
if not os.path.isdir(to_dir):
    os.makedirs(to_dir)

test_list = []
cur_ = 0
sum_ = len(testlines)
for line in testlines:
    cur_ += 1
    words = line.split()
    image_file_name = words[0]
    im = cv2.imread(wk_dir + image_file_name)
    h,w,ch = im.shape
    if h!=InputSize or w!=InputSize:
        im = cv2.resize(im,(InputSize,InputSize))
    roi = [float(words[2]),float(words[3]),float(words[4]),float(words[5])]
    test_list.append([im, roi])
    if (cur_ % BatchSize == 0):
        print "write batch:", cur_ / BatchSize
        fid = open(to_dir +'test'+ str(BatchSize) + '_'+str(cur_/BatchSize), 'wb')
        pickle.dump(test_list, fid)
        fid.close()
        test_list[:] = []
print len(test_list), "test data generated "
```

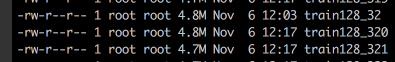

Each batch produces a 4.8 MB chunk, roughly 3 times the disk space taken by the 128 original images.
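One plausible reason for the size blow-up, offered as a guess rather than something measured here: in Python 2, `pickle.dump` defaults to the text-based protocol 0, which escapes many raw bytes, while the binary protocols store array data close to its raw size. The sketch below is written for Python 3 and uses random fake images, so the exact ratio will differ from the 3x seen above; `batch_sizes` is a hypothetical helper.

```python
import pickle
import numpy as np

def batch_sizes(batch_size=128, input_size=64, seed=0):
    """Return (raw, protocol-0, binary-protocol) sizes in bytes for one fake batch."""
    rng = np.random.RandomState(seed)
    batch = []
    for _ in range(batch_size):
        # Random pixels stand in for real images (an assumption for illustration).
        im = rng.randint(0, 256, size=(input_size, input_size, 3)).astype(np.uint8)
        roi = [0.1, 0.2, 0.3, 0.4]  # placeholder label values
        batch.append([im, roi])
    raw = sum(im.nbytes for im, _ in batch)
    text = len(pickle.dumps(batch, protocol=0))
    binary = len(pickle.dumps(batch, protocol=pickle.HIGHEST_PROTOCOL))
    return raw, text, binary

raw, text, binary = batch_sizes()
print("raw pixels:      %d bytes" % raw)
print("protocol 0:      %d bytes" % text)
print("binary protocol: %d bytes" % binary)
```

If the protocol really is the cause, passing `pickle.HIGHEST_PROTOCOL` as the third argument to `pickle.dump` in the generator script would be the thing to try.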

Reading these chunks during training, the per-batch time on Aliyun drops to 0.2 s, roughly a 10x speedup.
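For reference, reading one chunk back might look like the sketch below. It is not the actual Python layer from this post: `load_batch` is a hypothetical helper, the code is written for Python 3, and it assumes each file holds a list of `[image, roi]` pairs as produced by the generator script.

```python
import os
import pickle
import tempfile
import numpy as np

def load_batch(path):
    """Load one pickled batch and return (images, rois) as float32 arrays."""
    with open(path, "rb") as f:
        batch = pickle.load(f)  # list of [image, roi] pairs
    images = np.stack([im for im, _ in batch]).astype(np.float32)
    rois = np.array([roi for _, roi in batch], dtype=np.float32)
    return images, rois

# Usage with a tiny fake batch written in the same layout as the generator script.
fake = [[np.zeros((64, 64, 3), dtype=np.uint8), [0.1, 0.2, 0.3, 0.4]]
        for _ in range(4)]
path = os.path.join(tempfile.mkdtemp(), "train4_1")
with open(path, "wb") as f:
    pickle.dump(fake, f)

images, rois = load_batch(path)
print(images.shape, rois.shape)
```

Caffe blobs are laid out as N x C x H x W, so a real layer would probably also transpose the image axes before copying into the top blob. Files written by Python 2 generally need `pickle.load(f, encoding="latin1")` when read from Python 3.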

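My Mac has an SSD, so reading the images directly was already fast: 0.05 s per batch. After switching to pickle it actually got slower, taking 0.2 s to load one batch.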
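To compare the two approaches on a given machine, a small timing helper is enough. This is a minimal sketch for Python 3; `time_call` and the fake batch are made up for illustration, and the numbers it prints say nothing about the measurements above.

```python
import os
import pickle
import tempfile
import time
import numpy as np

def time_call(fn, repeats=5):
    """Return the mean wall-clock seconds of calling fn() `repeats` times."""
    start = time.perf_counter()
    for _ in range(repeats):
        fn()
    return (time.perf_counter() - start) / repeats

# Write one fake 128-image batch so there is something to load.
batch = [[np.zeros((64, 64, 3), dtype=np.uint8), [0.0, 0.0, 1.0, 1.0]]
         for _ in range(128)]
path = os.path.join(tempfile.mkdtemp(), "train128_1")
with open(path, "wb") as f:
    pickle.dump(batch, f)

def load_pickle():
    with open(path, "rb") as f:
        return pickle.load(f)

seconds = time_call(load_pickle)
print("mean load time: %.4f s per batch" % seconds)
```

Repeated reads of the same file are usually served from the OS page cache, so a fair disk comparison should time files that have not been read recently.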