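Before the main text, here are two bugs I ran into in Axiom3D.

1. When launching a program built with Axiom, I noticed the log always reported that attributes such as "billboard_type" and "billboard_origin" could not be parsed. At first I wondered whether the file format version was out of date, but after checking I found that parser classes for these attributes do exist. Comparing against the corresponding Ogre code, I found that ParticleSystemRenderer uses multiple inheritance in Ogre, which C# does not support. However, ScriptableObject itself derives from DisposableObject, so it is enough to change ParticleSystemRenderer to derive from ScriptableObject instead of DisposableObject, and then change the SetParameter method in the BillboardParticleRenderer class as follows: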

    public override bool SetParameter(string attr, string val)
    {
        try
        {
            Properties[attr] = val;
            return true;
        }
        catch (Exception e)
        {
            LogManager.Instance.Write(e.Message);
            return false;
        }
        //if ( this.attribParsers.ContainsKey( attr ) )
        //{
        //    var args = new object[2];
        //    args[ 0 ] = val.Split( ' ' );
        //    args[ 1 ] = this;
        //    this.attribParsers[ attr ].Invoke( null, args );
        //    //ParticleSystemRendererAttributeParser parser =
        //    //    (ParticleSystemRendererAttributeParser)attribParsers[attr];
        //    //// call the parser method
        //    //parser(val.Split(' '), this);
        //    return true;
        //}
        //return false;
    }

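A quick note on what the change means. The original lookup was written for attribute-tagged parser methods, but BillboardParticleRenderer contains only attribute-tagged nested classes. The inheritance change plus this code lets BillboardParticleRenderer look up its own attribute classes and find the matching setter.

2. This bug concerns ManualObject. While setting up a ManualObject I hit an odd problem: the colors I set had no effect. I first suspected the material or the lighting. After ruling all of those out, I traced it to the CopyTempVertexToBuffer method in ManualObject, where one piece of logic is wrong. The fix is as follows: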

    protected virtual void CopyTempVertexToBuffer()
    {
        this.tempVertexPending = false;
        var rop = this.currentSection.RenderOperation;

        if (rop.vertexData.vertexCount == 0 && !this.currentUpdating)
        {
            // first vertex, autoorganise decl
            var oldDcl = rop.vertexData.vertexDeclaration;
            rop.vertexData.vertexDeclaration = oldDcl.GetAutoOrganizedDeclaration(false, false);
            HardwareBufferManager.Instance.DestroyVertexDeclaration(oldDcl);
        }

        ResizeTempVertexBufferIfNeeded(++rop.vertexData.vertexCount);

        var elemList = rop.vertexData.vertexDeclaration.Elements;

    #if !AXIOM_SAFE_ONLY
        unsafe
    #endif
        {
            // get base pointer: byte position of the current vertex in the temp buffer
            var buf = BufferBase.Wrap(this.tempVertexBuffer);
            var basePtr = this.declSize * (rop.vertexData.vertexCount - 1);
            //var pFloat = buf.ToFloatPointer();
            //var pRGBA = buf.ToUIntPointer();
            foreach (var elem in elemList)
            {
                // Move to this element's byte offset within the vertex. The pointer is
                // reset from basePtr each time so that offsets do not accumulate when
                // the declaration has three or more elements.
                buf.Ptr = basePtr + elem.Offset;
                var pFloat = buf.ToFloatPointer();
                var pRGBA = buf.ToUIntPointer();
                var idx = 0; // index relative to the element start, not a byte count
                RenderSystem rs;
                int dims;
                switch (elem.Semantic)
                {
                    case VertexElementSemantic.Position:
                        pFloat[idx++] = this.tempVertex.position.x;
                        pFloat[idx++] = this.tempVertex.position.y;
                        pFloat[idx] = this.tempVertex.position.z;
                        break;
                    case VertexElementSemantic.Normal:
                        pFloat[idx++] = this.tempVertex.normal.x;
                        pFloat[idx++] = this.tempVertex.normal.y;
                        pFloat[idx] = this.tempVertex.normal.z;
                        break;
                    case VertexElementSemantic.TexCoords:
                        dims = VertexElement.GetTypeCount(elem.Type);
                        for (var t = 0; t < dims; ++t)
                        {
                            pFloat[idx++] = this.tempVertex.texCoord[elem.Index][t];
                        }
                        break;
                    case VertexElementSemantic.Diffuse:
                        rs = Root.Instance.RenderSystem;
                        if (rs != null)
                        {
                            pRGBA[idx] = (uint)rs.ConvertColor(this.tempVertex.color);
                        }
                        else
                        {
                            pRGBA[idx] = (uint)this.tempVertex.color.ToRGBA(); // pick one!
                        }
                        break;
                    default:
                        // nop ?
                        break;
                }
            }
        }
    }

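In short, this is similar to the earlier terrain height generation bug: a byte count was being used directly as an array index. The components in ManualObject are all 4 bytes wide, so a simpler alternative would be to set idx = elem.Offset / 4, which should also be correct. I don't think that is a very good fix, though, so I went with the approach shown above.

Now for the main text, starting with ManualObject. ManualObject is Ogre's imitation of OpenGL's immediate mode, but it is fundamentally different from it. Only the calling style looks like immediate mode; underneath, it still uses hardware buffers. In other words, the API is comfortable for people used to immediate mode, and the efficiency of buffers is kept. Note that the required call order for some elements differs from OpenGL. Let's look at some code first. I copied it from the Ogre forum; converting the C++ to C# is very easy.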
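To make the "byte count used as an index" mistake concrete, here is a minimal standalone sketch. It does not use Axiom at all; the layout (a Float3 position at byte 0 followed by a packed color at byte 12) and every name in it are assumptions chosen only for illustration.

```csharp
using System;

// Standalone illustration; OffsetDemo and its members are hypothetical names.
public static class OffsetDemo
{
    // Assumed vertex layout: Position (3 floats, bytes 0..11), Diffuse (1 uint, bytes 12..15).
    public const int ColorByteOffset = 12;
    public const int VertexSizeBytes = 16;

    // Buggy variant: uses the byte offset directly as an index into 4-byte slots.
    // Returns false when the write would land outside the vertex.
    public static bool TryWriteColorBuggy(uint[] slots, uint rgba)
    {
        int idx = ColorByteOffset; // 12, but the vertex only has 4 slots
        if (idx >= slots.Length)
        {
            return false;
        }
        slots[idx] = rgba;
        return true;
    }

    // Fixed variant: converts the byte offset into a slot index first.
    public static void WriteColorFixed(uint[] slots, uint rgba)
    {
        slots[ColorByteOffset / sizeof(uint)] = rgba; // 12 / 4 = slot 3
    }
}
```

With a single 16-byte vertex viewed as four 32-bit slots, the buggy variant targets slot 12 and misses the vertex entirely, while the fixed variant writes the color into slot 3.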

    public static ManualObject CreateTetrahedron(Vector3 position, Single scale)
    {
        ManualObject manObTetra = new ManualObject("Tetrahedron");
        manObTetra.CastShadows = false;

        // render just before overlays (so all objects behind the transparent tetrahedron are visible)
        manObTetra.RenderQueueGroup = RenderQueueGroupID.Overlay - 1; // = 99

        Vector3[] c = new Vector3[4]; // corners

        // calculate corners of tetrahedron (with point of origin in middle of volume)
        Single mbot = scale * 0.2f;   // distance middle to bottom
        Single mtop = scale * 0.62f;  // distance middle to top
        Single mf = scale * 0.289f;   // distance middle to front
        Single mb = scale * 0.577f;   // distance middle to back
        Single mlr = scale * 0.5f;    // distance middle to left right

        //               width / height / depth
        c[0] = new Vector3(-mlr, -mbot, mf);  // left bottom front
        c[1] = new Vector3(mlr, -mbot, mf);   // right bottom front
        c[2] = new Vector3(0, -mbot, -mb);    // (middle) bottom back
        c[3] = new Vector3(0, mtop, 0);       // (middle) top (middle)

        // add position offset for all corners (move tetrahedron)
        for (Int16 i = 0; i <= 3; i++)
            c[i] += position;

        // create bottom
        manObTetra.Begin("floor", OperationType.TriangleList);
        manObTetra.Position(c[2]);
        manObTetra.Color(ColorEx.Red);
        manObTetra.Position(c[1]);
        manObTetra.Position(c[0]);
        manObTetra.Triangle(0, 1, 2);
        manObTetra.End();

        // create right back side
        manObTetra.Begin("edm/floor", OperationType.TriangleList);
        manObTetra.Position(c[1]);
        manObTetra.Color(ColorEx.Green);
        manObTetra.Position(c[2]);
        manObTetra.Position(c[3]);
        manObTetra.Triangle(0, 1, 2);
        manObTetra.End();

        // create left back side
        manObTetra.Begin("edm/floor", OperationType.TriangleList);
        manObTetra.Position(c[3]);
        manObTetra.Color(ColorEx.Green);
        manObTetra.Position(c[2]);
        manObTetra.Color(ColorEx.Red);
        manObTetra.Position(c[0]);
        manObTetra.Color(ColorEx.Blue);
        manObTetra.Triangle(0, 1, 2);
        manObTetra.End();

        // create front side
        manObTetra.Begin("BaseWhiteNoLighting", OperationType.TriangleList);
        manObTetra.Color(ColorEx.White);
        manObTetra.Position(c[0]);
        manObTetra.Position(c[1]);
        manObTetra.Position(c[3]);
        manObTetra.Triangle(0, 1, 2);
        manObTetra.End();

        return manObTetra;
    }

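This is also the code in which I discovered the bug that made colors in ManualObject unusable. Looking at the ManualObject source, there are a few things to watch out for. After Begin() is called, ManualObject builds the vertex declaration from the calls made between the first vertex and the second. In short:

1. After calling Begin, call Position first. Calling Color or Normal before the first Position makes the actual vertex data disagree with the declared elements.

2. The elements that appear between the first and the second Position call define the vertex declaration of this buffer. For example, if only Color appears between them, a Normal call made after the second Position has no effect.

3. This part is somewhat like OpenGL's state machine. Once Color, Normal and so on have been called between the first and the second Position, they do not have to be called again for each later Position. If they are called, the new color or normal value is used. The reason is clear from the source: every Position, Color or Normal call simply updates the tempVertex field, which is of type TempVertex.

Here is the rendered result of the code above:

Next is another ManualObject, this time a cube. It differs a little from the one above; these are roughly the two ways of writing it.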
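The three rules can be summarised with a toy model. This is only a sketch of the behaviour described in the list, not Axiom's actual implementation: ToyManualObject and all of its members are hypothetical, and where the real class ends up with a mismatched layout, the toy simply reports the call as rejected.

```csharp
using System;
using System.Collections.Generic;

// Toy model of "the declaration is fixed between the first and second Position".
public sealed class ToyManualObject
{
    private readonly List<string> declaration = new List<string>();
    private int positionCalls;
    private bool declarationLocked;

    public IReadOnlyList<string> Declaration
    {
        get { return declaration; }
    }

    public void Position()
    {
        positionCalls++;
        if (positionCalls == 1)
        {
            declaration.Add("Position"); // rule 1: Position opens the declaration
        }
        else
        {
            declarationLocked = true;    // rule 2: the second Position freezes it
        }
    }

    public bool Color()
    {
        return Declare("Diffuse");
    }

    public bool Normal()
    {
        return Declare("Normal");
    }

    // Returns true when the call takes effect.
    private bool Declare(string semantic)
    {
        if (declaration.Contains(semantic))
        {
            return true;  // rule 3: already declared, the call only updates the current value
        }
        if (positionCalls == 0 || declarationLocked)
        {
            return false; // too early (rule 1) or too late (rule 2)
        }
        declaration.Add(semantic);
        return true;
    }
}
```

Calling Position, Color, Position in that order leaves a declaration of Position plus Diffuse; a later Normal call is rejected, while later Color calls are still accepted.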

    public static ManualObject CreateCube(Vector3 center, float length, bool bLine = false)
    {
        string material = "BaseWhiteNoLighting";
        ManualObject manual = new ManualObject("manualCube");
        Vector3[] positions = new Vector3[]{
            center + new Vector3(length, length, length),
            center + new Vector3(-length, length, length),
            center + new Vector3(-length, -length, length),
            center + new Vector3(length, -length, length),
            center + new Vector3(length, length, -length),
            center + new Vector3(-length, length, -length),
            center + new Vector3(-length, -length, -length),
            center + new Vector3(length, -length, -length)
        };
        ushort[] indexs = new ushort[]{
            0,1,2,2,3,0,
            0,3,7,7,4,0,
            0,4,5,5,1,0,
            6,5,4,4,7,6,
            6,2,1,1,5,6,
            6,7,3,3,2,6
        };
        if (bLine)
            manual.Begin(material, OperationType.LineList);
        else
            manual.Begin(material, OperationType.TriangleList);
        for (int i = 0; i < positions.Length; i++)
        {
            manual.Position(positions[i]);
        }
        for (int i = 0; i < indexs.Length; i++)
        {
            manual.Index(indexs[i]);
        }
        manual.End();
        return manual;
    }

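Note that to let triangles and lines share the same index list, the middle two of every six indices are identical. In line mode that pair produces no line, while in triangle mode the triangles are still generated correctly. Also note that Ogre uses counter-clockwise winding as well. I won't post a screenshot; you can picture what it looks like.

In fact ManualObject and Mesh are not very different: both store vertex data and indices, and ManualObject has a ConvertToMesh method that turns it directly into a Mesh. Keep in mind, though, that ManualObject derives from MovableObject and can be attached to a Node directly, whereas a Mesh is only a resource that must first be wrapped in an Entity and then attached to a Node. This makes Mesh more complex, but it can also do and carry more.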
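The shared index list can be checked on paper with a small helper. This is a standalone sketch with hypothetical names; it only pairs up indices the way a line list would and drops pairs whose two ends are the same vertex, which is the case the paragraph above relies on.

```csharp
using System;
using System.Collections.Generic;

// Standalone illustration; SharedIndexDemo is a hypothetical name.
public static class SharedIndexDemo
{
    // Reads the indices two at a time, as a line list would, and keeps only
    // the segments whose end points differ (a == b has zero length).
    public static List<Tuple<int, int>> VisibleSegments(ushort[] indices)
    {
        var segments = new List<Tuple<int, int>>();
        for (int i = 0; i + 1 < indices.Length; i += 2)
        {
            if (indices[i] != indices[i + 1])
            {
                segments.Add(Tuple.Create((int)indices[i], (int)indices[i + 1]));
            }
        }
        return segments;
    }
}
```

For the first face of the cube, `0,1,2,2,3,0`, the pairs are (0,1), (2,2) and (3,0), so the helper returns the two segments 0-1 and 3-0 and discards the middle pair.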

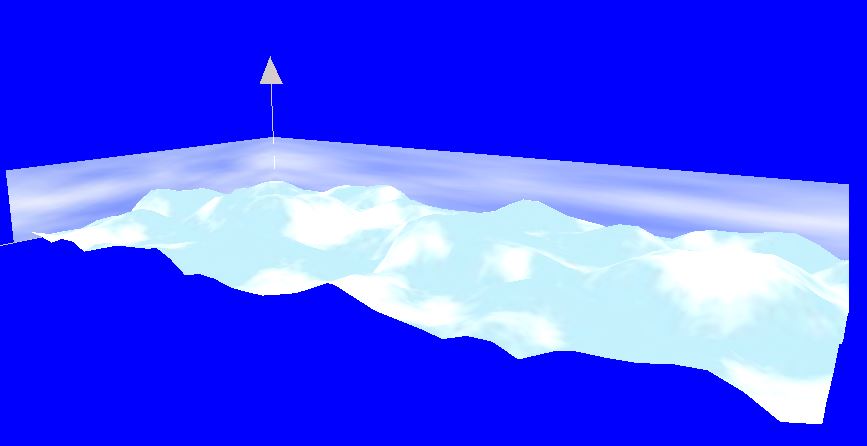

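Next we use Mesh to build a custom model that shows a 3D coordinate axis. The XY and YZ planes are for display and can take different materials, while the XZ plane shows fluctuating 3D data. Here we simulate that data with Cg and 3D noise, following the process in the earlier post (柏林噪声实践(二) 水与火,顶点纹理拾取, "Perlin Noise in Practice (2): Water and Fire, Vertex Texture Fetch"). The final result looks roughly like this:

Figure 2:

The code has two parts. One part builds the axes, which mainly uses Mesh. The other part is the data that changes every frame, which uses Cg shader code. Remember to add the plugin that parses Cg shaders.

Here is the code that builds the whole axis model: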

    public class Axis3D
    {
        public Mesh Self { get; set; }
        public Vector3 range;

        public Axis3D(string name, Vector3 range)
        {
            this.range = range;
            Mesh mesh = MeshManager.Instance.CreateManual(name, "General", null);

            float arrow1 = 30.0f;
            float arrow2 = 20.0f;
            float arrow3 = 3.0f;
            var vertices = new float[]{
                0.0f,0.0f,0.0f,//Origin
                range.x,0.0f,0.0f,//x
                0.0f,range.y,0.0f,//y
                0.0f,0.0f,range.z,//z
                range.x,range.y,0.0f,//xy plane
                range.x,0.0f,range.z,//xz plane
                0.0f,range.y,range.z,//yz plane
                range.x,range.y,range.z,//origin offset
                range.x+arrow1,0.0f,0.0f,
                range.x+arrow2,0.0f,-arrow3,
                range.x+arrow2,0.0f,arrow3,
                0.0f,range.y+arrow1,0.0f,
                -arrow3,range.y+arrow2,arrow3,
                arrow3,range.y+arrow2,-arrow3,
                0.0f,0.0f,range.z + arrow1,
                arrow3,0.0f,range.z+arrow2,
                -arrow3,0.0f,range.z+arrow2
            };
            mesh.SharedVertexData = new VertexData();
            mesh.SharedVertexData.vertexCount = 17;
            var decl = mesh.SharedVertexData.vertexDeclaration;
            decl.AddElement(0, 0, VertexElementType.Float3, VertexElementSemantic.Position);
            var vbuf = HardwareBufferManager.Instance.CreateVertexBuffer(decl.Clone(0),
                mesh.SharedVertexData.vertexCount, BufferUsage.StaticWriteOnly);
            vbuf.WriteData(0, vbuf.Size, vertices, true);
            mesh.SharedVertexData.vertexBufferBinding.SetBinding(0, vbuf);

            SubMesh subMeshAxes = mesh.CreateSubMesh("axes");
            short[] indexs = new short[]{
                0,8,
                0,11,
                0,14,
            };
            HardwareIndexBuffer ibuf = HardwareBufferManager.Instance.CreateIndexBuffer(IndexType.Size16,
                indexs.Length, BufferUsage.StaticWriteOnly);
            ibuf.WriteData(0, ibuf.Size, indexs, true);
            subMeshAxes.useSharedVertices = true;
            subMeshAxes.indexData.indexBuffer = ibuf;
            subMeshAxes.indexData.indexCount = indexs.Length;
            subMeshAxes.indexData.indexStart = 0;
            subMeshAxes.OperationType = OperationType.LineList;

            SubMesh subMeshArrow = mesh.CreateSubMesh("arrow");
            indexs = new short[]{
                8,9,10,
                11,12,13,
                14,15,16
            };
            ibuf = HardwareBufferManager.Instance.CreateIndexBuffer(IndexType.Size16,
                indexs.Length, BufferUsage.StaticWriteOnly);
            ibuf.WriteData(0, ibuf.Size, indexs, true);
            subMeshArrow.useSharedVertices = true;
            subMeshArrow.indexData.indexBuffer = ibuf;
            subMeshArrow.indexData.indexCount = indexs.Length;
            subMeshArrow.indexData.indexStart = 0;
            subMeshArrow.OperationType = OperationType.TriangleList;

            SubMesh subMeshPlaneXY = mesh.CreateSubMesh("planeXY");
            CreatePlaneXY(subMeshPlaneXY);

            SubMesh subMeshPlaneYZ = mesh.CreateSubMesh("planeYZ");
            CreatePlaneYZ(subMeshPlaneYZ);

            SubMesh subMeshPlaneXZ = mesh.CreateSubMesh("planeXZ");
            CreateRenderPlane(subMeshPlaneXZ);

            mesh.BoundingBox = new AxisAlignedBox(Vector3.Zero, range);
            mesh.BoundingSphereRadius = range.Length;
            mesh.Load();
            Self = mesh;
        }

        private void CreateAxis(SubMesh subMesh)
        {
            subMesh.useSharedVertices = false;
            subMesh.vertexData = new VertexData();
        }

        private void CreatePlaneXY(SubMesh subMesh)
        {
            int verticeLong = 4;
            var vertices = new float[]{
                0.0f,0.0f,0.0f,//Origin
                0.0f,0.0f,
                range.x,0.0f,0.0f,//x
                1.0f,0.0f,
                0.0f,range.y,0.0f,//y
                0.0f,1.0f,
                range.x,range.y,0.0f,//xy plane
                1.0f,1.0f,
            };
            subMesh.useSharedVertices = false;
            subMesh.vertexData = new VertexData();
            subMesh.vertexData.vertexCount = verticeLong;
            var decl = subMesh.vertexData.vertexDeclaration;
            decl.AddElement(0, 0, VertexElementType.Float3, VertexElementSemantic.Position);
            decl.AddElement(0, VertexElement.GetTypeSize(VertexElementType.Float3),
                VertexElementType.Float2, VertexElementSemantic.TexCoords, 0);
            var buf = HardwareBufferManager.Instance.CreateVertexBuffer(decl.Clone(0),
                verticeLong, BufferUsage.StaticWriteOnly);
            buf.WriteData(0, buf.Size, vertices);
            subMesh.vertexData.vertexBufferBinding.SetBinding(0, buf);

            var indexs = new short[]{
                0,1,3,
                3,2,0
            };
            var ibuf = HardwareBufferManager.Instance.CreateIndexBuffer(IndexType.Size16,
                indexs.Length, BufferUsage.StaticWriteOnly);
            ibuf.WriteData(0, ibuf.Size, indexs, true);
            subMesh.indexData.indexBuffer = ibuf;
            subMesh.indexData.indexCount = indexs.Length;
            subMesh.indexData.indexStart = 0;
            subMesh.OperationType = OperationType.TriangleList;
            subMesh.MaterialName = "axis3D/xy";
        }

        private void CreatePlaneYZ(SubMesh subMesh)
        {
            int verticeLong = 4;
            var vertices = new float[]{
                0.0f,0.0f,0.0f,//Origin
                0.0f,0.0f,
                0.0f,range.y,0.0f,//y
                0.0f,1.0f,
                0.0f,0.0f,range.z,//z
                1.0f,0.0f,
                0.0f,range.y,range.z,//yz plane
                1.0f,1.0f,
            };
            subMesh.useSharedVertices = false;
            subMesh.vertexData = new VertexData();
            subMesh.vertexData.vertexCount = verticeLong;
            var decl = subMesh.vertexData.vertexDeclaration;
            decl.AddElement(0, 0, VertexElementType.Float3, VertexElementSemantic.Position);
            decl.AddElement(0, VertexElement.GetTypeSize(VertexElementType.Float3),
                VertexElementType.Float2, VertexElementSemantic.TexCoords, 0);
            var buf = HardwareBufferManager.Instance.CreateVertexBuffer(decl.Clone(0),
                verticeLong, BufferUsage.StaticWriteOnly);
            buf.WriteData(0, buf.Size, vertices);
            subMesh.vertexData.vertexBufferBinding.SetBinding(0, buf);

            var indexs = new short[]{
                0,1,3,
                3,2,0
            };
            var ibuf = HardwareBufferManager.Instance.CreateIndexBuffer(IndexType.Size16,
                indexs.Length, BufferUsage.StaticWriteOnly);
            ibuf.WriteData(0, ibuf.Size, indexs, true);
            subMesh.indexData.indexBuffer = ibuf;
            subMesh.indexData.indexCount = indexs.Length;
            subMesh.indexData.indexStart = 0;
            subMesh.OperationType = OperationType.TriangleList;
            subMesh.MaterialName = "axis3D/yz";
        }

        public void Rendering(float delt)
        {
        }

        public void CreateRenderPlane(SubMesh subMesh)
        {
            var xc = 5.0f;
            var yc = 5.0f;
            var xr = (int)(range.x/xc) - 1;
            var yr = (int)(range.z/yc) - 1;
            var halfx = 0.0f;// xr * xc * 0.5f;
            var halfy = 0.0f;// yr * yc * 0.5f;
            var length = (xr + 1) * (yr + 1) * 3;
            float[] vertices = new float[length];
            int index = 0;
            for (int j = 0; j <= yr; j++)
            {
                for (int i = 0; i <= xr; i++)
                {
                    vertices[index++] = xc * i - halfx;
                    vertices[index++] = 0.0f;
                    vertices[index++] = yc * j - halfy;
                    //vertices[index++] = 0.3f;
                    //vertices[index++] = 0.3f;
                    //vertices[index++] = 0.3f;
                }
            }

            length = xr * yr * 6;
            int[] indexs = new int[length];
            index = 0;
            for (int j = 0; j < yr; j++)
            {
                for (int i = 0; i < xr; i++)
                {
                    indexs[index++] = (j + 0) * (xr + 1) + i;
                    indexs[index++] = (j + 1) * (xr + 1) + i;
                    indexs[index++] = (j + 0) * (xr + 1) + i + 1;

                    indexs[index++] = (j + 0) * (xr + 1) + i + 1;
                    indexs[index++] = (j + 1) * (xr + 1) + i;
                    indexs[index++] = (j + 1) * (xr + 1) + i + 1;
                }
            }

            subMesh.useSharedVertices = false;
            subMesh.vertexData = new VertexData();
            subMesh.vertexData.vertexCount = (xr + 1) * (yr + 1);
            var decl = subMesh.vertexData.vertexDeclaration;
            decl.AddElement(0, 0, VertexElementType.Float3, VertexElementSemantic.Position);
            //decl.AddElement(0, VertexElement.GetTypeSize(VertexElementType.Float3), VertexElementType.Float3, VertexElementSemantic., 0);
            var buf = HardwareBufferManager.Instance.CreateVertexBuffer(decl.Clone(0),
                subMesh.vertexData.vertexCount, BufferUsage.StaticWriteOnly);
            buf.WriteData(0, buf.Size, vertices);
            subMesh.vertexData.vertexBufferBinding.SetBinding(0, buf);

            var ibuf = HardwareBufferManager.Instance.CreateIndexBuffer(IndexType.Size32,
                indexs.Length, BufferUsage.StaticWriteOnly);
            ibuf.WriteData(0, ibuf.Size, indexs, true);
            subMesh.indexData.indexBuffer = ibuf;
            subMesh.indexData.indexCount = indexs.Length;
            subMesh.indexData.indexStart = 0;
            subMesh.OperationType = OperationType.TriangleList;
            subMesh.MaterialName = "noise3D";
        }
        //private void
    }

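We keep the axes simple: each one is just a line plus a triangle. The XY and YZ planes need different materials, so giving each its own SubMesh is the cleaner split. Note that a Mesh can own a vertex buffer (which every SubMesh can use directly) but has no index buffer. In other words, the Mesh itself does not draw anything; the SubMeshes do. Each SubMesh uses useSharedVertices to say whether it draws from the Mesh's shared vertex data or from its own, and in both cases the index buffer belongs to the SubMesh. This design is quite flexible: the file formats I can think of can all be converted into this Mesh structure.

In this axis model, the Mesh sets up the shared vertices, and the three lines and three triangles all use them. For vertex buffers, Ogre wraps the corresponding DirectX concepts directly: a VertexDeclaration and its VertexElement entries describe what a vertex is made of. The Color and Normal declared between the first and second Position in ManualObject earlier go through the same process, except that the VertexElement entries are created automatically from the methods you call. If a SubMesh states that it uses the shared vertices, it only needs to declare an index buffer. An index buffer does not need to describe the vertex layout; you only specify whether the indices are 32-bit or 16-bit and set a few other properties such as usage and operation type.

The XY and YZ planes do not use the shared vertex buffer, so each one has to create its own vertex buffer and index buffer. The process is the same as above, except that useSharedVertices must be set to false. Each of these SubMeshes can then specify its own MaterialName.

Last is the XZ plane. On this plane we create a grid of points and then let the Cg shader control the Y coordinate of each vertex. To make the coordinates fluctuate smoothly we use noise; for details see (柏林噪声实践(二) 水与火,顶点纹理拾取), so I won't repeat them here.

First, here is the corresponding material script: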
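As a rough guide to the 16-bit versus 32-bit choice, the sketch below picks the index width from the vertex count and computes the resulting buffer size. It is standalone and the names are hypothetical; it also assumes every 16-bit value is usable as an index, which may not hold if an API reserves the highest value.

```csharp
using System;

// Standalone illustration; IndexWidthDemo is a hypothetical name.
public static class IndexWidthDemo
{
    // 16-bit indices can address vertices 0..65535, so 32-bit indices are
    // only required once the vertex count exceeds 65536.
    public static bool NeedsIndex32(int vertexCount)
    {
        return vertexCount > ushort.MaxValue + 1;
    }

    // Size in bytes of an index buffer holding indexCount indices.
    public static int IndexBufferBytes(int indexCount, bool use32)
    {
        return indexCount * (use32 ? sizeof(uint) : sizeof(ushort));
    }
}
```

By this rule the 17 shared vertices of the axis model fit comfortably in 16-bit indices, which matches the `IndexType.Size16` buffers used for the lines and arrows.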
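The two AddElement calls for these planes describe an interleaved layout: a Float3 position followed by a Float2 texture coordinate. The standalone sketch below works out the byte offsets and stride of that layout and reads a texture coordinate back out of an interleaved float array. The class and member names are hypothetical and nothing here calls into Axiom.

```csharp
using System;

// Standalone illustration; VertexLayoutDemo is a hypothetical name.
public static class VertexLayoutDemo
{
    public const int Float3Bytes = 3 * sizeof(float); // 12
    public const int Float2Bytes = 2 * sizeof(float); // 8

    // The texture coordinate starts right after the position, which is why
    // the second element's offset is the size of a Float3.
    public const int PositionOffset = 0;
    public const int TexCoordOffset = PositionOffset + Float3Bytes; // 12
    public const int Stride = Float3Bytes + Float2Bytes;            // 20

    // Reads the u texture coordinate of the given vertex from interleaved data.
    public static float TexU(float[] interleaved, int vertex)
    {
        int floatsPerVertex = Stride / sizeof(float);      // 5
        int floatOffset = TexCoordOffset / sizeof(float);  // 3
        return interleaved[vertex * floatsPerVertex + floatOffset];
    }
}
```

Each vertex therefore occupies five floats, so the four-vertex plane arrays in the class above hold twenty floats each.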
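The grid construction for the XZ plane can be isolated from the buffer code. The sketch below follows the same vertex and index ordering as CreateRenderPlane but is a standalone helper with hypothetical names, so it can be checked with small grid sizes.

```csharp
using System;

// Standalone illustration; GridDemo is a hypothetical name.
public static class GridDemo
{
    // A grid of cols x rows quads has (cols + 1) * (rows + 1) vertices,
    // laid out row by row on the XZ plane with y = 0.
    public static float[] BuildVertices(int cols, int rows, float cell)
    {
        var v = new float[(cols + 1) * (rows + 1) * 3];
        int k = 0;
        for (int j = 0; j <= rows; j++)
        {
            for (int i = 0; i <= cols; i++)
            {
                v[k++] = cell * i; // x
                v[k++] = 0f;       // y, displaced later in the vertex shader
                v[k++] = cell * j; // z
            }
        }
        return v;
    }

    // Two triangles per quad, six indices each.
    public static int[] BuildIndices(int cols, int rows)
    {
        var idx = new int[cols * rows * 6];
        int stride = cols + 1; // vertices per row
        int k = 0;
        for (int j = 0; j < rows; j++)
        {
            for (int i = 0; i < cols; i++)
            {
                int a = j * stride + i;       // this row
                int b = (j + 1) * stride + i; // next row
                idx[k++] = a;
                idx[k++] = b;
                idx[k++] = a + 1;
                idx[k++] = a + 1;
                idx[k++] = b;
                idx[k++] = b + 1;
            }
        }
        return idx;
    }
}
```

For a grid of 2 by 1 quads this gives 6 vertices and 12 indices, and the first quad is indexed as `0,3,1,1,3,4`.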

    // CG Vertex shader definition
    vertex_program noise3D_VS cg
    {
        source v4.cg
        entry_point main
        profiles vp40
        default_params
        {
            param_named_auto mvp worldviewproj_matrix
            param_named_auto time2 frame_time
            param_named_auto time1 time 1
            param_named_auto time3 time_0_x 10.0
        }
    }


    fragment_program noise3D_PS cg
    {
        source v4.cg
        entry_point fragmentShader
        profiles fp30
    }

    material noise3D
    {
        technique
        {
            pass
            {
                cull_hardware none
                vertex_program_ref noise3D_VS
                {
                    //param_named_auto time custom 0
                }
                texture_unit
                {
                    texture cloud.jpg
                }
                fragment_program_ref noise3D_PS
                {
                }
                texture_unit
                {
                    texture cloud.jpg
                }
            }
        }
    }

The Cg shader code:

    #define ONE 0.00390625
    #define ONEHALF 0.001953125

    float fade(float t) {
        //return t*t*(3.0-2.0*t); // Old fade
        return t*t*t*(t*(t*6.0-15.0)+10.0); // Improved fade
    }

    float noise(float3 P,sampler2D permTexture)
    {
        float3 Pi = ONE*floor(P)+ONEHALF;
        float3 Pf = P-floor(P);

        // Noise contributions from (x=0, y=0), z=0 and z=1
        float perm00 = tex2D(permTexture, Pi.xy).a ;
        float3 grad000 = tex2D(permTexture, float2(perm00, Pi.z)).rgb * 4.0 - 1.0;
        float n000 = dot(grad000, Pf);
        float3 grad001 = tex2D(permTexture, float2(perm00, Pi.z + ONE)).rgb * 4.0 - 1.0;
        float n001 = dot(grad001, Pf - float3(0.0, 0.0, 1.0));

        // Noise contributions from (x=0, y=1), z=0 and z=1
        float perm01 = tex2D(permTexture, Pi.xy + float2(0.0, ONE)).a ;
        float3 grad010 = tex2D(permTexture, float2(perm01, Pi.z)).rgb * 4.0 - 1.0;
        float n010 = dot(grad010, Pf - float3(0.0, 1.0, 0.0));
        float3 grad011 = tex2D(permTexture, float2(perm01, Pi.z + ONE)).rgb * 4.0 - 1.0;
        float n011 = dot(grad011, Pf - float3(0.0, 1.0, 1.0));

        // Noise contributions from (x=1, y=0), z=0 and z=1
        float perm10 = tex2D(permTexture, Pi.xy + float2(ONE, 0.0)).a ;
        float3 grad100 = tex2D(permTexture, float2(perm10, Pi.z)).rgb * 4.0 - 1.0;
        float n100 = dot(grad100, Pf - float3(1.0, 0.0, 0.0));
        float3 grad101 = tex2D(permTexture, float2(perm10, Pi.z + ONE)).rgb * 4.0 - 1.0;
        float n101 = dot(grad101, Pf - float3(1.0, 0.0, 1.0));

        // Noise contributions from (x=1, y=1), z=0 and z=1
        float perm11 = tex2D(permTexture, Pi.xy + float2(ONE, ONE)).a ;
        float3 grad110 = tex2D(permTexture, float2(perm11, Pi.z)).rgb * 4.0 - 1.0;
        float n110 = dot(grad110, Pf - float3(1.0, 1.0, 0.0));
        float3 grad111 = tex2D(permTexture, float2(perm11, Pi.z + ONE)).rgb * 4.0 - 1.0;
        float n111 = dot(grad111, Pf - float3(1.0, 1.0, 1.0));

        // Blend contributions along x
        float4 n_x = lerp(float4(n000, n001, n010, n011),
                          float4(n100, n101, n110, n111), fade(Pf.x));

        // Blend contributions along y
        float2 n_xy = lerp(n_x.xy, n_x.zw, fade(Pf.y));

        // Blend contributions along z
        float n_xyz = lerp(n_xy.x, n_xy.y, fade(Pf.z));

        return n_xyz;
    }

    float turbulence(int octaves, float3 P, float lacunarity, float gain,sampler2D permTexture)
    {
        float sum = 0;
        float scale = 1;
        float totalgain = 1;
        for(int i=0;i<octaves;i++){
            sum += totalgain*noise(P*scale,permTexture);
            scale *= lacunarity;
            totalgain *= gain;
        }
        return abs(sum);
    }

    struct v_Output {
        float4 position : POSITION;
        //float pc : TEXCOORD0;
        float2 pc : TEXCOORD0;
    };

    v_Output main(float3 position : POSITION,
                  uniform float4x4 mvp,
                  uniform float time1,
                  uniform float time2,
                  uniform float time3,
                  uniform sampler2D permTexture)
    {
        v_Output OUT;
        float time = time3 + time1 + time2 * 10;
        float3 pf = float3(position.xz,time);
        //float ty = noise(pf,permTexture);
        // water
        //float ty = turbulence(4,pf,2,0.5,permTexture);
        //float yy = ty * 0.5 + 0.5;
        // fire
        float ty = turbulence(4,pf,0.6,3,permTexture);
        float yy = ty * 0.5;
        float4 pos = float4(position.x,yy,position.z,1);
        OUT.pc = float2(position.x*0.01,position.z*0.01);
        //float4 pos = float4(position.x,50,position.z,1);
        OUT.position = mul(mvp,pos);
        //OUT.pc = yy;
        return OUT;
    }

    struct f_Output {
        float4 color : COLOR;
    };

    f_Output fragmentShader(v_Output vin,uniform sampler2D texture)
    {
        f_Output OUT;
        OUT.color = tex2D(texture, vin.pc);
        OUT.color = OUT.color + float4(0.1,0.1,0.1,0.1);
        return OUT;
    }

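A brief explanation of the material script follows. For what the shader code itself means, please see the post linked earlier.

For the noise3D material, vertex_program and fragment_program declare the vertex and fragment shaders. source gives the relative path of the source file, entry_point names the shader's main function, and profiles states the hardware requirement. Because we sample a texture inside the vertex shader (vertex texture fetch), OpenGL needs the vp40 profile. For which hardware each profile corresponds to, see "Declaring Vertex/Geometry/Fragment Programs" in the Ogre manual; vp40 requires a relatively recent graphics card. default_params plays the role of initial values for the uniform variables in the shader, and param_named_auto means Ogre updates the value automatically; for details see "Using Vertex/Geometry/Fragment Programs in a Pass". If you compare the default_params of the vertex program with the Cg code, it is easy to see how they map onto each other. Ogre has wrapped this part very well: it feels natural to use and is very convenient. In the material itself, the pass refers back to the programs declared above through vertex_program_ref and fragment_program_ref. The textures a shader needs are supplied by adding texture_unit blocks right after the corresponding program reference, with the count matching what the shader expects.

Overall, this post mainly tries out building some simple models by hand and the basic use of shader code. It is all fairly basic, but it also shows that Ogre handles this area quite well: convenient and easy to understand.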