1. Spark Streaming Overview

1) Definition

Spark Streaming is an extension of the core Spark API that enables scalable, high-throughput, fault-tolerant stream processing of live data streams.

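2. Installing nc and Running Spark Streaming

1) Install the nc command online

- rpm -ivh nc-1.84-22.el6.x86_64.rpm (preferred)

#Install

Upload the nc-1.84-22.el6.x86_64.rpm package to the software directory, then install it.

#Start

Once nc is running, type input data below its prompt; Spark can then run a word count over that input (see step 2)).

- yum install -y nc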

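2) Run the Spark Streaming WordCount

#Data input

#Word count results

Note: set the log level to WARN to get the output above; otherwise log messages bury the results and make them hard to read.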
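As a rough sketch of what such a WordCount job does, the following Scala program follows the style of Spark's NetworkWordCount example. It assumes nc is listening on localhost:9999 (for example, started with `nc -lk 9999`), a 5-second batch interval, and a `local[2]` master; the object name is made up for illustration.

```scala
import org.apache.spark.SparkConf
import org.apache.spark.streaming.{Seconds, StreamingContext}

object NetworkWordCountSketch {
  def main(args: Array[String]): Unit = {
    // Assumed defaults: nc listens on localhost:9999.
    val host = if (args.length > 0) args(0) else "localhost"
    val port = if (args.length > 1) args(1).toInt else 9999

    // Two local threads: one for the socket receiver, one for processing.
    val conf = new SparkConf().setAppName("NetworkWordCountSketch").setMaster("local[2]")
    val ssc = new StreamingContext(conf, Seconds(5))

    // Raise the log level to WARN so INFO messages do not bury the printed counts.
    ssc.sparkContext.setLogLevel("WARN")

    // Each line typed into nc becomes one record of the stream.
    val lines = ssc.socketTextStream(host, port)
    val wordCounts = lines
      .flatMap(_.split(" "))
      .map(word => (word, 1))
      .reduceByKey(_ + _)

    // Print the first few counts of every batch to the console.
    wordCounts.print()

    ssc.start()
    ssc.awaitTermination()
  }
}
```

Each batch prints only the counts for the lines received during that 5-second interval; nothing is accumulated across batches in this sketch.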

3) Pipe a file into nc as its input, then observe the Spark Streaming results

File contents

3. How Spark Streaming Works

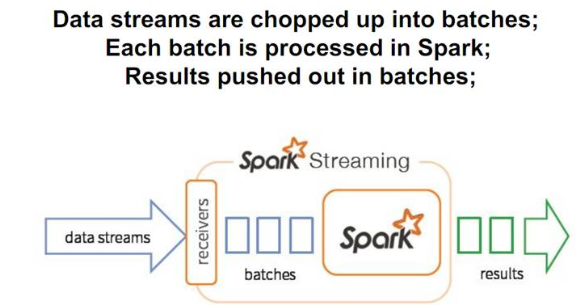

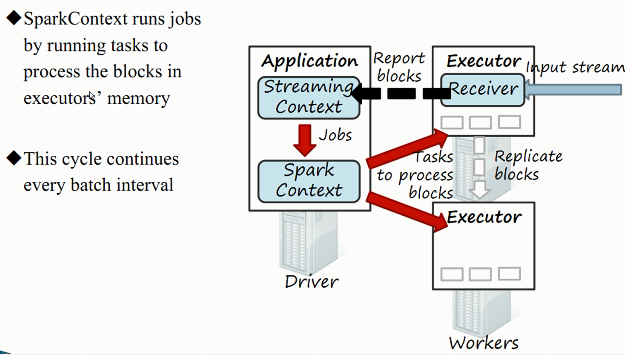

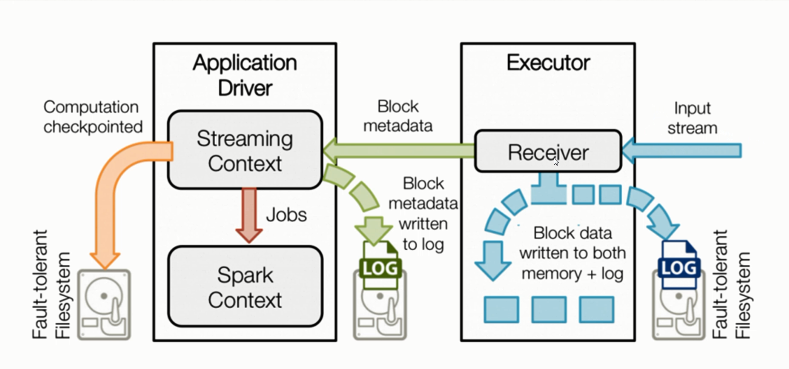

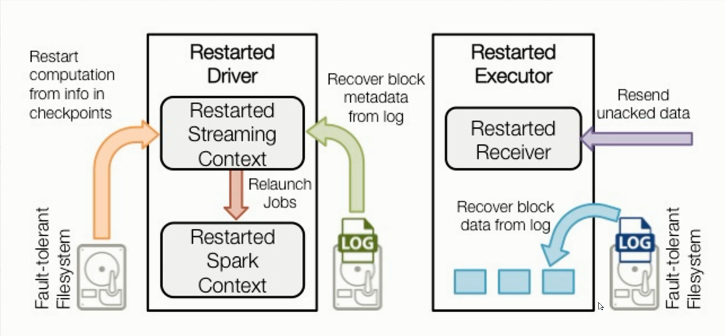

1) Spark Streaming data stream processing

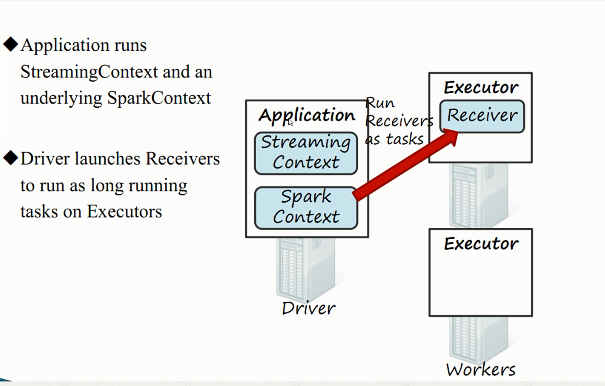

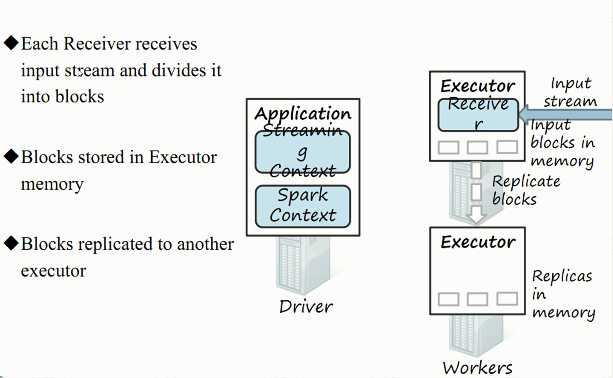

2) How receivers work

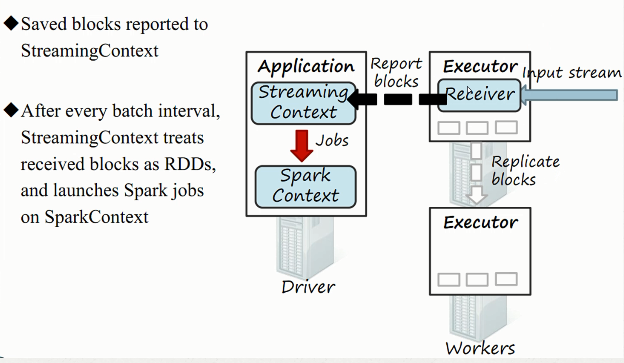

3) Overall workflow

4. Spark Streaming Programming Model

1) Two ways to initialize a StreamingContext

#First way

#Second way
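The two initialization styles can be sketched as follows. This assumes the two ways are the usual ones: building a StreamingContext from a SparkConf, and wrapping an existing SparkContext (such as the `sc` that spark-shell provides). The object name, app name, and 5-second batch interval are illustrative choices.

```scala
import org.apache.spark.{SparkConf, SparkContext}
import org.apache.spark.streaming.{Seconds, StreamingContext}

object StreamingContextInit {
  // Way 1: build from a SparkConf; the StreamingContext creates its own SparkContext.
  def fromConf(): StreamingContext = {
    val conf = new SparkConf().setAppName("InitFromConf").setMaster("local[2]")
    new StreamingContext(conf, Seconds(5))
  }

  // Way 2: wrap a SparkContext that already exists, e.g. `sc` in spark-shell.
  def fromExistingContext(sc: SparkContext): StreamingContext =
    new StreamingContext(sc, Seconds(5))
}
```

In spark-shell the second form is the natural one, since creating another SparkContext in the same JVM is not allowed by default: `val ssc = new StreamingContext(sc, Seconds(5))`.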

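2) Cluster test

#Start Spark

#Enter data on the nc server side

#Word count results

5. Reading Socket Stream Data with Spark Streaming

1) To run a streaming program from spark-shell, either use more than one thread or run on a cluster.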

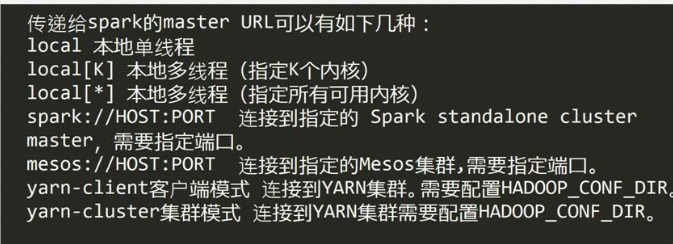
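The thread-count rule exists because a socket receiver permanently occupies one thread, so with `local` or `local[1]` nothing is left to process the received data. A small illustrative check, using a hypothetical helper `MasterThreads.enoughForReceiver` that is not part of Spark, could look like this:

```scala
object MasterThreads {
  // Matches local[N] and local[N,F] (N worker threads, F allowed task failures).
  private val LocalN = """local\[(\d+)(?:\s*,\s*\d+)?\]""".r

  // Returns true if the master URL leaves at least one thread for processing
  // after a single receiver has taken one.
  def enoughForReceiver(master: String): Boolean = master.trim match {
    case "local"                      => false // exactly one thread
    case LocalN(n)                    => n.toInt > 1
    case m if m.startsWith("local[*") => Runtime.getRuntime.availableProcessors() > 1
    case _                            => true // cluster master: assumed to offer enough cores
  }
}
```

For example, `MasterThreads.enoughForReceiver("local[2]")` is true, while `"local"` and `"local[1]"` both yield false.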

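2) Spark run modes

3) Reading socket stream data with Spark Streaming

a) Write the test code and run it locally

b) Start the nc service to send data

6. Saving Spark Streaming Data to External Systems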

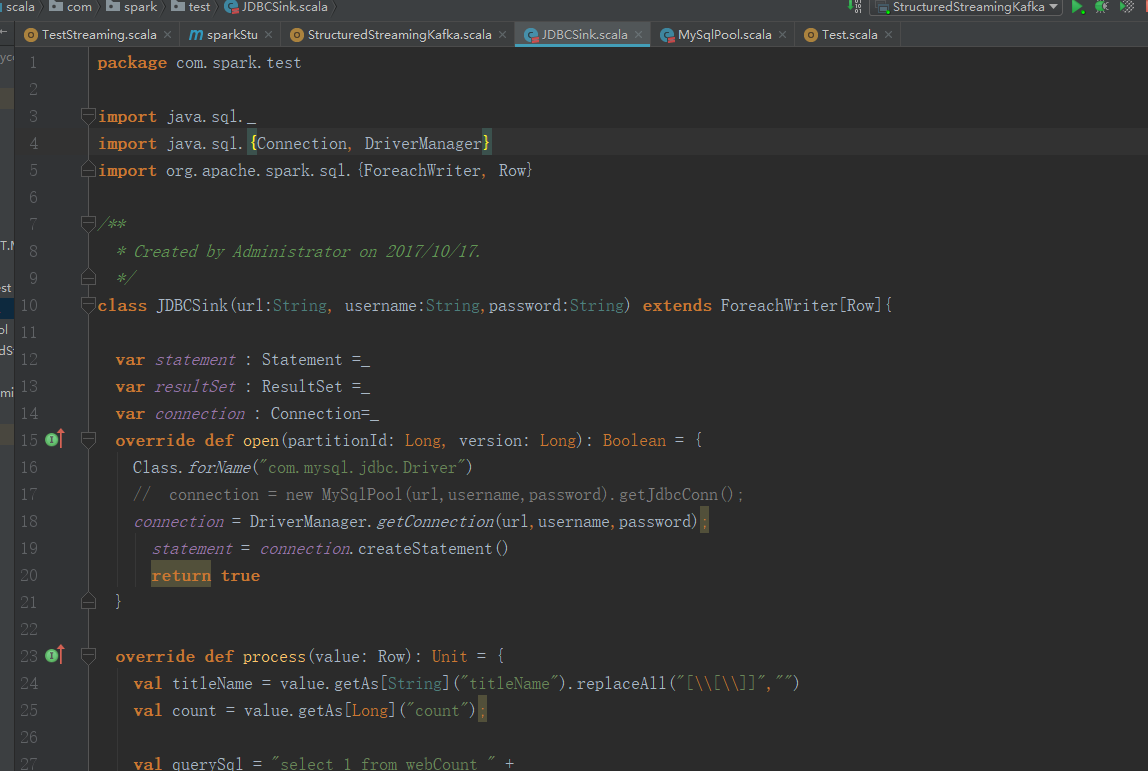

1) Save to a MySQL database

Then enter data on the nc server side; the word counts are written to the webCount table in the database.
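One common way to write each batch to MySQL is `foreachRDD` combined with `foreachPartition`, opening one JDBC connection per partition. The sketch below assumes that pattern. The JDBC URL, credentials, and the column names `titleName` and `count` are hypothetical placeholders, and MySQL Connector/J is assumed to be on the classpath.

```scala
import java.sql.DriverManager

import org.apache.spark.SparkConf
import org.apache.spark.streaming.{Seconds, StreamingContext}

object SaveToMySQLSketch {
  // Hypothetical connection settings and column names; adjust to your setup.
  val jdbcUrl = "jdbc:mysql://localhost:3306/test"
  val user = "root"
  val password = "123456"
  val insertSql = "INSERT INTO webCount (titleName, `count`) VALUES (?, ?)"

  def main(args: Array[String]): Unit = {
    val conf = new SparkConf().setAppName("SaveToMySQLSketch").setMaster("local[2]")
    val ssc = new StreamingContext(conf, Seconds(5))

    val wordCounts = ssc.socketTextStream("localhost", 9999)
      .flatMap(_.split(" "))
      .map((_, 1))
      .reduceByKey(_ + _)

    wordCounts.foreachRDD { rdd =>
      // Open one connection per partition, on the executor that holds the partition.
      rdd.foreachPartition { records =>
        val conn = DriverManager.getConnection(jdbcUrl, user, password)
        try {
          val stmt = conn.prepareStatement(insertSql)
          try {
            records.foreach { case (word, count) =>
              stmt.setString(1, word)
              stmt.setInt(2, count)
              stmt.executeUpdate()
            }
          } finally stmt.close()
        } finally conn.close()
      }
    }

    ssc.start()
    ssc.awaitTermination()
  }
}
```

Creating the connection inside `foreachPartition` avoids serializing it from the driver, which would fail because JDBC connections are not serializable.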

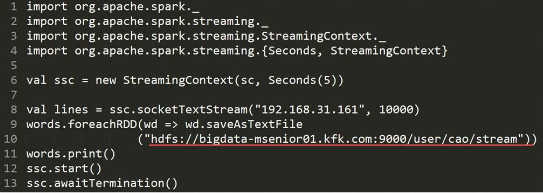

2) Save to HDFS

This is even simpler than writing to a database. If you are interested, refer to the code below and try it yourself.
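A minimal sketch of writing the counts to HDFS uses `saveAsTextFiles` on the DStream. The HDFS prefix below is a hypothetical placeholder. Note that `saveAsTextFiles` writes a separate directory for every batch interval, so its overwrite behavior may differ from what the author observed.

```scala
import org.apache.spark.SparkConf
import org.apache.spark.streaming.{Seconds, StreamingContext}

object SaveToHdfsSketch {
  def main(args: Array[String]): Unit = {
    // Hypothetical output prefix; replace it with a path on your own HDFS.
    val outputPrefix =
      if (args.length > 0) args(0) else "hdfs://localhost:9000/user/spark/wordcount"

    val conf = new SparkConf().setAppName("SaveToHdfsSketch").setMaster("local[2]")
    val ssc = new StreamingContext(conf, Seconds(5))

    val wordCounts = ssc.socketTextStream("localhost", 9999)
      .flatMap(_.split(" "))
      .map((_, 1))
      .reduceByKey(_ + _)

    // Each batch is written to a directory named "<prefix>-<batch time in ms>.txt".
    wordCounts.saveAsTextFiles(outputPrefix, "txt")

    ssc.start()
    ssc.awaitTermination()
  }
}
```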

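Important: each run resets and overwrites the contents of the HDFS file!

7. Structured Streaming Programming Model

1) complete output mode

2) update output mode

In complete mode, as you keep typing on the nc server side, every result shows the counts for both the new input and all earlier input. If you change outputMode to "update", the counts are still updated against the input history, but only the rows whose values changed with the most recent input are displayed.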
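The two modes described above differ only in the argument passed to `outputMode`. The sketch below takes the mode as its first command-line argument. It assumes a socket source on localhost:9999 and a console sink, and the object name is illustrative.

```scala
import org.apache.spark.sql.SparkSession

object StructuredWordCountSketch {
  def main(args: Array[String]): Unit = {
    // "complete" or "update"; defaults to "complete".
    val mode = if (args.length > 0) args(0) else "complete"

    val spark = SparkSession.builder()
      .appName("StructuredWordCountSketch")
      .master("local[2]")
      .getOrCreate()
    spark.sparkContext.setLogLevel("WARN")
    import spark.implicits._

    // The socket source yields a single string column named "value".
    val lines = spark.readStream
      .format("socket")
      .option("host", "localhost")
      .option("port", 9999)
      .load()

    // Running word count over everything received so far.
    val wordCounts = lines.as[String]
      .flatMap(_.split(" "))
      .groupBy("value")
      .count()

    val query = wordCounts.writeStream
      .outputMode(mode)
      .format("console")
      .start()

    query.awaitTermination()
  }
}
```

With "complete", every trigger prints the whole result table; with "update", only the rows whose counts changed in that trigger are printed.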

3) append output mode

Changing outputMode to "append" also requires a small change to the code.
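The change is presumably the removal of the aggregation: append mode does not support streaming aggregations unless a watermark is defined. Under that assumption, a sketch of the append variant simply writes out the split words without counting them. Host, port, and object name are illustrative.

```scala
import org.apache.spark.sql.SparkSession

object StructuredAppendSketch {
  def main(args: Array[String]): Unit = {
    val spark = SparkSession.builder()
      .appName("StructuredAppendSketch")
      .master("local[2]")
      .getOrCreate()
    spark.sparkContext.setLogLevel("WARN")
    import spark.implicits._

    val lines = spark.readStream
      .format("socket")
      .option("host", "localhost")
      .option("port", 9999)
      .load()

    // No groupBy/count here: each trigger only emits the newly arrived words.
    val words = lines.as[String].flatMap(_.split(" "))

    val query = words.writeStream
      .outputMode("append")
      .format("console")
      .start()

    query.awaitTermination()
  }
}
```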

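As you can see, this mode simply appends each new input to the output.

8. Real-Time Data Processing Business Analysis

9. Integrating Spark Streaming with Kafka

1) Preparation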

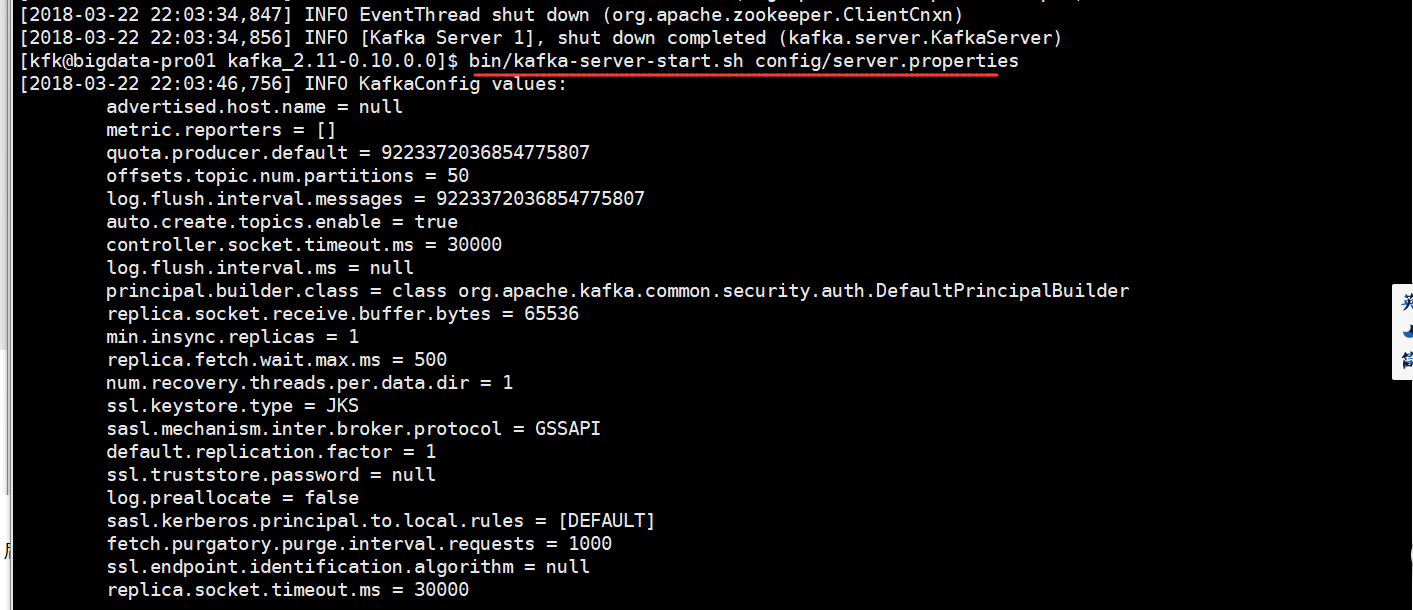

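According to the official documentation, our previous Kafka version is too old; we need to download version 0.10.0 or later.

Download: http://kafka.apache.org/downloads

Updating the configuration is simple: copy the /config folder from the old installation over the new one, create the kafka-logs and logs folders as before, and then update the paths in the configuration files.

2) Write the test code and run it

Upload the packages (do this on all 3 nodes)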

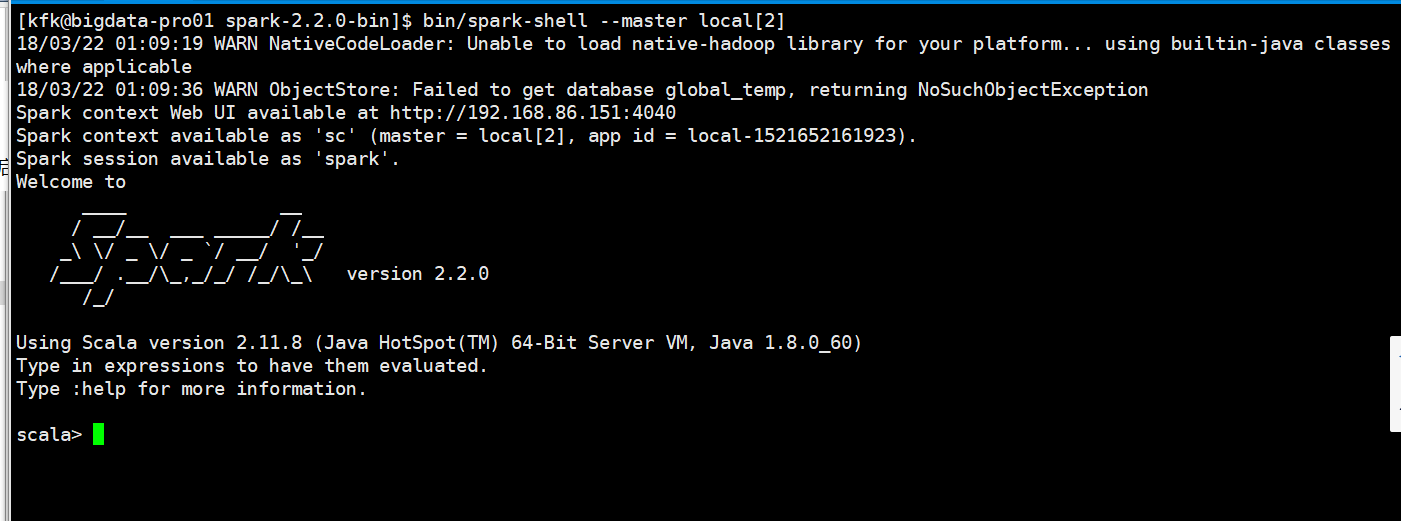

Start spark-shell

Paste the code in
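As a hedged sketch of the kind of code that could be pasted here, the following word count reads from Kafka through the Structured Streaming Kafka source. It assumes the `spark-sql-kafka-0-10` package is on the classpath; the broker address `localhost:9092` and the topic name `test-topic` are hypothetical placeholders. In spark-shell the existing `spark` session can be used directly instead of building a new one.

```scala
import org.apache.spark.sql.SparkSession

object KafkaWordCountSketch {
  def main(args: Array[String]): Unit = {
    // Hypothetical broker list and topic; replace with your cluster's values.
    val brokers = if (args.length > 0) args(0) else "localhost:9092"
    val topic = if (args.length > 1) args(1) else "test-topic"

    val spark = SparkSession.builder()
      .appName("KafkaWordCountSketch")
      .master("local[2]")
      .getOrCreate()
    spark.sparkContext.setLogLevel("WARN")
    import spark.implicits._

    // Kafka records arrive with binary key/value columns.
    val df = spark.readStream
      .format("kafka")
      .option("kafka.bootstrap.servers", brokers)
      .option("subscribe", topic)
      .load()

    // Decode the value as a string and split it into words.
    val words = df.selectExpr("CAST(value AS STRING)")
      .as[String]
      .flatMap(_.split(" "))

    val wordCounts = words.groupBy("value").count()

    val query = wordCounts.writeStream
      .outputMode("update")
      .format("console")
      .start()

    query.awaitTermination()
  }
}
```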

Make sure Kafka and the producer are both running at this point:

Type a few words in the producer

Back in spark-shell, you can see the word counts

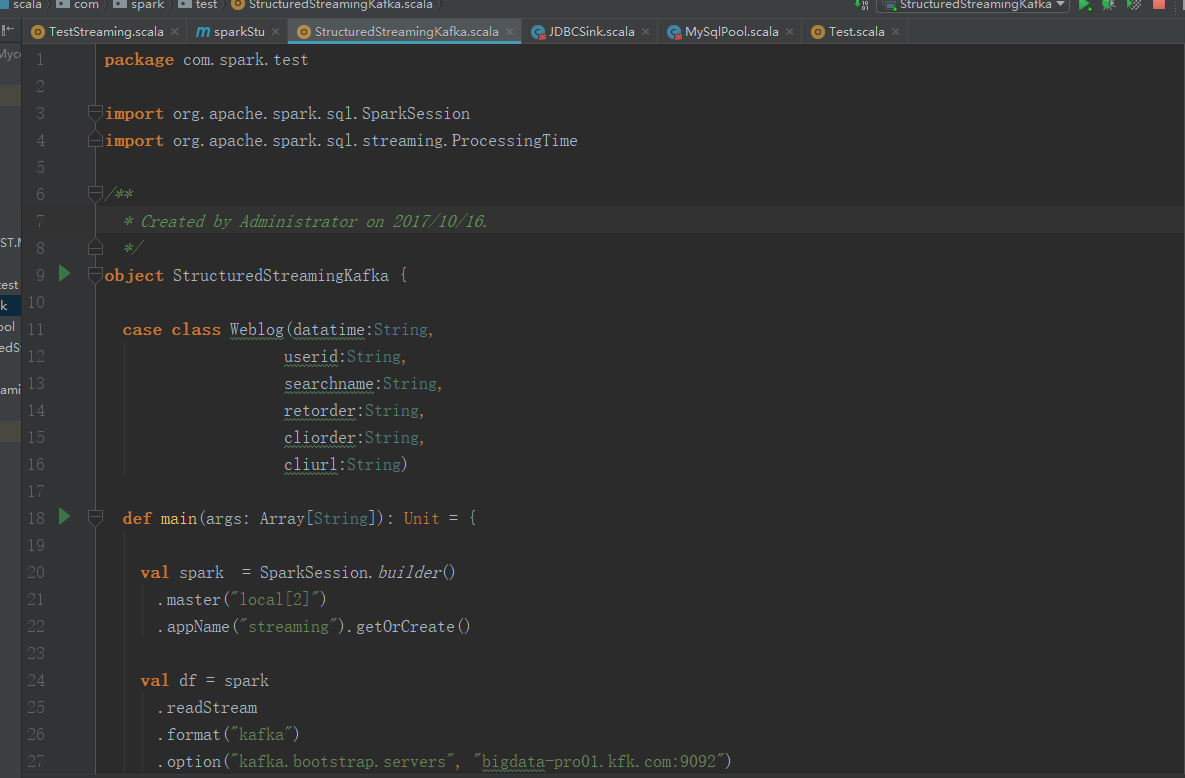

10. Real-Time Data Analysis with Structured Streaming

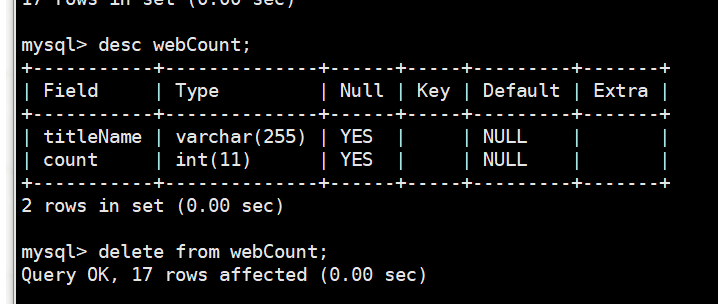

First, clear the contents of the webCount table in MySQL's test database

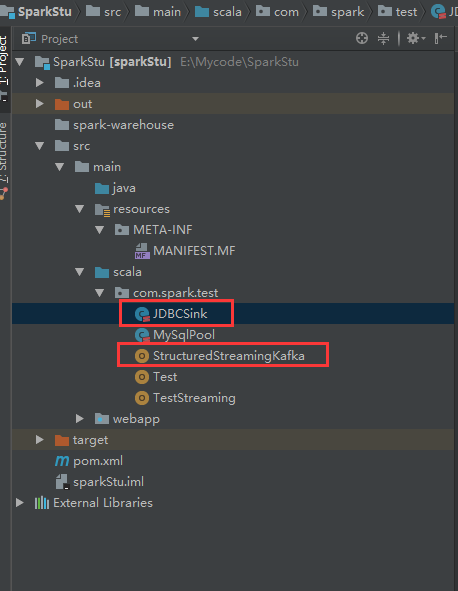

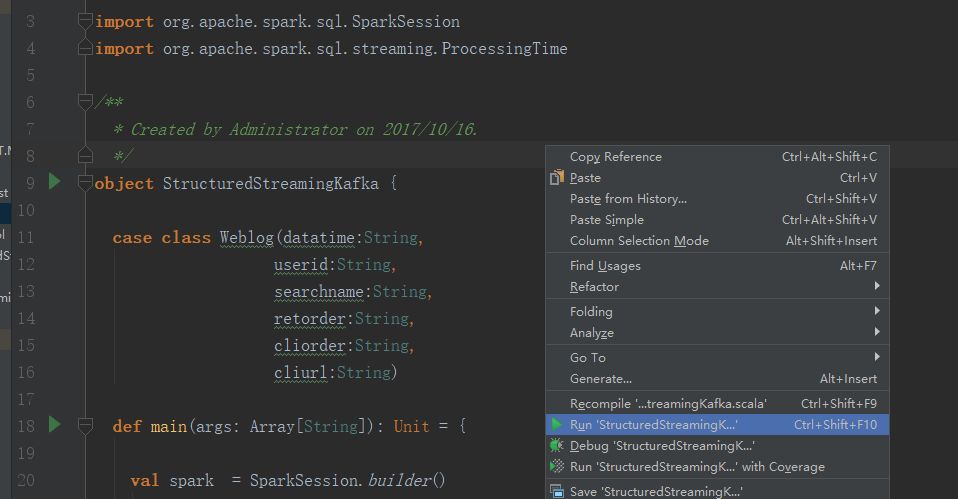

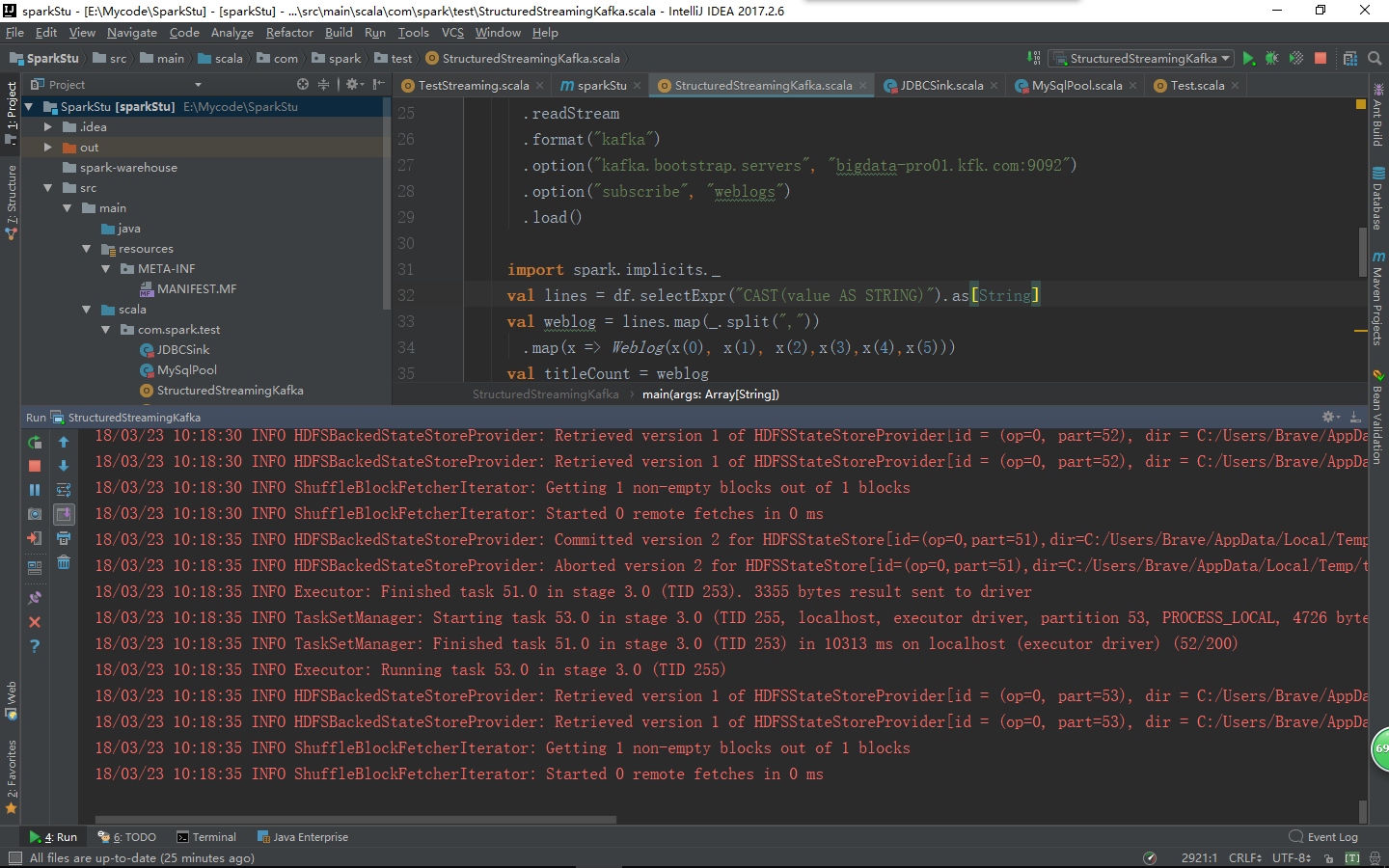

Open IDEA and write two programs

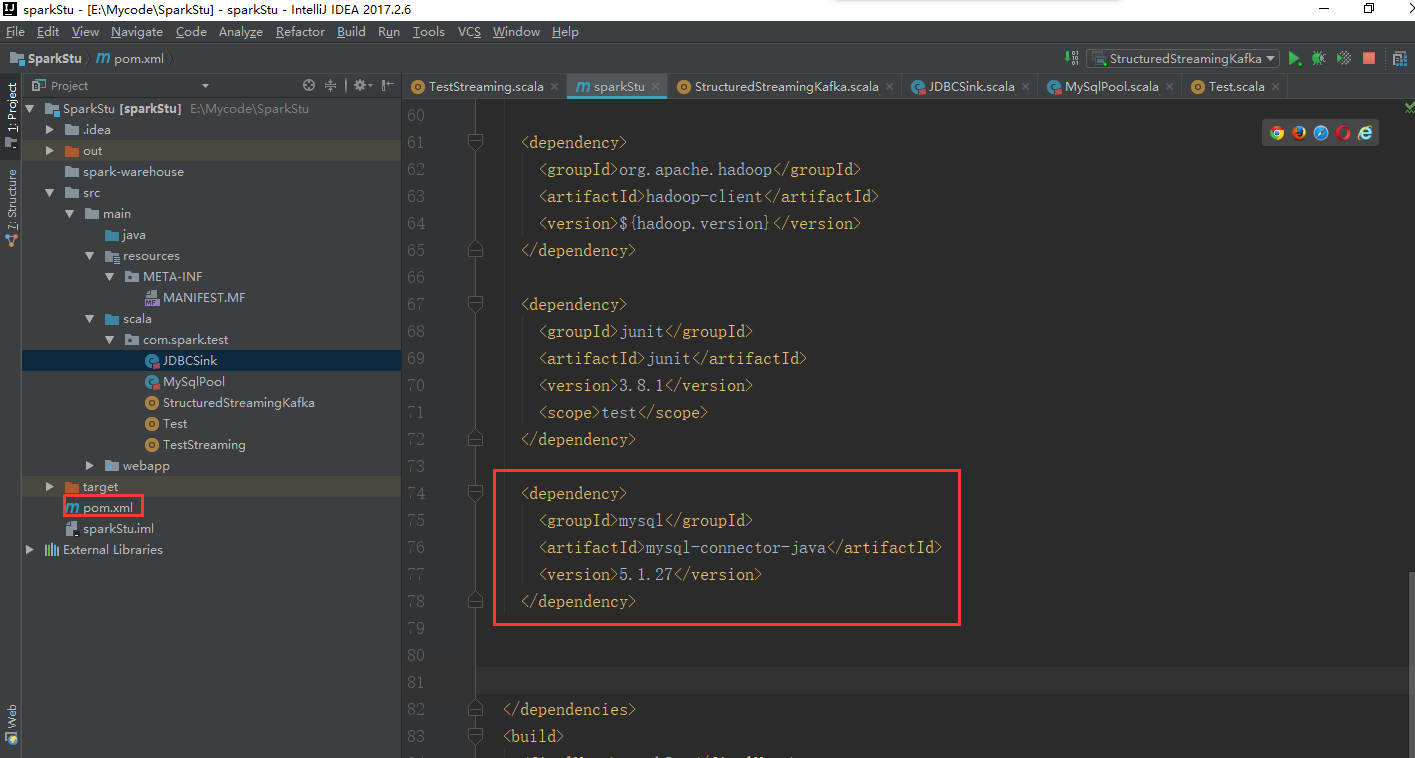
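For writing Structured Streaming results to MySQL, one option is a custom `ForeachWriter`. The sketch below is only a guess at the shape such a sink could take: the class name `JdbcSinkSketch`, the column names `titleName` and `count`, and the use of `REPLACE INTO` (which assumes `titleName` is the table's primary key) are all assumptions.

```scala
import java.sql.{Connection, DriverManager, PreparedStatement}

import org.apache.spark.sql.{ForeachWriter, Row}

// Writes rows of the form (title: String, count: Long) to the webCount table.
class JdbcSinkSketch(url: String, user: String, password: String)
    extends ForeachWriter[Row] {

  private var conn: Connection = _
  private var stmt: PreparedStatement = _

  // Called once per partition and trigger; returning true means "process this partition".
  override def open(partitionId: Long, version: Long): Boolean = {
    conn = DriverManager.getConnection(url, user, password)
    // Assumes titleName is the primary key, so REPLACE acts as an upsert.
    stmt = conn.prepareStatement(
      "REPLACE INTO webCount (titleName, `count`) VALUES (?, ?)")
    true
  }

  // Called for every row of the partition.
  override def process(row: Row): Unit = {
    stmt.setString(1, row.getString(0))
    stmt.setLong(2, row.getLong(1))
    stmt.executeUpdate()
  }

  // Called when the partition is finished or an error occurred.
  override def close(errorOrNull: Throwable): Unit = {
    if (stmt != null) stmt.close()
    if (conn != null) conn.close()
  }
}
```

A streaming query would attach it with something like `counts.writeStream.outputMode("update").foreach(new JdbcSinkSketch(url, user, password)).start()`, where `counts` is an aggregated DataFrame whose first two columns are a string and a long.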

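Add this dependency to pom.xml

A note on choosing the version of this dependency: it is best to match the version used in your cluster, otherwise you may get errors. You can check the version under Hive's lib directory.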

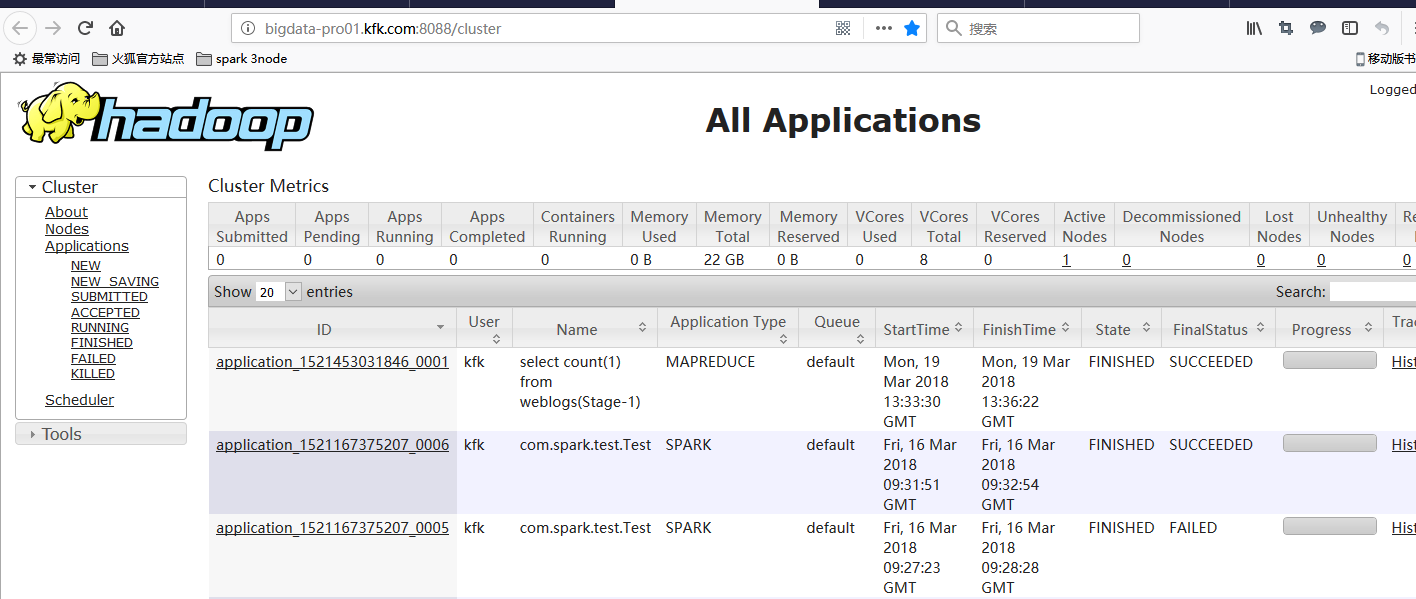

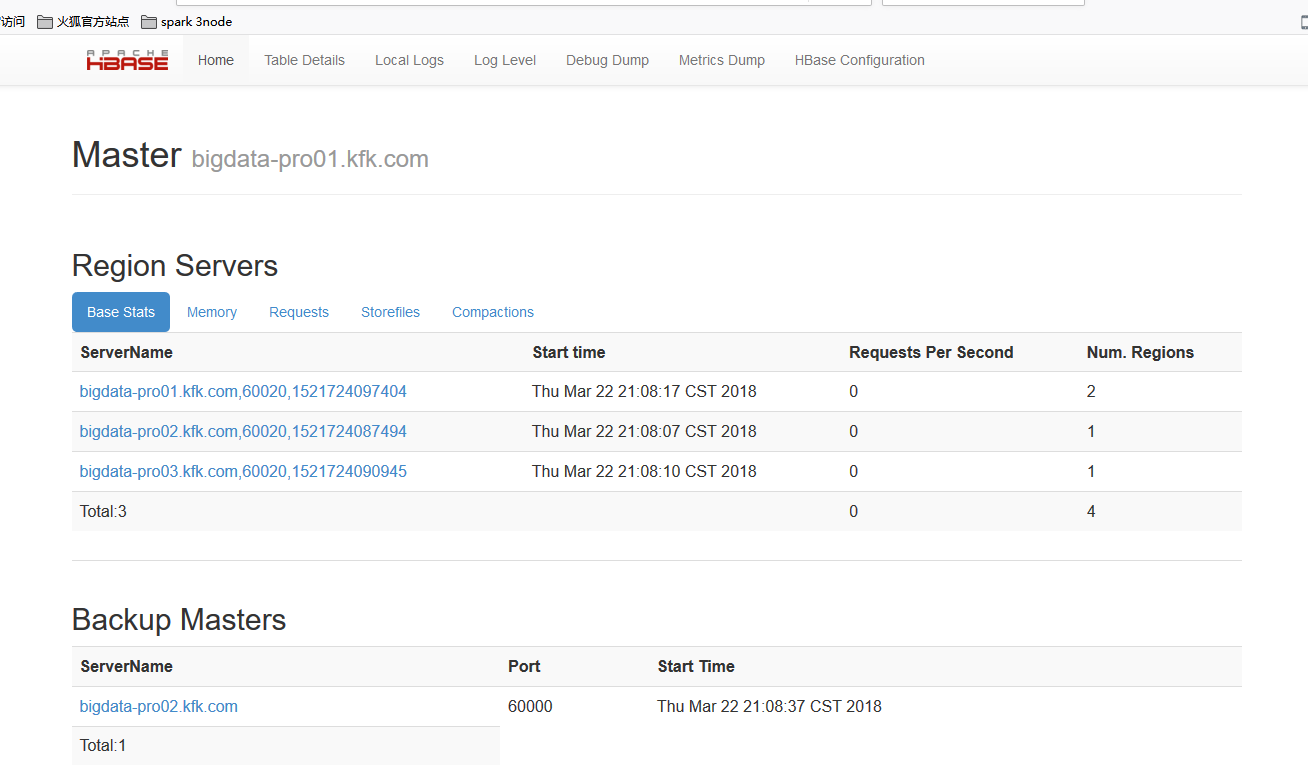

Keep the cluster's DFS, HBase, YARN, and ZooKeeper all running

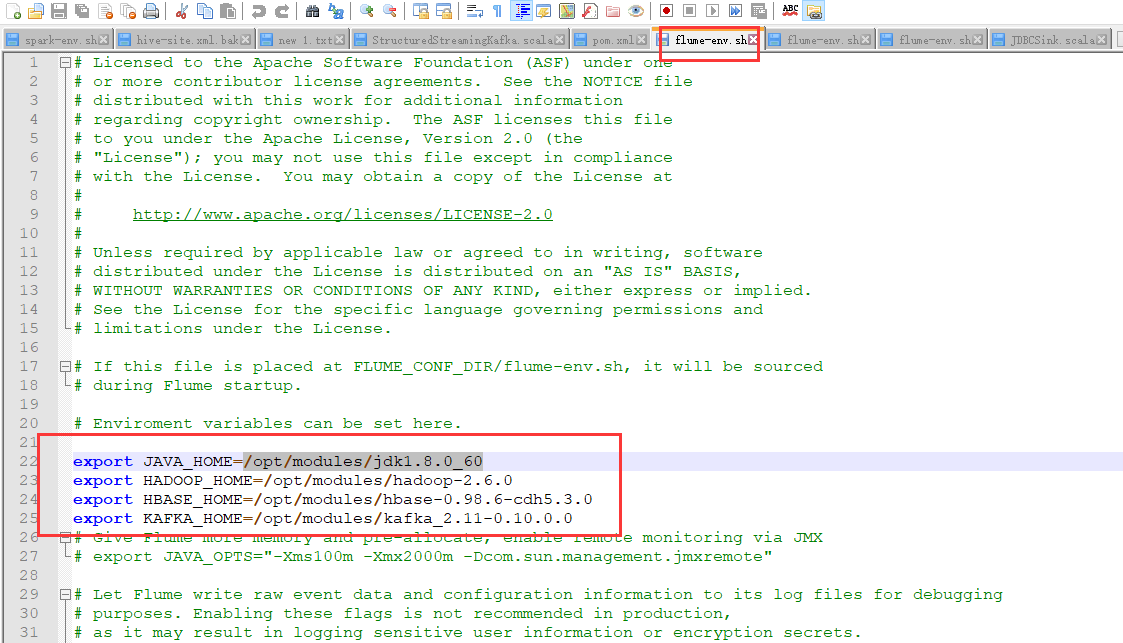

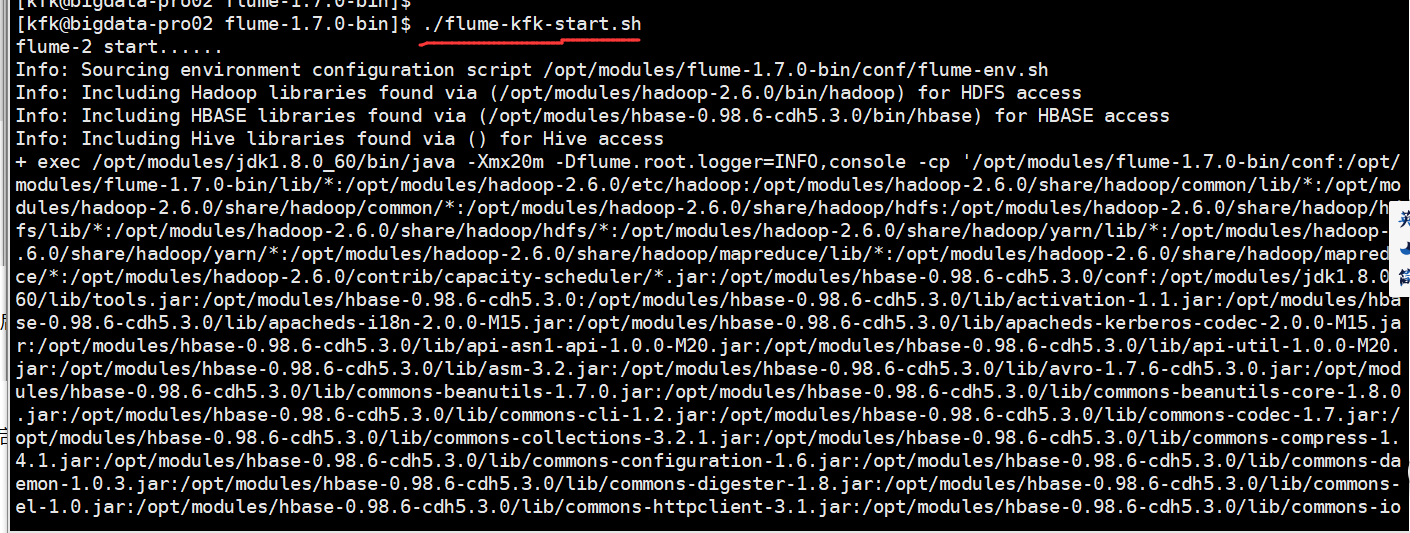

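Start Flume on node 1 and node 2. Before starting, update the Flume configuration: because we changed the JDK and Kafka versions afterward, the configuration files must be updated (on all 3 nodes).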

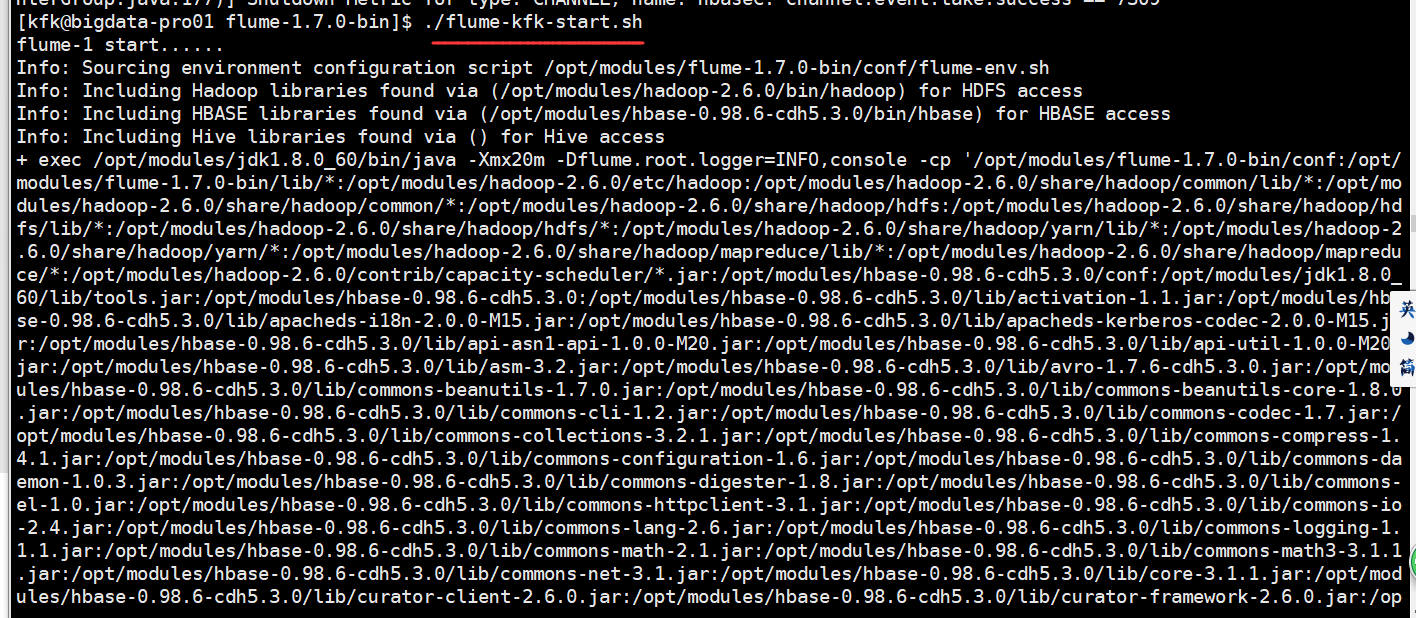

Start Flume on node 1

Start Kafka on node 1

Start Flume on node 2

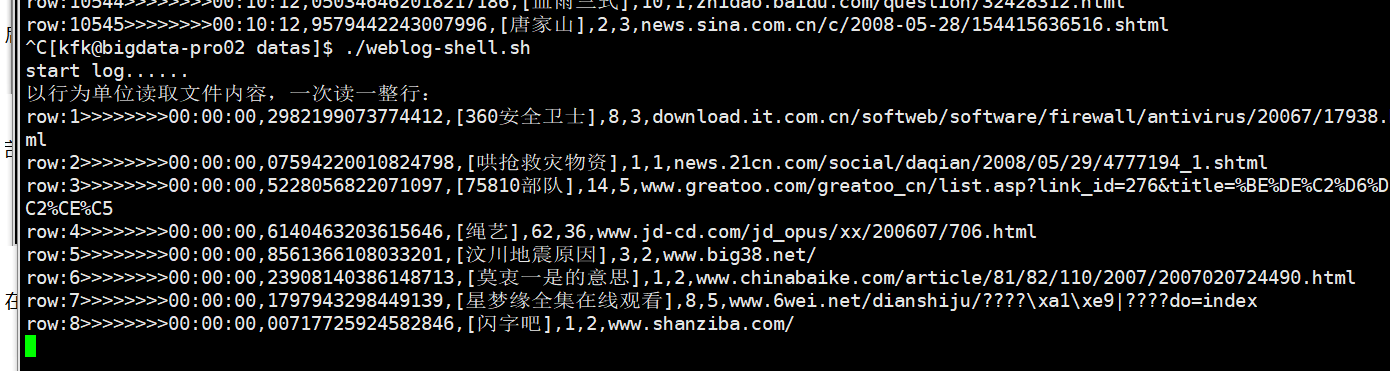

On node 2, start the data generator so that it produces data in real time

Back in IDEA, run the program

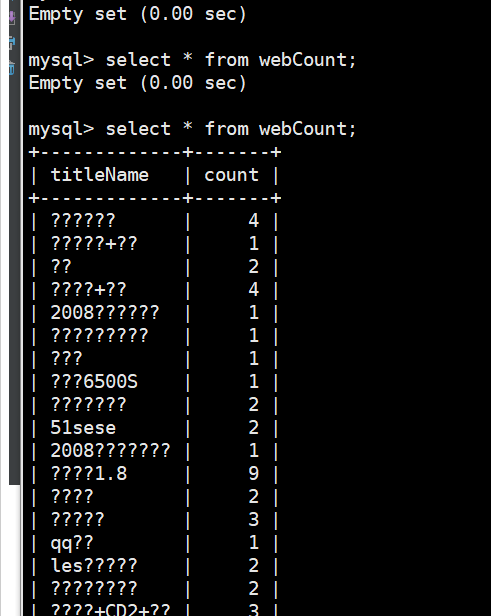

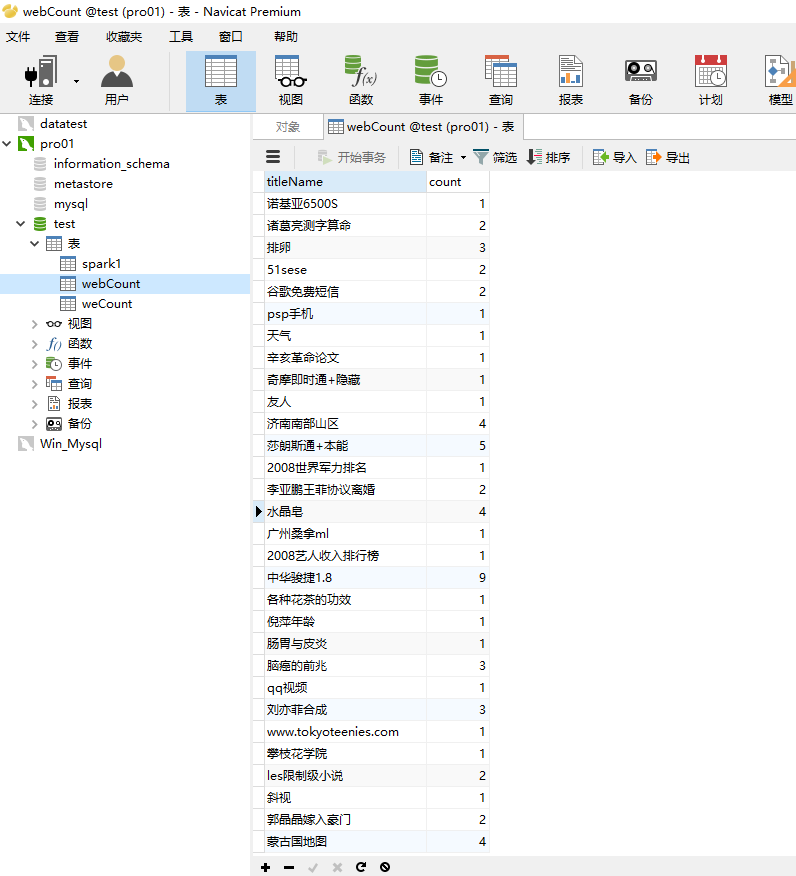

Back in MySQL, check the webCount table; data has already arrived

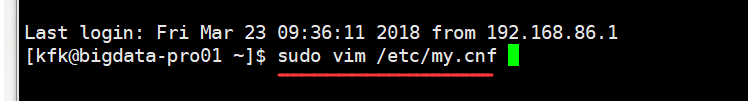

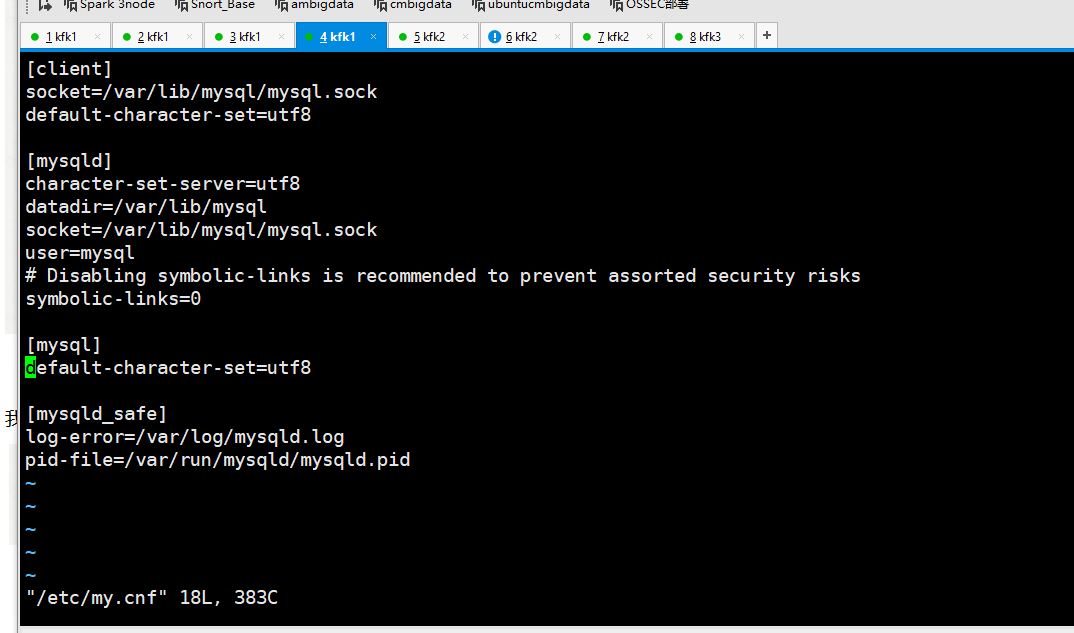

Change the configuration file as follows

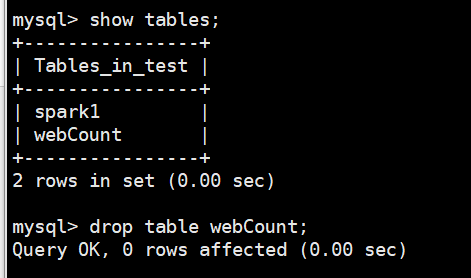

Drop the table

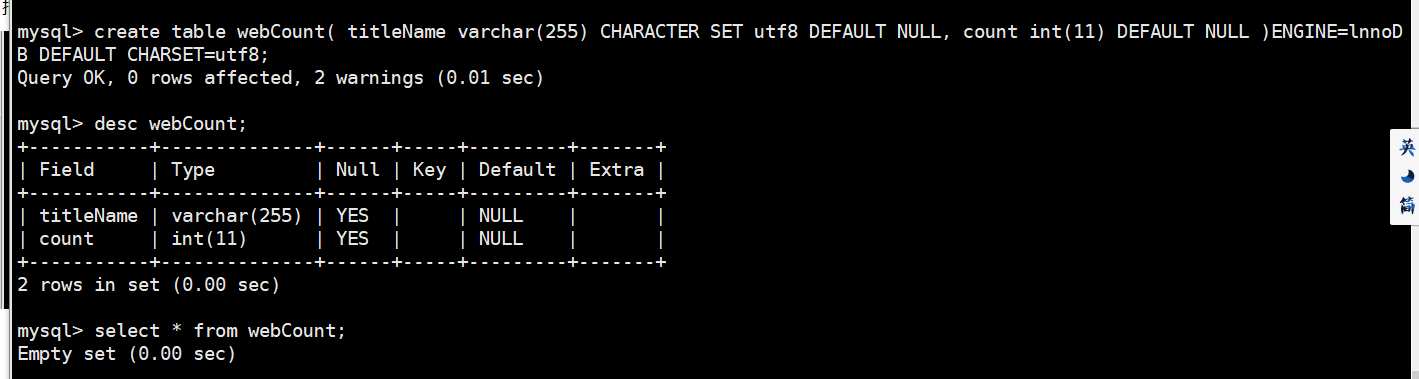

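Recreate the table

Run the program again

You can see that the Chinese text is no longer garbled. You can also connect to MySQL with a GUI tool to check.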

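That covers the main content of this part. It is all drawn from my own learning process, and I hope it offers some guidance. If you found it useful, please show your support; if not, thank you for bearing with me, and please point out any mistakes. Follow the blog to get updates as soon as they are posted. Thank you! Reposting is welcome, but the original address must be cited in a prominent place in the repost; the right of interpretation belongs to the author.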