Without further ado, here is the code.

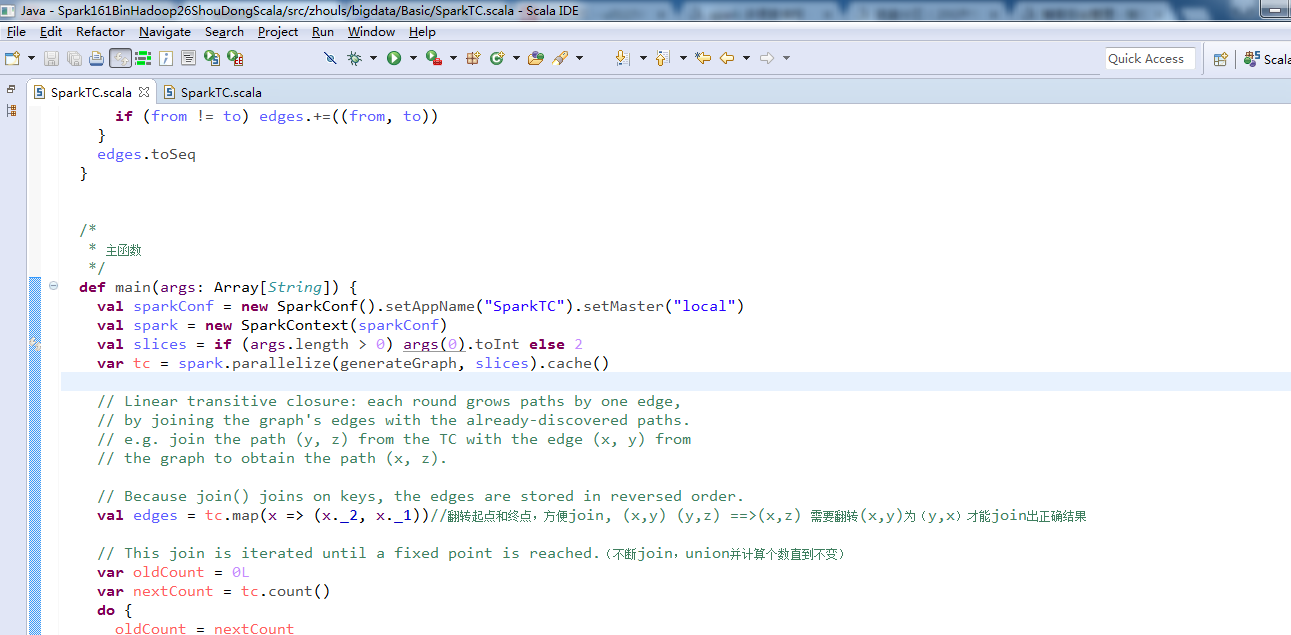

SparkTC.scala, from the Basic package of spark-1.6.1-bin-hadoop2.6

    /*
     * Licensed to the Apache Software Foundation (ASF) under one or more
     * contributor license agreements. See the NOTICE file distributed with
     * this work for additional information regarding copyright ownership.
     * The ASF licenses this file to You under the Apache License, Version 2.0
     * (the "License"); you may not use this file except in compliance with
     * the License. You may obtain a copy of the License at
     *
     *    http://www.apache.org/licenses/LICENSE-2.0
     *
     * Unless required by applicable law or agreed to in writing, software
     * distributed under the License is distributed on an "AS IS" BASIS,
     * WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
     * See the License for the specific language governing permissions and
     * limitations under the License.
     */

    // scalastyle:off println
    //package org.apache.spark.examples
    package zhouls.bigdata

    import scala.util.Random
    import scala.collection.mutable

    import org.apache.spark.{SparkConf, SparkContext}
    import org.apache.spark.SparkContext._

    /**
     * Transitive closure on a graph.
     */
    object SparkTC {
      val numEdges = 200
      val numVertices = 100
      val rand = new Random(42)

      def generateGraph: Seq[(Int, Int)] = {
        val edges: mutable.Set[(Int, Int)] = mutable.Set.empty
        while (edges.size < numEdges) {
          val from = rand.nextInt(numVertices)
          val to = rand.nextInt(numVertices)
          if (from != to) edges.+=((from, to))
        }
        edges.toSeq
      }

      /*
       * Main function
       */
      def main(args: Array[String]): Unit = {
        val sparkConf = new SparkConf().setAppName("SparkTC").setMaster("local")
        val spark = new SparkContext(sparkConf)
        val slices = if (args.length > 0) args(0).toInt else 2
        var tc = spark.parallelize(generateGraph, slices).cache()

        // Linear transitive closure: each round grows paths by one edge,
        // by joining the graph's edges with the already-discovered paths.
        // e.g. join the path (y, z) from the TC with the edge (x, y) from
        // the graph to obtain the path (x, z).

        // Because join() joins on keys, the edges are stored in reversed order.
        // Swap each edge's start and end vertices: to derive (x, z) from
        // (x, y) and (y, z), the edge (x, y) must be reversed to (y, x) so
        // that both sides of the join are keyed by y.
        val edges = tc.map(x => (x._2, x._1))

        // This join is iterated until a fixed point is reached
        // (repeat join and union, recounting each round, until the count stops changing).
        var oldCount = 0L
        var nextCount = tc.count()
        do {
          oldCount = nextCount
          // Perform the join, obtaining an RDD of (y, (z, x)) pairs,
          // then project the result to obtain the new (x, z) paths.
          tc = tc.union(tc.join(edges).map(x => (x._2._2, x._2._1))).distinct().cache()
          nextCount = tc.count()
        } while (nextCount != oldCount)

        println("TC has " + tc.count() + " edges.")
        spark.stop()
      }
    }
    // scalastyle:on println
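The comments in the listing describe the core step: reverse each edge so it is keyed by its end vertex, join it with the known paths, and repeat until the count stops growing. The sketch below replays that loop on plain Scala collections, with no Spark dependency, so the join can be followed by hand. The names `TinyTC`, `joinByKey`, and the three-edge chain graph are hypothetical and chosen only for illustration; `joinByKey` merely imitates what `join()` does on a pair RDD, and a `while` loop stands in for the listing's `do ... while`.

```scala
object TinyTC {
  // Hypothetical chain graph used only for illustration: 1 -> 2 -> 3 -> 4.
  val graph: Set[(Int, Int)] = Set((1, 2), (2, 3), (3, 4))

  // Imitates a key join on plain collections: pairs up the entries of
  // `left` and `right` whose first elements (the keys) are equal.
  def joinByKey(left: Set[(Int, Int)], right: Set[(Int, Int)]): Set[(Int, (Int, Int))] =
    for {
      (k1, v1) <- left
      (k2, v2) <- right
      if k1 == k2
    } yield (k1, (v1, v2))

  def transitiveClosure(edgeSet: Set[(Int, Int)]): Set[(Int, Int)] = {
    // Reverse each edge (x, y) into (y, x) so it is keyed by its end vertex.
    val reversed = edgeSet.map { case (x, y) => (y, x) }
    var tc = edgeSet
    var oldCount = 0
    var nextCount = tc.size
    // Iterate until a round adds no new path (the fixed point).
    while (nextCount != oldCount) {
      oldCount = nextCount
      // Path (y, z) joined with reversed edge (y, x) gives (y, (z, x));
      // projecting that to (x, z) yields a path one edge longer.
      val grown = joinByKey(tc, reversed).map { case (_, (z, x)) => (x, z) }
      tc = tc union grown // a Set removes duplicates, like distinct()
      nextCount = tc.size
    }
    tc
  }

  def main(args: Array[String]): Unit = {
    val tc = transitiveClosure(graph)
    println(s"TC has ${tc.size} edges: ${tc.toSeq.sorted.mkString(", ")}")
  }
}
```

On the chain graph the first round adds (1,3) and (2,4), the second adds (1,4), and the third adds nothing, so the loop stops with six paths.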

The same example written against `SparkSession` (available since Spark 2.0):

    /*
     * Licensed to the Apache Software Foundation (ASF) under one or more
     * contributor license agreements. See the NOTICE file distributed with
     * this work for additional information regarding copyright ownership.
     * The ASF licenses this file to You under the Apache License, Version 2.0
     * (the "License"); you may not use this file except in compliance with
     * the License. You may obtain a copy of the License at
     *
     *    http://www.apache.org/licenses/LICENSE-2.0
     *
     * Unless required by applicable law or agreed to in writing, software
     * distributed under the License is distributed on an "AS IS" BASIS,
     * WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
     * See the License for the specific language governing permissions and
     * limitations under the License.
     */

    // scalastyle:off println
    package org.apache.spark.examples

    import scala.collection.mutable
    import scala.util.Random

    import org.apache.spark.sql.SparkSession

    /**
     * Transitive closure on a graph.
     */
    object SparkTC {
      val numEdges = 200
      val numVertices = 100
      val rand = new Random(42)

      /*
       * 1. The program computes the transitive closure (the number of reachable paths).
       * 2. The graph is generated automatically; a mutable Set stores the (start, end) pairs.
       */
      def generateGraph: Seq[(Int, Int)] = {
        val edges: mutable.Set[(Int, Int)] = mutable.Set.empty
        while (edges.size < numEdges) {
          val from = rand.nextInt(numVertices)
          val to = rand.nextInt(numVertices)
          if (from != to) edges.+=((from, to))
        }
        edges.toSeq
      }

      def main(args: Array[String]): Unit = {
        val spark = SparkSession
          .builder
          .master("local")
          .appName("SparkTC")
          .getOrCreate()
        val slices = if (args.length > 0) args(0).toInt else 2
        var tc = spark.sparkContext.parallelize(generateGraph, slices).cache()

        // Linear transitive closure: each round grows paths by one edge,
        // by joining the graph's edges with the already-discovered paths.
        // e.g. join the path (y, z) from the TC with the edge (x, y) from
        // the graph to obtain the path (x, z).

        // Because join() joins on keys, the edges are stored in reversed order.
        // Swap each edge's start and end vertices: to derive (x, z) from
        // (x, y) and (y, z), the edge (x, y) must be reversed to (y, x) so
        // that both sides of the join are keyed by y.
        val edges = tc.map(x => (x._2, x._1))

        // This join is iterated until a fixed point is reached
        // (repeat join and union, recounting each round, until the count stops changing).
        var oldCount = 0L
        var nextCount = tc.count()
        do {
          oldCount = nextCount
          // Perform the join, obtaining an RDD of (y, (z, x)) pairs,
          // then project the result to obtain the new (x, z) paths.
          tc = tc.union(tc.join(edges).map(x => (x._2._2, x._2._1))).distinct().cache()
          nextCount = tc.count()
        } while (nextCount != oldCount)

        println("TC has " + tc.count() + " edges.")
        spark.stop()
      }
    }
    // scalastyle:on println
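The program prints only a count (`TC has N edges.`), which is hard to judge by eye. One possible sanity check, sketched below, is to regenerate the graph with the same seed and count reachable pairs with a breadth-first search from every source vertex. This is a different technique from the join-based iteration in the listing and is used here purely for cross-checking. The names `ClosureCheck` and `reachablePairs`, and the parameterised `generateGraph` signature, are hypothetical. The sketch assumes the generator reproduces the listing's edges because it uses the same seed and the same sequence of `nextInt` calls.

```scala
import scala.collection.mutable
import scala.util.Random

object ClosureCheck {
  // Same generation scheme as generateGraph in the listing, parameterised for
  // illustration. Assumes numEdges <= numVertices * (numVertices - 1);
  // otherwise the loop cannot terminate.
  def generateGraph(numEdges: Int, numVertices: Int, seed: Long): Set[(Int, Int)] = {
    val rand = new Random(seed)
    val edges = mutable.Set.empty[(Int, Int)]
    while (edges.size < numEdges) {
      val from = rand.nextInt(numVertices)
      val to = rand.nextInt(numVertices)
      if (from != to) edges += ((from, to)) // skip self-loops
    }
    edges.toSet
  }

  // All (source, target) pairs connected by a path of at least one edge.
  def reachablePairs(edges: Set[(Int, Int)]): Set[(Int, Int)] = {
    // Adjacency map: vertex -> set of direct successors.
    val successors: Map[Int, Set[Int]] =
      edges.groupBy(_._1).map { case (from, es) => from -> es.map(_._2) }
    val sources = edges.map(_._1)
    sources.flatMap { source =>
      val seen = mutable.Set.empty[Int]
      val queue = mutable.Queue.empty[Int]
      queue ++= successors.getOrElse(source, Set.empty[Int])
      while (queue.nonEmpty) {
        val v = queue.dequeue()
        // Expand a vertex only the first time it is reached.
        if (seen.add(v)) queue ++= successors.getOrElse(v, Set.empty[Int])
      }
      // The source itself appears here only if it lies on a cycle.
      seen.map(target => (source, target)).toSet
    }
  }

  def main(args: Array[String]): Unit = {
    val edges = generateGraph(numEdges = 200, numVertices = 100, seed = 42L)
    println(s"Reachable pairs: ${reachablePairs(edges).size}")
  }
}
```

If the assumption about the generator holds, the number printed here should equal the count reported by the Spark job; a mismatch would point at the generator or the join logic rather than at Spark itself.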