Custom commands

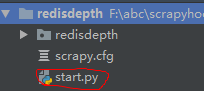

Create a script in the project directory that runs the command through `scrapy.cmdline.execute`:

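```python
#!/usr/bin/env python
# -*- coding:utf-8 -*-

from scrapy.cmdline import execute

if __name__ == '__main__':
    # Same as typing the command in a shell inside the project (where scrapy.cfg can be found)
    # execute(['scrapy', 'crawl', 'chouti', '--nolog'])  # run a single spider
    execute(['scrapy', 'crawlall', '--nolog'])  # run the custom crawlall command
```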

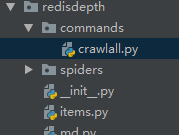

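Create a folder named `commands` at the same level as the `spiders` directory (with an `__init__.py`, so it is a regular Python package) and save the command below in it as `crawlall.py`. Scrapy names a custom command after its module file, so this one is invoked as `scrapy crawlall`: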

```python
#!/usr/bin/env python
# -*- coding:utf-8 -*-

from scrapy.commands import ScrapyCommand


class Command(ScrapyCommand):
    # Only available inside a Scrapy project (needs its settings and spiders)
    requires_project = True

    def syntax(self):
        return '[options]'

    def short_desc(self):
        # Shown in the command list printed by running `scrapy` with no arguments
        return 'Runs all of the spiders'

    def run(self, args, opts):
        # Names of every spider the project's spider loader can find
        spider_list = self.crawler_process.spider_loader.list()
        print(spider_list)
        for name in spider_list:
            # Schedule each spider; option values are passed through as spider kwargs
            self.crawler_process.crawl(name, **opts.__dict__)
        # Start the Twisted reactor once; blocks until all crawls have finished
        self.crawler_process.start()
```
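Scrapy finds custom commands by scanning the modules of the package named in `COMMANDS_MODULE`: every module that defines a `ScrapyCommand` subclass becomes a command named after the module file, which is why the class above should live in `crawlall.py`. The sketch below is a simplified, Scrapy-free model of that lookup under that assumption, not Scrapy's actual code; `toy_base`, `BaseCommand`, `demoproj`, `discover_commands` and `run_demo` are hypothetical names.

```python
import importlib
import inspect
import pkgutil
import sys
import tempfile
from pathlib import Path


def discover_commands(package_name, base_class):
    """Return {command_name: instance} for each base_class subclass defined in
    package_name's modules; the command name is the module's file name."""
    package = importlib.import_module(package_name)
    commands = {}
    for info in pkgutil.iter_modules(package.__path__):
        module = importlib.import_module(f"{package_name}.{info.name}")
        for obj in vars(module).values():
            if (inspect.isclass(obj) and issubclass(obj, base_class)
                    and obj is not base_class
                    and obj.__module__ == module.__name__):
                commands[module.__name__.split(".")[-1]] = obj()
    return commands


def run_demo():
    """Build a throwaway project containing commands/crawlall.py and list its commands."""
    with tempfile.TemporaryDirectory() as tmp:
        root = Path(tmp)
        # Hypothetical toy_base.BaseCommand plays the role of ScrapyCommand
        (root / "toy_base.py").write_text("class BaseCommand:\n    pass\n")
        commands_dir = root / "demoproj" / "commands"
        commands_dir.mkdir(parents=True)
        (root / "demoproj" / "__init__.py").write_text("")
        (commands_dir / "__init__.py").write_text("")
        (commands_dir / "crawlall.py").write_text(
            "from toy_base import BaseCommand\n\n"
            "class Command(BaseCommand):\n"
            "    def short_desc(self):\n"
            "        return 'Runs all of the spiders'\n"
        )
        sys.path.insert(0, tmp)
        importlib.invalidate_caches()
        try:
            base = importlib.import_module("toy_base")
            return sorted(discover_commands("demoproj.commands", base.BaseCommand))
        finally:
            # Undo the import side effects so the demo can be rerun cleanly
            sys.path.remove(tmp)
            for name in list(sys.modules):
                if name == "toy_base" or name.split(".")[0] == "demoproj":
                    del sys.modules[name]


print(run_demo())  # ['crawlall']
```

Renaming `crawlall.py` to, say, `runall.py` would rename the command in the same way, which is the behavior this model is meant to show.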

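Register the package in the project's settings file (`settings.py`), where `redisdepth` is this project's package name: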

```python
# Custom commands: Scrapy also looks up commands in this package
COMMANDS_MODULE = "redisdepth.commands"
```

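Signals

Signals are hooks the framework's authors left open for us to use however we like. A custom extension subscribes to them; save the following as `ext.py` in the project package (`redisdepth/ext.py`):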

```python
#!/usr/bin/env python
# -*- coding:utf-8 -*-

from scrapy import signals


class MyExtension(object):
    def __init__(self):
        # self.value = value
        pass

    @classmethod
    def from_crawler(cls, crawler):
        # Scrapy builds the extension through this hook; settings are readable here,
        # e.g. val = crawler.settings.getint('MMMM')
        ext = cls()

        # Register handlers for the signals this extension cares about
        crawler.signals.connect(ext.spider_opened, signal=signals.spider_opened)
        crawler.signals.connect(ext.spider_closed, signal=signals.spider_closed)

        return ext

    def spider_opened(self, spider):
        print('open')

    def spider_closed(self, spider):
        print('close')
```
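What `from_crawler` does can be modeled without Scrapy: the extension reads a value from the settings (the role the commented-out `getint('MMMM')` line hints at), registers bound methods against signal objects, and the engine later sends those signals with keyword arguments such as `spider`. In this sketch `ToySignalManager`, `ToyCrawler` and the `GREETING` setting are hypothetical; the real `crawler.signals` is Scrapy's `SignalManager`, which is built on PyDispatcher and does more (error catching, Deferred support).

```python
from collections import defaultdict

# Hypothetical stand-ins for the sentinel objects in scrapy/signals.py
spider_opened = object()
spider_closed = object()


class ToySignalManager:
    """Minimal model of crawler.signals: connect handlers, then send to them."""

    def __init__(self):
        self._receivers = defaultdict(list)

    def connect(self, receiver, signal):
        self._receivers[signal].append(receiver)

    def send(self, signal, **kwargs):
        # Call every handler registered for this signal and collect the results
        return [receiver(**kwargs) for receiver in self._receivers[signal]]


class ToyCrawler:
    """Hypothetical crawler exposing only .signals and .settings (a plain dict)."""

    def __init__(self, settings):
        self.signals = ToySignalManager()
        self.settings = settings


class MyExtension:
    def __init__(self, greeting):
        self.greeting = greeting
        self.events = []

    @classmethod
    def from_crawler(cls, crawler):
        # Read configuration at construction time, then subscribe to signals
        ext = cls(crawler.settings.get("GREETING", "hello"))
        crawler.signals.connect(ext.spider_opened, signal=spider_opened)
        crawler.signals.connect(ext.spider_closed, signal=spider_closed)
        return ext

    def spider_opened(self, spider):
        self.events.append(f"{self.greeting}: open {spider}")

    def spider_closed(self, spider):
        self.events.append(f"close {spider}")


crawler = ToyCrawler(settings={"GREETING": "hi"})
ext = MyExtension.from_crawler(crawler)
crawler.signals.send(spider_opened, spider="chouti")
crawler.signals.send(spider_closed, spider="chouti")
print(ext.events)  # ['hi: open chouti', 'close chouti']
```

Building the object in a classmethod keeps `__init__` free of framework details, so the extension can be constructed directly in a test, as done here with `ToyCrawler`.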

Enable the extension in `settings.py`:

```python
EXTENSIONS = {
    # 'scrapy.extensions.telnet.TelnetConsole': None,
    'redisdepth.ext.MyExtension': 666,  # dotted path to the class -> order value
}
```
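The number `666` is an order value: Scrapy merges `EXTENSIONS` with its built-in `EXTENSIONS_BASE`, drops any entry set to `None` (which is how the commented-out `TelnetConsole` line would disable the telnet console), and loads the rest sorted by that number. Since extensions rarely depend on each other, the exact value usually does not matter. The sketch below is a simplified model of that merge; `merge_components` is a hypothetical helper, not Scrapy's `build_component_list`, and `DEFAULTS` is an illustrative subset rather than the full default dict.

```python
def merge_components(base, custom):
    """Merge default and project dicts, drop entries set to None, sort by order value."""
    merged = {**base, **custom}  # project settings override the defaults
    enabled = {path: order for path, order in merged.items() if order is not None}
    # sorted() is stable, so components with equal order keep their insertion order
    return [path for path, _ in sorted(enabled.items(), key=lambda item: item[1])]


# Illustrative defaults (assumed subset)
DEFAULTS = {
    "scrapy.extensions.corestats.CoreStats": 0,
    "scrapy.extensions.telnet.TelnetConsole": 0,
}
PROJECT = {
    "scrapy.extensions.telnet.TelnetConsole": None,  # None disables a component
    "redisdepth.ext.MyExtension": 666,
}

print(merge_components(DEFAULTS, PROJECT))
# ['scrapy.extensions.corestats.CoreStats', 'redisdepth.ext.MyExtension']
```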

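Built-in signals, as defined in `scrapy/signals.py` in the Scrapy version used here (newer releases add more):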

```python
"""
Scrapy signals

These signals are documented in docs/topics/signals.rst. Please don't add new
signals here without documenting them there.
"""

engine_started = object()
engine_stopped = object()
spider_opened = object()
spider_idle = object()
spider_closed = object()
spider_error = object()
request_scheduled = object()
request_dropped = object()
request_reached_downloader = object()
response_received = object()
response_downloaded = object()
item_scraped = object()
item_dropped = object()
item_error = object()

# for backward compatibility
stats_spider_opened = spider_opened
stats_spider_closing = spider_closed
stats_spider_closed = spider_closed

item_passed = item_scraped

request_received = request_scheduled
```
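Each signal is just a unique `object()` used as a key, so signals are matched by identity, not by name. The backward-compatibility aliases at the bottom are therefore the very same objects, and a handler connected via `stats_spider_closed` fires whenever `spider_closed` is sent. A plain dictionary is enough to show this; `receivers` and `"log_close"` are hypothetical and not how Scrapy actually stores receivers.

```python
spider_closed = object()
stats_spider_closed = spider_closed  # alias: the same object, not a copy
spider_idle = object()

receivers = {}
# Register a (hypothetical) handler name under the alias
receivers.setdefault(stats_spider_closed, []).append("log_close")

print(stats_spider_closed is spider_closed)  # True
print(receivers.get(spider_closed, []))      # ['log_close']
print(receivers.get(spider_idle, []))        # []
```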