1. IP planning

Note: the public IP, virtual IP, and SCAN IP must all be on the same subnet.

In my setup, each virtual machine has two network adapters: one NAT adapter (192.168.88.X) and one host-only adapter (192.168.94.X).

| Hostname | Alias | Type | IP address |
|---|---|---|---|
| node1.localdomain | node1 | public IP | 192.168.88.191 |
| node1-vip.localdomain | node1-vip | virtual IP | 192.168.88.193 |
| node1-priv.localdomain | node1-priv | private IP | 192.168.94.11 |
| node2.localdomain | node2 | public IP | 192.168.88.192 |
| node2-vip.localdomain | node2-vip | virtual IP | 192.168.88.194 |
| node2-priv.localdomain | node2-priv | private IP | 192.168.94.12 |
| scan-cluster.localdomain | scan-cluster | SCAN IP | 192.168.88.203 |
| dg.localdomain | | | 192.168.88.212 |

DNS server IP: 192.168.88.11
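The same-subnet rule above can be checked mechanically before any software is installed. The following is only a sketch: it assumes a 255.255.255.0 netmask (the plan implies it but never states it), and the helper names `net24` and `same_subnet24` are made up for this illustration.

```shell
#!/bin/bash
# Return the first three octets of an IPv4 address (the /24 network part).
# Assumption: the netmask is 255.255.255.0.
net24() {
    echo "${1%.*}"
}

# Succeed only if every address passed in shares one /24 prefix.
same_subnet24() {
    local first ip
    first=$(net24 "$1")
    for ip in "$@"; do
        [ "$(net24 "$ip")" = "$first" ] || return 1
    done
    return 0
}

# Public, virtual and SCAN addresses taken from the plan above.
if same_subnet24 192.168.88.191 192.168.88.192 \
                 192.168.88.193 192.168.88.194 192.168.88.203; then
    echo "public/VIP/SCAN addresses share one /24 subnet"
else
    echo "address plan mixes subnets" >&2
fi
```

The private addresses (192.168.94.X) are deliberately left out of the check, since the interconnect is expected to live on its own network.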

2. Install Oracle Linux 6.10

The installation itself is not covered here. During installation, configure the public IP and private IP on node1 and node2, and a single IP on dg.

After installation, verify that node1 can ping node2 and dg, and that node2 can ping node1 and dg.

On node1:

    ping 192.168.88.192
    ping 192.168.94.12
    ping 192.168.88.212

On node2:

    ping 192.168.88.191
    ping 192.168.94.11
    ping 192.168.88.212

3. Configure hostname resolution (/etc/hosts)

Use the same /etc/hosts content on node1 and node2:

    127.0.0.1   localhost
    ::1         localhost localhost.localdomain localhost6 localhost6.localdomain6
    #node1
    192.168.88.191   node1.localdomain        node1
    192.168.88.193   node1-vip.localdomain    node1-vip
    192.168.94.11    node1-priv.localdomain   node1-priv
    #node2
    192.168.88.192   node2.localdomain        node2
    192.168.88.194   node2-vip.localdomain    node2-vip
    192.168.94.12    node2-priv.localdomain   node2-priv
    #scan-ip
    192.168.88.203   scan-cluster.localdomain scan-cluster

Test:

On node1:

    ping node2
    ping node2-priv

On node2:

    ping node1
    ping node1-priv

4. Install and configure the DNS server (192.168.88.11)

Install the DNS packages:

    [root@feiht Packages]# rpm -ivh bind-9.8.2-0.68.rc1.el6.x86_64.rpm
    warning: bind-9.8.2-0.68.rc1.el6.x86_64.rpm: Header V3 RSA/SHA256 Signature, key ID ec551f03: NOKEY
    Preparing...                ########################################### [100%]
       1:bind                   ########################################### [100%]
    [root@feiht Packages]# rpm -ivh bind-chroot-9.8.2-0.68.rc1.el6.x86_64.rpm
    warning: bind-chroot-9.8.2-0.68.rc1.el6.x86_64.rpm: Header V3 RSA/SHA256 Signature, key ID ec551f03: NOKEY
    Preparing...                ########################################### [100%]
       1:bind-chroot            ########################################### [100%]

Configure /etc/named.conf:

Notes:

Replace the 127.0.0.1 and localhost values in this file with any, and keep a space on both sides of the value.

Back up the original file before editing. Use cp -p so that the copy keeps the original ownership and permissions; otherwise, restoring the backup after a bad edit can leave the DNS service unable to start because of permission problems.

    [root@feiht /]# cd /etc
    [root@feiht etc]# cp -p named.conf named.conf.bak

After the change, the file looks like this:

    //
    // named.conf
    //
    // Provided by Red Hat bind package to configure the ISC BIND named(8) DNS
    // server as a caching only nameserver (as a localhost DNS resolver only).
    //
    // See /usr/share/doc/bind*/sample/ for example named configuration files.
    //
    options {
            listen-on port 53 { any; };
            listen-on-v6 port 53 { ::1; };
            directory       "/var/named";
            dump-file       "/var/named/data/cache_dump.db";
            statistics-file "/var/named/data/named_stats.txt";
            memstatistics-file "/var/named/data/named_mem_stats.txt";
            allow-query     { any; };
            recursion yes;
            dnssec-enable yes;
            dnssec-validation yes;
            /* Path to ISC DLV key */
            bindkeys-file "/etc/named.iscdlv.key";
            managed-keys-directory "/var/named/dynamic";
    };
    logging {
            channel default_debug {
                    file "data/named.run";
                    severity dynamic;
            };
    };
    zone "." IN {
            type hint;
            file "named.ca";
    };
    include "/etc/named.rfc1912.zones";
    include "/etc/named.root.key";

Configure /etc/named.rfc1912.zones:

    [root@feiht /]# cd /etc
    [root@feiht etc]# cp -p named.rfc1912.zones named.rfc1912.zones.bak

Add the following two zone definitions to /etc/named.rfc1912.zones:

    zone "localdomain" IN {
            type master;
            file "localdomain.zone";
            allow-update { none; };
    };
    zone "88.168.192.in-addr.arpa" IN {
            type master;
            file "88.168.192.in-addr.arpa";
            allow-update { none; };
    };

After the change, the file looks like this:

    zone "localhost.localdomain" IN {
            type master;
            file "named.localhost";
            allow-update { none; };
    };
    zone "localdomain" IN {
            type master;
            file "localdomain.zone";
            allow-update { none; };
    };
    zone "localhost" IN {
            type master;
            file "named.localhost";
            allow-update { none; };
    };
    zone "1.0.0.0.0.0.0.0.0.0.0.0.0.0.0.0.0.0.0.0.0.0.0.0.0.0.0.0.0.0.0.0.ip6.arpa" IN {
            type master;
            file "named.loopback";
            allow-update { none; };
    };
    zone "1.0.0.127.in-addr.arpa" IN {
            type master;
            file "named.loopback";
            allow-update { none; };
    };
    zone "0.in-addr.arpa" IN {
            type master;
            file "named.empty";
            allow-update { none; };
    };
    zone "88.168.192.in-addr.arpa" IN {
            type master;
            file "88.168.192.in-addr.arpa";
            allow-update { none; };
    };

Configure the forward and reverse zone data files:

    [root@feiht ~]# cd /var/named/

Create the forward and reverse files:

    [root@feiht named]# cp -p named.localhost localdomain.zone
    [root@feiht named]# cp -p named.localhost 88.168.192.in-addr.arpa

Append the following record to the forward zone file localdomain.zone:

    scan-cluster IN A 192.168.88.203

After the change, the file looks like this:

    $TTL 1D
    @       IN SOA  @ rname.invalid. (
                                    0       ; serial
                                    1D      ; refresh
                                    1H      ; retry
                                    1W      ; expire
                                    3H )    ; minimum
            NS      @
            A       127.0.0.1
            AAAA    ::1
    scan-cluster    A       192.168.88.203

Append the following record to the reverse zone file 88.168.192.in-addr.arpa:

    203 IN PTR scan-cluster.localdomain.

After the change, the file looks like this:

    $TTL 1D
    @       IN SOA  @ rname.invalid. (
                                    1997022700 ; serial
                                    28800      ; refresh
                                    1400       ; retry
                                    3600000    ; expire
                                    86400 )    ; minimum
            NS      @
            A       127.0.0.1
            AAAA    ::1
    203     IN PTR  scan-cluster.localdomain.
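The reverse zone name and the PTR label both follow from the SCAN address: the first three octets are reversed for the zone name and the last octet becomes the record's owner. A small sketch of that derivation, assuming a /24 network; the function names `reverse_zone24` and `ptr_record` are invented for this example.

```shell
#!/bin/bash
# Derive the reverse zone name for a /24 network from any address in it.
reverse_zone24() {
    local a b c d
    IFS=. read -r a b c d <<< "$1"
    echo "$c.$b.$a.in-addr.arpa"
}

# Build the PTR record line for one host: last octet, then the FQDN with a trailing dot.
ptr_record() {
    local ip=$1 fqdn=$2
    echo "${ip##*.} IN PTR ${fqdn}."
}

reverse_zone24 192.168.88.203                          # 88.168.192.in-addr.arpa
ptr_record 192.168.88.203 scan-cluster.localdomain     # 203 IN PTR scan-cluster.localdomain.
```

BIND also ships the `named-checkconf` and `named-checkzone` utilities, which can be run against the edited files before the service is restarted.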

If named reports a permission problem with the zone files (I did not capture the exact error), change the ownership of the forward and reverse files to named:

    [root@feiht named]# chown -R named:named localdomain.zone
    [root@feiht named]# chown -R named:named 88.168.192.in-addr.arpa

Edit /etc/resolv.conf on the DNS server, then make it immutable so that it is not rewritten automatically:

    [root@feiht etc]# cat /etc/resolv.conf
    # Generated by NetworkManager
    search localdomain
    nameserver 192.168.88.11
    [root@feiht named]# chattr +i /etc/resolv.conf

Stop the firewall on the DNS server and disable it at boot:

    [root@feiht etc]# service iptables stop
    [root@oradb ~]# chkconfig iptables off

Start the DNS service (the init script also supports status, stop, and restart):

    [root@feiht named]# /etc/rc.d/init.d/named status
    [root@feiht named]# /etc/rc.d/init.d/named start
    [root@feiht named]# /etc/rc.d/init.d/named stop
    [root@feiht named]# /etc/rc.d/init.d/named restart

Then add the following to /etc/resolv.conf on both RAC nodes, node1 and node2:

    search localdomain
    nameserver 192.168.88.11

Verify on node1 that the SCAN IP resolves in both directions:

    [root@node1 etc]# nslookup 192.168.88.203
    Server:         192.168.88.11
    Address:        192.168.88.11#53

    203.88.168.192.in-addr.arpa     name = scan-cluster.localdomain.

    [root@node1 etc]# nslookup scan-cluster.localdomain
    Server:         192.168.88.11
    Address:        192.168.88.11#53

    Name:   scan-cluster.localdomain
    Address: 192.168.88.203

    [root@node1 etc]# nslookup scan-cluster
    Server:         192.168.88.11
    Address:        192.168.88.11#53

    Name:   scan-cluster.localdomain
    Address: 192.168.88.203

Test node2 the same way.
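The manual nslookup checks can be wrapped in a small script so that both nodes are tested identically. This is a sketch rather than a definitive tool: `answer_addresses` and `check_scan` are hypothetical helper names, and the parsing assumes the nslookup output layout shown above (a `Name:` line followed by an `Address:` line).

```shell
#!/bin/bash
# Print the address(es) nslookup reports for the queried name.
# Reads nslookup output on stdin. The server's own "Address: ...#53" line is
# skipped because it appears before any "Name:" line.
answer_addresses() {
    awk '/^Name:/ {seen = 1; next} seen && /^Address:/ {print $2}'
}

# Compare the resolved address with the one expected from the IP plan.
check_scan() {
    local name=$1 expected=$2 got
    got=$(nslookup "$name" 2>/dev/null | answer_addresses | head -n 1)
    if [ "$got" = "$expected" ]; then
        echo "OK   $name -> $got"
    else
        echo "FAIL $name -> ${got:-no answer} (expected $expected)"
        return 1
    fi
}

# Example, to be run on node1 and node2 once resolv.conf points at the DNS server:
#   check_scan scan-cluster.localdomain 192.168.88.203
#   check_scan scan-cluster 192.168.88.203
```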

5. Pre-installation preparation

5.1 Create the users, set their passwords, and edit their profiles on node1 and node2

User plan:

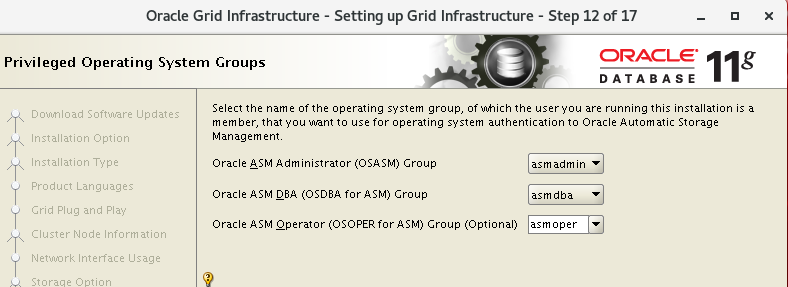

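| Group name | Group ID | Group info | Oracle user (1101) | Grid user (1100) |
|---|---|---|---|---|
| oinstall | 1000 | Inventory Group | Y | Y |
| dba | 1300 | OSDBA Group | Y | |
| oper | 1301 | OSOPER Group | Y | |
| asmadmin | 1200 | OSASM | | Y |
| asmdba | 1201 | OSDBA for ASM | Y | Y |
| asmoper | 1202 | OSOPER for ASM | | Y |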

Shell script (node1):

Note: before running this script on node2, change the grid user's ORACLE_SID to +ASM2, the oracle user's ORACLE_SID to devdb2, and ORACLE_HOSTNAME to node2.localdomain.

    echo "Now create 6 groups named 'oinstall','dba','asmadmin','asmdba','asmoper','oper'"
    echo "Plus 2 users named 'oracle','grid',Also setting the Environment"

    # Groups and the grid user (Grid Infrastructure owner)
    groupadd -g 1000 oinstall
    groupadd -g 1200 asmadmin
    groupadd -g 1201 asmdba
    groupadd -g 1202 asmoper
    useradd -u 1100 -g oinstall -G asmadmin,asmdba,asmoper -d /home/grid -s /bin/bash -c "grid Infrastructure Owner" grid
    echo "grid" | passwd --stdin grid

    # Environment for the grid user
    echo 'export PS1="`/bin/hostname -s`-> "' >> /home/grid/.bash_profile
    echo "export TMP=/tmp" >> /home/grid/.bash_profile
    echo 'export TMPDIR=$TMP' >> /home/grid/.bash_profile
    echo "export ORACLE_SID=+ASM1" >> /home/grid/.bash_profile
    echo "export ORACLE_BASE=/u01/app/grid" >> /home/grid/.bash_profile
    echo "export ORACLE_HOME=/u01/app/11.2.0/grid" >> /home/grid/.bash_profile
    echo "export ORACLE_TERM=xterm" >> /home/grid/.bash_profile
    echo "export NLS_DATE_FORMAT='yyyy/mm/dd hh24:mi:ss'" >> /home/grid/.bash_profile
    echo 'export TNS_ADMIN=$ORACLE_HOME/network/admin' >> /home/grid/.bash_profile
    echo 'export PATH=/usr/sbin:$PATH' >> /home/grid/.bash_profile
    echo 'export PATH=$ORACLE_HOME/bin:$PATH' >> /home/grid/.bash_profile
    echo 'export LD_LIBRARY_PATH=$ORACLE_HOME/lib:/lib:/usr/lib' >> /home/grid/.bash_profile
    echo 'export CLASSPATH=$ORACLE_HOME/JRE:$ORACLE_HOME/jlib:$ORACLE_HOME/rdbms/jlib' >> /home/grid/.bash_profile
    echo "export EDITOR=vi" >> /home/grid/.bash_profile
    echo "export LANG=en_US" >> /home/grid/.bash_profile
    echo "export NLS_LANG=AMERICAN_AMERICA.AL32UTF8" >> /home/grid/.bash_profile
    echo "umask 022" >> /home/grid/.bash_profile

    # Groups and the oracle user (database software owner)
    groupadd -g 1300 dba
    groupadd -g 1301 oper
    useradd -u 1101 -g oinstall -G dba,oper,asmdba -d /home/oracle -s /bin/bash -c "Oracle Software Owner" oracle
    echo "oracle" | passwd --stdin oracle

    # Environment for the oracle user
    echo 'export PS1="`/bin/hostname -s`-> "' >> /home/oracle/.bash_profile
    echo "export TMP=/tmp" >> /home/oracle/.bash_profile
    echo 'export TMPDIR=$TMP' >> /home/oracle/.bash_profile
    echo "export ORACLE_HOSTNAME=node1.localdomain" >> /home/oracle/.bash_profile
    echo "export ORACLE_SID=devdb1" >> /home/oracle/.bash_profile
    echo "export ORACLE_BASE=/u01/app/oracle" >> /home/oracle/.bash_profile
    echo 'export ORACLE_HOME=$ORACLE_BASE/product/11.2.0/db_1' >> /home/oracle/.bash_profile
    echo "export ORACLE_UNQNAME=devdb" >> /home/oracle/.bash_profile
    echo 'export TNS_ADMIN=$ORACLE_HOME/network/admin' >> /home/oracle/.bash_profile
    echo "export ORACLE_TERM=xterm" >> /home/oracle/.bash_profile
    echo 'export PATH=/usr/sbin:$PATH' >> /home/oracle/.bash_profile
    echo 'export PATH=$ORACLE_HOME/bin:$PATH' >> /home/oracle/.bash_profile
    echo 'export LD_LIBRARY_PATH=$ORACLE_HOME/lib:/lib:/usr/lib' >> /home/oracle/.bash_profile
    echo 'export CLASSPATH=$ORACLE_HOME/JRE:$ORACLE_HOME/jlib:$ORACLE_HOME/rdbms/jlib' >> /home/oracle/.bash_profile
    echo "export EDITOR=vi" >> /home/oracle/.bash_profile
    echo "export LANG=en_US" >> /home/oracle/.bash_profile
    echo "export NLS_LANG=AMERICAN_AMERICA.AL32UTF8" >> /home/oracle/.bash_profile
    echo "export NLS_DATE_FORMAT='yyyy/mm/dd hh24:mi:ss'" >> /home/oracle/.bash_profile
    echo "umask 022" >> /home/oracle/.bash_profile

    echo "The groups and users have been created"
    echo "The environment for grid and oracle has also been set successfully"

Check that the users and their home directories were created:

    [root@node1 shell]# id oracle
    uid=1101(oracle) gid=1000(oinstall) groups=1000(oinstall),1201(asmdba),1300(dba),1301(oper)
    [root@node1 shell]# id grid
    uid=1100(grid) gid=1000(oinstall) groups=1000(oinstall),1200(asmadmin),1201(asmdba),1202(asmoper)
    [root@node1 shell]# groups oracle
    oracle : oinstall asmdba dba oper
    [root@node1 shell]# groups grid
    grid : oinstall asmadmin asmdba asmoper
    [root@node1 home]# ll /home
    total 8
    drwx------. 3 grid   oinstall 4096 Feb  5 17:18 grid
    drwx------. 3 oracle oinstall 4096 Feb  5 17:18 oracle
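Rather than reading the id output by eye, the group memberships can be compared against the plan in a script. A minimal sketch follows; `has_groups` and `check_user` are hypothetical helper names, and the group lists in the example come from the user plan in section 5.1.

```shell
#!/bin/bash
# Succeed if the space-separated group list in $1 contains every group named after it.
# Whole-word matching keeps "dba" from matching inside "asmdba".
has_groups() {
    local have=" $1 " g
    shift
    for g in "$@"; do
        case "$have" in
            *" $g "*) ;;
            *) echo "missing group: $g" >&2; return 1 ;;
        esac
    done
}

# Check a real account; id -Gn prints the names of all groups the user belongs to.
check_user() {
    local user=$1
    shift
    has_groups "$(id -Gn "$user" 2>/dev/null)" "$@"
}

# Example, to be run as root on each node:
#   check_user grid   oinstall asmadmin asmdba asmoper
#   check_user oracle oinstall dba oper asmdba
```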

5.2 Create the directories and set their ownership

Directory and environment plan:

| Environment variable | Grid user | Oracle user |
|---|---|---|
| ORACLE_BASE | /u01/app/grid | /u01/app/oracle |
| ORACLE_HOME | /u01/app/11.2.0/grid | /u01/app/oracle/product/11.2.0/db_1 |
| ORACLE_SID [node1] | +ASM1 | devdb1 |
| ORACLE_SID [node2] | +ASM2 | devdb2 |

Shell script:

    echo "Now create the necessary directories for the oracle and grid users and change their ownership..."
    mkdir -p /u01/app/grid
    mkdir -p /u01/app/11.2.0/grid
    mkdir -p /u01/app/oracle
    chown -R oracle:oinstall /u01
    chown -R grid:oinstall /u01/app/grid
    chown -R grid:oinstall /u01/app/11.2.0
    chmod -R 775 /u01
    echo "The directories for the oracle and grid users have been created and their ownership has been set"

5.3 Edit /etc/security/limits.conf to set the shell limits for the oracle and grid users

Shell script:

    echo "Now modify /etc/security/limits.conf, after backing it up as /etc/security/limits.conf.bak"
    cp /etc/security/limits.conf /etc/security/limits.conf.bak
    echo "oracle soft nproc 2047" >> /etc/security/limits.conf
    echo "oracle hard nproc 16384" >> /etc/security/limits.conf
    echo "oracle soft nofile 1024" >> /etc/security/limits.conf
    echo "oracle hard nofile 65536" >> /etc/security/limits.conf
    echo "grid soft nproc 2047" >> /etc/security/limits.conf
    echo "grid hard nproc 16384" >> /etc/security/limits.conf
    echo "grid soft nofile 1024" >> /etc/security/limits.conf
    echo "grid hard nofile 65536" >> /etc/security/limits.conf
    echo "Modifying /etc/security/limits.conf has succeeded."
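After the script runs, it can be worth confirming that each user/type/item entry actually landed in the file. The sketch below reads a limits.conf-style file and prints the configured value; `limit_value` is a hypothetical helper, and treating the last matching line as the effective one is a simplification made for this illustration.

```shell
#!/bin/bash
# Print the value configured for a user/type/item triple in a limits.conf-style file.
# Fields are: <domain> <type> <item> <value>. Comment lines never match because
# their first field starts with "#". If several lines match, the last one is printed.
limit_value() {
    local file=$1 user=$2 type=$3 item=$4
    awk -v u="$user" -v t="$type" -v i="$item" \
        '$1 == u && $2 == t && $3 == i {v = $4} END {if (v != "") print v}' "$file"
}

# Example on a node after running the script above:
#   limit_value /etc/security/limits.conf grid hard nofile
#   limit_value /etc/security/limits.conf oracle soft nproc
```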

5.4 Edit the /etc/pam.d/login configuration file

Shell script:

    echo "Now modify /etc/pam.d/login, after backing it up as /etc/pam.d/login.bak"
    cp /etc/pam.d/login /etc/pam.d/login.bak
    # Use the bare module name so that PAM finds the library in its own module directory;
    # on x86_64 the hard-coded path /lib/security/pam_limits.so does not exist.
    echo "session required pam_limits.so" >> /etc/pam.d/login
    echo "Modifying /etc/pam.d/login has succeeded."

5.5 Edit /etc/profile

Shell script:

    echo "Now modify /etc/profile, after backing it up as /etc/profile.bak"
    cp /etc/profile /etc/profile.bak
    echo 'if [ $USER = "oracle" ]||[ $USER = "grid" ]; then' >> /etc/profile
    echo 'if [ $SHELL = "/bin/ksh" ]; then' >> /etc/profile
    echo 'ulimit -p 16384' >> /etc/profile
    echo 'ulimit -n 65536' >> /etc/profile
    echo 'else' >> /etc/profile
    echo 'ulimit -u 16384 -n 65536' >> /etc/profile
    echo 'fi' >> /etc/profile
    echo 'fi' >> /etc/profile
    echo "Modifying /etc/profile has succeeded."

5.6 Edit the kernel parameter file /etc/sysctl.conf

Shell script:

    echo "Now modify /etc/sysctl.conf, after backing it up as /etc/sysctl.conf.bak"
    cp /etc/sysctl.conf /etc/sysctl.conf.bak
    echo "fs.aio-max-nr = 1048576" >> /etc/sysctl.conf
    echo "fs.file-max = 6815744" >> /etc/sysctl.conf
    echo "kernel.shmall = 2097152" >> /etc/sysctl.conf
    echo "kernel.shmmax = 1054472192" >> /etc/sysctl.conf
    echo "kernel.shmmni = 4096" >> /etc/sysctl.conf
    echo "kernel.sem = 250 32000 100 128" >> /etc/sysctl.conf
    echo "net.ipv4.ip_local_port_range = 9000 65500" >> /etc/sysctl.conf
    echo "net.core.rmem_default = 262144" >> /etc/sysctl.conf
    echo "net.core.rmem_max = 4194304" >> /etc/sysctl.conf
    echo "net.core.wmem_default = 262144" >> /etc/sysctl.conf
    echo "net.core.wmem_max = 1048576" >> /etc/sysctl.conf
    echo "net.ipv4.tcp_wmem = 262144 262144 262144" >> /etc/sysctl.conf
    echo "net.ipv4.tcp_rmem = 4194304 4194304 4194304" >> /etc/sysctl.conf
    echo "Modifying /etc/sysctl.conf has succeeded."
    echo "Now make the changes take effect....."
    sysctl -p
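The scripts in sections 5.3 to 5.6 append with `>>`, so running any of them a second time duplicates every line. One possible way to make the appends repeatable is sketched below; `append_once` is a hypothetical helper, not part of the original scripts.

```shell
#!/bin/bash
# Append a line to a file only if that exact line is not already present.
# grep -x matches whole lines, -F treats the text literally, -q suppresses output.
append_once() {
    local line=$1 file=$2
    grep -qxF -- "$line" "$file" 2>/dev/null || echo "$line" >> "$file"
}

# Example, replacing the plain echo ... >> lines in the script above:
#   append_once "fs.file-max = 6815744" /etc/sysctl.conf
#   append_once "kernel.shmmni = 4096" /etc/sysctl.conf
```

Note that this only guards against exact duplicates; a line with the same key but a different value would still be appended.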

5.7 Stop the NTP service

    [root@node1 /]# service ntpd status
    ntpd is stopped
    [root@node1 /]# chkconfig ntpd off
    [root@node1 etc]# ls |grep ntp
    ntp
    ntp.conf
    [root@node1 etc]# cp -p /etc/ntp.conf /etc/ntp.conf.bak
    [root@node1 etc]# ls |grep ntp
    ntp
    ntp.conf
    ntp.conf.bak
    [root@node1 etc]# rm -rf /etc/ntp.conf
    [root@node1 etc]# ls |grep ntp
    ntp
    ntp.conf.bak
    [root@node1 etc]#

6. Repeat step 5 on node2 to configure the node2 node

Note: when running the user-creation script on node2, change the grid user's ORACLE_SID to +ASM2, the oracle user's ORACLE_SID to devdb2, and ORACLE_HOSTNAME to node2.localdomain.
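The three node-specific values can be rewritten with sed instead of by hand, which avoids missing one of them. This is a sketch only: `to_node2` is a hypothetical helper, and the file names in the usage comment are placeholders, since the original does not name the script file.

```shell
#!/bin/bash
# Rewrite the node1-specific values of the user-creation script for node2.
# Reads the node1 script on stdin and writes the node2 variant on stdout.
# In sed's basic regular expressions "+" is a literal character, so +ASM1 needs no escaping.
to_node2() {
    sed -e 's/ORACLE_SID=+ASM1/ORACLE_SID=+ASM2/' \
        -e 's/ORACLE_SID=devdb1/ORACLE_SID=devdb2/' \
        -e 's/ORACLE_HOSTNAME=node1\.localdomain/ORACLE_HOSTNAME=node2.localdomain/'
}

# Example with placeholder file names:
#   to_node2 < create_users_node1.sh > create_users_node2.sh
```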

7. Configure SSH user equivalence for the oracle and grid users

node1:

    [root@node1 etc]# su - oracle
    node1-> env | grep ORA
    ORACLE_UNQNAME=devdb
    ORACLE_SID=devdb1
    ORACLE_BASE=/u01/app/oracle
    ORACLE_HOSTNAME=node1.localdomain
    ORACLE_TERM=xterm
    ORACLE_HOME=/u01/app/oracle/product/11.2.0/db_1
    node1-> pwd
    /home/oracle
    node1-> mkdir ~/.ssh
    node1-> chmod 700 ~/.ssh
    node1-> ls -al
    total 36
    drwx------. 4 oracle oinstall 4096 Feb  5 18:53 .
    drwxr-xr-x. 4 root   root     4096 Feb  5 17:10 ..
    -rw-------. 1 oracle oinstall  167 Feb  5 18:16 .bash_history
    -rw-r--r--. 1 oracle oinstall   18 Mar 22  2017 .bash_logout
    -rw-r--r--. 1 oracle oinstall  823 Feb  5 17:18 .bash_profile
    -rw-r--r--. 1 oracle oinstall  124 Mar 22  2017 .bashrc
    drwxr-xr-x. 2 oracle oinstall 4096 Nov 20  2010 .gnome2
    drwx------. 2 oracle oinstall 4096 Feb  5 18:53 .ssh
    -rw-------. 1 oracle oinstall  651 Feb  5 18:16 .viminfo
    node1-> ssh-keygen -t rsa
    Generating public/private rsa key pair.
    Enter file in which to save the key (/home/oracle/.ssh/id_rsa):
    Enter passphrase (empty for no passphrase):
    Enter same passphrase again:
    Your identification has been saved in /home/oracle/.ssh/id_rsa.
    Your public key has been saved in /home/oracle/.ssh/id_rsa.pub.
    The key fingerprint is:
    b9:97:e7:1b:1c:e4:1d:d9:31:47:e1:d1:90:7f:27:e7 oracle@node1.localdomain
    The key's randomart image is:
    node1-> ssh-keygen -t dsa
    Generating public/private dsa key pair.
    Enter file in which to save the key (/home/oracle/.ssh/id_dsa):
    Enter passphrase (empty for no passphrase):
    Enter same passphrase again:
    Your identification has been saved in /home/oracle/.ssh/id_dsa.
    Your public key has been saved in /home/oracle/.ssh/id_dsa.pub.
    The key fingerprint is:
    b7:70:24:43:ab:90:74:b0:49:dc:a9:bf:e7:19:17:ef oracle@node1.localdomain
    The key's randomart image is:

Run the same commands on node2.

Back on node1, collect the public keys of both nodes into authorized_keys and copy the file to node2:

    node1-> id
    uid=1101(oracle) gid=1000(oinstall) groups=1000(oinstall),1201(asmdba),1300(dba),1301(oper) context=unconfined_u:unconfined_r:unconfined_t:s0-s0:c0.c1023
    node1-> pwd
    /home/oracle
    node1-> cd ~/.ssh
    node1-> ll
    total 16
    -rw-------. 1 oracle oinstall  668 Feb  5 18:55 id_dsa
    -rw-r--r--. 1 oracle oinstall  614 Feb  5 18:55 id_dsa.pub
    -rw-------. 1 oracle oinstall 1675 Feb  5 18:54 id_rsa
    -rw-r--r--. 1 oracle oinstall  406 Feb  5 18:54 id_rsa.pub
    node1-> cat ~/.ssh/id_rsa.pub >>~/.ssh/authorized_keys
    node1-> cat ~/.ssh/id_dsa.pub >>~/.ssh/authorized_keys
    node1-> ll
    total 20
    -rw-r--r--. 1 oracle oinstall 1020 Feb  5 19:05 authorized_keys
    -rw-------. 1 oracle oinstall  668 Feb  5 18:55 id_dsa
    -rw-r--r--. 1 oracle oinstall  614 Feb  5 18:55 id_dsa.pub
    -rw-------. 1 oracle oinstall 1675 Feb  5 18:54 id_rsa
    -rw-r--r--. 1 oracle oinstall  406 Feb  5 18:54 id_rsa.pub
    node1-> ssh node2 cat ~/.ssh/id_rsa.pub >>~/.ssh/authorized_keys
    The authenticity of host 'node2 (192.168.88.192)' can't be established.
    RSA key fingerprint is cd:fd:bd:72:7d:2f:54:b3:d7:32:30:de:67:bb:6f:8b.
    Are you sure you want to continue connecting (yes/no)? yes
    Warning: Permanently added 'node2,192.168.88.192' (RSA) to the list of known hosts.
    oracle@node2's password:
    node1-> ssh node2 cat ~/.ssh/id_dsa.pub >>~/.ssh/authorized_keys
    oracle@node2's password:
    node1-> scp ~/.ssh/authorized_keys node2:~/.ssh/authorized_keys
    oracle@node2's password:
    authorized_keys
    node1->

On node1, verify that SSH equivalence works (the first connection to each name asks you to accept the host key):

    node1-> ssh node1 date
    The authenticity of host 'node1 (192.168.88.191)' can't be established.
    RSA key fingerprint is b2:a4:19:c0:85:b5:df:f2:8d:16:d8:b2:83:5b:21:19.
    Are you sure you want to continue connecting (yes/no)? yes
    Warning: Permanently added 'node1,192.168.88.191' (RSA) to the list of known hosts.
    Fri Feb 5 19:15:02 CST 2021
    node1-> ssh node2 date
    Fri Feb 5 19:15:57 CST 2021
    node1-> ssh node1-priv date
    ....omitted...
    node1-> ssh node2-priv date
    ....omitted...
    node1-> ssh node1.localdomain date
    ....omitted...
    node1-> ssh node2.localdomain date
    ....omitted...
    node1-> ssh node1-priv.localdomain date
    ....omitted...
    node1-> ssh node2-priv.localdomain date
    ....omitted...

Run the same commands on node2 to verify SSH equivalence there.

Finally, run the commands once more on both node1 and node2. If no password is requested, SSH equivalence between node1 and node2 is configured correctly:

node1:

    node1-> ssh node1 date
    Fri Feb 5 19:36:22 CST 2021
    node1-> ssh node2 date
    Fri Feb 5 19:36:26 CST 2021
    node1-> ssh node1-priv date
    Fri Feb 5 19:36:34 CST 2021
    node1-> ssh node2-priv date
    Fri Feb 5 19:36:38 CST 2021
    node1-> ssh node1.localdomain date
    Fri Feb 5 19:37:51 CST 2021
    node1-> ssh node2.localdomain date
    Fri Feb 5 19:37:54 CST 2021
    node1-> ssh node2-priv.localdomain date
    Fri Feb 5 19:38:01 CST 2021
    node1-> ssh node1-priv.localdomain date
    Fri Feb 5 19:38:08 CST 2021

node2:

    node2-> ssh node1 date
    Fri Feb 5 19:49:20 CST 2021
    node2-> ssh node2 date
    Fri Feb 5 19:49:23 CST 2021
    node2-> ssh node1-priv date
    Fri Feb 5 19:49:29 CST 2021
    node2-> ssh node2-priv date
    Fri Feb 5 19:49:32 CST 2021
    node2-> ssh node1.localdomain date
    Fri Feb 5 19:49:40 CST 2021
    node2-> ssh node2.localdomain date
    Fri Feb 5 19:49:43 CST 2021
    node2-> ssh node2-priv.localdomain date
    Fri Feb 5 19:49:50 CST 2021
    node2-> ssh node1-priv.localdomain date
    Fri Feb 5 19:49:55 CST 2021

SSH equivalence for the oracle user is now configured.
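The eight `ssh ... date` checks lend themselves to a loop. The sketch below assumes the host naming from the IP plan; `equiv_hosts` and `check_equivalence` are hypothetical helper names. `BatchMode=yes` is a standard OpenSSH option that makes ssh fail instead of prompting, so a host that still asks for a password (or whose host key has not been accepted yet) shows up as FAIL.

```shell
#!/bin/bash
# List every name a node must reach without a password:
# short and fully qualified, public and private.
equiv_hosts() {
    local domain=$1 node
    shift
    for node in "$@"; do
        echo "$node"
        echo "$node-priv"
        echo "$node.$domain"
        echo "$node-priv.$domain"
    done
}

# Try each host non-interactively and report the result.
check_equivalence() {
    local host rc=0
    for host in $(equiv_hosts localdomain node1 node2); do
        if ssh -o BatchMode=yes -o ConnectTimeout=5 "$host" date >/dev/null 2>&1; then
            echo "OK   $host"
        else
            echo "FAIL $host"
            rc=1
        fi
    done
    return $rc
}

# Run check_equivalence as oracle (and later as grid) on both nodes.
```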

8. Repeat step 7 as the grid user: switch to it with su - grid and configure SSH equivalence for grid.

9、配置共享磁盘

在任意一个节点上先创建共享磁盘,然后在另外的节点上选择添加已有磁盘。这里选择先在node2 节点机器上创建共享磁盘,然后在node1 上添加已创建的磁盘。

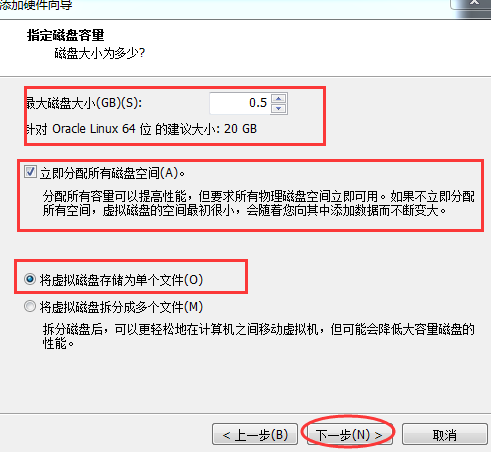

共创建4 块硬盘,其中2 块500M的硬盘,将来用于配置GRIDDG 磁盘组,专门存放OCR 和Voting Disk,Voting Disk一般是配置奇数块硬盘;1 块3G 的磁盘,用于配置DATA 磁盘组,存放数据库;1 块3G 的磁盘,用于配置FLASH 磁盘组,用于闪回区;

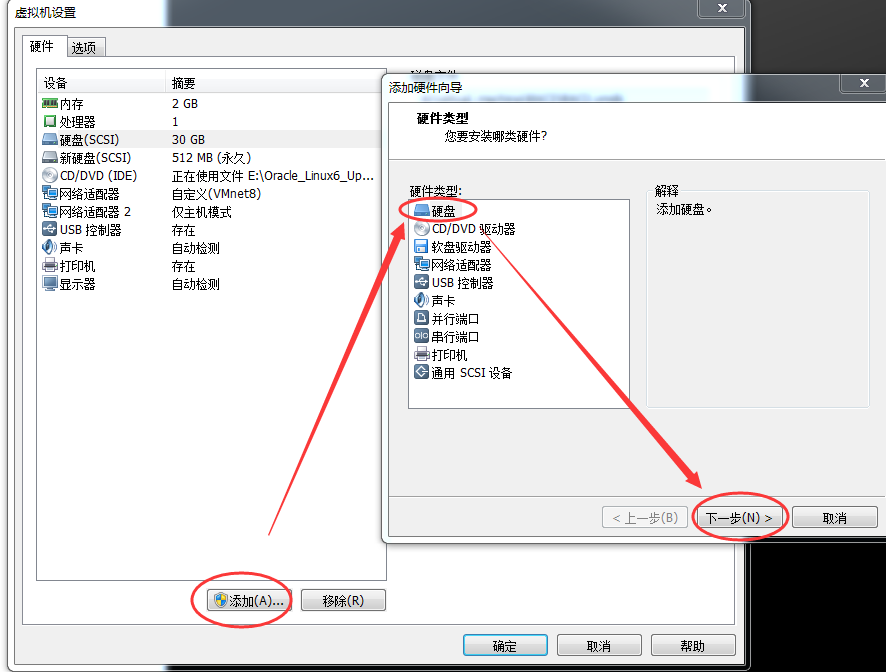

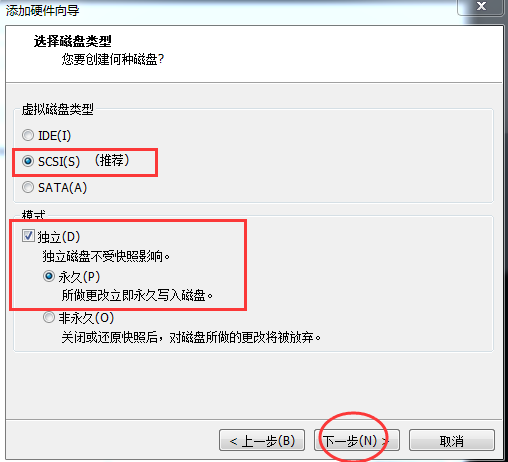

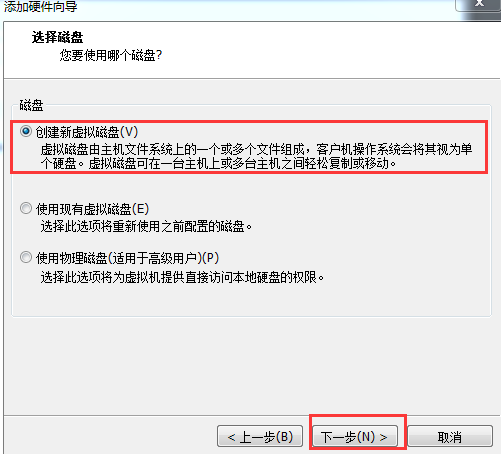

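Steps to create the first shared disk on node2:

① Shut down node 2 (RAC2), then right-click RAC2 and choose Settings.

② In the virtual machine settings dialog, click Add, select Hard Disk, and click Next.

③ Create a new virtual disk.

④ Specify the size of the shared disk.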

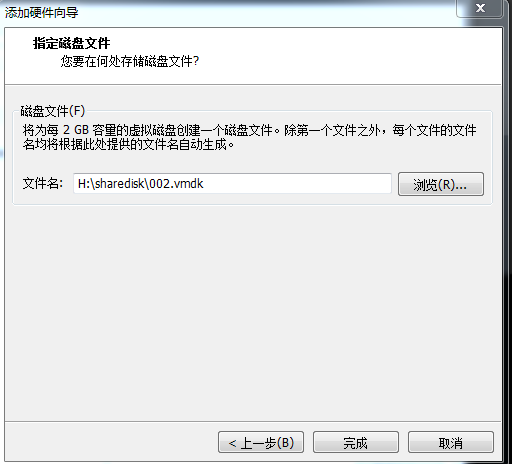

⑤ Choose where to store the shared disk file.

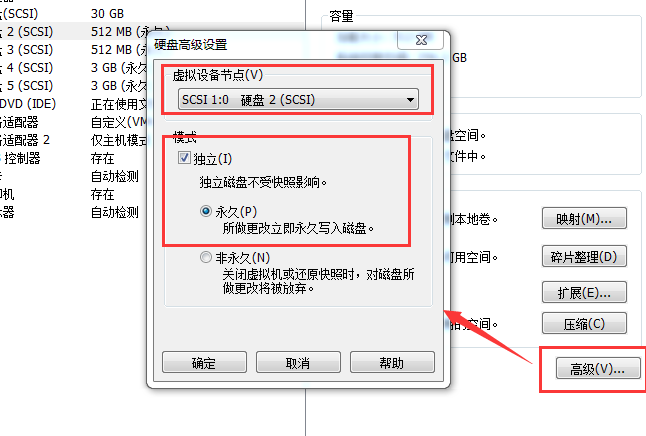

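⑥ After the disk is created, select the new hard disk and click "Advanced". In the dialog that opens, pay particular attention to the virtual device node: select 1:0.

⑦ Repeat steps ① to ⑥ to create the second disk: size 0.5 GB, virtual device node 1:1.

⑧ Repeat steps ① to ⑥ to create the third disk: size 3 GB, virtual device node 2:0.

⑨ Repeat steps ① to ⑥ to create the fourth disk: size 3 GB, virtual device node 2:1.

Shut down node1, then add the disks to node1: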

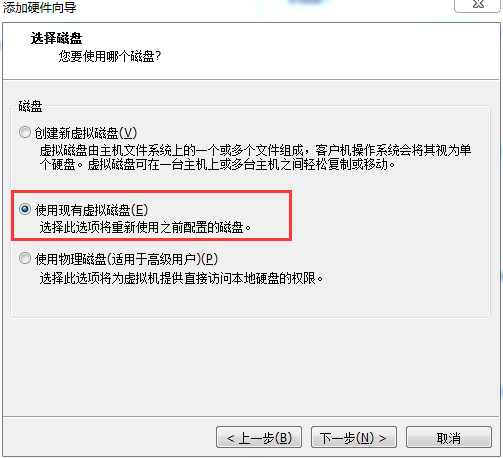

The steps are the same as for node2, except that when choosing the disk you must select "Use an existing virtual disk", as shown below:

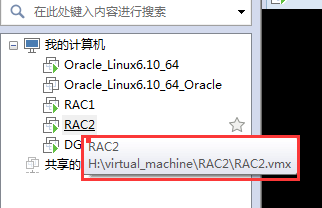

Edit the virtual machine configuration files of node1 and node2:

Shut down node2 first. Hovering the mouse over RAC2 shows the path of the configuration file for that virtual machine.

The modified content is as follows (the added lines were marked in red):

    ...N lines omitted...
    scsi1.present = "TRUE"
    scsi1.virtualDev = "lsilogic"
    scsi1.sharedBus = "virtual"
    scsi2.present = "TRUE"
    scsi2.virtualDev = "lsilogic"
    scsi2.sharedBus = "virtual"
    scsi1:0.present = "TRUE"
    scsi1:1.present = "TRUE"
    scsi2:0.present = "TRUE"
    scsi2:1.present = "TRUE"
    scsi1:0.fileName = "H:sharediskOCR.vmdk"
    scsi1:0.mode = "independent-persistent"
    scsi1:0.deviceType = "disk"
    scsi1:1.fileName = "H:sharediskVOTING.vmdk"
    scsi1:1.mode = "independent-persistent"
    scsi1:1.deviceType = "disk"
    scsi2:0.fileName = "H:sharediskDATA.vmdk"
    scsi2:0.mode = "independent-persistent"
    scsi2:0.deviceType = "disk"
    scsi2:1.fileName = "H:sharediskFLASH.vmdk"
    scsi2:1.mode = "independent-persistent"
    scsi2:1.deviceType = "disk"
    floppy0.present = "FALSE"
    scsi1:1.redo = ""
    scsi1:0.redo = ""
    scsi2:0.redo = ""
    scsi2:1.redo = ""
    scsi1.pciSlotNumber = "38"
    scsi2.pciSlotNumber = "39"
    disk.locking = "false"
    diskLib.dataCacheMaxSize = "0"
    diskLib.dataCacheMaxReadAheadSize = "0"
    diskLib.DataCacheMinReadAheadSize = "0"
    diskLib.dataCachePageSize = "4096"
    diskLib.maxUnsyncedWrites = "0"
    disk.EnableUUID = "TRUE"
    usb:0.present = "TRUE"
    usb:0.deviceType = "hid"
    usb:0.port = "0"
    usb:0.parent = "-1"

Shut down node1 and edit its virtual machine file in the same way.

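10. Configure the ASM disks

Step 9 configured the shared disks for both RAC nodes. Next, partition these shared disks and use ASMLib to configure them as ASM disks, which will later hold the OCR, the voting disk, and the database.

Note: the disks only need to be partitioned on one node; node1 is used here. ASMLib is used to create the ASM disks instead of raw devices, because starting with 11gR2 the OUI graphical installer no longer supports raw devices.

① View the shared disk information

As root, run fdisk -l on both node1 and node2 to view the current partition information.

Both nodes show the same layout: /dev/sda holds the operating system, and /dev/sdb, /dev/sdc, /dev/sdd, and /dev/sde have no partitions yet.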

② Partition the shared disks

As root on node1, partition the four disks /dev/sdb, /dev/sdc, /dev/sdd, and /dev/sde:

    [root@node1 ~]# fdisk /dev/sdb
    Device contains neither a valid DOS partition table, nor Sun, SGI or OSF disklabel
    Building a new DOS disklabel. Changes will remain in memory only,
    until you decide to write them. After that, of course, the previous
    content won't be recoverable.

    Warning: invalid flag 0x0000 of partition table 4 will be corrected by w(rite)

    Command (m for help): n
    Command action
       e   extended
       p   primary partition (1-4)
    p
    Partition number (1-4): 1
    First cylinder (1-500, default 1):
    Using default value 1
    Last cylinder or +size or +sizeM or +sizeK (1-500, default 500):
    Using default value 500

    Command (m for help): w
    The partition table has been altered!

    Calling ioctl() to re-read partition table.
    Syncing disks.
    [root@node1 ~]#

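Notes:

fdisk /dev/sdb opens /dev/sdb for partitioning (it creates a partition table; it does not create a filesystem). The commands entered mean:

n creates a new partition;

p selects primary partition as the partition type;

1 sets the partition number to 1;

the first and last cylinders are left at their defaults, 1 and 500;

w writes the new partition table to disk.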
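The same keystrokes can be fed to fdisk from a pipe, which saves repeating the dialogue for the remaining disks. Treat this as a sketch under assumptions: `fdisk_answers` is a hypothetical helper, the answer sequence is taken from the session above, and the loop that actually touches the disks is left commented out because it is destructive.

```shell
#!/bin/bash
# Emit the answers used in the interactive session above, one per line:
#   n        new partition
#   p        primary
#   1        partition number
#   (empty)  accept the default first cylinder
#   (empty)  accept the default last cylinder
#   w        write the partition table and exit
fdisk_answers() {
    printf 'n\np\n1\n\n\nw\n'
}

# Partition each remaining shared disk. Destructive, so intentionally commented out.
# Double-check the device names with fdisk -l before enabling it.
#   for disk in /dev/sdc /dev/sdd /dev/sde; do
#       fdisk_answers | fdisk "$disk"
#   done
```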

③ Repeat step ② as root on node1 to partition the remaining three disks.

④ After partitioning, node1 and node2 each show the following:

node1:

    [root@node1 ~]# fdisk -l
    ...preceding N lines omitted...
    Disk /dev/sda: 32.2 GB, 32212254720 bytes
    255 heads, 63 sectors/track, 3916 cylinders
    Units = cylinders of 16065 * 512 = 8225280 bytes
    Sector size (logical/physical): 512 bytes / 512 bytes
    I/O size (minimum/optimal): 512 bytes / 512 bytes
    Disk identifier: 0x000229dc

       Device Boot      Start         End      Blocks   Id  System
    /dev/sda1   *           1        3407    27360256   83  Linux
    /dev/sda2            3407        3917     4096000   82  Linux swap / Solaris

    Disk /dev/sdb: 536 MB, 536870912 bytes
    64 heads, 32 sectors/track, 512 cylinders
    Units = cylinders of 2048 * 512 = 1048576 bytes
    Sector size (logical/physical): 512 bytes / 512 bytes
    I/O size (minimum/optimal): 512 bytes / 512 bytes
    Disk identifier: 0xab9b0998

       Device Boot      Start         End      Blocks   Id  System
    /dev/sdb1               1         500      511984   83  Linux

    Disk /dev/sdc: 536 MB, 536870912 bytes
    64 heads, 32 sectors/track, 512 cylinders
    Units = cylinders of 2048 * 512 = 1048576 bytes
    Sector size (logical/physical): 512 bytes / 512 bytes
    I/O size (minimum/optimal): 512 bytes / 512 bytes
    Disk identifier: 0xcca413ef

       Device Boot      Start         End      Blocks   Id  System
    /dev/sdc1               1         512      524272   83  Linux

    Disk /dev/sdd: 3221 MB, 3221225472 bytes
    255 heads, 63 sectors/track, 391 cylinders
    Units = cylinders of 16065 * 512 = 8225280 bytes
    Sector size (logical/physical): 512 bytes / 512 bytes
    I/O size (minimum/optimal): 512 bytes / 512 bytes
    Disk identifier: 0x886442a7

       Device Boot      Start         End      Blocks   Id  System
    /dev/sdd1               1         391     3140676   83  Linux

    Disk /dev/sde: 3221 MB, 3221225472 bytes
    255 heads, 63 sectors/track, 391 cylinders
    Units = cylinders of 16065 * 512 = 8225280 bytes
    Sector size (logical/physical): 512 bytes / 512 bytes
    I/O size (minimum/optimal): 512 bytes / 512 bytes
    Disk identifier: 0xa9674b78

       Device Boot      Start         End      Blocks   Id  System
    /dev/sde1               1         391     3140676   83  Linux
    [root@node1 ~]#

node2:

    [root@node2 ~]# fdisk -l
    ...preceding N lines omitted...
    Disk /dev/sda: 32.2 GB, 32212254720 bytes
    255 heads, 63 sectors/track, 3916 cylinders
    Units = cylinders of 16065 * 512 = 8225280 bytes
    Sector size (logical/physical): 512 bytes / 512 bytes
    I/O size (minimum/optimal): 512 bytes / 512 bytes
    Disk identifier: 0x000c2574

       Device Boot      Start         End      Blocks   Id  System
    /dev/sda1   *           1        3407    27360256   83  Linux
    /dev/sda2            3407        3917     4096000   82  Linux swap / Solaris

    Disk /dev/sdb: 536 MB, 536870912 bytes
    64 heads, 32 sectors/track, 512 cylinders
    Units = cylinders of 2048 * 512 = 1048576 bytes
    Sector size (logical/physical): 512 bytes / 512 bytes
    I/O size (minimum/optimal): 512 bytes / 512 bytes
    Disk identifier: 0xab9b0998

       Device Boot      Start         End      Blocks   Id  System
    /dev/sdb1               1         500      511984   83  Linux

    Disk /dev/sdc: 536 MB, 536870912 bytes
    64 heads, 32 sectors/track, 512 cylinders
    Units = cylinders of 2048 * 512 = 1048576 bytes
    Sector size (logical/physical): 512 bytes / 512 bytes
    I/O size (minimum/optimal): 512 bytes / 512 bytes
    Disk identifier: 0xcca413ef

       Device Boot      Start         End      Blocks   Id  System
    /dev/sdc1               1         512      524272   83  Linux

    Disk /dev/sdd: 3221 MB, 3221225472 bytes
    255 heads, 63 sectors/track, 391 cylinders
    Units = cylinders of 16065 * 512 = 8225280 bytes
    Sector size (logical/physical): 512 bytes / 512 bytes
    I/O size (minimum/optimal): 512 bytes / 512 bytes
    Disk identifier: 0x886442a7

       Device Boot      Start         End      Blocks   Id  System
    /dev/sdd1               1         391     3140676   83  Linux

    Disk /dev/sde: 3221 MB, 3221225472 bytes
    255 heads, 63 sectors/track, 391 cylinders
    Units = cylinders of 16065 * 512 = 8225280 bytes
    Sector size (logical/physical): 512 bytes / 512 bytes
    I/O size (minimum/optimal): 512 bytes / 512 bytes
    Disk identifier: 0xa9674b78

       Device Boot      Start         End      Blocks   Id  System
    /dev/sde1               1         391     3140676   83  Linux
    [root@node2 ~]#

⑤ Install the ASM RPM packages on both node1 and node2

node1:

    -- Check whether the ASM RPM packages are already installed
    [root@node2 Packages]# rpm -qa|grep asm
    [root@node2 Packages]#
    -- Install the ASM RPM packages
    [root@node2 Packages]# rpm -ivh oracleasm-support-2.1.11-2.el6.x86_64.rpm
    warning: oracleasm-support-2.1.11-2.el6.x86_64.rpm: Header V3 RSA/SHA256 Signature, key ID ec551f03: NOKEY
    Preparing...                ########################################### [100%]
       1:oracleasm-support      ########################################### [100%]
    [root@node2 Packages]# rpm -ivh kmod-oracleasm-2.0.8-15.el6_9.x86_64.rpm
    warning: kmod-oracleasm-2.0.8-15.el6_9.x86_64.rpm: Header V4 DSA/SHA1 Signature, key ID 192a7d7d: NOKEY
    Preparing...                ########################################### [100%]
       1:kmod-oracleasm         ########################################### [100%]
    gzip: /boot/initramfs-2.6.32-754.el6.x86_64.img: not in gzip format
    gzip: /boot/initramfs-2.6.32-754.el6.x86_64.tmp: not in gzip format
    [root@node1 kmod-oracleasm-package]# rpm -ivh oracleasmlib-2.0.12-1.el7.x86_64.rpm
    warning: oracleasmlib-2.0.12-1.el7.x86_64.rpm: Header V3 RSA/SHA256 Signature, key ID ec551f03: NOKEY
    Preparing...                ########################################### [100%]
       1:oracleasmlib           ########################################### [100%]
    [root@node1 kmod-oracleasm-package]# rpm -qa|grep asm
    kmod-oracleasm-2.0.8-15.el6_9.x86_64
    oracleasmlib-2.0.12-1.el7.x86_64
    oracleasm-support-2.1.11-2.el6.x86_64

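Install the same package versions on node2.

Note: install the three ASM RPM packages in this order: first oracleasm-support-2.1.11-2.el6.x86_64.rpm, then kmod-oracleasm-2.0.8-15.el6_9.x86_64.rpm, and finally oracleasmlib-2.0.12-1.el7.x86_64.rpm.

The packages must match the operating system's kernel version and architecture. Use uname -r to see the kernel release and uname -i to see the hardware platform.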
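One quick sanity check is to compare the Enterprise Linux tag (such as el6) embedded in the kernel release with the tag in the kernel-module package name. The sketch below does only that string comparison; `el_tag` and `same_el` are hypothetical helpers, and matching tags are a necessary hint rather than proof that the module fits the running kernel.

```shell
#!/bin/bash
# Extract the Enterprise Linux tag (for example "el6") from a kernel release
# string or an RPM file name. Prints nothing if no tag is found.
el_tag() {
    echo "$1" | sed -n 's/.*\.\(el[0-9][0-9]*\).*/\1/p'
}

# Succeed if both strings carry the same, non-empty tag.
same_el() {
    local a b
    a=$(el_tag "$1")
    b=$(el_tag "$2")
    [ -n "$a" ] && [ "$a" = "$b" ]
}

# Example on a node (file name taken from the installation step above):
#   same_el "$(uname -r)" kmod-oracleasm-2.0.8-15.el6_9.x86_64.rpm && echo "tags match"
```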

After installation, run rpm -qa|grep asm to confirm that the packages are installed.

⑥ Configure the ASM driver service

The service can be configured with either /usr/sbin/oracleasm or /etc/init.d/oracleasm. The latter is the command used for ASM configuration in Oracle 10g; Oracle recommends the former, although the latter is still available.

Check the ASM service status:

    [root@node1 kmod-oracleasm-package]# /usr/sbin/oracleasm status
    Checking if ASM is loaded: no
    Checking if /dev/oracleasm is mounted: no

View the help for the ASM service commands:

    [root@node1 kmod-oracleasm-package]# /usr/sbin/oracleasm -h
    Usage: oracleasm [--exec-path=<exec_path>] <command> [ <args> ]
           oracleasm --exec-path
           oracleasm -h
           oracleasm -V

    The basic oracleasm commands are:
        configure        Configure the Oracle Linux ASMLib driver
        init             Load and initialize the ASMLib driver
        exit             Stop the ASMLib driver
        scandisks        Scan the system for Oracle ASMLib disks
        status           Display the status of the Oracle ASMLib driver
        listdisks        List known Oracle ASMLib disks
        listiids         List the iid files
        deleteiids       Delete the unused iid files
        querydisk        Determine if a disk belongs to Oracle ASMlib
        createdisk       Allocate a device for Oracle ASMLib use
        deletedisk       Return a device to the operating system
        renamedisk       Change the label of an Oracle ASMlib disk
        update-driver    Download the latest ASMLib driver

Configure the ASM service:

    [root@node1 kmod-oracleasm-package]# /usr/sbin/oracleasm configure -i
    Configuring the Oracle ASM library driver.

    This will configure the on-boot properties of the Oracle ASM library
    driver. The following questions will determine whether the driver is
    loaded on boot and what permissions it will have. The current values
    will be shown in brackets ('[]'). Hitting <ENTER> without typing an
    answer will keep that current value. Ctrl-C will abort.

    Default user to own the driver interface []: grid
    Default group to own the driver interface []: asmadmin
    Start Oracle ASM library driver on boot (y/n) [n]: y
    Scan for Oracle ASM disks on boot (y/n) [y]: y
    Writing Oracle ASM library driver configuration: done
    [root@node1 kmod-oracleasm-package]# /usr/sbin/oracleasm status
    Checking if ASM is loaded: no
    Checking if /dev/oracleasm is mounted: no
    [root@node1 kmod-oracleasm-package]# /usr/sbin/oracleasm init
    Creating /dev/oracleasm mount point: /dev/oracleasm
    Loading module "oracleasm": oracleasm
    Configuring "oracleasm" to use device physical block size
    Mounting ASMlib driver filesystem: /dev/oracleasm
    [root@node1 kmod-oracleasm-package]# /usr/sbin/oracleasm configure
    ORACLEASM_ENABLED=true
    ORACLEASM_UID=grid
    ORACLEASM_GID=asmadmin
    ORACLEASM_SCANBOOT=true
    ORACLEASM_SCANORDER=""
    ORACLEASM_SCANEXCLUDE=""
    ORACLEASM_SCAN_DIRECTORIES=""
    ORACLEASM_USE_LOGICAL_BLOCK_SIZE="false"

Note: in the /usr/sbin/oracleasm configure -i dialogue, the owner is set to grid and the group to asmadmin, and the ASM library driver is configured to start automatically when the operating system boots.

After configuring, remember to run /usr/sbin/oracleasm init to load the oracleasm kernel module.

Repeat step ⑥ on node2 to complete the ASM service configuration there.

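⑦ Configure the ASM disks

Installing the ASM RPM packages and configuring the ASM driver service ultimately serve one purpose: creating the ASM disks that provide storage for the grid software installation and the Oracle database.

Note: the ASM disks only need to be created on one node. After they are created, run /usr/sbin/oracleasm scandisks on the other nodes and the ASM disks become visible there.

1. First, run /usr/sbin/oracleasm createdisk on node2 to create the ASM disks: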

[root@node2 kmod-oracleasm-package]# /usr/sbin/oracleasm listdisks

[root@node2 kmod-oracleasm-package]# /usr/sbin/oracleasm createdisk

Usage: oracleasm-createdisk [-l <manager>] [-v] <label> <device>

[root@node2 kmod-oracleasm-package]# /usr/sbin/oracleasm createdisk VOL1 /dev/sdb1

Writing disk header: done

Instantiating disk: done

[root@node2 kmod-oracleasm-package]# /usr/sbin/oracleasm createdisk VOL1 /dev/sdc1

Disk "VOL1" already exists

[root@node2 kmod-oracleasm-package]# /usr/sbin/oracleasm createdisk VOL2 /dev/sdc1

Writing disk header: done

Instantiating disk: done

[root@node2 kmod-oracleasm-package]# /usr/sbin/oracleasm createdisk VOL3 /dev/sdd1

Writing disk header: done

Instantiating disk: done

[root@node2 kmod-oracleasm-package]# /usr/sbin/oracleasm createdisk VOL4 /dev/sde1

Writing disk header: done

Instantiating disk: done

[root@node2 kmod-oracleasm-package]# /usr/sbin/oracleasm listdisks

VOL1

VOL2

VOL3

VOL4
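The createdisk calls above all follow one pattern, so they can be generated from a list. The following is a minimal sketch, assuming the same label and partition pairs as in the transcript; the helper name `asm_createdisk_cmds` and the print-first approach are illustrative and not part of ASMLib.

```shell
#!/bin/sh
# Hypothetical helper: print one "oracleasm createdisk" command per
# LABEL:DEVICE pair so the plan can be reviewed before anything is written.
asm_createdisk_cmds() {
    for pair in "$@"; do
        label=${pair%%:*}     # text before the first colon, e.g. VOL1
        device=${pair#*:}     # text after the first colon, e.g. /dev/sdb1
        echo "/usr/sbin/oracleasm createdisk $label $device"
    done
}

# Pairs taken from the transcript above; adjust them to your own partitions.
asm_createdisk_cmds VOL1:/dev/sdb1 VOL2:/dev/sdc1 VOL3:/dev/sdd1 VOL4:/dev/sde1
```

Once the printed commands look right, they can be piped to `sh` as root on a single node; the other nodes only need `oracleasm scandisks`.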

2. At this point node1 cannot yet see the newly created ASM disks. After running /usr/sbin/oracleasm scandisks, the ASM disks created on node2 show up on node1 as well.

[root@node1 kmod-oracleasm-package]# /usr/sbin/oracleasm listdisks

[root@node1 kmod-oracleasm-package]# /usr/sbin/oracleasm scandisks

Reloading disk partitions: done

Cleaning any stale ASM disks...

Scanning system for ASM disks...

Instantiating disk "VOL1"

Instantiating disk "VOL2"

Instantiating disk "VOL3"

Instantiating disk "VOL4"

[root@node1 kmod-oracleasm-package]# /usr/sbin/oracleasm listdisks

VOL1

VOL2

VOL3

VOL4

3. How do you determine which physical disk each ASM disk maps to?

node1:

[root@node1 kmod-oracleasm-package]# /usr/sbin/oracleasm querydisk /dev/sd*

Device "/dev/sda" is not marked as an ASM disk

Device "/dev/sda1" is not marked as an ASM disk

Device "/dev/sda2" is not marked as an ASM disk

Device "/dev/sdb" is not marked as an ASM disk

Device "/dev/sdb1" is marked an ASM disk with the label "VOL1"

Device "/dev/sdc" is not marked as an ASM disk

Device "/dev/sdc1" is marked an ASM disk with the label "VOL2"

Device "/dev/sdd" is not marked as an ASM disk

Device "/dev/sdd1" is marked an ASM disk with the label "VOL3"

Device "/dev/sde" is not marked as an ASM disk

Device "/dev/sde1" is marked an ASM disk with the label "VOL4"

node2:

[root@node2 kmod-oracleasm-package]# /usr/sbin/oracleasm querydisk /dev/sd*

Device "/dev/sda" is not marked as an ASM disk

Device "/dev/sda1" is not marked as an ASM disk

Device "/dev/sda2" is not marked as an ASM disk

Device "/dev/sdb" is not marked as an ASM disk

Device "/dev/sdb1" is marked an ASM disk with the label "VOL1"

Device "/dev/sdc" is not marked as an ASM disk

Device "/dev/sdc1" is marked an ASM disk with the label "VOL2"

Device "/dev/sdd" is not marked as an ASM disk

Device "/dev/sdd1" is marked an ASM disk with the label "VOL3"

Device "/dev/sde" is not marked as an ASM disk

Device "/dev/sde1" is marked an ASM disk with the label "VOL4"
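The querydisk output is easier to compare between nodes once it is reduced to label and device pairs. A minimal sketch, assuming the output format shown above; the helper name `asm_label_map` is made up for this example.

```shell
#!/bin/sh
# Hypothetical helper: reduce "oracleasm querydisk" output (read from stdin)
# to "LABEL DEVICE" lines.
asm_label_map() {
    # Matching lines look like:
    #   Device "/dev/sdb1" is marked an ASM disk with the label "VOL1"
    # Splitting on double quotes puts the device in $2 and the label in $4.
    awk -F'"' '/is marked an ASM disk with the label/ { print $4, $2 }'
}

# On a cluster node (as root) the real command would feed it:
#   /usr/sbin/oracleasm querydisk /dev/sd* | asm_label_map
# Here two sample lines from the transcript stand in for that output.
printf '%s\n' \
    'Device "/dev/sdb" is not marked as an ASM disk' \
    'Device "/dev/sdb1" is marked an ASM disk with the label "VOL1"' |
    asm_label_map
```

Running the same pipeline on node1 and node2 and comparing the results shows whether both nodes see each label on the same partition.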

11. Unpack the installation files

-- Installation files to download:

p13390677_112040_Linux-x86-64_1of7_database.zip

p13390677_112040_Linux-x86-64_2of7_database.zip

p13390677_112040_Linux-x86-64_3of7_grid.zip

-- Unzip the files:

[root@node1 ~]# unzip p13390677_112040_Linux-x86-64_1of7_database.zip

[root@node1 ~]# unzip p13390677_112040_Linux-x86-64_2of7_database.zip

[root@node1 ~]# unzip p13390677_112040_Linux-x86-64_3of7_grid.zip

[root@node1 ~]# ll

drwxr-xr-x 8 root root 4096 Aug 21 2009 database

drwxr-xr-x 8 root root 4096 Aug 21 2009 grid

-- Check the size of the extracted directories:

[root@node1 ~]# du -sh database

2.4G database

[root@node1 ~]# du -sh grid/

1.1G grid/

-- To make the later installation easier, move them to the home directories of the oracle and grid users:

[root@node1 ~]# mv database/ /home/oracle/

[root@node1 ~]# mv grid/ /home/grid/

12. Pre-installation checks

① Use CVU (Cluster Verification Utility) to check the environment before installing CRS

[root@node1 home]# su - grid

node1-> pwd

/home/grid

node1-> ls

grid

node1-> cd grid

node1-> ll

total 40

drwxr-xr-x 9 root root 4096 Aug 17 2009 doc

drwxr-xr-x 4 root root 4096 Aug 15 2009 install

drwxrwxr-x 2 root root 4096 Aug 15 2009 response

drwxrwxr-x 2 root root 4096 Aug 15 2009 rpm

-rwxrwxr-x 1 root root 3795 Jan 29 2009 runcluvfy.sh

-rwxr-xr-x 1 root root 3227 Aug 15 2009 runInstaller

drwxrwxr-x 2 root root 4096 Aug 15 2009 sshsetup

drwxr-xr-x 14 root root 4096 Aug 15 2009 stage

-rw-r--r-- 1 root root 4228 Aug 18 2009 welcome.html

node1-> ./runcluvfy.sh stage -pre crsinst -n node1,node2 -fixup -verbose

......
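The verbose cluvfy report is long, and only the failed checks need attention. A minimal sketch for pulling them out of a saved report, assuming failures appear as `Result: ... failed` lines or carry a `PRVF-` code, as in the excerpts in this article; the helper name `cluvfy_failures` and the log path are made up for illustration.

```shell
#!/bin/sh
# Hypothetical helper: print only the lines of a saved cluvfy report that
# signal a problem (failed results and PRVF error codes).
cluvfy_failures() {
    grep -E 'Result: .*failed|PRVF-[0-9]+' "$1"
}

# Assumed workflow as the grid user: save the report first, then filter it.
#   ./runcluvfy.sh stage -pre crsinst -n node1,node2 -fixup -verbose > /tmp/cluvfy.log
#   cluvfy_failures /tmp/cluvfy.log
```

The full report is still worth keeping, because the table above each `Result:` line names the nodes that failed.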

② Fix the environment according to the check results

Check: TCP connectivity of subnet "192.168.88.0"

Source Destination Connected?

------------------------------ ------------------------------ ----------------

node1:192.168.88.191 node2:192.168.88.192 failed

ERROR:

PRVF-7617 : Node connectivity between "node1 : 192.168.88.191" and "node2 : 192.168.88.192" failed

Result: TCP connectivity check failed for subnet "192.168.88.0"

Resolution: disable the firewall on node1 and node2.

Check: Swap space

Node Name Available Required Status

------------ ------------------------ ------------------------ ----------

node2 2GB (2097148.0KB) 2.496GB (2617194.0KB) failed

node1 2GB (2097148.0KB) 2.496GB (2617194.0KB) failed

Result: Swap space check failed

Resolution: add swap space (my Linux skills are weak; I searched Baidu and still did not fully understand it).

Step 1: use dd to create an empty file of about 1 GB. Note that bs=1MB means 1,000,000 bytes, which is why du reports 977M below; bs=1M would give a full 1 GiB.

[root@node2 shell]# dd if=/dev/zero of=/tmp/swap bs=1MB count=1024

1024+0 records in

1024+0 records out

1024000000 bytes (1.0 GB) copied, 24.026 s, 42.6 MB/s

[root@node2 shell]# du -sh /tmp/swap

977M /tmp/swap

Step 2: format the file as swap

[root@node2 shell]# mkswap -L swap /tmp/swap

Setting up swapspace version 1, size = 999996 KiB

LABEL=swap, UUID=4ba4d45c-76d0-4c57-acaf-0ba35967f39a

Step 3: enable the swap file. Running chmod 600 /tmp/swap beforehand avoids the permissions warning shown here.

[root@node2 shell]# swapon /tmp/swap

swapon: /tmp/swap: insecure permissions 0644, 0600 suggested.

[root@node2 shell]# free -h

total used free shared buff/cache available

Mem: 1.7G 370M 70M 17M 1.2G 1.1G

Swap: 3.0G 0B 3.0G

Step 4: edit /etc/fstab so that the swap file is enabled at boot. Append the following line at the end of the file, which completes the setup:

/tmp/swap swap swap defaults 0 0
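The numbers in the failed swap check tell you how much swap is actually missing. A small sketch of that arithmetic, using the KB values cluvfy printed; the helper name `swap_shortfall_mib` is hypothetical.

```shell
#!/bin/sh
# Hypothetical helper: given available and required swap in KB (the units
# cluvfy reports), print the shortfall rounded up to whole MiB.
swap_shortfall_mib() {
    avail_kb=$1
    required_kb=$2
    if [ "$avail_kb" -ge "$required_kb" ]; then
        echo 0
    else
        # Add 1023 before dividing so that any remainder rounds up.
        echo $(( (required_kb - avail_kb + 1023) / 1024 ))
    fi
}

# Values from the failed check: 2097148 KB available, 2617194 KB required.
swap_shortfall_mib 2097148 2617194   # prints 508
```

So roughly 508 MiB of extra swap would already satisfy the check, and the swap file of about 1 GB used in this article leaves some headroom.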

If the swap file is no longer needed, disable it with swapoff (and remove its line from /etc/fstab):

[root@node2 shell]# swapoff /tmp/swap

[root@node2 shell]# free -h

total used free shared buff/cache available

Mem: 1.7G 370M 70M 17M 1.2G 1.1G

Swap: 2.0G 0B 2.0G

Check: Membership of user "grid" in group "dba"

Node Name User Exists Group Exists User in Group Status

---------------- ------------ ------------ ------------ ----------------

node2 yes yes no failed

node1 yes yes no failed

Result: Membership check for user "grid" in group "dba" failed

Resolution: run the fixup script as root on both node1 and node2.

[root@node1 ~]# sh /tmp/CVU_11.2.0.4.0_grid/runfixup.sh

[root@node2 ~]# sh /tmp/CVU_11.2.0.4.0_grid/runfixup.sh

Check: Package existence for "elfutils-libelf-devel"

Node Name Available Required Status

------------ ------------------------ ------------------------ ----------

node2 missing elfutils-libelf-devel-0.97 failed

node1 missing elfutils-libelf-devel-0.97 failed

Result: Package existence check failed for "elfutils-libelf-devel"

Resolution: install the package.

Check: Package existence for "libaio-devel(x86_64)"

Node Name Available Required Status

------------ ------------------------ ------------------------ ----------

node2 missing libaio-devel(x86_64)-0.3.105 failed

node1 missing libaio-devel(x86_64)-0.3.105 failed

Result: Package existence check failed for "libaio-devel(x86_64)"

Resolution: install the package.

Check: Package existence for "pdksh"

Node Name Available Required Status

------------ ------------------------ ------------------------ ----------

node2 missing pdksh-5.2.14 failed

node1 missing pdksh-5.2.14 failed

Result: Package existence check failed for "pdksh"

Resolution: install the package.

[root@node1 oracle_soft]# rpm -ivh pdksh-5.2.14-37.el5.x86_64.rpm

[root@node2 oracle_soft]# rpm -ivh pdksh-5.2.14-37.el5.x86_64.rpm

Checking to make sure user "grid" is not in "root" group

Node Name Status Comment

------------ ------------------------ ------------------------

node2 passed does not exist

node1 passed does not exist

Result: User "grid" is not part of "root" group. Check passed

Resolution: none needed. This check passed, so it can be ignored; if other fixable checks are still failing, rerun /tmp/CVU_11.2.0.4.0_grid/runfixup.sh as root on node1 and node2.

Check: TCP connectivity of subnet "192.168.122.0"

Source Destination Connected?

------------------------------ ------------------------------ ----------------

node1:192.168.122.1 node2:192.168.122.1 failed

ERROR:

PRVF-7617 : Node connectivity between "node1 : 192.168.122.1" and "node2 : 192.168.122.1" failed

Result: TCP connectivity check failed for subnet "192.168.122.0"

Resolution: remove the virbr0 bridge. node2 reports the same virbr0 address, so the same commands apply there.

[root@node1 ~]# ifconfig virbr0 down

[root@node1 ~]# brctl delbr virbr0

18. Install Grid Infrastructure

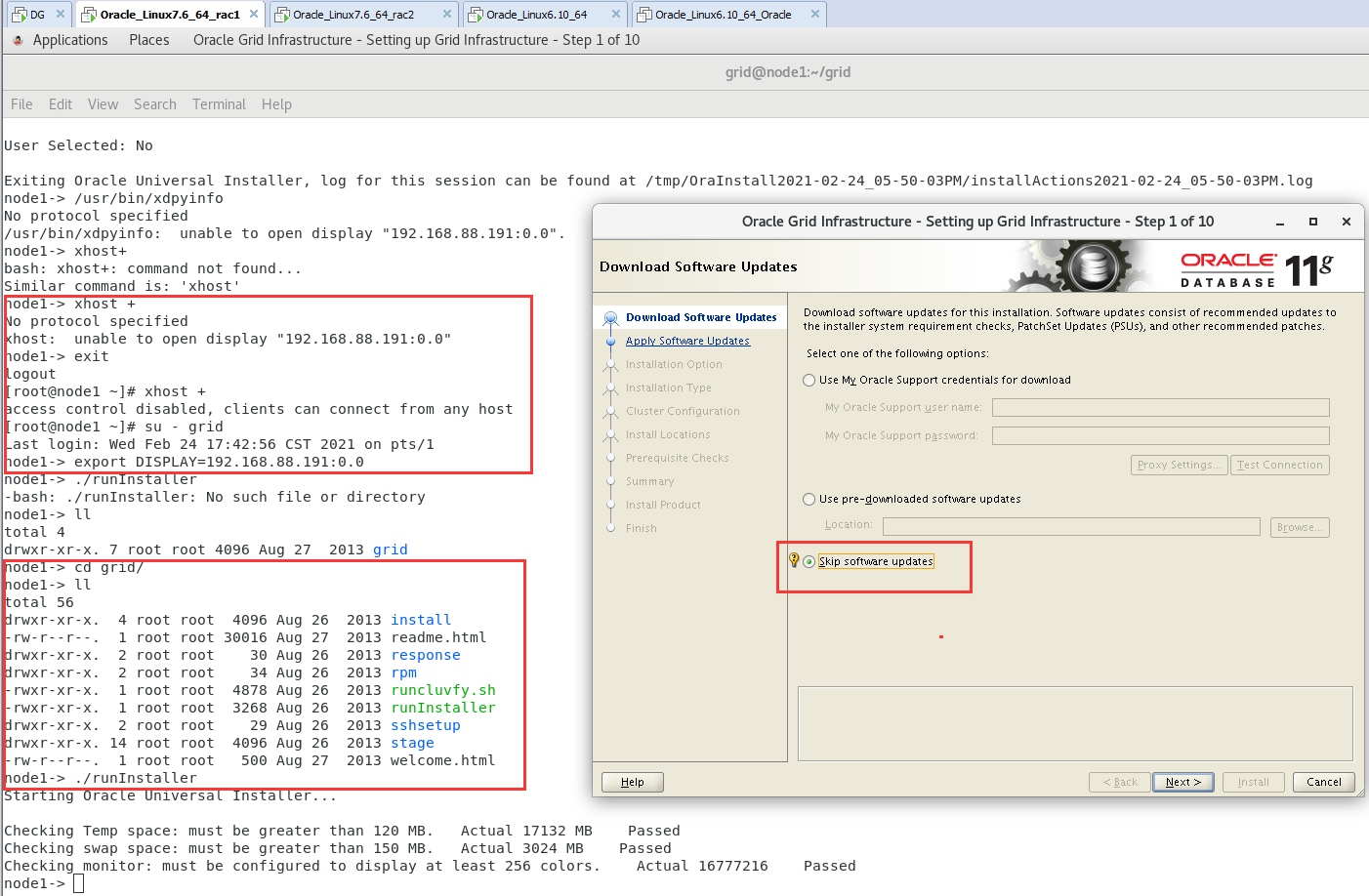

18.1. Log in to the graphical desktop as the grid user and run /home/grid/grid/runInstaller to start the OUI graphical installer

If the following problem occurs:

node1-> ./runInstaller

Starting Oracle Universal Installer...

Checking Temp space: must be greater than 120 MB. Actual 17549 MB Passed

Checking swap space: must be greater than 150 MB. Actual 3024 MB Passed

Checking monitor: must be configured to display at least 256 colors

>>> Could not execute auto check for display colors using command /usr/bin/xdpyinfo. Check if the DISPLAY variable is set. Failed <<<<

Some requirement checks failed. You must fulfill these requirements before

continuing with the installation,

Continue? (y/n) [n] n

User Selected: No

Resolution: run xhost + as root (it fails as grid), then set DISPLAY as the grid user before starting the installer.

node1-> xhost +

No protocol specified

xhost: unable to open display "192.168.88.191:0.0"

node1-> exit

logout

[root@node1 ~]# xhost +

access control disabled, clients can connect from any host

[root@node1 ~]# su - grid

Last login: Wed Feb 24 17:42:56 CST 2021 on pts/1

node1-> export DISPLAY=192.168.88.191:0.0

node1-> cd grid/

node1-> ./runInstaller

Starting Oracle Universal Installer...

Checking Temp space: must be greater than 120 MB. Actual 17132 MB Passed

Checking swap space: must be greater than 150 MB. Actual 3024 MB Passed

Checking monitor: must be configured to display at least 256 colors. Actual 16777216 Passed

node1->
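Because the same DISPLAY problem comes back for every GUI tool in this walkthrough (runInstaller, asmca, dbca), a small guard can catch it before the tool starts. A minimal sketch; the function name `require_display` is made up, and the address in the hint is simply the one used in this article.

```shell
#!/bin/sh
# Hypothetical pre-flight check: succeed only when DISPLAY is set to a
# non-empty value, so a GUI tool is not started just to fail its own check.
require_display() {
    if [ -z "${DISPLAY:-}" ]; then
        echo "DISPLAY is not set; export it first, e.g. export DISPLAY=192.168.88.191:0.0" >&2
        return 1
    fi
    echo "DISPLAY=$DISPLAY"
}

# Assumed usage as the grid user, after root has run "xhost +":
#   require_display && ./runInstaller
```

The guard only checks that the variable is set; whether the X server accepts the connection still depends on the xhost step above.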

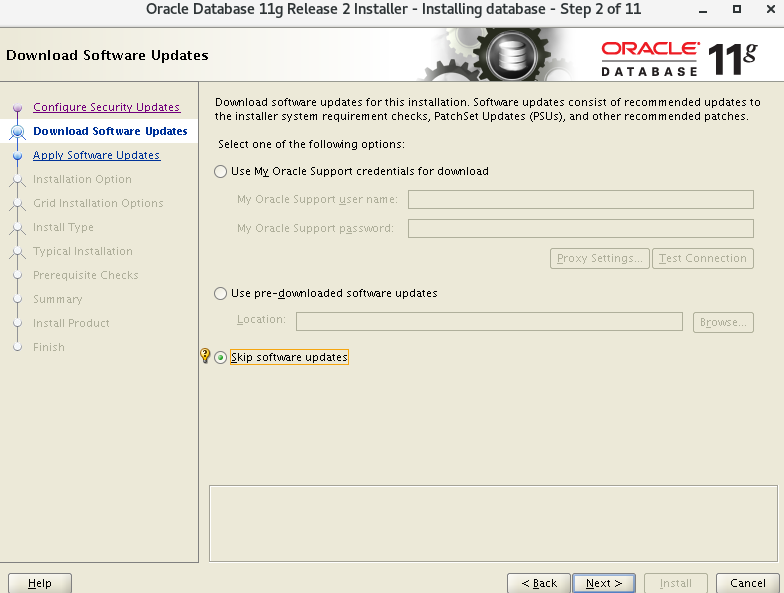

18.2. In the OUI, select the third option to skip software updates, then Next:

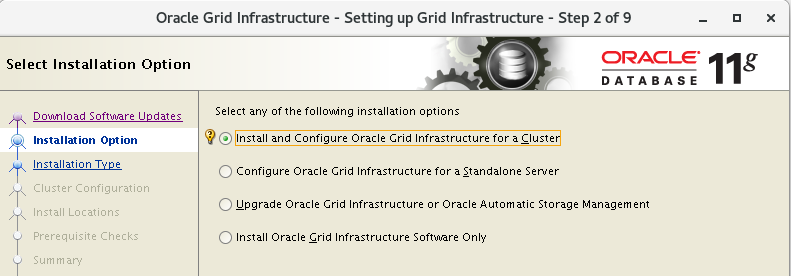

18.3. Select the option to install Grid Infrastructure for a cluster, Next:

18.4. Select Advanced Installation, Next:

18.5. Keep the default language, English, Next:

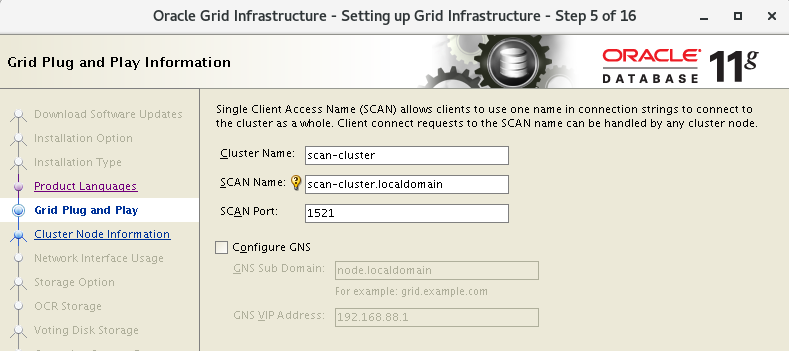

18.6. Uncheck Configure GNS, then enter Cluster Name: scan-cluster and SCAN Name: scan-cluster.localdomain. Next:

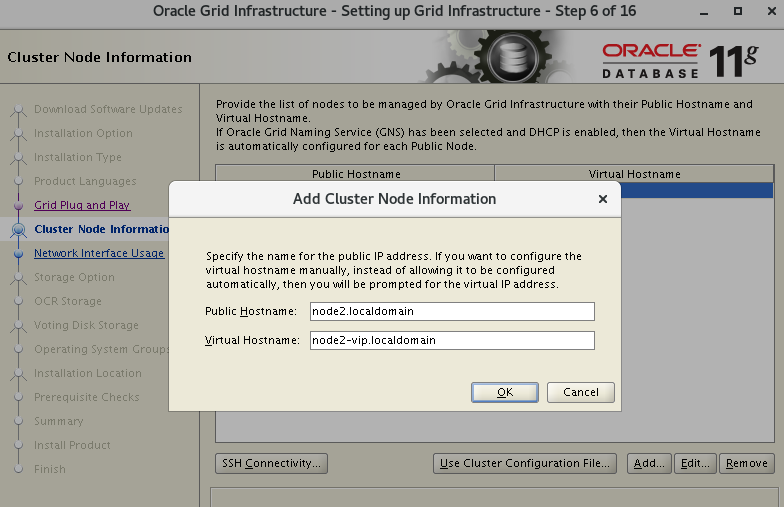

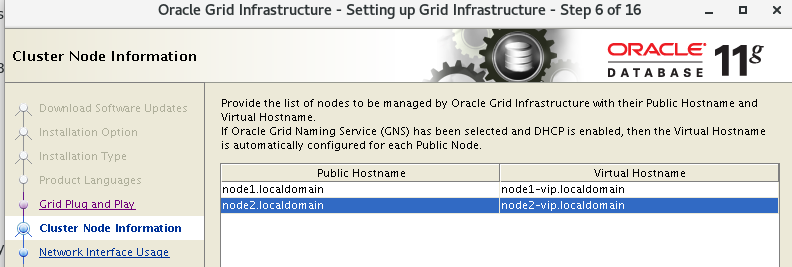

18.7. Click Add to add the second node, Next:

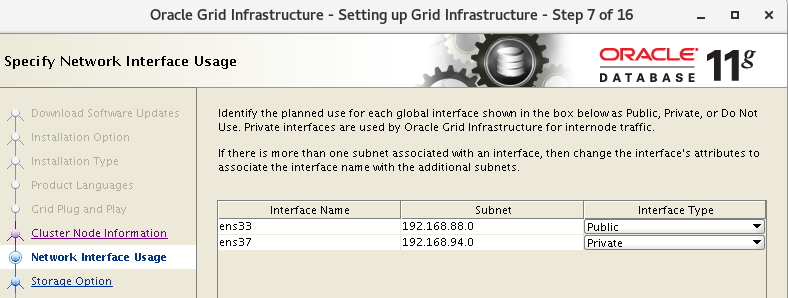

18.8. Confirm the network interfaces, Next:

18.9. Select ASM as the storage, Next:

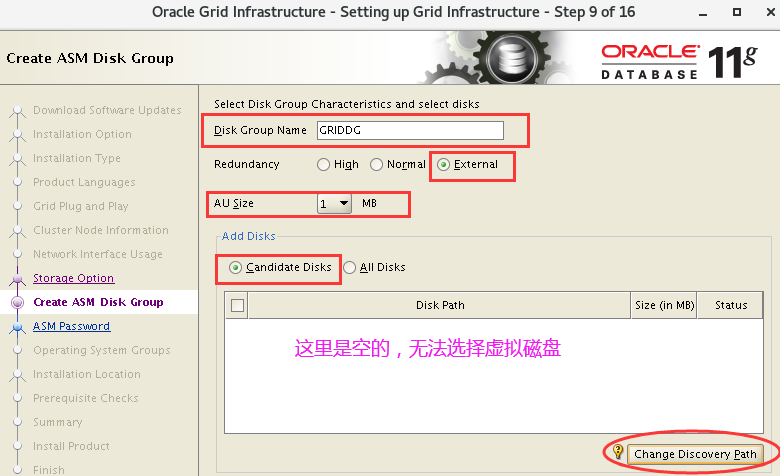

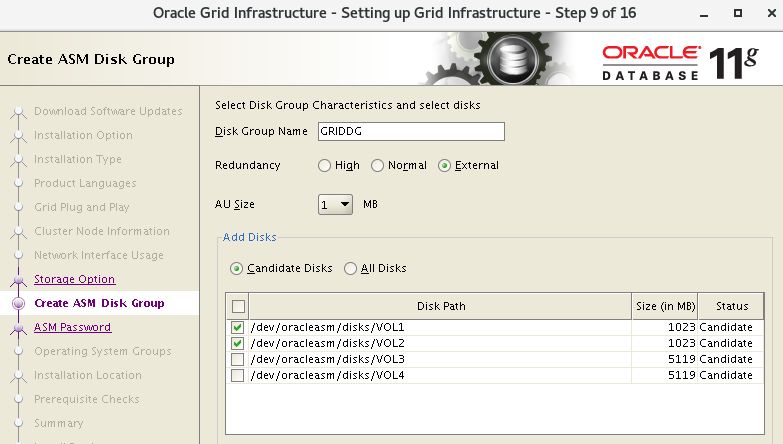

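18.10. Enter the ASM disk group name, here GRIDDG; choose External redundancy; keep the default AU size of 1M; select VOL1 and VOL2 as the ASM disks. Next:

The ASM disks may not appear in the list at this point, as in the figure below:

The discovery path is probably wrong. Click the "Change Discovery Path" button; the dialog shows that the default path is /dev/raw/*.

Checking the ASM driver status with the command below suggests that the disks live under /dev/oracleasm. After changing the discovery path to /dev/oracleasm/disks/*, the ASM disks configured earlier become selectable. They are listed with their full path, though, and I was not sure whether that is correct; I continued anyway.

[root@node2 ~]# /usr/sbin/oracleasm status

Checking if ASM is loaded: yes

Checking if /dev/oracleasm is mounted: yes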

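18.11. Choose to use the same password for the ASM SYS and ASMSNMP users, enter the password, Next:

18.12. Choose not to use IPMI, Next:

18.13. Assign the separate operating system groups for ASM, Next: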

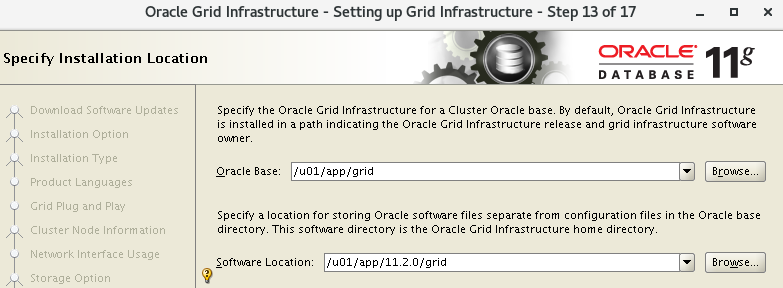

18.14. Choose the installation paths for the GRID software, using the ORACLE_BASE and ORACLE_HOME configured earlier. Note that the GRID ORACLE_HOME must not be a subdirectory of ORACLE_BASE.

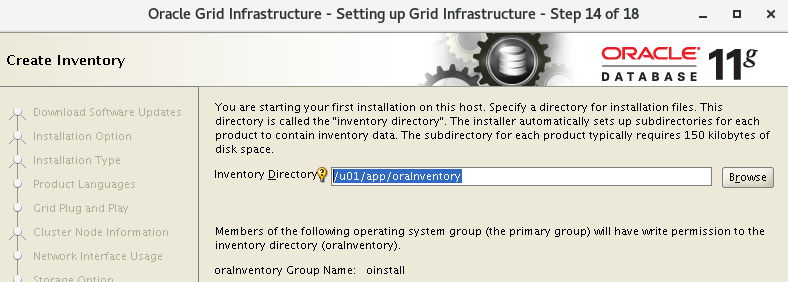

18.15. Accept the default Inventory location, Next:

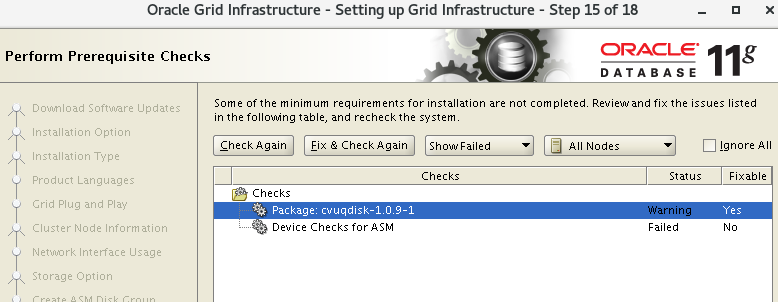

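18.16. The prerequisite check raises a warning that the cvuqdisk-1.0.9-1 package is missing on all nodes.

You can ignore it and continue with the installation, or take the RPM from the rpm directory of the grid installation files and install it.

After installing cvuqdisk-1.0.9-1 on both node1 and node2, rerun the check; the warning should no longer appear.

In my actual installation, after installing cvuqdisk the recheck still reported a "Device Checks for ASM" error, which I ignored.

18.17. Review the summary shown before the GRID installation, then click Install:

18.18. When prompted, run the scripts as root on both nodes:

Run the /u01/app/oraInventory/orainstRoot.sh script:

Run the /u01/app/11.2.0/grid/root.sh script:

Output of root.sh on node1: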

[root@node1 ~]# sh /u01/app/11.2.0/grid/root.sh

Performing root user operation for Oracle 11g

The following environment variables are set as:

ORACLE_OWNER= grid

ORACLE_HOME= /u01/app/11.2.0/grid

Enter the full pathname of the local bin directory: [/usr/local/bin]:

The contents of "dbhome" have not changed. No need to overwrite.

The contents of "oraenv" have not changed. No need to overwrite.

The contents of "coraenv" have not changed. No need to overwrite.

Creating /etc/oratab file...

Entries will be added to the /etc/oratab file as needed by Database Configuration Assistant when a database is created

Finished running generic part of root script.

Now product-specific root actions will be performed.

Using configuration parameter file: /u01/app/11.2.0/grid/crs/install/crsconfig_params

Creating trace directory

User ignored Prerequisites during installation

Installing Trace File Analyzer

OLR initialization - successful

root wallet

root wallet cert

root cert export

peer wallet

profile reader wallet

pa wallet

peer wallet keys

pa wallet keys

peer cert request

pa cert request

peer cert

pa cert

peer root cert TP

profile reader root cert TP

pa root cert TP

peer pa cert TP

pa peer cert TP

profile reader pa cert TP

profile reader peer cert TP

peer user cert

pa user cert

Adding Clusterware entries to inittab

ohasd failed to start

Failed to start the Clusterware. Last 20 lines of the alert log follow:

2021-04-07 15:34:45.885:

[client(32854)]CRS-2101:The OLR was formatted using version 3.

CRS-2672: Attempting to start 'ora.mdnsd' on 'node1'

CRS-2676: Start of 'ora.mdnsd' on 'node1' succeeded

CRS-2672: Attempting to start 'ora.gpnpd' on 'node1'

CRS-2676: Start of 'ora.gpnpd' on 'node1' succeeded

CRS-2672: Attempting to start 'ora.cssdmonitor' on 'node1'

CRS-2672: Attempting to start 'ora.gipcd' on 'node1'

CRS-2676: Start of 'ora.cssdmonitor' on 'node1' succeeded

CRS-2676: Start of 'ora.gipcd' on 'node1' succeeded

CRS-2672: Attempting to start 'ora.cssd' on 'node1'

CRS-2672: Attempting to start 'ora.diskmon' on 'node1'

CRS-2676: Start of 'ora.diskmon' on 'node1' succeeded

CRS-2676: Start of 'ora.cssd' on 'node1' succeeded

ASM created and started successfully.

Disk Group GRIDDG created successfully.

clscfg: -install mode specified

Successfully accumulated necessary OCR keys.

Creating OCR keys for user 'root', privgrp 'root'..

Operation successful.

CRS-4256: Updating the profile

Successful addition of voting disk 6f99fb7033364f48bf7bf40de939dbc4.

Successfully replaced voting disk group with +GRIDDG.

CRS-4256: Updating the profile

CRS-4266: Voting file(s) successfully replaced

\## STATE File Universal Id File Name Disk group

-- ----- ----------------- --------- ---------

1. ONLINE 6f99fb7033364f48bf7bf40de939dbc4 (/dev/oracleasm/disks/VOL1) [GRIDDG]

Located 1 voting disk(s).

CRS-2672: Attempting to start 'ora.asm' on 'node1'

CRS-2676: Start of 'ora.asm' on 'node1' succeeded

CRS-2672: Attempting to start 'ora.GRIDDG.dg' on 'node1'

CRS-2676: Start of 'ora.GRIDDG.dg' on 'node1' succeeded

Configure Oracle Grid Infrastructure for a Cluster ... succeeded

Output of root.sh on node2:

[root@node2 ~]# sh /u01/app/11.2.0/grid/root.sh

Performing root user operation for Oracle 11g

The following environment variables are set as:

ORACLE_OWNER= grid

ORACLE_HOME= /u01/app/11.2.0/grid

Enter the full pathname of the local bin directory: [/usr/local/bin]:

The contents of "dbhome" have not changed. No need to overwrite.

The contents of "oraenv" have not changed. No need to overwrite.

The contents of "coraenv" have not changed. No need to overwrite.

Entries will be added to the /etc/oratab file as needed by Database Configuration Assistant when a database is created

Finished running generic part of root script.

Now product-specific root actions will be performed.

Using configuration parameter file: /u01/app/11.2.0/grid/crs/install/crsconfig_params

Creating trace directory

User ignored Prerequisites during installation

Installing Trace File Analyzer

OLR initialization - successful

Adding Clusterware entries to inittab

CRS-4402: The CSS daemon was started in exclusive mode but found an active CSS daemon on node node1, number 1, and is terminating

An active cluster was found during exclusive startup, restarting to join the cluster

Configure Oracle Grid Infrastructure for a Cluster ... succeeded

If you run into the following problems while executing root.sh, these are the fixes:

Problem 1:

Adding Clusterware entries to inittab

ohasd failed to start

Failed to start the Clusterware. Last 20 lines of the alert log follow:

2021-04-07 15:34:45.885:

[client(32854)]CRS-2101:The OLR was formatted using version 3.

Cause: this is reported to be an Oracle bug that was fixed in 11.2.0.3. While root.sh runs, it creates a file named npohasd under /tmp/.oracle (/var/tmp/.oracle on the system used here). Only root has permission on this file, so the ohasd process cannot start.

Resolution:

Method 1: when the error appears and root.sh hangs at this point, open another session and run the following as root:

/bin/dd if=/var/tmp/.oracle/npohasd of=/dev/null bs=1024 count=1

(Note: the directory that holds npohasd can differ between operating system versions; adjust the path accordingly.)

Method 2:

[root@rac1 install]# cd /var/tmp/.oracle/

[root@rac1 .oracle]# ls

npohasd

[root@rac1 .oracle]# rm npohasd

rm: remove fifo 'npohasd'? y

[root@rac1 .oracle]# ls

[root@rac1 .oracle]# touch npohasd

[root@rac1 .oracle]# chmod 755 npohasd
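Method 1 depends on timing, since the dd has to run after root.sh has created the pipe. A minimal sketch of a wait loop that removes the guesswork; the helper name `wait_for_fifo` and the 600-second timeout are assumptions for this example, and the path is the one used in the article.

```shell
#!/bin/sh
# Hypothetical helper: wait up to TIMEOUT seconds for a named pipe to appear.
# Returns 0 as soon as the path exists as a FIFO, 1 if the timeout expires.
wait_for_fifo() {
    fifo=$1
    timeout=$2
    waited=0
    while [ ! -p "$fifo" ]; do
        [ "$waited" -ge "$timeout" ] && return 1
        sleep 1
        waited=$((waited + 1))
    done
    return 0
}

# Assumed usage in a second root session, started before root.sh reaches
# "Adding Clusterware entries to inittab":
#   wait_for_fifo /var/tmp/.oracle/npohasd 600 &&
#       /bin/dd if=/var/tmp/.oracle/npohasd of=/dev/null bs=1024 count=1
```

The loop only checks that the pipe exists; the dd command itself is unchanged from Method 1.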

Problem 2:

User ignored Prerequisites during installation

Installing Trace File Analyzer

Failed to create keys in the OLR, rc = 127, Message:

/u01/app/11.2.0/grid/bin/clscfg.bin: error while loading shared libraries: libcap.so.1: cannot open shared object file: No such file or directory

Failed to create keys in the OLR at /u01/app/11.2.0/grid/crs/install/crsconfig_lib.pm line 7660.

/u01/app/11.2.0/grid/perl/bin/perl -I/u01/app/11.2.0/grid/perl/lib -I/u01/app/11.2.0/grid/crs/install /u01/app/11.2.0/grid/crs/install/rootcrs.pl execution failed

Resolution: create a libcap.so.1 symlink that points to the installed libcap library.

[root@node2 ~]# cd /lib64/

[root@node2 lib64]# ls -lrt libcap*

-rwxr-xr-x. 1 root root 23968 Nov 20 2015 libcap-ng.so.0.0.0

-rwxr-xr-x. 1 root root 20032 Aug 3 2017 libcap.so.2.22

lrwxrwxrwx. 1 root root 14 Apr 8 15:38 libcap.so.2 -> libcap.so.2.22

lrwxrwxrwx. 1 root root 18 Apr 8 15:38 libcap-ng.so.0 -> libcap-ng.so.0.0.0

[root@node2 lib64]# ln -s libcap.so.2.22 libcap.so.1

[root@node2 lib64]# ls -lrt libcap*

-rwxr-xr-x. 1 root root 23968 Nov 20 2015 libcap-ng.so.0.0.0

-rwxr-xr-x. 1 root root 20032 Aug 3 2017 libcap.so.2.22

lrwxrwxrwx. 1 root root 14 Apr 8 15:38 libcap.so.2 -> libcap.so.2.22

lrwxrwxrwx. 1 root root 18 Apr 8 15:38 libcap-ng.so.0 -> libcap-ng.so.0.0.0

lrwxrwxrwx 1 root root 14 Apr 12 16:07 libcap.so.1 -> libcap.so.2.22

18.19. At this point the clusterware services are running, and the ASM instance is up on both nodes.

[root@node1 ~]# su - grid

Last login: Wed Apr 7 15:50:00 CST 2021 on pts/2

node1-> crs_stat -t

Name Type Target State Host

------------------------------------------------------------

ora.GRIDDG.dg ora....up.type ONLINE ONLINE node1

ora....N1.lsnr ora....er.type ONLINE ONLINE node1

ora.asm ora.asm.type ONLINE ONLINE node1

ora.cvu ora.cvu.type ONLINE ONLINE node1

ora.gsd ora.gsd.type OFFLINE OFFLINE

ora....network ora....rk.type ONLINE ONLINE node1

ora....SM1.asm application ONLINE ONLINE node1

ora.node1.gsd application OFFLINE OFFLINE

ora.node1.ons application ONLINE ONLINE node1

ora.node1.vip ora....t1.type ONLINE ONLINE node1

ora....SM2.asm application ONLINE ONLINE node2

ora.node2.gsd application OFFLINE OFFLINE

ora.node2.ons application ONLINE ONLINE node2

ora.node2.vip ora....t1.type ONLINE ONLINE node2

ora.oc4j ora.oc4j.type ONLINE ONLINE node1

ora.ons ora.ons.type ONLINE ONLINE node1

ora.scan1.vip ora....ip.type ONLINE ONLINE node1
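In a listing like this, the rows that matter are resources whose Target is ONLINE while their State is not. A minimal sketch that filters for them, assuming the five-column `crs_stat -t` layout shown above; the helper name `crs_not_online` is made up.

```shell
#!/bin/sh
# Hypothetical helper: read "crs_stat -t" output on stdin and print resources
# whose Target is ONLINE but whose State is something else.
crs_not_online() {
    # Columns: Name Type Target State Host
    awk '$3 == "ONLINE" && $4 != "ONLINE" { print $1, $4 }'
}

# Assumed usage as the grid user:
#   crs_stat -t | crs_not_online
# The gsd resources have Target OFFLINE by default in 11.2, so they are
# not reported.
```

An empty result means every resource that is supposed to be running is running.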

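18.19. After the scripts above have finished, click OK, then Next to continue.

18.20. Finally, click Close to finish installing the GRID software on both nodes.

The GRID clusterware is now installed successfully!

18.21. After rebooting the VMs, the nameserver in /etc/resolv.conf is overwritten

In this installation the DNS server is 192.168.88.11. Every time I set nameserver to 192.168.88.11 in /etc/resolv.conf on node1 and node2 and then rebooted the VM, the nameserver reverted to 8.8.8.8.

A Baidu search turned up two fixes:

Method 1: set the DNS server to 192.168.88.11 directly in every ifcfg-ens* file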

vi /etc/sysconfig/network-scripts/ifcfg-ens33

vi /etc/sysconfig/network-scripts/ifcfg-ens34

Method 2: make /etc/resolv.conf immutable with the following command (I have not tried this):

chattr +i /etc/resolv.conf
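Method 1 can be scripted so that both interface files end up with the same settings. This is a sketch under assumptions: `DNS1` and `PEERDNS` are standard ifcfg keys, but whether DHCP on the NAT interface is what rewrites the nameserver here is a guess, and the helper name `set_ifcfg_key` is invented for the example.

```shell
#!/bin/sh
# Hypothetical helper: set KEY=VALUE in an ifcfg-style file, replacing an
# existing KEY line or appending one if the key is absent.
set_ifcfg_key() {
    file=$1
    key=$2
    value=$3
    if grep -q "^${key}=" "$file"; then
        sed -i "s|^${key}=.*|${key}=${value}|" "$file"
    else
        echo "${key}=${value}" >> "$file"
    fi
}

# Assumed usage as root (interface names from this article):
#   for f in /etc/sysconfig/network-scripts/ifcfg-ens33 \
#            /etc/sysconfig/network-scripts/ifcfg-ens34; do
#       set_ifcfg_key "$f" DNS1 192.168.88.11
#       set_ifcfg_key "$f" PEERDNS no
#   done
```

`PEERDNS=no` asks the network scripts not to take DNS servers from DHCP; restart the network service or reboot afterwards to check that the nameserver sticks.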

18.22. After rebooting the VMs, crs_stat -t fails with: CRS-0184: Cannot communicate with the CRS daemon

This problem took a long time to sort out and nearly drove me mad. In the end it turned out that the ohasd service was probably not being started. The steps to fix it:

1. Create the unit file for the ohas.service service and set its permissions

[root@node2 ~]# touch /usr/lib/systemd/system/ohas.service

[root@node2 ~]# chmod 777 /usr/lib/systemd/system/ohas.service

2. Add the settings that start ohasd to the ohas.service unit file

vi /usr/lib/systemd/system/ohas.service

Add the following content:

[Unit]

Description=Oracle High Availability Services

After=syslog.target

[Service]

ExecStart=/etc/init.d/init.ohasd run >/dev/null 2>&1 Type=simple

Restart=always

[Install]

WantedBy=multi-user.target

3. Reload systemd and start the service

Reload the systemd configuration: systemctl daemon-reload

Enable the service at boot: systemctl enable ohas.service

Start the ohas service manually: systemctl start ohas.service

Check the status of the ohas service: systemctl status ohas.service

The full session looked like this:

[root@node2 ~]# touch /usr/lib/systemd/system/ohas.service

[root@node2 ~]# chmod 777 /usr/lib/systemd/system/ohas.service

[root@node2 ~]# vi /usr/lib/systemd/system/ohas.service

[root@node2 ~]# systemctl daemon-reload

[root@node2 ~]# systemctl enable ohas.service

Created symlink from /etc/systemd/system/multi-user.target.wants/ohas.service to /usr/lib/systemd/system/ohas.service.

[root@node2 ~]# systemctl start ohas.service

[root@node2 ~]# systemctl status ohas.service

● ohas.service - Oracle High Availability Services

Loaded: loaded (/usr/lib/systemd/system/ohas.service; enabled; vendor preset: disabled)

Active: active (running) since Tue 2021-04-13 11:15:29 CST; 10s ago

Main PID: 2724 (init.ohasd)

Tasks: 1

CGroup: /system.slice/ohas.service

└─2724 /bin/sh /etc/init.d/init.ohasd run >/dev/null 2>&1 Type=simple

Apr 13 11:15:29 node2.localdomain systemd[1]: Started Oracle High Availability Services.

Apr 13 11:15:29 node2.localdomain systemd[1]: Starting Oracle High Availability Services...
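The status output shows `>/dev/null 2>&1 Type=simple` as part of the process command line. systemd does not run ExecStart through a shell, so the redirection and anything else on that line are passed to the script as plain arguments. The sketch below writes a tidied variant of the unit; it is my own cleanup rather than the unit used above, and the helper name `write_ohas_unit` is invented.

```shell
#!/bin/sh
# Hypothetical helper: write a tidied ohas.service unit to the given path.
# Compared with the unit in the article, the shell redirection is dropped and
# Type=simple sits on its own line.
write_ohas_unit() {
    cat > "$1" <<'EOF'
[Unit]
Description=Oracle High Availability Services
After=syslog.target

[Service]
Type=simple
ExecStart=/etc/init.d/init.ohasd run
Restart=always

[Install]
WantedBy=multi-user.target
EOF
}

# Assumed usage as root:
#   write_ohas_unit /usr/lib/systemd/system/ohas.service
#   chmod 644 /usr/lib/systemd/system/ohas.service
#   systemctl daemon-reload
#   systemctl enable ohas.service
#   systemctl start ohas.service
```

Mode 644 is sufficient for a unit file, since systemd only needs to read it.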

19. Install the Oracle database software

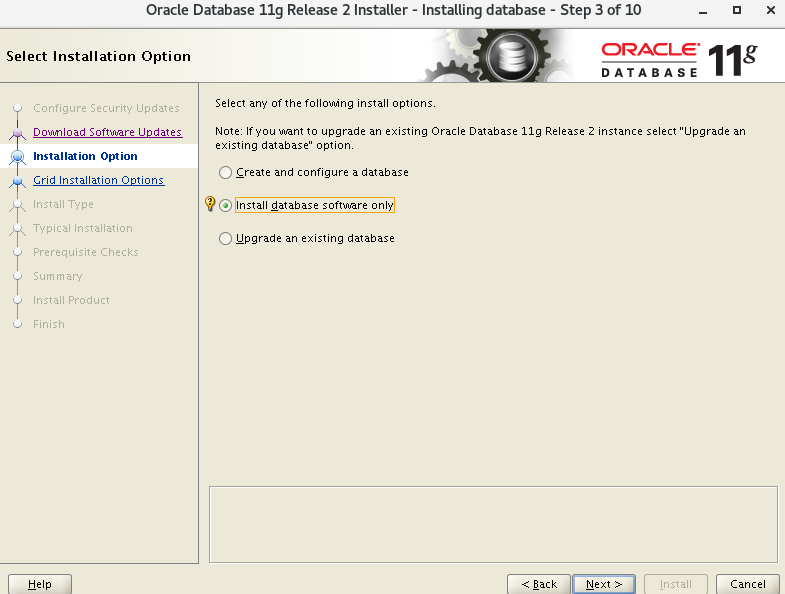

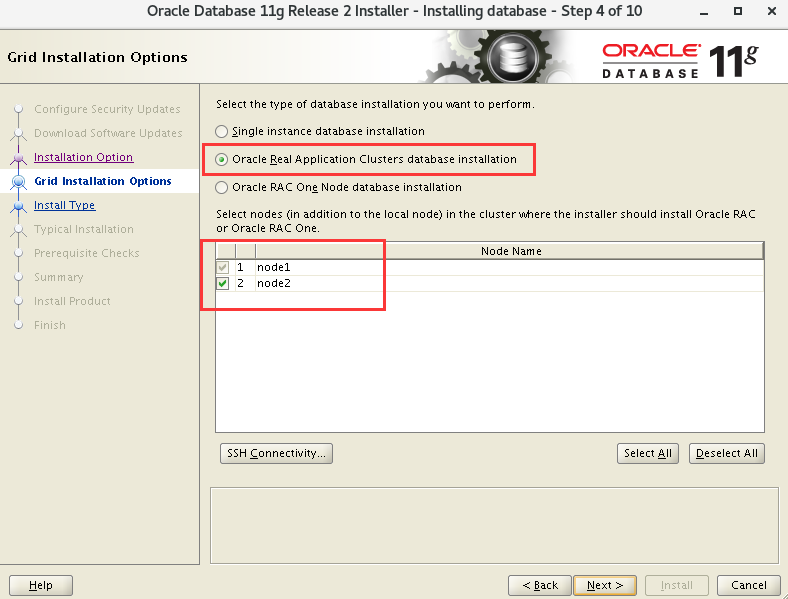

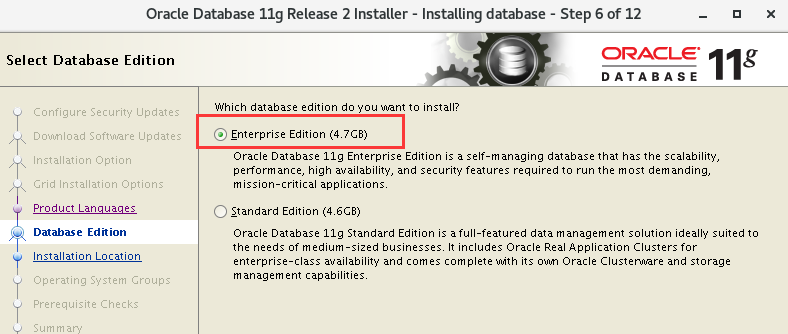

19.1. Log in to the graphical desktop as the oracle user and run /home/oracle/database/runInstaller to start the OUI graphical installer:

[root@node1 ~]# su - oracle

Last login: Wed Apr 7 11:55:13 CST 2021 from node1.localdomain on pts/1

node1-> pwd

/home/oracle

node1-> cd database/

node1-> export DISPLAY=192.168.88.191:0.0

node1-> ./runInstaller

Starting Oracle Universal Installer...

Checking Temp space: must be greater than 120 MB. Actual 7448 MB Passed

Checking swap space: must be greater than 150 MB. Actual 3005 MB Passed

Checking monitor: must be configured to display at least 256 colors. Actual 16777216 Passed

Preparing to launch Oracle Universal Installer from /tmp/OraInstall2021-04-07_04-24-10PM. Please wait ...

node1->

Click Next, keep the default language, English, then Next:

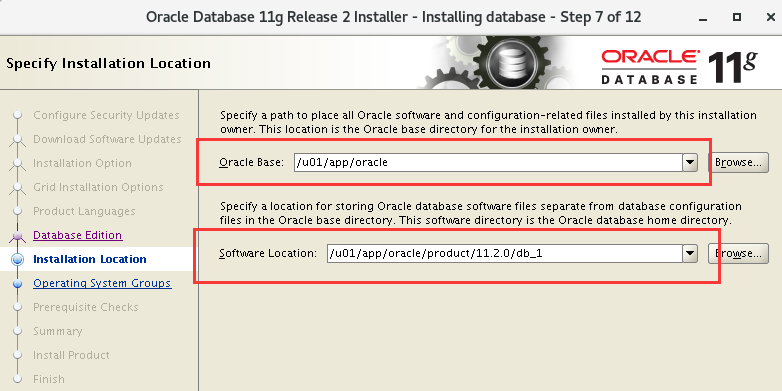

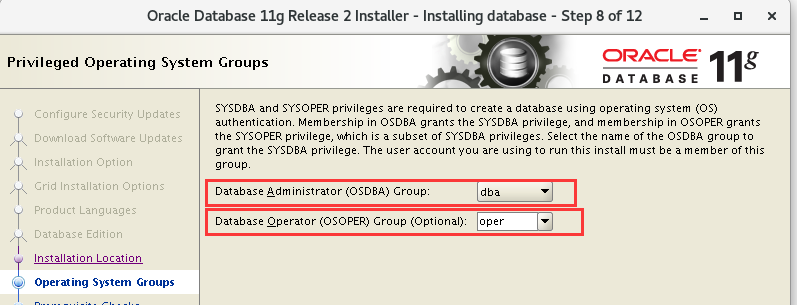

19.2. Choose the Oracle installation paths, using the ORACLE_BASE and ORACLE_HOME configured earlier

19.3. Choose the operating system groups for oracle, Next:

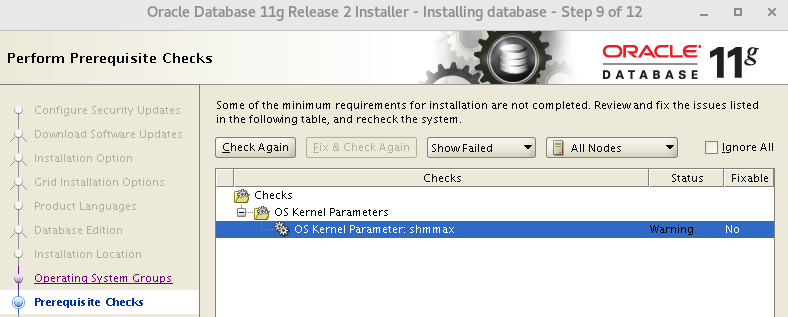

19.4. Run the pre-installation checks, Next:

I ignored this warning.

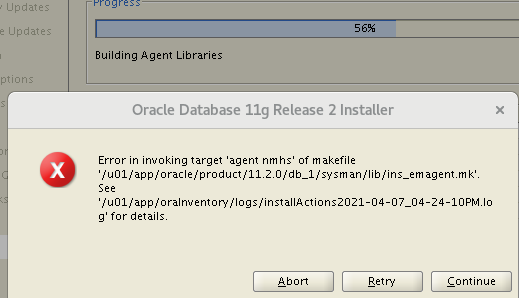

19.5. Problems encountered during installation

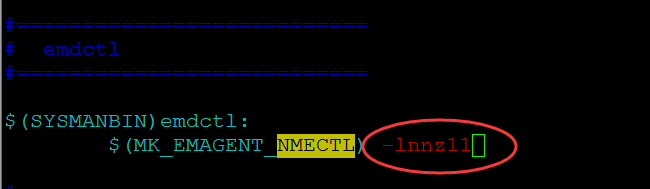

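Problem 1:

Resolution:

Edit $ORACLE_HOME/sysman/lib/ins_emagent.mk and change $(MK_EMAGENT_NMECTL) to: $(MK_EMAGENT_NMECTL) -lnnz11

Back up the original file before editing it.

[root@node1 ~]# su - oracle

Last login: Wed Apr 7 16:52:33 CST 2021 on pts/1

node1-> cd $ORACLE_HOME/sysman/lib

node1-> cp ins_emagent.mk ins_emagent.mk.bak

node1-> vi ins_emagent.mk

In vi, type /NMECTL in command mode to jump straight to the line that needs changing.

Append the option -lnnz11 to that line. Note: the first character is the letter l, and the last two are the digit 1.

After saving, return to the installer and click Retry.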
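The same edit can be made without opening an editor. A minimal sketch, assuming the line to change ends with `$(MK_EMAGENT_NMECTL)` as described above; the helper name `patch_ins_emagent` is made up, and `sed -i` is the GNU form found on Linux.

```shell
#!/bin/sh
# Hypothetical helper: append -lnnz11 to lines that end with
# $(MK_EMAGENT_NMECTL). A .bak copy is made on the first run only, and lines
# that already carry the flag no longer match, so a second run changes nothing.
patch_ins_emagent() {
    mk=$1
    [ -e "$mk.bak" ] || cp "$mk" "$mk.bak"
    sed -i 's/\$(MK_EMAGENT_NMECTL)[[:space:]]*$/$(MK_EMAGENT_NMECTL) -lnnz11/' "$mk"
}

# Assumed usage as the oracle user:
#   patch_ins_emagent "$ORACLE_HOME/sysman/lib/ins_emagent.mk"
```

After patching, return to the installer and click Retry, exactly as with the manual edit.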

19.6. Run root.sh on node1 and on node2

node1:

[root@node1 ~]# /u01/app/oracle/product/11.2.0/db_1/root.sh

Performing root user operation for Oracle 11g

The following environment variables are set as:

ORACLE_OWNER= oracle

ORACLE_HOME= /u01/app/oracle/product/11.2.0/db_1

Enter the full pathname of the local bin directory: [/usr/local/bin]:

The contents of "dbhome" have not changed. No need to overwrite.

The contents of "oraenv" have not changed. No need to overwrite.

The contents of "coraenv" have not changed. No need to overwrite.

Entries will be added to the /etc/oratab file as needed by Database Configuration Assistant when a database is created

Finished running generic part of root script.

Now product-specific root actions will be performed.

Finished product-specific root actions.

node2:

[root@node2 ~]# /u01/app/oracle/product/11.2.0/db_1/root.sh

Performing root user operation for Oracle 11g

The following environment variables are set as:

ORACLE_OWNER= oracle

ORACLE_HOME= /u01/app/oracle/product/11.2.0/db_1

Enter the full pathname of the local bin directory: [/usr/local/bin]:

The contents of "dbhome" have not changed. No need to overwrite.

The contents of "oraenv" have not changed. No need to overwrite.

The contents of "coraenv" have not changed. No need to overwrite.

Entries will be added to the /etc/oratab file as needed by Database Configuration Assistant when a database is created

Finished running generic part of root script.

Now product-specific root actions will be performed.

Finished product-specific root actions.

This completes the installation of the Oracle software on both RAC nodes!

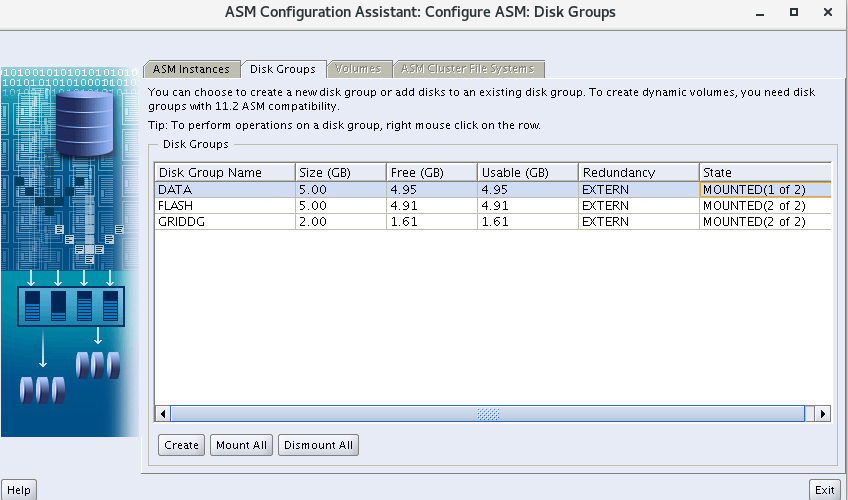

20. Create ASM disk groups with ASMCA

Create the ASM disk groups as the grid user. They provide the storage for the database created in the next step.

① Log in to the graphical desktop as the grid user and run asmca to create the disk groups:

[root@node1 ~]# xhost +

access control disabled, clients can connect from any host

[root@node1 ~]# su - grid

Last login: Wed Apr 7 17:21:54 CST 2021 on pts/2

node1-> id grid

uid=1100(grid) gid=2000(oinstall) groups=2000(oinstall),2200(asmadmin),2201(asmdba),2202(asmoper),2300(dba)

node1-> env|grep ORA

ORACLE_SID=+ASM1

ORACLE_BASE=/u01/app/grid

ORACLE_TERM=xterm

ORACLE_HOME=/u01/app/11.2.0/grid

node1-> asmca

DISPLAY not set.

Set DISPLAY environment variable, then re-run.

node1-> export DISPLAY=192.168.88.191:0.0

node1-> asmca

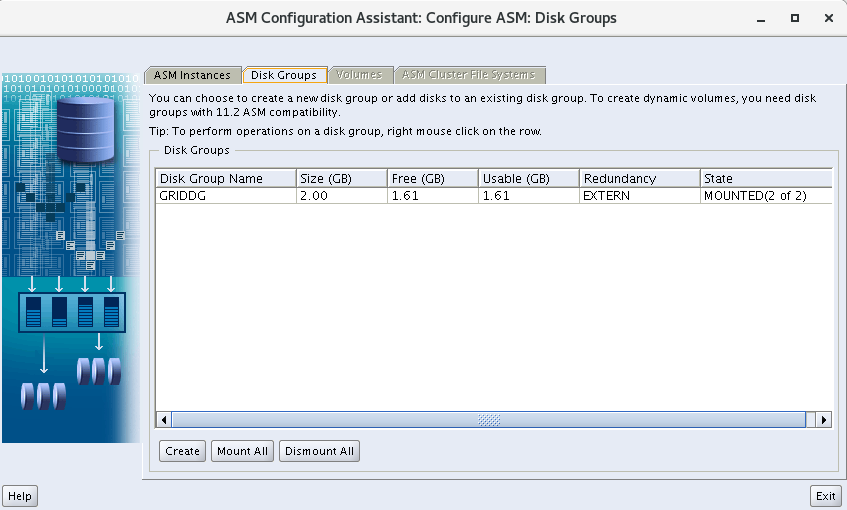

② In the ASMCA window, click Create to create a new disk group:

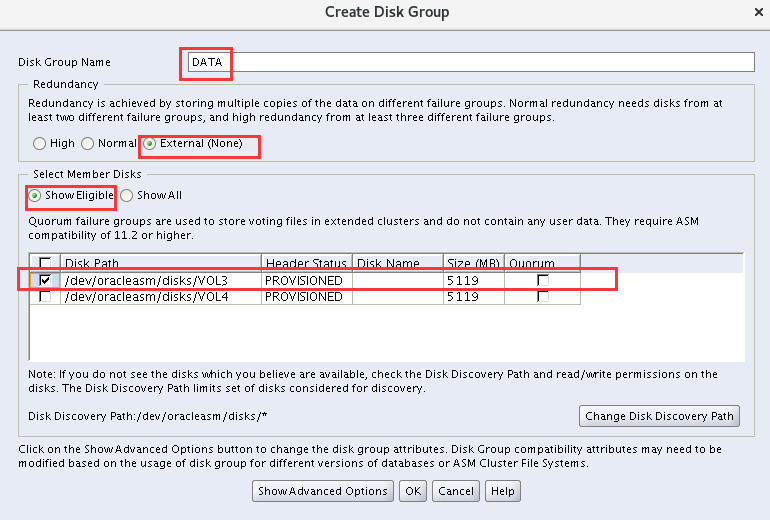

③ Enter the disk group name DATA, choose External redundancy, select the disk ORCL:VOL3, and click OK:

④ The DATA disk group has been created; click OK:

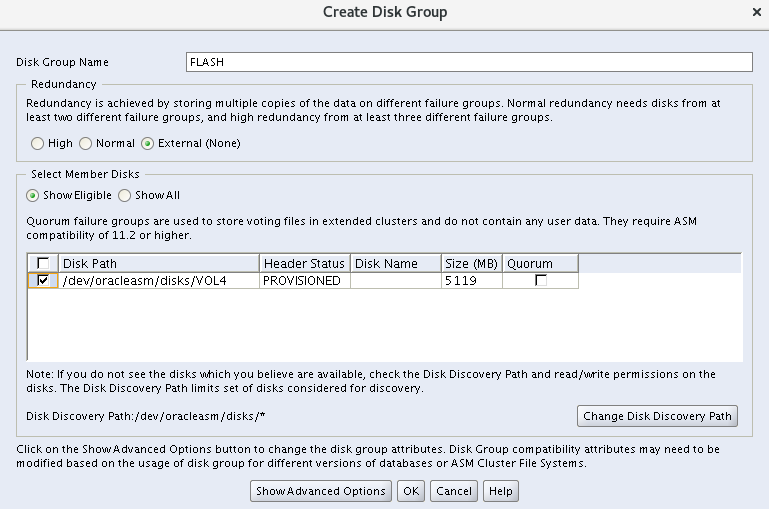

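⑤ Create another disk group: name FLASH, External redundancy, disk VOL4:

⑥ With the DATA and FLASH disk groups created, the window looks as follows. Click Exit to leave ASMCA:

The figure below shows that the DATA disk group is mounted on only one node. Clicking the "Mount All" button mounts it on both nodes.

The DATA and FLASH disk groups have now been created with ASMCA. Together with the GRIDDG group created earlier, all three disk groups are mounted on both RAC nodes.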

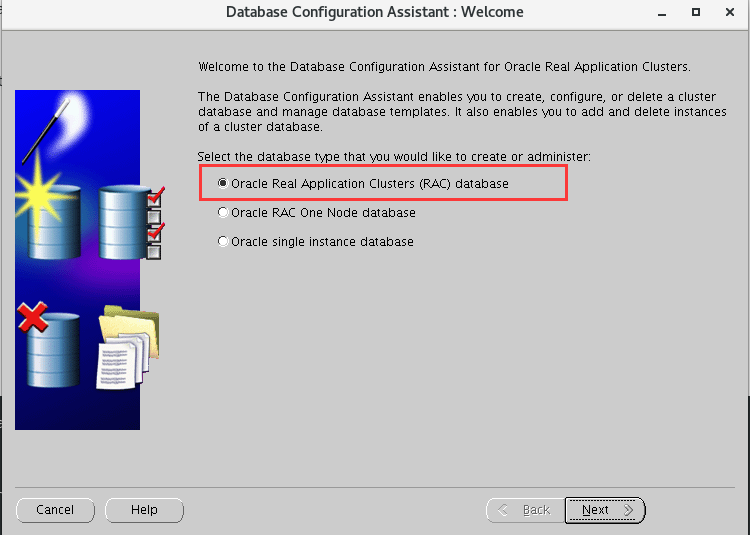

21. Create the RAC database with DBCA

① Log in to the graphical desktop as the oracle user, run dbca, and in the DBCA window select the first option to create a RAC database:

[root@node1 ~]# xhost +

access control disabled, clients can connect from any host

[root@node1 ~]# su - oracle

Last login: Wed Apr 7 16:53:12 CST 2021 on pts/1

node1-> id oracle

uid=1101(oracle) gid=2000(oinstall) groups=2000(oinstall),2200(asmadmin),2201(asmdba),2300(dba),2301(oper)

node1-> env|grep ORA

ORACLE_UNQNAME=devdb

ORACLE_SID=devdb1

ORACLE_BASE=/u01/app/oracle

ORACLE_HOSTNAME=node1.localdomain

ORACLE_TERM=xterm

ORACLE_HOME=/u01/app/oracle/product/11.2.0/db_1

node1-> export DISPLAY=192.168.88.191:0.0

node1-> dbca

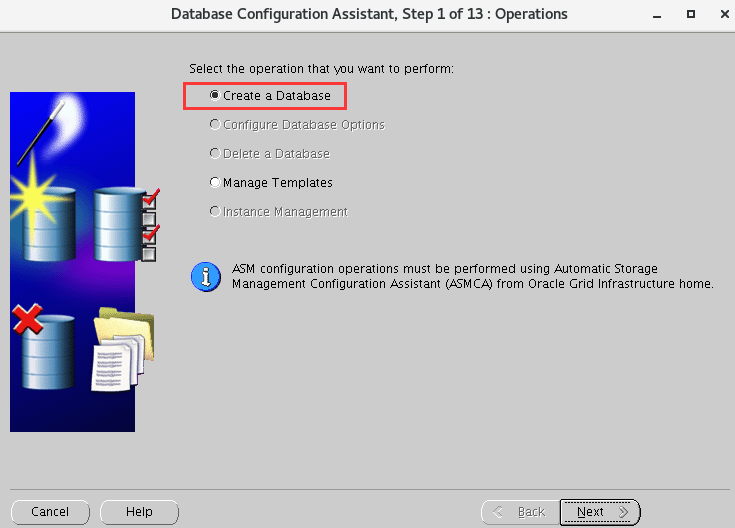

② 选择创建数据库选项,Next:

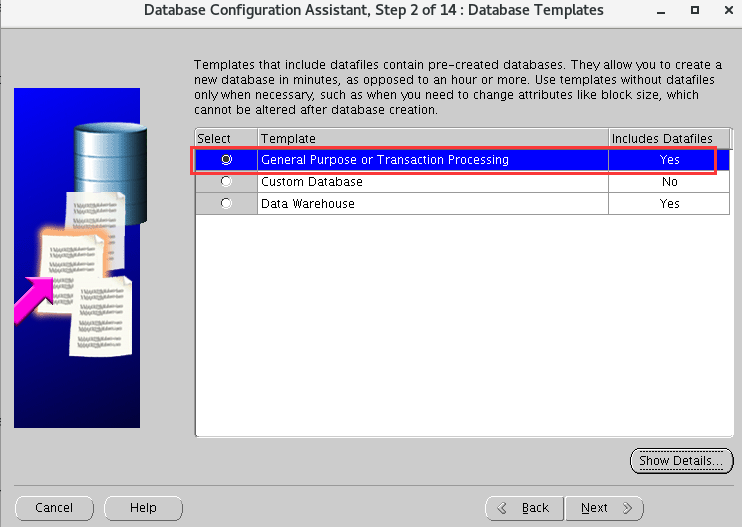

③ 选择创建通用数据库,Next:

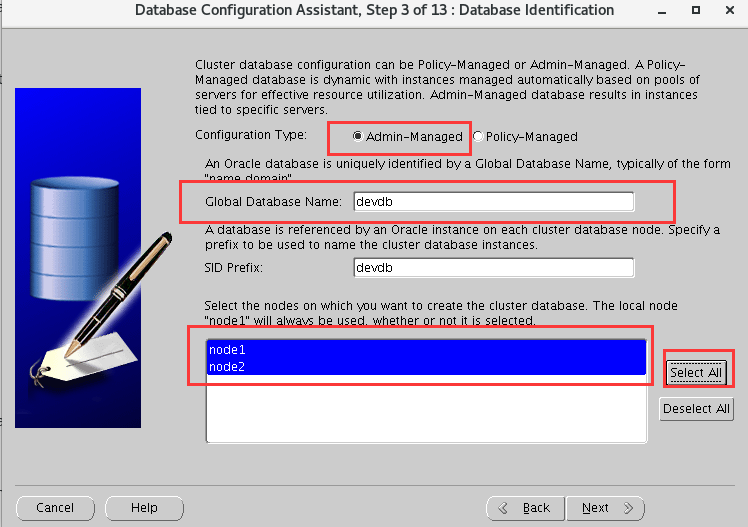

④ 配置类型选择Admin-Managed,输入数据库名devdb,选择双节点,Next:

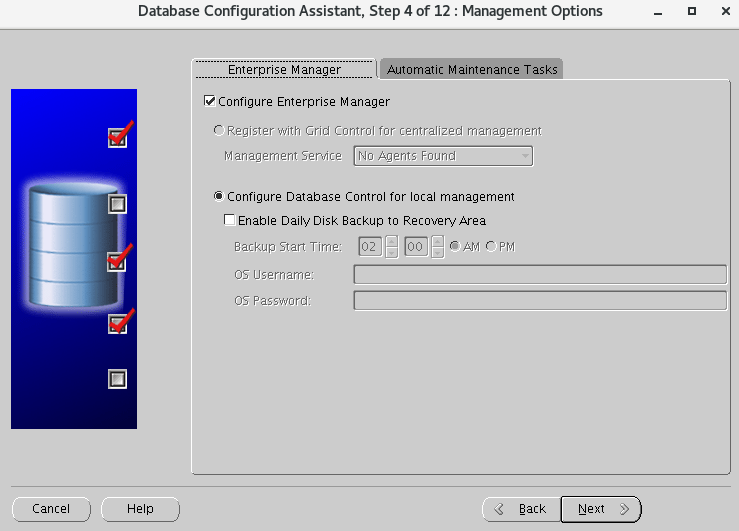

⑤ 选择默认,配置OEM、启用数据库自动维护任务,Next:

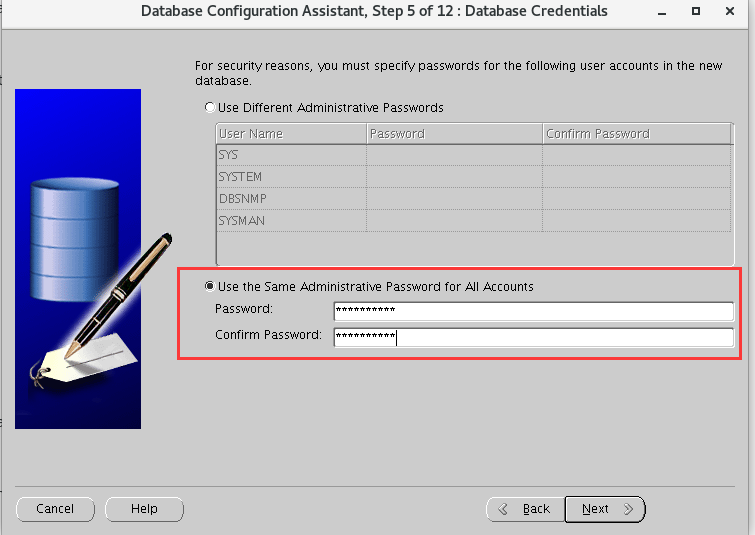

⑥ 选择数据库用户使用同一口令,Next:

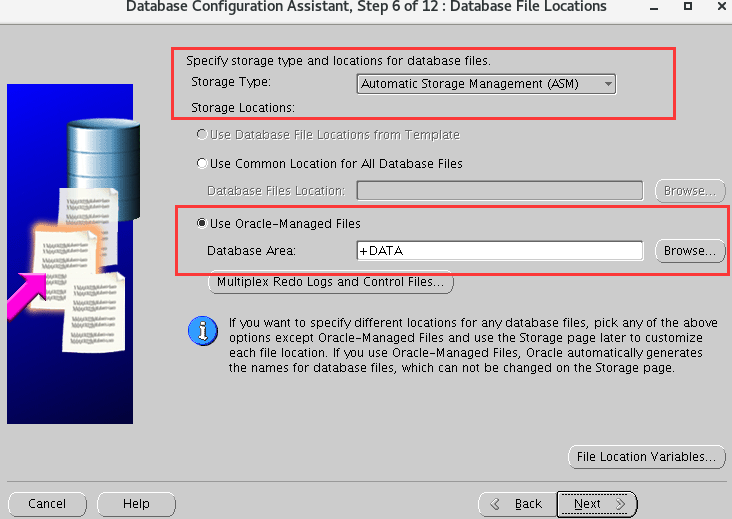

⑦ 数据库存储选择ASM,使用OMF,数据区选择之前创建的DATA 磁盘组,Next:

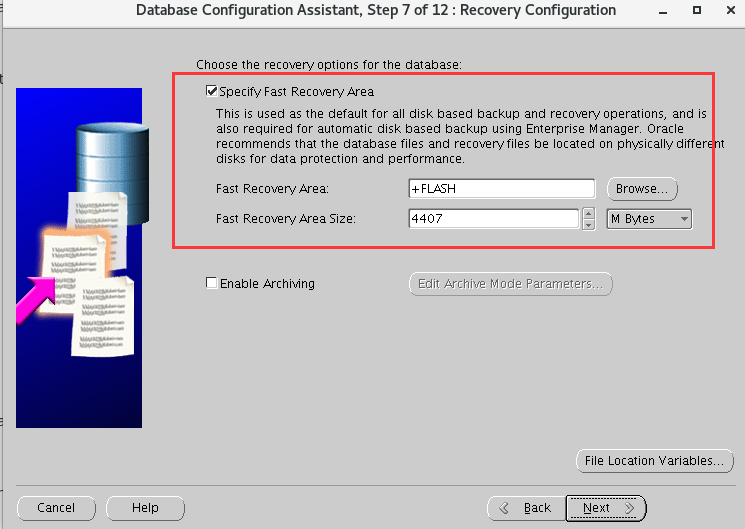

⑧ 指定数据库闪回区,选择之前创建好的FLASH 磁盘组,Next:

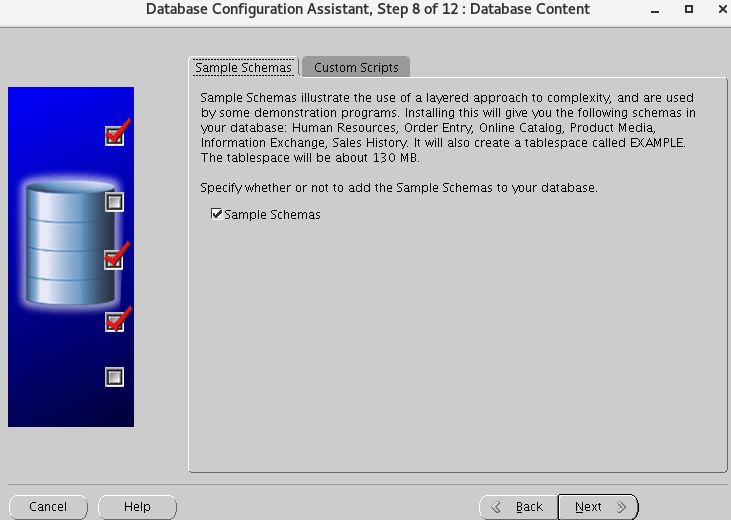

⑨ 选择创建数据库自带Sample Schema,Next:

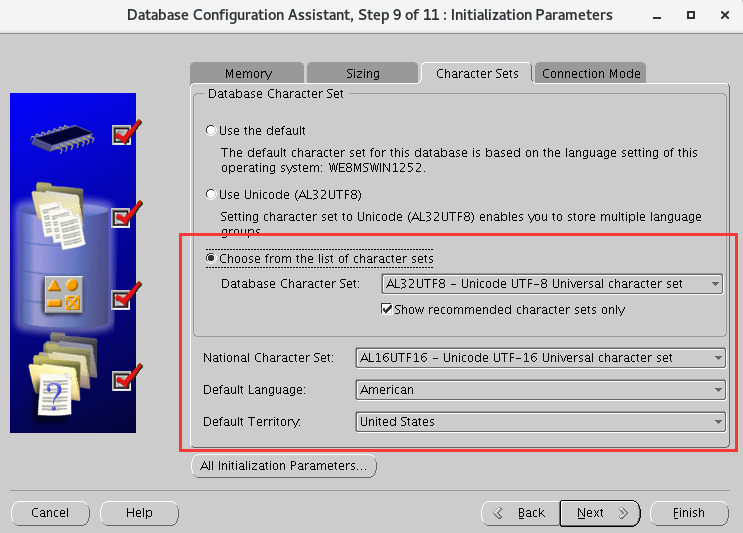

⑩ 选择数据库字符集,AL32UTF8,Next:

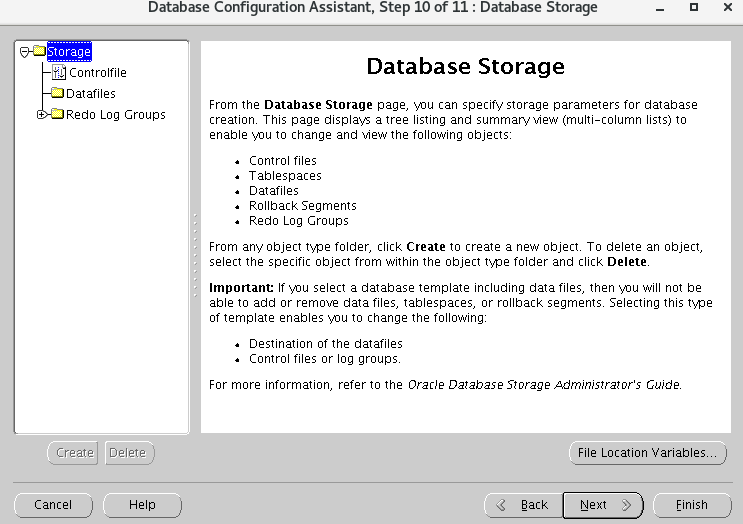

⑪ 选择默认数据库存储信息,直接Next:

⑫ 单击,Finish,开始创建数据库,Next:

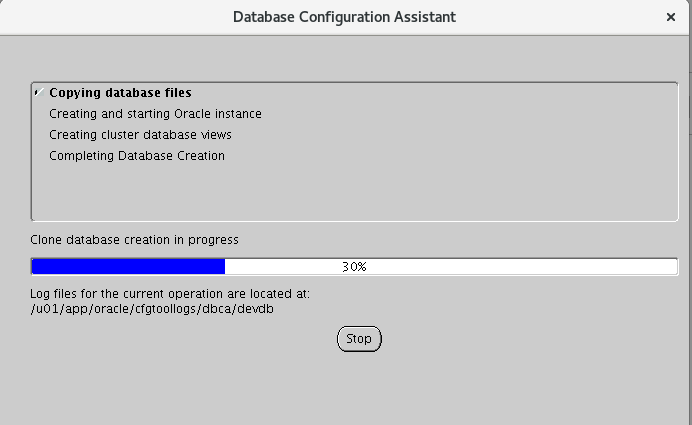

⑬ 完成创建数据库。

至此,我们完成创建RAC 数据库!!!