Using an existing open-source project from GitHub for IP address lookup

1) Clone the project: `git clone https://github.com/wzhe06/ipdatabase.git`

2) Build the downloaded project: `mvn clean package -DskipTests`

3) Install the built jar into your local Maven repository:

    mvn install:install-file -Dfile=${ipdatabase-project-dir}/target/ipdatabase-1.0-SNAPSHOT.jar -DgroupId=com.ggstar -DartifactId=ipdatabase -Dversion=1.0 -Dpackaging=jar

4) Add the dependencies to pom.xml. ipdatabase also needs Apache POI, which it uses to read the .xlsx region file:

    <dependency>
        <groupId>com.ggstar</groupId>
        <artifactId>ipdatabase</artifactId>
        <version>1.0</version>
    </dependency>

    <dependency>
        <groupId>org.apache.poi</groupId>
        <artifactId>poi-ooxml</artifactId>
        <version>3.14</version>
    </dependency>

    <dependency>
        <groupId>org.apache.poi</groupId>
        <artifactId>poi</artifactId>
        <version>3.14</version>
    </dependency>

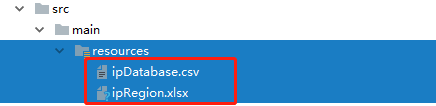

5) Copy ipDatabase.csv and ipRegion.xlsx from the ipdatabase source tree (src/main/resources) into the resources directory of your own project.

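6) IP lookup utility class:

    import com.ggstar.util.ip.IpHelper

    /**
     * IP lookup utility: resolves an IP address to its city/region.
     */
    object IpUtils {
      // Delegates to ipdatabase and returns the region name for the given IP.
      def getCity(ip: String): String = IpHelper.findRegionByIp(ip)
    }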
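The library call above hides the lookup itself. As a rough illustration of how an IP-to-region lookup of this kind generally works, the sketch below converts a dotted IPv4 address to a 32-bit number and binary-searches a table of sorted, non-overlapping address ranges. This is an assumption-based sketch of the general technique, not ipdatabase's actual implementation. `IpRangeLookup`, `IpRange`, `findRegion` and the sample table are hypothetical names and made-up data.

```scala
import scala.annotation.tailrec

// Hypothetical sketch of the general "sorted IP ranges + binary search" technique.
// It is NOT the ipdatabase library's internal implementation; names and data are illustrative.
object IpRangeLookup {

  // One row of a range table: an inclusive [start, end] block of IPv4 addresses and its region.
  final case class IpRange(start: Long, end: Long, region: String)

  // Convert dotted-quad IPv4 ("1.2.3.4") to its unsigned 32-bit value, stored in a Long.
  def ipToLong(ip: String): Long = {
    val parts = ip.trim.split('.')
    require(parts.length == 4, s"not an IPv4 address: $ip")
    parts.foldLeft(0L) { (acc, part) =>
      val octet = part.toInt
      require(octet >= 0 && octet <= 255, s"octet out of range in: $ip")
      (acc << 8) | octet
    }
  }

  // Binary search over non-overlapping ranges sorted by `start`.
  // Returns the region whose range contains `ip`, or None if no range matches.
  def findRegion(sorted: IndexedSeq[IpRange], ip: String): Option[String] = {
    val target = ipToLong(ip)

    @tailrec
    def search(lo: Int, hi: Int): Option[String] =
      if (lo > hi) None
      else {
        val mid = (lo + hi) >>> 1
        val range = sorted(mid)
        if (target < range.start) search(lo, mid - 1)
        else if (target > range.end) search(mid + 1, hi)
        else Some(range.region)
      }

    search(0, sorted.length - 1)
  }

  def main(args: Array[String]): Unit = {
    // Made-up table for illustration only; in practice it would be loaded from a data file.
    val table = Vector(
      IpRange(ipToLong("10.0.0.0"), ipToLong("10.0.0.255"), "Region A"),
      IpRange(ipToLong("10.0.1.0"), ipToLong("10.0.1.255"), "Region B")
    )
    println(findRegion(table, "10.0.1.42"))   // Some(Region B)
    println(findRegion(table, "192.168.0.1")) // None
  }
}
```

Converting addresses to numbers once lets each lookup run in O(log n) over the range table. This matters when a Spark job resolves the IP of every log line.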

7) Package and run on YARN

Spark and Scala are already installed on the cluster. Mark them as `provided` in pom.xml so they are left out of the packaged jar:

    <!-- Scala dependency -->
    <dependency>
        <groupId>org.scala-lang</groupId>
        <artifactId>scala-library</artifactId>
        <version>${scala.version}</version>
        <scope>provided</scope>
    </dependency>
    <!-- Spark SQL -->
    <dependency>
        <groupId>org.apache.spark</groupId>
        <artifactId>spark-sql_2.11</artifactId>
        <version>${spark.version}</version>
        <scope>provided</scope>
    </dependency>
    <dependency>
        <groupId>org.apache.spark</groupId>
        <artifactId>spark-hive_2.11</artifactId>
        <version>${spark.version}</version>
        <scope>provided</scope>
    </dependency>

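When packaging, add the following plugin to pom.xml. The `jar-with-dependencies` descriptor bundles the remaining (non-`provided`) dependencies, such as ipdatabase and POI, into a single jar:

    <plugin>
        <artifactId>maven-assembly-plugin</artifactId>
        <configuration>
            <archive>
                <manifest>
                    <!-- can stay empty: the main class is given with -​-class at submit time -->
                    <mainClass></mainClass>
                </manifest>
            </archive>
            <descriptorRefs>
                <descriptorRef>jar-with-dependencies</descriptorRef>
            </descriptorRefs>
        </configuration>
        <executions>
            <execution>
                <id>make-assembly</id>
                <phase>package</phase>
                <goals>
                    <goal>single</goal>
                </goals>
            </execution>
        </executions>
    </plugin>

With the execution bound to the `package` phase, `mvn clean package -DskipTests` writes `target/<artifactId>-<version>-jar-with-dependencies.jar` (for example, the sql-1.0-jar-with-dependencies.jar used when submitting).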

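Submit the job from the Spark installation directory. The `--files` list is comma-separated with no spaces. The last two arguments are the input and output paths that spark-submit passes to the job's `main` method:

    ./bin/spark-submit \
      --class com.rz.log.SparkstatcleanJobYARN \
      --name SparkstatcleanJobYARN \
      --master yarn \
      --executor-memory 1G \
      --num-executors 1 \
      --files /home/hadoop/lib/ipDatabase.csv,/home/hadoop/lib/ipRegion.xlsx \
      /home/hadoop/lib/sql-1.0-jar-with-dependencies.jar \
      "hdfs://hadoop001:8020/imooc/input/*" hdfs://hadoop001:8020/imooc/clean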
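spark-submit forwards everything after the application jar to the job's `main(args)` unchanged. The job therefore receives the input glob and the output directory as `args(0)` and `args(1)`. The sketch below shows one way the entry point might validate them before any Spark work starts. `CleanJobArgs` and `JobArgs` are hypothetical names, and the Spark read/write itself is deliberately left out so the snippet runs without Spark on the classpath.

```scala
// Hypothetical helper: validates the two positional arguments that the
// spark-submit command passes after the application jar.
object CleanJobArgs {

  final case class JobArgs(inputPath: String, outputPath: String)

  // Accept exactly two non-empty arguments; anything else yields a usage message.
  def parse(args: Array[String]): Either[String, JobArgs] = args match {
    case Array(in, out) if in.nonEmpty && out.nonEmpty => Right(JobArgs(in, out))
    case _ => Left("Usage: SparkstatcleanJobYARN <inputPath> <outputPath>")
  }

  def main(args: Array[String]): Unit =
    parse(args) match {
      case Left(usage) =>
        System.err.println(usage)
        sys.exit(1)
      case Right(JobArgs(in, out)) =>
        // In the real job, a SparkSession would read `in` here and write the cleaned output to `out`.
        println(s"input=$in output=$out")
    }
}
```

Failing fast on a bad argument list gives a clear usage message in the driver log instead of a later, less obvious error from Spark.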