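Android's memory management differs from Linux in a few small ways, one of which is the introduction of lowmemorykiller. The location of lowmemorykiller.c under drivers/staging/android also shows that it is Android-specific and has not entered the mainline Linux kernel.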

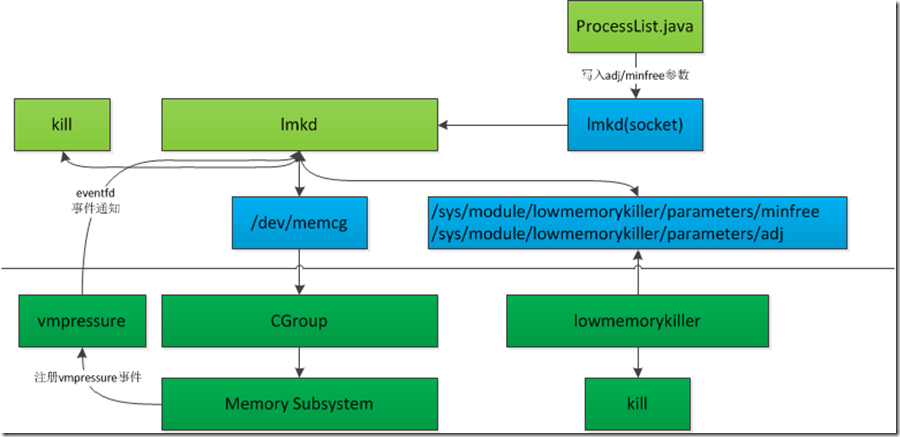

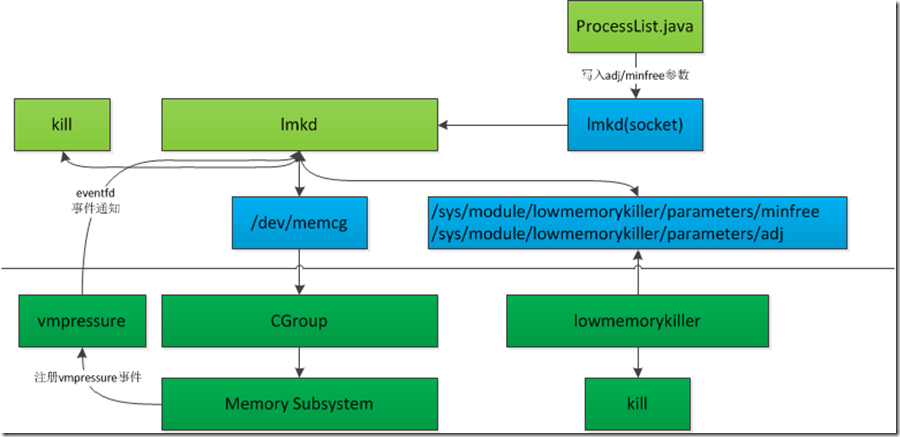

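lmkd, the Low Memory Killer Daemon, uses the memory subsystem and the parameters of the kernel lowmemorykiller feature to select a suitable process and kill it in order to free memory. Any discussion of lmkd therefore also has to cover the kernel module lowmemorykiller (drivers/staging/android/lowmemorykiller.c).

To understand a system service, analyzing its internals is not enough. Its inputs (the lmkd socket, the memory subsystem and lowmemorykiller) and its output (kill) also need detailed analysis before the ecosystem built around lmkd makes sense.

Their relationships can be summarized as follows:

Relationships among lmkd-related modules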

Starting the lmkd system service

/etc/init/lmkd.rc starts the lmkd system service, creates the lmkd socket, and places lmkd in the system-background group.

|

service lmkd /system/bin/lmkd

class core

group root readproc

critical

socket lmkd seqpacket 0660 system system

writepid /dev/cpuset/system-background/tasks

|

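lmkd framework analysis

As the module relationship diagram above shows, lmkd takes the CGroup memory subsystem and lowmemorykiller as its inputs; its output is killing the selected process.

Like every service, lmkd starts at main, and its main function is simple: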

|

int main(int argc __unused, char **argv __unused) {

struct sched_param param = {

.sched_priority = 1,

};

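mlockall(MCL_FUTURE); /* lock this real-time process's pages in physical memory (MCL_FUTURE covers pages mapped from now on), so Linux does not move them to swap space even if the process has not touched them for a while; see the references at the end */

sched_setscheduler(0, SCHED_FIFO, &param); /* make lmkd a real-time process with the SCHED_FIFO policy */

if (!init()) /* initialization: mainly socket communication and adding the memory subsystem's control files to epoll */

mainloop(); /* handles epollfd events via epoll_wait */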

ALOGI("exiting");

return 0;

}

|

Next, the core function init:

|

static int init(void) {

struct epoll_event epev;

int i;

int ret;

page_k = sysconf(_SC_PAGESIZE);

if (page_k == -1)

page_k = PAGE_SIZE;

page_k /= 1024;

epollfd = epoll_create(MAX_EPOLL_EVENTS); /* create the global epoll file descriptor */

if (epollfd == -1) {

ALOGE("epoll_create failed (errno=%d)", errno);

return -1;

}

ctrl_lfd = android_get_control_socket("lmkd"); /* get the file descriptor of the lmkd socket */

if (ctrl_lfd < 0) {

ALOGE("get lmkd control socket failed");

return -1;

}

ret = listen(ctrl_lfd, 1);

if (ret < 0) {

ALOGE("lmkd control socket listen failed (errno=%d)", errno);

return -1;

}

epev.events = EPOLLIN;

epev.data.ptr = (void *)ctrl_connect_handler;

if (epoll_ctl(epollfd, EPOLL_CTL_ADD, ctrl_lfd, &epev) == -1) { /* add the lmkd socket to epoll; its handler is ctrl_connect_handler */

ALOGE("epoll_ctl for lmkd control socket failed (errno=%d)", errno);

return -1;

}

maxevents++;

use_inkernel_interface = !access(INKERNEL_MINFREE_PATH, W_OK);

if (use_inkernel_interface) {

ALOGI("Using in-kernel low memory killer interface");

} else {

ret = init_mp(MEMPRESSURE_WATCH_LEVEL, (void *)&mp_event); /* set up memory pressure handling */

if (ret)

ALOGE("Kernel does not support memory pressure events or in-kernel low memory killer");

}

for (i = 0; i <= ADJTOSLOT(OOM_SCORE_ADJ_MAX); i++) {

procadjslot_list[i].next = &procadjslot_list[i];

procadjslot_list[i].prev = &procadjslot_list[i];

}

return 0;

}

|

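1. Create the epoll file descriptor epollfd; MAX_EPOLL_EVENTS is 3.

2. Obtain the lmkd socket and add its file descriptor to epollfd; the EPOLLIN handler is ctrl_connect_handler.

3. If the in-kernel lowmemorykiller interface is not writable, init_mp initializes the memory pressure parameters: it creates a file descriptor for event notification and adds it to epollfd, with mp_event as the EPOLLIN handler.

init_mp opens memory.pressure_level, creates evfd for event notification in this process, and then writes both descriptors together with levelstr to cgroup.event_control.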

|

static int init_mp(char *levelstr, void *event_handler)

{

int mpfd;

int evfd;

int evctlfd;

char buf[256];

struct epoll_event epev;

int ret;

mpfd = open(MEMCG_SYSFS_PATH "memory.pressure_level", O_RDONLY | O_CLOEXEC);

if (mpfd < 0) {

ALOGI("No kernel memory.pressure_level support (errno=%d)", errno);

goto err_open_mpfd;

}

evctlfd = open(MEMCG_SYSFS_PATH "cgroup.event_control", O_WRONLY | O_CLOEXEC);

if (evctlfd < 0) {

ALOGI("No kernel memory cgroup event control (errno=%d)", errno);

goto err_open_evctlfd;

}

evfd = eventfd(0, EFD_NONBLOCK | EFD_CLOEXEC); /* eventfd creates a file descriptor for event notification in this process; see the references at the end */

if (evfd < 0) {

ALOGE("eventfd failed for level %s; errno=%d", levelstr, errno);

goto err_eventfd;

}

ret = snprintf(buf, sizeof(buf), "%d %d %s", evfd, mpfd, levelstr); /* builds the "<evfd> <mpfd> <level>" string that is written to cgroup.event_control */

if (ret >= (ssize_t)sizeof(buf)) {

ALOGE("cgroup.event_control line overflow for level %s", levelstr);

goto err;

}

ret = write(evctlfd, buf, strlen(buf) + 1);

if (ret == -1) {

ALOGE("cgroup.event_control write failed for level %s; errno=%d",

levelstr, errno);

goto err;

}

epev.events = EPOLLIN;

epev.data.ptr = event_handler;

ret = epoll_ctl(epollfd, EPOLL_CTL_ADD, evfd, &epev);

if (ret == -1) {

ALOGE("epoll_ctl for level %s failed; errno=%d", levelstr, errno);

goto err;

}

maxevents++;

mpevfd = evfd;

return 0;

err:

close(evfd);

err_eventfd:

close(evctlfd);

err_open_evctlfd:

close(mpfd);

err_open_mpfd:

return -1;

}

|
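Both init and init_mp store a handler function pointer in epev.data.ptr, and mainloop later calls whatever pointer comes back from epoll_wait. The following is a minimal sketch of that pattern, not lmkd's actual code: demo_evfd, demo_event and run_dispatch_demo are hypothetical names, and the eventfd is signalled from user space here, whereas in lmkd the kernel signals it.

```c
#include <stdint.h>
#include <sys/epoll.h>
#include <sys/eventfd.h>
#include <sys/types.h>
#include <unistd.h>

/* Handler signature modelled on lmkd's handlers: they receive the epoll event mask. */
typedef void (*event_handler_t)(uint32_t events);

static int demo_evfd = -1;   /* hypothetical stand-in for mpevfd */
static uint64_t demo_hits;   /* counter value read by the handler */

/* Stand-in for mp_event(): drain the eventfd counter. */
static void demo_event(uint32_t events) {
    uint64_t count = 0;
    if ((events & EPOLLIN) &&
        read(demo_evfd, &count, sizeof(count)) == (ssize_t)sizeof(count))
        demo_hits += count;
}

/* Register an eventfd with epoll, signal it `signals` times, dispatch once.
 * Returns the counter value seen by the handler, or -1 on error or timeout. */
static long long run_dispatch_demo(unsigned int signals) {
    struct epoll_event epev = {0}, fired;
    long long result = -1;
    unsigned int i;
    int epfd = epoll_create1(EPOLL_CLOEXEC);

    if (epfd < 0)
        return -1;
    demo_evfd = eventfd(0, EFD_NONBLOCK | EFD_CLOEXEC);
    if (demo_evfd < 0)
        goto out_epfd;

    epev.events = EPOLLIN;
    epev.data.ptr = (void *)demo_event;  /* the handler travels with the fd */
    if (epoll_ctl(epfd, EPOLL_CTL_ADD, demo_evfd, &epev) == -1)
        goto out_evfd;

    /* In lmkd the kernel signals the eventfd; here we do it ourselves. */
    for (i = 0; i < signals; i++) {
        uint64_t one = 1;
        if (write(demo_evfd, &one, sizeof(one)) != (ssize_t)sizeof(one))
            goto out_evfd;
    }

    demo_hits = 0;
    if (epoll_wait(epfd, &fired, 1, 1000) == 1) {
        /* Dispatch through the stored pointer, as a mainloop would. */
        ((event_handler_t)fired.data.ptr)(fired.events);
        result = (long long)demo_hits;
    }

out_evfd:
    close(demo_evfd);
out_epfd:
    close(epfd);
    return result;
}
```

Calling run_dispatch_demo(3) signals the eventfd three times before a single epoll_wait. Because a plain eventfd accumulates its counter, the handler sees the sum in one read, which is why mp_event reads an evcount rather than assuming one event per wakeup.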

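ctrl_connect_handler is the handler for the lmkd listening socket; after accept it creates the ctrl_dfd descriptor, whose events are handled by ctrl_data_handler. On EPOLLHUP, ctrl_dfd is closed; on EPOLLIN, the command is processed according to its cmd type.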

|

static void ctrl_data_handler(uint32_t events) {

if (events & EPOLLHUP) {

ALOGI("ActivityManager disconnected");

if (!ctrl_dfd_reopened)

ctrl_data_close();

} else if (events & EPOLLIN) {

ctrl_command_handler();

}

}

|

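LMK_TARGET corresponds to cmd_target, which sets "/sys/module/lowmemorykiller/parameters/minfree" and "/sys/module/lowmemorykiller/parameters/adj".

LMK_PROCPRIO corresponds to cmd_procprio, which writes /proc/xxx/oom_score_adj and adds the pid to the pidhash table.

LMK_PROCREMOVE corresponds to cmd_procremove, which removes the pid from pidhash.

After vmpressure reports a low event, lmkd invokes mp_event to handle the memory pressure event. mp_event is the handler for the low level and frees memory by killing processes.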

|

static void mp_event(uint32_t events __unused) {

int ret;

unsigned long long evcount;

struct sysmeminfo mi;

int other_free;

int other_file;

int killed_size;

bool first = true;

ret = read(mpevfd, &evcount, sizeof(evcount));

if (ret < 0)

ALOGE("Error reading memory pressure event fd; errno=%d",

errno);

if (time(NULL) - kill_lasttime < KILL_TIMEOUT)

return;

while (zoneinfo_parse(&mi) < 0) {

// Failed to read /proc/zoneinfo, assume ENOMEM and kill something

find_and_kill_process(0, 0, true);

} /* zoneinfo_parse parses /proc/zoneinfo, mainly nr_free_pages, nr_file_pages, nr_shmem, high and protection: */

other_free = mi.nr_free_pages - mi.totalreserve_pages;

other_file = mi.nr_file_pages - mi.nr_shmem;

/* other_free and other_file are computed from the parsed zoneinfo and are used to choose the process to kill */

do {

killed_size = find_and_kill_process(other_free, other_file, first); /* this is the core step */

if (killed_size > 0) {

first = false;

other_free += killed_size;

other_file += killed_size;

}

} while (killed_size > 0); /* keep freeing until killed_size <= 0, i.e. no minfree threshold is crossed any more or nothing is left to kill */

}

|

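find_and_kill_process uses other_free and other_file to decide from which adj level upward to look for processes. Within each level it then takes the process at the LRU end of the list (proc_adj_lru) and kills it.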

|

static int find_and_kill_process(int other_free, int other_file, bool first)

{

int i;

int min_score_adj = OOM_SCORE_ADJ_MAX + 1;

int minfree = 0;

int killed_size = 0;

for (i = 0; i < lowmem_targets_size; i++) {

minfree = lowmem_minfree[i];

if (other_free < minfree && other_file < minfree) {

min_score_adj = lowmem_adj[i];

break;

}

}

/*
 * lowmem_minfree and lowmem_adj correspond to /sys/module/lowmemorykiller/parameters/minfree and /sys/module/lowmemorykiller/parameters/adj.
 * Each adj from 0 to 906 has its own minimum free memory, so memory is released level by level:
 *
 * adj:     0,100,200,300,900,906
 *
 * minfree: 18432,23040,27648,32256,55296,80640
 */

if (min_score_adj == OOM_SCORE_ADJ_MAX + 1)

return 0;

for (i = OOM_SCORE_ADJ_MAX; i >= min_score_adj; i--) {

struct proc *procp;

retry:

procp = proc_adj_lru(i); /* take the least recently used proc from procadjslot_list */

if (procp) {

killed_size = kill_one_process(procp, other_free, other_file, minfree, min_score_adj, first); /* kill the process given by procp and return the amount of memory freed */

if (killed_size < 0) {

goto retry;

} else {

return killed_size;

}

}

}

return 0;

}

|
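The threshold lookup at the top of find_and_kill_process can be tried in isolation. The sketch below is an illustration under assumptions, not the lmkd source: it hard-codes the adj and minfree values listed in this article into hypothetical demo_adj and demo_minfree arrays, and pick_min_score_adj is a made-up name.

```c
#include <stddef.h>

#define OOM_SCORE_ADJ_MAX 1000

/* Values copied from the sysfs listing in this article; minfree is in pages. */
static const int demo_adj[]     = {0, 100, 200, 300, 900, 906};
static const int demo_minfree[] = {18432, 23040, 27648, 32256, 55296, 80640};

/* Returns the lowest oom_score_adj that may be killed, or
 * OOM_SCORE_ADJ_MAX + 1 when no threshold is crossed. */
static int pick_min_score_adj(int other_free, int other_file) {
    size_t n = sizeof(demo_adj) / sizeof(demo_adj[0]);
    size_t i;

    for (i = 0; i < n; i++) {
        /* Both free pages and file pages must be below the threshold. */
        if (other_free < demo_minfree[i] && other_file < demo_minfree[i])
            return demo_adj[i];
    }
    return OOM_SCORE_ADJ_MAX + 1;
}
```

With 60000 pages in both counters only the last threshold (80640) is crossed, so only processes with adj 906 are eligible. With 20000 pages the second threshold (23040) is the first one crossed, so everything from adj 100 upward becomes eligible. If other_file stays high, nothing is killed however low other_free gets.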

lowmemorykiller analysis

As a kernel module, lowmemorykiller takes the following parameters:

|

/sys/module/lowmemorykiller/parameters/adj 0,100,200,300,900,906

/sys/module/lowmemorykiller/parameters/cost 32

/sys/module/lowmemorykiller/parameters/debug_level 1

/sys/module/lowmemorykiller/parameters/minfree 18432,23040,27648,32256,55296,80640

|

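The adj file holds the oom_score_adj thresholds, and minfree holds the matching free-memory thresholds in pages. When free memory drops below a minfree value, processes whose oom_score_adj is greater than or equal to the corresponding adj become candidates for killing.

The mOomAdj values defined in ProcessList.java are passed on through writeLmkd and end up in the sysfs nodes, matching the values above: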

|

private final int[] mOomAdj = new int[] {

FOREGROUND_APP_ADJ, VISIBLE_APP_ADJ, PERCEPTIBLE_APP_ADJ,

BACKUP_APP_ADJ, CACHED_APP_MIN_ADJ, CACHED_APP_MAX_ADJ

};

// These are the low-end OOM level limits. This is appropriate for an

// HVGA or smaller phone with less than 512MB. Values are in KB.

private final int[] mOomMinFreeLow = new int[] {

12288, 18432, 24576,

36864, 43008, 49152

};

// These are the high-end OOM level limits. This is appropriate for a

// 1280x800 or larger screen with around 1GB RAM. Values are in KB.

private final int[] mOomMinFreeHigh = new int[] {

73728, 92160, 110592,

129024, 147456, 184320

};

|
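The Java arrays are in KB while the sysfs minfree node is in pages, so a unit conversion sits between the two. A minimal sketch of that arithmetic follows; kb_to_pages is a hypothetical helper, and the 4096-byte page size used in the example is an assumption about the device.

```c
/* Convert a threshold given in KB into pages.
 * page_size_bytes is assumed to be 4096 in the example below. */
static long kb_to_pages(long kb, long page_size_bytes) {
    return kb * 1024 / page_size_bytes;
}
```

With 4 KB pages, the first four mOomMinFreeHigh entries (73728, 92160, 110592, 129024 KB) convert to 18432, 23040, 27648 and 32256 pages, which are the first four minfree values shown earlier. The last two sysfs values (55296 and 80640) do not follow from this conversion alone, presumably because the framework adjusts those levels before writing them; that step is not shown in this article.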

frameworks/base/services/core/java/com/android/server/am/ProcessList.java defines the adj value for each type of process:

|

static final int CACHED_APP_MAX_ADJ = 906;

static final int CACHED_APP_MIN_ADJ = 900;

static final int SERVICE_B_ADJ = 800;

static final int PREVIOUS_APP_ADJ = 700;

static final int HOME_APP_ADJ = 600;

static final int SERVICE_ADJ = 500;

static final int HEAVY_WEIGHT_APP_ADJ = 400;

static final int BACKUP_APP_ADJ = 300;

static final int PERCEPTIBLE_APP_ADJ = 200;

static final int VISIBLE_APP_ADJ = 100;

static final int VISIBLE_APP_LAYER_MAX = PERCEPTIBLE_APP_ADJ - VISIBLE_APP_ADJ - 1;

static final int FOREGROUND_APP_ADJ = 0;

static final int PERSISTENT_SERVICE_ADJ = -700;

static final int PERSISTENT_PROC_ADJ = -800;

static final int SYSTEM_ADJ = -900;

static final int NATIVE_ADJ = -1000;

|

lowmem_init is the entry point of the module; its main job is to register a shrinker, lowmem_shrinker. Shrinkers are part of the kernel's memory reclaim mechanism.

|

static struct shrinker lowmem_shrinker = {

.scan_objects = lowmem_scan, /* called if count_objects returns a non-zero value */

.count_objects = lowmem_count, /* returns the amount of memory in the cache that can be freed */

.seeks = DEFAULT_SEEKS * 16

};

|

lowmem_scan is the core of the shrinker:

|

static unsigned long lowmem_scan(struct shrinker *s, struct shrink_control *sc)

{

struct task_struct *tsk;

struct task_struct *selected = NULL;

unsigned long rem = 0;

int tasksize;

int i;

short min_score_adj = OOM_SCORE_ADJ_MAX + 1;

int minfree = 0;

int selected_tasksize = 0;

short selected_oom_score_adj;

int array_size = ARRAY_SIZE(lowmem_adj);

int other_free = global_page_state(NR_FREE_PAGES) - totalreserve_pages;

int other_file = global_page_state(NR_FILE_PAGES) -

global_page_state(NR_SHMEM) -

total_swapcache_pages();

if (lowmem_adj_size < array_size)

array_size = lowmem_adj_size;

if (lowmem_minfree_size < array_size)

array_size = lowmem_minfree_size;

for (i = 0; i < array_size; i++) {

minfree = lowmem_minfree[i];

if (other_free < minfree && other_file < minfree) {

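min_score_adj = lowmem_adj[i]; /* determine min_score_adj: start from the smallest adj, i.e. the level for the tightest memory situation, and stop at the first entry where other_free and other_file are both below minfree; processes at or above this adj may be killed */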

break;

}

}

lowmem_print(3, "lowmem_scan %lu, %x, ofree %d %d, ma %hd\n",

sc->nr_to_scan, sc->gfp_mask, other_free,

other_file, min_score_adj);

if (min_score_adj == OOM_SCORE_ADJ_MAX + 1) {

lowmem_print(5, "lowmem_scan %lu, %x, return 0\n",

sc->nr_to_scan, sc->gfp_mask);

return 0;

}

selected_oom_score_adj = min_score_adj;

rcu_read_lock();

for_each_process(tsk) { /* iterate over all processes */

struct task_struct *p;

short oom_score_adj;

if (tsk->flags & PF_KTHREAD)

continue;

p = find_lock_task_mm(tsk);

if (!p)

continue;

if (test_tsk_thread_flag(p, TIF_MEMDIE) &&

time_before_eq(jiffies, lowmem_deathpending_timeout)) {

task_unlock(p);

rcu_read_unlock();

return 0;

}

oom_score_adj = p->signal->oom_score_adj;

if (oom_score_adj < min_score_adj) { /* skip higher-priority processes; a smaller adj means higher priority */

task_unlock(p);

continue;

}

tasksize = get_mm_rss(p->mm);

task_unlock(p);

if (tasksize <= 0)

continue;

if (selected) {

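if (oom_score_adj < selected_oom_score_adj) /* skip processes with higher priority than the current selection; a smaller adj means higher priority */

continue;

if (oom_score_adj == selected_oom_score_adj &&

tasksize <= selected_tasksize) /* at equal adj, prefer the process with the larger tasksize, since killing it frees more memory */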

continue;

}

selected = p;

selected_tasksize = tasksize;

selected_oom_score_adj = oom_score_adj;

lowmem_print(2, "select '%s' (%d), adj %hd, size %d, to kill\n",

p->comm, p->pid, oom_score_adj, tasksize);

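} /* overall rule: among all processes with oom_score_adj >= min_score_adj, pick the one with the highest oom_score_adj, and among those with equal adj the one with the largest tasksize; selection is therefore based on process importance (oom_score_adj) and on the amount freed (tasksize) */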

if (selected) {

long cache_size = other_file * (long)(PAGE_SIZE / 1024);

long cache_limit = minfree * (long)(PAGE_SIZE / 1024);

long free = other_free * (long)(PAGE_SIZE / 1024);

task_lock(selected);

send_sig(SIGKILL, selected, 0); /* send SIGKILL to the selected process */

/*

* FIXME: lowmemorykiller shouldn't abuse global OOM killer

* infrastructure. There is no real reason why the selected

* task should have access to the memory reserves.

*/

if (selected->mm)

mark_oom_victim(selected);

task_unlock(selected);

trace_lowmemory_kill(selected, cache_size, cache_limit, free);

lowmem_print(1, "Killing '%s' (%d), adj %hd,\n"

" to free %ldkB on behalf of '%s' (%d) because\n"

" cache %ldkB is below limit %ldkB for oom_score_adj %hd\n"

" Free memory is %ldkB above reserved\n",

selected->comm, selected->pid,

selected_oom_score_adj,

selected_tasksize * (long)(PAGE_SIZE / 1024),

current->comm, current->pid,

cache_size, cache_limit,

min_score_adj,

free);

lowmem_deathpending_timeout = jiffies + HZ;

rem += selected_tasksize;

}

lowmem_print(4, "lowmem_scan %lu, %x, return %lu\n",

sc->nr_to_scan, sc->gfp_mask, rem);

rcu_read_unlock();

return rem;

}

|
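The victim selection inside the for_each_process loop can be isolated from the kernel locking and logging. The sketch below reproduces only the comparison logic in user space; struct demo_task and select_victim are hypothetical names, and rss_pages stands in for what get_mm_rss would return.

```c
#include <stddef.h>

/* Hypothetical stand-in for the task fields that lowmem_scan reads. */
struct demo_task {
    int pid;
    short oom_score_adj;
    int rss_pages;
};

/* Returns the index of the task that would be killed, or -1 if none qualifies. */
static int select_victim(const struct demo_task *tasks, size_t n,
                         short min_score_adj) {
    int selected = -1;
    size_t i;

    for (i = 0; i < n; i++) {
        const struct demo_task *t = &tasks[i];

        /* Too important to kill, or nothing to gain from killing it. */
        if (t->oom_score_adj < min_score_adj || t->rss_pages <= 0)
            continue;
        if (selected >= 0) {
            const struct demo_task *s = &tasks[selected];

            /* Keep the current choice if it is less important... */
            if (t->oom_score_adj < s->oom_score_adj)
                continue;
            /* ...or equally important but at least as large. */
            if (t->oom_score_adj == s->oom_score_adj &&
                t->rss_pages <= s->rss_pages)
                continue;
        }
        selected = (int)i;
    }
    return selected;
}
```

Given tasks with (adj, rss) of (0, 9000), (900, 100), (906, 50), (906, 70) and (200, 8000) and a min_score_adj of 200, the function picks the (906, 70) task: the highest adj wins first, and size only breaks ties, so the large adj 200 task survives.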

Every process has oom_adj, oom_score and oom_score_adj nodes:

|

oom_adj -13

oom_score 0

oom_score_adj -800

oom_adj = oom_score_adj*17/1000 = -800*17/1000 = -13.6, truncated to -13

|
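The conversion above is plain integer arithmetic. A minimal sketch follows, modelled on how the kernel derives the legacy oom_adj value when the node is read; the function name is hypothetical, and the constants (1000, -17, 15) are the kernel's OOM_SCORE_ADJ_MAX, OOM_DISABLE and OOM_ADJUST_MAX as far as I can tell, so treat them as assumptions.

```c
#define OOM_SCORE_ADJ_MAX 1000
#define OOM_DISABLE (-17)
#define OOM_ADJUST_MAX 15

/* Map oom_score_adj (-1000..1000) onto the legacy oom_adj scale (-17..15). */
static int oom_score_adj_to_oom_adj(int oom_score_adj) {
    if (oom_score_adj == OOM_SCORE_ADJ_MAX)
        return OOM_ADJUST_MAX;
    /* C integer division truncates toward zero, so -13.6 becomes -13. */
    return (oom_score_adj * -OOM_DISABLE) / OOM_SCORE_ADJ_MAX;
}
```

For the process shown above, oom_score_adj_to_oom_adj(-800) gives -13, matching the oom_adj node. The special case for 1000 exists because 1000*17/1000 would otherwise give 17, outside the legacy range.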

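CGroup memory subsystem parameters in detail

To understand memory.pressure_level, start with what Memory Pressure is.

pressure_level notifications can be used to monitor the cost of memory allocation; different strategies for managing memory can be applied depending on the pressure_level. There are three levels:

low: the system is reclaiming memory for new allocations.

medium: the system is freeing memory by swapping, paging out active file caches, and similar means.

critical: the system is already out of memory or the kernel OOM killer is about to trigger; applications should do whatever they can to free memory.

Events generated when a pressure_level triggers propagate upward until they are handled. Take three cgroups, A->B->C, each with an event listener, and suppose C hits memory pressure. In this case C receives the notification, while A and B do not. This avoids broadcasting such messages, which would disturb the system.

memory.pressure_level is only used to set up an eventfd; read and write operations on the node are not implemented, so no information can be obtained from the node itself. A usage example:

- Create an evfd descriptor with eventfd

- Open the memory.pressure_level node as mpfd

- Write the string "<evfd> <mpfd> <level>" to cgroup.event_control

Once memory pressure reaches the given level (low/medium/critical), the application is notified through the eventfd. Below is the implementation in lmkd: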

|

static int init_mp(char *levelstr, void *event_handler)

{

…

mpfd = open(MEMCG_SYSFS_PATH "memory.pressure_level", O_RDONLY | O_CLOEXEC);

evctlfd = open(MEMCG_SYSFS_PATH "cgroup.event_control", O_WRONLY | O_CLOEXEC);

evfd = eventfd(0, EFD_NONBLOCK | EFD_CLOEXEC);

ret = snprintf(buf, sizeof(buf), "%d %d %s", evfd, mpfd, levelstr);

ret = write(evctlfd, buf, strlen(buf) + 1);

epev.events = EPOLLIN;

epev.data.ptr = event_handler;

ret = epoll_ctl(epollfd, EPOLL_CTL_ADD, evfd, &epev);

}

|

So the focus shifts to cgroup.event_control:

|

static struct cftype mem_cgroup_legacy_files[] = {

{

.name = "cgroup.event_control", /* XXX: for compat */

.write = memcg_write_event_control,

.flags = CFTYPE_NO_PREFIX | CFTYPE_WORLD_WRITABLE,

},

}

|

memcg_write_event_control parses the string written by lmkd and then registers the cgroup event handler.

|

static ssize_t memcg_write_event_control(struct kernfs_open_file *of,

char *buf, size_t nbytes, loff_t off)

{

struct cgroup_subsys_state *css = of_css(of);

struct mem_cgroup *memcg = mem_cgroup_from_css(css);

struct mem_cgroup_event *event;

struct cgroup_subsys_state *cfile_css;

unsigned int efd, cfd;

struct fd efile;

struct fd cfile;

const char *name;

char *endp;

int ret;

buf = strstrip(buf);

efd = simple_strtoul(buf, &endp, 10); /* parse the eventfd file descriptor */

if (*endp != ' ')

return -EINVAL;

buf = endp + 1;

cfd = simple_strtoul(buf, &endp, 10); /* parse the second field: the control file descriptor */

if ((*endp != ' ') && (*endp != '\0'))

return -EINVAL;

buf = endp + 1; /* the rest of the string is the third argument */

event = kzalloc(sizeof(*event), GFP_KERNEL);

if (!event)

return -ENOMEM;

event->memcg = memcg;

INIT_LIST_HEAD(&event->list);

init_poll_funcptr(&event->pt, memcg_event_ptable_queue_proc);

init_waitqueue_func_entry(&event->wait, memcg_event_wake);

INIT_WORK(&event->remove, memcg_event_remove);

efile = fdget(efd);

if (!efile.file) {

ret = -EBADF;

goto out_kfree;

}

event->eventfd = eventfd_ctx_fileget(efile.file);

if (IS_ERR(event->eventfd)) {

ret = PTR_ERR(event->eventfd);

goto out_put_efile;

}

cfile = fdget(cfd);

if (!cfile.file) {

ret = -EBADF;

goto out_put_eventfd;

}

/* the process need read permission on control file */

/* AV: shouldn't we check that it's been opened for read instead? */

ret = inode_permission(file_inode(cfile.file), MAY_READ);

if (ret < 0)

goto out_put_cfile;

/*

* Determine the event callbacks and set them in @event. This used

* to be done via struct cftype but cgroup core no longer knows

* about these events. The following is crude but the whole thing

* is for compatibility anyway.

*

* DO NOT ADD NEW FILES.

*/

name = cfile.file->f_path.dentry->d_name.name;

if (!strcmp(name, "memory.usage_in_bytes")) { /* choose the register/unregister functions by the file name of the second argument */

event->register_event = mem_cgroup_usage_register_event;

event->unregister_event = mem_cgroup_usage_unregister_event;

} else if (!strcmp(name, "memory.oom_control")) {

event->register_event = mem_cgroup_oom_register_event;

event->unregister_event = mem_cgroup_oom_unregister_event;

} else if (!strcmp(name, "memory.pressure_level")) {

event->register_event = vmpressure_register_event;

event->unregister_event = vmpressure_unregister_event;

} else if (!strcmp(name, "memory.memsw.usage_in_bytes")) {

event->register_event = memsw_cgroup_usage_register_event;

event->unregister_event = memsw_cgroup_usage_unregister_event;

} else {

ret = -EINVAL;

goto out_put_cfile;

}

/*

* Verify @cfile should belong to @css. Also, remaining events are

* automatically removed on cgroup destruction but the removal is

* asynchronous, so take an extra ref on @css.

*/

cfile_css = css_tryget_online_from_dir(cfile.file->f_path.dentry->d_parent,

&memory_cgrp_subsys);

ret = -EINVAL;

if (IS_ERR(cfile_css))

goto out_put_cfile;

if (cfile_css != css) {

css_put(cfile_css);

goto out_put_cfile;

}

ret = event->register_event(memcg, event->eventfd, buf); /* perform the registration */

if (ret)

goto out_put_css;

efile.file->f_op->poll(efile.file, &event->pt);

spin_lock(&memcg->event_list_lock);

list_add(&event->list, &memcg->event_list);

spin_unlock(&memcg->event_list_lock);

fdput(cfile);

fdput(efile);

return nbytes;

out_put_css:

css_put(css);

out_put_cfile:

fdput(cfile);

out_put_eventfd:

eventfd_ctx_put(event->eventfd);

out_put_efile:

fdput(efile);

out_kfree:

kfree(event);

return ret;

}

|
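The parsing at the top of memcg_write_event_control is easy to reproduce in user space, which helps when checking what string a daemon should write. The sketch below is a simplified illustration, not the kernel code: struct event_control_req and parse_event_control are hypothetical names, strtoul replaces simple_strtoul, and unlike the kernel it rejects fields that contain no digits.

```c
#include <stdlib.h>
#include <string.h>

/* Parsed form of "<event_fd> <control_fd> <args>"; names are illustrative. */
struct event_control_req {
    unsigned int efd;
    unsigned int cfd;
    const char *args;   /* points into the caller's buffer; may be "" */
};

/* Returns 0 on success, -1 on malformed input. */
static int parse_event_control(const char *buf, struct event_control_req *req) {
    char *endp;

    /* First field: the eventfd descriptor, followed by a space. */
    req->efd = (unsigned int)strtoul(buf, &endp, 10);
    if (endp == buf || *endp != ' ')
        return -1;
    buf = endp + 1;

    /* Second field: the control file descriptor; args are optional. */
    req->cfd = (unsigned int)strtoul(buf, &endp, 10);
    if (endp == buf || (*endp != ' ' && *endp != '\0'))
        return -1;

    /* Whatever follows the second space is passed to register_event. */
    req->args = (*endp == ' ') ? endp + 1 : endp;
    return 0;
}
```

For the string lmkd writes, for example "5 4 low", this yields efd 5, cfd 4 and args "low"; the args string is what vmpressure_register_event later compares against the level names.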

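vmpressure_register_event binds vmpressure notifications to the eventfd, so that lmkd receives them.

memcg: the CGroup memory subsystem instance whose vmpressure notifications are of interest

eventfd: the eventfd that receives the vmpressure notifications

args: the pressure_level to register for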

|

int vmpressure_register_event(struct mem_cgroup *memcg,

struct eventfd_ctx *eventfd, const char *args)

{

struct vmpressure *vmpr = memcg_to_vmpressure(memcg);

struct vmpressure_event *ev;

int level;

for (level = 0; level < VMPRESSURE_NUM_LEVELS; level++) {

if (!strcmp(vmpressure_str_levels[level], args)) /* validate the pressure_level: low/medium/critical */

break;

}

if (level >= VMPRESSURE_NUM_LEVELS)

return -EINVAL;

ev = kzalloc(sizeof(*ev), GFP_KERNEL);

if (!ev)

return -ENOMEM;

ev->efd = eventfd;

ev->level = level;

mutex_lock(&vmpr->events_lock);

list_add(&ev->node, &vmpr->events);

mutex_unlock(&vmpr->events_lock);

return 0;

}

|

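For further reading on Memory Pressure, see Documentation/cgroups/memory.txt, section 11, Memory Pressure.

This brings in the concept of vmpressure. Applications do not track how much memory the system has available, but the system as a whole benefits if it can signal memory shortage so that applications can act to reduce their allocations. vmpressure is such a mechanism: through it the kernel tells user space which memory pressure level the system is currently at.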

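Applications?

The configuration parameters exposed by the framework are the entry points for tuning:

- Configure different minfree values according to memory size, screen resolution, and so on?

- Add more adj levels to give lowmemorykiller finer-grained control, or change the adj values to alter the priority of different process types.

- Handle the memory pressure levelstr values (low, medium, critical) differently?

- Change the conditions under which vmpressure triggers each level?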

References

mlockall/munlockall: http://pubs.opengroup.org/onlinepubs/007908799/xsh/mlockall.html

mlockall function: http://blog.csdn.net/zhjutao/article/details/8652252

eventfd: http://www.man7.org/linux/man-pages/man2/eventfd.2.html

Memory Pressure: https://linux-mm.org/Memory_pressure

vmpressure_fd: https://lwn.net/Articles/524742/