pom.xml:

```xml
<dependency>
    <groupId>org.apache.spark</groupId>
    <artifactId>spark-hive_2.11</artifactId>
    <version>2.3.4</version>
</dependency>
```

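```scala
import org.apache.spark.SparkContext
import org.apache.spark.sql.SparkSession

object StandaloneHive {
  def main(args: Array[String]): Unit = {
    val ss = SparkSession.builder()
      .master("local")
      .appName("standalone_hive")
      .config("spark.sql.shuffle.partitions", 1)
      // Backslashes must be escaped in a Scala string literal
      .config("spark.sql.warehouse.dir", "D:\\code\\tmp\\scladata")
      .enableHiveSupport() // enable Hive support; Spark starts a standalone (embedded) Hive metastore by itself
      .getOrCreate()
    val sc: SparkContext = ss.sparkContext

    import ss.sql

    ss.catalog.listTables().show()           // acts on the current database (default)

    sql("create database ke")
    sql("create table table01(name string)") // created in the current database (default)
    ss.catalog.listTables().show()           // lists tables in the current database (default)

    println("--------------------------------")

    sql("use ke")
    ss.catalog.listTables().show()           // now lists tables in database ke
    sql("create table table02(name string)") // created in database ke
    ss.catalog.listTables().show()           // lists tables in database ke
  }
}
```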
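The `spark.sql.warehouse.dir` value is a Windows path, so its backslashes need care in Scala source. In a plain string literal, `\t` is the tab escape, and `\c` and `\s` are rejected as invalid escape characters in Scala 2.11. A plain `"D:\code\tmp\scladata"` literal therefore does not compile. The following sketch shows three literals that produce a usable path. The object name `WarehousePathLiterals` is made up for illustration. The forward-slash form assumes the consuming API accepts `/` as a separator, as `java.io.File` does on Windows.

```scala
// Illustrative only: three ways to write the Windows path D:\code\tmp\scladata
// as a Scala string literal without triggering escape-sequence processing.
object WarehousePathLiterals {
  // Each backslash escaped explicitly.
  val escaped: String = "D:\\code\\tmp\\scladata"

  // The raw interpolator leaves backslashes unprocessed.
  val viaRaw: String = raw"D:\code\tmp\scladata"

  // Forward slashes avoid escaping altogether (assumes the consumer accepts '/').
  val forwardSlashes: String = "D:/code/tmp/scladata"

  def main(args: Array[String]): Unit = {
    println(escaped)        // D:\code\tmp\scladata
    println(viaRaw)         // D:\code\tmp\scladata
    println(forwardSlashes) // D:/code/tmp/scladata
  }
}
```

The escaped and `raw` forms yield the identical string. Which one to use is mostly a readability choice.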

Result:

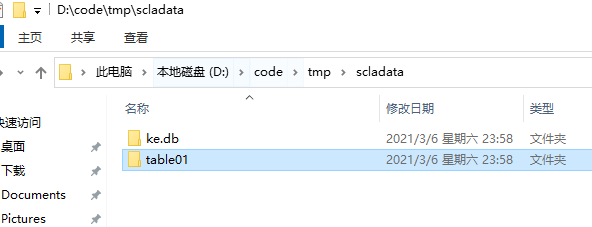

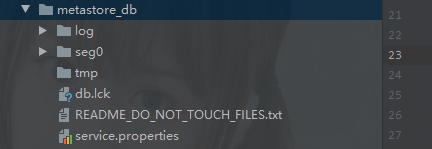

1. A `metastore_db` folder appears in the project directory. It records the metastore metadata.
2. New folders appear under the directory `D:\code\tmp\scladata`:
   1. `ke.db\table02`
   2. `table01`
3. Console output:

```text
+-------+--------+-----------+---------+-----------+
|   name|database|description|tableType|isTemporary|
+-------+--------+-----------+---------+-----------+
|table01| default|       null|  MANAGED|      false|
+-------+--------+-----------+---------+-----------+

--------------------------------
+----+--------+-----------+---------+-----------+
|name|database|description|tableType|isTemporary|
+----+--------+-----------+---------+-----------+
+----+--------+-----------+---------+-----------+

+-------+--------+-----------+---------+-----------+
|   name|database|description|tableType|isTemporary|
+-------+--------+-----------+---------+-----------+
|table02|      ke|       null|  MANAGED|      false|
+-------+--------+-----------+---------+-----------+
```
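The directory listing above follows a simple pattern:

- Managed tables in the `default` database land directly under the warehouse directory (`table01`).
- Tables in any other database land under a `<database>.db` folder (`ke.db\table02`).

Here is a minimal sketch of that mapping using a hypothetical `tableLocation` helper. It is not a Spark API: Spark resolves real locations through the metastore. The helper also ignores custom `LOCATION` clauses and external tables, and it uses `/` as the separator for simplicity.

```scala
// Hypothetical helper mirroring the default layout observed above for managed tables:
//   default database -> <warehouse>/<table>
//   other databases  -> <warehouse>/<database>.db/<table>
object WarehouseLayout {
  def tableLocation(warehouse: String, database: String, table: String): String = {
    val base = warehouse.stripSuffix("/") // tolerate a trailing separator
    if (database.equalsIgnoreCase("default")) s"$base/$table"
    else s"$base/$database.db/$table"
  }

  def main(args: Array[String]): Unit = {
    val warehouse = "D:/code/tmp/scladata"
    println(tableLocation(warehouse, "default", "table01")) // D:/code/tmp/scladata/table01
    println(tableLocation(warehouse, "ke", "table02"))      // D:/code/tmp/scladata/ke.db/table02
  }
}
```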

Metadata figure:

Data directory figure: