参考链接:https://blog.csdn.net/ywq935/article/details/80818390 https://www.jianshu.com/p/ac8853927528

1、配置configmap

apiVersion: v1 kind: ConfigMap metadata: name: prometheus-config namespace: kube-system data: prometheus.yml: | global: scrape_interval: 15s evaluation_interval: 15s rule_files: - /etc/prometheus/rules.yml alerting: alertmanagers: - static_configs: - targets: ["alertmanager:9093"] scrape_configs: - job_name: 'kubernetes-apiservers' kubernetes_sd_configs: - role: endpoints scheme: https tls_config: ca_file: /var/run/secrets/kubernetes.io/serviceaccount/ca.crt bearer_token_file: /var/run/secrets/kubernetes.io/serviceaccount/token relabel_configs: - source_labels: [__meta_kubernetes_namespace, __meta_kubernetes_service_name, __meta_kubernetes_endpoint_port_name] action: keep regex: default;kubernetes;https - job_name: 'kubernetes-nodes' kubernetes_sd_configs: - role: node scheme: https tls_config: ca_file: /var/run/secrets/kubernetes.io/serviceaccount/ca.crt bearer_token_file: /var/run/secrets/kubernetes.io/serviceaccount/token relabel_configs: - action: labelmap regex: __meta_kubernetes_node_label_(.+) - target_label: __address__ replacement: kubernetes.default.svc:443 - source_labels: [__meta_kubernetes_node_name] regex: (.+) target_label: __metrics_path__ replacement: /api/v1/nodes/${1}/proxy/metrics - job_name: 'kubernetes-cadvisor' kubernetes_sd_configs: - role: node scheme: https tls_config: ca_file: /var/run/secrets/kubernetes.io/serviceaccount/ca.crt bearer_token_file: /var/run/secrets/kubernetes.io/serviceaccount/token relabel_configs: - action: labelmap regex: __meta_kubernetes_node_label_(.+) - target_label: __address__ replacement: kubernetes.default.svc:443 - source_labels: [__meta_kubernetes_node_name] regex: (.+) target_label: __metrics_path__ replacement: /api/v1/nodes/${1}/proxy/metrics/cadvisor - job_name: 'kubernetes-service-endpoints' kubernetes_sd_configs: - role: endpoints relabel_configs: - source_labels: [__meta_kubernetes_service_annotation_prometheus_io_scrape] action: keep regex: true - source_labels: [__meta_kubernetes_service_annotation_prometheus_io_scheme] action: replace target_label: __scheme__ regex: (https?) - source_labels: [__meta_kubernetes_service_annotation_prometheus_io_path] action: replace target_label: __metrics_path__ regex: (.+) - source_labels: [__address__, __meta_kubernetes_service_annotation_prometheus_io_port] action: replace target_label: __address__ regex: ([^:]+)(?::d+)?;(d+) replacement: $1:$2 - action: labelmap regex: __meta_kubernetes_service_label_(.+) - source_labels: [__meta_kubernetes_namespace] action: replace target_label: kubernetes_namespace - source_labels: [__meta_kubernetes_service_name] action: replace target_label: kubernetes_name - job_name: 'kubernetes-services' kubernetes_sd_configs: - role: service metrics_path: /probe params: module: [http_2xx] relabel_configs: - source_labels: [__meta_kubernetes_service_annotation_prometheus_io_probe] action: keep regex: true - source_labels: [__address__] target_label: __param_target - target_label: __address__ replacement: blackbox-exporter.example.com:9115 - source_labels: [__param_target] target_label: instance - action: labelmap regex: __meta_kubernetes_service_label_(.+) - source_labels: [__meta_kubernetes_namespace] target_label: kubernetes_namespace - source_labels: [__meta_kubernetes_service_name] target_label: kubernetes_name - job_name: 'kubernetes-ingresses' kubernetes_sd_configs: - role: ingress relabel_configs: - source_labels: [__meta_kubernetes_ingress_annotation_prometheus_io_probe] action: keep regex: true regex: (.+) - source_labels: [__address__, __meta_kubernetes_pod_annotation_prometheus_io_port] action: replace regex: ([^:]+)(?::d+)?;(d+) replacement: $1:$2 target_label: __address__ - action: labelmap regex: __meta_kubernetes_pod_label_(.+) - source_labels: [__meta_kubernetes_namespace] action: replace target_label: kubernetes_namespace - source_labels: [__meta_kubernetes_pod_name] action: replace target_label: kubernetes_pod_name - job_name: 'kubernetes-node-exporter' scheme: http tls_config: ca_file: /var/run/secrets/kubernetes.io/serviceaccount/ca.crt bearer_token_file: /var/run/secrets/kubernetes.io/serviceaccount/token kubernetes_sd_configs: - role: node relabel_configs: - action: labelmap regex: __meta_kubernetes_node_label_(.+) - source_labels: [__meta_kubernetes_role] action: replace target_label: kubernetes_role - source_labels: [__address__] regex: '(.*):10250' replacement: '${1}:31672' target_label: __address__

2、配置deploy

apiVersion: apps/v1 kind: Deployment metadata: labels: name: prometheus-deployment name: prometheus namespace: kube-system spec: replicas: 1 selector: matchLabels: app: prometheus template: metadata: labels: app: prometheus spec: containers: - image: prom/prometheus:v2.0.0 name: prometheus command: - "/bin/prometheus" args: - "--config.file=/etc/prometheus/prometheus.yml" - "--storage.tsdb.path=/prometheus" - "--storage.tsdb.retention=24h" ports: - containerPort: 9090 protocol: TCP volumeMounts: - mountPath: "/prometheus" name: data - mountPath: "/etc/prometheus" name: config-volume resources: requests: cpu: 100m memory: 100Mi limits: cpu: 500m memory: 2500Mi serviceAccountName: prometheus volumes: - name: data emptyDir: {} - name: config-volume configMap: name: prometheus-config

3、配置svc

kind: Service apiVersion: v1 metadata: labels: app: prometheus name: prometheus namespace: kube-system spec: type: ClusterIP ports: - port: 80 protocol: TCP targetPort: 9090 selector: app: prometheus

4、配置rbac

apiVersion: rbac.authorization.k8s.io/v1 kind: ClusterRole metadata: name: prometheus rules: - apiGroups: [""] resources: - nodes - nodes/proxy - services - endpoints - pods verbs: ["get", "list", "watch"] - apiGroups: - extensions resources: - ingresses verbs: ["get", "list", "watch"] - nonResourceURLs: ["/metrics"] verbs: ["get"] --- apiVersion: v1 kind: ServiceAccount metadata: name: prometheus namespace: kube-system --- apiVersion: rbac.authorization.k8s.io/v1 kind: ClusterRoleBinding metadata: name: prometheus roleRef: apiGroup: rbac.authorization.k8s.io kind: ClusterRole name: prometheus subjects: - kind: ServiceAccount name: prometheus namespace: kube-system

5、配置ingress

apiVersion: extensions/v1beta1 kind: Ingress metadata: name: ingress-prome namespace: kube-system annotations: kubernets.io/ingress.class: "nginx" spec: rules: - host: prome.yourdomain.com http: paths: - path: backend: serviceName: prometheus servicePort: 80

6、配置完ingress后,再配置域名解析,我在外层nginx做了引流

upstream prome { server 192.168.10.55; server 192.168.10.56; } server { listen 80; server_name prome.dogotsn.com; access_log /data/logs/nginx/prome.dogotsn.access.log; error_log /data/logs/nginx/prome.dogotsn.error.log; location / { proxy_set_header X-Forwarded-For $remote_addr; proxy_set_header X-Real-IP $remote_addr; proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for; proxy_set_header Host $host; proxy_redirect off; proxy_read_timeout 600; proxy_connect_timeout 600; proxy_pass http://prome; } }

6、以上部署完成后,开始访问

7、为了更好的展示图形效果,需要部署grafana:

apiVersion: apps/v1 kind: Deployment metadata: name: grafana-core namespace: kube-system labels: app: grafana component: core spec: replicas: 1 selector: matchLabels: app: grafana component: core template: metadata: labels: app: grafana component: core spec: containers: - image: grafana/grafana:4.2.0 name: grafana-core imagePullPolicy: IfNotPresent resources: limits: cpu: 100m memory: 100Mi requests: cpu: 100m memory: 100Mi env: - name: GF_AUTH_BASIC_ENABLED value: "true" - name: GF_AUTH_ANONYMOUS_ENABLED value: "false" readinessProbe: httpGet: path: /login port: 3000 volumeMounts: - name: grafana-persistent-storage mountPath: /var volumes: - name: grafana-persistent-storage emptyDir: {} --- apiVersion: v1 kind: Service metadata: name: grafana namespace: kube-system labels: app: grafana component: core spec: type: NodePort ports: - port: 3000 selector: app: grafana component: core

部署完后,查看nodeport端口:

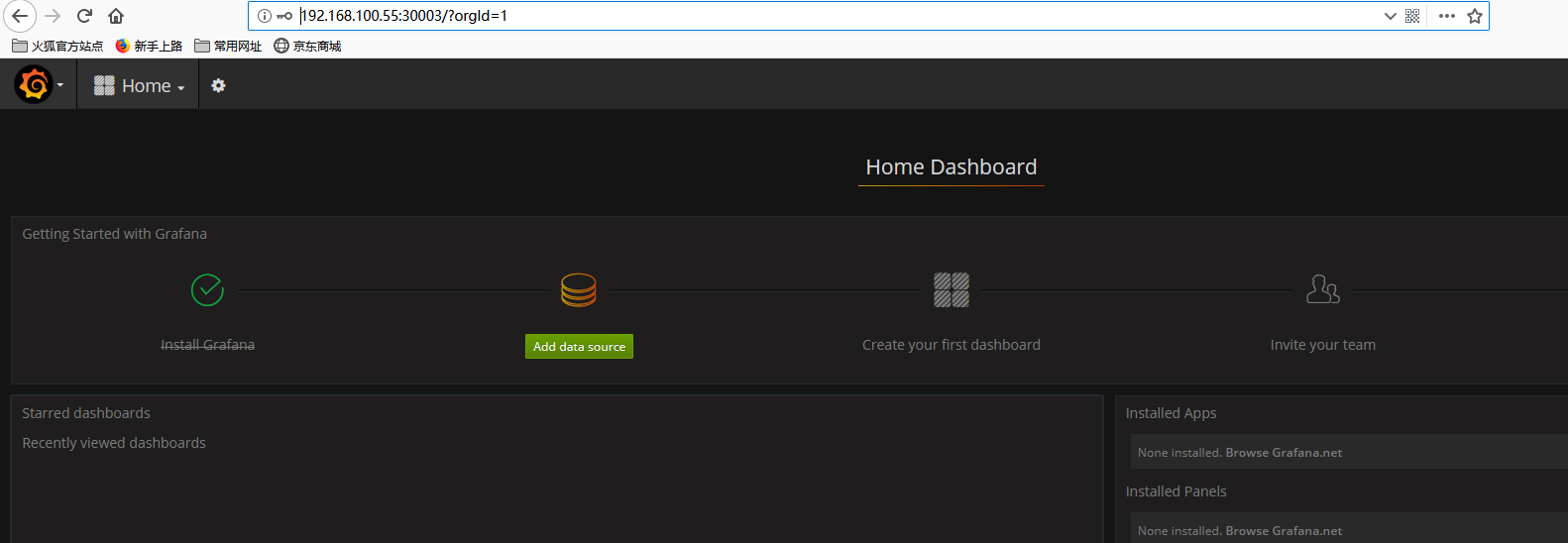

可以看到nodeport是30003,然后访问http://nodeip:30003 用户名和密码都是admin

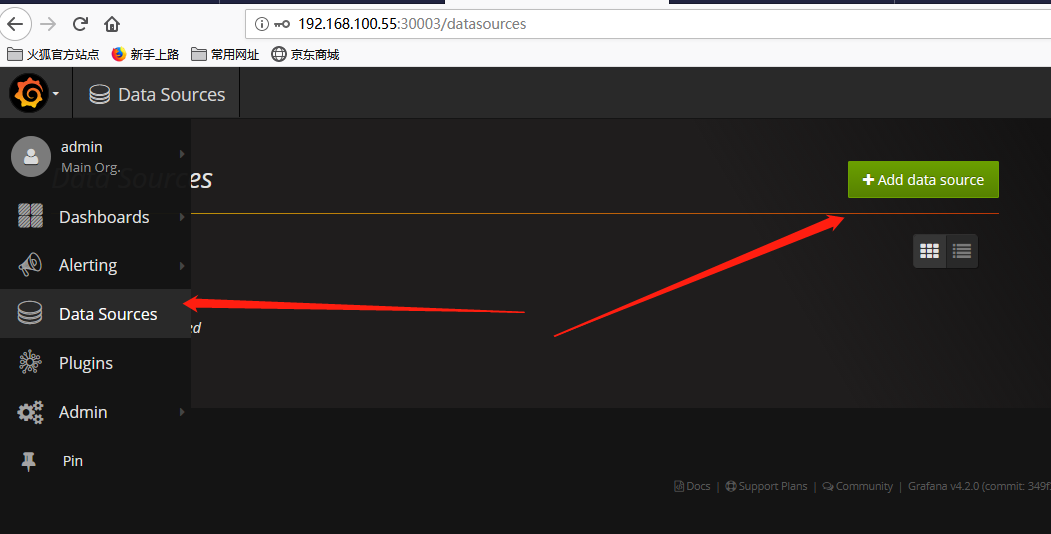

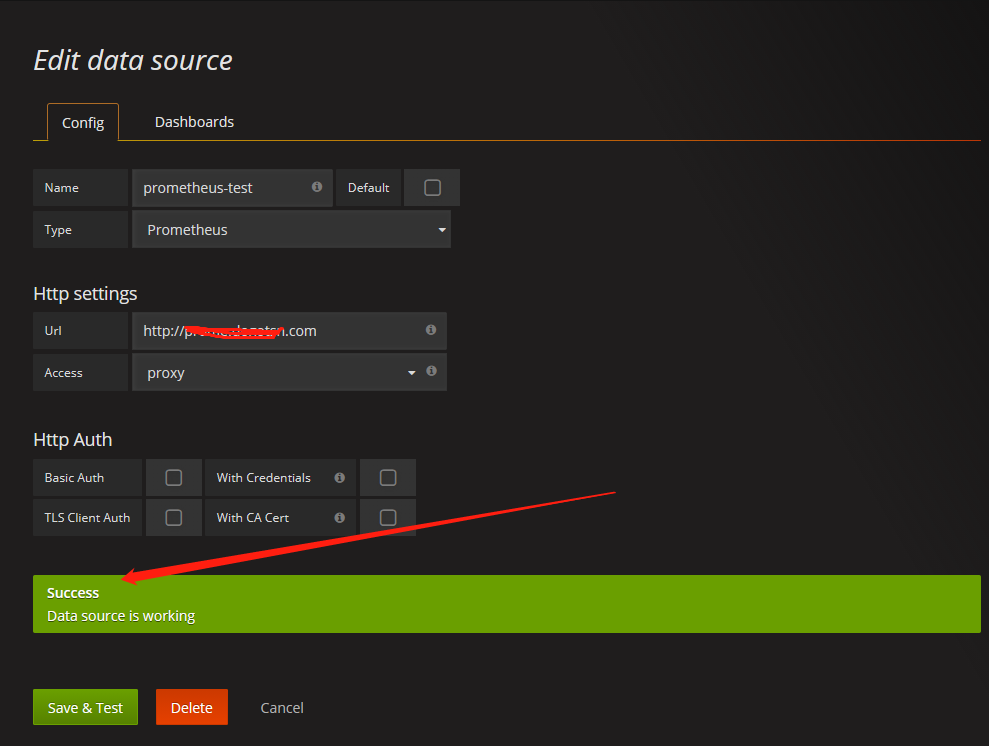

选择资源类型,填入prometheus的服务地址及端口号,点击保存

导入展示模板:

点击dashboard,点击import dashboard,在弹出框内填写数字315,会自动加载官方提供的315号模板,然后选择数据源为刚添加的数据源,模板就创建好了,非常easy。

最近发现,采用daemon-set方式部署的node-exporterc采集到的度量值不准确,最后发现需要将host的/proc和/sys目录挂载进node-exporter的容器内。

更新后的node-exporter.yaml文件:

apiVersion: apps/v1 kind: DaemonSet metadata: name: node-exporter namespace: default labels: k8s-app: node-exporter spec: selector: matchLabels: k8s-app: node-exporter template: metadata: labels: k8s-app: node-exporter spec: containers: - image: prom/node-exporter name: node-exporter ports: - containerPort: 9100 protocol: TCP name: http hostPort: 9101 volumeMounts: - mountPath: /host/proc name: proc - mountPath: /host/sys name: sys args: - --path.procfs=/host/proc - --path.sysfs=/host/sys - --collector.filesystem.ignored-mount-points - "^/(dev|proc|sys|host|etc|rootfs|docker)($|/)" volumes: - hostPath: path: /proc name: proc - hostPath: path: /sys name: sys --- apiVersion: v1 kind: Service metadata: labels: k8s-app: node-exporter name: node-exporter namespace: default spec: ports: - name: http port: 9100 nodePort: 31672 protocol: TCP type: NodePort selector: k8s-app: node-exporter