▶ 按照书上的例子,使用 async 导语实现主机与设备端的异步计算

● 代码,非异步的代码只要将其中的 async 以及第 29 行删除即可

1 #include <stdio.h> 2 #include <stdlib.h> 3 #include <openacc.h> 4 5 #define N 10240000 6 #define COUNT 200 // 多算几次,增加耗时 7 8 int main() 9 { 10 int *a = (int *)malloc(sizeof(int)*N); 11 int *b = (int *)malloc(sizeof(int)*N); 12 int *c = (int *)malloc(sizeof(int)*N); 13 14 #pragma acc enter data create(a[0:N]) async // 在设备上赋值 a 15 for (int i = 0; i < COUNT; i++) 16 { 17 #pragma acc parallel loop async 18 for (int j = 0; j < N; j++) 19 a[j] = (i + j) * 2; 20 } 21 22 for (int i = 0; i < COUNT; i++) // 在主机上赋值 b 23 { 24 for (int j = 0; j < N; j++) 25 b[j] = (i + j) * 2; 26 } 27 28 #pragma acc update host(a[0:N]) async // 异步必须 update a,否则还没同步就参与 c 的运算 29 #pragma acc wait // 非异步时去掉该行 30 31 for (int i = 0; i < N; i++) 32 c[i] = a[i] + b[i]; 33 34 #pragma acc update device(a[0:N]) async // 没啥用,增加耗时 35 #pragma acc exit data delete(a[0:N]) 36 37 printf(" c[1] = %d ", c[1]); 38 free(a); 39 free(b); 40 free(c); 41 //getchar(); 42 return 0; 43 }

● 输出结果(是否异步,差异仅在行号、耗时上)

//+-----------------------------------------------------------------------------非异步 D:CodeOpenACCOpenACCProjectOpenACCProject>pgcc main.c -acc -Minfo -o main_acc.exe main: 14, Generating enter data create(a[:10240000]) 17, Accelerator kernel generated Generating Tesla code 18, #pragma acc loop gang, vector(128) /* blockIdx.x threadIdx.x */ 17, Generating implicit copyout(a[:10240000]) 31, Generating update self(a[:10240000]) 35, Generating update device(a[:10240000]) Generating exit data delete(a[:10240000]) D:CodeOpenACCOpenACCProjectOpenACCProject>main_acc.exe launch CUDA kernel file=D:CodeOpenACCOpenACCProjectOpenACCProjectmain.c function=main line=17 device=0 threadid=1 queue=0 num_gangs=65535 num_workers=1 vector_length=128 grid=65535 block=128 launch CUDA kernel file=D:CodeOpenACCOpenACCProjectOpenACCProjectmain.c function=main line=17 device=0 threadid=1 queue=0 num_gangs=65535 num_workers=1 vector_length=128 grid=65535 block=128 ... // 省略 launch CUDA kernel file=D:CodeOpenACCOpenACCProjectOpenACCProjectmain.c function=main line=17 device=0 threadid=1 queue=0 num_gangs=65535 num_workers=1 vector_length=128 grid=65535 block=128 c[1] = 800 PGI: "acc_shutdown" not detected, performance results might be incomplete. Please add the call "acc_shutdown(acc_device_nvidia)" to the end of your application to ensure that the performance results are complete. Accelerator Kernel Timing data D:CodeOpenACCOpenACCProjectOpenACCProjectmain.c main NVIDIA devicenum=0 time(us): 6,366 14: data region reached 1 time 17: compute region reached 200 times 17: kernel launched 200 times grid: [65535] block: [128] elapsed time(us): total=58,000 max=1000 min=0 avg=290 17: data region reached 400 times 31: update directive reached 1 time 31: data copyout transfers: 3 device time(us): total=3,220 max=1,331 min=593 avg=1,073 35: update directive reached 1 time 35: data copyin transfers: 3 device time(us): total=3,146 max=1,286 min=578 avg=1,048 35: data region reached 1 time //------------------------------------------------------------------------------有异步 D:CodeOpenACCOpenACCProjectOpenACCProject>pgcc main.c -acc -Minfo -o main_acc.exe main: 14, Generating enter data create(a[:10240000]) 17, Accelerator kernel generated Generating Tesla code 18, #pragma acc loop gang, vector(128) /* blockIdx.x threadIdx.x */ 17, Generating implicit copyout(a[:10240000]) 29, Generating update self(a[:10240000]) 35, Generating update device(a[:10240000]) Generating exit data delete(a[:10240000]) D:CodeOpenACCOpenACCProjectOpenACCProject>main_acc.exe launch CUDA kernel file=D:CodeOpenACCOpenACCProjectOpenACCProjectmain.c function=main line=17 device=0 threadid=1 queue=0 num_gangs=65535 num_workers=1 vector_length=128 grid=65535 block=128 launch CUDA kernel file=D:CodeOpenACCOpenACCProjectOpenACCProjectmain.c function=main line=17 device=0 threadid=1 queue=0 num_gangs=65535 num_workers=1 vector_length=128 grid=65535 block=128 ... // 省略 launch CUDA kernel file=D:CodeOpenACCOpenACCProjectOpenACCProjectmain.c function=main line=17 device=0 threadid=1 queue=0 num_gangs=65535 num_workers=1 vector_length=128 grid=65535 block=128 c[1] = 800 PGI: "acc_shutdown" not detected, performance results might be incomplete. Please add the call "acc_shutdown(acc_device_nvidia)" to the end of your application to ensure that the performance results are complete. Accelerator Kernel Timing data Timing may be affected by asynchronous behavior set PGI_ACC_SYNCHRONOUS to 1 to disable async() clauses D:CodeOpenACCOpenACCProjectOpenACCProjectmain.c main NVIDIA devicenum=0 time(us): 6,225 14: data region reached 1 time 17: compute region reached 200 times 17: kernel launched 200 times grid: [65535] block: [128] elapsed time(us): total=63,000 max=1000 min=0 avg=315 17: data region reached 400 times 29: update directive reached 1 time 29: data copyout transfers: 3 device time(us): total=3,055 max=1,244 min=567 avg=1,018 35: update directive reached 1 time 35: data copyin transfers: 3 device time(us): total=3,170 max=1,294 min=587 avg=1,056 35: data region reached 1 time

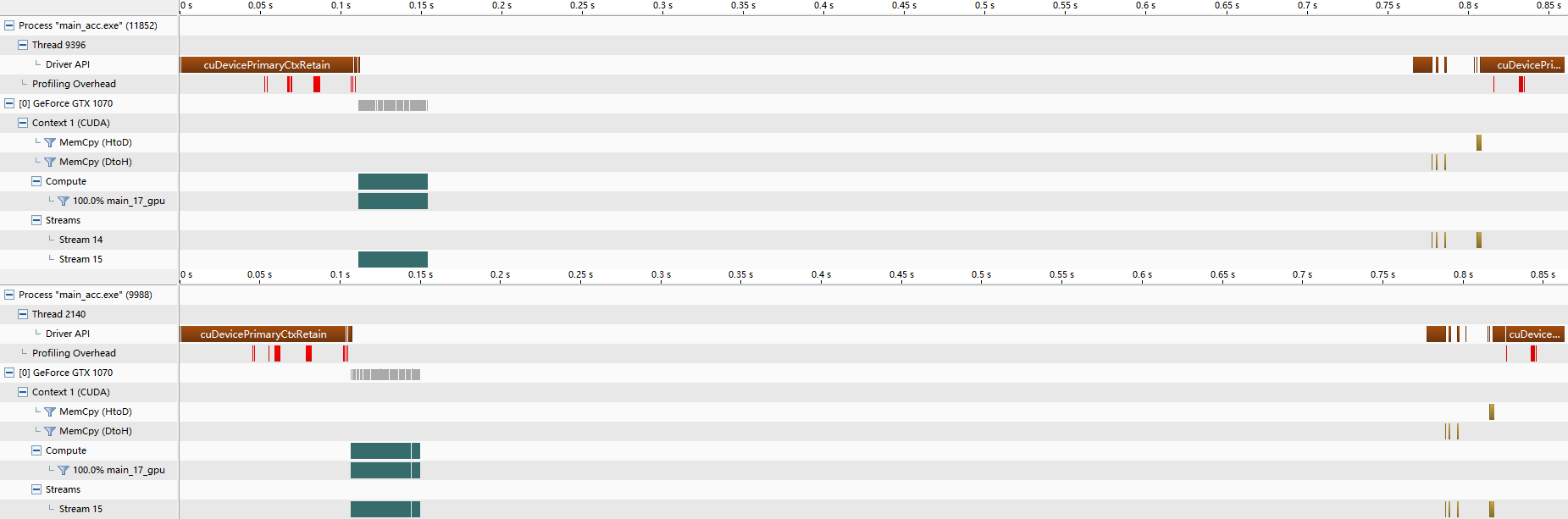

● Nvvp 的结果,我是真没看出来有较大的差别,可能例子举得不够好

● 在一个设备上同时使用两个命令队列

1 #include <stdio.h> 2 #include <stdlib.h> 3 #include <openacc.h> 4 5 #define N 10240000 6 #define COUNT 200 7 8 int main() 9 { 10 int *a = (int *)malloc(sizeof(int)*N); 11 int *b = (int *)malloc(sizeof(int)*N); 12 int *c = (int *)malloc(sizeof(int)*N); 13 14 #pragma acc enter data create(a[0:N]) async(1) 15 for (int i = 0; i < COUNT; i++) 16 { 17 #pragma acc parallel loop async(1) 18 for (int j = 0; j < N; j++) 19 a[j] = (i + j) * 2; 20 } 21 22 #pragma acc enter data create(b[0:N]) async(2) 23 for (int i = 0; i < COUNT; i++) 24 { 25 #pragma acc parallel loop async(2) 26 for (int j = 0; j < N; j++) 27 b[j] = (i + j) * 3; 28 } 29 30 #pragma acc enter data create(c[0:N]) async(2) 31 #pragma acc wait(1) async(2) 32 33 #pragma acc parallel loop async(2) 34 for (int i = 0; i < N; i++) 35 c[i] = a[i] + b[i]; 36 37 #pragma acc update host(c[0:N]) async(2) 38 #pragma acc exit data delete(a[0:N], b[0:N], c[0:N]) 39 40 printf(" c[1] = %d ", c[1]); 41 free(a); 42 free(b); 43 free(c); 44 //getchar(); 45 return 0; 46 }

● 输出结果

D:CodeOpenACCOpenACCProjectOpenACCProject>pgcc main.c -acc -Minfo -o main_acc.exe main: 14, Generating enter data create(a[:10240000]) 17, Accelerator kernel generated Generating Tesla code 18, #pragma acc loop gang, vector(128) /* blockIdx.x threadIdx.x */ 17, Generating implicit copyout(a[:10240000]) 22, Generating enter data create(b[:10240000]) 25, Accelerator kernel generated Generating Tesla code 26, #pragma acc loop gang, vector(128) /* blockIdx.x threadIdx.x */ 25, Generating implicit copyout(b[:10240000]) 30, Generating enter data create(c[:10240000]) 33, Accelerator kernel generated Generating Tesla code 34, #pragma acc loop gang, vector(128) /* blockIdx.x threadIdx.x */ 33, Generating implicit copyout(c[:10240000]) Generating implicit copyin(b[:10240000],a[:10240000]) 38, Generating update self(c[:10240000]) Generating exit data delete(c[:10240000],b[:10240000],a[:10240000]) D:CodeOpenACCOpenACCProjectOpenACCProject>main_acc.exe c[1] = 1000 PGI: "acc_shutdown" not detected, performance results might be incomplete. Please add the call "acc_shutdown(acc_device_nvidia)" to the end of your application to ensure that the performance results are complete. Accelerator Kernel Timing data Timing may be affected by asynchronous behavior set PGI_ACC_SYNCHRONOUS to 1 to disable async() clauses D:CodeOpenACCOpenACCProjectOpenACCProjectmain.c main NVIDIA devicenum=0 time(us): 3,118 14: data region reached 1 time 17: compute region reached 200 times 17: kernel launched 200 times grid: [65535] block: [128] elapsed time(us): total=48,000 max=1000 min=0 avg=240 17: data region reached 400 times 22: data region reached 1 time 25: compute region reached 200 times 25: kernel launched 200 times grid: [65535] block: [128] elapsed time(us): total=48,000 max=1000 min=0 avg=240 25: data region reached 400 times 30: data region reached 1 time 33: compute region reached 1 time 33: kernel launched 1 time grid: [65535] block: [128] device time(us): total=0 max=0 min=0 avg=0 33: data region reached 2 times 38: update directive reached 1 time 38: data copyout transfers: 3 device time(us): total=3,118 max=1,277 min=568 avg=1,039 38: data region reached 1 time

● Nvvp 中,可以看到两个命令队列交替执行

● 在 PGI 命令行中使用命令 pgaccelinfo 查看设备信息

D:CodeOpenACCOpenACCProjectOpenACCProject>pgaccelinfo CUDA Driver Version: 9010 Device Number: 0 Device Name: GeForce GTX 1070 Device Revision Number: 6.1 Global Memory Size: 8589934592 Number of Multiprocessors: 16 Concurrent Copy and Execution: Yes Total Constant Memory: 65536 Total Shared Memory per Block: 49152 Registers per Block: 65536 Warp Size: 32 Maximum Threads per Block: 1024 Maximum Block Dimensions: 1024, 1024, 64 Maximum Grid Dimensions: 2147483647 x 65535 x 65535 Maximum Memory Pitch: 2147483647B Texture Alignment: 512B Clock Rate: 1645 MHz Execution Timeout: Yes Integrated Device: No Can Map Host Memory: Yes Compute Mode: default Concurrent Kernels: Yes ECC Enabled: No Memory Clock Rate: 4004 MHz Memory Bus Width: 256 bits L2 Cache Size: 2097152 bytes Max Threads Per SMP: 2048 Async Engines: 2 // 有两个异步引擎,支持两个命令队列并行 Unified Addressing: Yes Managed Memory: Yes Concurrent Managed Memory: No PGI Compiler Option: -ta=tesla:cc60