This article was first published on Java大数据与数据仓库: Several Ways to Compute PV and UV in Real Time with Flink

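Real-time PV and UV counting is about as common as big-data statistics requirements get. An earlier article covered a Spark Streaming example of real-time PV/UV counting; this one does the same job with Flink.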

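We need to compute the daily PV and UV for each data type, subject to the following requirements:

- The latest result must be output every second;

- The job runs forever, so stale data held in memory must be cleaned up periodically;

- The time field in incoming messages is not strictly increasing, so some tolerance for out-of-order data is needed;

- UV does not necessarily change every second, and re-emitting identical results wastes a lot of IO, so a result should be emitted within one second of a change and not at all when nothing has changed.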

Types and operations on Flink data streams

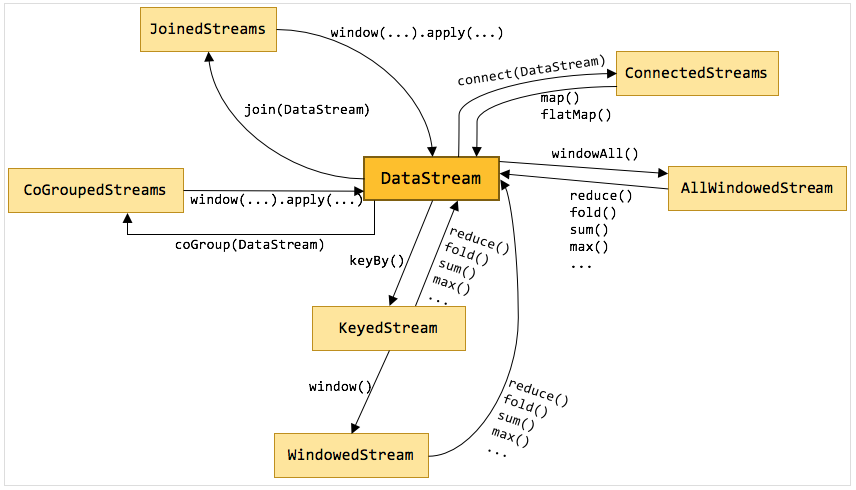

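DataStream is the core data structure of Flink stream processing. Every other stream type can be converted to and from it, directly or indirectly. The figure shows how some commonly used stream types convert into one another.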

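As the figure shows, DataStream and KeyedStream convert to each other, a KeyedStream can become a WindowedStream, a DataStream cannot become a WindowedStream directly, and a WindowedStream converts directly back to a DataStream. Not every pair of stream types has a direct conversion, but any conversion can be done by going through DataStream first.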
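
The conversions described above can be sketched in a few lines. This is a minimal illustration rather than part of the PV/UV job: the object name StreamConversions, the sample elements and the 5-second window are made up, and it assumes the Flink 1.7 Scala streaming API from the pom in this article is on the classpath.

```scala
import org.apache.flink.streaming.api.scala._
import org.apache.flink.streaming.api.windowing.time.Time
import org.apache.flink.streaming.api.windowing.windows.TimeWindow

// Hypothetical demo object; only the type of each intermediate stream matters here.
object StreamConversions {
  def main(args: Array[String]): Unit = {
    val env = StreamExecutionEnvironment.getExecutionEnvironment

    // DataStream: the starting point
    val events: DataStream[(String, Int)] =
      env.fromElements(("a", 1), ("b", 1), ("a", 1))

    // DataStream -> KeyedStream via keyBy
    val keyed: KeyedStream[(String, Int), String] = events.keyBy(_._1)

    // KeyedStream -> WindowedStream via timeWindow
    val windowed: WindowedStream[(String, Int), String, TimeWindow] =
      keyed.timeWindow(Time.seconds(5))

    // WindowedStream -> DataStream via an aggregation such as reduce
    val counts: DataStream[(String, Int)] =
      windowed.reduce((a, b) => (a._1, a._2 + b._2))

    counts.print()
    env.execute("StreamConversions")
  }
}
```

Because the source here is finite, the job may finish before the window fires, so the type annotations are the useful part of the sketch, not its output.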

This PV/UV job mainly uses the DataStream, KeyedStream and WindowedStream types.

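It also needs windows and watermarks. The window splits the data by day. The watermark (the "water level") marks how far event time has progressed, so windows that have fallen behind it can be cleaned up periodically and late data outside them discarded, which is what frees memory.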
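
To make the interplay of windows and watermarks concrete, here is a minimal plain-Scala sketch; it is not Flink code. It assumes one-day tumbling windows aligned to the epoch and a watermark that trails the largest timestamp seen so far by a fixed bound, which is how BoundedOutOfOrdernessTimestampExtractor behaves. The names WatermarkSketch, windowStart, watermark and canDrop, and the one-minute bound, are made up for illustration.

```scala
object WatermarkSketch {
  val DayMs: Long = 24L * 60 * 60 * 1000

  // Start of the one-day tumbling window that contains ts.
  // Windows are aligned to the epoch (UTC); assumes ts >= 0.
  def windowStart(ts: Long): Long = ts - (ts % DayMs)

  // A bounded-out-of-orderness watermark trails the largest timestamp seen so far.
  def watermark(maxSeenTs: Long, maxOutOfOrderness: Long): Long =
    maxSeenTs - maxOutOfOrderness

  // A window [start, end) is complete once the watermark reaches its last millisecond.
  def canDrop(windowEnd: Long, wm: Long): Boolean = wm >= windowEnd - 1

  def main(args: Array[String]): Unit = {
    val maxOutOfOrderness = 60 * 1000L // hypothetical: tolerate one minute of disorder
    val day0End = DayMs                // day 0 is the window [0, DayMs)

    // Newest event is 30 s into day 1: the watermark is still inside day 0.
    println(canDrop(day0End, watermark(DayMs + 30 * 1000L, maxOutOfOrderness)))  // false

    // Newest event is 2 min into day 1: the watermark has passed the end of day 0.
    println(canDrop(day0End, watermark(DayMs + 120 * 1000L, maxOutOfOrderness))) // true
  }
}
```

With the one-day bound used by the job later in this article (86400 * 1000L), a day's window is only released after events from a full day later have arrived. Epoch-aligned day windows also start at UTC midnight; for local days in UTC+8 the Flink documentation suggests a window assigner with an offset, such as TumblingEventTimeWindows.of(Time.days(1), Time.hours(-8)).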

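Business code

Our data is JSON. For real-time PV/UV only three of its fields matter: date, helperversion and guid; the rest can be dropped. In the offline data warehouse, of course, every meaningful business field is kept in Hive.

Other related concepts are left for a dedicated article; let's go straight to the code, starting with the Maven pom.xml.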

<?xml version="1.0" encoding="UTF-8"?>

<project xmlns="http://maven.apache.org/POM/4.0.0"

xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"

xsi:schemaLocation="http://maven.apache.org/POM/4.0.0 http://maven.apache.org/xsd/maven-4.0.0.xsd">

<modelVersion>4.0.0</modelVersion>

<groupId>com.ddxygq</groupId>

<artifactId>bigdata</artifactId>

<version>1.0-SNAPSHOT</version>

<properties>

<scala.version>2.11.8</scala.version>

<flink.version>1.7.0</flink.version>

<pkg.name>bigdata</pkg.name>

</properties>

<dependencies>

<dependency>

<groupId>org.apache.flink</groupId>

<artifactId>flink-scala_2.11</artifactId>

<version>${flink.version}</version>

</dependency>

<dependency>

<groupId>org.apache.flink</groupId>

<artifactId>flink-streaming-scala_2.11</artifactId>

<version>${flink.version}</version>

</dependency>

<dependency>

<groupId>org.apache.flink</groupId>

<artifactId>flink-streaming-java_2.11</artifactId>

<version>${flink.version}</version>

</dependency>

<!-- Kafka 0.10 connector; it provides the FlinkKafkaConsumer010 class used in the code -->

<dependency>

<groupId>org.apache.flink</groupId>

<artifactId>flink-connector-kafka-0.10_2.11</artifactId>

<version>${flink.version}</version>

</dependency>

</dependencies>

<build>

<!-- Test sources and resources -->

<!--<testSourceDirectory>${basedir}/src/test</testSourceDirectory>-->

<finalName>${pkg.name}</finalName>

<sourceDirectory>src/main/java</sourceDirectory>

<resources>

<resource>

<directory>src/main/resources</directory>

<includes>

<include>*.properties</include>

<include>*.xml</include>

</includes>

<filtering>false</filtering>

</resource>

</resources>

<plugins>

<!-- Plugin configuration that skips the tests -->

<plugin>

<groupId>org.apache.maven.plugins</groupId>

<artifactId>maven-surefire-plugin</artifactId>

<configuration>

<skip>true</skip>

</configuration>

</plugin>

<!-- Plugin that compiles the Scala sources -->

<plugin>

<groupId>org.scala-tools</groupId>

<artifactId>maven-scala-plugin</artifactId>

<version>2.15.2</version>

<executions>

<execution>

<goals>

<goal>compile</goal>

<goal>testCompile</goal>

</goals>

</execution>

</executions>

</plugin>

</plugins>

</build>

</project>

The main code, written mostly in Scala:

package com.ddxygq.bigdata.flink.streaming.pvuv

import java.util.Properties

import com.alibaba.fastjson.JSON

import org.apache.flink.runtime.state.filesystem.FsStateBackend

import org.apache.flink.streaming.api.CheckpointingMode

import org.apache.flink.streaming.api.functions.timestamps.BoundedOutOfOrdernessTimestampExtractor

import org.apache.flink.streaming.api.scala.{DataStream, StreamExecutionEnvironment}

import org.apache.flink.streaming.api.windowing.time.Time

import org.apache.flink.streaming.api.windowing.triggers.ContinuousProcessingTimeTrigger

import org.apache.flink.streaming.connectors.kafka.FlinkKafkaConsumer010

import org.apache.flink.streaming.util.serialization.SimpleStringSchema

import org.apache.flink.streaming.api.scala.extensions._

import org.apache.flink.api.scala._

/**

* @ Author: keguang

* @ Date: 2019/3/18 17:34

* @ version: v1.0.0

* @ description:

*/

object PvUvCount {

def main(args: Array[String]): Unit = {

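val env = StreamExecutionEnvironment.getExecutionEnvironment

// Use event time so that the windows follow the timestamps assigned below (Flink 1.7 defaults to processing time)

env.setStreamTimeCharacteristic(org.apache.flink.streaming.api.TimeCharacteristic.EventTime)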

// Fault tolerance: checkpoint every 5 seconds

env.enableCheckpointing(5000)

env.getCheckpointConfig.setCheckpointingMode(CheckpointingMode.EXACTLY_ONCE)

env.setStateBackend(new FsStateBackend("file:///D:/space/IJ/bigdata/src/main/scala/com/ddxygq/bigdata/flink/checkpoint/flink/tagApp"))

// Kafka configuration

val ZOOKEEPER_HOST = "hadoop01:2181,hadoop02:2181,hadoop03:2181"

val KAFKA_BROKERS = "hadoop01:9092,hadoop02:9092,hadoop03:9092"

val TRANSACTION_GROUP = "flink-count"

val TOPIC_NAME = "flink"

val kafkaProps = new Properties()

kafkaProps.setProperty("zookeeper.connect", ZOOKEEPER_HOST)

kafkaProps.setProperty("bootstrap.servers", KAFKA_BROKERS)

kafkaProps.setProperty("group.id", TRANSACTION_GROUP)

// Watermark: maximum out-of-orderness allowed for the data (one day, in milliseconds)

val MaxOutOfOrderness = 86400 * 1000L

// Consume the data from Kafka

val streamData: DataStream[(String, String, String)] = env.addSource(

new FlinkKafkaConsumer010[String](TOPIC_NAME, new SimpleStringSchema(), kafkaProps)

).assignTimestampsAndWatermarks(new BoundedOutOfOrdernessTimestampExtractor[String](Time.milliseconds(MaxOutOfOrderness)) {

override def extractTimestamp(element: String): Long = {

val t = JSON.parseObject(element)

val time = JSON.parseObject(JSON.parseObject(t.getString("message")).getString("decrypted_data")).getString("time")

time.toLong

}

}).map(x => {

var date = "error"

var guid = "error"

var helperversion = "error"

try {

val messageJsonObject = JSON.parseObject(JSON.parseObject(x).getString("message"))

val datetime = messageJsonObject.getString("time")

date = datetime.split(" ")(0)

// hour = datetime.split(" ")(1).substring(0, 2)

val decrypted_data_string = messageJsonObject.getString("decrypted_data")

if (!"".equals(decrypted_data_string)) {

val decrypted_data = JSON.parseObject(decrypted_data_string)

guid = decrypted_data.getString("guid").trim

helperversion = decrypted_data.getString("helperversion")

}

} catch {

case e: Exception => {

println(e)

}

}

(date, helperversion, guid)

})

// Everything above assigns the watermark and parses the JSON

// Aggregate the data in each window; the applyWith method and the OnWindowedStream class are worth a closer look

val resultStream = streamData.keyBy(x => {

x._1 + x._2

}).timeWindow(Time.days(1))

.trigger(ContinuousProcessingTimeTrigger.of(Time.seconds(1)))

.applyWith(("", List.empty[Int], Set.empty[Int], 0L, 0L))(

foldFunction = {

case ((_, list, set, _, 0), item) => {

val date = item._1

val helperversion = item._2

val guid = item._3

(date + "_" + helperversion, guid.hashCode +: list, set + guid.hashCode, 0L, 0L)

}

}

, windowFunction = {

case (key, window, result) => {

result.map {

case (leixing, list, set, _, _) => {

(leixing, list.size, set.size, window.getStart, window.getEnd)

}

}

}

}

).keyBy(0)

.flatMapWithState[(String, Int, Int, Long, Long),(Int, Int)]{

case ((key, numpv, numuv, begin, end), curr) =>

curr match {

case Some(numCurr) if numCurr == (numuv, numpv) =>

(Seq.empty, Some((numuv, numpv))) // same values as the last emitted result: emit nothing

case _ =>

(Seq((key, numpv, numuv, begin, end)), Some((numuv, numpv)))

}

}

// Final result

val resultedStream = resultStream.map(x => {

val keys = x._1.split("_")

val date = keys(0)

val helperversion = keys(1)

(date, helperversion, x._2, x._3)

})

resultedStream.print()

env.execute("PvUvCount")

}

}

The size of the List holds the PV and the size of the Set holds the UV, which gives us real-time PV and UV.
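
The accumulator used in the fold can be tried out without Flink. The following is a minimal plain-Scala sketch of the same idea, assuming made-up guid values; the names ListSetFold, add and pvUv are hypothetical. Like the job above, it stores guid.hashCode rather than the guid itself.

```scala
object ListSetFold {
  // (one entry per page view, one entry per distinct guid hash)
  type Acc = (List[Int], Set[Int])

  // Same update as the foldFunction above: prepend to the list, add to the set.
  def add(acc: Acc, guid: String): Acc = {
    val h = guid.hashCode
    (h +: acc._1, acc._2 + h)
  }

  // PV is the list size, UV is the set size.
  def pvUv(guids: Seq[String]): (Int, Int) = {
    val (list, set) = guids.foldLeft((List.empty[Int], Set.empty[Int]))(add)
    (list.size, set.size)
  }

  def main(args: Array[String]): Unit = {
    println(pvUv(Seq("u1", "u2", "u1"))) // (3,2)
    // "Aa" and "BB" have the same hashCode (2112), so two users count as one.
    println(pvUv(Seq("Aa", "BB")))       // (2,1)
  }
}
```

The second call shows a side effect of keeping hash codes instead of the guids: distinct guids whose hash codes collide are counted once, so UV can be slightly underestimated.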

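A few key functions are used here:

applyWith: takes an initial state, a foldFunction that updates the state for every record, and a windowFunction that turns the state into the window's output;

flatMapWithState: keeps the last emitted (uv, pv) pair per key and emits a record only when the new values differ from it.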
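
The "emit only on change" logic passed to flatMapWithState in the code above can be sketched in plain Scala. This is a simplified model: it tracks a single key with a local variable, whereas Flink keeps one state per key, and the names EmitOnChange, step and run as well as the sample values are made up.

```scala
object EmitOnChange {
  type Result = (String, Int, Int) // (key, pv, uv)

  // Same shape as the function given to flatMapWithState:
  // (input, previous state) => (records to emit, new state)
  def step(in: Result, last: Option[(Int, Int)]): (Seq[Result], Option[(Int, Int)]) = {
    val current = (in._3, in._2) // (uv, pv)
    last match {
      case Some(prev) if prev == current => (Seq.empty, last)       // unchanged: emit nothing
      case _                             => (Seq(in), Some(current)) // changed: emit and remember
    }
  }

  // Feed a sequence of window firings for one key through step.
  def run(inputs: Seq[Result]): Seq[Result] = {
    var state: Option[(Int, Int)] = None
    inputs.flatMap { in =>
      val (out, next) = step(in, state)
      state = next
      out
    }
  }

  def main(args: Array[String]): Unit = {
    val firings = Seq(("k", 1, 1), ("k", 1, 1), ("k", 2, 1), ("k", 2, 1), ("k", 3, 2))
    run(firings).foreach(println)
    // (k,1,1)
    // (k,2,1)
    // (k,3,2)
  }
}
```

The trigger still fires every second, but repeated firings with identical counts are swallowed here, which is what keeps the output quiet when nothing has changed.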

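Open issues

Clearly, with a lot of data the List and the Set become very large. PV, at least, does not need a List: a state variable that is incremented on every record, that is, a plain state update, would do.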
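
The difference is easy to see in a plain-Scala sketch, under the assumption that only the count of page views is needed and not the views themselves. The names CounterFold and Acc are hypothetical, and a plain Long stands in for whatever counter type the job uses.

```scala
object CounterFold {
  // PV is a running count; UV is still a set of guid hashes.
  final case class Acc(pv: Long = 0L, guidHashes: Set[Int] = Set.empty)

  // Constant extra memory per page view: only the counter changes for a repeat visitor.
  def add(acc: Acc, guid: String): Acc =
    Acc(acc.pv + 1, acc.guidHashes + guid.hashCode)

  def main(args: Array[String]): Unit = {
    val acc = Seq("u1", "u2", "u1", "u1").foldLeft(Acc())(add)
    println((acc.pv, acc.guidHashes.size)) // (4,2)
  }
}
```

This only removes the List. The Set still grows with the number of distinct users, so the UV side of the memory problem remains.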

Improved version

This version uses a counter to store the PV value.

package com.ddxygq.bigdata.flink.streaming.pvuv

import java.util.Properties

import com.alibaba.fastjson.JSON

import org.apache.flink.api.common.accumulators.IntCounter

import org.apache.flink.runtime.state.filesystem.FsStateBackend

import org.apache.flink.streaming.api.CheckpointingMode

import org.apache.flink.streaming.api.functions.timestamps.BoundedOutOfOrdernessTimestampExtractor

import org.apache.flink.streaming.api.scala.{DataStream, StreamExecutionEnvironment}

import org.apache.flink.streaming.api.windowing.time.Time

import org.apache.flink.streaming.api.windowing.triggers.ContinuousProcessingTimeTrigger

import org.apache.flink.streaming.connectors.kafka.FlinkKafkaConsumer010

import org.apache.flink.streaming.util.serialization.SimpleStringSchema

import org.apache.flink.streaming.api.scala.extensions._

import org.apache.flink.api.scala._

import org.apache.flink.core.fs.FileSystem

object PvUv2 {

def main(args: Array[String]): Unit = {

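val env = StreamExecutionEnvironment.getExecutionEnvironment

// Use event time so that the windows follow the timestamps assigned below (Flink 1.7 defaults to processing time)

env.setStreamTimeCharacteristic(org.apache.flink.streaming.api.TimeCharacteristic.EventTime)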

// Fault tolerance: checkpoint every 5 seconds

env.enableCheckpointing(5000)

env.getCheckpointConfig.setCheckpointingMode(CheckpointingMode.EXACTLY_ONCE)

env.setStateBackend(new FsStateBackend("file:///D:/space/IJ/bigdata/src/main/scala/com/ddxygq/bigdata/flink/checkpoint/streaming/counter"))

// Kafka configuration

val ZOOKEEPER_HOST = "hadoop01:2181,hadoop02:2181,hadoop03:2181"

val KAFKA_BROKERS = "hadoop01:9092,hadoop02:9092,hadoop03:9092"

val TRANSACTION_GROUP = "flink-count"

val TOPIC_NAME = "flink"

val kafkaProps = new Properties()

kafkaProps.setProperty("zookeeper.connect", ZOOKEEPER_HOST)

kafkaProps.setProperty("bootstrap.servers", KAFKA_BROKERS)

kafkaProps.setProperty("group.id", TRANSACTION_GROUP)

// Watermark: maximum out-of-orderness allowed for the data (one day, in milliseconds)

val MaxOutOfOrderness = 86400 * 1000L

val streamData: DataStream[(String, String, String)] = env.addSource(

new FlinkKafkaConsumer010[String](TOPIC_NAME, new SimpleStringSchema(), kafkaProps)

).assignTimestampsAndWatermarks(new BoundedOutOfOrdernessTimestampExtractor[String](Time.milliseconds(MaxOutOfOrderness)) {

override def extractTimestamp(element: String): Long = {

val t = JSON.parseObject(element)

val time = JSON.parseObject(JSON.parseObject(t.getString("message")).getString("decrypted_data")).getString("time")

time.toLong

}

}).map(x => {

var date = "error"

var guid = "error"

var helperversion = "error"

try {

val messageJsonObject = JSON.parseObject(JSON.parseObject(x).getString("message"))

val datetime = messageJsonObject.getString("time")

date = datetime.split(" ")(0)

// hour = datetime.split(" ")(1).substring(0, 2)

val decrypted_data_string = messageJsonObject.getString("decrypted_data")

if (!"".equals(decrypted_data_string)) {

val decrypted_data = JSON.parseObject(decrypted_data_string)

guid = decrypted_data.getString("guid").trim

helperversion = decrypted_data.getString("helperversion")

}

} catch {

case e: Exception => {

println(e)

}

}

(date, helperversion, guid)

})

val resultStream = streamData.keyBy(x => {

x._1 + x._2

}).timeWindow(Time.days(1))

.trigger(ContinuousProcessingTimeTrigger.of(Time.seconds(1)))

.applyWith(("", new IntCounter(), Set.empty[Int], 0L, 0L))(

foldFunction = {

case ((_, cou, set, _, 0), item) => {

val date = item._1

val helperversion = item._2

val guid = item._3

cou.add(1)

(date + "_" + helperversion, cou, set + guid.hashCode, 0L, 0L)

}

}

, windowFunction = {

case (key, window, result) => {

result.map {

case (leixing, cou, set, _, _) => {

(leixing, cou.getLocalValue, set.size, window.getStart, window.getEnd)

}

}

}

}

).keyBy(0)

.flatMapWithState[(String, Int, Int, Long, Long),(Int, Int)]{

case ((key, numpv, numuv, begin, end), curr) =>

curr match {

case Some(numCurr) if numCurr == (numuv, numpv) =>

(Seq.empty, Some((numuv, numpv))) // same values as the last emitted result: emit nothing

case _ =>

(Seq((key, numpv, numuv, begin, end)), Some((numuv, numpv)))

}

}

// Final result

val resultedStream = resultStream.map(x => {

val keys = x._1.split("_")

val date = keys(0)

val helperversion = keys(1)

(date, helperversion, x._2, x._3)

})

val resultPath = "D:\\space\\IJ\\bigdata\\src\\main\\scala\\com\\ddxygq\\bigdata\\flink\\streaming\\pvuv\\result"

resultedStream.writeAsText(resultPath, FileSystem.WriteMode.OVERWRITE)

env.execute("PvUvCount")

}

}
