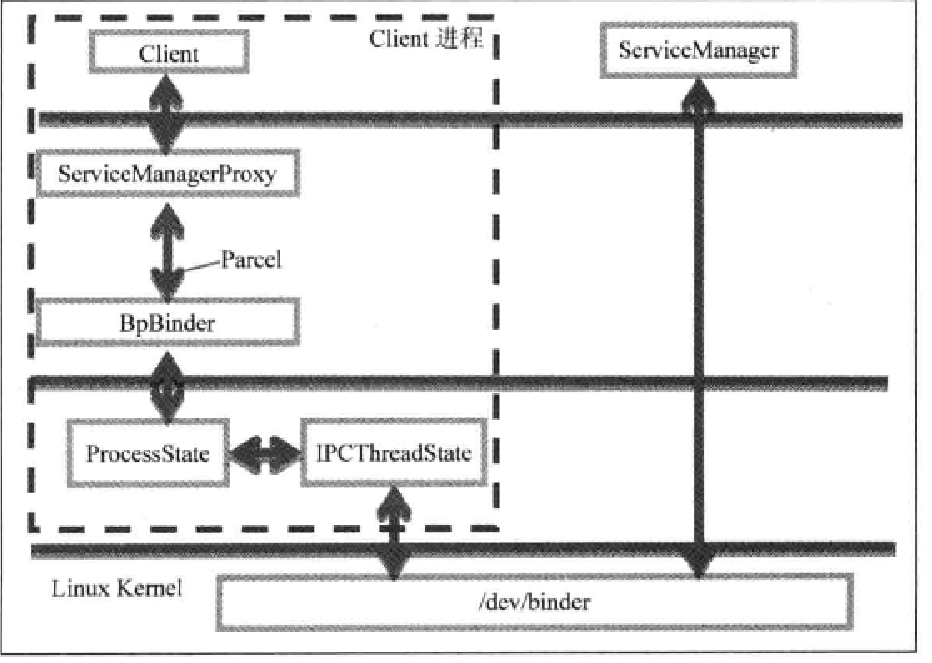

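The Binder mechanism consists of four parts: the Binder driver, the Client, the ServiceManager, and the Service.

1. Binder is essentially a driver built on the Linux kernel; its purpose is to share memory between processes.

Because the Binder driver sits on the kernel side, and the Binder mechanism as a whole is a very large topic, we skip the driver for now.

2. ServiceManager

Service Manager, as the name suggests, is a "housekeeper". More precisely, it is the manager of all system services.

We start with service_manager.c, located at frameworks/native/cmds/servicemanager/service_manager.c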

    static struct {
        unsigned uid;
        const char *name;
    } allowed[] = {
        { AID_MEDIA, "media.audio_flinger" },
        { AID_MEDIA, "media.log" },
        { AID_MEDIA, "media.player" },
        { AID_MEDIA, "media.camera" },
        { AID_MEDIA, "media.audio_policy" },
        { AID_DRM, "drm.drmManager" },
        { AID_NFC, "nfc" },
        { AID_BLUETOOTH, "bluetooth" },
        { AID_RADIO, "radio.phone" },
        { AID_RADIO, "radio.sms" },
        { AID_RADIO, "radio.phonesubinfo" },
        { AID_RADIO, "radio.simphonebook" },
        /* TODO: remove after phone services are updated: */
        { AID_RADIO, "phone" },
        { AID_RADIO, "sip" },
        { AID_RADIO, "isms" },
        { AID_RADIO, "iphonesubinfo" },
        { AID_RADIO, "simphonebook" },
        { AID_MEDIA, "common_time.clock" },
        { AID_MEDIA, "common_time.config" },
        { AID_KEYSTORE, "android.security.keystore" },
    };

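The table above lists some of the system services, each paired with the UID that is allowed to register it. All of these services are registered with, and managed by, servicemanager.

So what does Service Manager do?

I. It hands out IBinder objects, i.e. references to the individual services, for every process to use. Within a given process, the IBinder object for a service is unique.

II. It lets each system service register itself with servicemanager.

The Binder driver here is not a driver in the usual operating-system sense; think of it as the medium through which the client and ServiceManager communicate.

At its core, the Binder driver is about sharing memory.

This is really the front half of the whole Binder mechanism, from the client to servicemanager: the client obtains an IBinder object and can then "operate on the service" directly.

For example: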

    AlarmManager alarmManager = (AlarmManager) context.getSystemService(Context.ALARM_SERVICE);
    alarmManager.setExact(AlarmManager.ELAPSED_REALTIME, elapsedRealtime, pendingIntent);

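Here we obtain the Binder object of the alarm service and then use the "service" it provides.

And this call is synchronous!

It feels just like operating on something inside the same process.

Next, let's see how Service Manager actually achieves the points above.

2.1 Starting Service Manager:

Since SM is the administrator, it should be the most diligent component, which means it has to start "earliest".

Indeed, its startup is defined in init.rc: system/core/rootdir/init.rc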

    # adbd on at boot in emulator
    on property:ro.kernel.qemu=1
        start adbd

    service servicemanager /system/bin/servicemanager
        class core
        user system
        group system
        critical
        onrestart restart healthd
        onrestart restart zygote
        onrestart restart media
        onrestart restart surfaceflinger
        onrestart restart drm

What does Service Manager do once it has started?

Again in service_manager.c:

    int main(int argc, char **argv)
    {
        struct binder_state *bs;
        void *svcmgr = BINDER_SERVICE_MANAGER;
        bs = binder_open(128*1024);
        if (binder_become_context_manager(bs)) {
            ALOGE("cannot become context manager (%s)\n", strerror(errno));
            return -1;
        }
        svcmgr_handle = svcmgr;
        binder_loop(bs, svcmgr_handler);
        return 0;
    }

binder_open opens the Binder driver and allocates a 128 KB buffer.

binder_become_context_manager(bs):

    int binder_become_context_manager(struct binder_state *bs)
    {
        return ioctl(bs->fd, BINDER_SET_CONTEXT_MGR, 0);
    }

This registers the process as the chief steward of all services (the context manager).

    void binder_loop(struct binder_state *bs, binder_handler func)
    {
        int res;
        struct binder_write_read bwr;
        unsigned readbuf[32];
        bwr.write_size = 0;
        bwr.write_consumed = 0;
        bwr.write_buffer = 0;
        readbuf[0] = BC_ENTER_LOOPER;
        binder_write(bs, readbuf, sizeof(unsigned));
        for (;;) {
            bwr.read_size = sizeof(readbuf);
            bwr.read_consumed = 0;
            bwr.read_buffer = (unsigned) readbuf;
            res = ioctl(bs->fd, BINDER_WRITE_READ, &bwr);
            if (res < 0) {
                ALOGE("binder_loop: ioctl failed (%s)\n", strerror(errno));
                break;
            }
            res = binder_parse(bs, 0, readbuf, bwr.read_consumed, func);
            if (res == 0) {
                ALOGE("binder_loop: unexpected reply?!\n");
                break;
            }
            if (res < 0) {
                ALOGE("binder_loop: io error %d %s\n", res, strerror(errno));
                break;
            }
        }
    }

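It then enters the loop, which closely resembles the Android thread message-driven mechanism analyzed earlier.

It reads the message queue and parses the messages until an error occurs.

Next, let's look at binder_parse: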

    int binder_parse(struct binder_state *bs, struct binder_io *bio,
                     uint32_t *ptr, uint32_t size, binder_handler func)
    {
        int r = 1;
        uint32_t *end = ptr + (size / 4);
        while (ptr < end) {
            uint32_t cmd = *ptr++;
    #if TRACE
            fprintf(stderr,"%s:\n", cmd_name(cmd));
    #endif
            switch(cmd) {
            case BR_NOOP:
                break;
            case BR_TRANSACTION_COMPLETE:
                break;
            case BR_INCREFS:
            case BR_ACQUIRE:
            case BR_RELEASE:
            case BR_DECREFS:
    #if TRACE
                fprintf(stderr," %08x %08x\n", ptr[0], ptr[1]);
    #endif
                ptr += 2;
                break;
            case BR_TRANSACTION: {
                struct binder_txn *txn = (void *) ptr;
                if ((end - ptr) * sizeof(uint32_t) < sizeof(struct binder_txn)) {
                    ALOGE("parse: txn too small!\n");
                    return -1;
                }
                binder_dump_txn(txn);
                if (func) {
                    unsigned rdata[256/4];
                    struct binder_io msg;
                    struct binder_io reply;
                    int res;
                    bio_init(&reply, rdata, sizeof(rdata), 4);
                    bio_init_from_txn(&msg, txn);
                    res = func(bs, txn, &msg, &reply);
                    binder_send_reply(bs, &reply, txn->data, res);
                }
                ptr += sizeof(*txn) / sizeof(uint32_t);
                break;
            }
            case BR_REPLY: {
                struct binder_txn *txn = (void*) ptr;
                if ((end - ptr) * sizeof(uint32_t) < sizeof(struct binder_txn)) {
                    ALOGE("parse: reply too small!\n");
                    return -1;
                }
                binder_dump_txn(txn);
                if (bio) {
                    bio_init_from_txn(bio, txn);
                    bio = 0;
                } else {
                    /* todo FREE BUFFER */
                }
                ptr += (sizeof(*txn) / sizeof(uint32_t));
                r = 0;
                break;
            }
            case BR_DEAD_BINDER: {
                struct binder_death *death = (void*) *ptr++;
                death->func(bs, death->ptr);
                break;
            }
            case BR_FAILED_REPLY:
                r = -1;
                break;
            case BR_DEAD_REPLY:
                r = -1;
                break;
            default:
                ALOGE("parse: OOPS %d\n", cmd);
                return -1;
            }
        }
        return r;
    }
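The way binder_parse walks its buffer can be tried out in isolation. The sketch below is a simplified model, not the real protocol: the command codes `CMD_NOOP`, `CMD_REFS`, `CMD_TXN`, the `fake_txn` struct and the payload sizes are hypothetical stand-ins for the BR_* constants and `struct binder_txn`. It only illustrates the idea of reading a 32-bit command word and then skipping or consuming a payload whose size depends on that command.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Hypothetical command codes standing in for the BR_* constants. */
enum { CMD_NOOP = 1, CMD_REFS = 2, CMD_TXN = 3 };

/* Hypothetical fixed-size transaction payload (two 32-bit words). */
struct fake_txn { uint32_t code; uint32_t arg; };

typedef void (*txn_handler)(const struct fake_txn *txn, void *ctx);

/*
 * Walk a buffer of 32-bit words. Each record is a command word followed
 * by a command-specific payload. Returns the number of transactions seen,
 * or -1 on a truncated or unknown record.
 */
int parse_commands(const uint32_t *ptr, size_t size_bytes,
                   txn_handler func, void *ctx)
{
    assert(ptr != NULL || size_bytes == 0);
    const uint32_t *end = ptr + size_bytes / sizeof(uint32_t);
    int handled = 0;

    while (ptr < end) {
        uint32_t cmd = *ptr++;
        switch (cmd) {
        case CMD_NOOP:
            break;                          /* no payload */
        case CMD_REFS:
            if ((size_t)(end - ptr) < 2)
                return -1;                  /* truncated record */
            ptr += 2;                       /* skip two payload words */
            break;
        case CMD_TXN: {
            size_t words = sizeof(struct fake_txn) / sizeof(uint32_t);
            if ((size_t)(end - ptr) < words)
                return -1;                  /* truncated record */
            struct fake_txn txn = { ptr[0], ptr[1] };
            if (func)
                func(&txn, ctx);            /* hand off to the callback */
            ptr += words;
            handled++;
            break;
        }
        default:
            return -1;                      /* unknown command */
        }
    }
    return handled;
}

/* Example callback: adds up the arg field of every transaction. */
void sum_args(const struct fake_txn *txn, void *ctx)
{
    *(uint32_t *)ctx += txn->arg;
}
```

As in binder_parse, the bounds check comes before the payload is touched, so a short buffer is rejected instead of being read past its end.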

The key cases to analyze are BR_TRANSACTION and BR_REPLY.

BR_TRANSACTION performs some initialization and then calls

res = func(bs, txn, &msg, &reply);

binder_send_reply(bs, &reply, txn->data, res);

func is the function passed in from service_manager.c:

int svcmgr_handler(struct binder_state *bs, struct binder_txn *txn, struct binder_io *msg, struct binder_io *reply)

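So the function that binder_loop ultimately uses to process each transaction is the one passed in by the caller!

At this point the overall flow of service_manager is clear.

Event-driven mechanism:

1. Read messages from the Binder driver.

2. Process the messages.

3. Stay in the looper, which never exits on its own until a fatal error occurs.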
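The loop-plus-callback shape described above can be sketched without the Binder driver. In this minimal model, `read_one` is a hypothetical stand-in for the blocking `ioctl(bs->fd, BINDER_WRITE_READ, &bwr)` call, and the `fake_driver` struct and integer messages are invented for illustration. Only the control flow (read, dispatch to the handler that was passed in, leave only on error) mirrors the description.

```c
#include <assert.h>
#include <stddef.h>

/* Hypothetical message source standing in for the Binder driver. */
struct fake_driver {
    const int *msgs;   /* messages waiting to be delivered */
    size_t count;      /* total number of messages */
    size_t next;       /* index of the next message to deliver */
};

typedef int (*msg_handler)(int msg, void *ctx);

/* Stand-in for the blocking read: 1 on success, -1 once the source is drained. */
static int read_one(struct fake_driver *drv, int *out)
{
    if (drv->next >= drv->count)
        return -1;
    *out = drv->msgs[drv->next++];
    return 1;
}

/*
 * Read a message, hand it to the caller-supplied handler, repeat.
 * The loop has no normal exit: it stops only when the read fails or
 * the handler reports an error. Returns the number of messages handled.
 */
int event_loop(struct fake_driver *drv, msg_handler func, void *ctx)
{
    assert(drv != NULL && func != NULL);
    int handled = 0;
    for (;;) {
        int msg;
        if (read_one(drv, &msg) < 0)
            break;                  /* read error */
        if (func(msg, ctx) < 0)
            break;                  /* handler error */
        handled++;
    }
    return handled;
}

/* Example handler: accumulates messages and rejects negative ones. */
int add_msg(int msg, void *ctx)
{
    if (msg < 0)
        return -1;
    *(int *)ctx += msg;
    return 0;
}
```

Passing the handler as a function pointer keeps the loop generic, which is the same reason binder_loop takes a `binder_handler` argument instead of calling svcmgr_handler directly.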

    int svcmgr_handler(struct binder_state *bs, struct binder_txn *txn,
                       struct binder_io *msg, struct binder_io *reply)
    {
        struct svcinfo *si;
        uint16_t *s;
        unsigned len;
        void *ptr;
        uint32_t strict_policy;
        int allow_isolated;
        // ALOGI("target=%p code=%d pid=%d uid=%d\n",
        //       txn->target, txn->code, txn->sender_pid, txn->sender_euid);
        if (txn->target != svcmgr_handle)
            return -1;
        // Equivalent to Parcel::enforceInterface(), reading the RPC
        // header with the strict mode policy mask and the interface name.
        // Note that we ignore the strict_policy and don't propagate it
        // further (since we do no outbound RPCs anyway).
        strict_policy = bio_get_uint32(msg);
        s = bio_get_string16(msg, &len);
        if ((len != (sizeof(svcmgr_id) / 2)) ||
            memcmp(svcmgr_id, s, sizeof(svcmgr_id))) {
            fprintf(stderr,"invalid id %s\n", str8(s));
            return -1;
        }
        switch(txn->code) {
        case SVC_MGR_GET_SERVICE:
        case SVC_MGR_CHECK_SERVICE:
            s = bio_get_string16(msg, &len);
            ptr = do_find_service(bs, s, len, txn->sender_euid);
            if (!ptr)
                break;
            bio_put_ref(reply, ptr);
            return 0;
        case SVC_MGR_ADD_SERVICE:
            s = bio_get_string16(msg, &len);
            ptr = bio_get_ref(msg);
            allow_isolated = bio_get_uint32(msg) ? 1 : 0;
            if (do_add_service(bs, s, len, ptr, txn->sender_euid, allow_isolated))
                return -1;
            break;
        case SVC_MGR_LIST_SERVICES: {
            unsigned n = bio_get_uint32(msg);
            si = svclist;
            while ((n-- > 0) && si)
                si = si->next;
            if (si) {
                bio_put_string16(reply, si->name);
                return 0;
            }
            return -1;
        }
        default:
            ALOGE("unknown code %d\n", txn->code);
            return -1;
        }
        bio_put_uint32(reply, 0);
        return 0;
    }

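The switch statement either looks up and returns a service, or registers one.

A lookup searches svclist for a service with the same name.

svclist is a linked list, just like a thread's message queue!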

    struct svcinfo *find_svc(uint16_t *s16, unsigned len)
    {
        struct svcinfo *si;
        for (si = svclist; si; si = si->next) {
            if ((len == si->len) &&
                !memcmp(s16, si->name, len * sizeof(uint16_t))) {
                return si;
            }
        }
        return 0;
    }

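Next, let's look at void *do_find_service(struct binder_state *bs, uint16_t *s, unsigned len, unsigned uid)

What exactly does it return? Judging from svcmgr_handler, the returned pointer is written into the reply with bio_put_ref, so it is the reference to the service that the client receives.

Registering a service: SVC_MGR_ADD_SERVICE: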

    int do_add_service(struct binder_state *bs, uint16_t *s, unsigned len,
                       void *ptr, unsigned uid, int allow_isolated)
    {
        struct svcinfo *si;
        //ALOGI("add_service('%s',%p,%s) uid=%d\n", str8(s), ptr,
        //      allow_isolated ? "allow_isolated" : "!allow_isolated", uid);
        if (!ptr || (len == 0) || (len > 127))
            return -1;
        if (!svc_can_register(uid, s)) {
            ALOGE("add_service('%s',%p) uid=%d - PERMISSION DENIED\n",
                  str8(s), ptr, uid);
            return -1;
        }
        si = find_svc(s, len);
        if (si) {
            if (si->ptr) {
                ALOGE("add_service('%s',%p) uid=%d - ALREADY REGISTERED, OVERRIDE\n",
                      str8(s), ptr, uid);
                svcinfo_death(bs, si);
            }
            si->ptr = ptr;
        } else {
            si = malloc(sizeof(*si) + (len + 1) * sizeof(uint16_t));
            if (!si) {
                ALOGE("add_service('%s',%p) uid=%d - OUT OF MEMORY\n",
                      str8(s), ptr, uid);
                return -1;
            }
            si->ptr = ptr;
            si->len = len;
            memcpy(si->name, s, (len + 1) * sizeof(uint16_t));
            si->name[len] = '\0';
            si->death.func = svcinfo_death;
            si->death.ptr = si;
            si->allow_isolated = allow_isolated;
            si->next = svclist;
            svclist = si;
        }
        binder_acquire(bs, ptr);
        binder_link_to_death(bs, ptr, &si->death);
        return 0;
    }

int svc_can_register(unsigned uid, uint16_t *name)

This checks whether the caller's uid and the service name appear in the allowed table.
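A table-driven check of this kind can be sketched as follows. This is an illustration under assumptions, not the body of svc_can_register: the uid values, the names `UID_ROOT`, `UID_MEDIA`, `UID_NFC` and `can_register`, and the rule that a privileged uid bypasses the table are all hypothetical, and plain `char` strings replace the UTF-16 names used in service_manager.c.

```c
#include <assert.h>
#include <stddef.h>
#include <string.h>

/* Hypothetical uid values; the real AID_* constants are defined by Android. */
enum { UID_ROOT = 0, UID_MEDIA = 100, UID_NFC = 200 };

/* Each entry says: this uid may register a service with this name. */
static const struct {
    unsigned uid;
    const char *name;
} allowed[] = {
    { UID_MEDIA, "media.player" },
    { UID_MEDIA, "media.camera" },
    { UID_NFC,   "nfc" },
};

/* Returns 1 if uid may register a service called name, 0 otherwise. */
int can_register(unsigned uid, const char *name)
{
    assert(name != NULL);
    if (uid == UID_ROOT)    /* assumption: a privileged uid bypasses the table */
        return 1;
    for (size_t n = 0; n < sizeof(allowed) / sizeof(allowed[0]); n++) {
        /* Both the uid and the name have to match the same entry. */
        if (uid == allowed[n].uid && strcmp(name, allowed[n].name) == 0)
            return 1;
    }
    return 0;
}
```

Matching on the (uid, name) pair rather than on the name alone means a process running as one uid cannot claim a service name reserved for another.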

Then check whether the service is already in the list:

si = find_svc(s, len);

If it is not, a new si is allocated and added to the head of svclist. If it already exists, its ptr is simply overwritten.
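The find-then-insert logic can be tried out on its own. The sketch below is a simplified model with hypothetical names (`registry`, `svc_node`, `registry_add`, `registry_find`, `registry_free`): service names are plain `char` strings, the Binder reference is replaced by an integer handle, and the permission check and death notification are left out.

```c
#include <assert.h>
#include <stdlib.h>
#include <string.h>

struct svc_node {
    struct svc_node *next;
    int handle;             /* stand-in for the Binder reference */
    char name[];            /* NUL-terminated name stored inline */
};

struct registry { struct svc_node *head; };

/* Linear search through the list; returns the node or NULL. */
struct svc_node *registry_find(const struct registry *r, const char *name)
{
    assert(r != NULL && name != NULL);
    for (struct svc_node *n = r->head; n; n = n->next)
        if (strcmp(n->name, name) == 0)
            return n;
    return NULL;
}

/* Register or override a service. Returns 0 on success, -1 on failure. */
int registry_add(struct registry *r, const char *name, int handle)
{
    if (!r || !name || name[0] == '\0')
        return -1;
    struct svc_node *n = registry_find(r, name);
    if (n) {
        n->handle = handle;         /* already registered: override */
        return 0;
    }
    size_t len = strlen(name);
    n = malloc(sizeof(*n) + len + 1);
    if (!n)
        return -1;                  /* out of memory */
    memcpy(n->name, name, len + 1);
    n->handle = handle;
    n->next = r->head;              /* push onto the front of the list */
    r->head = n;
    return 0;
}

/* Release every node and leave the registry empty. */
void registry_free(struct registry *r)
{
    while (r->head) {
        struct svc_node *n = r->head;
        r->head = n->next;
        free(n);
    }
}
```

Inserting at the head keeps registration O(1) once the lookup is done, at the cost of an O(n) search, which is acceptable for a list as short as the set of system services.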

At this point service_manager is up and running.