OpenStack is widely regarded as the leading open source project for private and public clouds today. It is no longer perceived as a platform only for laboratory or development environments; it has become suitable for large enterprises and service providers as well. This OpenStack revolution brought along a new topic: Software Defined Networking (SDN). SDN can be seen as a kind of buzzword, and a lot of our customers thought that they did not need it because their environment is not large enough, not dynamic enough, etc. But SDN is not just about scaling; it also gives you the opportunity to use network services such as LBaaS, FWaaS and VPNaaS, which fall under NFV (Network Function Virtualization). Finally, the most important reason is that Neutron supports vendor-driven SDN solutions (Neutron vendor plugins), so a vendor SDN solution can be used together with OpenStack in real enterprise production. The reason to use a vendor plugin is that the upstream Neutron solution with OpenVSwitch (DVR or L3 agent) does not provide the native high availability, scalability, performance and service provider features (L3VPN, EVPN) required by large enterprises.

Our team at tcp cloud has been solving very advanced network questions and problems for different service providers for the past 6 months. Based on that experience, we had to choose an SDN solution that would let us satisfy customer requirements and provide a robust and stable cloud solution. We compared several SDN solutions from different vendors and chose OpenContrail, simply because “it works”: it is not just slideware or some form of secret marketing product that can be installed only by the vendor behind closed doors.

Therefore tcp cloud, together with Arrow ECS, decided to create a lab environment where we can prove and verify all the marketing messages in a real deployment, to show customers that a given solution exists, that it works, and that we know how to implement it without hidden issues.

This blog post is the first in a series of articles about OpenContrail SDN lab testing, in which we would like to cover topics like:

- L3VPN termination at cloud environment

- VxLAN to EVPN Stitching for L2 Extension

- VxLAN Routing and SDN

- OVSDB Provides Control for VxLAN

- Translating between SDN Types

- MPLSoverGRE or VxLAN encapsulation

- Kubernetes integration (container virtualization)

ToR integration Overview

SDN brings the idea that everything can be virtualized. However, there are still technological or legal limitations that prevent some systems from being integrated into the overlay, such as:

- Legacy hardware infrastructure – PowerVM, HP Itanium, OEM appliances

- Licenses – some software cannot be operated on virtual hardware

- Physical network appliances – firewalls, load balancers, etc

- Database clusters – Oracle SuperCluster, etc

Therefore there must be a way to connect the underlay world with the overlay. OpenContrail provides 3 ways to do this:

- Link Local Services – it might be required for a virtual machine to access specific services running on the fabric infrastructure. For example, a VM requiring access to the backup service running in the fabric. Such access can be provided by configuring the required service as a link local service.

- Router L3/L2 gateway (VRF, EVI) – the standard cloud gateway used for routing to external networks. The standard use case is OpenStack floating IPs. This will be discussed in the next blog article.

- ToR switch – a top-of-rack switch provides an L2 connection for a bare metal server or any other L2 service.

This blog post focuses on ToR switch integration and covers the following topics:

- Baremetal server into overlay VN

- VxLAN with OVSDB terminated at ToR switch

- Multi-vendor support for ToR switches with OVSDB – OpenContrail lets you use a switch from any vendor that supports the standard OVSDB protocol.

- Redundantly connected bare metal servers – a physical port in a virtual network is great, but how do we solve HA for this server?

- High availability for ToR configuration – a functional test is not the same as a production setup.

The first section covers OpenContrail’s support for ToR switches with OVSDB, as explained in the official Juniper documentation. The next section introduces the lab infrastructure and architecture with a description of the server roles, followed by a brief description of the Contrail deployment. The last two sections cover the implementation of the ToR agent with Juniper QFX and OpenVSwitch.

OpenContrail Support for TOR Switch and OVSDB

This overview is taken from [ContrailToR]. Since release 2.1, Contrail supports extending a cluster to include bare metal servers or other virtual instances connected to a top-of-rack (ToR) switch that supports the Open vSwitch Database Management (OVSDB) Protocol. The bare metal servers and other virtual instances can belong to any of the virtual networks configured in the Contrail overlay, facilitating communication with the virtual instances running in the OpenStack cluster. Contrail policy configuration is used to control the behaviour of this communication.

OVSDB protocol is used to configure the TOR switch and to import dynamically-learned addresses. VXLAN encapsulation is used in the data plane for communication with the TOR switch.
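To make the data-plane side more concrete, here is a minimal Python sketch of the 8-byte VXLAN header as defined in RFC 7348, which travels inside a UDP datagram (IANA-assigned destination port 4789). The VNI value 10 is the one used for the lab network later in this post; the function names are illustrative only and are not part of Contrail or Junos.

```python
import struct

VXLAN_FLAG_VNI_VALID = 0x08  # "I" flag from RFC 7348: the VNI field is valid


def build_vxlan_header(vni: int) -> bytes:
    """Return the 8-byte VXLAN header for the given 24-bit VNI."""
    if not 0 <= vni < 2 ** 24:
        raise ValueError("VNI must fit in 24 bits")
    # Word 1: flags (8 bits) followed by 24 reserved bits.
    # Word 2: VNI (24 bits) followed by 8 reserved bits.
    return struct.pack("!II", VXLAN_FLAG_VNI_VALID << 24, vni << 8)


def parse_vni(header: bytes) -> int:
    """Extract the VNI from an 8-byte VXLAN header."""
    flags_word, vni_word = struct.unpack("!II", header[:8])
    if not (flags_word >> 24) & VXLAN_FLAG_VNI_VALID:
        raise ValueError("VNI flag is not set")
    return vni_word >> 8


header = build_vxlan_header(10)
print(header.hex())       # 0800000000000a00
print(parse_vni(header))  # 10
```

The original Ethernet frame of the bare metal server or VM follows directly after this header, so the VNI is what ties a packet on the wire to a virtual network on both the vRouter and the ToR switch.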

TOR Services Node (TSN)

The TSN acts as the multicast controller for the TOR switches. The TSN also provides DHCP and DNS services to the bare metal servers or virtual instances running behind TOR ports.

The TSN receives all the broadcast packets from the TOR, and replicates them to the required compute nodes in the cluster and to other EVPN nodes. Broadcast packets from the virtual machines in the cluster are sent directly from the respective compute nodes to the TOR switch.

The TSN can also act as the DHCP server for the bare metal servers or virtual instances, leasing IP addresses to them, along with other DHCP options configured in the system. The TSN also provides a DNS service for the bare metal servers.

Multiple TSN nodes can be configured in the system based on the scaling needs of the cluster.

Contrail TOR Agent

A TOR agent provisioned in the Contrail cluster acts as the OVSDB client for the TOR switch, and all of the OVSDB interactions with the TOR are performed by using the TOR agent. The TOR agent programs the different OVSDB tables onto the TOR switch and receives the local unicast table entries from the TOR switch.

More information is available in [ContrailToR].

Actual Lab Environment

Arrow LAB infrastructure consists of several Juniper boxes. The following figure captures the testing rack.

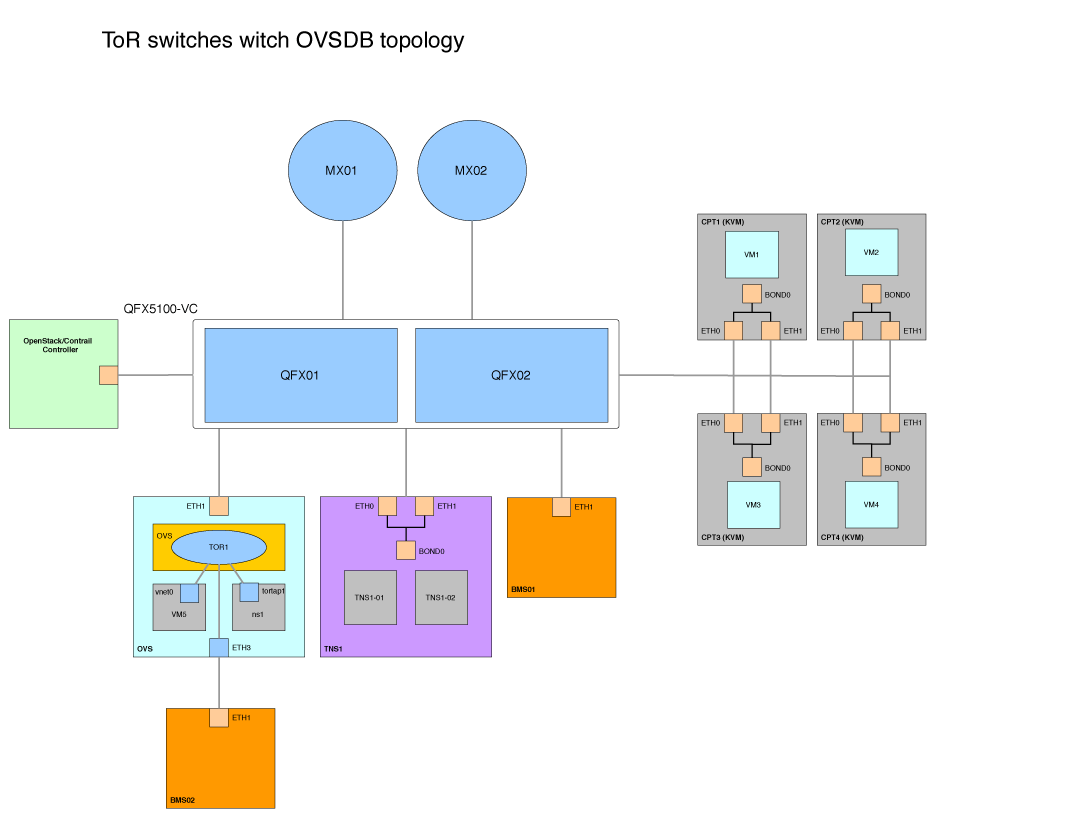

The following diagram shows high level network design of the lab environment.

The following diagram shows logical architecture for TOR testing. As already mentioned we use two Juniper MX5 routers and QFX5100 switches.

- CTPx – Using 4 physical servers as compute nodes. Each compute node is KVM hypervisor with Contrail vRouter.

- BMS01 – Represents physical server with one 10Gbps port connected to QFX.

- TNS01 – Physical server (can be virtual) for TOR Services Node with 2 ToR agents (QFX and OpenVSwitch).

- CTL – OpenStack and OpenContrail standalone controller. It contains all OpenStack APIs, database, message queue and OpenContrail control, config and analytics roles.

- OVS – Physical server with OpenVSwitch installed that is used as ToR switch. Details are described in section with openvswitch integration.

- BMS02 – Physical server connected to the OVS node.

The next section describes the installation of Contrail with the TSN (ToR agent).

Contrail Installation

The lab testing was performed on Contrail 2.1 with OpenStack Icehouse. We chose version 2.1 over 2.2 because the official Contrail 2.2 release has only been available since last week. ToR in a high availability setup will therefore be discussed in the next blog post, because of the significant performance and availability improvements in release 2.2.

The installation guide is available at official Juniper [site]. The following output shows our testbed.py file, where hosts are:

- host1 – OpenStack and OpenContrail standalone controller. It contains all OpenStack APIs, database, message queue and OpenContrail control, config and analytics role.

- host2 to host5 – Compute nodes

- host6 – TSN node with ToR agents. We installed the first ToR agent through Fabric provisioning and the second one manually, as described in the OpenVSwitch section.

from fabric.api import env

#Management ip addresses of hosts in the cluster

host1 = 'ubuntu@10.10.90.129'

host2 = 'ubuntu@10.100.10.2'

host3 = 'ubuntu@10.100.10.3'

host4 = 'ubuntu@10.100.10.4'

host5 = 'ubuntu@10.100.10.5'

host6 = 'ubuntu@10.100.10.6'

ext_routers = []

router_asn = 65412

host_build = 'ubuntu@10.10.90.129'

env.roledefs = {

'all': [host1, host2, host3, host4, host5, host6],

'cfgm': [host1],

'openstack': [host1],

'control': [host1],

'compute': [host2,host3,host4,host5,host6],

'collector': [host1],

'webui': [host1],

'database': [host1],

'build': [host_build],

'storage-master': [host1],

'storage-compute': [host2, host3,host4,host5],

'tsn': [host6], # Optional, only to enable TSN. Only a compute node can support TSN

'toragent': [host6], # Optional, only to enable the ToR agent. Only a compute node can support the ToR agent

}

env.openstack_admin_password = 'arrowlab'

env.hostnames = {

'all': ['ctl01', 'cpt01', 'cpt02', 'cpt03', 'cpt04','tns01']

}

env.passwords = {

host1: 'ubuntu',

host2: 'ubuntu',

host3: 'ubuntu',

host4: 'ubuntu',

host5: 'ubuntu',

host6: 'ubuntu',

host_build: 'ubuntu',

}

env.ostypes = {

host1:'ubuntu',

host2:'ubuntu',

host3:'ubuntu',

host4:'ubuntu',

host5:'ubuntu',

host6:'ubuntu',

}

env.tor_agent = {

host6: [{

'tor_ip':'10.100.10.1', # IP address of the TOR

'tor_id':'1', # Numeric value to uniquely identify TOR

'tor_type':'ovs',

'tor_ovs_port':'9999', # the TCP port to connect on the TOR

'tor_ovs_protocol':'tcp', # always tcp, for now

'tor_tsn_ip':'10.100.10.6', # IP address of the TSN for this TOR

'tor_tsn_name':'tns01', # Name of the TSN node

'tor_name':'qfx5100', # Name of the TOR switch

'tor_tunnel_ip':'10.10.80.6', # IP address of Data tunnel endpoint

'tor_vendor_name':'QFX5100', # Vendor name for TOR switch

'tor_http_server_port':'8085', # HTTP port for TOR Introspect

}]

}
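Because testbed.py is plain Python, its role definitions can be sanity-checked before running Fabric. The sketch below is a hypothetical helper, not part of the Contrail Fabric utilities: it checks that every host with a role is also listed under 'all', and that TSN and ToR agent hosts are compute nodes, as the comments in the file require. Skipping the 'build' role is an assumption, made because the build host does not have to be a cluster member.

```python
def check_roledefs(roledefs):
    """Return a list of human-readable problems found in a roledefs dict."""
    problems = []
    all_hosts = set(roledefs.get("all", []))
    compute = set(roledefs.get("compute", []))

    # Every host that has a role should also be listed under 'all'.
    # 'build' is skipped (assumption: the build host may live outside the cluster).
    for role, hosts in roledefs.items():
        if role in ("all", "build"):
            continue
        for host in hosts:
            if host not in all_hosts:
                problems.append(f"{host} has role '{role}' but is missing from 'all'")

    # The testbed comments state that only compute nodes can run TSN / ToR agent.
    for role in ("tsn", "toragent"):
        for host in roledefs.get(role, []):
            if host not in compute:
                problems.append(f"{host} has role '{role}' but is not a compute node")
    return problems


# Shortened example with plain strings instead of the host variables.
example = {
    "all": ["host1", "host2", "host6"],
    "compute": ["host2", "host4", "host6"],
    "tsn": ["host6"],
    "toragent": ["host6"],
}
for problem in check_roledefs(example):
    print(problem)  # host4 has role 'compute' but is missing from 'all'
```

In a real setup the function could be called with env.roledefs at the end of testbed.py, so that a mistyped host list is caught before provisioning starts.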

Contrail Baremetal ToR Implementation with Juniper QFX5100

This section shows how to setup Juniper QFX as ToR switch with baremetal server connection to the virtual network.

1. Create virtual network vxlannet (10.0.10.0/24) and set the VxLAN network identifier (VNI) to 10.

2. Boot two instances, VM1 (10.0.10.4) and VM2 (10.0.10.3), on two compute nodes in the virtual network vxlannet.

3. Configure the QFX to be managed through OVSDB.

4. Through Contrail, configure QFX port xe-0/0/40.1000 as an L2 port with address 10.0.10.100 in network vxlannet.

5. Verify connectivity and configuration.

Steps 1 and 2 are not covered in this blog post.

QFX5100 Configuration

The OVSDB software package must be installed to enable the following configuration on the QFX side. We run Junos version 14.1X53-D15.2 with JUNOS SDN Software Suite 14.1X53-D15.2.

The following output shows the commands that configure the QFX switch so that interface xe-0/0/40 is managed through OVSDB. These configuration parameters must match the values in testbed.py.

set interfaces lo0 unit 0 family inet address 10.10.80.6/32

set switch-options ovsdb-managed

set switch-options vtep-source-interface lo0.0

set protocols ovsdb passive-connection protocol tcp port 6632

set protocols ovsdb interfaces xe-0/0/40
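Since the switch settings have to agree with the ToR agent definition, one option is to generate the Junos commands from the same dictionary. The following is only a sketch: render_qfx_ovsdb_config is a hypothetical helper, the dictionary keys follow env.tor_agent in testbed.py, and the values used in the example are the ones from the switch configuration above.

```python
def render_qfx_ovsdb_config(tor, interfaces):
    """Build the Junos 'set' commands shown above from one ToR agent entry."""
    lines = [
        f"set interfaces lo0 unit 0 family inet address {tor['tor_tunnel_ip']}/32",
        "set switch-options ovsdb-managed",
        "set switch-options vtep-source-interface lo0.0",
        "set protocols ovsdb passive-connection "
        f"protocol {tor['tor_ovs_protocol']} port {tor['tor_ovs_port']}",
    ]
    # One statement per interface that should be handed over to OVSDB.
    lines += [f"set protocols ovsdb interfaces {name}" for name in interfaces]
    return lines


tor = {
    "tor_tunnel_ip": "10.10.80.6",  # VTEP source address on lo0
    "tor_ovs_protocol": "tcp",
    "tor_ovs_port": "6632",         # port the ToR agent connects to
}
for line in render_qfx_ovsdb_config(tor, ["xe-0/0/40"]):
    print(line)
```

Generating both sides from one source makes a port or tunnel address mismatch between testbed.py and the switch much harder to introduce.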

Contrail Configuration

Configuration for ToR agent was already defined in testbed.py, therefore the rest can be done in Contrail WebUI.

On Contrail side we had to add physical device QFX. After that we added physical and logical port for bare metal server.

In Contrail WebUI go to Configure > Physical Devices > Physical Routers and create new entry for the TOR switch, providing the TOR’s IP address and VTEP address. The router name should match the hostname of the TOR. Also configure the TSN and TOR agent addresses for the TOR.

Go to Configure > Physical Devices > Interfaces and add physical and logical interface to be configured on the TOR. The name of the logical interface must match the name on the TOR (xe-0/0/40 and xe-0/0/40.1000). Also enter other logical interface configurations, such as VLAN ID, MAC address, and IP address of the bare metal server and the virtual network to which it belongs.

We ran several tests; the configuration shown here connects a bare metal server using VLAN tag 1000.

The following output shows the configuration changes made by Contrail in the interfaces and VLAN sections.

softtronik@QFX5100_VC# show interfaces xe-0/0/40

flexible-vlan-tagging;

encapsulation extended-vlan-bridge;

unit 1000 {

vlan-id 1000;

}

softtronik@QFX5100_VC# show vlans

Contrail-c68a622b-9248-4535-bf04-4859012d7a2a {

interface xe-0/0/40.1000;

vxlan {

vni 10;

}

}

Command Outputs

This section shows interesting command outputs from the Juniper QFX.

With show ovsdb logical-switch we can get a summary of the logical switch (virtual network).

softtronik@QFX5100_VC> show ovsdb logical-switch

Logical switch information:

Logical Switch Name: Contrail-c68a622b-9248-4535-bf04-4859012d7a2a

Flags: Created by both

VNI: 10

Num of Remote MAC: 6

Num of Local MAC: 2

To list the interfaces managed via OVSDB, use the show ovsdb interface command.

softtronik@QFX5100_VC> show ovsdb interface

Interface VLAN ID Bridge-domain

xe-0/0/40 1000 Contrail-c68a622b-9248-4535-bf04-4859012d7a2a

List all learned MAC addresses and their associated VTEPs with show ovsdb mac. To show only remote addresses, add the keyword remote at the end of the command.

softtronik@QFX5100_VC> show ovsdb mac

Logical Switch Name: Contrail-c68a622b-9248-4535-bf04-4859012d7a2a

Mac IP Encapsulation Vtep

Address Address Address

ff:ff:ff:ff:ff:ff 0.0.0.0 Vxlan over Ipv4 10.10.80.6

10:0e:7e:bf:9e:ec 0.0.0.0 Vxlan over Ipv4 10.10.80.6

02:30:84:c3:d1:13 0.0.0.0 Vxlan over Ipv4 10.100.10.2

02:e1:bb:af:65:11 0.0.0.0 Vxlan over Ipv4 10.100.10.4

02:fc:94:91:42:f2 0.0.0.0 Vxlan over Ipv4 10.100.10.5

1a:7f:6d:fb:0e:3d 0.0.0.0 Vxlan over Ipv4 10.100.10.7

40:a6:77:9a:b3:38 0.0.0.0 Vxlan over Ipv4 10.10.80.4

ff:ff:ff:ff:ff:ff 0.0.0.0 Vxlan over Ipv4 10.100.10.6

softtronik@QFX5100_VC> show ovsdb virtual-tunnel-end-point

Encapsulation Ip Address Num of MAC's

VXLAN over IPv4 10.10.80.4 1

VXLAN over IPv4 10.10.80.6 2

VXLAN over IPv4 10.100.10.2 1

VXLAN over IPv4 10.100.10.4 1

VXLAN over IPv4 10.100.10.5 1

VXLAN over IPv4 10.100.10.6 1

VXLAN over IPv4 10.100.10.7 1

With this command we can see all VTEP addresses present in our network.
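The MAC table and the VTEP summary above should agree with each other. As a quick cross-check, the following Python sketch counts MAC entries per VTEP from the text of show ovsdb mac. The parsing is an assumption based on the output layout shown in this post (MAC address in the first column, VTEP address in the last); macs_per_vtep is an illustrative name.

```python
import re
from collections import Counter

MAC_RE = re.compile(r"^(?:[0-9a-fA-F]{2}:){5}[0-9a-fA-F]{2}$")


def macs_per_vtep(output):
    """Count MAC table entries per VTEP address in 'show ovsdb mac' output."""
    counts = Counter()
    for line in output.splitlines():
        fields = line.split()
        # Data rows start with a MAC address and end with the VTEP address.
        if fields and MAC_RE.match(fields[0]):
            counts[fields[-1]] += 1
    return counts


SHOW_OVSDB_MAC = """\
Logical Switch Name: Contrail-c68a622b-9248-4535-bf04-4859012d7a2a
ff:ff:ff:ff:ff:ff 0.0.0.0 Vxlan over Ipv4 10.10.80.6
10:0e:7e:bf:9e:ec 0.0.0.0 Vxlan over Ipv4 10.10.80.6
02:30:84:c3:d1:13 0.0.0.0 Vxlan over Ipv4 10.100.10.2
02:e1:bb:af:65:11 0.0.0.0 Vxlan over Ipv4 10.100.10.4
02:fc:94:91:42:f2 0.0.0.0 Vxlan over Ipv4 10.100.10.5
1a:7f:6d:fb:0e:3d 0.0.0.0 Vxlan over Ipv4 10.100.10.7
40:a6:77:9a:b3:38 0.0.0.0 Vxlan over Ipv4 10.10.80.4
ff:ff:ff:ff:ff:ff 0.0.0.0 Vxlan over Ipv4 10.100.10.6
"""

for vtep, count in sorted(macs_per_vtep(SHOW_OVSDB_MAC).items()):
    print(vtep, count)
```

For the output in this post the result is two entries for 10.10.80.6 (the QFX itself) and one for every other VTEP, which matches the Num of MAC's column of show ovsdb virtual-tunnel-end-point.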

softtronik@QFX5100_VC> show vlans

Routing instance VLAN name Tag Interfaces

default-switch Contrail-c68a622b-9248-4535-bf04-4859012d7a2a NA

vtep.32769*

vtep.32770*

vtep.32771*

vtep.32772*

vtep.32773*

vtep.32774*

xe-0/0/40.1000*

VTEP interfaces can also be listed with the show interfaces terse vtep command.

softtronik@QFX5100_VC> show interfaces terse vtep

Interface Admin Link Proto Local Remote

vtep up up

vtep.32768 up up

vtep.32769 up up eth-switch

vtep.32770 up up eth-switch

vtep.32771 up up eth-switch

vtep.32772 up up eth-switch

vtep.32773 up up eth-switch

vtep.32774 up up eth-switch

To see detailed information, use the previous command with particular interface.

softtronik@QFX5100_VC> show interfaces vtep.32769

Logical interface vtep.32769 (Index 576) (SNMP ifIndex 544)

Flags: Up SNMP-Traps Encapsulation: ENET2

VXLAN Endpoint Type: Remote, VXLAN Endpoint Address: 10.100.10.2, L2 Routing Instance: default-switch, L3 Routing Instance: default

Input packets : 0

Output packets: 8

Protocol eth-switch, MTU: 1600

Flags: Trunk-Mode

Redundant Connection of Bare Metal Servers

We wanted to use MC-LAG (QFX in virtual chassis) to run LACP on both ports, but MC-LAG is not currently supported with VxLAN (although it is on the roadmap). Therefore the only viable option is to connect both ports to the same virtual network (VNI) and configure active-passive bonding on the bare metal server.

Contrail Baremetal ToR Implementation with OpenVSwitch VTEP

In the previous section we tested and verified that the Juniper QFX5100 works well as a ToR switch, which was no surprise to us because both products come from the same vendor. In this part we want to show that Contrail, because it uses standard network protocols, does not lock you in to one vendor. There are several switch vendors (Cumulus, Arista) who support OVSDB in their boxes. We decided to prove this openness with OpenVSwitch.

We deployed two more physical servers, OVS and BMS02, and a second ToR agent, tns01-2. The OVS server with openvswitch plays the same role as the Juniper QFX. We tested 3 use cases:

- simulate a BMS endpoint with the network namespace ns1

- install KVM on OVS and launch VM5 as a BMS endpoint

- use the physical NIC eth3 and connect the physical bare metal server BMS02

Scenario for testing:

1. Create TOR agent – deploy a ToR agent for managing OVS.

2. Setup OVS – install openvswitch-vtep, configure the physical switch and connect the namespace.

3. Connect KVM VM to cloud – install KVM, launch VM5 with an OVS interface and connect it through a new logical port.

4. Connect BMS to cloud – add the OVS physical interface eth3 and verify connectivity from BMS02.

Create TOR agent

In the previous chapter we used the tns01-1 ToR agent for the QFX5100. To manage another switch via OVSDB, another ToR agent service has to be started. It can run on the same TSN node. Provisioning can be done through Fabric, but here we show how to do it manually. Start by copying the config file of the tns01-1 agent.

root@tns01:~# cp /etc/contrail/contrail-tor-agent-1.conf /etc/contrail/contrail-tor-agent-2.conf

Then we had to change some values in this copied file.

[DEFAULT]

agent_name=tns01-2

log_file=/var/log/contrail/contrail-tor-agent-2.log

http_server_port=8086

[TOR]

tor_ip=10.100.10.7
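The same edit can be scripted, which helps when more than two ToR agents are needed. The sketch below uses Python's configparser on an in-memory string. BASE_CONF is an assumed, heavily reduced version of contrail-tor-agent-1.conf that contains only the keys discussed here (the real file has more settings), and derive_tor_agent_conf is a hypothetical helper name.

```python
import configparser
import io

# Assumed contents of contrail-tor-agent-1.conf, reduced to the keys
# discussed in this post; the real file contains more settings.
BASE_CONF = """\
[DEFAULT]
agent_name=tns01-1
log_file=/var/log/contrail/contrail-tor-agent-1.log
http_server_port=8085

[TOR]
tor_ip=10.100.10.1
"""


def derive_tor_agent_conf(base_text, agent_name, log_file, http_port, tor_ip):
    """Return a copy of a ToR agent config with the per-agent values replaced."""
    parser = configparser.ConfigParser(interpolation=None)
    parser.optionxform = str  # keep option names exactly as written
    parser.read_string(base_text)
    defaults = parser["DEFAULT"]
    defaults["agent_name"] = agent_name
    defaults["log_file"] = log_file
    defaults["http_server_port"] = str(http_port)
    if not parser.has_section("TOR"):
        parser.add_section("TOR")
    parser["TOR"]["tor_ip"] = tor_ip
    out = io.StringIO()
    parser.write(out, space_around_delimiters=False)
    return out.getvalue()


print(derive_tor_agent_conf(
    BASE_CONF,
    agent_name="tns01-2",
    log_file="/var/log/contrail/contrail-tor-agent-2.log",
    http_port=8086,
    tor_ip="10.100.10.7",
))
```

Note that configparser drops comments when it writes a file back, so for a one-off change editing the copied file by hand, as done here, is just as reasonable.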

We also have to copy the supervisor file to start the new service.

root@tns01:~# cp /etc/contrail/supervisord_vrouter_files/contrail-tor-agent-1.ini /etc/contrail/supervisord_vrouter_files/contrail-tor-agent-2.ini

And change these configuration values.

command=/usr/bin/contrail-tor-agent --config_file /etc/contrail/contrail-tor-agent-2.conf

stdout_logfile=/var/log/contrail/contrail-tor-agent-2-stdout.log

Now restart supervisor to see changes.

root@tns01:~# service supervisor-vrouter restart

And verify changes:

root@tns01:~# contrail-status

== Contrail vRouter ==

supervisor-vrouter: active

contrail-tor-agent-1 active

contrail-tor-agent-2 active

contrail-vrouter-agent active

contrail-vrouter-nodemgr active

Setup OVS

We need to install at least openvswitch 2.3.1, because it includes ovs-vtep, the VTEP emulator [vtep]. However, Ubuntu 14.04.2 ships version 2.0.2, so you have to build your own packages or use the source tarball. We found packages in the PPA https://launchpad.net/~vshn/+archive/ubuntu/openvswitch.

root@ovs:~# cat /etc/apt/sources.list.d/ovs.list

deb http://ppa.launchpad.net/vshn/openvswitch/ubuntu trusty main

deb-src http://ppa.launchpad.net/vshn/openvswitch/ubuntu trusty main

root@ovs:~# apt-get install openvswitch-vtep

We have to delete the default databases created during installation.

root@ovs:~# rm /etc/openvswitch/*.db

And create two new databases: ovs.db and vtep.db

root@ovs:~# ovsdb-tool create /etc/openvswitch/ovs.db /usr/share/openvswitch/vswitch.ovsschema ; ovsdb-tool create /etc/openvswitch/vtep.db /usr/share/openvswitch/vtep.ovsschema

Restart the services and make sure that the ptcp port number matches the port number in contrail-tor-agent-2.conf on the TSN node.

root@ovs:~# service openvswitch-switch stop

root@ovs:~# ovsdb-server --pidfile --detach --log-file --remote punix:/var/run/openvswitch/db.sock --remote=db:hardware_vtep,Global,managers --remote ptcp:6632 /etc/openvswitch/ovs.db /etc/openvswitch/vtep.db

root@ovs:~# ovs-vswitchd --log-file --detach --pidfile unix:/var/run/openvswitch/db.sock

Verify creation of databases with:

root@ovs:~# ovsdb-client list-dbs unix:/var/run/openvswitch/db.sock

Open_vSwitch

hardware_vtep

First we need to test our installation by connecting a namespace to the virtual network. Start by creating a bridge.

root@ovs:~# ovs-vsctl add-br TOR1

root@ovs:~# vtep-ctl add-ps TOR1

Set up the VTEP of the bridge. The IP addresses are the underlay addresses of our node with OVS.

root@ovs:~# vtep-ctl set Physical_Switch TOR1 tunnel_ips=10.100.10.7

root@ovs:~# vtep-ctl set Physical_Switch TOR1 management_ips=10.100.10.7

root@ovs:~# python /usr/share/openvswitch/scripts/ovs-vtep --log-file=/var/log/openvswitch/ovs-vtep.log --pidfile=/var/run/openvswitch/ovs-vtep.pid --detach TOR1

Now create a namespace and link its interface with an OVS interface.

root@ovs:~# ip netns add ns1

root@ovs:~# ip link add nstap1 type veth peer name tortap1

root@ovs:~# ovs-vsctl add-port TOR1 tortap1

root@ovs:~# ip link set nstap1 netns ns1

root@ovs:~# ip netns exec ns1 ip link set dev nstap1 up

root@ovs:~# ip link set dev tortap1 up
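When several simulated endpoints are needed, the namespace steps above can be generated rather than typed. The following Python sketch builds the same six commands as argument lists; veth_namespace_commands and apply are hypothetical helper names, and the example only prints the commands (a dry run), because actually applying them requires root on the OVS host.

```python
import shlex
import subprocess


def veth_namespace_commands(namespace, ns_if, switch_if, bridge):
    """Return the commands from the steps above as argument lists, in order."""
    return [
        ["ip", "netns", "add", namespace],
        ["ip", "link", "add", ns_if, "type", "veth", "peer", "name", switch_if],
        ["ovs-vsctl", "add-port", bridge, switch_if],
        ["ip", "link", "set", ns_if, "netns", namespace],
        ["ip", "netns", "exec", namespace, "ip", "link", "set", "dev", ns_if, "up"],
        ["ip", "link", "set", "dev", switch_if, "up"],
    ]


def apply(commands):
    """Run each command and stop at the first failure (requires root)."""
    for argv in commands:
        subprocess.run(argv, check=True)


commands = veth_namespace_commands("ns1", "nstap1", "tortap1", "TOR1")
for argv in commands:
    print(shlex.join(argv))  # dry run: print only, nothing is executed
```

Keeping the commands as lists avoids shell quoting issues, and calling apply(commands) on the OVS server would perform the same setup as the manual steps.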

And configure the namespace so that it can communicate with the outside world.

root@ovs:~# ip netns

root@ovs:~# ip netns exec ns1 ip a a 127.0.0.1/8 dev lo

root@ovs:~# ip netns exec ns1 ip a

root@ovs:~# ip netns exec ns1 ip a a 10.0.10.120/24 dev nstap1

root@ovs:~# ip netns exec ns1 ping 10.0.10.120

root@ovs:~# ip netns exec ns1 ip link set up dev lo

root@ovs:~# ip netns exec ns1 ping 10.0.10.120

You can verify the previous steps by looking into the database.

root@ovs:~# vtep-ctl list Physical_Switch

_uuid : f00f2242-409e-43fc-8d4f-32e2225937d8

description : "OVS VTEP Emulator"

management_ips : ["10.100.10.7"]

name : "TOR1"

ports : [82afe753-25f8-4127-839b-2c5c8f7948b2]

switch_fault_status : []

tunnel_ips : ["10.100.10.7"]

tunnels : []

Now we have to add TOR1 as a new physical device in Contrail managed by TOR agent tns01-2.

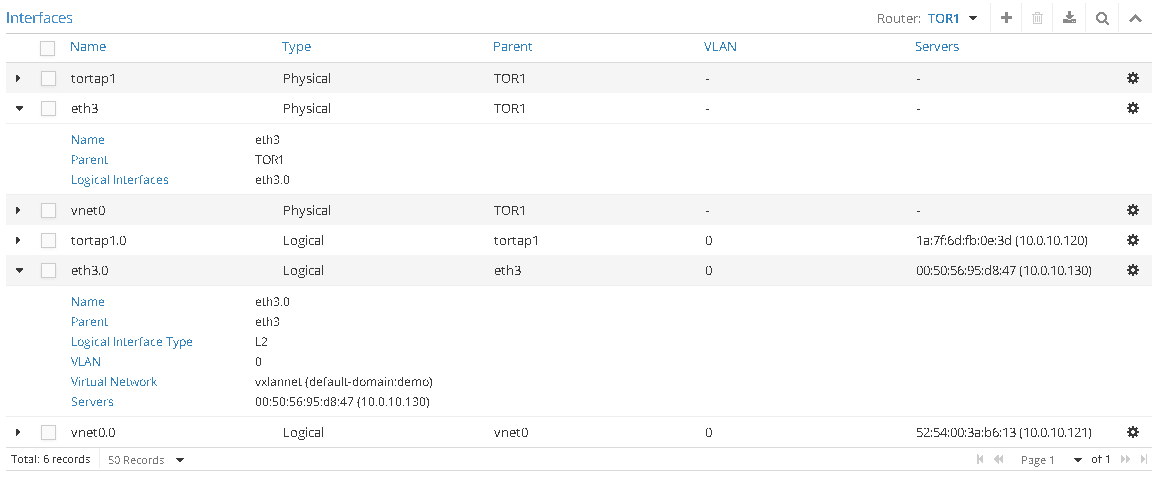

Then create physical port tortap1 with logical tortap1.0 interface, which goes to our ns1 namespace.

We can verify the Contrail configuration with the following output.

root@ovs:~# vtep-ctl list-ls

Contrail-c68a622b-9248-4535-bf04-4859012d7a2a

Check namespace IP addresses.

root@ovs:~# ip netns exec ns1 ip a

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

7: nstap1: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP group default qlen 1000

link/ether 1a:7f:6d:fb:0e:3d brd ff:ff:ff:ff:ff:ff

inet 10.0.10.120/24 scope global nstap1

valid_lft forever preferred_lft forever

inet6 fe80::187f:6dff:fefb:e3d/64 scope link

valid_lft forever preferred_lft forever

Try to ping VM4 from ns1.

root@ovs:~# ip netns exec ns1 ping 10.0.10.3

PING 10.0.10.3 (10.0.10.3) 56(84) bytes of data.

64 bytes from 10.0.10.3: icmp_seq=1 ttl=64 time=1.25 ms

64 bytes from 10.0.10.3: icmp_seq=2 ttl=64 time=0.311 ms

64 bytes from 10.0.10.3: icmp_seq=3 ttl=64 time=0.307 ms

64 bytes from 10.0.10.3: icmp_seq=4 ttl=64 time=0.270 ms

^C

--- 10.0.10.3 ping statistics ---

4 packets transmitted, 4 received, 0% packet loss, time 2999ms

rtt min/avg/max/mdev = 0.270/0.536/1.256/0.416 ms

The following output shows all interfaces on the physical server OVS.

root@ovs:~# ip a

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc mq state UP group default qlen 1000

link/ether 00:50:56:95:60:e8 brd ff:ff:ff:ff:ff:ff

inet 10.10.70.135/24 brd 10.10.70.255 scope global eth0

valid_lft forever preferred_lft forever

inet6 fe80::250:56ff:fe95:60e8/64 scope link

valid_lft forever preferred_lft forever

3: eth1: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc mq state UP group default qlen 1000

link/ether 00:50:56:95:6b:14 brd ff:ff:ff:ff:ff:ff

inet 10.100.10.7/24 brd 10.100.10.255 scope global eth1

valid_lft forever preferred_lft forever

inet6 fe80::250:56ff:fe95:6b14/64 scope link

valid_lft forever preferred_lft forever

4: ovs-system: <BROADCAST,MULTICAST> mtu 1500 qdisc noop state DOWN group default

link/ether 4a:4f:14:53:c6:df brd ff:ff:ff:ff:ff:ff

5: TOR1: <BROADCAST,MULTICAST> mtu 1500 qdisc noop state DOWN group default

link/ether 2a:11:8c:74:61:46 brd ff:ff:ff:ff:ff:ff

6: tortap1: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast master ovs-system state UP group default qlen 1000

link/ether 16:62:97:5a:62:e8 brd ff:ff:ff:ff:ff:ff

inet6 fe80::1462:97ff:fe5a:62e8/64 scope link

valid_lft forever preferred_lft forever

8: vtep_ls1: <BROADCAST,MULTICAST> mtu 1500 qdisc noop state DOWN group default

link/ether 62:2b:86:c3:c2:4b brd ff:ff:ff:ff:ff:ff

The following output shows the openvswitch configuration. Patch ports 0000-tortap1-p and 0000-tortap1-l were created by Contrail.

root@ovs:~# ovs-vsctl show

93d90385-f9c6-4cfc-b67d-4f64eec15479

Bridge "TOR1"

Port "TOR1"

Interface "TOR1"

type: internal

Port "tortap1"

Interface "tortap1"

Port "0000-tortap1-p"

Interface "0000-tortap1-p"

type: patch

options: {peer="0000-tortap1-l"}

Bridge "vtep_ls1"

Port "vx4"

Interface "vx4"

type: vxlan

options: {key="10", remote_ip="10.100.10.2"}

Port "vx2"

Interface "vx2"

type: vxlan

options: {key="10", remote_ip="10.100.10.5"}

Port "vx5"

Interface "vx5"

type: vxlan

options: {key="10", remote_ip="10.100.10.6"}

Port "vx3"

Interface "vx3"

type: vxlan

options: {key="10", remote_ip="10.100.10.4"}

Port "vx9"

Interface "vx9"

type: vxlan

options: {key="10", remote_ip="10.10.80.6"}

Port "vtep_ls1"

Interface "vtep_ls1"

type: internal

Port "0000-tortap1-l"

Interface "0000-tortap1-l"

type: patch

options: {peer="0000-tortap1-p"}
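The VXLAN tunnels in this listing can be extracted programmatically, for example to compare them with the VTEPs known to the QFX. The following Python sketch parses the text of ovs-vsctl show. It relies only on the line keywords visible above (Bridge, Port, type, options), so it does not depend on indentation; vxlan_tunnels is an illustrative name and SAMPLE is a shortened copy of the output.

```python
import re


def vxlan_tunnels(show_output):
    """Map each bridge name to a list of (port, vni, remote_ip) VXLAN tunnels."""
    tunnels = {}
    bridge = port = None
    is_vxlan = False
    for raw in show_output.splitlines():
        line = raw.strip()
        if line.startswith("Bridge "):
            bridge = line.split(None, 1)[1].strip('"')
        elif line.startswith("Port "):
            port = line.split(None, 1)[1].strip('"')
            is_vxlan = False  # a new port starts; its type is not known yet
        elif line.startswith("type:"):
            is_vxlan = line == "type: vxlan"
        elif line.startswith("options:") and is_vxlan:
            key = re.search(r'key="([^"]+)"', line)
            remote = re.search(r'remote_ip="([^"]+)"', line)
            if key and remote:
                tunnels.setdefault(bridge, []).append(
                    (port, int(key.group(1)), remote.group(1)))
    return tunnels


SAMPLE = '''\
Bridge "TOR1"
    Port "tortap1"
        Interface "tortap1"
    Port "0000-tortap1-p"
        Interface "0000-tortap1-p"
            type: patch
            options: {peer="0000-tortap1-l"}
Bridge "vtep_ls1"
    Port "vx4"
        Interface "vx4"
            type: vxlan
            options: {key="10", remote_ip="10.100.10.2"}
    Port "vx9"
        Interface "vx9"
            type: vxlan
            options: {key="10", remote_ip="10.10.80.6"}
'''

for bridge, entries in vxlan_tunnels(SAMPLE).items():
    for port, vni, remote_ip in entries:
        print(bridge, port, vni, remote_ip)
```

Every tunnel carries key 10, the VNI of the virtual network, and the remote addresses are the compute nodes, the TSN and the QFX loopback, all attached to the logical switch bridge vtep_ls1 rather than to TOR1 itself.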

Connect KVM VM to the Cloud

Now we want to boot VM5 on the OVS server and connect it to our virtual network as if it were a bare metal server. First we need to install qemu and build a virtual machine.

sudo apt-get install qemu-system-x86 ubuntu-vm-builder uml-utilities

sudo ubuntu-vm-builder kvm precise

Once that is done, create a VM, if necessary, and edit its Domain XML file:

root@ovs:~# virsh list

Id Name State

----------------------------------------------------

7 ubuntu running

% virsh destroy ubuntu

% virsh edit ubuntu

Look at the <interface> section of the domain XML file. There should be one XML section for each interface the VM has.

<interface type='network'>

<mac address='52:54:00:3a:b6:13'/>

<source network='default'/>

<address type='pci' domain='0x0000' bus='0x00' slot='0x03' function='0x0'/>

</interface>

And change it to something like this:

<interface type='bridge'>

<mac address='52:54:00:3a:b6:13'/>

<source bridge='TOR1'/>

<virtualport type='openvswitch'/>

<address type='pci' domain='0x0000' bus='0x00' slot='0x03' function='0x0'/>

</interface>
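The same change to the interface definition can be made with a few lines of Python, which is convenient when several guests have to be moved to the OVS bridge. This is a sketch that works on the <interface> element only, using the standard xml.etree.ElementTree module; attach_to_ovs_bridge is a hypothetical helper and does not call libvirt itself.

```python
import xml.etree.ElementTree as ET

ORIGINAL = """\
<interface type='network'>
  <mac address='52:54:00:3a:b6:13'/>
  <source network='default'/>
  <address type='pci' domain='0x0000' bus='0x00' slot='0x03' function='0x0'/>
</interface>
"""


def attach_to_ovs_bridge(interface_xml, bridge):
    """Rewrite a libvirt <interface> element to plug into an OVS bridge."""
    iface = ET.fromstring(interface_xml)
    iface.set("type", "bridge")
    source = iface.find("source")
    if source is None:
        source = ET.SubElement(iface, "source")
    source.attrib.clear()  # drop network='default'
    source.set("bridge", bridge)
    if iface.find("virtualport") is None:
        virtualport = ET.Element("virtualport", {"type": "openvswitch"})
        virtualport.tail = source.tail  # keep the indentation readable
        iface.insert(list(iface).index(source) + 1, virtualport)
    return ET.tostring(iface, encoding="unicode")


print(attach_to_ovs_bridge(ORIGINAL, "TOR1"))
```

The MAC address and PCI address are left untouched, so the guest keeps seeing the same NIC; only the backend it is plugged into changes.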

Start VM5 and verify that it uses the openvswitch interface. The interface vnet0 is created automatically.

% virsh start ubuntu

<interface type='bridge'>

<mac address='52:54:00:3a:b6:13'/>

<source bridge='TOR1'/>

<virtualport type='openvswitch'>

<parameters interfaceid='5def61f9-7123-43a5-b7ae-35f0fbd22fca'/>

</virtualport>

<target dev='vnet0'/>

<model type='virtio'/>

<alias name='net0'/>

<address type='pci' domain='0x0000' bus='0x00' slot='0x03' function='0x0'/>

</interface>

Now we can add a new port vnet0 as physical and logical port in Contrail. We can check new patch interfaces in openvswitch.

root@ovs:~# ovs-vsctl show

93d90385-f9c6-4cfc-b67d-4f64eec15479

Bridge "TOR1"

Port "vnet0"

Interface "vnet0"

Port "TOR1"

Interface "TOR1"

type: internal

Port "tortap1"

Interface "tortap1"

Port "0000-vnet0-p"

Interface "0000-vnet0-p"

type: patch

options: {peer="0000-vnet0-l"}

Port "0000-tortap1-p"

Interface "0000-tortap1-p"

type: patch

options: {peer="0000-tortap1-l"}

Bridge "vtep_ls1"

Port "vx4"

Interface "vx4"

type: vxlan

options: {key="10", remote_ip="10.100.10.2"}

Port "0000-vnet0-l"

Interface "0000-vnet0-l"

type: patch

options: {peer="0000-vnet0-p"}

Port "vx2"

Interface "vx2"

type: vxlan

options: {key="10", remote_ip="10.100.10.5"}

Port "vx5"

Interface "vx5"

type: vxlan

options: {key="10", remote_ip="10.100.10.6"}

Port "vx3"

Interface "vx3"

type: vxlan

options: {key="10", remote_ip="10.100.10.4"}

Port "vtep_ls1"

Interface "vtep_ls1"

type: internal

Port "0000-tortap1-l"

Interface "0000-tortap1-l"

type: patch

options: {peer="0000-tortap1-p"}

We can open a console on VM5, manually set its IP address and try to ping VM4.

We can check the same thing from the bare metal namespace.

root@ovs:~# ip netns exec ns1 ping 10.0.10.121

PING 10.0.10.121 (10.0.10.121) 56(84) bytes of data.

64 bytes from 10.0.10.121: icmp_seq=1 ttl=64 time=0.709 ms

64 bytes from 10.0.10.121: icmp_seq=2 ttl=64 time=0.432 ms

64 bytes from 10.0.10.121: icmp_seq=3 ttl=64 time=0.302 ms

^C

--- 10.0.10.121 ping statistics ---

3 packets transmitted, 3 received, 0% packet loss, time 1999ms

rtt min/avg/max/mdev = 0.302/0.481/0.709/0.169 ms

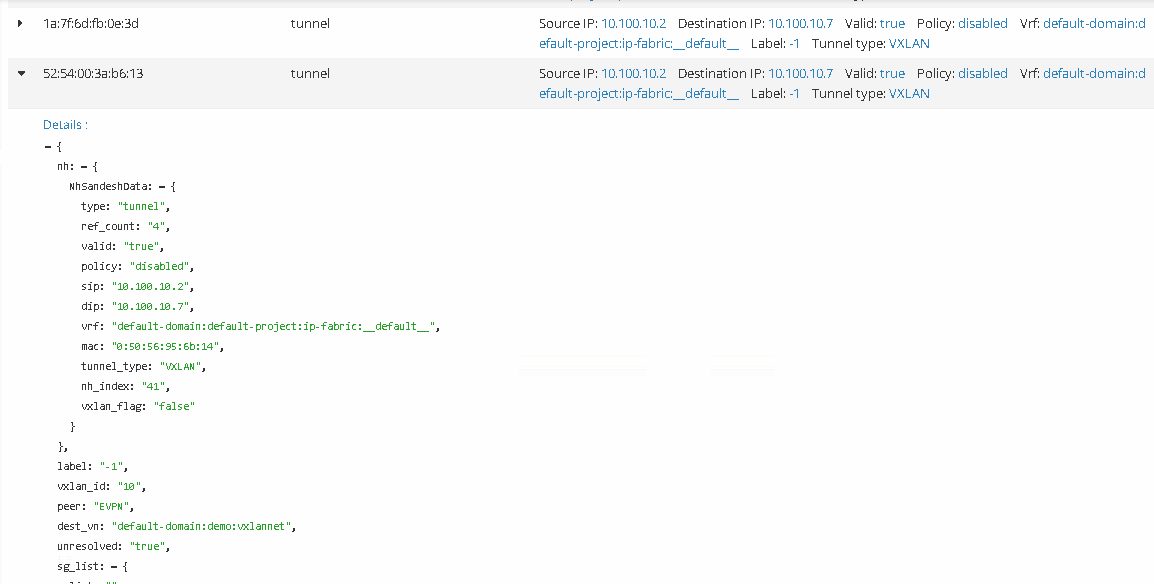

The following screen shows L2 routes at vRouter with VM4, where you can see all details about VxLAN tunnel.

Connect BMS to cloud

The last test use case is to connect another bare metal server, BMS02, through a physical NIC of the OVS server. In this case the OVS server acts as a true switch. Add the physical interface eth3 to the bridge on your OVS server.

root@ovs:~# ovs-vsctl add-port TOR1 eth3

root@ovs:~# ip a

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc mq state UP group default qlen 1000

link/ether 00:50:56:95:60:e8 brd ff:ff:ff:ff:ff:ff

inet 10.10.70.135/24 brd 10.10.70.255 scope global eth0

valid_lft forever preferred_lft forever

inet6 fe80::250:56ff:fe95:60e8/64 scope link

valid_lft forever preferred_lft forever

...

24: eth3: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc mq master ovs-system state UP group default qlen 1000

link/ether 00:50:56:95:e0:21 brd ff:ff:ff:ff:ff:ff

inet6 fe80::250:56ff:fe95:e021/64 scope link

valid_lft forever preferred_lft forever

Create physical and logical ports for eth3.

Check new ports in openvswitch.

root@ovs:~# ovs-vsctl show

93d90385-f9c6-4cfc-b67d-4f64eec15479

Bridge "TOR1"

...

Port "TOR1"

Interface "TOR1"

type: internal

Port "0000-eth3-p"

Interface "0000-eth3-p"

type: patch

options: {peer="0000-eth3-l"}

Port "eth3"

Interface "eth3"

...

Bridge "vtep_ls1"

Port "vx17"

Interface "vx17"

type: vxlan

options: {key="10", remote_ip="10.10.80.4"}

Port "vx4"

Interface "vx4"

type: vxlan

options: {key="10", remote_ip="10.100.10.2"}

Port "vx2"

Interface "vx2"

type: vxlan

options: {key="10", remote_ip="10.100.10.5"}

Port "0000-vnet0-l"

Interface "0000-vnet0-l"

type: patch

options: {peer="0000-vnet0-p"}

Port "0000-tortap1-l"

Interface "0000-tortap1-l"

type: patch

options: {peer="0000-tortap1-p"}

Port "vx5"

Interface "vx5"

type: vxlan

options: {key="10", remote_ip="10.100.10.6"}

Port "vx3"

Interface "vx3"

type: vxlan

options: {key="10", remote_ip="10.100.10.4"}

Port "vtep_ls1"

Interface "vtep_ls1"

type: internal

Port "0000-eth3-l"

Interface "0000-eth3-l"

type: patch

options: {peer="0000-eth3-p"}

As you can see it is possible to ping cloud instance from our bare metal server.

root@ovs2:~# ping 10.0.10.3

PING 10.0.10.3 (10.0.10.3) 56(84) bytes of data.

64 bytes from 10.0.10.3: icmp_seq=2 ttl=64 time=1.10 ms

64 bytes from 10.0.10.3: icmp_seq=3 ttl=64 time=0.387 ms

64 bytes from 10.0.10.3: icmp_seq=4 ttl=64 time=0.428 ms

64 bytes from 10.0.10.3: icmp_seq=5 ttl=64 time=0.378 ms

64 bytes from 10.0.10.3: icmp_seq=6 ttl=64 time=0.419 ms

64 bytes from 10.0.10.3: icmp_seq=7 ttl=64 time=0.382 ms

^C

--- 10.0.10.3 ping statistics ---

7 packets transmitted, 6 received, 14% packet loss, time 6005ms

rtt min/avg/max/mdev = 0.378/0.516/1.102/0.262 ms

Verification

OVS maintains network information in a database. To list the existing tables, use:

root@ovs:~# ovsdb-client list-tables unix:/var/run/openvswitch/db.sock hardware_vtep

Table

---------------------

Physical_Port

Physical_Locator_Set

Physical_Locator

Logical_Binding_Stats

Arp_Sources_Remote

Manager

Mcast_Macs_Local

Global

Ucast_Macs_Local

Logical_Switch

Physical_Switch

Ucast_Macs_Remote

Tunnel

Mcast_Macs_Remote

Logical_Router

Arp_Sources_Local
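Before querying individual tables, it can be handy to confirm that the ones we need are present. The sketch below is a hedged Python example, not something we ran in the lab: it parses the text printed by ovsdb-client list-tables, and the set USED_TABLES reflects our assumption about which hardware_vtep tables back the vtep-ctl commands used in the rest of this section. All function and variable names are illustrative.

```python
# Shortened copy of the list-tables output above.
TABLES_SAMPLE = '''
Table
---------------------
Physical_Port
Physical_Locator
Mcast_Macs_Local
Ucast_Macs_Local
Logical_Switch
Physical_Switch
Ucast_Macs_Remote
Mcast_Macs_Remote
'''

# Assumption: these are the tables the later vtep-ctl commands draw on.
USED_TABLES = {
    "Physical_Port",
    "Logical_Switch",
    "Ucast_Macs_Remote",
    "Mcast_Macs_Remote",
    "Ucast_Macs_Local",
    "Mcast_Macs_Local",
}


def parse_table_list(output):
    """Return the set of table names found in list-tables output."""
    names = set()
    for raw in output.splitlines():
        line = raw.strip()
        # Skip blank lines, the "Table" header and the dashed separator.
        if not line or line == "Table" or set(line) == {"-"}:
            continue
        names.add(line)
    return names


def missing_tables(output, required=USED_TABLES):
    """Return the required tables that do not appear in the output, sorted."""
    return sorted(set(required) - parse_table_list(output))


if __name__ == "__main__":
    print("missing tables:", missing_tables(TABLES_SAMPLE))
```

An empty result means every table in USED_TABLES was reported by the database.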

To view the content of these tables in a readable format, use the vtep-ctl list command with the table's name at the end. For example, the following lists the physical interfaces associated with OVS:

root@ovs:~# vtep-ctl list Physical_Port

_uuid : ac4a8bb8-bd11-47d3-a5ac-9828c5f68ffc

description : ""

name : "eth3"

port_fault_status : []

vlan_bindings : {0=4f016591-56ce-496f-996e-a93203061e07}

vlan_stats : {0=288fe0f7-d7b8-430a-beb4-0c0a2c536a9c}

_uuid : 41f87dae-6568-4fc9-97fc-46ec3d2fbfdd

description : ""

name : "vnet0"

port_fault_status : []

vlan_bindings : {0=4f016591-56ce-496f-996e-a93203061e07}

vlan_stats : {0=12d70df8-6448-4784-af0d-754f37847942}

_uuid : 82afe753-25f8-4127-839b-2c5c8f7948b2

description : ""

name : "tortap1"

port_fault_status : []

vlan_bindings : {0=4f016591-56ce-496f-996e-a93203061e07}

vlan_stats : {0=e68cc29d-72c3-4809-a013-32c91a119b11}
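The records printed by vtep-ctl list are simple "column : value" pairs, so they are easy to turn into dictionaries. Here is a minimal Python sketch under our own assumptions: the input is only the command output (no shell prompt), every record starts with its _uuid column, and the name parse_records is hypothetical.

```python
# Shortened copy of the Physical_Port records above.
PORTS_SAMPLE = '''
_uuid : ac4a8bb8-bd11-47d3-a5ac-9828c5f68ffc
description : ""
name : "eth3"
vlan_bindings : {0=4f016591-56ce-496f-996e-a93203061e07}

_uuid : 41f87dae-6568-4fc9-97fc-46ec3d2fbfdd
description : ""
name : "vnet0"
vlan_bindings : {0=4f016591-56ce-496f-996e-a93203061e07}

_uuid : 82afe753-25f8-4127-839b-2c5c8f7948b2
description : ""
name : "tortap1"
vlan_bindings : {0=4f016591-56ce-496f-996e-a93203061e07}
'''


def parse_records(output):
    """Return a list of dicts, one per record, keyed by column name."""
    records = []
    for raw in output.splitlines():
        if ":" not in raw:
            continue
        # Split on the first colon only, so values may contain colons.
        column, value = (part.strip() for part in raw.split(":", 1))
        if column == "_uuid":
            records.append({})
        if records:
            records[-1][column] = value.strip('"')
    return records


if __name__ == "__main__":
    for record in parse_records(PORTS_SAMPLE):
        print(record["name"], record["vlan_bindings"])
```

Run against the output above, this makes it easy to see that eth3, vnet0 and tortap1 all carry the same vlan_bindings value, that is, VLAN 0 on each port is bound to the same logical switch.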

To see the remote MAC addresses and their next-hop VTEPs, we first have to find out the name of our logical switch.

root@ovs:~# vtep-ctl list-ls

Contrail-c68a622b-9248-4535-bf04-4859012d7a2a

Then list the remote MACs:

root@ovs:~# vtep-ctl list-remote-macs Contrail-c68a622b-9248-4535-bf04-4859012d7a2a

ucast-mac-remote

02:30:84:c3:d1:13 -> vxlan_over_ipv4/10.100.10.2

02:e1:bb:af:65:11 -> vxlan_over_ipv4/10.100.10.4

02:fc:94:91:42:f2 -> vxlan_over_ipv4/10.100.10.5

40:a6:77:9a:b3:38 -> vxlan_over_ipv4/10.10.80.4

mcast-mac-remote

unknown-dst -> vxlan_over_ipv4/10.100.10.6

As we can see, traffic to unknown destinations (unknown-dst) is handled by the TOR agent. To list the local MACs, type:

root@ovs:~# vtep-ctl list-local-macs Contrail-c68a622b-9248-4535-bf04-4859012d7a2a

ucast-mac-local

1a:7f:6d:fb:0e:3d -> vxlan_over_ipv4/10.100.10.7

mcast-mac-local

unknown-dst -> vxlan_over_ipv4/10.100.10.7
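The MAC listings above share one layout: a section name followed by "MAC -> locator" lines. The following Python sketch is an illustration only, with hypothetical names (parse_mac_table, macs_by_vtep). It assumes the output of list-remote-macs or list-local-macs has been captured as text and that each MAC has a single locator, as in our lab.

```python
# Copy of the list-remote-macs output above.
REMOTE_SAMPLE = '''
ucast-mac-remote
02:30:84:c3:d1:13 -> vxlan_over_ipv4/10.100.10.2
02:e1:bb:af:65:11 -> vxlan_over_ipv4/10.100.10.4
02:fc:94:91:42:f2 -> vxlan_over_ipv4/10.100.10.5
40:a6:77:9a:b3:38 -> vxlan_over_ipv4/10.10.80.4
mcast-mac-remote
unknown-dst -> vxlan_over_ipv4/10.100.10.6
'''


def parse_mac_table(output):
    """Return {section: {mac_or_unknown_dst: locator_ip}}."""
    table = {}
    section = None
    for raw in output.splitlines():
        line = raw.strip()
        if not line:
            continue
        if "->" in line:
            if section is None:
                continue  # entry before any section header; ignore it
            mac, locator = (part.strip() for part in line.split("->", 1))
            # A locator looks like "vxlan_over_ipv4/10.100.10.2"; keep the IP.
            table[section][mac] = locator.rsplit("/", 1)[-1]
        else:
            section = line
            table[section] = {}
    return table


def macs_by_vtep(table):
    """Group all entries by locator IP: {ip: [mac, ...]}."""
    grouped = {}
    for entries in table.values():
        for mac, ip in entries.items():
            grouped.setdefault(ip, []).append(mac)
    return grouped


if __name__ == "__main__":
    for ip, macs in sorted(macs_by_vtep(parse_mac_table(REMOTE_SAMPLE)).items()):
        print(ip, macs)
```

Grouping by locator shows at a glance which VTEP each MAC address sits behind, and that unknown-dst points to a different address (10.100.10.6) than any of the unicast entries.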

Conclusion

We tested almost all scenarios for connecting bare-metal servers to overlay networks on different devices. We showed that OpenContrail is a working, open source, multi-vendor SDN solution that takes an OpenStack cloud to the next level and makes it suitable for large enterprises.

In future parts of this blog series, we would like to look at the High Availability setup of the TOR agent, which was added in Contrail 2.2. Our next post will focus on route gateways with functions like VxLAN to EVPN Stitching for L2 Extension, L3VPN, multi-vendor support and gateway redundancy.

Marek Celoud & Jakub Pavlik

tcp cloud engineers

Rostislav Safar

Arrow ECS network engineer

Resources

[ContrailToR] Using TOR Switches with OVSDB for Virtual Instance Support. https://techwiki.juniper.net/Documentation/Contrail/Contrail_Controller_Getting_Started_Guide/40_Extending_Contrail_to_Physical_Routers,_Bare_Metal_Servers,_Switches,_and_Interfaces/20_Using_TOR_Switches_with_OVSDB_for_Virtual_Instance_Support

[site] Juniper Contrail documentation. http://www.juniper.net/techpubs/en_US/contrail2.10/information-products/pathway-pages/getting-started-pwp-r2.10.html#installation

[vtep] How to Use the VTEP Emulator. https://github.com/openvswitch/ovs/blob/master/vtep/README.ovs-vtep.md

[ovscontrail] Setting up openvswitch VM for Contrail Baremetal. https://github.com/Juniper/contrail-test/wiki/Setting-up-an-openvswitch-VM-for-Contrail-Baremetal-tests

[vxlanovsdb] Enhancing VM mobility with VxLAN OVSDB. http://mcleonard.blogspot.cz/2013/12/enhancing-vm-mobility-with-vxlan-ovsdb.html

[ovslibvirt] Libvirt configuration with openvswitch. https://github.com/openvswitch/ovs/blob/master/INSTALL.Libvirt.md