How are Sentry system calls trapped into the host kernel?

From "How gvisor trap to syscall handler in kvm platform": “Note that the SYSCALL instruction (Wenbo: in sentry guest ring 0) works just fine from ring 0, it just doesn’t perform a ring switch since you’re already in ring 0 (guest). Yup, the syscall handler executes a HLT, which is the trigger to switch back to host mode. To see the host/guest transition internals take a look at bluepill() (switch to guest mode) and redpill() (switch to host mode) in platform/kvm. The control flow is a bit hard to follow. At a high level it goes: bluepill() -> execute CLI (allowed if already in guest mode, or …) -> SIGILL signal handler -> bluepillHandler() -> KVM_RUN with RIP @ CLI instruction -> execute CLI in guest mode, bluepill() returns”

kernel_main

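When reverse engineering the kernel part, there are two main things to focus on:

- The kernel address space, the user address space, and the page tables.

- The system call table.

First comes entry.s, at the very beginning. As the name suggests, this should be the kernel's startup code: it first fetches its arguments, then calls a function, and after that simply keeps executing hlt. That function is presumably kernel_main.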

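VM startup process

https://www.cnblogs.com/Bozh/p/5753379.html

Step 1: obtain the KVM handle.

kvmfd = open("/dev/kvm", O_RDWR);

Step 2: create the virtual machine and obtain its handle.

vmfd = ioctl(kvmfd, KVM_CREATE_VM, 0);

Step 3: map memory for the VM, and initialize the rest (PCI, signal handling). KVM_SET_USER_MEMORY_REGION is a VM ioctl, so it is issued on vmfd rather than kvmfd.

ioctl(vmfd, KVM_SET_USER_MEMORY_REGION, &mem);

Step 4: map the VM image into memory. This corresponds to the boot process on a physical machine, where the image is loaded into memory.

Step 5: create the vCPUs and allocate memory for them. KVM_CREATE_VCPU is also a VM ioctl; KVM_GET_VCPU_MMAP_SIZE is issued on the /dev/kvm handle.

ioctl(vmfd, KVM_CREATE_VCPU, vcpuid);

vcpu->kvm_run_mmap_size = ioctl(kvm->dev_fd, KVM_GET_VCPU_MMAP_SIZE, 0);

Step 6: create one thread per vCPU and run the VM.

ioctl(kvm->vcpus->vcpu_fd, KVM_RUN, 0);

Step 7: each thread enters a loop, catches the reason for every VM exit, and handles it accordingly.

An exit here does not necessarily mean the VM has shut down. The VM also exits when it performs I/O, accesses a hardware device, takes a page fault, and so on. An exit can be understood as handing the CPU execution context back to QEMU.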

====================

open("/dev/kvm")
ioctl(KVM_CREATE_VM)
ioctl(KVM_CREATE_VCPU)
for (;;) {
    ioctl(KVM_RUN)
    switch (exit_reason) {
    case KVM_EXIT_IO:  /* ... */
    case KVM_EXIT_HLT: /* ... */
    }
}

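A KVM API appetizer first

Below is a simple KVM demo whose goal is to load some code and run it under KVM.

The guest code is 8086 assembly in AT&T syntax; .code16 marks it as 16-bit code. It cannot be run directly, of course. To run it we use the API that KVM provides and treat the program as the simplest possible operating system.

The assembly writes the value of the al register to port 0x3f8. On x86, I/O ports are accessed with the IN/OUT instructions. The PC architecture has 65536 8-bit I/O ports in total, forming a 64 KiB I/O address space numbered 0 to 0xFFFF. Two consecutive 8-bit ports can form one 16-bit port, and four consecutive ones a 32-bit port. The I/O address space and the CPU's physical address space are two different concepts: the I/O address space is 64 KiB, for example, while the physical address space of a 32-bit CPU is 4 GiB.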

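The expected output of the program is shown below (al and bl are assigned later, during KVM initialization):

4\n

(the \n is not printed literally; it produces a line break). The hlt instruction makes the VM exit.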

.globl _start
    .code16
_start:
    mov $0x3f8, %dx
    add %bl, %al
    add $'0', %al
    out %al, (%dx)
    mov $'\n', %al
    out %al, (%dx)
    hlt

Assemble and link this to get a binary file, bin.bin:

as -32 bin.S -o bin.o

ld -m elf_i386 --oformat binary -N -e _start -Ttext 0x10000 -o bin.bin bin.o

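Take a look at the binary:

➜ demo1 hexdump -C bin.bin
00000000  ba f8 03 00 d8 04 30 ee  b0 0a ee f4              |......0.....|
0000000c

These bytes correspond to the code array below. Embedding the byte code directly means it no longer has to be loaded from a file.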

const uint8_t code[] = {
    0xba, 0xf8, 0x03, /* mov $0x3f8, %dx */
    0x00, 0xd8,       /* add %bl, %al */
    0x04, '0',        /* add $'0', %al */
    0xee,             /* out %al, (%dx) */
    0xb0, '\n',       /* mov $'\n', %al */
    0xee,             /* out %al, (%dx) */
    0xf4,             /* hlt */
};

#include <err.h>
#include <fcntl.h>
#include <linux/kvm.h>
#include <stdint.h>
#include <stdio.h>
#include <stdlib.h>
#include <string.h>
#include <sys/ioctl.h>
#include <sys/mman.h>
#include <sys/stat.h>
#include <sys/types.h>

int main(void)
{
    int kvm, vmfd, vcpufd, ret;
    const uint8_t code[] = {
        0xba, 0xf8, 0x03, /* mov $0x3f8, %dx */
        0x00, 0xd8,       /* add %bl, %al */
        0x04, '0',        /* add $'0', %al */
        0xee,             /* out %al, (%dx) */
        0xb0, '\n',       /* mov $'\n', %al */
        0xee,             /* out %al, (%dx) */
        0xf4,             /* hlt */
    };
    uint8_t *mem;
    struct kvm_sregs sregs;
    size_t mmap_size;
    struct kvm_run *run;

    // Obtain the KVM handle
    kvm = open("/dev/kvm", O_RDWR | O_CLOEXEC);
    if (kvm == -1)
        err(1, "/dev/kvm");

    // Make sure we have the expected API version
    ret = ioctl(kvm, KVM_GET_API_VERSION, NULL);
    if (ret == -1)
        err(1, "KVM_GET_API_VERSION");
    if (ret != 12)
        errx(1, "KVM_GET_API_VERSION %d, expected 12", ret);

    // Create a virtual machine
    vmfd = ioctl(kvm, KVM_CREATE_VM, (unsigned long)0);
    if (vmfd == -1)
        err(1, "KVM_CREATE_VM");

    // Allocate memory for the VM and load the code (the image) into it.
    // mmap reports failure with MAP_FAILED, not NULL.
    mem = mmap(NULL, 0x1000, PROT_READ | PROT_WRITE, MAP_SHARED | MAP_ANONYMOUS, -1, 0);
    if (mem == MAP_FAILED)
        err(1, "allocating guest memory");
    memcpy(mem, code, sizeof(code));

    // Place the page at guest physical address 0x1000 rather than 0, leaving
    // the first 4 KiB (where real mode keeps its interrupt vector table) unused
    struct kvm_userspace_memory_region region = {
        .slot = 0,
        .guest_phys_addr = 0x1000,
        .memory_size = 0x1000,
        .userspace_addr = (uint64_t)mem,
    };

    // Register the memory region with KVM
    ret = ioctl(vmfd, KVM_SET_USER_MEMORY_REGION, &region);
    if (ret == -1)
        err(1, "KVM_SET_USER_MEMORY_REGION");

    // Create a virtual CPU
    vcpufd = ioctl(vmfd, KVM_CREATE_VCPU, (unsigned long)0);
    if (vcpufd == -1)
        err(1, "KVM_CREATE_VCPU");

    // Get the size of the KVM run structure
    ret = ioctl(kvm, KVM_GET_VCPU_MMAP_SIZE, NULL);
    if (ret == -1)
        err(1, "KVM_GET_VCPU_MMAP_SIZE");
    mmap_size = ret;
    if (mmap_size < sizeof(*run))
        errx(1, "KVM_GET_VCPU_MMAP_SIZE unexpectedly small");

    // Map the kvm_run structure of this vCPU so we can read its runtime state
    run = mmap(NULL, mmap_size, PROT_READ | PROT_WRITE, MAP_SHARED, vcpufd, 0);
    if (run == MAP_FAILED)
        err(1, "mmap vcpu");

    // Fetch the special registers
    ret = ioctl(vcpufd, KVM_GET_SREGS, &sregs);
    if (ret == -1)
        err(1, "KVM_GET_SREGS");

    // Make the code segment start at address 0. With cs.base = 0, rip is a
    // guest physical address, and our code was loaded at 0x1000
    sregs.cs.base = 0;
    sregs.cs.selector = 0;

    // KVM_SET_SREGS writes the special registers back
    ret = ioctl(vcpufd, KVM_SET_SREGS, &sregs);
    if (ret == -1)
        err(1, "KVM_SET_SREGS");

    // Set the entry address, comparable to the address of main in a 32-bit
    // program; this 16-bit code starts at 0x1000.
    // For a real image, rip would point at something like the instructions
    // loaded from the boot sector
    struct kvm_regs regs = {
        .rip = 0x1000,
        .rax = 2,      // initial value of ax is 2
        .rbx = 2,      // likewise for bx
        .rflags = 0x2, // bit 1 of the flags register must be set on x86, otherwise entry fails
    };
    ret = ioctl(vcpufd, KVM_SET_REGS, &regs);
    if (ret == -1)
        err(1, "KVM_SET_REGS");

    // Run the VM. With qemu-kvm, a dedicated thread would execute this vCPU
    while (1) {
        // Enter the guest
        ret = ioctl(vcpufd, KVM_RUN, NULL);
        if (ret == -1)
            err(1, "KVM_RUN");

        // Look at why the VM exited
        switch (run->exit_reason) {
        case KVM_EXIT_HLT:
            puts("KVM_EXIT_HLT");
            return 0;
        // The guest executed an out instruction. Port I/O is not carried out
        // inside the guest; it causes a VM exit to the host, and on that switch
        // the guest context is saved in the VMCS (covered later under CPU
        // virtualization). KVM places the byte written by out in the shared
        // kvm_run mapping at io.data_offset, so the host can read the guest's
        // output directly. This is also a basis for the PCI device
        // virtualization discussed later (PCI devices in DMA mode)
        case KVM_EXIT_IO:
            if (run->io.direction == KVM_EXIT_IO_OUT && run->io.size == 1 &&
                run->io.port == 0x3f8 && run->io.count == 1)
                putchar(*(((char *)run) + run->io.data_offset));
            else
                errx(1, "unhandled KVM_EXIT_IO");
            break;
        case KVM_EXIT_FAIL_ENTRY:
            errx(1, "KVM_EXIT_FAIL_ENTRY: hardware_entry_failure_reason = 0x%llx",
                 (unsigned long long)run->fail_entry.hardware_entry_failure_reason);
        case KVM_EXIT_INTERNAL_ERROR:
            errx(1, "KVM_EXIT_INTERNAL_ERROR: suberror = 0x%x", run->internal.suberror);
        default:
            errx(1, "exit_reason = 0x%x", run->exit_reason);
        }
    }
}

Compile and run the demo:

gcc -g demo.c -o demo
➜ demo1 ./demo
4
KVM_EXIT_HLT

/dev/kvm

// OpenDevice opens the KVM device at /dev/kvm and returns the File.
func OpenDevice() (*os.File, error) {
	f, err := os.OpenFile("/dev/kvm", syscall.O_RDWR, 0)
	if err != nil {
		return nil, fmt.Errorf("error opening /dev/kvm: %v", err)
	}
	return f, nil
}

type constructor struct{}

func (*constructor) New(f *os.File) (platform.Platform, error) {
	return New(f)
}

func (*constructor) OpenDevice() (*os.File, error) {
	return OpenDevice()
}

Creating a vCPU

int vcpu_fd = ioctl(vm_fd, KVM_CREATE_VCPU, 0);

struct kvm_sregs sregs;
ioctl(vcpu_fd, KVM_GET_SREGS, &sregs);
// Initialize selector and base with zeros
sregs.cs.selector = sregs.cs.base = sregs.ss.selector = sregs.ss.base =
    sregs.ds.selector = sregs.ds.base = sregs.es.selector = sregs.es.base =
    sregs.fs.selector = sregs.fs.base = sregs.gs.selector = 0;
// Save special registers
ioctl(vcpu_fd, KVM_SET_SREGS, &sregs);

// Initialize and save normal registers (zeroed first so that no stack
// garbage is handed to KVM_SET_REGS)
struct kvm_regs regs = {0};
regs.rflags = 2; // bit 1 must always be set to 1 in EFLAGS and RFLAGS
regs.rip = 0;    // our code runs from address 0
ioctl(vcpu_fd, KVM_SET_REGS, &regs);

int runsz = ioctl(kvm_fd, KVM_GET_VCPU_MMAP_SIZE, 0);
struct kvm_run *run = (struct kvm_run *) mmap(NULL, runsz, PROT_READ | PROT_WRITE, MAP_SHARED, vcpu_fd, 0);

for (;;) {
    ioctl(vcpu_fd, KVM_RUN, 0);
    switch (run->exit_reason) {
    case KVM_EXIT_IO:
        printf("IO port: %x, data: %x\n", run->io.port,
               *(int *)((char *)(run) + run->io.data_offset));
        break;
    case KVM_EXIT_SHUTDOWN:
        return;
    }
}

Setting registers: KVM_SET_SREGS

//
//go:nosplit
func (c *vCPU) setUserRegisters(uregs *userRegs) syscall.Errno {
	if _, _, errno := syscall.RawSyscall(
		syscall.SYS_IOCTL,
		uintptr(c.fd),
		_KVM_SET_REGS,
		uintptr(unsafe.Pointer(uregs))); errno != 0 {
		return errno
	}
	return 0
}

// setSystemRegisters sets system registers.
func (c *vCPU) setSystemRegisters(sregs *systemRegs) error {
	if _, _, errno := syscall.RawSyscall(
		syscall.SYS_IOCTL,
		uintptr(c.fd),
		_KVM_SET_SREGS,
		uintptr(unsafe.Pointer(sregs))); errno != 0 {
		return fmt.Errorf("error setting system registers: %v", errno)
	}
	return nil
}

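// Set the entry address, comparable to the address of main in a 32-bit
// program; this 16-bit code starts at 0x1000.
// For a real image, rip would point at something like the instructions
// loaded from the boot sector
struct kvm_regs regs = {
    .rip = 0x1000,
    .rax = 2,      // initial value of ax is 2
    .rbx = 2,      // likewise for bx
    .rflags = 0x2, // bit 1 of the flags register must be set on x86, otherwise entry fails
};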

https://www.cnblogs.com/Bozh/p/5753379.html

// Set base control registers.
kernelSystemRegs.CR0 = c.CR0()
kernelSystemRegs.CR4 = c.CR4()
kernelSystemRegs.EFER = c.EFER()

// Set the IDT & GDT in the registers.
kernelSystemRegs.IDT.base, kernelSystemRegs.IDT.limit = c.IDT()
kernelSystemRegs.GDT.base, kernelSystemRegs.GDT.limit = c.GDT()
kernelSystemRegs.CS.Load(&ring0.KernelCodeSegment, ring0.Kcode)
kernelSystemRegs.DS.Load(&ring0.UserDataSegment, ring0.Udata)
kernelSystemRegs.ES.Load(&ring0.UserDataSegment, ring0.Udata)
kernelSystemRegs.SS.Load(&ring0.KernelDataSegment, ring0.Kdata)
kernelSystemRegs.FS.Load(&ring0.UserDataSegment, ring0.Udata)
kernelSystemRegs.GS.Load(&ring0.UserDataSegment, ring0.Udata)
tssBase, tssLimit, tss := c.TSS()
kernelSystemRegs.TR.Load(tss, ring0.Tss)
kernelSystemRegs.TR.base = tssBase
kernelSystemRegs.TR.limit = uint32(tssLimit)

// Point to kernel page tables, with no initial PCID.
kernelSystemRegs.CR3 = c.machine.kernel.PageTables.CR3(false, 0)

// Initialize the PCID database.
if hasGuestPCID {
	// Note that NewPCIDs may return a nil table here, in which
	// case we simply don't use PCID support (see below). In
	// practice, this should not happen, however.
	c.PCIDs = pagetables.NewPCIDs(fixedKernelPCID+1, poolPCIDs)
}

// Set the CPUID; this is required before setting system registers,
// since KVM will reject several CR4 bits if the CPUID does not
// indicate the support is available.
if err := c.setCPUID(); err != nil {
	return err
}

// Set the entrypoint for the kernel.
kernelUserRegs.RIP = uint64(reflect.ValueOf(ring0.Start).Pointer())
kernelUserRegs.RAX = uint64(reflect.ValueOf(&c.CPU).Pointer())
kernelUserRegs.RSP = c.StackTop()
kernelUserRegs.RFLAGS = ring0.KernelFlagsSet

// Set the system registers.
if err := c.setSystemRegisters(&kernelSystemRegs); err != nil {
	return err
}

// Set the user registers.
if errno := c.setUserRegisters(&kernelUserRegs); errno != 0 {
	return fmt.Errorf("error setting user registers: %v", errno)
}

VM* kvm_init(uint8_t code[], size_t len) {
    // Obtain the KVM handle
    int kvmfd = open("/dev/kvm", O_RDONLY | O_CLOEXEC);
    if(kvmfd < 0) pexit("open(/dev/kvm)");

    // Check the KVM API version
    int api_ver = ioctl(kvmfd, KVM_GET_API_VERSION, 0);
    if(api_ver < 0) pexit("KVM_GET_API_VERSION");
    if(api_ver != KVM_API_VERSION) {
        error("Got KVM api version %d, expected %d\n", api_ver, KVM_API_VERSION);
    }

    // Create the KVM virtual machine
    int vmfd = ioctl(kvmfd, KVM_CREATE_VM, 0);
    if(vmfd < 0) pexit("ioctl(KVM_CREATE_VM)");

    // Allocate memory for the VM (mmap reports failure with MAP_FAILED, not NULL)
    void *mem = mmap(0, MEM_SIZE, PROT_READ | PROT_WRITE, MAP_SHARED | MAP_ANONYMOUS, -1, 0);
    if(mem == MAP_FAILED) pexit("mmap(MEM_SIZE)");
    size_t entry = 0;

    // Copy the user-supplied code into guest memory
    memcpy((uint8_t*) mem + entry, code, len);

    // Describe the memory region
    struct kvm_userspace_memory_region region = {
        .slot = 0,
        .flags = 0,
        .guest_phys_addr = 0,
        .memory_size = MEM_SIZE,
        .userspace_addr = (size_t) mem
    };

    // Register the memory region with KVM
    if(ioctl(vmfd, KVM_SET_USER_MEMORY_REGION, &region) < 0) {
        pexit("ioctl(KVM_SET_USER_MEMORY_REGION)");
    }

    // Create the vCPU
    int vcpufd = ioctl(vmfd, KVM_CREATE_VCPU, 0);
    if(vcpufd < 0) pexit("ioctl(KVM_CREATE_VCPU)");

    // Get the size of the KVM run structure
    size_t vcpu_mmap_size = ioctl(kvmfd, KVM_GET_VCPU_MMAP_SIZE, NULL);

    // Map the kvm_run structure of this vCPU so we can read its runtime state
    struct kvm_run *run = (struct kvm_run*) mmap(0, vcpu_mmap_size, PROT_READ | PROT_WRITE, MAP_SHARED, vcpufd, 0);

    // Fill in the VM structure
    VM *vm = (VM*) malloc(sizeof(VM));
    *vm = (struct VM){
        .mem = mem,
        .mem_size = MEM_SIZE,
        .vcpufd = vcpufd,
        .run = run
    };

    // Set up the general-purpose registers
    setup_regs(vm, entry);
    // Set up segmentation and paging (long mode)
    setup_long_mode(vm);

    return vm;
}

/* set rip = entry point
 * set rsp = MAX_KERNEL_SIZE + KERNEL_STACK_SIZE (the max address that can be used)
 *
 * set rdi = PS_LIMIT (start of free (unpaged) physical pages)
 * set rsi = MEM_SIZE - rdi (total length of free pages)
 * Kernel could use rdi and rsi to initialize its memory allocator.
 */
void setup_regs(VM *vm, size_t entry) {
    struct kvm_regs regs;

    // KVM_GET_REGS: fetch the general-purpose registers
    if(ioctl(vm->vcpufd, KVM_GET_REGS, &regs) < 0) pexit("ioctl(KVM_GET_REGS)");

    // Initialize the registers
    regs.rip = entry;                               // where execution starts
    regs.rsp = MAX_KERNEL_SIZE + KERNEL_STACK_SIZE; /* temporary stack */
    regs.rdi = PS_LIMIT;                            /* start of free pages */
    regs.rsi = MEM_SIZE - regs.rdi;                 /* total length of free pages */
    regs.rflags = 0x2;

    // KVM_SET_REGS: write the general-purpose registers back
    if(ioctl(vm->vcpufd, KVM_SET_REGS, &regs) < 0) pexit("ioctl(KVM_SET_REGS)");
}

gvisor+ kvm_run + kvm_thread

// Precondition: mu must be held.
func (m *machine) newVCPU() *vCPU {
	// Create the vCPU.
	id := int(atomic.AddUint32(&m.nextID, 1) - 1)
	fd, _, errno := syscall.RawSyscall(syscall.SYS_IOCTL, uintptr(m.fd), _KVM_CREATE_VCPU, uintptr(id))
}

func bluepillHandler(context unsafe.Pointer) {
	// Sanitize the registers; interrupts must always be disabled.
	c := bluepillArchEnter(bluepillArchContext(context))

	// Mark this as guest mode.
	switch atomic.SwapUint32(&c.state, vCPUGuest|vCPUUser) {
	case vCPUUser: // Expected case.
	case vCPUUser | vCPUWaiter:
		c.notify()
	default:
		throw("invalid state")
	}

	for {
		_, _, errno := syscall.RawSyscall(syscall.SYS_IOCTL, uintptr(c.fd), _KVM_RUN, 0) // escapes: no.
		switch errno {
		}
	}
}

//go:nosplit
func bluepillHandler(context unsafe.Pointer) {
	// Sanitize the registers; interrupts must always be disabled.
	c := bluepillArchEnter(bluepillArchContext(context))

	// Mark this as guest mode.
	switch atomic.SwapUint32(&c.state, vCPUGuest|vCPUUser) {
	case vCPUUser: // Expected case.
	case vCPUUser | vCPUWaiter:
		c.notify()
	default:
		throw("invalid state")
	}

	for {
		_, _, errno := syscall.RawSyscall(syscall.SYS_IOCTL, uintptr(c.fd), _KVM_RUN, 0) // escapes: no.
		switch errno {
		case 0: // Expected case.
		case syscall.EINTR:
			// First, we process whatever pending signal
			// interrupted KVM. Since we're in a signal handler
			// currently, all signals are masked and the signal
			// must have been delivered directly to this thread.
			timeout := syscall.Timespec{}
			sig, _, errno := syscall.RawSyscall6( // escapes: no.
				syscall.SYS_RT_SIGTIMEDWAIT,
				uintptr(unsafe.Pointer(&bounceSignalMask)),
				0,                                 // siginfo.
				uintptr(unsafe.Pointer(&timeout)), // timeout.
				8,                                 // sigset size.
				0, 0)
			if errno == syscall.EAGAIN {
				continue
			}
			if errno != 0 {
				throw("error waiting for pending signal")
			}
			if sig != uintptr(bounceSignal) {
				throw("unexpected signal")
			}

			// Check whether the current state of the vCPU is ready
			// for interrupt injection. Because we don't have a
			// PIC, we can't inject an interrupt while they are
			// masked. We need to request a window if it's not
			// ready.
			if bluepillReadyStopGuest(c) {
				// Force injection below; the vCPU is ready.
				c.runData.exitReason = _KVM_EXIT_IRQ_WINDOW_OPEN
			} else {
				c.runData.requestInterruptWindow = 1
				continue // Rerun vCPU.
			}
		case syscall.EFAULT:
			// If a fault is not serviceable due to the host
			// backing pages having page permissions, instead of an
			// MMIO exit we receive EFAULT from the run ioctl. We
			// always inject an NMI here since we may be in kernel
			// mode and have interrupts disabled.
			bluepillSigBus(c)
			continue // Rerun vCPU.
		case syscall.ENOSYS:
			bluepillHandleEnosys(c)
			continue
		default:
			throw("run failed")
		}

		switch c.runData.exitReason {
		case _KVM_EXIT_EXCEPTION:
			c.die(bluepillArchContext(context), "exception")
			return
		case _KVM_EXIT_IO:
			c.die(bluepillArchContext(context), "I/O")
			return
		case _KVM_EXIT_INTERNAL_ERROR:
			// An internal error is typically thrown when emulation
			// fails. This can occur via the MMIO path below (and
			// it might fail because we have multiple regions that
			// are not mapped). We would actually prefer that no
			// emulation occur, and don't mind at all if it fails.
		case _KVM_EXIT_HYPERCALL:
			c.die(bluepillArchContext(context), "hypercall")
			return
		case _KVM_EXIT_DEBUG:
			c.die(bluepillArchContext(context), "debug")
			return
		case _KVM_EXIT_HLT:
			bluepillGuestExit(c, context)
			return
		case _KVM_EXIT_MMIO:
			physical := uintptr(c.runData.data[0])
			if getHypercallID(physical) == _KVM_HYPERCALL_VMEXIT {
				bluepillGuestExit(c, context)
				return
			}

			// Increment the fault count.
			atomic.AddUint32(&c.faults, 1)

			// For MMIO, the physical address is the first data item.
			physical = uintptr(c.runData.data[0])
			virtual, ok := handleBluepillFault(c.machine, physical, physicalRegions, _KVM_MEM_FLAGS_NONE)
			if !ok {
				c.die(bluepillArchContext(context), "invalid physical address")
				return
			}

			// We now need to fill in the data appropriately. KVM
			// expects us to provide the result of the given MMIO
			// operation in the runData struct. This is safe
			// because, if a fault occurs here, the same fault
			// would have occurred in guest mode. The kernel should
			// not create invalid page table mappings.
			data := (*[8]byte)(unsafe.Pointer(&c.runData.data[1]))
			length := (uintptr)((uint32)(c.runData.data[2]))
			write := (uint8)(((c.runData.data[2] >> 32) & 0xff)) != 0
			for i := uintptr(0); i < length; i++ {
				b := bytePtr(uintptr(virtual) + i)
				if write {
					// Write to the given address.
					*b = data[i]
				} else {
					// Read from the given address.
					data[i] = *b
				}
			}
		case _KVM_EXIT_IRQ_WINDOW_OPEN:
			bluepillStopGuest(c)
		case _KVM_EXIT_SHUTDOWN:
			c.die(bluepillArchContext(context), "shutdown")
			return
		case _KVM_EXIT_FAIL_ENTRY:
			c.die(bluepillArchContext(context), "entry failed")
			return
		default:
			bluepillArchHandleExit(c, context)
			return
		}
	}
}

gdb kvm_run


root@cloud:/mycontainer# dlv attach 928771
Type 'help' for list of commands.
(dlv) b bluepillHandler
Breakpoint 1 set at 0x87b300 for gvisor.dev/gvisor/pkg/sentry/platform/kvm.bluepillHandler() pkg/sentry/platform/kvm/bluepill_unsafe.go:91
(dlv) c
> gvisor.dev/gvisor/pkg/sentry/platform/kvm.bluepillHandler() pkg/sentry/platform/kvm/bluepill_unsafe.go:91 (hits goroutine(276):1 total:1) (PC: 0x87b300)
Warning: debugging optimized function
(dlv) bt
0  0x000000000087b300 in gvisor.dev/gvisor/pkg/sentry/platform/kvm.bluepillHandler
   at pkg/sentry/platform/kvm/bluepill_unsafe.go:91
1  0x0000000000881bec in ???
   at ?:-1
2  0x0000ffff82bfe598 in ???
   at ?:-1
3  0x000000000087f514 in gvisor.dev/gvisor/pkg/sentry/platform/kvm.(*vCPU).SwitchToUser
   at pkg/sentry/platform/kvm/machine_arm64_unsafe.go:249
4  0x00000000009e5f89 in ???
   at ?:-1
5  0x000000000087bb1c in gvisor.dev/gvisor/pkg/sentry/platform/kvm.(*context).Switch
   at pkg/sentry/platform/kvm/context.go:75
6  0x00000040005fc000 in ???
   at ?:-1
7  0x0000000000517d9c in gvisor.dev/gvisor/pkg/sentry/kernel.(*Task).run
   at pkg/sentry/kernel/task_run.go:97
8  0x00000040007a73f0 in ???
   at ?:-1
9  0x0000000000077c84 in runtime.goexit
   at src/runtime/asm_arm64.s:1136
error: Undefined return address at 0x77c84
(truncated)

KVM_SET_USER_MEMORY_REGION

// setMemoryRegion initializes a region.
//
// This may be called from bluepillHandler, and therefore returns an errno
// directly (instead of wrapping in an error) to avoid allocations.
//
//go:nosplit
func (m *machine) setMemoryRegion(slot int, physical, length, virtual uintptr, flags uint32) syscall.Errno {
	userRegion := userMemoryRegion{
		slot:          uint32(slot),
		flags:         uint32(flags),
		guestPhysAddr: uint64(physical),
		memorySize:    uint64(length),
		userspaceAddr: uint64(virtual),
	}

	// Set the region.
	_, _, errno := syscall.RawSyscall(
		syscall.SYS_IOCTL,
		uintptr(m.fd),
		_KVM_SET_USER_MEMORY_REGION,
		uintptr(unsafe.Pointer(&userRegion)))
	return errno
}

gdb KVM_SET_USER_MEMORY_REGION

root@cloud:~# docker exec -it test ping 8.8.8.8
PING 8.8.8.8 (8.8.8.8): 56 data bytes
64 bytes from 8.8.8.8: seq=0 ttl=42 time=71.790 ms
(the first ping triggers several handleBluepillFault calls)
64 bytes from 8.8.8.8: seq=1 ttl=42 time=52.753 ms
64 bytes from 8.8.8.8: seq=2 ttl=42 time=72.294 ms
64 bytes from 8.8.8.8: seq=3 ttl=42 time=46.929 ms
64 bytes from 8.8.8.8: seq=4 ttl=42 time=48.804 ms
64 bytes from 8.8.8.8: seq=5 ttl=42 time=56.042 ms
64 bytes from 8.8.8.8: seq=6 ttl=42 time=46.749 ms
64 bytes from 8.8.8.8: seq=7 ttl=42 time=46.641 ms
64 bytes from 8.8.8.8: seq=8 ttl=42 time=71.048 ms
64 bytes from 8.8.8.8: seq=9 ttl=42 time=46.810 ms
64 bytes from 8.8.8.8: seq=10 ttl=42 time=54.809 ms
64 bytes from 8.8.8.8: seq=11 ttl=42 time=54.142 ms
64 bytes from 8.8.8.8: seq=12 ttl=42 time=55.836 ms
64 bytes from 8.8.8.8: seq=13 ttl=42 time=54.597 ms
64 bytes from 8.8.8.8: seq=14 ttl=42 time=54.257 ms
64 bytes from 8.8.8.8: seq=15 ttl=42 time=51.381 ms
64 bytes from 8.8.8.8: seq=16 ttl=42 time=68.398 ms
64 bytes from 8.8.8.8: seq=17 ttl=42 time=55.201 ms
64 bytes from 8.8.8.8: seq=18 ttl=42 time=51.468 ms
64 bytes from 8.8.8.8: seq=19 ttl=42 time=58.932 ms
64 bytes from 8.8.8.8: seq=20 ttl=42 time=56.083 ms
64 bytes from 8.8.8.8: seq=21 ttl=42 time=55.778 ms
64 bytes from 8.8.8.8: seq=22 ttl=42 time=46.736 ms
64 bytes from 8.8.8.8: seq=23 ttl=42 time=52.156 ms
64 bytes from 8.8.8.8: seq=24 ttl=42 time=67.973 ms
64 bytes from 8.8.8.8: seq=25 ttl=42 time=75.482 ms
64 bytes from 8.8.8.8: seq=26 ttl=42 time=83.042 ms
64 bytes from 8.8.8.8: seq=27 ttl=42 time=55.989 ms
64 bytes from 8.8.8.8: seq=28 ttl=42 time=92.035 ms
64 bytes from 8.8.8.8: seq=29 ttl=42 time=55.596 ms
64 bytes from 8.8.8.8: seq=30 ttl=42 time=55.749 ms
^C
--- 8.8.8.8 ping statistics ---
31 packets transmitted, 31 packets received, 0% packet loss
round-trip min/avg/max = 46.641/58.564/92.035 ms

root@cloud:~# dlv attach 930795
Type 'help' for list of commands.
(dlv) b setMemoryRegion
Breakpoint 1 set at 0x87f9b0 for gvisor.dev/gvisor/pkg/sentry/platform/kvm.(*machine).setMemoryRegion() pkg/sentry/platform/kvm/machine_unsafe.go:44
(dlv) c
> gvisor.dev/gvisor/pkg/sentry/platform/kvm.(*machine).setMemoryRegion() pkg/sentry/platform/kvm/machine_unsafe.go:44 (hits goroutine(322):1 total:1) (PC: 0x87f9b0)
Warning: debugging optimized function
(dlv) bt
0  0x000000000087f9b0 in gvisor.dev/gvisor/pkg/sentry/platform/kvm.(*machine).setMemoryRegion
   at pkg/sentry/platform/kvm/machine_unsafe.go:44
1  0x000000000087b0b8 in gvisor.dev/gvisor/pkg/sentry/platform/kvm.handleBluepillFault
   at pkg/sentry/platform/kvm/bluepill_fault.go:99
2  0x000000000087d3e0 in gvisor.dev/gvisor/pkg/sentry/platform/kvm.(*machine).mapPhysical
   at pkg/sentry/platform/kvm/machine.go:323
3  0x0000000000879a7c in gvisor.dev/gvisor/pkg/sentry/platform/kvm.(*addressSpace).mapLocked
   at pkg/sentry/platform/kvm/address_space.go:135
4  0x0000000000879d94 in gvisor.dev/gvisor/pkg/sentry/platform/kvm.(*addressSpace).MapFile
   at pkg/sentry/platform/kvm/address_space.go:199
5  0x00000000003cfc08 in gvisor.dev/gvisor/pkg/sentry/mm.(*MemoryManager).mapASLocked
   at pkg/sentry/mm/address_space.go:209
6  0x00000000003edb80 in gvisor.dev/gvisor/pkg/sentry/mm.(*MemoryManager).HandleUserFault
   at pkg/sentry/mm/syscalls.go:69
7  0x00000000005189b0 in gvisor.dev/gvisor/pkg/sentry/kernel.(*runApp).execute
   at pkg/sentry/kernel/task_run.go:311
8  0x0000000000517d9c in gvisor.dev/gvisor/pkg/sentry/kernel.(*Task).run
   at pkg/sentry/kernel/task_run.go:97
9  0x0000000000077c84 in runtime.goexit
   at src/runtime/asm_arm64.s:1136
(dlv) c
> gvisor.dev/gvisor/pkg/sentry/platform/kvm.(*machine).setMemoryRegion() pkg/sentry/platform/kvm/machine_unsafe.go:44 (hits goroutine(322):2 total:2) (PC: 0x87f9b0)
Warning: debugging optimized function
(dlv) bt
0  0x000000000087f9b0 in gvisor.dev/gvisor/pkg/sentry/platform/kvm.(*machine).setMemoryRegion
   at pkg/sentry/platform/kvm/machine_unsafe.go:44
1  0x000000000087b0b8 in gvisor.dev/gvisor/pkg/sentry/platform/kvm.handleBluepillFault
   at pkg/sentry/platform/kvm/bluepill_fault.go:99
2  0x000000000087d3e0 in gvisor.dev/gvisor/pkg/sentry/platform/kvm.(*machine).mapPhysical
   at pkg/sentry/platform/kvm/machine.go:323
3  0x0000000000879a7c in gvisor.dev/gvisor/pkg/sentry/platform/kvm.(*addressSpace).mapLocked
   at pkg/sentry/platform/kvm/address_space.go:135
4  0x0000000000879d94 in gvisor.dev/gvisor/pkg/sentry/platform/kvm.(*addressSpace).MapFile
   at pkg/sentry/platform/kvm/address_space.go:199
5  0x00000000003cfc08 in gvisor.dev/gvisor/pkg/sentry/mm.(*MemoryManager).mapASLocked
   at pkg/sentry/mm/address_space.go:209
6  0x00000000003edb80 in gvisor.dev/gvisor/pkg/sentry/mm.(*MemoryManager).HandleUserFault
   at pkg/sentry/mm/syscalls.go:69
7  0x00000000005189b0 in gvisor.dev/gvisor/pkg/sentry/kernel.(*runApp).execute
   at pkg/sentry/kernel/task_run.go:311
8  0x0000000000517d9c in gvisor.dev/gvisor/pkg/sentry/kernel.(*Task).run
   at pkg/sentry/kernel/task_run.go:97
9  0x0000000000077c84 in runtime.goexit
   at src/runtime/asm_arm64.s:1136
(dlv) c
> gvisor.dev/gvisor/pkg/sentry/platform/kvm.(*machine).setMemoryRegion() pkg/sentry/platform/kvm/machine_unsafe.go:44 (hits goroutine(322):3 total:3) (PC: 0x87f9b0)
Warning: debugging optimized function
(dlv) c
> gvisor.dev/gvisor/pkg/sentry/platform/kvm.(*machine).setMemoryRegion() pkg/sentry/platform/kvm/machine_unsafe.go:44 (hits goroutine(322):4 total:4) (PC: 0x87f9b0)
Warning: debugging optimized function
(dlv) c
> gvisor.dev/gvisor/pkg/sentry/platform/kvm.(*machine).setMemoryRegion() pkg/sentry/platform/kvm/machine_unsafe.go:44 (hits goroutine(322):5 total:5) (PC: 0x87f9b0)
Warning: debugging optimized function
(dlv) c
> gvisor.dev/gvisor/pkg/sentry/platform/kvm.(*machine).setMemoryRegion() pkg/sentry/platform/kvm/machine_unsafe.go:44 (hits goroutine(322):6 total:6) (PC: 0x87f9b0)
Warning: debugging optimized function
(dlv) c
> gvisor.dev/gvisor/pkg/sentry/platform/kvm.(*machine).setMemoryRegion() pkg/sentry/platform/kvm/machine_unsafe.go:44 (hits goroutine(322):7 total:7) (PC: 0x87f9b0)
Warning: debugging optimized function
(dlv) c
> gvisor.dev/gvisor/pkg/sentry/platform/kvm.(*machine).setMemoryRegion() pkg/sentry/platform/kvm/machine_unsafe.go:44 (hits goroutine(322):8 total:8) (PC: 0x87f9b0)
Warning: debugging optimized function
(dlv) c
> gvisor.dev/gvisor/pkg/sentry/platform/kvm.(*machine).setMemoryRegion() pkg/sentry/platform/kvm/machine_unsafe.go:44 (hits goroutine(322):9 total:9) (PC: 0x87f9b0)
Warning: debugging optimized function
(dlv) c
> gvisor.dev/gvisor/pkg/sentry/platform/kvm.(*machine).setMemoryRegion() pkg/sentry/platform/kvm/machine_unsafe.go:44 (hits goroutine(322):10 total:10) (PC: 0x87f9b0)
Warning: debugging optimized function
(dlv) c
received SIGINT, stopping process (will not forward signal)
> syscall.Syscall6() src/syscall/asm_linux_arm64.s:43 (PC: 0x8dccc)
Warning: debugging optimized function
(dlv) quit
Would you like to kill the process? [Y/n] n

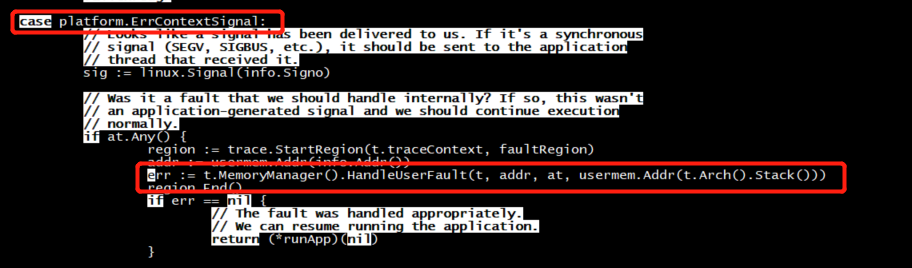

HandleUserFault platform.ErrContextSignal

// sighandler: see bluepill.go for documentation.
//
// The arguments are the following:
//
// 	DI - The signal number.
// 	SI - Pointer to siginfo_t structure.
// 	DX - Pointer to ucontext structure.
//
TEXT ·sighandler(SB),NOSPLIT,$0
	// Check if the signal is from the kernel.
	MOVQ $0x80, CX
	CMPL CX, 0x8(SI)
	JNE fallback

	// Check if RIP is disable interrupts.
	MOVQ CONTEXT_RIP(DX), CX
	CMPQ CX, $0x0
	JE fallback
	CMPB 0(CX), CLI
	JNE fallback

	// Call the bluepillHandler.
	PUSHQ DX                    // First argument (context).
	CALL ·bluepillHandler(SB)   // Call the handler.
	POPQ DX                     // Discard the argument.
	RET

fallback:
	// Jump to the previous signal handler.
	XORQ CX, CX
	MOVQ ·savedHandler(SB), AX
	JMP AX

runtime.sigreturn

goroutine 1 [running, locked to thread]:
runtime.throw(0xcef9f0, 0xa)
	GOROOT/src/runtime/panic.go:617 +0x72 fp=0xc000009930 sp=0xc000009900 pc=0x42d342
gvisor.googlesource.com/gvisor/pkg/sentry/platform/kvm.bluepillHandler(0xc0000099c0)
	pkg/sentry/platform/kvm/bluepill_unsafe.go:118 +0x47d fp=0xc0000099b0 sp=0xc000009930 pc=0xa0f47d
gvisor.googlesource.com/gvisor/pkg/sentry/platform/kvm.sighandler(0x7, 0x0, 0xc000002000, 0x0, 0x8000, 0x20, 0xc000038a70, 0x2, 0xc000038bd0, 0x0, ...)
	pkg/sentry/platform/kvm/bluepill_amd64.s:79 +0x24 fp=0xc0000099c0 sp=0xc0000099b0 pc=0xa16184
runtime: unexpected return pc for runtime.sigreturn called from 0x7
stack: frame={sp:0xc0000099c0, fp:0xc0000099c8} stack=[0xc0001f6000,0xc0001fe000)
runtime.sigreturn(0x0, 0xc000002000, 0x0, 0x8000, 0x20, 0xc000038a70, 0x2, 0xc000038bd0, 0x0, 0xff, ...)
	bazel-out/k8-fastbuild/bin/external/io_bazel_rules_go/linux_amd64_pure_stripped/stdlib%/src/runtime/sys_linux_amd64.s:449 fp=0xc0000099c8 sp=0xc0000099c0 pc=0x45cc70

func init() {
	// Install the handler.
	if err := safecopy.ReplaceSignalHandler(bluepillSignal, reflect.ValueOf(sighandler).Pointer(), &savedHandler); err != nil {
		panic(fmt.Sprintf("Unable to set handler for signal %d: %v", bluepillSignal, err))
	}

	// Extract the address for the trampoline.
	dieTrampolineAddr = reflect.ValueOf(dieTrampoline).Pointer()
}

func ReplaceSignalHandler

ReplaceSignalHandler replaces the existing signal handler for the provided signal with the one that handles faults in safecopy-protected functions.

It stores the value of the previously set handler in previous.

This function will be called on initialization in order to install safecopy handlers for appropriate signals. These handlers will call the previous handler however, and if this function is being used externally then the same courtesy is expected.

gvisor/pkg/sentry/platform/kvm/bluepill_amd64.go:27: // The action for bluepillSignal is changed by sigaction().
gvisor/pkg/sentry/platform/kvm/bluepill_amd64.go:28: bluepillSignal = syscall.SIGSEGV
gvisor/pkg/sentry/platform/kvm/bluepill_arm64.go:27: // The action for bluepillSignal is changed by sigaction().
gvisor/pkg/sentry/platform/kvm/bluepill_arm64.go:28: bluepillSignal = syscall.SIGILL

pkg/sentry/platform/kvm/machine_unsafe.go:57: _KVM_SET_USER_MEMORY_REGION,
pkg/sentry/platform/kvm/kvm_const.go:33: _KVM_SET_USER_MEMORY_REGION = 0x4020ae46

ret = ioctl(kvm->vm_fd, KVM_SET_USER_MEMORY_REGION, &(kvm->mem));


func handleBluepillFault(m *machine, physical uintptr, phyRegions []physicalRegion, flags uint32) (uintptr, bool) {
	// Paging fault: we need to map the underlying physical pages for this
	// fault. This all has to be done in this function because we're in a
	// signal handler context. (We can't call any functions that might
	// split the stack.)
	virtualStart, physicalStart, length, ok := calculateBluepillFault(physical, phyRegions)
	if !ok {
		return 0, false
	}

	// Set the KVM slot.
	//
	// First, we need to acquire the exclusive right to set a slot. See
	// machine.nextSlot for information about the protocol.
	slot := atomic.SwapUint32(&m.nextSlot, ^uint32(0))
	for slot == ^uint32(0) {
		yield() // Race with another call.
		slot = atomic.SwapUint32(&m.nextSlot, ^uint32(0))
	}
	errno := m.setMemoryRegion(int(slot), physicalStart, length, virtualStart, flags)

bluepillHandler


// bluepillHandler is called from the signal stub.
//
// The world may be stopped while this is executing, and it executes on the
// signal stack. It should only execute raw system calls and functions that are
// explicitly marked go:nosplit.
//
// +checkescape:all
//
//go:nosplit
func bluepillHandler(context unsafe.Pointer) {
	// Sanitize the registers; interrupts must always be disabled.
	c := bluepillArchEnter(bluepillArchContext(context))

	// Mark this as guest mode.
	switch atomic.SwapUint32(&c.state, vCPUGuest|vCPUUser) {
	case vCPUUser: // Expected case.
	case vCPUUser | vCPUWaiter:
		c.notify()
	default:
		throw("invalid state")
	}

	for {
		_, _, errno := syscall.RawSyscall(syscall.SYS_IOCTL, uintptr(c.fd), _KVM_RUN, 0) // escapes: no.
		switch errno {
		case 0: // Expected case.
		case syscall.EINTR:
			// First, we process whatever pending signal
			// interrupted KVM. Since we're in a signal handler
			// currently, all signals are masked and the signal
			// must have been delivered directly to this thread.
			timeout := syscall.Timespec{}
			sig, _, errno := syscall.RawSyscall6( // escapes: no.
				syscall.SYS_RT_SIGTIMEDWAIT,
				uintptr(unsafe.Pointer(&bounceSignalMask)),
				0,                                 // siginfo.
				uintptr(unsafe.Pointer(&timeout)), // timeout.
				8,                                 // sigset size.
				0, 0)
			if errno == syscall.EAGAIN {
				continue
			}
			if errno != 0 {
				throw("error waiting for pending signal")
			}
			if sig != uintptr(bounceSignal) {
				throw("unexpected signal")
			}

			// Check whether the current state of the vCPU is ready
			// for interrupt injection. Because we don't have a
			// PIC, we can't inject an interrupt while they are
			// masked. We need to request a window if it's not
			// ready.
			if bluepillReadyStopGuest(c) {
				// Force injection below; the vCPU is ready.
				c.runData.exitReason = _KVM_EXIT_IRQ_WINDOW_OPEN
			} else {
				c.runData.requestInterruptWindow = 1
				continue // Rerun vCPU.
			}
		case syscall.EFAULT:
			// If a fault is not serviceable due to the host
			// backing pages having page permissions, instead of an
			// MMIO exit we receive EFAULT from the run ioctl. We
			// always inject an NMI here since we may be in kernel
			// mode and have interrupts disabled.
			bluepillSigBus(c)
			continue // Rerun vCPU.
		case syscall.ENOSYS:
			bluepillHandleEnosys(c)
			continue
		default:
			throw("run failed")
		}

		switch c.runData.exitReason {
		case _KVM_EXIT_EXCEPTION:
			c.die(bluepillArchContext(context), "exception")
			return
		case _KVM_EXIT_IO:
			c.die(bluepillArchContext(context), "I/O")
			return
		case _KVM_EXIT_INTERNAL_ERROR:
			// An internal error is typically thrown when emulation
			// fails. This can occur via the MMIO path below (and
			// it might fail because we have multiple regions that
			// are not mapped). We would actually prefer that no
			// emulation occur, and don't mind at all if it fails.
		case _KVM_EXIT_HYPERCALL:
			c.die(bluepillArchContext(context), "hypercall")
			return
		case _KVM_EXIT_DEBUG:
			c.die(bluepillArchContext(context), "debug")
			return
		case _KVM_EXIT_HLT:
			bluepillGuestExit(c, context)
			return
		case _KVM_EXIT_MMIO:
			physical := uintptr(c.runData.data[0])
			if getHypercallID(physical) == _KVM_HYPERCALL_VMEXIT {
				bluepillGuestExit(c, context)
				return
			}

			// Increment the fault count.
			atomic.AddUint32(&c.faults, 1)

			// For MMIO, the physical address is the first data item.
			physical = uintptr(c.runData.data[0])
			virtual, ok := handleBluepillFault(c.machine, physical, physicalRegions, _KVM_MEM_FLAGS_NONE)
			if !ok {
				c.die(bluepillArchContext(context), "invalid physical address")
				return
			}

			// We now need to fill in the data appropriately. KVM
			// expects us to provide the result of the given MMIO
			// operation in the runData struct. This is safe
			// because, if a fault occurs here, the same fault
			// would have occurred in guest mode. The kernel should
			// not create invalid page table mappings.
			data := (*[8]byte)(unsafe.Pointer(&c.runData.data[1]))
			length := (uintptr)((uint32)(c.runData.data[2]))
			write := (uint8)(((c.runData.data[2] >> 32) & 0xff)) != 0
			for i := uintptr(0); i < length; i++ {
				b := bytePtr(uintptr(virtual) + i)
				if write {
					// Write to the given address.
					*b = data[i]
				} else {
					// Read from the given address.
					data[i] = *b
				}
			}
		case _KVM_EXIT_IRQ_WINDOW_OPEN:
			bluepillStopGuest(c)
		case _KVM_EXIT_SHUTDOWN:
			c.die(bluepillArchContext(context), "shutdown")
			return
		case _KVM_EXIT_FAIL_ENTRY:
			c.die(bluepillArchContext(context), "entry failed")
			return
		default:
			bluepillArchHandleExit(c, context)
			return
		}
	}
}
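In the _KVM_EXIT_MMIO branch the handler reads data[0], data[1] and data[2] without naming them. Those three words are the mmio member of the kvm_run union (phys_addr, data[8], len, is_write) viewed as uint64 values. The sketch below decodes them into named fields. `mmioExit` and `decodeMMIO` are hypothetical names, and a little-endian host is assumed.

```go
package main

import (
	"encoding/binary"
	"fmt"
)

// mmioExit is a hypothetical decoded view of the kvm_run mmio union member.
type mmioExit struct {
	physAddr uint64  // guest physical address that faulted
	data     [8]byte // bytes written by the guest, or to be returned to it
	length   uint32  // access width in bytes
	isWrite  bool    // true for a guest store, false for a guest load
}

// decodeMMIO interprets the first three words of runData.data as
//   struct { __u64 phys_addr; __u8 data[8]; __u32 len; __u8 is_write; }
// on a little-endian host.
func decodeMMIO(words [32]uint64) mmioExit {
	var e mmioExit
	e.physAddr = words[0]
	binary.LittleEndian.PutUint64(e.data[:], words[1])
	e.length = uint32(words[2])           // low 32 bits: len
	e.isWrite = uint8(words[2]>>32) != 0 // next byte: is_write
	return e
}

func main() {
	var words [32]uint64
	words[0] = 0x1000             // phys_addr
	words[1] = 0x0807060504030201 // data bytes 01..08 in memory order
	words[2] = 4 | 1<<32          // len = 4, is_write = 1
	fmt.Printf("%+v\n", decodeMMIO(words))
}
```

The handler's `(c.runData.data[2] >> 32) & 0xff` expression corresponds to the `isWrite` line here.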

func (m *machine) mapPhysical(physical, length uintptr, phyRegions []physicalRegion, flags uint32) {
	for end := physical + length; physical < end; {
		_, physicalStart, length, ok := calculateBluepillFault(physical, phyRegions)
		if !ok {
			// Should never happen.
			panic("mapPhysical on unknown physical address")
		}

		// Is this already mapped? Check the usedSlots.
		if !m.hasSlot(physicalStart) {
			if _, ok := handleBluepillFault(m, physical, phyRegions, flags); !ok {
				panic("handleBluepillFault failed")
			}
		}

		// Move to the next chunk.
		physical = physicalStart + length
	}
}
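mapPhysical walks a physical range one chunk at a time, mapping a chunk only if no slot covers it yet, then jumping to the end of that chunk. The sketch below isolates that loop. It assumes a fixed 2 MiB chunk size purely for illustration (the real calculateBluepillFault derives the chunk bounds from the physical regions), and `chunkFor` and `mapRange` are hypothetical names, with a map standing in for the used-slot bookkeeping.

```go
package main

import "fmt"

// chunkSize is an assumed mapping granularity for this sketch only.
const chunkSize = 2 << 20

// chunkFor returns the aligned chunk that contains addr.
func chunkFor(addr uintptr) (start, length uintptr) {
	return addr &^ (chunkSize - 1), chunkSize
}

// mapRange visits every chunk overlapping [physical, physical+length) and
// marks each one in mapped at most once. It returns how many chunks were
// newly mapped by this call.
func mapRange(physical, length uintptr, mapped map[uintptr]bool) int {
	newlyMapped := 0
	for end := physical + length; physical < end; {
		start, n := chunkFor(physical)
		if !mapped[start] { // plays the role of !m.hasSlot(physicalStart)
			mapped[start] = true
			newlyMapped++
		}
		physical = start + n // move to the next chunk
	}
	return newlyMapped
}

func main() {
	mapped := map[uintptr]bool{}
	fmt.Println(mapRange(0x1000, 3*chunkSize, mapped)) // unaligned range
	fmt.Println(mapRange(0x1000, 3*chunkSize, mapped)) // same range again
}
```

Because the range starts at 0x1000 rather than on a chunk boundary, it touches one more chunk than its length alone would suggest, and the second call finds everything already mapped.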

physicalRegionsReadOnly = rdonlyRegionsForSetMem()
physicalRegionsAvailable = availableRegionsForSetMem()

// Map all read-only regions.
for _, r := range physicalRegionsReadOnly {
	m.mapPhysical(r.physical, r.length, physicalRegionsReadOnly, _KVM_MEM_READONLY)
}

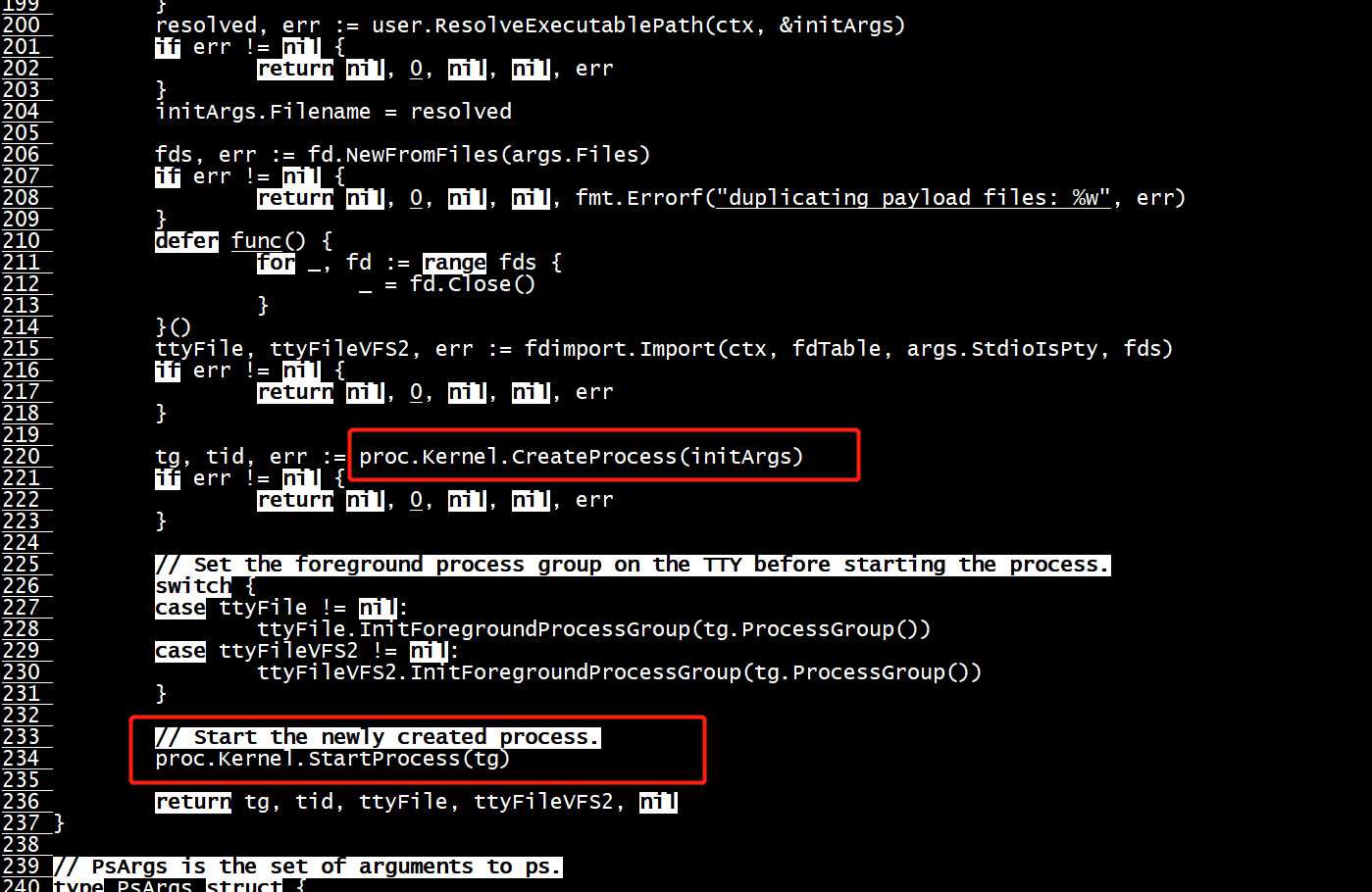

gdb CreateProcess

root@cloud:/gvisor/pkg# docker ps
CONTAINER ID   IMAGE    COMMAND        CREATED         STATUS         PORTS   NAMES
556bb439a7ff   alpine   "sleep 1000"   4 minutes ago   Up 4 minutes           test
root@cloud:/gvisor/pkg# docker inspect test | grep Pid | head -n 1
"Pid": 927849,
root@cloud:/gvisor/pkg# docker exec -it test ip

root@cloud:/mycontainer# dlv attach 927849
Type 'help' for list of commands.
(dlv) b CreateProcess
Breakpoint 1 set at 0x4f4920 for gvisor.dev/gvisor/pkg/sentry/kernel.(*Kernel).CreateProcess() pkg/sentry/kernel/kernel.go:909
(dlv) c
> gvisor.dev/gvisor/pkg/sentry/kernel.(*Kernel).CreateProcess() pkg/sentry/kernel/kernel.go:909 (hits goroutine(250):1 total:1) (PC: 0x4f4920)
Warning: debugging optimized function
(dlv) bt
 0  0x00000000004f4920 in gvisor.dev/gvisor/pkg/sentry/kernel.(*Kernel).CreateProcess
    at pkg/sentry/kernel/kernel.go:909
 1  0x00000000006c0f34 in gvisor.dev/gvisor/pkg/sentry/control.(*Proc).execAsync
    at pkg/sentry/control/proc.go:220
 2  0x00000000006c0970 in gvisor.dev/gvisor/pkg/sentry/control.ExecAsync
    at pkg/sentry/control/proc.go:133
 3  0x0000000000925470 in gvisor.dev/gvisor/runsc/boot.(*Loader).executeAsync
    at runsc/boot/loader.go:972
 4  0x0000000000916a88 in gvisor.dev/gvisor/runsc/boot.(*containerManager).ExecuteAsync
    at runsc/boot/controller.go:321
 5  0x0000000000075ec4 in runtime.call64
    at src/runtime/asm_arm64.s:1
 6  0x00000000000c0c80 in reflect.Value.call
    at GOROOT/src/reflect/value.go:475
 7  0x00000000000c0444 in reflect.Value.Call
    at GOROOT/src/reflect/value.go:336
 8  0x0000000000688c30 in gvisor.dev/gvisor/pkg/urpc.(*Server).handleOne
    at pkg/urpc/urpc.go:337
 9  0x00000000006897d0 in gvisor.dev/gvisor/pkg/urpc.(*Server).handleRegistered
    at pkg/urpc/urpc.go:432
10  0x000000000068adbc in gvisor.dev/gvisor/pkg/urpc.(*Server).StartHandling.func1
    at pkg/urpc/urpc.go:452
11  0x0000000000077c84 in runtime.goexit
    at src/runtime/asm_arm64.s:1136
(dlv) list
> gvisor.dev/gvisor/pkg/sentry/kernel.(*Kernel).CreateProcess() pkg/sentry/kernel/kernel.go:909 (hits goroutine(250):1 total:1) (PC: 0x4f4920)
Warning: debugging optimized function
Command failed: open pkg/sentry/kernel/kernel.go: no such file or directory
(dlv) quit
Would you like to kill the process? [Y/n] y
root@cloud:/mycontainer#

root@cloud:~/delve# dlv attach 925361
Type 'help' for list of commands.
(dlv) break mm.MemoryManager.MMap
Breakpoint 1 set at 0x3edc70 for gvisor.dev/gvisor/pkg/sentry/mm.(*MemoryManager).MMap() pkg/sentry/mm/syscalls.go:75
(dlv) continue
> gvisor.dev/gvisor/pkg/sentry/mm.(*MemoryManager).MMap() pkg/sentry/mm/syscalls.go:75 (hits goroutine(32):1 total:1) (PC: 0x3edc70)
Warning: debugging optimized function
(dlv) bt
 0  0x00000000003edc70 in gvisor.dev/gvisor/pkg/sentry/mm.(*MemoryManager).MMap
    at pkg/sentry/mm/syscalls.go:75
 1  0x00000000004de06c in gvisor.dev/gvisor/pkg/sentry/loader.loadParsedELF
    at pkg/sentry/loader/elf.go:505
 2  0x00000000004df348 in gvisor.dev/gvisor/pkg/sentry/loader.loadInitialELF
    at pkg/sentry/loader/elf.go:609
 3  0x00000000004df9e4 in gvisor.dev/gvisor/pkg/sentry/loader.loadELF
    at pkg/sentry/loader/elf.go:651
 4  0x00000000004e1204 in gvisor.dev/gvisor/pkg/sentry/loader.loadExecutable
    at pkg/sentry/loader/loader.go:179
 5  0x00000000004e1824 in gvisor.dev/gvisor/pkg/sentry/loader.Load
    at pkg/sentry/loader/loader.go:222
 6  0x000000000051560c in gvisor.dev/gvisor/pkg/sentry/kernel.(*Kernel).LoadTaskImage
    at pkg/sentry/kernel/task_image.go:150
 7  0x00000000004f4e80 in gvisor.dev/gvisor/pkg/sentry/kernel.(*Kernel).CreateProcess
    at pkg/sentry/kernel/kernel.go:1022
 8  0x00000000006c0f34 in gvisor.dev/gvisor/pkg/sentry/control.(*Proc).execAsync
    at pkg/sentry/control/proc.go:220
 9  0x00000000006c0970 in gvisor.dev/gvisor/pkg/sentry/control.ExecAsync
    at pkg/sentry/control/proc.go:133
10  0x0000000000925470 in gvisor.dev/gvisor/runsc/boot.(*Loader).executeAsync
    at runsc/boot/loader.go:972
11  0x0000000000916a88 in gvisor.dev/gvisor/runsc/boot.(*containerManager).ExecuteAsync
    at runsc/boot/controller.go:321
12  0x0000000000075ec4 in runtime.call64
    at src/runtime/asm_arm64.s:1
13  0x00000000000c0c80 in reflect.Value.call
    at GOROOT/src/reflect/value.go:475
14  0x00000000000c0444 in reflect.Value.Call
    at GOROOT/src/reflect/value.go:336
15  0x0000000000688c30 in gvisor.dev/gvisor/pkg/urpc.(*Server).handleOne
    at pkg/urpc/urpc.go:337
16  0x00000000006897d0 in gvisor.dev/gvisor/pkg/urpc.(*Server).handleRegistered
    at pkg/urpc/urpc.go:432
17  0x000000000068adbc in gvisor.dev/gvisor/pkg/urpc.(*Server).StartHandling.func1
    at pkg/urpc/urpc.go:452
18  0x0000000000077c84 in runtime.goexit
    at src/runtime/asm_arm64.s:1136
(dlv)

LoadTaskImage

docker exec -it test ping 8.8.8.8

(dlv) b LoadTaskImage
Breakpoint 1 set at 0x515510 for gvisor.dev/gvisor/pkg/sentry/kernel.(*Kernel).LoadTaskImage() pkg/sentry/kernel/task_image.go:139
(dlv) c
> gvisor.dev/gvisor/pkg/sentry/kernel.(*Kernel).LoadTaskImage() pkg/sentry/kernel/task_image.go:139 (hits goroutine(183):1 total:1) (PC: 0x515510)
Warning: debugging optimized function
(dlv) p args
gvisor.dev/gvisor/pkg/sentry/loader.LoadArgs {
	MemoryManager: *gvisor.dev/gvisor/pkg/sentry/mm.MemoryManager nil,
	RemainingTraversals: *40,
	ResolveFinal: true,
	Filename: "/bin/ping",
	File: gvisor.dev/gvisor/pkg/sentry/fsbridge.File nil,
	Opener: gvisor.dev/gvisor/pkg/sentry/fsbridge.Lookup(*gvisor.dev/gvisor/pkg/sentry/fsbridge.fsLookup) *{
		mntns: *(*"gvisor.dev/gvisor/pkg/sentry/fs.MountNamespace")(0x400042c1c0),
		root: *(*"gvisor.dev/gvisor/pkg/sentry/fs.Dirent")(0x40001c1760),
		workingDir: *(*"gvisor.dev/gvisor/pkg/sentry/fs.Dirent")(0x40001c1760),},
	CloseOnExec: false,
	Argv: []string len: 2, cap: 4, ["ping","8.8.8.8"],
	Envv: []string len: 4, cap: 6, [
		"HOSTNAME=123d10163110",
		"TERM=xterm",
		"PATH=/usr/local/sbin:/usr/local/bin:/usr/sbin:/usr/bin:/sbin:/bi...+1 more",
		"HOME=/root",
	],
	Features: *gvisor.dev/gvisor/pkg/cpuid.FeatureSet {
		Set: map[gvisor.dev/gvisor/pkg/cpuid.Feature]bool [...],
		CPUImplementer: 65,
		CPUArchitecture: 8,
		CPUVariant: 0,
		CPUPartnum: 3336,
		CPURevision: 2,},}

(dlv) bt
 0  0x0000000000515554 in gvisor.dev/gvisor/pkg/sentry/kernel.(*Kernel).LoadTaskImage
    at pkg/sentry/kernel/task_image.go:146
 1  0x00000000004f4e80 in gvisor.dev/gvisor/pkg/sentry/kernel.(*Kernel).CreateProcess
    at pkg/sentry/kernel/kernel.go:1022
 2  0x00000000006c0f34 in gvisor.dev/gvisor/pkg/sentry/control.(*Proc).execAsync
    at pkg/sentry/control/proc.go:220
 3  0x00000000006c0970 in gvisor.dev/gvisor/pkg/sentry/control.ExecAsync
    at pkg/sentry/control/proc.go:133
 4  0x0000000000925470 in gvisor.dev/gvisor/runsc/boot.(*Loader).executeAsync
    at runsc/boot/loader.go:972
 5  0x0000000000916a88 in gvisor.dev/gvisor/runsc/boot.(*containerManager).ExecuteAsync
    at runsc/boot/controller.go:321
 6  0x0000000000075ec4 in runtime.call64
    at src/runtime/asm_arm64.s:1
 7  0x00000000000c0c80 in reflect.Value.call
    at GOROOT/src/reflect/value.go:475
 8  0x00000000000c0444 in reflect.Value.Call
    at GOROOT/src/reflect/value.go:336
 9  0x0000000000688c30 in gvisor.dev/gvisor/pkg/urpc.(*Server).handleOne
    at pkg/urpc/urpc.go:337
10  0x00000000006897d0 in gvisor.dev/gvisor/pkg/urpc.(*Server).handleRegistered
    at pkg/urpc/urpc.go:432
11  0x000000000068adbc in gvisor.dev/gvisor/pkg/urpc.(*Server).StartHandling.func1
    at pkg/urpc/urpc.go:452
12  0x0000000000077c84 in runtime.goexit
    at src/runtime/asm_arm64.s:1136

docker exec -it test ping 8.8.8.8

(dlv) b kernel.go:1066 Command failed: Location "kernel.go:1066" ambiguous: pkg/sentry/kernel/kernel.go, pkg/sentry/platform/ring0/kernel.go… (dlv) b pkg/sentry/kernel/kernel.go:1066 Breakpoint 1 set at 0x4f5a78 for gvisor.dev/gvisor/pkg/sentry/kernel.(*Kernel).StartProcess() pkg/sentry/kernel/kernel.go:1066 (dlv) c > gvisor.dev/gvisor/pkg/sentry/kernel.(*Kernel).StartProcess() pkg/sentry/kernel/kernel.go:1066 (hits goroutine(186):1 total:1) (PC: 0x4f5a78) Warning: debugging optimized function (dlv) bt 0 0x00000000004f5a78 in gvisor.dev/gvisor/pkg/sentry/kernel.(*Kernel).StartProcess at pkg/sentry/kernel/kernel.go:1066 1 0x00000000006c0f74 in gvisor.dev/gvisor/pkg/sentry/control.(*Proc).execAsync at pkg/sentry/control/proc.go:234 2 0x00000000006c0970 in gvisor.dev/gvisor/pkg/sentry/control.ExecAsync at pkg/sentry/control/proc.go:133 3 0x0000000000925470 in gvisor.dev/gvisor/runsc/boot.(*Loader).executeAsync at runsc/boot/loader.go:972 4 0x0000000000916a88 in gvisor.dev/gvisor/runsc/boot.(*containerManager).ExecuteAsync at runsc/boot/controller.go:321 5 0x0000000000075ec4 in runtime.call64 at src/runtime/asm_arm64.s:1 6 0x00000000000c0c80 in reflect.Value.call at GOROOT/src/reflect/value.go:475 7 0x00000000000c0444 in reflect.Value.Call at GOROOT/src/reflect/value.go:336 8 0x0000000000688c30 in gvisor.dev/gvisor/pkg/urpc.(*Server).handleOne at pkg/urpc/urpc.go:337 9 0x00000000006897d0 in gvisor.dev/gvisor/pkg/urpc.(*Server).handleRegistered at pkg/urpc/urpc.go:432 10 0x000000000068adbc in gvisor.dev/gvisor/pkg/urpc.(*Server).StartHandling.func1 at pkg/urpc/urpc.go:452 11 0x0000000000077c84 in runtime.goexit at src/runtime/asm_arm64.s:1136 (dlv) p t *gvisor.dev/gvisor/pkg/sentry/kernel.Task { taskNode: gvisor.dev/gvisor/pkg/sentry/kernel.taskNode { tg: *(*"gvisor.dev/gvisor/pkg/sentry/kernel.ThreadGroup")(0x400027d000), taskEntry: (*"gvisor.dev/gvisor/pkg/sentry/kernel.taskEntry")(0x40001cca88), parent: *gvisor.dev/gvisor/pkg/sentry/kernel.Task nil, children: 
map[*gvisor.dev/gvisor/pkg/sentry/kernel.Task]struct {} [], childPIDNamespace: *gvisor.dev/gvisor/pkg/sentry/kernel.PIDNamespace nil,}, goid: 0, runState: gvisor.dev/gvisor/pkg/sentry/kernel.taskRunState(*gvisor.dev/gvisor/pkg/sentry/kernel.runApp) nil, taskWorkCount: 0, taskWorkMu: gvisor.dev/gvisor/pkg/sync.Mutex { m: (*"gvisor.dev/gvisor/pkg/sync.CrossGoroutineMutex")(0x40001ccacc),}, taskWork: []gvisor.dev/gvisor/pkg/sentry/kernel.TaskWorker len: 0, cap: 0, nil, haveSyscallReturn: false, interruptChan: chan struct {} { qcount: 0, dataqsiz: 1, buf: *[1]struct struct {} [ {}, ], elemsize: 0, closed: 0, elemtype: *runtime._type {size: 0, ptrdata: 0, hash: 670477339, tflag: tflagExtraStar|tflagRegularMemory (10), align: 1, fieldAlign: 1, kind: 25, equal: runtime.memequal0, gcdata: *1, str: 54665, ptrToThis: 498784}, sendx: 0, recvx: 0, recvq: waitq<struct {}> { first: *sudog<struct {}> nil, last: *sudog<struct {}> nil,}, sendq: waitq<struct {}> { first: *sudog<struct {}> nil, last: *sudog<struct {}> nil,}, lock: runtime.mutex { lockRankStruct: runtime.lockRankStruct {}, key: 0,},}, goschedSeq: gvisor.dev/gvisor/pkg/sync.SeqCount {epoch: 0}, gosched: gvisor.dev/gvisor/pkg/sentry/kernel.TaskGoroutineSchedInfo {Timestamp: 0, State: TaskGoroutineNonexistent (0), UserTicks: 0, SysTicks: 0}, yieldCount: 0, pendingSignals: gvisor.dev/gvisor/pkg/sentry/kernel.pendingSignals { signals: [64]gvisor.dev/gvisor/pkg/sentry/kernel.pendingSignalQueue [ (*"gvisor.dev/gvisor/pkg/sentry/kernel.pendingSignalQueue")(0x40001ccb30), (*"gvisor.dev/gvisor/pkg/sentry/kernel.pendingSignalQueue")(0x40001ccb48), (*"gvisor.dev/gvisor/pkg/sentry/kernel.pendingSignalQueue")(0x40001ccb60), (*"gvisor.dev/gvisor/pkg/sentry/kernel.pendingSignalQueue")(0x40001ccb78), (*"gvisor.dev/gvisor/pkg/sentry/kernel.pendingSignalQueue")(0x40001ccb90), (*"gvisor.dev/gvisor/pkg/sentry/kernel.pendingSignalQueue")(0x40001ccba8), (*"gvisor.dev/gvisor/pkg/sentry/kernel.pendingSignalQueue")(0x40001ccbc0), 
(*"gvisor.dev/gvisor/pkg/sentry/kernel.pendingSignalQueue")(0x40001ccbd8), (*"gvisor.dev/gvisor/pkg/sentry/kernel.pendingSignalQueue")(0x40001ccbf0), (*"gvisor.dev/gvisor/pkg/sentry/kernel.pendingSignalQueue")(0x40001ccc08), (*"gvisor.dev/gvisor/pkg/sentry/kernel.pendingSignalQueue")(0x40001ccc20), (*"gvisor.dev/gvisor/pkg/sentry/kernel.pendingSignalQueue")(0x40001ccc38), (*"gvisor.dev/gvisor/pkg/sentry/kernel.pendingSignalQueue")(0x40001ccc50), (*"gvisor.dev/gvisor/pkg/sentry/kernel.pendingSignalQueue")(0x40001ccc68), (*"gvisor.dev/gvisor/pkg/sentry/kernel.pendingSignalQueue")(0x40001ccc80), (*"gvisor.dev/gvisor/pkg/sentry/kernel.pendingSignalQueue")(0x40001ccc98), (*"gvisor.dev/gvisor/pkg/sentry/kernel.pendingSignalQueue")(0x40001cccb0), (*"gvisor.dev/gvisor/pkg/sentry/kernel.pendingSignalQueue")(0x40001cccc8), (*"gvisor.dev/gvisor/pkg/sentry/kernel.pendingSignalQueue")(0x40001ccce0), (*"gvisor.dev/gvisor/pkg/sentry/kernel.pendingSignalQueue")(0x40001cccf8), (*"gvisor.dev/gvisor/pkg/sentry/kernel.pendingSignalQueue")(0x40001ccd10), (*"gvisor.dev/gvisor/pkg/sentry/kernel.pendingSignalQueue")(0x40001ccd28), (*"gvisor.dev/gvisor/pkg/sentry/kernel.pendingSignalQueue")(0x40001ccd40), (*"gvisor.dev/gvisor/pkg/sentry/kernel.pendingSignalQueue")(0x40001ccd58), (*"gvisor.dev/gvisor/pkg/sentry/kernel.pendingSignalQueue")(0x40001ccd70), (*"gvisor.dev/gvisor/pkg/sentry/kernel.pendingSignalQueue")(0x40001ccd88), (*"gvisor.dev/gvisor/pkg/sentry/kernel.pendingSignalQueue")(0x40001ccda0), (*"gvisor.dev/gvisor/pkg/sentry/kernel.pendingSignalQueue")(0x40001ccdb8), (*"gvisor.dev/gvisor/pkg/sentry/kernel.pendingSignalQueue")(0x40001ccdd0), (*"gvisor.dev/gvisor/pkg/sentry/kernel.pendingSignalQueue")(0x40001ccde8), (*"gvisor.dev/gvisor/pkg/sentry/kernel.pendingSignalQueue")(0x40001cce00), (*"gvisor.dev/gvisor/pkg/sentry/kernel.pendingSignalQueue")(0x40001cce18), (*"gvisor.dev/gvisor/pkg/sentry/kernel.pendingSignalQueue")(0x40001cce30), 
(*"gvisor.dev/gvisor/pkg/sentry/kernel.pendingSignalQueue")(0x40001cce48), (*"gvisor.dev/gvisor/pkg/sentry/kernel.pendingSignalQueue")(0x40001cce60), (*"gvisor.dev/gvisor/pkg/sentry/kernel.pendingSignalQueue")(0x40001cce78), (*"gvisor.dev/gvisor/pkg/sentry/kernel.pendingSignalQueue")(0x40001cce90), (*"gvisor.dev/gvisor/pkg/sentry/kernel.pendingSignalQueue")(0x40001ccea8), (*"gvisor.dev/gvisor/pkg/sentry/kernel.pendingSignalQueue")(0x40001ccec0), (*"gvisor.dev/gvisor/pkg/sentry/kernel.pendingSignalQueue")(0x40001cced8), (*"gvisor.dev/gvisor/pkg/sentry/kernel.pendingSignalQueue")(0x40001ccef0), (*"gvisor.dev/gvisor/pkg/sentry/kernel.pendingSignalQueue")(0x40001ccf08), (*"gvisor.dev/gvisor/pkg/sentry/kernel.pendingSignalQueue")(0x40001ccf20), (*"gvisor.dev/gvisor/pkg/sentry/kernel.pendingSignalQueue")(0x40001ccf38), (*"gvisor.dev/gvisor/pkg/sentry/kernel.pendingSignalQueue")(0x40001ccf50), (*"gvisor.dev/gvisor/pkg/sentry/kernel.pendingSignalQueue")(0x40001ccf68), (*"gvisor.dev/gvisor/pkg/sentry/kernel.pendingSignalQueue")(0x40001ccf80), (*"gvisor.dev/gvisor/pkg/sentry/kernel.pendingSignalQueue")(0x40001ccf98), (*"gvisor.dev/gvisor/pkg/sentry/kernel.pendingSignalQueue")(0x40001ccfb0), (*"gvisor.dev/gvisor/pkg/sentry/kernel.pendingSignalQueue")(0x40001ccfc8), (*"gvisor.dev/gvisor/pkg/sentry/kernel.pendingSignalQueue")(0x40001ccfe0), (*"gvisor.dev/gvisor/pkg/sentry/kernel.pendingSignalQueue")(0x40001ccff8), (*"gvisor.dev/gvisor/pkg/sentry/kernel.pendingSignalQueue")(0x40001cd010), (*"gvisor.dev/gvisor/pkg/sentry/kernel.pendingSignalQueue")(0x40001cd028), (*"gvisor.dev/gvisor/pkg/sentry/kernel.pendingSignalQueue")(0x40001cd040), (*"gvisor.dev/gvisor/pkg/sentry/kernel.pendingSignalQueue")(0x40001cd058), (*"gvisor.dev/gvisor/pkg/sentry/kernel.pendingSignalQueue")(0x40001cd070), (*"gvisor.dev/gvisor/pkg/sentry/kernel.pendingSignalQueue")(0x40001cd088), (*"gvisor.dev/gvisor/pkg/sentry/kernel.pendingSignalQueue")(0x40001cd0a0), 
(*"gvisor.dev/gvisor/pkg/sentry/kernel.pendingSignalQueue")(0x40001cd0b8), (*"gvisor.dev/gvisor/pkg/sentry/kernel.pendingSignalQueue")(0x40001cd0d0), (*"gvisor.dev/gvisor/pkg/sentry/kernel.pendingSignalQueue")(0x40001cd0e8), (*"gvisor.dev/gvisor/pkg/sentry/kernel.pendingSignalQueue")(0x40001cd100), (*"gvisor.dev/gvisor/pkg/sentry/kernel.pendingSignalQueue")(0x40001cd118), ], pendingSet: 0,}, signalMask: 0, realSignalMask: 0, haveSavedSignalMask: false, savedSignalMask: 0, signalStack: gvisor.dev/gvisor/pkg/sentry/arch.SignalStack {Addr: 0, Flags: 2, _: 0, Size: 0}, signalQueue: gvisor.dev/gvisor/pkg/waiter.Queue { list: (*"gvisor.dev/gvisor/pkg/waiter.waiterList")(0x40001cd170), mu: (*"gvisor.dev/gvisor/pkg/sync.RWMutex")(0x40001cd180),}, groupStopPending: false, groupStopAcknowledged: false, trapStopPending: false, trapNotifyPending: false, stop: gvisor.dev/gvisor/pkg/sentry/kernel.TaskStop nil, stopCount: 0, endStopCond: sync.Cond { noCopy: sync.noCopy {}, L: sync.Locker(*gvisor.dev/gvisor/pkg/sync.Mutex) ..., notify: (*sync.notifyList)(0x40001cd1c8), checker: 0,}, exitStatus: gvisor.dev/gvisor/pkg/sentry/kernel.ExitStatus {Code: 0, Signo: 0}, syscallRestartBlock: gvisor.dev/gvisor/pkg/sentry/kernel.SyscallRestartBlock nil, p: gvisor.dev/gvisor/pkg/sentry/platform.Context(*gvisor.dev/gvisor/pkg/sentry/platform/kvm.context) *{ machine: *(*"gvisor.dev/gvisor/pkg/sentry/platform/kvm.machine")(0x400039da00), info: (*"gvisor.dev/gvisor/pkg/sentry/arch.SignalInfo")(0x40006bce78), interrupt: (*"gvisor.dev/gvisor/pkg/sentry/platform/interrupt.Forwarder")(0x40006bcef8),}, k: *gvisor.dev/gvisor/pkg/sentry/kernel.Kernel { extMu: (*"gvisor.dev/gvisor/pkg/sync.Mutex")(0x40001f8f00), started: true, Platform: gvisor.dev/gvisor/pkg/sentry/platform.Platform(*gvisor.dev/gvisor/pkg/sentry/platform/kvm.KVM) ..., mf: *(*"gvisor.dev/gvisor/pkg/sentry/pgalloc.MemoryFile")(0x4000392e00), featureSet: *(*"gvisor.dev/gvisor/pkg/cpuid.FeatureSet")(0x400039e830), timekeeper: 
*(*"gvisor.dev/gvisor/pkg/sentry/kernel.Timekeeper")(0x40001f1f80), tasks: *(*"gvisor.dev/gvisor/pkg/sentry/kernel.TaskSet")(0x4000321d40), rootUserNamespace: *(*"gvisor.dev/gvisor/pkg/sentry/kernel/auth.UserNamespace")(0x40003998c0), rootNetworkNamespace: *(*"gvisor.dev/gvisor/pkg/sentry/inet.Namespace")(0x400038bb30), applicationCores: 64, useHostCores: false, extraAuxv: []gvisor.dev/gvisor/pkg/sentry/arch.AuxEntry len: 0, cap: 0, nil, vdso: *(*"gvisor.dev/gvisor/pkg/sentry/loader.VDSO")(0x40003c5c00), rootUTSNamespace: *(*"gvisor.dev/gvisor/pkg/sentry/kernel.UTSNamespace")(0x400038bb90), rootIPCNamespace: *(*"gvisor.dev/gvisor/pkg/sentry/kernel.IPCNamespace")(0x40003b9f80), rootAbstractSocketNamespace: *(*"gvisor.dev/gvisor/pkg/sentry/kernel.AbstractSocketNamespace")(0x400039e840), futexes: *(*"gvisor.dev/gvisor/pkg/sentry/kernel/futex.Manager")(0x4000348a80), globalInit: *(*"gvisor.dev/gvisor/pkg/sentry/kernel.ThreadGroup")(0x400027c800), realtimeClock: *(*"gvisor.dev/gvisor/pkg/sentry/kernel.timekeeperClock")(0x400039e850), monotonicClock: *(*"gvisor.dev/gvisor/pkg/sentry/kernel.timekeeperClock")(0x400039e860), syslog: (*"gvisor.dev/gvisor/pkg/sentry/kernel.syslog")(0x40001f8fb8), runningTasksMu: (*"gvisor.dev/gvisor/pkg/sync.Mutex")(0x40001f8fd8), runningTasks: 0, cpuClock: 65635, cpuClockTicker: *(*"gvisor.dev/gvisor/pkg/sentry/kernel/time.Timer")(0x400053c000), cpuClockTickerDisabled: true, cpuClockTickerSetting: (*"gvisor.dev/gvisor/pkg/sentry/kernel/time.Setting")(0x40001f9000), uniqueID: 38, nextInotifyCookie: 0, netlinkPorts: *(*"gvisor.dev/gvisor/pkg/sentry/socket/netlink/port.Manager")(0x400039e870), saveStatus: error nil, danglingEndpoints: struct {} {}, sockets: (*"gvisor.dev/gvisor/pkg/sentry/kernel.socketList")(0x40001f9040), socketsVFS2: map[*gvisor.dev/gvisor/pkg/sentry/vfs.FileDescription]*gvisor.dev/gvisor/pkg/sentry/kernel.SocketRecord nil, nextSocketRecord: 2, deviceRegistry: struct {} {}, DirentCacheLimiter: 
*gvisor.dev/gvisor/pkg/sentry/fs.DirentCacheLimiter nil, unimplementedSyscallEmitterOnce: (*sync.Once)(0x40001f9068), unimplementedSyscallEmitter: gvisor.dev/gvisor/pkg/eventchannel.Emitter(*gvisor.dev/gvisor/pkg/eventchannel.rateLimitedEmitter) ..., SpecialOpts: gvisor.dev/gvisor/pkg/sentry/kernel.SpecialOpts {}, vfs: (*"gvisor.dev/gvisor/pkg/sentry/vfs.VirtualFilesystem")(0x40001f9088), hostMount: *gvisor.dev/gvisor/pkg/sentry/vfs.Mount nil, pipeMount: *gvisor.dev/gvisor/pkg/sentry/vfs.Mount nil, shmMount: *gvisor.dev/gvisor/pkg/sentry/vfs.Mount nil, socketMount: *gvisor.dev/gvisor/pkg/sentry/vfs.Mount nil, SleepForAddressSpaceActivation: false,}, containerID: "123d1016311020442e5c869cebfff4dd3cbd4be2c922f219f63f7b2f84309553", mu: gvisor.dev/gvisor/pkg/sync.Mutex { m: (*"gvisor.dev/gvisor/pkg/sync.CrossGoroutineMutex")(0x40001cd238),}, image: gvisor.dev/gvisor/pkg/sentry/kernel.TaskImage { Name: "ping", Arch: gvisor.dev/gvisor/pkg/sentry/arch.Context(*gvisor.dev/gvisor/pkg/sentry/arch.context64) ..., MemoryManager: *(*"gvisor.dev/gvisor/pkg/sentry/mm.MemoryManager")(0x40001c8000), fu: *(*"gvisor.dev/gvisor/pkg/sentry/kernel/futex.Manager")(0x4000335500), st: *(*"gvisor.dev/gvisor/pkg/sentry/kernel.SyscallTable")(0x14fa2e0),}, fsContext: *gvisor.dev/gvisor/pkg/sentry/kernel.FSContext { FSContextRefs: (*"gvisor.dev/gvisor/pkg/sentry/kernel.FSContextRefs")(0x40001c6870), mu: (*"gvisor.dev/gvisor/pkg/sync.Mutex")(0x40001c6878), root: *(*"gvisor.dev/gvisor/pkg/sentry/fs.Dirent")(0x40001c1760), rootVFS2: (*"gvisor.dev/gvisor/pkg/sentry/vfs.VirtualDentry")(0x40001c6888), cwd: *(*"gvisor.dev/gvisor/pkg/sentry/fs.Dirent")(0x40001c1760), cwdVFS2: (*"gvisor.dev/gvisor/pkg/sentry/vfs.VirtualDentry")(0x40001c68a0), umask: 18,}, fdTable: *gvisor.dev/gvisor/pkg/sentry/kernel.FDTable { FDTableRefs: (*"gvisor.dev/gvisor/pkg/sentry/kernel.FDTableRefs")(0x40004649f0), k: *(*"gvisor.dev/gvisor/pkg/sentry/kernel.Kernel")(0x40001f8f00), mu: 
(*"gvisor.dev/gvisor/pkg/sync.Mutex")(0x4000464a00), next: 0, used: 3, descriptorTable: (*"gvisor.dev/gvisor/pkg/sentry/kernel.descriptorTable")(0x4000464a10),}, vforkParent: *gvisor.dev/gvisor/pkg/sentry/kernel.Task nil, exitState: TaskExitNone (0), exitTracerNotified: false, exitTracerAcked: false, exitParentNotified: false, exitParentAcked: false, goroutineStopped: sync.WaitGroup { noCopy: sync.noCopy {}, state1: [3]uint32 [0,0,0],}, ptraceTracer: sync/atomic.Value { v: interface {}(*gvisor.dev/gvisor/pkg/sentry/kernel.Task) ...,}, ptraceTracees: map[*gvisor.dev/gvisor/pkg/sentry/kernel.Task]struct {} [], ptraceSeized: false, ptraceOpts: gvisor.dev/gvisor/pkg/sentry/kernel.ptraceOptions {ExitKill: false, SysGood: false, TraceClone: false, TraceExec: false, TraceExit: false, TraceFork: false, TraceSeccomp: false, TraceVfork: false, TraceVforkDone: false}, ptraceSyscallMode: ptraceSyscallNone (0), ptraceSinglestep: false, ptraceCode: 0, ptraceSiginfo: *gvisor.dev/gvisor/pkg/sentry/arch.SignalInfo nil, ptraceEventMsg: 0, ioUsage: *gvisor.dev/gvisor/pkg/sentry/usage.IO {CharsRead: 0, CharsWritten: 0, ReadSyscalls: 0, WriteSyscalls: 0, BytesRead: 0, BytesWritten: 0, BytesWriteCancelled: 0}, logPrefix: sync/atomic.Value { v: interface {}(string) *(*interface {})(0x40001cd2f8),}, traceContext: context.Context(*context.valueCtx) *{ Context: context.Context(*gvisor.dev/gvisor/pkg/context.logContext) ..., key: (unreadable interface type "runtime/trace.traceContextKey" not found for 0x4000020d98: no type entry found, use 'types' for a list of valid types), val: interface {}(*runtime/trace.Task) ...,}, traceTask: *runtime/trace.Task {id: 4}, creds: gvisor.dev/gvisor/pkg/sentry/kernel/auth.AtomicPtrCredentials {ptr: unsafe.Pointer(0x40004cc4e0)}, utsns: *gvisor.dev/gvisor/pkg/sentry/kernel.UTSNamespace { mu: (*"gvisor.dev/gvisor/pkg/sync.Mutex")(0x400038bb90), hostName: "123d10163110", domainName: "123d10163110", userns: 
*(*"gvisor.dev/gvisor/pkg/sentry/kernel/auth.UserNamespace")(0x40003998c0),}, ipcns: *gvisor.dev/gvisor/pkg/sentry/kernel.IPCNamespace { IPCNamespaceRefs: (*"gvisor.dev/gvisor/pkg/sentry/kernel.IPCNamespaceRefs")(0x40003b9f80), userNS: *(*"gvisor.dev/gvisor/pkg/sentry/kernel/auth.UserNamespace")(0x40003998c0), semaphores: *(*"gvisor.dev/gvisor/pkg/sentry/kernel/semaphore.Registry")(0x400038bc20), shms: *(*"gvisor.dev/gvisor/pkg/sentry/kernel/shm.Registry")(0x400038bcb0),}, abstractSockets: *gvisor.dev/gvisor/pkg/sentry/kernel.AbstractSocketNamespace { mu: (*"gvisor.dev/gvisor/pkg/sync.Mutex")(0x400039e840), endpoints: map[string]gvisor.dev/gvisor/pkg/sentry/kernel.abstractEndpoint [],}, mountNamespaceVFS2: *gvisor.dev/gvisor/pkg/sentry/vfs.MountNamespace nil, parentDeathSignal: 0, syscallFilters: sync/atomic.Value { v: interface {} nil,}, cleartid: 0, allowedCPUMask: gvisor.dev/gvisor/pkg/sentry/kernel/sched.CPUSet len: 8, cap: 8, [255,255,255,255,255,255,255,255], cpu: 3, niceness: 0, numaPolicy: MPOL_DEFAULT (0), numaNodeMask: 0, netns: *gvisor.dev/gvisor/pkg/sentry/inet.Namespace { stack: gvisor.dev/gvisor/pkg/sentry/inet.Stack(*gvisor.dev/gvisor/pkg/sentry/socket/netstack.Stack) ..., creator: gvisor.dev/gvisor/pkg/sentry/inet.NetworkStackCreator(*gvisor.dev/gvisor/runsc/boot.sandboxNetstackCreator) ..., isRoot: true,}, rseqPreempted: false, rseqCPU: -1, oldRSeqCPUAddr: 0, rseqAddr: 0, rseqSignature: 0, copyScratchBuffer: [144]uint8 [0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,...+80 more], blockingTimer: *gvisor.dev/gvisor/pkg/sentry/kernel/time.Timer nil, blockingTimerChan: <-chan struct {} {}, futexWaiter: *gvisor.dev/gvisor/pkg/sentry/kernel/futex.Waiter { waiterEntry: (*"gvisor.dev/gvisor/pkg/sentry/kernel/futex.waiterEntry")(0x40004cccc0), bucket: (*"gvisor.dev/gvisor/pkg/sentry/kernel/futex.AtomicPtrBucket")(0x40004cccd0), C: chan struct {} { qcount: 0, dataqsiz: 1, buf: 
*[1]struct struct {} [ {}, ], elemsize: 0, closed: 0, elemtype: *runtime._type {size: 0, ptrdata: 0, hash: 670477339, tflag: tflagExtraStar|tflagRegularMemory (10), align: 1, fieldAlign: 1, kind: 25, equal: runtime.memequal0, gcdata: *1, str: 54665, ptrToThis: 498784}, sendx: 0, recvx: 0, recvq: waitq<struct {}> { first: *sudog<struct {}> nil, last: *sudog<struct {}> nil,}, sendq: waitq<struct {}> { first: *sudog<struct {}> nil, last: *sudog<struct {}> nil,}, lock: runtime.mutex { lockRankStruct: runtime.lockRankStruct {}, key: 0,},}, key: (*"gvisor.dev/gvisor/pkg/sentry/kernel/futex.Key")(0x40004ccce0), bitmask: 0, tid: 0,}, robustList: 0, startTime: gvisor.dev/gvisor/pkg/sentry/kernel/time.Time {ns: 1610623566972496825}, kcov: *gvisor.dev/gvisor/pkg/sentry/kernel.Kcov nil,} (dlv)

kvm struct kvm_run + gvisor runData

/* for KVM_RUN, returned by mmap(vcpu_fd, offset=0) */
struct kvm_run {
	/* in */
	__u8 request_interrupt_window;
	__u8 padding1[7];

	/* out */
	__u32 exit_reason;
	__u8 ready_for_interrupt_injection;
	__u8 if_flag;
	__u8 padding2[2];

	/* in (pre_kvm_run), out (post_kvm_run) */
	__u64 cr8;
	__u64 apic_base;

#ifdef __KVM_S390
	/* the processor status word for s390 */
	__u64 psw_mask; /* psw upper half */
	__u64 psw_addr; /* psw lower half */
#endif
	union {
		/* KVM_EXIT_UNKNOWN */
		struct {
			__u64 hardware_exit_reason;
		} hw;
		/* KVM_EXIT_FAIL_ENTRY */
		struct {
			__u64 hardware_entry_failure_reason;
		} fail_entry;
		/* KVM_EXIT_EXCEPTION */
		struct {
			__u32 exception;
			__u32 error_code;
		} ex;
		/* KVM_EXIT_IO */
		struct {
#define KVM_EXIT_IO_IN  0
#define KVM_EXIT_IO_OUT 1
			__u8 direction;
			__u8 size; /* bytes */
			__u16 port;
			__u32 count;
			__u64 data_offset; /* relative to kvm_run start */
		} io;
		struct {
			struct kvm_debug_exit_arch arch;
		} debug;
		/* KVM_EXIT_MMIO */
		struct {
			__u64 phys_addr;
			__u8 data[8];
			__u32 len;
			__u8 is_write;
		} mmio;
		/* KVM_EXIT_HYPERCALL */
		struct {
			__u64 nr;
			__u64 args[6];
			__u64 ret;
			__u32 longmode;
			__u32 pad;
		} hypercall;
		/* KVM_EXIT_TPR_ACCESS */
		struct {
			__u64 rip;
			__u32 is_write;
			__u32 pad;
		} tpr_access;
		/* KVM_EXIT_S390_SIEIC */
		struct {
			__u8 icptcode;
			__u16 ipa;
			__u32 ipb;
		} s390_sieic;
		/* KVM_EXIT_S390_RESET */
#define KVM_S390_RESET_POR       1
#define KVM_S390_RESET_CLEAR     2
#define KVM_S390_RESET_SUBSYSTEM 4
#define KVM_S390_RESET_CPU_INIT  8
#define KVM_S390_RESET_IPL       16
		__u64 s390_reset_flags;
		/* KVM_EXIT_DCR */
		struct {
			__u32 dcrn;
			__u32 data;
			__u8 is_write;
		} dcr;
		struct {
			__u32 suberror;
		} internal;
		/* Fix the size of the union. */
		char padding[256];
	};
};

runData

// runData is the run structure. This may be mapped for synchronous register
// access (although that doesn't appear to be supported by my kernel at least).
//
// This mirrors kvm_run.
type runData struct {
    requestInterruptWindow     uint8
    _                          [7]uint8
    exitReason                 uint32
    readyForInterruptInjection uint8
    ifFlag                     uint8
    _                          [2]uint8
    cr8                        uint64
    apicBase                   uint64
    // This is the union data for exits. Interpretation depends entirely on
    // the exitReason above (see vCPU code for more information).
    data [32]uint64
}
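To make the "mirrors kvm_run" claim concrete, here is a minimal sketch that checks the field offsets both definitions rely on. `struct run_header` and `run_header_layout_ok()` are hypothetical names introduced for illustration; the struct is a hand-written stand-in for the x86 layout quoted above (no s390 fields), not the real `struct kvm_run` from `<linux/kvm.h>`, which has more fields in newer kernels.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Hypothetical stand-in for the x86 part of kvm_run quoted above. */
struct run_header {
    uint8_t  request_interrupt_window;
    uint8_t  padding1[7];
    uint32_t exit_reason;
    uint8_t  ready_for_interrupt_injection;
    uint8_t  if_flag;
    uint8_t  padding2[2];
    uint64_t cr8;
    uint64_t apic_base;
    union {
        uint64_t as_words[32]; /* how runData views it: data [32]uint64 */
        char     padding[256]; /* how kvm_run fixes the union size */
    } exit_data;
};

/* Returns 1 when the offsets match what both definitions assume. */
int run_header_layout_ok(void) {
    return offsetof(struct run_header, exit_reason) == 8 &&
           offsetof(struct run_header, cr8) == 16 &&
           offsetof(struct run_header, apic_base) == 24 &&
           offsetof(struct run_header, exit_data) == 32 &&
           sizeof(((struct run_header *)0)->exit_data) == 256;
}
```

A caller could simply write `assert(run_header_layout_ok());`. The point of the check is that 32 `uint64` words occupy exactly the 256 bytes of `char padding[256]`, so the Go struct can overlay the union without knowing its individual members.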

KVM_SET_USER_MEMORY_REGION and KVM_SET_REGS

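mem_init() initializes the virtual machine's memory. It first calls mmap() to allocate a page-aligned region, 512M by default (KVM_CFG_RAM_SIZE), then fills the address and size into a kvm_userspace_memory_region struct, and finally calls the KVM_SET_USER_MEMORY_REGION API to bind that memory to vm_fd.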

int mem_init(struct kvm* kvm){
    int ret=0;
    u64 ram_size = KVM_CFG_RAM_SIZE;
    void* ram_start=NULL;
    /* anonymous private mapping; mmap() returns page-aligned memory */
    ram_start = mmap(NULL, ram_size, PROT_READ | PROT_WRITE, MAP_PRIVATE | MAP_ANON | MAP_NORESERVE, -1, 0);
    ...
    madvise(ram_start, ram_size, MADV_MERGEABLE);
    printf("allocated %llu bytes from %p\n", (unsigned long long)ram_size, ram_start);
    kvm->ram_start = ram_start;
    kvm->ram_size = ram_size;
    kvm->ram_pagesize = getpagesize();
    /* guest physical address 0 maps to ram_start in the host process */
    struct kvm_userspace_memory_region region={
        .slot = 0,
        .guest_phys_addr = 0,
        .memory_size = ram_size,
        .userspace_addr = (u64)ram_start
    };
    ret = ioctl(kvm->vm_fd, KVM_SET_USER_MEMORY_REGION, &region);
    ...
    return ret;
}
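The allocation half of mem_init() can be tried without /dev/kvm. The sketch below is an illustration under stated assumptions: `struct mem_region` is a local stand-in with the same fields as `struct kvm_userspace_memory_region`, and `prepare_guest_ram()` and `region_is_page_aligned()` are hypothetical helper names. The `ioctl(vm_fd, KVM_SET_USER_MEMORY_REGION, &region)` call itself is deliberately left out.

```c
#define _GNU_SOURCE
#include <assert.h>
#include <stdint.h>
#include <sys/mman.h>
#include <unistd.h>

/* Local stand-in: same fields as struct kvm_userspace_memory_region. */
struct mem_region {
    uint32_t slot;
    uint32_t flags;
    uint64_t guest_phys_addr;
    uint64_t memory_size;
    uint64_t userspace_addr;
};

/* Allocate anonymous guest RAM and describe it in *region.
 * Returns 0 on success, -1 if mmap() fails. */
int prepare_guest_ram(uint64_t ram_size, struct mem_region *region) {
    void *ram = mmap(NULL, ram_size, PROT_READ | PROT_WRITE,
                     MAP_PRIVATE | MAP_ANONYMOUS | MAP_NORESERVE, -1, 0);
    if (ram == MAP_FAILED)
        return -1;
    region->slot = 0;
    region->flags = 0;
    region->guest_phys_addr = 0; /* guest RAM starts at physical address 0 */
    region->memory_size = ram_size;
    region->userspace_addr = (uint64_t)(uintptr_t)ram;
    return 0;
}

/* Returns 1 when both the host address and the size are page multiples. */
int region_is_page_aligned(const struct mem_region *region) {
    uint64_t page = (uint64_t)sysconf(_SC_PAGESIZE);
    return region->userspace_addr % page == 0 &&
           region->memory_size % page == 0;
}
```

In a real VMM the filled-in struct would be the actual `struct kvm_userspace_memory_region` and would be passed to the ioctl on the VM file descriptor, as mem_init() does.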

- VM loader module:

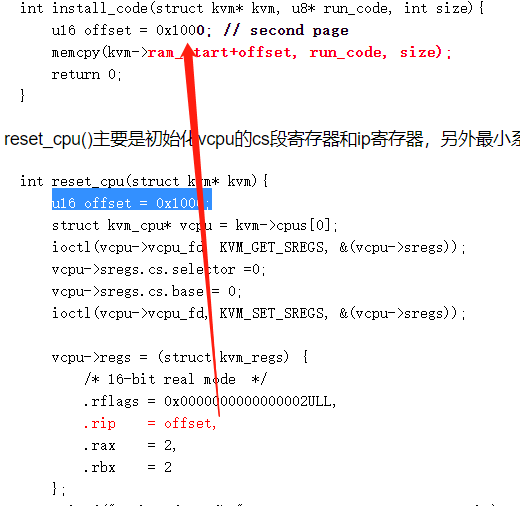

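install_code(), the function that loads the VM instructions, is fairly simple: it uses memcpy() to copy the pre-stored instruction array run_code into the VM's memory at the given offset. The offset 0x1000 is chosen so that the VM instructions land in the second page of memory, since one page is 4K bytes (0x1000). This offset also affects the CPU register initialization that follows.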

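int install_code(struct kvm* kvm, u8* run_code, int size){
    u16 offset = 0x1000; // second page
    // cast to u8* because arithmetic on void* is only a compiler extension
    memcpy((u8*)kvm->ram_start + offset, run_code, size);
    return 0;
}

reset_cpu() mainly initializes the vCPU's cs segment register and ip register. The minimal system implements an addition of the ax and bx registers, and the task passed in here is 2+2. rflags is also set to 0x2 for the 16-bit real mode start: bit 1 of rflags is reserved and must always be 1, while real mode itself is the state a freshly created x86 vCPU starts in.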

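int reset_cpu(struct kvm* kvm){
    u16 offset = 0x1000;
    struct kvm_cpu* vcpu = kvm->cpus[0];
    /* read the current segment registers, then zero the cs selector and base */
    ioctl(vcpu->vcpu_fd, KVM_GET_SREGS, &(vcpu->sregs));
    vcpu->sregs.cs.selector = 0;
    vcpu->sregs.cs.base = 0;
    ioctl(vcpu->vcpu_fd, KVM_SET_SREGS, &(vcpu->sregs));
    vcpu->regs = (struct kvm_regs) {
        /* 16-bit real mode; bit 1 of rflags is reserved and must be 1 */
        .rflags = 0x0000000000000002ULL,
        /* start executing at the offset where install_code() copied the code */
        .rip = offset,
        .rax = 2,
        .rbx = 2
    };
    /* rax and rbx are 64-bit fields, so print them with %llu */
    printf("task: %llu + %llu\n", (unsigned long long)vcpu->regs.rax, (unsigned long long)vcpu->regs.rbx);
    ioctl(vcpu->vcpu_fd, KVM_SET_REGS, &(vcpu->regs));
    return 0;
}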
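Why the load offset "affects" register initialization can be shown with a little arithmetic. In real mode the first instruction is fetched from cs.base + ip, so with cs.base = 0 the ip must equal the offset used by install_code(). The helper names below (`first_fetch_addr`, `page_index`, `entry_matches_load_offset`) are hypothetical and exist only for this sketch.

```c
#include <assert.h>
#include <stdint.h>

#define PAGE_SIZE_BYTES 0x1000u /* 4K pages, as stated in the text */

/* Real mode: the first instruction is fetched from cs.base + ip. */
uint64_t first_fetch_addr(uint64_t cs_base, uint16_t ip) {
    return cs_base + ip;
}

/* Zero-based index of the page containing a guest physical address. */
uint64_t page_index(uint64_t guest_phys_addr) {
    return guest_phys_addr / PAGE_SIZE_BYTES;
}

/* Returns 1 when the vCPU starts exactly where the code was copied. */
int entry_matches_load_offset(uint64_t cs_base, uint16_t ip,
                              uint64_t load_offset) {
    return first_fetch_addr(cs_base, ip) == load_offset;
}
```

With the values from reset_cpu(), `first_fetch_addr(0, 0x1000)` is 0x1000 and `page_index(0x1000)` is 1, i.e. the second page. If install_code() used a different offset, rip (or cs.base) would have to change with it, otherwise the vCPU would start executing whatever bytes happen to sit at the old address.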