http://containerz.blogspot.com/2015/03/virtualization-vs-containerization.html

Virtualization vs. Containerization

Containers provide isolated runtime environments for applications: the entire user space environment is exclusively presented to the container, and any changes to it do not impact other containers' environments. To provide this isolation, a combination of OS-based mechanisms is used: Linux name spaces are used for isolation and scoping mechanism. File system mounts define what files are accessible to the container. cgroups define resource consumption of containers. Still all containers share the same OS kernel which can realize memory footprint efficiencies when identical libraries are used by multiple containers.

With system virtualization, the hypervisor provides a full virtual machine to a guest: the entire OS image including the kernel is now dedicated to the virtual machine. CPU virtualization is used to provide each guest with an exclusive view of a full system environment, and these mechanisms also ensure isolation from other guests. Hypervisor-based management of virtual CPUs, memory and I/O devices is used to define resource consumption of guests.

As always, it depends on your needs. If you just want to have a number of separate instances to run applications, a container environment often provides greater efficiency, both in managing the application environment, starting the application instances, and in resource consumption. Simple modification and deployment of application environments has been a design principle of container solutions like Docker and is entirely in the DevOps spirit (guess you just have been waiting for more buzzwords).

If you want to have best isolation of environments and come from a server virtualization perspective, then system virtualization may be more relevant: Noisy neighbours are much less of an issue than with containers. While many of the container folks currently focus on improving container isolation, virtual machine isolation is still superior. Coming from physical servers, virtual servers are a natural step, and an existing ecosystem around server management can often be applied to virtual servers, too.

On z systems, Linux has good scalability (to run containers), but z is the platform with an extremely efficient virtualization technology (to run virtual servers), and it is inherent in the entire system architecture. Without having measured it, combining the technologies is probably less painful on z Systems than on other platforms.

There is a third way: both.

Combining system virtualization with containers can be done in multiple ways:

To summarize: it depends.

With system virtualization, the hypervisor provides a full virtual machine to a guest: the entire OS image including the kernel is now dedicated to the virtual machine. CPU virtualization is used to provide each guest with an exclusive view of a full system environment, and these mechanisms also ensure isolation from other guests. Hypervisor-based management of virtual CPUs, memory and I/O devices is used to define resource consumption of guests.

Which one is better?

As always, it depends on your needs. If you just want to have a number of separate instances to run applications, a container environment often provides greater efficiency, both in managing the application environment, starting the application instances, and in resource consumption. Simple modification and deployment of application environments has been a design principle of container solutions like Docker and is entirely in the DevOps spirit (guess you just have been waiting for more buzzwords).

If you want to have best isolation of environments and come from a server virtualization perspective, then system virtualization may be more relevant: Noisy neighbours are much less of an issue than with containers. While many of the container folks currently focus on improving container isolation, virtual machine isolation is still superior. Coming from physical servers, virtual servers are a natural step, and an existing ecosystem around server management can often be applied to virtual servers, too.

On z systems, Linux has good scalability (to run containers), but z is the platform with an extremely efficient virtualization technology (to run virtual servers), and it is inherent in the entire system architecture. Without having measured it, combining the technologies is probably less painful on z Systems than on other platforms.

There is a third way: both.

Combining system virtualization with containers can be done in multiple ways:

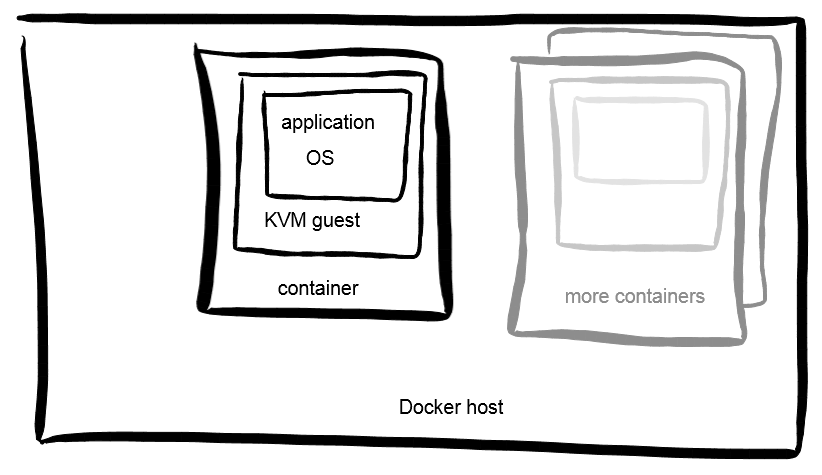

1. A Virtual Machine in a Container

Docker has quite some flexibility on where containers are deployed. One option (called "execution driver") is to use KVM images. This allows to use all the DevOps methods of Docker and combine it with best isolation available, at the cost of having to start up entire Operating System instances when starting containers -- meaning startup time and footprint. Memory efficiencies can only be realized through Kernel Samepage Merging (KSM) -- less effective and efficient, but it's a start.2. A Container in a Virtual Machine

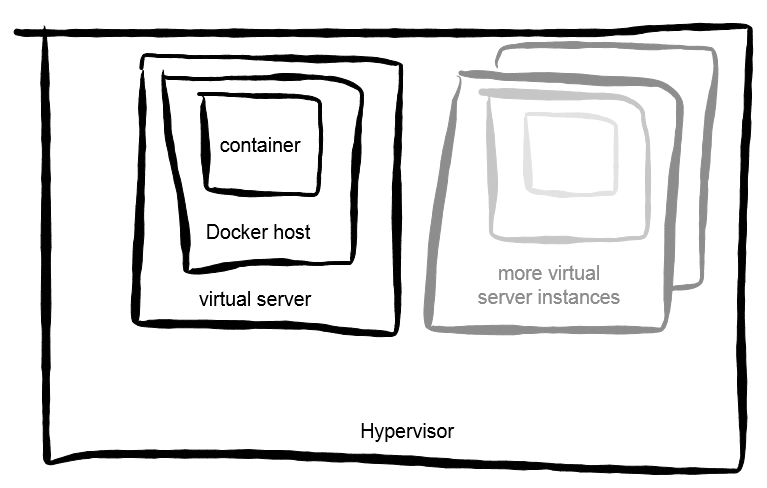

Conversely, you can run a virtual machine and start a container inside. The VM would not be controlled by Docker, but by existing virtualization management infrastructure. Once the OS instance is up, starting a container would then be done using Docker, and no special setup has to be performed for running containers. Again, containers would have strong isolation since the next container runs in another virtual system; footprint-wise, efficiencies would only be possible through memory deduplication techniques by the hypervisor.2b. Multiple Containers in a Virtual Machine

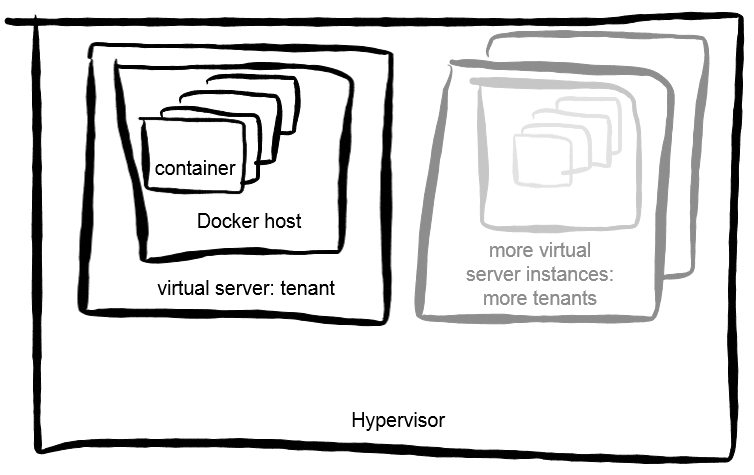

This is a variation of running Docker in a VM, suitable for multi-tenancy environments. Here, the assumption is that strongest isolation is only required between containers of different tenants, and straight Linux container isolation is good enough between several containers of the same tenant. Advantages are lower number of virtual machines to run, best isolation between tenants, and enjoying all the efficiencies of vanilla Docker setups.To summarize: it depends.