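Having read the CTPN paper last time, I wanted to strike while the iron is hot, so today I tracked down a TensorFlow implementation of CTPN to work through. Here is the author's GitHub project: https://github.com/eragonruan/text-detection-ctpn

All the code in this post has actually been run. Here I am only summarizing how the code works, to sharpen my own coding skills and hopefully get to the point of writing this kind of code myself soon !!!

Let's start by dissecting train_net.py.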

import pprint
import sys
import os.path

# os.getcwd() returns the current working directory;
# sys.path.append() adds it to the module search path.
sys.path.append(os.getcwd())
this_dir = os.path.dirname(__file__)  # directory part of the current script's path

from lib.fast_rcnn.train import get_training_roidb, train_net
from lib.fast_rcnn.config import cfg_from_file, get_output_dir, get_log_dir
from lib.datasets.factory import get_imdb
from lib.networks.factory import get_network
from lib.fast_rcnn.config import cfg

if __name__ == '__main__':
    cfg_from_file('ctpn/text.yml')  # text.yml holds the training parameters
    print('Using config:')
    # pprint is a function of the pprint module that prints objects in a standard, formatted way:
    # pprint(object, stream=None, indent=1, width=80, depth=None, *, compact=False)
    # Here it displays the training parameters in a readable form.
    pprint.pprint(cfg)
    imdb = get_imdb('voc_2007_trainval')  # load the dataset (VOC format)
    print(imdb)
    print('Loaded dataset `{:s}` for training'.format(imdb.name))
    roidb = get_training_roidb(imdb)  # build the region-of-interest database (roidb)
    output_dir = get_output_dir(imdb, None)  # directory where the results are saved
    log_dir = get_log_dir(imdb)  # directory for the logs written during training
    print('Output will be saved to `{:s}`'.format(output_dir))
    print('Logs will be saved to `{:s}`'.format(log_dir))
    network = get_network('VGGnet_train')  # get the VGG network structure
    # Train the VGG-based network on the image dataset and its roidb.
    train_net(network, imdb, roidb,
              output_dir=output_dir,
              log_dir=log_dir,
              pretrained_model='/home/chendali1/Gsj/text-detection-ctpn-master/data/pretrain/VGG_imagenet.npy',
              max_iters=int(cfg.TRAIN.max_steps),
              restore=bool(int(cfg.TRAIN.restore)))

We will mainly walk through two functions, the ones called here:

network = get_network('VGGnet_train')  # get the VGG network structure
# Train the VGG-based network on the image dataset and its roidb.
train_net(network, imdb, roidb,
          output_dir=output_dir,
          log_dir=log_dir,
          pretrained_model='/home/chendali1/Gsj/text-detection-ctpn-master/data/pretrain/VGG_imagenet.npy',
          max_iters=int(cfg.TRAIN.max_steps),
          restore=bool(int(cfg.TRAIN.restore)))

Let's look at get_network first. From the name you can guess that it probably has to do with defining the network structure.

def get_network(name):
    """Get a network by name."""
    if name.split('_')[0] == 'VGGnet':
        if name.split('_')[1] == 'test':
            return VGGnet_test()
        elif name.split('_')[1] == 'train':
            return VGGnet_train()
        else:
            raise KeyError('Unknown dataset: {}'.format(name))
    else:
        raise KeyError('Unknown dataset: {}'.format(name))

(This all feels like a familiar pattern, so let's keep going.) Next we look up VGGnet_train(), which turns out to be a class rather than a plain function:

class VGGnet_train(Network):  # the VGGnet network structure used for training
    def __init__(self, trainable=True):
        self.inputs = []
        # Placeholder for the input images: 3 channels, batch and spatial sizes left unspecified.
        self.data = tf.placeholder(tf.float32, shape=[None, None, None, 3], name='data')
        # Image info: [image_height, image_width, scale_ratio].
        self.im_info = tf.placeholder(tf.float32, shape=[None, 3], name='im_info')
        # Placeholder for the GT boxes: four coordinates plus a class label.
        self.gt_boxes = tf.placeholder(tf.float32, shape=[None, 5], name='gt_boxes')
        self.gt_ishard = tf.placeholder(tf.int32, shape=[None], name='gt_ishard')
        # Placeholder for the don't-care areas.
        self.dontcare_areas = tf.placeholder(tf.float32, shape=[None, 4], name='dontcare_areas')
        self.keep_prob = tf.placeholder(tf.float32)
        self.layers = dict({'data': self.data, 'im_info': self.im_info, 'gt_boxes': self.gt_boxes,
                            'gt_ishard': self.gt_ishard, 'dontcare_areas': self.dontcare_areas})
        self.trainable = trainable
        self.setup()

    def setup(self):
        # n_classes = 21
        n_classes = cfg.NCLASSES  # number of classes in the dataset
        # anchor_scales = [8, 16, 32]
        anchor_scales = cfg.ANCHOR_SCALES  # anchor scales
        _feat_stride = [16, ]  # feature stride of 16: four 2x2 poolings shrink the input by 2^4

        (self.feed('data')  # the VGG16 backbone
         .conv(3, 3, 64, 1, 1, name='conv1_1')
         .conv(3, 3, 64, 1, 1, name='conv1_2')
         .max_pool(2, 2, 2, 2, padding='VALID', name='pool1')
         .conv(3, 3, 128, 1, 1, name='conv2_1')
         .conv(3, 3, 128, 1, 1, name='conv2_2')
         .max_pool(2, 2, 2, 2, padding='VALID', name='pool2')
         .conv(3, 3, 256, 1, 1, name='conv3_1')
         .conv(3, 3, 256, 1, 1, name='conv3_2')
         .conv(3, 3, 256, 1, 1, name='conv3_3')
         .max_pool(2, 2, 2, 2, padding='VALID', name='pool3')
         .conv(3, 3, 512, 1, 1, name='conv4_1')
         .conv(3, 3, 512, 1, 1, name='conv4_2')
         .conv(3, 3, 512, 1, 1, name='conv4_3')
         .max_pool(2, 2, 2, 2, padding='VALID', name='pool4')
         .conv(3, 3, 512, 1, 1, name='conv5_1')
         .conv(3, 3, 512, 1, 1, name='conv5_2')
         .conv(3, 3, 512, 1, 1, name='conv5_3'))

        # ========= RPN ============
        # The RPN branch starts from conv5_3.
        (self.feed('conv5_3')
         .conv(3, 3, 512, 1, 1, name='rpn_conv/3x3'))
        # This is the in-network recurrent part from the paper (a bidirectional LSTM).
        (self.feed('rpn_conv/3x3').Bilstm(512, 128, 512, name='lstm_o'))
        (self.feed('lstm_o').lstm_fc(512, len(anchor_scales) * 10 * 4, name='rpn_bbox_pred'))
        (self.feed('lstm_o').lstm_fc(512, len(anchor_scales) * 10 * 2, name='rpn_cls_score'))

        # generating training labels on the fly
        # output: rpn_labels(HxWxA, 2) rpn_bbox_targets(HxWxA, 4) rpn_bbox_inside_weights rpn_bbox_outside_weights
        # Label every anchor and compute the regression targets (in delta form),
        # together with the inside and outside weights.
        (self.feed('rpn_cls_score', 'gt_boxes', 'gt_ishard', 'dontcare_areas', 'im_info')
         .anchor_target_layer(_feat_stride, anchor_scales, name='rpn-data'))

        # shape is (1, H, W, Ax2) -> (1, H, WxA, 2)
        # Apply softmax to the scores obtained above to get values between 0 and 1.
        (self.feed('rpn_cls_score')
         .spatial_reshape_layer(2, name='rpn_cls_score_reshape')
         .spatial_softmax(name='rpn_cls_prob'))
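The chained calls in setup() (feed(...).conv(...).conv(...)) work because every layer method returns the network object itself. Here is a minimal, TensorFlow-free sketch of that pattern. ToyNetwork and its scale and add ops are hypothetical stand-ins invented for illustration; only the decorator logic mirrors the layer decorator shown later in this post.

```python
def layer(op):
    """Wrap an op so that it consumes the pending inputs and returns the network."""
    def layer_decorated(self, *args, **kwargs):
        name = kwargs.setdefault('name', self.get_unique_name(op.__name__))
        if len(self.inputs) == 0:
            raise RuntimeError('No input found for layer %s.' % name)
        # A single pending input is passed as-is, several are passed as a list.
        layer_input = self.inputs[0] if len(self.inputs) == 1 else list(self.inputs)
        output = op(self, layer_input, *args, **kwargs)
        self.layers[name] = output  # register the result under its name
        self.feed(output)           # the result becomes the next layer's input
        return self                 # returning self is what enables chaining
    return layer_decorated


class ToyNetwork(object):
    """Hypothetical stand-in for Network; plain numbers play the role of tensors."""

    def __init__(self, inputs):
        self.inputs = []
        self.layers = dict(inputs)

    def feed(self, *args):
        # Reset the pending inputs; strings are looked up as layer names.
        self.inputs = []
        for item in args:
            if isinstance(item, str):
                item = self.layers[item]
            self.inputs.append(item)
        return self

    def get_unique_name(self, prefix):
        count = sum(key.startswith(prefix) for key in self.layers) + 1
        return '%s_%d' % (prefix, count)

    @layer
    def scale(self, value, factor, name):
        return value * factor

    @layer
    def add(self, values, name):
        return values[0] + values[1]


net = ToyNetwork({'data': 3})
net.feed('data').scale(2, name='double').scale(5, name='times_ten')
net.feed('data', 'times_ten').add(name='sum')
print(net.layers)
```

Feeding two names at once, as in the last call, is the same mechanism that lets anchor_target_layer receive five inputs in setup().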

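The functions used above, such as conv, have not been explained in detail yet, so the next job is to go through them one by one. My ability is limited, but I will explain as much as I can. The code is as follows: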

# -*- coding:utf-8 -*-
import numpy as np
import tensorflow as tf
from ..fast_rcnn.config import cfg
from ..rpn_msr.proposal_layer_tf import proposal_layer as proposal_layer_py
from ..rpn_msr.anchor_target_layer_tf import anchor_target_layer as anchor_target_layer_py

DEFAULT_PADDING = 'SAME'  # default padding mode


def layer(op):
    def layer_decorated(self, *args, **kwargs):
        # Automatically set a name if not provided.
        name = kwargs.setdefault('name', self.get_unique_name(op.__name__))
        # Figure out the layer inputs.
        if len(self.inputs) == 0:
            raise RuntimeError('No input variables found for layer %s.' % name)
        elif len(self.inputs) == 1:
            layer_input = self.inputs[0]
        else:
            layer_input = list(self.inputs)
        # Perform the operation and get the output.
        layer_output = op(self, layer_input, *args, **kwargs)
        # Add to layer LUT.
        self.layers[name] = layer_output
        # This output is now the input for the next layer.
        self.feed(layer_output)
        # Return self for chained calls.
        return self
    return layer_decorated


class Network(object):  # base class holding every operation needed to build the network
    def __init__(self, inputs, trainable=True):
        self.inputs = []
        self.layers = dict(inputs)  # the layers are kept in a dict keyed by name
        self.trainable = trainable  # whether the variables are trainable
        self.setup()

    def setup(self):
        # Left unimplemented here; subclasses must provide it.
        raise NotImplementedError('Must be subclassed.')

    def load(self, data_path, session, ignore_missing=False):
        data_dict = np.load(data_path, encoding='latin1').item()
        for key in data_dict:
            with tf.variable_scope(key, reuse=True):
                for subkey in data_dict[key]:
                    try:
                        var = tf.get_variable(subkey)
                        session.run(var.assign(data_dict[key][subkey]))
                        print("assign pretrain model " + subkey + " to " + key)
                    except ValueError:
                        print("ignore " + key)
                        if not ignore_missing:
                            raise

    def feed(self, *args):  # select the inputs (layer names or tensors) for the next operation
        assert len(args) != 0
        self.inputs = []
        for layer in args:
            if isinstance(layer, str):
                try:
                    layer = self.layers[layer]  # look the layer up by name
                    print(layer)
                except KeyError:
                    print(list(self.layers.keys()))
                    raise KeyError('Unknown layer name fed: %s' % layer)
            self.inputs.append(layer)  # queue it as an input of the next layer
        return self

    def get_output(self, layer):
        try:
            layer = self.layers[layer]
        except KeyError:
            print(list(self.layers.keys()))
            raise KeyError('Unknown layer name fed: %s' % layer)
        return layer

    def get_unique_name(self, prefix):
        id = sum(t.startswith(prefix) for t, _ in list(self.layers.items())) + 1
        return '%s_%d' % (prefix, id)

    def make_var(self, name, shape, initializer=None, trainable=True, regularizer=None):
        # tf.get_variable creates the variable, or returns the existing one
        # when the enclosing variable scope has reuse enabled.
        return tf.get_variable(name, shape, initializer=initializer, trainable=trainable,
                               regularizer=regularizer)

    def validate_padding(self, padding):
        assert padding in ('SAME', 'VALID')

    # The '@' decorator syntax was added in Python 2.4. A decorator must sit on the line
    # before the definition it decorates, so "@A def f():" on a single line is illegal.
    # A decorator is itself a function: it takes the decorated function as its argument and
    # returns a replacement with the same name (or any other callable). Functions and methods
    # can be decorated at module or class level; later Python versions also allow decorating classes.
    @layer
    def Bilstm(self, input, d_i, d_h, d_o, name, trainable=True):
        img = input
        with tf.variable_scope(name) as scope:
            shape = tf.shape(img)
            N, H, W, C = shape[0], shape[1], shape[2], shape[3]  # batch size, height, width, channels
            img = tf.reshape(img, [N * H, W, C])
            img.set_shape([None, None, d_i])  # restore the static channel size after the dynamic reshape

            lstm_fw_cell = tf.contrib.rnn.LSTMCell(d_h, state_is_tuple=True)  # d_h is the number of hidden units
            # With state_is_tuple=True the state is a (c_state, m_state) tuple.
            lstm_bw_cell = tf.contrib.rnn.LSTMCell(d_h, state_is_tuple=True)

            # The bidirectional RNN from the paper, built from a forward cell (lstm_fw_cell)
            # and a backward cell (lstm_bw_cell), which gives a bidirectional LSTM.
            lstm_out, last_state = tf.nn.bidirectional_dynamic_rnn(lstm_fw_cell, lstm_bw_cell, img,
                                                                   dtype=tf.float32)
            lstm_out = tf.concat(lstm_out, axis=-1)  # concatenate both directions along the last axis

            lstm_out = tf.reshape(lstm_out, [N * H * W, 2 * d_h])  # output of the bidirectional LSTM

            init_weights = tf.truncated_normal_initializer(stddev=0.1)
            init_biases = tf.constant_initializer(0.0)
            weights = self.make_var('weights', [2 * d_h, d_o], init_weights, trainable,
                                    regularizer=self.l2_regularizer(cfg.TRAIN.WEIGHT_DECAY))
            biases = self.make_var('biases', [d_o], init_biases, trainable)
            outputs = tf.matmul(lstm_out, weights) + biases

            outputs = tf.reshape(outputs, [N, H, W, d_o])
            return outputs

    @layer
    def lstm(self, input, d_i, d_h, d_o, name, trainable=True):
        img = input
        with tf.variable_scope(name) as scope:
            shape = tf.shape(img)
            N, H, W, C = shape[0], shape[1], shape[2], shape[3]
            img = tf.reshape(img, [N * H, W, C])
            img.set_shape([None, None, d_i])

            lstm_cell = tf.contrib.rnn.LSTMCell(d_h, state_is_tuple=True)
            initial_state = lstm_cell.zero_state(N * H, dtype=tf.float32)

            lstm_out, last_state = tf.nn.dynamic_rnn(lstm_cell, img,
                                                     initial_state=initial_state, dtype=tf.float32)

            lstm_out = tf.reshape(lstm_out, [N * H * W, d_h])

            init_weights = tf.truncated_normal_initializer(stddev=0.1)
            init_biases = tf.constant_initializer(0.0)
            weights = self.make_var('weights', [d_h, d_o], init_weights, trainable,
                                    regularizer=self.l2_regularizer(cfg.TRAIN.WEIGHT_DECAY))
            biases = self.make_var('biases', [d_o], init_biases, trainable)
            outputs = tf.matmul(lstm_out, weights) + biases

            outputs = tf.reshape(outputs, [N, H, W, d_o])
            return outputs

    @layer
    def lstm_fc(self, input, d_i, d_o, name, trainable=True):  # fully connected layer applied after the LSTM
        with tf.variable_scope(name) as scope:
            shape = tf.shape(input)
            N, H, W, C = shape[0], shape[1], shape[2], shape[3]
            input = tf.reshape(input, [N * H * W, C])

            init_weights = tf.truncated_normal_initializer(0.0, stddev=0.01)
            init_biases = tf.constant_initializer(0.0)
            kernel = self.make_var('weights', [d_i, d_o], init_weights, trainable,
                                   regularizer=self.l2_regularizer(cfg.TRAIN.WEIGHT_DECAY))
            biases = self.make_var('biases', [d_o], init_biases, trainable)

            _O = tf.matmul(input, kernel) + biases
            return tf.reshape(_O, [N, H, W, int(d_o)])

    @layer
    def conv(self, input, k_h, k_w, c_o, s_h, s_w, name, biased=True, relu=True,
             padding=DEFAULT_PADDING, trainable=True):
        # Arguments: self, input, kernel height, kernel width, output channels,
        # stride height, stride width, name, ...
        """ contribution by miraclebiu, and biased option"""
        self.validate_padding(padding)  # must be one of {SAME, VALID}
        c_i = input.get_shape()[-1]  # number of input channels (last dimension of input)
        convolve = lambda i, k: tf.nn.conv2d(i, k, [1, s_h, s_w, 1], padding=padding)  # the convolution itself
        with tf.variable_scope(name) as scope:
            init_weights = tf.truncated_normal_initializer(0.0, stddev=0.01)  # weight initializer
            init_biases = tf.constant_initializer(0.0)  # biases start at 0
            kernel = self.make_var('weights', [k_h, k_w, c_i, c_o], init_weights, trainable,
                                   regularizer=self.l2_regularizer(cfg.TRAIN.WEIGHT_DECAY))  # kernel shape
            if biased:
                biases = self.make_var('biases', [c_o], init_biases, trainable)
                conv = convolve(input, kernel)
                if relu:  # ReLU
                    bias = tf.nn.bias_add(conv, biases)
                    return tf.nn.relu(bias, name=scope.name)
                return tf.nn.bias_add(conv, biases, name=scope.name)
            else:
                conv = convolve(input, kernel)
                if relu:
                    return tf.nn.relu(conv, name=scope.name)
                return conv

    @layer
    def relu(self, input, name):  # ReLU
        return tf.nn.relu(input, name=name)

    @layer
    def max_pool(self, input, k_h, k_w, s_h, s_w, name, padding=DEFAULT_PADDING):  # max pooling layer
        self.validate_padding(padding)
        return tf.nn.max_pool(input,
                              ksize=[1, k_h, k_w, 1],
                              strides=[1, s_h, s_w, 1],
                              padding=padding,
                              name=name)

    @layer
    def avg_pool(self, input, k_h, k_w, s_h, s_w, name, padding=DEFAULT_PADDING):  # average pooling layer
        self.validate_padding(padding)
        return tf.nn.avg_pool(input,
                              ksize=[1, k_h, k_w, 1],
                              strides=[1, s_h, s_w, 1],
                              padding=padding,
                              name=name)

    @layer
    def proposal_layer(self, input, _feat_stride, anchor_scales, cfg_key, name):
        if isinstance(input[0], tuple):
            input[0] = input[0][0]
            # input[0] shape is (1, H, W, Ax2)
            # rpn_rois <- (1 x H x W x A, 5) [0, x1, y1, x2, y2]
        with tf.variable_scope(name) as scope:
            blob, bbox_delta = tf.py_func(proposal_layer_py,
                                          [input[0], input[1], input[2], cfg_key, _feat_stride, anchor_scales],
                                          [tf.float32, tf.float32])

            rpn_rois = tf.convert_to_tensor(tf.reshape(blob, [-1, 5]), name='rpn_rois')  # shape is (1 x H x W x A, 2)
            rpn_targets = tf.convert_to_tensor(bbox_delta, name='rpn_targets')  # shape is (1 x H x W x A, 4)
            self.layers['rpn_rois'] = rpn_rois
            self.layers['rpn_targets'] = rpn_targets

            return rpn_rois, rpn_targets

    @layer
    def anchor_target_layer(self, input, _feat_stride, anchor_scales, name):  # label every anchor and compute its regression targets
        if isinstance(input[0], tuple):
            input[0] = input[0][0]

        with tf.variable_scope(name) as scope:
            # 'rpn_cls_score', 'gt_boxes', 'gt_ishard', 'dontcare_areas', 'im_info'
            # correspond to input[0] through input[4].
            rpn_labels, rpn_bbox_targets, rpn_bbox_inside_weights, rpn_bbox_outside_weights = \
                tf.py_func(anchor_target_layer_py,
                           [input[0], input[1], input[2], input[3], input[4], _feat_stride, anchor_scales],
                           [tf.float32, tf.float32, tf.float32, tf.float32])

            rpn_labels = tf.convert_to_tensor(tf.cast(rpn_labels, tf.int32),
                                              name='rpn_labels')  # shape is (1 x H x W x A, 2)
            rpn_bbox_targets = tf.convert_to_tensor(rpn_bbox_targets,
                                                    name='rpn_bbox_targets')  # shape is (1 x H x W x A, 4)
            rpn_bbox_inside_weights = tf.convert_to_tensor(rpn_bbox_inside_weights,
                                                           name='rpn_bbox_inside_weights')  # shape is (1 x H x W x A, 4)
            rpn_bbox_outside_weights = tf.convert_to_tensor(rpn_bbox_outside_weights,
                                                            name='rpn_bbox_outside_weights')  # shape is (1 x H x W x A, 4)

            return rpn_labels, rpn_bbox_targets, rpn_bbox_inside_weights, rpn_bbox_outside_weights

    @layer
    def reshape_layer(self, input, d, name):
        input_shape = tf.shape(input)
        if name == 'rpn_cls_prob_reshape':
            #
            # transpose: (1, AxH, W, 2) -> (1, 2, AxH, W)
            # reshape: (1, 2xA, H, W)
            # transpose: -> (1, H, W, 2xA)
            return tf.transpose(
                tf.reshape(tf.transpose(input, [0, 3, 1, 2]),
                           [input_shape[0],
                            int(d),
                            tf.cast(tf.cast(input_shape[1], tf.float32) / tf.cast(d, tf.float32)
                                    * tf.cast(input_shape[3], tf.float32), tf.int32),
                            input_shape[2]]),
                [0, 2, 3, 1], name=name)
        else:
            return tf.transpose(
                tf.reshape(tf.transpose(input, [0, 3, 1, 2]),
                           [input_shape[0],
                            int(d),
                            tf.cast(tf.cast(input_shape[1], tf.float32)
                                    * (tf.cast(input_shape[3], tf.float32) / tf.cast(d, tf.float32)), tf.int32),
                            input_shape[2]]),
                [0, 2, 3, 1], name=name)

    @layer
    def spatial_reshape_layer(self, input, d, name):
        input_shape = tf.shape(input)
        # transpose: (1, H, W, A x d) -> (1, H, WxA, d)
        return tf.reshape(input, [input_shape[0], input_shape[1], -1, int(d)])

    @layer
    def lrn(self, input, radius, alpha, beta, name, bias=1.0):
        return tf.nn.local_response_normalization(input,
                                                  depth_radius=radius,
                                                  alpha=alpha,
                                                  beta=beta,
                                                  bias=bias,
                                                  name=name)

    @layer
    def concat(self, inputs, axis, name):
        # TensorFlow 1.x takes the keyword `axis` here; `concat_dim` belongs to the pre-1.0 API.
        return tf.concat(values=inputs, axis=axis, name=name)

    @layer
    def fc(self, input, num_out, name, relu=True, trainable=True):
        with tf.variable_scope(name) as scope:
            # only use the first input
            if isinstance(input, tuple):
                input = input[0]

            input_shape = input.get_shape()
            if input_shape.ndims == 4:
                dim = 1
                for d in input_shape[1:].as_list():
                    dim *= d
                feed_in = tf.reshape(tf.transpose(input, [0, 3, 1, 2]), [-1, dim])
            else:
                feed_in, dim = (input, int(input_shape[-1]))

            if name == 'bbox_pred':
                init_weights = tf.truncated_normal_initializer(0.0, stddev=0.001)
                init_biases = tf.constant_initializer(0.0)
            else:
                init_weights = tf.truncated_normal_initializer(0.0, stddev=0.01)
                init_biases = tf.constant_initializer(0.0)

            weights = self.make_var('weights', [dim, num_out], init_weights, trainable,
                                    regularizer=self.l2_regularizer(cfg.TRAIN.WEIGHT_DECAY))
            biases = self.make_var('biases', [num_out], init_biases, trainable)

            op = tf.nn.relu_layer if relu else tf.nn.xw_plus_b
            fc = op(feed_in, weights, biases, name=scope.name)
            return fc

    @layer
    def softmax(self, input, name):
        input_shape = tf.shape(input)
        if name == 'rpn_cls_prob':
            return tf.reshape(tf.nn.softmax(tf.reshape(input, [-1, input_shape[3]])),
                              [-1, input_shape[1], input_shape[2], input_shape[3]], name=name)
        else:
            return tf.nn.softmax(input, name=name)

    @layer
    def spatial_softmax(self, input, name):
        input_shape = tf.shape(input)
        # d = input.get_shape()[-1]
        return tf.reshape(tf.nn.softmax(tf.reshape(input, [-1, input_shape[3]])),
                          [-1, input_shape[1], input_shape[2], input_shape[3]], name=name)

    @layer
    def add(self, input, name):
        """contribution by miraclebiu"""
        return tf.add(input[0], input[1])

    @layer
    def batch_normalization(self, input, name, relu=True, is_training=False):
        """contribution by miraclebiu"""
        if relu:
            temp_layer = tf.contrib.layers.batch_norm(input, scale=True, center=True,
                                                      is_training=is_training, scope=name)
            return tf.nn.relu(temp_layer)
        else:
            return tf.contrib.layers.batch_norm(input, scale=True, center=True,
                                                is_training=is_training, scope=name)

    @layer
    def dropout(self, input, keep_prob, name):
        return tf.nn.dropout(input, keep_prob, name=name)

    def l2_regularizer(self, weight_decay=0.0005, scope=None):
        def regularizer(tensor):
            with tf.name_scope(scope, default_name='l2_regularizer', values=[tensor]):
                l2_weight = tf.convert_to_tensor(weight_decay,
                                                 dtype=tensor.dtype.base_dtype,
                                                 name='weight_decay')
                # return tf.mul(l2_weight, tf.nn.l2_loss(tensor), name='value')
                return tf.multiply(l2_weight, tf.nn.l2_loss(tensor), name='value')
        return regularizer

    def smooth_l1_dist(self, deltas, sigma2=9.0, name='smooth_l1_dist'):
        with tf.name_scope(name=name) as scope:
            deltas_abs = tf.abs(deltas)
            smoothL1_sign = tf.cast(tf.less(deltas_abs, 1.0 / sigma2), tf.float32)
            return tf.square(deltas) * 0.5 * sigma2 * smoothL1_sign + \
                   (deltas_abs - 0.5 / sigma2) * tf.abs(smoothL1_sign - 1)

    def build_loss(self, ohem=False):  # the loss has two parts: RPN classification and RPN box regression
        # classification loss
        rpn_cls_score = tf.reshape(self.get_output('rpn_cls_score_reshape'), [-1, 2])  # shape (HxWxA, 2)
        rpn_label = tf.reshape(self.get_output('rpn-data')[0], [-1])  # shape (HxWxA)
        # ignore_label(-1)
        fg_keep = tf.equal(rpn_label, 1)
        rpn_keep = tf.where(tf.not_equal(rpn_label, -1))
        rpn_cls_score = tf.gather(rpn_cls_score, rpn_keep)  # shape (N, 2)
        rpn_label = tf.gather(rpn_label, rpn_keep)
        rpn_cross_entropy_n = tf.nn.sparse_softmax_cross_entropy_with_logits(labels=rpn_label,
                                                                             logits=rpn_cls_score)

        # box loss
        rpn_bbox_pred = self.get_output('rpn_bbox_pred')  # shape (1, H, W, Ax4)
        rpn_bbox_targets = self.get_output('rpn-data')[1]
        rpn_bbox_inside_weights = self.get_output('rpn-data')[2]
        rpn_bbox_outside_weights = self.get_output('rpn-data')[3]
        rpn_bbox_pred = tf.gather(tf.reshape(rpn_bbox_pred, [-1, 4]), rpn_keep)  # shape (N, 4)
        rpn_bbox_targets = tf.gather(tf.reshape(rpn_bbox_targets, [-1, 4]), rpn_keep)
        rpn_bbox_inside_weights = tf.gather(tf.reshape(rpn_bbox_inside_weights, [-1, 4]), rpn_keep)
        rpn_bbox_outside_weights = tf.gather(tf.reshape(rpn_bbox_outside_weights, [-1, 4]), rpn_keep)

        rpn_loss_box_n = tf.reduce_sum(rpn_bbox_outside_weights * self.smooth_l1_dist(
            rpn_bbox_inside_weights * (rpn_bbox_pred - rpn_bbox_targets)), reduction_indices=[1])

        rpn_loss_box = tf.reduce_sum(rpn_loss_box_n) / (tf.reduce_sum(tf.cast(fg_keep, tf.float32)) + 1)
        rpn_cross_entropy = tf.reduce_mean(rpn_cross_entropy_n)

        model_loss = rpn_cross_entropy + rpn_loss_box

        # tf.get_collection(collection_name) returns the list stored in that collection.
        regularization_losses = tf.get_collection(tf.GraphKeys.REGULARIZATION_LOSSES)
        total_loss = tf.add_n(regularization_losses) + model_loss

        return total_loss, model_loss, rpn_cross_entropy, rpn_loss_box
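To make smooth_l1_dist above more concrete, here is a small scalar sketch in plain Python. The function name smooth_l1 is my own; the piecewise formula is read off the TensorFlow code: 0.5 * sigma2 * x^2 when |x| < 1/sigma2, and |x| - 0.5/sigma2 otherwise.

```python
def smooth_l1(delta, sigma2=9.0):
    """Scalar version of the smooth L1 distance used for the box regression loss."""
    abs_delta = abs(delta)
    if abs_delta < 1.0 / sigma2:
        # Quadratic zone: small errors are penalized gently.
        return 0.5 * sigma2 * delta * delta
    # Linear zone: large errors grow linearly, which limits the effect of outliers.
    return abs_delta - 0.5 / sigma2


for d in (0.05, 1.0 / 9.0, 2.0):
    print(d, smooth_l1(d))
```

The two branches meet at |x| = 1/sigma2, where both evaluate to 0.5/sigma2, so the loss stays continuous at the switch point.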

Below is the code that assigns ground-truth (GT) labels and regression targets to the anchors.

# -*- coding:utf-8 -*-
import numpy as np
import numpy.random as npr
from .generate_anchors import generate_anchors
from ..utils.bbox import bbox_overlaps, bbox_intersections
from ..fast_rcnn.config import cfg
from ..fast_rcnn.bbox_transform import bbox_transform

DEBUG = False


def anchor_target_layer(rpn_cls_score, gt_boxes, gt_ishard, dontcare_areas, im_info,
                        _feat_stride = [16,], anchor_scales = [16,]):
    """
    Assign anchors to ground-truth targets. Produces anchor classification
    labels and bounding-box regression targets.
    Parameters
    ----------
    rpn_cls_score: (1, H, W, Ax2) bg/fg scores of previous conv layer
    gt_boxes: (G, 5) vstack of [x1, y1, x2, y2, class]
    gt_ishard: (G, 1), 1 or 0 indicates difficult or not
    dontcare_areas: (D, 4), some areas may contain small objs but no labelling. D may be 0
    im_info: a list of [image_height, image_width, scale_ratios]
    _feat_stride: the downsampling ratio of feature map to the original input image
    anchor_scales: the scales to the basic_anchor (basic anchor is [16, 16])
    ----------
    Returns
    ----------
    rpn_labels : (HxWxA, 1), for each anchor, 0 denotes bg, 1 fg, -1 dontcare
    rpn_bbox_targets: (HxWxA, 4), distances of the anchors to the gt_boxes (may contain some transform)
                      that are the regression objectives
    rpn_bbox_inside_weights: (HxWxA, 4) weights of each boxes, mainly accepts hyper param in cfg
    rpn_bbox_outside_weights: (HxWxA, 4) used to balance the fg/bg,
                              because the numbers of bgs and fgs may be significantly different
    """
    # Generate the basic anchors; the generate_anchors shown later in this post returns 10 of them.
    _anchors = generate_anchors(scales=np.array(anchor_scales))
    _num_anchors = _anchors.shape[0]  # number of basic anchors (10)

    if DEBUG:
        print('anchors:')
        print(_anchors)
        print('anchor shapes:')
        print(np.hstack((
            _anchors[:, 2::4] - _anchors[:, 0::4],
            _anchors[:, 3::4] - _anchors[:, 1::4],
        )))
        _counts = cfg.EPS
        _sums = np.zeros((1, 4))
        _squared_sums = np.zeros((1, 4))
        _fg_sum = 0
        _bg_sum = 0
        _count = 0

    # allow boxes to sit over the edge by a small amount
    _allowed_border = 0
    # map of shape (..., H, W)
    #height, width = rpn_cls_score.shape[1:3]

    im_info = im_info[0]  # image height, image width and scale ratio

    # Place the anchors on the feature map and add the shifts to get
    # their actual coordinates in the image.
    # Algorithm:
    # for each (H, W) location i
    #   generate the anchor boxes centered on cell i
    #   apply predicted bbox deltas at cell i to each of the anchors
    # filter out-of-image anchors
    # measure GT overlap

    assert rpn_cls_score.shape[0] == 1, \
        'Only single item batches are supported'

    # map of shape (..., H, W)
    height, width = rpn_cls_score.shape[1:3]  # height and width of the feature map

    if DEBUG:
        print('AnchorTargetLayer: height', height, 'width', width)
        print('')
        print('im_size: ({}, {})'.format(im_info[0], im_info[1]))
        print('scale: {}'.format(im_info[2]))
        print('height, ({}, {})'.format(height, width))
        print('rpn: gt_boxes.shape', gt_boxes.shape)
        print('rpn: gt_boxes', gt_boxes)

    # 1. Generate proposals from bbox deltas and shifted anchors
    shift_x = np.arange(0, width) * _feat_stride
    shift_y = np.arange(0, height) * _feat_stride
    shift_x, shift_y = np.meshgrid(shift_x, shift_y)  # in W H order
    # K is H x W
    # .ravel() flattens a multi-dimensional array to 1-D (reshape(-1) does the same).
    # The shifts map every feature-map cell back to image coordinates.
    shifts = np.vstack((shift_x.ravel(), shift_y.ravel(),
                        shift_x.ravel(), shift_y.ravel())).transpose()
    # add A anchors (1, A, 4) to
    # cell K shifts (K, 1, 4) to get
    # shift anchors (K, A, 4)
    # reshape to (K*A, 4) shifted anchors
    A = _num_anchors  # number of anchors per location (10)
    K = shifts.shape[0]  # feature-map width times height, e.g. 50*37
    # Broadcast the anchors over all locations, then add the shifts.
    all_anchors = (_anchors.reshape((1, A, 4)) +
                   shifts.reshape((1, K, 4)).transpose((1, 0, 2)))
    all_anchors = all_anchors.reshape((K * A, 4))
    total_anchors = int(K * A)

    # only keep anchors inside the image
    # Anchors that stick out of the image are dropped.
    inds_inside = np.where(
        (all_anchors[:, 0] >= -_allowed_border) &
        (all_anchors[:, 1] >= -_allowed_border) &
        (all_anchors[:, 2] < im_info[1] + _allowed_border) &  # width
        (all_anchors[:, 3] < im_info[0] + _allowed_border)    # height
    )[0]

    if DEBUG:
        print('total_anchors', total_anchors)
        print('inds_inside', len(inds_inside))

    # keep only inside anchors
    anchors = all_anchors[inds_inside, :]  # the anchors that lie inside the image
    if DEBUG:
        print('anchors.shape', anchors.shape)

    # At this point the anchors are ready.
    #--------------------------------------------------------------
    # label: 1 is positive, 0 is negative, -1 is dont care
    # (A)
    labels = np.empty((len(inds_inside), ), dtype=np.float32)
    labels.fill(-1)  # initialize every label to -1

    # overlaps between the anchors and the gt boxes
    # overlaps (ex, gt), shape is A x G
    # Compute the overlap between anchors and GT boxes, which is used to label the anchors.
    # np.ascontiguousarray returns an array that is contiguous in memory.
    # With x anchors and y gt_boxes the result is an (x, y) array holding
    # the overlap of every anchor with every GT box.
    overlaps = bbox_overlaps(
        np.ascontiguousarray(anchors, dtype=np.float),
        np.ascontiguousarray(gt_boxes, dtype=np.float))
    argmax_overlaps = overlaps.argmax(axis=1)  # (A) for each anchor, the GT box with the largest overlap
    max_overlaps = overlaps[np.arange(len(inds_inside)), argmax_overlaps]
    gt_argmax_overlaps = overlaps.argmax(axis=0)  # G, for each GT box, the anchor with the largest overlap
    gt_max_overlaps = overlaps[gt_argmax_overlaps,
                               np.arange(overlaps.shape[1])]
    gt_argmax_overlaps = np.where(overlaps == gt_max_overlaps)[0]

    if not cfg.TRAIN.RPN_CLOBBER_POSITIVES:
        # assign bg labels first so that positive labels can clobber them
        labels[max_overlaps < cfg.TRAIN.RPN_NEGATIVE_OVERLAP] = 0  # background first: overlap below 0.3

    # fg label: for each gt, anchor with highest overlap
    labels[gt_argmax_overlaps] = 1  # the best-matching anchor(s) of each GT box count as foreground
    # fg label: above threshold IOU
    labels[max_overlaps >= cfg.TRAIN.RPN_POSITIVE_OVERLAP] = 1  # overlap above 0.7 counts as foreground

    if cfg.TRAIN.RPN_CLOBBER_POSITIVES:
        # assign bg labels last so that negative labels can clobber positives
        labels[max_overlaps < cfg.TRAIN.RPN_NEGATIVE_OVERLAP] = 0

    # preclude dontcare areas
    if dontcare_areas is not None and dontcare_areas.shape[0] > 0:  # we ignore don't-care areas for now
        # intersec shape is D x A
        intersecs = bbox_intersections(
            np.ascontiguousarray(dontcare_areas, dtype=np.float),  # D x 4
            np.ascontiguousarray(anchors, dtype=np.float)  # A x 4
        )
        intersecs_ = intersecs.sum(axis=0)  # A x 1
        labels[intersecs_ > cfg.TRAIN.DONTCARE_AREA_INTERSECTION_HI] = -1

    # We also ignore hard samples for now.
    # preclude hard samples that are highly occlusioned, truncated or difficult to see
    if cfg.TRAIN.PRECLUDE_HARD_SAMPLES and gt_ishard is not None and gt_ishard.shape[0] > 0:
        assert gt_ishard.shape[0] == gt_boxes.shape[0]
        gt_ishard = gt_ishard.astype(int)
        gt_hardboxes = gt_boxes[gt_ishard == 1, :]
        if gt_hardboxes.shape[0] > 0:
            # H x A
            hard_overlaps = bbox_overlaps(
                np.ascontiguousarray(gt_hardboxes, dtype=np.float),  # H x 4
                np.ascontiguousarray(anchors, dtype=np.float))  # A x 4
            hard_max_overlaps = hard_overlaps.max(axis=0)  # (A)
            labels[hard_max_overlaps >= cfg.TRAIN.RPN_POSITIVE_OVERLAP] = -1
            max_intersec_label_inds = hard_overlaps.argmax(axis=1)  # H x 1
            labels[max_intersec_label_inds] = -1

    # subsample positive labels if we have too many
    # Subsample the positives if there are too many: at most 128 positives are kept.
    # TODO: this may need changing later, since text split into fine-scale pieces
    # produces a lot of positives.
    num_fg = int(cfg.TRAIN.RPN_FG_FRACTION * cfg.TRAIN.RPN_BATCHSIZE)
    fg_inds = np.where(labels == 1)[0]
    if len(fg_inds) > num_fg:
        # npr.choice draws a random sample from a 1-D array (here without replacement).
        disable_inds = npr.choice(
            fg_inds, size=(len(fg_inds) - num_fg), replace=False)  # randomly drop some positives
        labels[disable_inds] = -1  # dropped positives become -1

    # subsample negative labels if we have too many
    # Subsample the negatives if there are too many.
    # Positives and negatives total 256, with at most 128 positives;
    # if there are fewer than 128 positives, negatives fill the batch up to 256.
    num_bg = cfg.TRAIN.RPN_BATCHSIZE - np.sum(labels == 1)
    bg_inds = np.where(labels == 0)[0]
    if len(bg_inds) > num_bg:
        disable_inds = npr.choice(
            bg_inds, size=(len(bg_inds) - num_bg), replace=False)
        labels[disable_inds] = -1
        #print "was %s inds, disabling %s, now %s inds" % (
            #len(bg_inds), len(disable_inds), np.sum(labels == 0))

    # The labels are done; now compute the regression targets of the rpn boxes.
    #--------------------------------------------------------------
    bbox_targets = np.zeros((len(inds_inside), 4), dtype=np.float32)
    # Targets are the offsets between each anchor and its best-matching GT box.
    bbox_targets = _compute_targets(anchors, gt_boxes[argmax_overlaps, :])

    bbox_inside_weights = np.zeros((len(inds_inside), 4), dtype=np.float32)
    # Inside weights: foreground anchors get the configured weight, everything else stays 0.
    bbox_inside_weights[labels == 1, :] = np.array(cfg.TRAIN.RPN_BBOX_INSIDE_WEIGHTS)

    bbox_outside_weights = np.zeros((len(inds_inside), 4), dtype=np.float32)
    if cfg.TRAIN.RPN_POSITIVE_WEIGHT < 0:  # uniform weights for now: positives 1, negatives 0
        # uniform weighting of examples (given non-uniform sampling)
        num_examples = np.sum(labels >= 0) + 1
        # positive_weights = np.ones((1, 4)) * 1.0 / num_examples
        # negative_weights = np.ones((1, 4)) * 1.0 / num_examples
        positive_weights = np.ones((1, 4))
        negative_weights = np.zeros((1, 4))
    else:
        assert ((cfg.TRAIN.RPN_POSITIVE_WEIGHT > 0) &
                (cfg.TRAIN.RPN_POSITIVE_WEIGHT < 1))
        positive_weights = (cfg.TRAIN.RPN_POSITIVE_WEIGHT /
                            (np.sum(labels == 1)) + 1)
        negative_weights = ((1.0 - cfg.TRAIN.RPN_POSITIVE_WEIGHT) /
                            (np.sum(labels == 0)) + 1)
    bbox_outside_weights[labels == 1, :] = positive_weights  # outside weights: foreground 1, background 0
    bbox_outside_weights[labels == 0, :] = negative_weights

    if DEBUG:
        _sums += bbox_targets[labels == 1, :].sum(axis=0)
        _squared_sums += (bbox_targets[labels == 1, :] ** 2).sum(axis=0)
        _counts += np.sum(labels == 1)
        means = _sums / _counts
        stds = np.sqrt(_squared_sums / _counts - means ** 2)
        print('means:')
        print(means)
        print('stdevs:')
        print(stds)

    # map up to original set of anchors
    # The anchors outside the image were dropped earlier; now they are added back.
    labels = _unmap(labels, total_anchors, inds_inside, fill=-1)  # their label is -1, i.e. don't care
    bbox_targets = _unmap(bbox_targets, total_anchors, inds_inside, fill=0)  # their targets are 0, i.e. no value
    bbox_inside_weights = _unmap(bbox_inside_weights, total_anchors, inds_inside, fill=0)  # inside weights filled with 0
    bbox_outside_weights = _unmap(bbox_outside_weights, total_anchors, inds_inside, fill=0)  # outside weights filled with 0

    if DEBUG:
        print('rpn: max max_overlap', np.max(max_overlaps))
        print('rpn: num_positive', np.sum(labels == 1))
        print('rpn: num_negative', np.sum(labels == 0))
        _fg_sum += np.sum(labels == 1)
        _bg_sum += np.sum(labels == 0)
        _count += 1
        print('rpn: num_positive avg', _fg_sum / _count)
        print('rpn: num_negative avg', _bg_sum / _count)

    # labels
    labels = labels.reshape((1, height, width, A))  # reshape the labels
    rpn_labels = labels

    # bbox_targets
    bbox_targets = bbox_targets \
        .reshape((1, height, width, A * 4))  # reshape

    rpn_bbox_targets = bbox_targets
    # bbox_inside_weights
    bbox_inside_weights = bbox_inside_weights \
        .reshape((1, height, width, A * 4))

    rpn_bbox_inside_weights = bbox_inside_weights

    # bbox_outside_weights
    bbox_outside_weights = bbox_outside_weights \
        .reshape((1, height, width, A * 4))
    rpn_bbox_outside_weights = bbox_outside_weights

    return rpn_labels, rpn_bbox_targets, rpn_bbox_inside_weights, rpn_bbox_outside_weights


def _unmap(data, count, inds, fill=0):
    """ Unmap a subset of item (data) back to the original set of items (of
    size count) """
    if len(data.shape) == 1:
        ret = np.empty((count, ), dtype=np.float32)
        ret.fill(fill)
        ret[inds] = data
    else:
        ret = np.empty((count, ) + data.shape[1:], dtype=np.float32)
        ret.fill(fill)
        ret[inds, :] = data
    return ret


def _compute_targets(ex_rois, gt_rois):
    """Compute bounding-box regression targets for an image."""

    assert ex_rois.shape[0] == gt_rois.shape[0]
    assert ex_rois.shape[1] == 4
    assert gt_rois.shape[1] == 5

    return bbox_transform(ex_rois, gt_rois[:, :4]).astype(np.float32, copy=False)
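The labeling rule in anchor_target_layer can be tried out on a toy example. The sketch below is dependency-free Python rather than the NumPy/Cython code from the repository. The helper names iou and label_anchors are my own, the thresholds 0.3 and 0.7 are the values mentioned in the comments above (the real ones come from cfg), and the "+1" inclusive-pixel convention in iou is an assumption about how bbox_overlaps measures box sizes. It skips the don't-care, hard-sample and subsampling steps.

```python
def iou(box_a, box_b):
    """IoU of two [x1, y1, x2, y2] boxes, assuming inclusive pixel coordinates."""
    iw = min(box_a[2], box_b[2]) - max(box_a[0], box_b[0]) + 1
    ih = min(box_a[3], box_b[3]) - max(box_a[1], box_b[1]) + 1
    if iw <= 0 or ih <= 0:
        return 0.0
    inter = iw * ih
    area_a = (box_a[2] - box_a[0] + 1) * (box_a[3] - box_a[1] + 1)
    area_b = (box_b[2] - box_b[0] + 1) * (box_b[3] - box_b[1] + 1)
    return inter / float(area_a + area_b - inter)


def label_anchors(anchors, gt_boxes, neg_thresh=0.3, pos_thresh=0.7):
    """Return one label per anchor: 1 foreground, 0 background, -1 ignored."""
    overlaps = [[iou(a, g) for g in gt_boxes] for a in anchors]
    max_overlaps = [max(row) for row in overlaps]
    labels = [-1] * len(anchors)
    # Background first, so that positives can overwrite it afterwards.
    for i, ov in enumerate(max_overlaps):
        if ov < neg_thresh:
            labels[i] = 0
    # For each GT box, the anchor(s) with the highest overlap become foreground.
    for j in range(len(gt_boxes)):
        best = max(overlaps[i][j] for i in range(len(anchors)))
        for i in range(len(anchors)):
            if overlaps[i][j] == best:
                labels[i] = 1
    # Any anchor above the positive threshold is foreground as well.
    for i, ov in enumerate(max_overlaps):
        if ov >= pos_thresh:
            labels[i] = 1
    return labels


gt = [[0, 0, 15, 31]]          # one GT box, 16 wide and 32 high
anchors = [[0, 0, 15, 31],     # identical to the GT box, IoU 1.0
           [0, 8, 15, 39],     # shifted down by 8 px, IoU 0.6
           [0, 24, 15, 55],    # shifted down by 24 px, IoU about 0.14
           [32, 0, 47, 31]]    # no overlap at all
print(label_anchors(anchors, gt))
```

The second anchor is the interesting case: its overlap falls between the two thresholds, so it keeps the label -1 and contributes to neither loss term.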

The code that generates the anchors is as follows:

import numpy as np


def generate_basic_anchors(sizes, base_size=16):
    base_anchor = np.array([0, 0, base_size - 1, base_size - 1], np.int32)  # base_anchor is [0, 0, 15, 15]
    anchors = np.zeros((len(sizes), 4), np.int32)  # anchors has shape [10, 4]
    index = 0
    for h, w in sizes:
        anchors[index] = scale_anchor(base_anchor, h, w)
        index += 1
    return anchors


def scale_anchor(anchor, h, w):
    x_ctr = (anchor[0] + anchor[2]) * 0.5  # 7.5
    y_ctr = (anchor[1] + anchor[3]) * 0.5  # 7.5
    scaled_anchor = anchor.copy()
    scaled_anchor[0] = x_ctr - w / 2  # xmin
    scaled_anchor[2] = x_ctr + w / 2  # xmax
    scaled_anchor[1] = y_ctr - h / 2  # ymin
    scaled_anchor[3] = y_ctr + h / 2  # ymax
    return scaled_anchor


def generate_anchors(base_size=16, ratios=[0.5, 1, 2],
                     scales=2**np.arange(3, 6)):
    heights = [11, 16, 23, 33, 48, 68, 97, 139, 198, 283]  # the 10 anchor heights
    widths = [16]
    sizes = []
    for h in heights:
        for w in widths:
            sizes.append((h, w))  # sizes ends up holding 10 (h, w) pairs
    return generate_basic_anchors(sizes)


if __name__ == '__main__':
    import time
    t = time.time()
    a = generate_anchors()
    print(time.time() - t)
    print(a)
    from IPython import embed; embed()
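To see what these anchors look like, and how anchor_target_layer spreads them over the feature map, here is a dependency-free sketch. The names basic_anchors and shifted_anchors are my own. It assumes Python 3 true division and uses int() to mimic the truncation toward zero that I would expect when floats are assigned into the int32 array above, so treat the printed coordinates as illustrative rather than as verified output of the repository code.

```python
HEIGHTS = [11, 16, 23, 33, 48, 68, 97, 139, 198, 283]  # same heights as generate_anchors
WIDTH = 16                                             # every anchor is 16 px wide


def basic_anchors(base_size=16):
    """Ten [x1, y1, x2, y2] anchors centered on the 16x16 base box [0, 0, 15, 15]."""
    ctr = (base_size - 1) * 0.5  # 7.5, the center of the base box
    anchors = []
    for h in HEIGHTS:
        anchors.append([int(ctr - WIDTH / 2.0), int(ctr - h / 2.0),
                        int(ctr + WIDTH / 2.0), int(ctr + h / 2.0)])
    return anchors


def shifted_anchors(feat_h, feat_w, feat_stride=16):
    """Copy the basic anchors to every feature-map cell (row-major, anchors innermost)."""
    base = basic_anchors()
    result = []
    for row in range(feat_h):
        for col in range(feat_w):
            shift_x, shift_y = col * feat_stride, row * feat_stride
            for x1, y1, x2, y2 in base:
                result.append([x1 + shift_x, y1 + shift_y, x2 + shift_x, y2 + shift_y])
    return result


base = basic_anchors()
print(len(base), base[0], base[-1])
grid = shifted_anchors(2, 3)  # a tiny 2x3 feature map
print(len(grid), grid[10], grid[30])
```

The ordering matches the (K, A, 4) layout in anchor_target_layer: all anchors of one cell come first, cells advance along the width, then along the height. Many of the tall anchors get negative y coordinates near the top of the image, which is why the inds_inside filter removes a large share of them.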

The training code (lib/fast_rcnn/train.py, where train_net is defined)

# coding: utf-8
from __future__ import print_function
import numpy as np
import os
import tensorflow as tf
from ..roi_data_layer.layer import RoIDataLayer
from ..utils.timer import Timer
from ..roi_data_layer import roidb as rdl_roidb
from ..fast_rcnn.config import cfg

_DEBUG = False


class SolverWrapper(object):
    def __init__(self, sess, network, imdb, roidb, output_dir, logdir, pretrained_model=None):
        # Initialize the SolverWrapper.
        self.net = network
        self.imdb = imdb
        self.roidb = roidb
        self.output_dir = output_dir
        self.pretrained_model = pretrained_model

        print('Computing bounding-box regression targets...')
        if cfg.TRAIN.BBOX_REG:
            self.bbox_means, self.bbox_stds = rdl_roidb.add_bbox_regression_targets(roidb)
        print('done')

        # For checkpoint
        self.saver = tf.train.Saver(max_to_keep=100, write_version=tf.train.SaverDef.V2)
        self.writer = tf.summary.FileWriter(logdir=logdir,
                                            graph=tf.get_default_graph(),
                                            flush_secs=5)

    def snapshot(self, sess, iter):
        net = self.net
        if cfg.TRAIN.BBOX_REG and 'bbox_pred' in net.layers and cfg.TRAIN.BBOX_NORMALIZE_TARGETS:
            # save original values
            with tf.variable_scope('bbox_pred', reuse=True):
                weights = tf.get_variable("weights")
                biases = tf.get_variable("biases")

            orig_0 = weights.eval()
            orig_1 = biases.eval()

            # scale and shift with bbox reg unnormalization; then save snapshot
            weights_shape = weights.get_shape().as_list()
            sess.run(weights.assign(orig_0 * np.tile(self.bbox_stds, (weights_shape[0], 1))))
            sess.run(biases.assign(orig_1 * self.bbox_stds + self.bbox_means))

        if not os.path.exists(self.output_dir):
            os.makedirs(self.output_dir)

        infix = ('_' + cfg.TRAIN.SNAPSHOT_INFIX
                 if cfg.TRAIN.SNAPSHOT_INFIX != '' else '')
        filename = (cfg.TRAIN.SNAPSHOT_PREFIX + infix +
                    '_iter_{:d}'.format(iter + 1) + '.ckpt')
        filename = os.path.join(self.output_dir, filename)

        self.saver.save(sess, filename)
        print('Wrote snapshot to: {:s}'.format(filename))

        # Same guard as above, so that orig_0 / orig_1 are guaranteed to exist.
        if cfg.TRAIN.BBOX_REG and 'bbox_pred' in net.layers and cfg.TRAIN.BBOX_NORMALIZE_TARGETS:
            # restore net to original state
            sess.run(weights.assign(orig_0))
            sess.run(biases.assign(orig_1))

    def build_image_summary(self):
        # A simple graph for writing the image summary
        log_image_data = tf.placeholder(tf.uint8, [None, None, 3])
        log_image_name = tf.placeholder(tf.string)
        # import tensorflow.python.ops.gen_logging_ops as logging_ops
        from tensorflow.python.ops import gen_logging_ops
        from tensorflow.python.framework import ops as _ops
        log_image = gen_logging_ops._image_summary(log_image_name, tf.expand_dims(log_image_data, 0), max_images=1)
        _ops.add_to_collection(_ops.GraphKeys.SUMMARIES, log_image)
        # log_image = tf.summary.image(log_image_name, tf.expand_dims(log_image_data, 0), max_outputs=1)
        return log_image, log_image_data, log_image_name

    def train_model(self, sess, max_iters, restore=False):
        # Network training loop.
        data_layer = get_data_layer(self.roidb, self.imdb.num_classes)

        total_loss, model_loss, rpn_cross_entropy, rpn_loss_box = self.net.build_loss(ohem=cfg.TRAIN.OHEM)

        # scalar summary
        tf.summary.scalar('rpn_reg_loss', rpn_loss_box)
        tf.summary.scalar('rpn_cls_loss', rpn_cross_entropy)
        tf.summary.scalar('model_loss', model_loss)
        tf.summary.scalar('total_loss', total_loss)
        summary_op = tf.summary.merge_all()

        log_image, log_image_data, log_image_name = self.build_image_summary()

        # optimizer
        lr = tf.Variable(cfg.TRAIN.LEARNING_RATE, trainable=False)
        if cfg.TRAIN.SOLVER == 'Adam':
            opt = tf.train.AdamOptimizer(cfg.TRAIN.LEARNING_RATE)
        elif cfg.TRAIN.SOLVER == 'RMS':
            opt = tf.train.RMSPropOptimizer(cfg.TRAIN.LEARNING_RATE)
        else:
            # lr = tf.Variable(0.0, trainable=False)
            momentum = cfg.TRAIN.MOMENTUM
            opt = tf.train.MomentumOptimizer(lr, momentum)

        global_step = tf.Variable(0, trainable=False)
        with_clip = True
        if with_clip:
            # tf.trainable_variables returns the list of variables to be trained
            tvars = tf.trainable_variables()
            # Gradient clipping is there to deal with exploding gradients
            # (it does not help with vanishing gradients). If the weights
            # change too violently in a single iteration, the loss can easily
            # diverge; clipping keeps each update within a reasonable range.
            grads, norm = tf.clip_by_global_norm(tf.gradients(total_loss, tvars), 10.0)
            train_op = opt.apply_gradients(list(zip(grads, tvars)), global_step=global_step)
        else:
            train_op = opt.minimize(total_loss, global_step=global_step)

        # initialize variables
        sess.run(tf.global_variables_initializer())
        restore_iter = 0

        # load vgg16
        if self.pretrained_model is not None and not restore:
            try:
                print(('Loading pretrained model '
                       'weights from {:s}').format(self.pretrained_model))
                self.net.load(self.pretrained_model, sess, True)
            except:
                raise Exception('Check your pretrained model {:s}'.format(self.pretrained_model))
            self.net.load(self.pretrained_model, sess, True)  # redundant second load

        # resuming a trainer
        if restore:
            try:
                ckpt = tf.train.get_checkpoint_state(self.output_dir)
                print('Restoring from {}...'.format(ckpt.model_checkpoint_path), end=' ')
                self.saver.restore(sess, ckpt.model_checkpoint_path)
                stem = os.path.splitext(os.path.basename(ckpt.model_checkpoint_path))[0]
                restore_iter = int(stem.split('_')[-1])
                sess.run(global_step.assign(restore_iter))
                print('done')
            except:
                raise Exception('Check your pretrained {:s}'.format(ckpt.model_checkpoint_path))

        last_snapshot_iter = -1
        timer = Timer()
        for iter in range(restore_iter, max_iters):
            timer.tic()
            # learning rate
            if iter != 0 and iter % cfg.TRAIN.STEPSIZE == 0:
                sess.run(tf.assign(lr, lr.eval() * cfg.TRAIN.GAMMA))
                print(lr)

            # get one batch
            blobs = data_layer.forward()

            feed_dict = {
                self.net.data: blobs['data'],
                self.net.im_info: blobs['im_info'],
                self.net.keep_prob: 0.5,
                self.net.gt_boxes: blobs['gt_boxes'],
                self.net.gt_ishard: blobs['gt_ishard'],
                self.net.dontcare_areas: blobs['dontcare_areas']
            }
            res_fetches = []
            fetch_list = [total_loss, model_loss, rpn_cross_entropy, rpn_loss_box,
                          summary_op,
                          train_op] + res_fetches

            total_loss_val, model_loss_val, rpn_loss_cls_val, rpn_loss_box_val, \
                summary_str, _ = sess.run(fetches=fetch_list, feed_dict=feed_dict)

            self.writer.add_summary(summary=summary_str, global_step=global_step.eval())

            _diff_time = timer.toc(average=False)

            if (iter) % (cfg.TRAIN.DISPLAY) == 0:
                print('iter: %d / %d, total loss: %.4f, model loss: %.4f, rpn_loss_cls: %.4f, rpn_loss_box: %.4f, lr: %f' %
                      (iter, max_iters, total_loss_val, model_loss_val, rpn_loss_cls_val, rpn_loss_box_val, lr.eval()))
                print('speed: {:.3f}s / iter'.format(_diff_time))

            if (iter + 1) % cfg.TRAIN.SNAPSHOT_ITERS == 0:
                last_snapshot_iter = iter
                self.snapshot(sess, iter)

        if last_snapshot_iter != iter:
            self.snapshot(sess, iter)


def get_training_roidb(imdb):
    """Returns a roidb (Region of Interest database) for use in training."""
    if cfg.TRAIN.USE_FLIPPED:  # data augmentation: add horizontally flipped images
        print('Appending horizontally-flipped training examples...')
        imdb.append_flipped_images()
        print('done')

    print('Preparing training data...')
    if cfg.TRAIN.HAS_RPN:
        rdl_roidb.prepare_roidb(imdb)
    else:
        rdl_roidb.prepare_roidb(imdb)
    print('done')

    return imdb.roidb


def get_data_layer(roidb, num_classes):
    """return a data layer."""
    if cfg.TRAIN.HAS_RPN:
        if cfg.IS_MULTISCALE:
            # obsolete
            # layer = GtDataLayer(roidb)
            raise Exception("Calling caffe modules...")
        else:
            layer = RoIDataLayer(roidb, num_classes)
    else:
        layer = RoIDataLayer(roidb, num_classes)

    return layer


def train_net(network, imdb, roidb, output_dir, log_dir, pretrained_model=None, max_iters=40000, restore=False):
    """Train a Fast R-CNN network."""

    # config = tf.ConfigProto(allow_soft_placement=True)
    # config.gpu_options.allocator_type = 'BFC'
    # config.gpu_options.per_process_gpu_memory_fraction = 0.75
    # with tf.Session(config=config) as sess:
    with tf.Session() as sess:
        sw = SolverWrapper(sess, network, imdb, roidb, output_dir, log_dir, pretrained_model=pretrained_model)
        print('Solving...')
        sw.train_model(sess, max_iters, restore)
        print('done solving')
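Two pieces of the training loop are easier to follow with numbers: the global-norm gradient clipping and the stepped learning rate. Below is a NumPy sketch of both, not the TensorFlow implementation. The function names `clip_by_global_norm` (my NumPy stand-in) and `stepped_lr`, as well as the example values (base rate 1e-5, gamma 0.1, step size 30000), are assumptions for illustration; the real values come from `cfg.TRAIN` / text.yml.

```python
import numpy as np


def clip_by_global_norm(grads, clip_norm):
    """Rescale all gradients by one common factor so that their joint
    L2 norm is at most clip_norm. Returns (clipped_grads, global_norm)."""
    global_norm = np.sqrt(sum(np.sum(np.square(g)) for g in grads))
    # If global_norm <= clip_norm the scale is 1 and nothing changes.
    scale = clip_norm / max(global_norm, clip_norm)
    return [g * scale for g in grads], global_norm


def stepped_lr(base_lr, gamma, stepsize, iteration):
    """Learning rate after multiplying by gamma once every `stepsize`
    iterations, for a run that starts at iteration 0."""
    return base_lr * gamma ** (iteration // stepsize)


# Toy gradients with joint norm sqrt(3^2 + 4^2 + 12^2) = 13.
grads = [np.array([3.0, 4.0]), np.array([12.0])]
clipped, gnorm = clip_by_global_norm(grads, clip_norm=10.0)
print(gnorm)
print(clipped)

# Hypothetical schedule values, only to show the shape of the decay.
for it in (0, 29999, 30000, 60000):
    print(it, stepped_lr(1e-5, 0.1, 30000, it))
```

Because all gradients share one scale factor, clipping changes the length of the update but not its direction. Also worth noting: as far as I can tell from the listing, the `lr` variable is only passed to `MomentumOptimizer`, while the Adam and RMSProp branches get `cfg.TRAIN.LEARNING_RATE` directly, so the step decay seems to affect only the Momentum branch.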

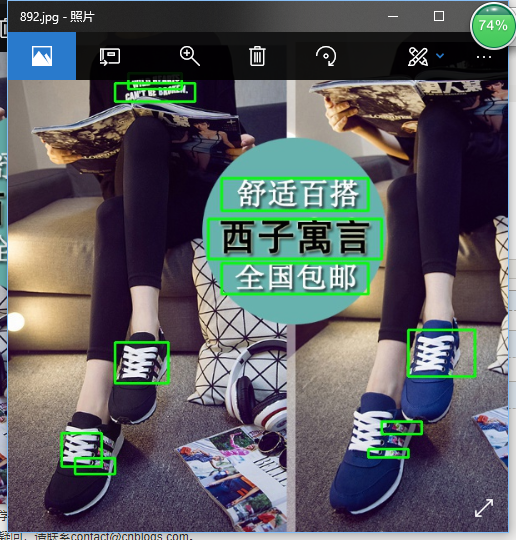

Test result from my experiment

The results are not great; I haven't tuned the parameters very well yet...