So, this article also deploys the Kubernetes cluster from binary packages.

Contents of this chapter

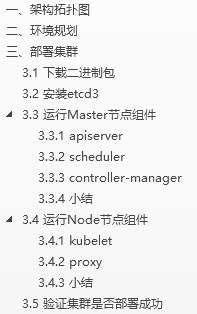

1. Architecture Topology

2. Environment Planning

| Role | IP | Components |
|---|---|---|
| master | 192.168.0.211 | etcd, kube-apiserver, kube-controller-manager, kube-scheduler |
| node01 | 192.168.0.212 | kubelet, kube-proxy, docker |
| node02 | 192.168.0.213 | kubelet, kube-proxy, docker |

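Environment notes:

Operating system: Ubuntu 16.04 or CentOS 7

Kubernetes version: v1.8.3

Docker version: v17.09-ce

All of these were the latest stable releases at the time of writing.

Disable SELinux.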

3. Deploy the Cluster

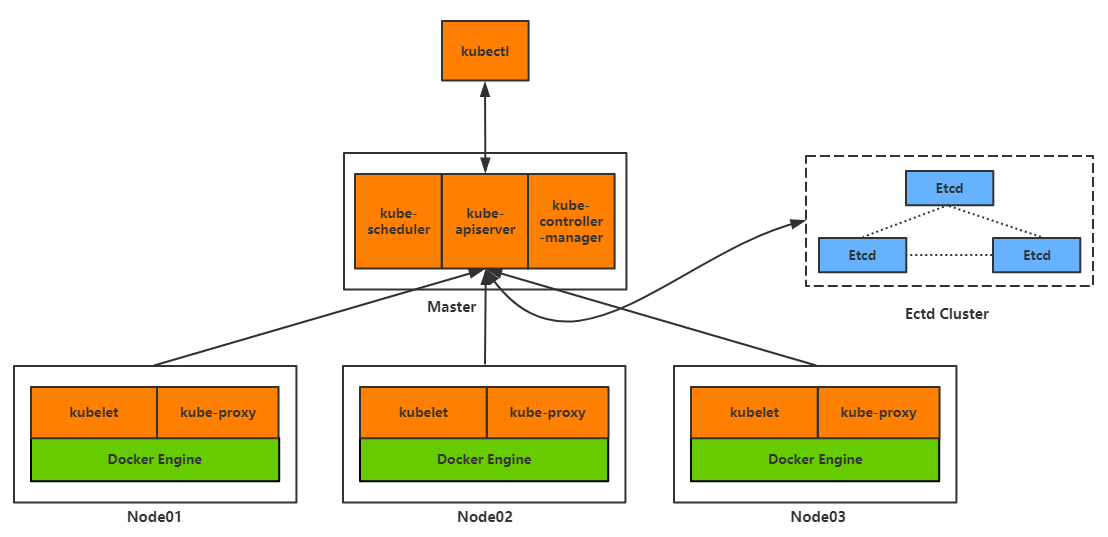

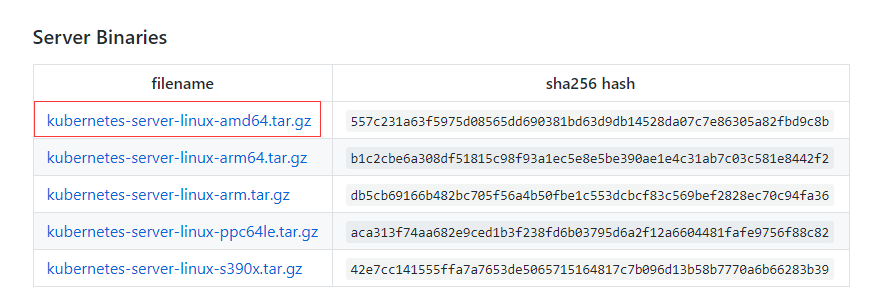

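3.1 Download the Binary Packages

Open the URL below and download the two packages marked with red boxes.

https://github.com/kubernetes/kubernetes/blob/master/CHANGELOG-1.8.md#v183

After downloading, upload them to the servers:

Upload kubernetes-server-linux-amd64.tar.gz to the master node.

Upload kubernetes-node-linux-amd64.tar.gz to the node nodes (node01 and node02).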
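You can also script the download and upload. The sketch below shows one way to do it. It makes several assumptions:

- The tarballs can be fetched from `https://dl.k8s.io/v1.8.3/`. Check this against the links on the CHANGELOG page.
- `wget` and `scp` are installed.
- root can SSH to every host in the table above.

`k8s_tarball_url`, `distribute_tarballs` and `DRY_RUN` are hypothetical names introduced here.

```shell
#!/usr/bin/env bash
# Sketch: fetch the v1.8.3 tarballs and copy them to the hosts from section 2.
# Assumptions: dl.k8s.io URL layout, root SSH access, wget/scp installed.
K8S_VERSION="v1.8.3"
MASTER="192.168.0.211"
NODES="192.168.0.212 192.168.0.213"
SERVER_PKG="kubernetes-server-linux-amd64.tar.gz"
NODE_PKG="kubernetes-node-linux-amd64.tar.gz"

# Print the (assumed) download URL of one release tarball.
k8s_tarball_url() {
  echo "https://dl.k8s.io/${K8S_VERSION}/$1"
}

# Download both tarballs, then send the server package to the master
# and the node package to every node. DRY_RUN=1 only prints the commands.
distribute_tarballs() {
  local run="" pkg node
  if [ "${DRY_RUN:-0}" = "1" ]; then run="echo"; fi
  for pkg in "$SERVER_PKG" "$NODE_PKG"; do
    $run wget -q -O "$pkg" "$(k8s_tarball_url "$pkg")"
  done
  $run scp "$SERVER_PKG" "root@${MASTER}:~/"
  for node in $NODES; do
    $run scp "$NODE_PKG" "root@${node}:~/"
  done
}
```

Source the script first. Then run `DRY_RUN=1 distribute_tarballs` to review the commands, and run `distribute_tarballs` to execute them.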

3.2 Install etcd3

```shell
k8s-master# yum install -y etcd
k8s-master# vi /etc/etcd/etcd.conf
ETCD_NAME="default"
ETCD_DATA_DIR="/var/lib/etcd/default"
ETCD_LISTEN_CLIENT_URLS="http://0.0.0.0:2379"
# Advertise an address clients can actually reach, not 0.0.0.0
ETCD_ADVERTISE_CLIENT_URLS="http://192.168.0.211:2379"
k8s-master# systemctl enable etcd
k8s-master# systemctl start etcd
```

Note: on Ubuntu, the etcd configuration file is /etc/default/etcd.
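The file location differs between distributions, so a small helper can pick the path and write the same four settings. This sketch makes two assumptions:

- `/etc/os-release` is present.
- The Ubuntu package reads the same `ETCD_*` variables from `/etc/default/etcd`.

`etcd_conf_path` and `write_etcd_conf` are hypothetical helpers.

```shell
#!/usr/bin/env bash
# Sketch: write the etcd settings from above to the distro-specific file.
# Assumption: /etc/os-release exists and its ID identifies the distro.

# Print the etcd config path: Ubuntu/Debian use /etc/default/etcd,
# CentOS/RHEL use /etc/etcd/etcd.conf. Returns 1 for unknown distros.
etcd_conf_path() {
  local os_release="${1:-/etc/os-release}" id
  id="$(. "$os_release" && echo "$ID")"
  case "$id" in
    ubuntu|debian) echo "/etc/default/etcd" ;;
    centos|rhel)   echo "/etc/etcd/etcd.conf" ;;
    *)             return 1 ;;
  esac
}

# Write the four settings, advertising the given client IP.
write_etcd_conf() {
  local ip="$1" dest="$2"
  cat > "$dest" <<EOF
ETCD_NAME="default"
ETCD_DATA_DIR="/var/lib/etcd/default"
ETCD_LISTEN_CLIENT_URLS="http://0.0.0.0:2379"
ETCD_ADVERTISE_CLIENT_URLS="http://${ip}:2379"
EOF
}
```

For example, run `write_etcd_conf 192.168.0.211 "$(etcd_conf_path)"`, then enable and start etcd as shown above.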

3.3 Run the Master Node Components

```shell
k8s-master# tar zxvf kubernetes-server-linux-amd64.tar.gz
k8s-master# mkdir -p /opt/kubernetes/{bin,cfg}
k8s-master# mv kubernetes/server/bin/{kube-apiserver,kube-scheduler,kube-controller-manager,kubectl} /opt/kubernetes/bin
```
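Before writing any configuration, it helps to confirm that the binaries actually landed in `/opt/kubernetes/bin` and are executable. `check_bins` is a hypothetical helper, not part of the Kubernetes release. It takes the directory followed by the binary names and reports each missing one on stderr.

```shell
#!/usr/bin/env bash
# Sketch: verify that each named binary exists in the directory and is executable.
# Returns 0 if all are present, 1 if any is missing.
check_bins() {
  local dir="$1"; shift
  local missing=0 bin
  for bin in "$@"; do
    if [ ! -x "$dir/$bin" ]; then
      echo "missing or not executable: $dir/$bin" >&2
      missing=1
    fi
  done
  return "$missing"
}
```

On the master, run `check_bins /opt/kubernetes/bin kube-apiserver kube-scheduler kube-controller-manager kubectl`. The same call with `kubelet kube-proxy` works for the nodes in section 3.4.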

3.3.1 apiserver

Create the configuration file:

```shell
# vi /opt/kubernetes/cfg/kube-apiserver
# Log to standard error instead of files
KUBE_LOGTOSTDERR="--logtostderr=true"
# Log verbosity level
KUBE_LOG_LEVEL="--v=4"
# etcd server address
KUBE_ETCD_SERVERS="--etcd-servers=http://192.168.0.211:2379"
# Insecure (unauthenticated) API listen address
KUBE_API_ADDRESS="--insecure-bind-address=0.0.0.0"
# Insecure API listen port
KUBE_API_PORT="--insecure-port=8080"
# Address advertised to cluster members for reaching the API server
KUBE_ADVERTISE_ADDR="--advertise-address=192.168.0.211"
# Allow containers to request privileged mode (default: false)
KUBE_ALLOW_PRIV="--allow-privileged=false"
# IP range from which Service cluster IPs are allocated
KUBE_SERVICE_ADDRESSES="--service-cluster-ip-range=10.10.10.0/24"
```

Create the systemd unit file:

```ini
# vi /lib/systemd/system/kube-apiserver.service
[Unit]
Description=Kubernetes API Server
Documentation=https://github.com/kubernetes/kubernetes

[Service]
EnvironmentFile=-/opt/kubernetes/cfg/kube-apiserver
#ExecStart=/opt/kubernetes/bin/kube-apiserver ${KUBE_APISERVER_OPTS}
ExecStart=/opt/kubernetes/bin/kube-apiserver ${KUBE_LOGTOSTDERR} ${KUBE_LOG_LEVEL} \
  ${KUBE_ETCD_SERVERS} ${KUBE_API_ADDRESS} ${KUBE_API_PORT} \
  ${KUBE_ADVERTISE_ADDR} ${KUBE_ALLOW_PRIV} ${KUBE_SERVICE_ADDRESSES}
Restart=on-failure

[Install]
WantedBy=multi-user.target
```

Start the service and enable it at boot:

```shell
# systemctl daemon-reload
# systemctl enable kube-apiserver
# systemctl restart kube-apiserver
```

Note: kube-apiserver uses etcd3 as its storage backend by default. If you are running etcd2, add the option `--storage-backend=etcd2` when starting it.
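To decide whether the flag is needed, check the installed etcd version with `etcd --version`. The packaged etcd on some distributions, such as Ubuntu 16.04, may still be a 2.x release. The helper below is a sketch that assumes the first line of that output has the form `etcd Version: X.Y.Z`. `storage_backend_flag` is a hypothetical name.

```shell
#!/usr/bin/env bash
# Sketch: choose the kube-apiserver storage flag from `etcd --version` output
# read on stdin. Assumption: a line looks like "etcd Version: 3.2.7".
storage_backend_flag() {
  local major
  major="$(sed -n 's/^etcd Version: *\([0-9][0-9]*\)\..*/\1/p' | head -n 1)"
  case "$major" in
    2) echo "--storage-backend=etcd2" ;;
    3) : ;;  # etcd3 is the default backend in v1.8, so no flag is printed
    *) echo "unrecognized etcd version output" >&2; return 1 ;;
  esac
}
```

For example, `etcd --version | storage_backend_flag` prints `--storage-backend=etcd2` for a 2.x server and nothing for 3.x. Add any printed flag to the kube-apiserver options.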

3.3.2 scheduler

Create the configuration file:

```shell
# vi /opt/kubernetes/cfg/kube-scheduler
KUBE_LOGTOSTDERR="--logtostderr=true"
KUBE_LOG_LEVEL="--v=4"
KUBE_MASTER="--master=192.168.0.211:8080"
KUBE_LEADER_ELECT="--leader-elect"
```

Create the systemd unit file:

```ini
# vi /lib/systemd/system/kube-scheduler.service
[Unit]
Description=Kubernetes Scheduler
Documentation=https://github.com/kubernetes/kubernetes

[Service]
EnvironmentFile=-/opt/kubernetes/cfg/kube-scheduler
ExecStart=/opt/kubernetes/bin/kube-scheduler ${KUBE_LOGTOSTDERR} ${KUBE_LOG_LEVEL} ${KUBE_MASTER} ${KUBE_LEADER_ELECT}
Restart=on-failure

[Install]
WantedBy=multi-user.target
```

Start the service and enable it at boot:

```shell
# systemctl daemon-reload
# systemctl enable kube-scheduler
# systemctl restart kube-scheduler
```

3.3.3 controller-manager

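Create the configuration file:

```shell
# vi /opt/kubernetes/cfg/kube-controller-manager
KUBE_LOGTOSTDERR="--logtostderr=true"
KUBE_LOG_LEVEL="--v=4"
KUBE_MASTER="--master=192.168.0.211:8080"
# Referenced by ${KUBE_LEADER_ELECT} in the unit file below
KUBE_LEADER_ELECT="--leader-elect"
```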

Create the systemd unit file:

```ini
# vi /lib/systemd/system/kube-controller-manager.service
[Unit]
Description=Kubernetes Controller Manager
Documentation=https://github.com/kubernetes/kubernetes

[Service]
EnvironmentFile=-/opt/kubernetes/cfg/kube-controller-manager
ExecStart=/opt/kubernetes/bin/kube-controller-manager ${KUBE_LOGTOSTDERR} ${KUBE_LOG_LEVEL} ${KUBE_MASTER} ${KUBE_LEADER_ELECT}
Restart=on-failure

[Install]
WantedBy=multi-user.target
```
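The three master unit files above differ only in the component name, the description and the variables passed to `ExecStart`. As a sketch, a hypothetical `write_unit` helper can render units in the same layout. `UNIT_DIR`, `BIN_DIR` and `CFG_DIR` are assumptions that match the paths used in this article.

```shell
#!/usr/bin/env bash
# Sketch: render a systemd unit in the same layout as the master units.
# Args: component name, description, then the variable names for ExecStart.
UNIT_DIR="${UNIT_DIR:-/lib/systemd/system}"
BIN_DIR="/opt/kubernetes/bin"
CFG_DIR="/opt/kubernetes/cfg"

write_unit() {
  local name="$1" desc="$2"; shift 2
  local args="" var
  for var in "$@"; do
    args="$args \${$var}"   # emits a literal ${VAR} for systemd to expand
  done
  cat > "$UNIT_DIR/$name.service" <<EOF
[Unit]
Description=$desc
Documentation=https://github.com/kubernetes/kubernetes

[Service]
EnvironmentFile=-$CFG_DIR/$name
ExecStart=$BIN_DIR/$name$args
Restart=on-failure

[Install]
WantedBy=multi-user.target
EOF
}
```

For instance, `write_unit kube-controller-manager "Kubernetes Controller Manager" KUBE_LOGTOSTDERR KUBE_LOG_LEVEL KUBE_MASTER KUBE_LEADER_ELECT` reproduces the controller-manager unit. Run `systemctl daemon-reload` afterwards.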

Start the service and enable it at boot:

```shell
# systemctl daemon-reload
# systemctl enable kube-controller-manager
# systemctl restart kube-controller-manager
```

3.3.4 Summary

All master components are now running. Note that startup order matters: start etcd first, then kube-apiserver. The other components can start in any order.
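You can encode the ordering rule in a start script that waits for each dependency to respond before moving on. This sketch makes three assumptions:

- `curl` is installed.
- etcd answers `GET /version` on port 2379.
- kube-apiserver answers `GET /healthz` on the insecure port 8080 configured above.

`wait_until` and `start_master` are hypothetical names.

```shell
#!/usr/bin/env bash
# Sketch: start the master components in dependency order.

# Retry a command once per second until it succeeds or the timeout (seconds) expires.
wait_until() {
  local timeout="$1"; shift
  local i
  for ((i = 0; i < timeout; i++)); do
    "$@" && return 0
    sleep 1
  done
  return 1
}

start_master() {
  systemctl start etcd || return 1
  wait_until 30 curl -sf http://127.0.0.1:2379/version >/dev/null \
    || { echo "etcd did not respond" >&2; return 1; }
  systemctl start kube-apiserver || return 1
  wait_until 30 curl -sf http://127.0.0.1:8080/healthz >/dev/null \
    || { echo "kube-apiserver did not become healthy" >&2; return 1; }
  # scheduler and controller-manager have no ordering requirement between them
  systemctl start kube-scheduler kube-controller-manager
}
```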

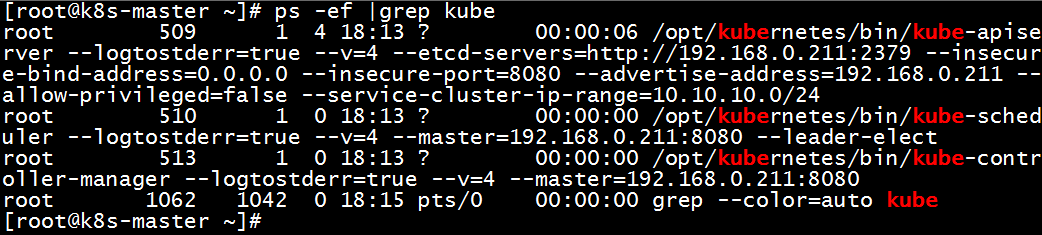

查看Master节点组件进程状态:

说明组件都在运行。

If a component fails to start, check its startup log, for example:

```shell
# journalctl -u kube-apiserver
```
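To check all components in one pass and pull the logs of any that failed, the following sketch wraps `systemctl is-active` and `journalctl`. `report_units` is a hypothetical helper, and showing 20 log lines is an arbitrary choice.

```shell
#!/usr/bin/env bash
# Sketch: print each unit's state; for any unit that is not active,
# show the last 20 lines of its journal. Returns 1 if any unit is down.
report_units() {
  local unit rc=0
  for unit in "$@"; do
    if systemctl is-active --quiet "$unit"; then
      echo "$unit: active"
    else
      echo "$unit: NOT active"
      journalctl -u "$unit" -n 20 --no-pager
      rc=1
    fi
  done
  return "$rc"
}
```

On the master, run `report_units etcd kube-apiserver kube-scheduler kube-controller-manager`. On a node, run `report_units docker kubelet kube-proxy`.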

3.4 Run the Node Components

```shell
k8s-node01# tar zxvf kubernetes-node-linux-amd64.tar.gz
k8s-node01# mkdir -p /opt/kubernetes/{bin,cfg}
k8s-node01# mv kubernetes/node/bin/{kubelet,kube-proxy} /opt/kubernetes/bin/
```

3.4.1 kubelet

Create the kubeconfig file:

```yaml
# vi /opt/kubernetes/cfg/kubelet.kubeconfig
apiVersion: v1
kind: Config
clusters:
  - cluster:
      server: http://192.168.0.211:8080
    name: local
contexts:
  - context:
      cluster: local
    name: local
current-context: local
```

kubelet uses the kubeconfig file to connect to the apiserver on the master.
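The kubeconfig only needs the apiserver URL, so you can generate it instead of typing it. `write_kubeconfig` below is a hypothetical helper. It writes the same content as the file above, with the server URL and output path as parameters.

```shell
#!/usr/bin/env bash
# Sketch: write the kubelet kubeconfig for the given apiserver URL.
# Args: server URL, destination file.
write_kubeconfig() {
  local server="$1" dest="$2"
  cat > "$dest" <<EOF
apiVersion: v1
kind: Config
clusters:
  - cluster:
      server: ${server}
    name: local
contexts:
  - context:
      cluster: local
    name: local
current-context: local
EOF
}
```

For example, run `write_kubeconfig http://192.168.0.211:8080 /opt/kubernetes/cfg/kubelet.kubeconfig`. Where `kubectl` is available, `kubectl config set-cluster`, `kubectl config set-context` and `kubectl config use-context` with `--kubeconfig` can build an equivalent file.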

Create the configuration file:

```shell
# vi /opt/kubernetes/cfg/kubelet
# Log to standard error instead of files
KUBE_LOGTOSTDERR="--logtostderr=true"
# Log verbosity level
KUBE_LOG_LEVEL="--v=4"
# IP address the kubelet serves on
NODE_ADDRESS="--address=192.168.0.212"
# Port the kubelet serves on
NODE_PORT="--port=10250"
# Custom node name
NODE_HOSTNAME="--hostname-override=192.168.0.212"
# kubeconfig path, used to connect to the API server
KUBELET_KUBECONFIG="--kubeconfig=/opt/kubernetes/cfg/kubelet.kubeconfig"
# Allow containers to request privileged mode (default: false)
KUBE_ALLOW_PRIV="--allow-privileged=false"
# Cluster DNS settings
KUBELET_DNS_IP="--cluster-dns=10.10.10.2"
KUBELET_DNS_DOMAIN="--cluster-domain=cluster.local"
# Do not fail to start when swap is enabled on the host
KUBELET_SWAP="--fail-swap-on=false"
```
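The `--cluster-dns` address (10.10.10.2) should lie inside the apiserver's `--service-cluster-ip-range` (10.10.10.0/24), because the cluster DNS Service would be assigned that ClusterIP. Below is a quick check in bash. It is a sketch that assumes IPv4 dotted-decimal input. `ip_to_int` and `ip_in_cidr` are hypothetical helpers.

```shell
#!/usr/bin/env bash
# Sketch: test whether an IPv4 address lies inside a CIDR block.

# Convert a dotted-decimal IPv4 address to a 32-bit integer.
ip_to_int() {
  local a b c d
  IFS=. read -r a b c d <<< "$1"
  echo $(( (a << 24) | (b << 16) | (c << 8) | d ))
}

# Succeed if $1 is inside the network $2 (e.g. 10.10.10.0/24).
ip_in_cidr() {
  local ip="$1" net="${2%/*}" bits="${2#*/}"
  local mask=$(( bits == 0 ? 0 : (0xFFFFFFFF << (32 - bits)) & 0xFFFFFFFF ))
  (( ($(ip_to_int "$ip") & mask) == ($(ip_to_int "$net") & mask) ))
}
```

For the values used in this article, `ip_in_cidr 10.10.10.2 10.10.10.0/24 && echo ok` prints `ok`.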

Create the systemd unit file:

```ini
# vi /lib/systemd/system/kubelet.service
[Unit]
Description=Kubernetes Kubelet
After=docker.service
Requires=docker.service

[Service]
EnvironmentFile=-/opt/kubernetes/cfg/kubelet
ExecStart=/opt/kubernetes/bin/kubelet ${KUBE_LOGTOSTDERR} ${KUBE_LOG_LEVEL} \
  ${NODE_ADDRESS} ${NODE_PORT} ${NODE_HOSTNAME} ${KUBELET_KUBECONFIG} \
  ${KUBE_ALLOW_PRIV} ${KUBELET_DNS_IP} ${KUBELET_DNS_DOMAIN} ${KUBELET_SWAP}
Restart=on-failure
KillMode=process

[Install]
WantedBy=multi-user.target
```

Start the service and enable it at boot:

```shell
# systemctl daemon-reload
# systemctl enable kubelet
# systemctl restart kubelet
```

3.4.2 proxy

Create the configuration file:

```shell
# vi /opt/kubernetes/cfg/kube-proxy
# Log to standard error instead of files
KUBE_LOGTOSTDERR="--logtostderr=true"
# Log verbosity level
KUBE_LOG_LEVEL="--v=4"
# Custom node name
NODE_HOSTNAME="--hostname-override=192.168.0.212"
# API server address
KUBE_MASTER="--master=http://192.168.0.211:8080"
```

Create the systemd unit file:

```ini
# vi /lib/systemd/system/kube-proxy.service
[Unit]
Description=Kubernetes Proxy
After=network.target

[Service]
EnvironmentFile=-/opt/kubernetes/cfg/kube-proxy
ExecStart=/opt/kubernetes/bin/kube-proxy ${KUBE_LOGTOSTDERR} ${KUBE_LOG_LEVEL} ${NODE_HOSTNAME} ${KUBE_MASTER}
Restart=on-failure

[Install]
WantedBy=multi-user.target
```

Start the service and enable it at boot:

```shell
# systemctl daemon-reload
# systemctl enable kube-proxy
# systemctl restart kube-proxy
```

3.4.3 Summary

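Other nodes join the cluster the same way as node01, with two changes on each node. Set kubelet's `--address` and `--hostname-override` options to that node's own IP, and set kube-proxy's `--hostname-override` option to the same IP.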
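For example, after copying `/opt/kubernetes` from node01 to another node, only the per-node address values need to change. The sketch below rewrites just the `--address` and `--hostname-override` values in the kubelet and kube-proxy config files and leaves the master address untouched. It assumes GNU `sed -i`. `retarget_node_cfg` is a hypothetical name.

```shell
#!/usr/bin/env bash
# Sketch: retarget node01's kubelet/kube-proxy config files to a new node IP.
# Args: old IP, new IP, config dir (default /opt/kubernetes/cfg).
retarget_node_cfg() {
  local old_ip="$1" new_ip="$2" cfg_dir="${3:-/opt/kubernetes/cfg}"
  local old_re
  # Escape the dots so the old IP is matched literally
  old_re="$(printf '%s' "$old_ip" | sed 's/\./\\./g')"
  sed -i \
    -e "s/--address=${old_re}\"/--address=${new_ip}\"/" \
    -e "s/--hostname-override=${old_re}\"/--hostname-override=${new_ip}\"/" \
    "$cfg_dir/kubelet" "$cfg_dir/kube-proxy"
}
```

On node02, run `retarget_node_cfg 192.168.0.212 192.168.0.213`, then `systemctl restart kubelet kube-proxy`.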

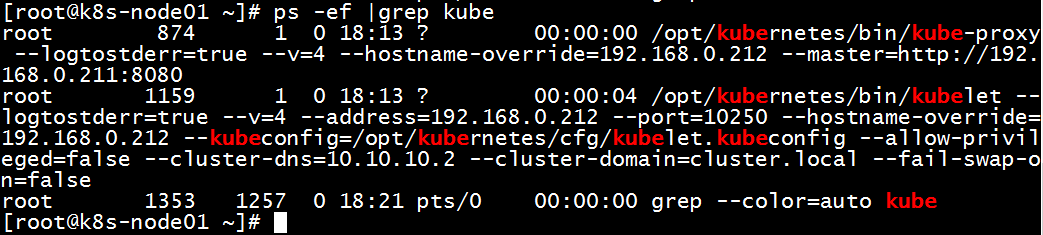

查看Node节点组件进程状态:

说明组件都在运行。

If a component fails to start, check its startup log, for example:

```shell
# journalctl -u kubelet
```

3.5 Verify the Deployment

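Add the binary directory to PATH for convenience. Single quotes keep `$PATH` from being expanded when the line is written, so /etc/profile extends whatever PATH is current at login:

```shell
# echo 'export PATH=$PATH:/opt/kubernetes/bin' >> /etc/profile
# source /etc/profile
```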

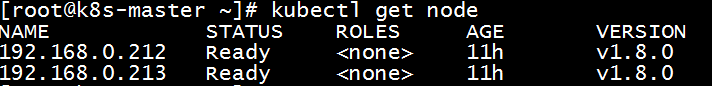

查看集群节点状态:

两个节点都加入到了kubernetes集群,就此部署完成。
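You can also automate the node check, for example in a provisioning script. This sketch reads `kubectl get nodes` output and succeeds only if every expected node is listed as `Ready`. It assumes the first two columns are NAME and STATUS. `nodes_ready` is a hypothetical helper.

```shell
#!/usr/bin/env bash
# Sketch: read `kubectl get nodes` output on stdin; print each expected node
# that is missing or not Ready, and return 1 if there is any.
nodes_ready() {
  awk -v want="$*" '
    NR > 1 { status[$1] = $2 }
    END {
      n = split(want, names, " ")
      for (i = 1; i <= n; i++) {
        name = names[i]
        if (!(name in status)) { print name ": missing"; bad = 1 }
        else if (status[name] != "Ready") { print name ": " status[name]; bad = 1 }
      }
      exit bad
    }'
}
```

Usage: `kubectl get nodes | nodes_ready 192.168.0.212 192.168.0.213`. The node names are the `--hostname-override` values.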