Download Sqoop

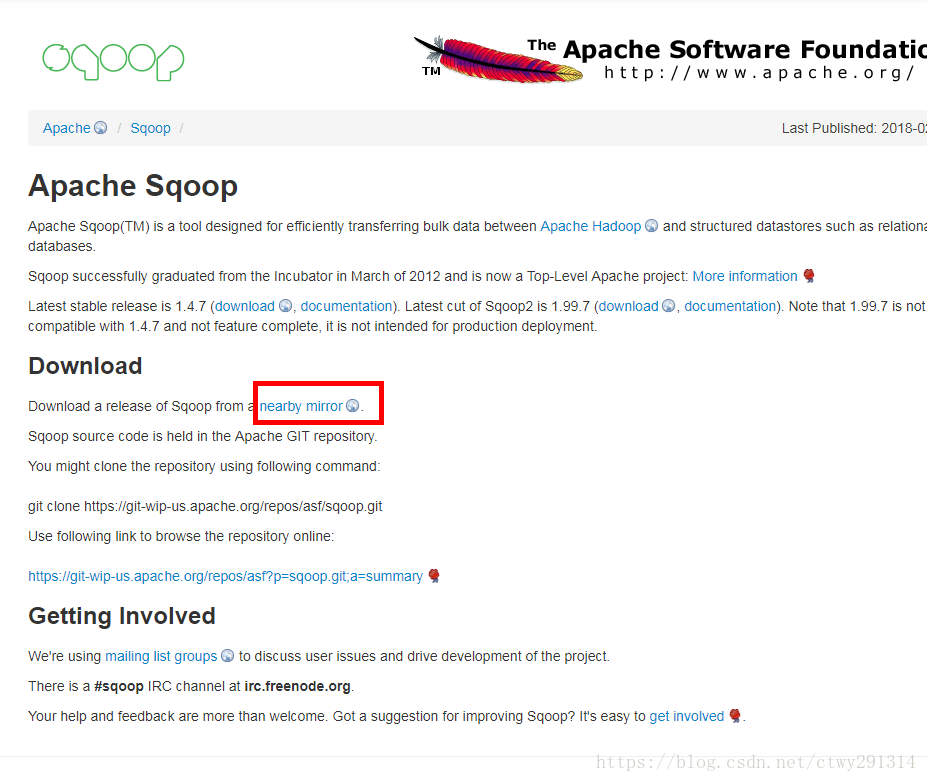

Official site

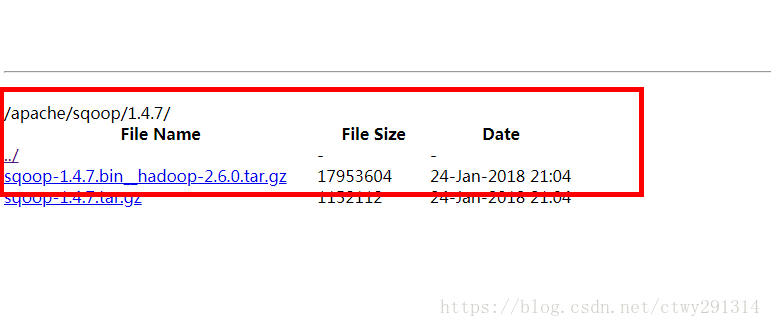

wget http://mirrors.hust.edu.cn/apache/sqoop/1.4.7/sqoop-1.4.7.bin__hadoop-2.6.0.tar.gz

Installation and configuration

Extract the archive

tar -zxvf sqoop-1.4.7.bin__hadoop-2.6.0.tar.gz

Move the folder

mv sqoop-1.4.7.bin__hadoop-2.6.0 /usr/local/hadoop/

Configure environment variables

# .bashrc

# User specific aliases and functions

alias rm='rm -i'

alias cp='cp -i'

alias mv='mv -i'

# Source global definitions

if [ -f /etc/bashrc ]; then

. /etc/bashrc

fi

source /etc/profile

# Hadoop Environment Variables

export HADOOP_HOME=/usr/local/hadoop/hadoop-2.8.0

export HADOOP_INSTALL=$HADOOP_HOME

export HADOOP_MAPRED_HOME=$HADOOP_HOME

export HADOOP_COMMON_HOME=$HADOOP_HOME

export HADOOP_HDFS_HOME=$HADOOP_HOME

export YARN_HOME=$HADOOP_HOME

export ZOOKEEPER_HOME=/usr/local/hadoop/zookeeper-3.4.10

export HBASE_HOME=/usr/local/hadoop/hbase-1.2.6

export HADOOP_COMMON_LIB_NATIVE_DIR=$HADOOP_HOME/lib/native

export FLUME_HOME=/usr/local/hadoop/apache-flume-1.6.0-bin

export KAFKA_HOME=/usr/local/hadoop/kafka_2.11-0.10.0.0

export HIVE_HOME=/usr/local/hadoop/apache-hive-2.1.1-bin

export HIVE_CONF_DIR=${HIVE_HOME}/conf

export JAVA_HOME=/usr/java/jdk1.8.0_161

export NODE_HOME=/usr/local/node/node-v9.7.1-linux-x64

export PHANTOMJS_HOME=/usr/local/node/phantomjs-2.1.1-linux-x86_64

export JRE_HOME=${JAVA_HOME}/jre

export CLASSPATH=.:${JAVA_HOME}/lib:${JRE_HOME}/lib:${HIVE_HOME}/lib:$CLASSPATH

export SQOOP_HOME=/usr/local/hadoop/sqoop-1.4.7.bin__hadoop-2.6.0

export HADOOP_CLASSPATH=$HADOOP_CLASSPATH:$HIVE_HOME/lib/*

export PATH=$PATH:$HADOOP_HOME/sbin:$HADOOP_HOME/bin:$ZOOKEEPER_HOME/bin:$HBASE_HOME/bin:$FLUME_HOME/bin:$KAFKA_HOME/bin:$HIVE_HOME/bin:$HIVE_CONF_DIR:$JAVA_HOME:$NODE_HOME:$PHANTOMJS_HOME:$JRE_HOME:$CLASSPATH:$SQOOP_HOME/bin

Apply the changes immediately

source ~/.bashrc

Modify the Sqoop configuration file

Create the file

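Go to the /usr/local/hadoop/sqoop-1.4.7.bin__hadoop-2.6.0/conf directory and copy the template to create sqoop-env.sh, that is, run:

cd /usr/local/hadoop/sqoop-1.4.7.bin__hadoop-2.6.0/conf

cp sqoop-env-template.sh sqoop-env.sh

Modify the configuration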

vim sqoop-env.sh

Add the following at the top of the file

export HADOOP_COMMON_HOME=/usr/local/hadoop/hadoop-2.8.0

export HADOOP_MAPRED_HOME=/usr/local/hadoop/hadoop-2.8.0

export HIVE_HOME=/usr/local/hadoop/apache-hive-2.1.1-bin

export HIVE_CONF_DIR=${HIVE_HOME}/conf

Adjust these values to match the paths on your own machine.
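Because these paths differ from machine to machine, it can help to confirm that each directory really exists before running Sqoop. The following is only a minimal sketch: the function name `check_dirs` is made up for illustration and is not part of Sqoop.

```shell
# Hypothetical helper (not part of Sqoop): report which of the given
# directories are missing. Prints one "missing: <path>" line per absent
# directory and returns non-zero if any directory is missing.
check_dirs() {
  local status=0 dir
  for dir in "$@"; do
    if [ ! -d "$dir" ]; then
      echo "missing: $dir"
      status=1
    fi
  done
  return "$status"
}

# Example usage, with the variables set in sqoop-env.sh:
# check_dirs "$HADOOP_COMMON_HOME" "$HADOOP_MAPRED_HOME" "$HIVE_HOME" "$HIVE_CONF_DIR"
```

If the function prints nothing and returns 0, every path passed to it is an existing directory.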

Upload the database driver JAR to Sqoop's lib directory

Fixing the exception ERROR tool.ImportTool: Encountered IOException running import job: java.io.IOException: Hive exited with status 1

Solution: locate the lib folder under HIVE_HOME and copy libthrift-0.9.3.jar from it into the lib folder under SQOOP_HOME.
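The copy step can be sketched as a small shell function. This is an illustration under assumptions, not an official procedure: `copy_libthrift` is a hypothetical name, and it expects the Hive and Sqoop home directories as its two arguments.

```shell
# Hypothetical helper: copy libthrift-0.9.3.jar from Hive's lib directory
# into Sqoop's lib directory unless it is already there.
# $1 = Hive home, $2 = Sqoop home.
copy_libthrift() {
  local jar="libthrift-0.9.3.jar"
  local src="$1/lib/$jar"
  local dst="$2/lib/$jar"
  if [ ! -f "$src" ]; then
    echo "not found: $src" >&2
    return 1
  fi
  if [ -f "$dst" ]; then
    echo "already present: $dst"
    return 0
  fi
  cp "$src" "$dst" && echo "copied: $dst"
}

# Example usage:
# copy_libthrift "$HIVE_HOME" "$SQOOP_HOME"
```

Checking for the destination first keeps the function safe to run more than once.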

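Using Sqoop

Sqoop is a command-line tool. Once it is installed, commands that do not involve Hive or Hadoop, such as sqoop help, can be run directly without starting either of them. Commands that involve Hive require both Hive and Hadoop to be started first.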
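One way to check whether Hadoop is up before running a Hive-related command is to look at the output of `jps`, the JDK tool that lists running Java processes by main-class name. The sketch below is illustrative only: the function names are hypothetical, and which daemons should appear on a given node (NameNode, DataNode, ResourceManager, and so on) depends on how your cluster is laid out.

```shell
# Hypothetical helper: succeed if `jps` lists a Java process whose
# main-class name equals $1 (for example NameNode).
daemon_running() {
  jps | awk -v name="$1" '$2 == name { found = 1 } END { exit !found }'
}

# Hypothetical helper: print each named daemon that is not running on
# this node and return non-zero if any is missing.
report_daemons() {
  local status=0 name
  for name in "$@"; do
    if ! daemon_running "$name"; then
      echo "not running: $name"
      status=1
    fi
  done
  return "$status"
}

# Example usage on a master node (daemon names are an assumption):
# report_daemons NameNode ResourceManager
```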

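For installing and starting Hadoop, see this post:

Building a Hadoop 2.8.0 cluster on CentOS 7, with basic operations and tests

For installing and starting Hive, see this post:

Installing and configuring Hive 2.1.1 on a Hadoop 2.8.0 cluster on Linux, with basic operations

The help command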

sqoop help

List the databases in Oracle

sqoop list-databases --connect jdbc:oracle:thin:@172.19.1.209:1521:ZFGFH --username hcapp --password app789hc

List the tables in Oracle

sqoop list-tables --connect jdbc:oracle:thin:@172.19.1.209:1521:ZFGFH --username hcapp --password app789hc

Import an Oracle table into Hive

sqoop import --connect jdbc:oracle:thin:@172.19.1.209:1521:ZFGFH --username hcapp --password app789hc --table HCAPP.HC_CASEWOODLIST -m 1 --hive-import --hive-database db_hive_edu --hive-table HC_CASEWOODLIST

If the import fails partway and a retry reports "already exists", delete the corresponding HDFS directory first:

hadoop fs -rm -r hdfs://master:9000/user/root/HCAPP.HC_CASEWOODLIST

Common problems
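The first common problem is the "already exists" error described above, which appears when an import is retried after a failure. A minimal sketch of a guarded cleanup follows. It uses `hadoop fs -test -d` and `hadoop fs -rm -r`; the function name `clean_import_dir` is hypothetical.

```shell
# Hypothetical helper: remove an HDFS directory left behind by a failed
# import, but only if it exists. $1 = HDFS path.
clean_import_dir() {
  if hadoop fs -test -d "$1"; then
    hadoop fs -rm -r "$1"
  else
    echo "nothing to remove: $1"
  fi
}

# Example usage:
# clean_import_dir hdfs://master:9000/user/root/HCAPP.HC_CASEWOODLIST
```

Sqoop's import tool also has a `--delete-target-dir` option that removes an existing target directory before importing. It may be worth checking whether that fits your job better than a manual cleanup.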

2018-05-15 09:07:30,830 main ERROR Could not register mbeans java.security.AccessControlException: access denied ("javax.management.MBeanTrustPermission" "register")

at java.security.AccessControlContext.checkPermission(AccessControlContext.java:472)

at java.lang.SecurityManager.checkPermission(SecurityManager.java:585)

at com.sun.jmx.interceptor.DefaultMBeanServerInterceptor.checkMBeanTrustPermission(DefaultMBeanServerInterceptor.java:1848)

at com.sun.jmx.interceptor.DefaultMBeanServerInterceptor.registerMBean(DefaultMBeanServerInterceptor.java:322)

at com.sun.jmx.mbeanserver.JmxMBeanServer.registerMBean(JmxMBeanServer.java:522)

at org.apache.logging.log4j.core.jmx.Server.register(Server.java:379)

at org.apache.logging.log4j.core.jmx.Server.reregisterMBeansAfterReconfigure(Server.java:171)

at org.apache.logging.log4j.core.jmx.Server.reregisterMBeansAfterReconfigure(Server.java:147)

at org.apache.logging.log4j.core.LoggerContext.setConfiguration(LoggerContext.java:457)

at org.apache.logging.log4j.core.LoggerContext.start(LoggerContext.java:246)

at org.apache.logging.log4j.core.impl.Log4jContextFactory.getContext(Log4jContextFactory.java:230)

at org.apache.logging.log4j.core.config.Configurator.initialize(Configurator.java:140)

at org.apache.logging.log4j.core.config.Configurator.initialize(Configurator.java:113)

at org.apache.logging.log4j.core.config.Configurator.initialize(Configurator.java:98)

at org.apache.logging.log4j.core.config.Configurator.initialize(Configurator.java:156)

at org.apache.hadoop.hive.common.LogUtils.initHiveLog4jDefault(LogUtils.java:155)

at org.apache.hadoop.hive.common.LogUtils.initHiveLog4jCommon(LogUtils.java:91)

at org.apache.hadoop.hive.common.LogUtils.initHiveLog4jCommon(LogUtils.java:83)

at org.apache.hadoop.hive.common.LogUtils.initHiveLog4j(LogUtils.java:66)

at org.apache.hadoop.hive.cli.CliDriver.run(CliDriver.java:657)

at org.apache.hadoop.hive.cli.CliDriver.main(CliDriver.java:641)

at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)

at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62)

at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)

at java.lang.reflect.Method.invoke(Method.java:498)

at org.apache.sqoop.hive.HiveImport.executeScript(HiveImport.java:331)

at org.apache.sqoop.hive.HiveImport.importTable(HiveImport.java:241)

at org.apache.sqoop.tool.ImportTool.importTable(ImportTool.java:537)

at org.apache.sqoop.tool.ImportTool.run(ImportTool.java:628)

at org.apache.sqoop.Sqoop.run(Sqoop.java:147)

at org.apache.hadoop.util.ToolRunner.run(ToolRunner.java:76)

at org.apache.sqoop.Sqoop.runSqoop(Sqoop.java:183)

at org.apache.sqoop.Sqoop.runTool(Sqoop.java:234)

at org.apache.sqoop.Sqoop.runTool(Sqoop.java:243)

at org.apache.sqoop.Sqoop.main(Sqoop.java:252)

Solution

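Open %JAVA_HOME%\jre\lib\security\java.policy (on Linux: $JAVA_HOME/jre/lib/security/java.policy).

Add the following line inside its grant block: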

permission javax.management.MBeanTrustPermission "register";
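To confirm that the line is in place, a quick check with `grep` can be sketched as below. The function name `has_mbean_permission` is hypothetical, and the Linux path in the usage comment assumes a JDK 8 layout with a jre subdirectory. The check only looks for the line itself and does not verify that it sits inside a grant block.

```shell
# Hypothetical helper: succeed if the given policy file contains the
# MBeanTrustPermission "register" line. $1 = path to java.policy.
has_mbean_permission() {
  grep -qF 'permission javax.management.MBeanTrustPermission "register";' "$1"
}

# Example usage (path is an assumption for a JDK 8 install on Linux):
# has_mbean_permission "$JAVA_HOME/jre/lib/security/java.policy" || echo "permission missing"
```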