相关环境:

Python3

requests库

BeautifulSoup库

一.requests库简单使用

简单获取一个网页的源代码:

import requests sessions = requests.session() sessions.headers['User-Agent'] = 'Mozilla/5.0 (Macintosh; Intel Mac OS X 10_9_2) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/34.0.1847.131 Safari/537.36' url = "https://baike.baidu.com/item/%E8%8C%83%E5%86%B0%E5%86%B0/22984" r = sessions.get(url) print(r.status_code) html_content = r.content.decode('utf-8') print(html_content)

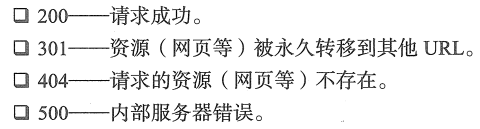

其中,r.status_code的值有如下对应关系。

r.content可以获取页面的全部内容。

二.BeautifulSoup库简单使用

Beautiful Soup是一个可以从HTML或XML文件中提取数据的Python库.

测试文档如下:

html_doc = """ <html><head><title>The Dormouse's story</title></head> <body> <p class="title"><b>The Dormouse's story</b></p> <p class="story">Once upon a time there were three little sisters; and their names were <a href="http://example.com/elsie" class="sister" id="link1">Elsie</a>, <a href="http://example.com/lacie" class="sister" id="link2">Lacie</a> and <a href="http://example.com/tillie" class="sister" id="link3">Tillie</a>; and they lived at the bottom of a well.</p> <p class="story">...</p> """

Beautiful Soup库简单使用。

from bs4 import BeautifulSoup soup = BeautifulSoup(html_doc, "lxml")

简单调用方法如下:

soup.title # <title>The Dormouse's story</title> soup.title.name # u'title' soup.title.string # u'The Dormouse's story' soup.title.parent.name # u'head' soup.p # <p class="title"><b>The Dormouse's story</b></p> soup.p['class'] # u'title' soup.a # <a class="sister" href="http://example.com/elsie" id="link1">Elsie</a> soup.find_all('a') # [<a class="sister" href="http://example.com/elsie" id="link1">Elsie</a>, # <a class="sister" href="http://example.com/lacie" id="link2">Lacie</a>, # <a class="sister" href="http://example.com/tillie" id="link3">Tillie</a>] soup.find(id="link3") # <a class="sister" href="http://example.com/tillie" id="link3">Tillie</a> print(soup.find("a", id="link1")) # <a class="sister" href="http://example.com/elsie" id="link1">Elsie</a>

其中,find_all或者find是比较常用的。

find_all() 方法将返回文档中符合条件的所有tag;find() 方法将返回文档中符合条件的一个tag;

三.简单下载一张图片

已知网页上图片的地址,下载该图片到本地。

import requests sessions = requests.session() sessions.headers['User-Agent'] = 'Mozilla/5.0 (Macintosh; Intel Mac OS X 10_9_2) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/34.0.1847.131 Safari/537.36' img_url = "https://gss2.bdstatic.com/9fo3dSag_xI4khGkpoWK1HF6hhy/baike/c0%3Dbaike150%2C5%2C5%2C150%2C50/sign=e95e57acd20735fa85fd46ebff3864d6/f703738da9773912f15d70d6fe198618367ae20a.jpg" r = sessions.get(img_url) print(r.status_code) f = open("1.jpg","wb") f.write(r.content) f.close()

参考: