View pods and services

root @ master ➜ ~ kubectl get po,svc -n irm-server -o wide

NAME READY STATUS RESTARTS AGE IP NODE

po/test-agw-864b7554d6-wmxnm 0/1 ImagePullBackOff 0 21d 10.244.5.108 node12

po/test-ecd-server-56b77d9fbb-zfctt 1/1 Running 1 162d 10.244.5.39 node12

po/test-flow-server-b477756f-2s5bc 1/1 Running 0 21d 10.244.1.172 node1

po/test-huishi-api-5f474cbcc6-wpb7j 0/1 ImagePullBackOff 0 21h 10.244.12.159 node11

po/test-huishi-api-86dcfdb7c5-jtcpt 1/1 Running 0 22h 10.244.12.85 node11

po/test-huishi-message-5d4b456b88-n8xl5 1/1 Running 1 44d 10.244.5.35 node12

po/test-huishi-schedule-bc5f5d4d5-z22gq 1/1 Running 1 21d 10.244.1.159 node1

po/test-huishi-server-6f875487d7-9rzpd 1/1 Running 0 1d 10.244.7.112 node6

po/test-irm-bd9444948-rwtgn 1/1 Running 0 20d 10.244.2.195 node2

po/test-irm-task-89f65f5bd-zht2x 1/1 Running 1 77d 10.244.5.34 node12

po/test-message-service-bbf469fd8-bf847 0/1 ImagePullBackOff 0 20d 10.244.1.176 node1

po/test-order-server-5fcc5575bd-67c4r 1/1 Running 0 21d 10.244.1.158 node1

po/test-payment-6df4459864-5d8xl 1/1 Running 0 21d 10.244.5.221 node12

po/test-ufida-server-7cb4886f5b-5t4x2 0/1 ImagePullBackOff 0 106d 10.244.4.246 node4

po/test-urule-54f4b8f84f-l5xbm 1/1 Running 1 21d 10.244.1.160 node1

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE SELECTOR

svc/test-agw ClusterIP 10.109.158.202 <none> 8080/TCP 1y app=test-agw

svc/test-ecd-server ClusterIP 10.102.200.21 <none> 8080/TCP 1y app=test-ecd-server

svc/test-flow-server ClusterIP 10.98.89.221 <none> 8080/TCP 175d app=test-flow-server

svc/test-huishi-api NodePort 10.108.234.58 <none> 8080:30007/TCP 62d app=test-huishi-api

svc/test-huishi-message ClusterIP 10.97.120.29 <none> 8080/TCP 46d app=test-huishi-message

svc/test-huishi-schedule ClusterIP 10.99.250.121 <none> 8080/TCP 51d app=test-huishi-schedule

svc/test-huishi-schedule9999 NodePort 10.98.35.162 <none> 9999:30099/TCP 51d app=test-huishi-schedule

svc/test-huishi-server ClusterIP 10.96.74.168 <none> 8080/TCP 14d app=test-huishi-server

svc/test-irm ClusterIP 10.106.170.108 <none> 80/TCP 20d app=test-irm

svc/test-irm-task ClusterIP 10.109.108.154 <none> 80/TCP 1y app=test-irm-task

svc/test-message-service ClusterIP 10.108.202.97 <none> 8080/TCP 100d app=test-message-service

svc/test-order-node NodePort 10.110.147.74 <none> 8080:30881/TCP 1y app=test-order-server

svc/test-order-server ClusterIP 10.101.12.207 <none> 8080/TCP 1y app=test-order-server

svc/test-payment ClusterIP 10.97.245.133 <none> 8080/TCP 1y app=test-payment

svc/test-ssoweb ClusterIP 10.110.162.251 <none> 8080/TCP 1y app=test-ssoweb

svc/test-urule ClusterIP 10.102.23.41 <none> 8080/TCP 1y app=test-urule

View nodes

root @ master ➜ ~ kubectl get nodes -n irm-server -o wide

NAME STATUS ROLES AGE VERSION EXTERNAL-IP OS-IMAGE KERNEL-VERSION CONTAINER-RUNTIME

master Ready master 1y v1.9.0 <none> CentOS Linux 7 (Core) 3.10.0-693.el7.x86_64 docker://17.3.2

node1 Ready <none> 70d v1.9.0 <none> CentOS Linux 7 (Core) 3.10.0-693.el7.x86_64 docker://18.6.2

node11 Ready <none> 286d v1.9.0 <none> CentOS Linux 7 (Core) 3.10.0-693.el7.x86_64 docker://17.3.2

node12 Ready <none> 174d v1.9.0 <none> CentOS Linux 7 (Core) 3.10.0-693.el7.x86_64 docker://17.3.2

node2 Ready <none> 1y v1.9.0 <none> CentOS Linux 7 (Core) 3.10.0-693.el7.x86_64 docker://17.3.2

node4 Ready <none> 1y v1.9.0 <none> CentOS Linux 7 (Core) 3.10.0-693.el7.x86_64 docker://17.3.2

node5 Ready <none> 104d v1.9.0 <none> CentOS Linux 7 (Core) 3.10.0-693.el7.x86_64 docker://17.3.2

node6 Ready <none> 23d v1.9.0 <none> CentOS Linux 7 (Core) 3.10.0-693.el7.x86_64 docker://17.3.2

node7 Ready <none> 23d v1.9.0 <none> CentOS Linux 7 (Core) 3.10.0-693.el7.x86_64 docker://17.3.2

node8 Ready <none> 1y v1.9.0 <none> CentOS Linux 7 (Core) 3.10.0-693.el7.x86_64 docker://18.6.2

node9 Ready <none> 23d v1.9.0 <none> CentOS Linux 7 (Core) 3.10.0-693.el7.x86_64 docker://18.6.2

This output does not show the actual node IPs.

They do not matter at this point anyway.

View Endpoints

root @ master ➜ ~ kubectl get ep -n irm-server

NAME ENDPOINTS AGE

test-agw 1y

test-ecd-server 10.244.5.39:20884 1y

test-flow-server 10.244.1.172:8080 175d

test-huishi-api 10.244.12.85:8088 63d

test-huishi-message 10.244.5.35:8080 46d

test-huishi-schedule 10.244.1.159:8080 51d

test-huishi-schedule9999 10.244.1.159:9999 51d

test-huishi-server 10.244.1.225:8080 14d

test-irm 10.244.2.195:80 21d

test-irm-task 10.244.5.34:80 1y

test-message-service 100d

test-order-node 10.244.1.158:20881 1y

test-order-server 10.244.1.158:20881 1y

test-payment 10.244.5.221:20882 1y

test-ssoweb <none> 1y

test-urule 10.244.1.160:8787 1y

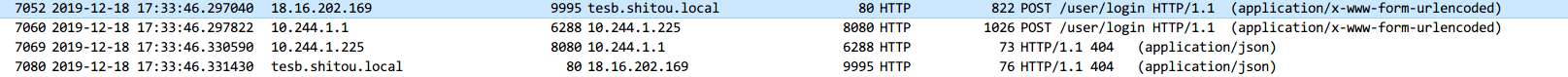

Request huishi-api and analyze the packet capture

Request http://18.16.200.130:30007/api/user/login

tcpdump -i any -w aaa.cap

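Capture on all network interfaces here.

Inspect the captured packets:

18.16.202.169 is the IP address of my local machine.

18.16.200.130 is the IP address of the k8s cluster's master node.

10.244.12.85 is, as the Endpoints output above shows, the pod IP of huishi-api, i.e. the backend that NodePort 30007 forwards to.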

View the master's network interfaces

root @ master ➜ ~ ifconfig

cni0: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 1450

inet 10.244.0.1 netmask 255.255.255.0 broadcast 0.0.0.0

inet6 fe80::204b:36ff:fe65:f36 prefixlen 64 scopeid 0x20<link>

ether 0a:58:0a:f4:00:01 txqueuelen 1000 (Ethernet)

RX packets 11043743 bytes 2245109724 (2.0 GiB)

RX errors 0 dropped 0 overruns 0 frame 0

TX packets 11598708 bytes 1866487140 (1.7 GiB)

TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

docker0: flags=4099<UP,BROADCAST,MULTICAST> mtu 1500

inet 172.17.0.1 netmask 255.255.0.0 broadcast 0.0.0.0

ether 02:42:d9:c7:64:79 txqueuelen 0 (Ethernet)

RX packets 0 bytes 0 (0.0 B)

RX errors 0 dropped 0 overruns 0 frame 0

TX packets 0 bytes 0 (0.0 B)

TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

eth0: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 1500

inet 18.16.200.130 netmask 255.255.255.0 broadcast 18.16.200.255

inet6 fe80::623c:3b90:5a52:57b9 prefixlen 64 scopeid 0x20<link>

ether 52:54:00:50:6c:6a txqueuelen 1000 (Ethernet)

RX packets 258611996 bytes 77421475020 (72.1 GiB)

RX errors 0 dropped 33 overruns 0 frame 0

TX packets 228059421 bytes 326005447771 (303.6 GiB)

TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

flannel.1: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 1450

inet 10.244.0.0 netmask 255.255.255.255 broadcast 0.0.0.0

inet6 fe80::aca0:86ff:fe1b:64e0 prefixlen 64 scopeid 0x20<link>

ether ae:a0:86:1b:64:e0 txqueuelen 0 (Ethernet)

RX packets 10422535 bytes 966970104 (922.1 MiB)

RX errors 0 dropped 0 overruns 0 frame 0

TX packets 14453971 bytes 1874942581 (1.7 GiB)

TX errors 0 dropped 8 overruns 0 carrier 0 collisions 0

lo: flags=73<UP,LOOPBACK,RUNNING> mtu 65536

inet 127.0.0.1 netmask 255.0.0.0

inet6 ::1 prefixlen 128 scopeid 0x10<host>

loop txqueuelen 1 (Local Loopback)

RX packets 129207398 bytes 75774837801 (70.5 GiB)

RX errors 0 dropped 0 overruns 0 frame 0

TX packets 129207398 bytes 75774837801 (70.5 GiB)

TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

This shows that the flannel.1 interface is in use, with IP 10.244.0.0.

Describe the huishi-server service

root @ master ➜ ~ kubectl describe svc test-huishi-server -n irm-server

Name: test-huishi-server

Namespace: irm-server

Labels: app=test-huishi-server

Annotations: kubectl.kubernetes.io/last-applied-configuration={"apiVersion":"v1","kind":"Service","metadata":{"annotations":{},"labels":{"app":"test-huishi-server"},"name":"test-huishi-server","namespace":"irm-ser...

Selector: app=test-huishi-server

Type: ClusterIP

IP: 10.96.74.168

Port: http 8080/TCP

TargetPort: 8080/TCP

Endpoints: 10.244.1.225:8080

Session Affinity: None

Events: <none>

Accessing a Service

Internal access

From inside the cluster network, access is straightforward.

- Via the ClusterIP configured on the svc

huishi-server:

root @ master ➜ ~ curl 10.96.74.168:8080/test/get

{"data":null,"success":true,"resCode":"000","msg":"成功"}#

huishi-api:

root @ master ➜ ~ curl 10.108.234.58:8080/api/test/get

{"data":null,"success":true,"resCode":"000","msg":"成功"}#

- Via the Endpoints listed in the ep

huishi-server:

root @ master ➜ ~ curl 10.244.1.225:8080/test/get

{"data":null,"success":true,"resCode":"000","msg":"成功"}#

huishi-api:

root @ master ➜ ~ curl 10.244.12.85:8088/api/test/get

{"data":null,"success":true,"resCode":"000","msg":"成功"}#
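The two access paths differ only in which address and port you dial: the ClusterIP listens on the service port, while the endpoint is the pod IP on the container's target port (8080 vs. 8088 for huishi-api). A minimal Python sketch of that lookup follows. The `Service` class and `backends_for` helper are hypothetical names for illustration, and the table is copied by hand from the `kubectl get svc` and `kubectl get ep` outputs in this post. It models the mapping only, not how kube-proxy implements it.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Service:
    cluster_ip: str
    port: int            # port exposed on the ClusterIP
    endpoints: tuple     # "podIP:targetPort" strings from `kubectl get ep`


# Hand-copied from the outputs in this post (illustrative, not fetched live).
SERVICES = {
    "test-huishi-server": Service("10.96.74.168", 8080, ("10.244.1.225:8080",)),
    "test-huishi-api": Service("10.108.234.58", 8080, ("10.244.12.85:8088",)),
}


def backends_for(cluster_ip, port, services=SERVICES):
    """Return the pod endpoints behind a ClusterIP:port pair, or [] if none match."""
    for svc in services.values():
        if svc.cluster_ip == cluster_ip and svc.port == port:
            return list(svc.endpoints)
    return []


print(backends_for("10.108.234.58", 8080))
```

Calling the ClusterIP of huishi-api on 8080 therefore ends up at 10.244.12.85:8088, which is why both curl variants return the same response.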

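External access

NodePort (from outside the cluster, reach the service via any node's IP plus the port)

If the type is NodePort, access the master's IP directly on the port mapped in the svc.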

huishi-api:

root @ master ➜ ~ curl 127.0.0.1:30007/api/test/get

{"data":null,"success":true,"resCode":"000","msg":"成功"}#

root @ master ➜ ~ curl 18.16.200.130:30007/api/test/get

{"data":null,"success":true,"resCode":"000","msg":"成功"}#

From outside the cluster, the service can be reached via the IP of any Kubernetes node plus the port.

root @ master ➜ ~ curl 18.16.200.130:30007/api/test/get

{"data":null,"success":true,"resCode":"000","msg":"成功"}#

root @ master ➜ ~ curl 18.16.200.141:30007/api/test/get

{"data":null,"success":true,"resCode":"000","msg":"成功"}#

View the listening ports on the master node

root @ master ➜ ~ netstat -anop |grep 30007

tcp6 0 0 :::30007 :::* LISTEN 2788/kube-proxy off (0.00/0/0)

root @ master ➜ ~ netstat -anop |grep 2788

tcp 0 0 127.0.0.1:10249 0.0.0.0:* LISTEN 2788/kube-proxy off (0.00/0/0)

tcp 0 0 18.16.200.130:49232 18.16.200.130:6443 ESTABLISHED 2788/kube-proxy keepalive (4.15/0/0)

tcp6 0 0 :::10256 :::* LISTEN 2788/kube-proxy off (0.00/0/0)

tcp6 0 0 :::30001 :::* LISTEN 2788/kube-proxy off (0.00/0/0)

tcp6 0 0 :::30002 :::* LISTEN 2788/kube-proxy off (0.00/0/0)

tcp6 0 0 :::30099 :::* LISTEN 2788/kube-proxy off (0.00/0/0)

tcp6 0 0 :::30003 :::* LISTEN 2788/kube-proxy off (0.00/0/0)

tcp6 0 0 :::30004 :::* LISTEN 2788/kube-proxy off (0.00/0/0)

tcp6 0 0 :::31988 :::* LISTEN 2788/kube-proxy off (0.00/0/0)

tcp6 0 0 :::30005 :::* LISTEN 2788/kube-proxy off (0.00/0/0)

tcp6 0 0 :::30998 :::* LISTEN 2788/kube-proxy off (0.00/0/0)

tcp6 0 0 :::30006 :::* LISTEN 2788/kube-proxy off (0.00/0/0)

tcp6 0 0 :::30999 :::* LISTEN 2788/kube-proxy off (0.00/0/0)

tcp6 0 0 :::30007 :::* LISTEN 2788/kube-proxy off (0.00/0/0)

tcp6 0 0 :::30008 :::* LISTEN 2788/kube-proxy off (0.00/0/0)

tcp6 0 0 :::32088 :::* LISTEN 2788/kube-proxy off (0.00/0/0)

tcp6 0 0 :::30009 :::* LISTEN 2788/kube-proxy off (0.00/0/0)

tcp6 0 0 :::30010 :::* LISTEN 2788/kube-proxy off (0.00/0/0)

tcp6 0 0 :::32666 :::* LISTEN 2788/kube-proxy off (0.00/0/0)

tcp6 0 0 :::30011 :::* LISTEN 2788/kube-proxy off (0.00/0/0)

tcp6 0 0 :::30012 :::* LISTEN 2788/kube-proxy off (0.00/0/0)

tcp6 0 0 :::30908 :::* LISTEN 2788/kube-proxy off (0.00/0/0)

tcp6 0 0 :::31998 :::* LISTEN 2788/kube-proxy off (0.00/0/0)

tcp6 1 0 :::31999 :::* LISTEN 2788/kube-proxy off (0.00/0/0)

tcp6 0 0 :::30881 :::* LISTEN 2788/kube-proxy off (0.00/0/0)

tcp6 0 0 :::32098 :::* LISTEN 2788/kube-proxy off (0.00/0/0)

tcp6 0 0 :::30020 :::* LISTEN 2788/kube-proxy off (0.00/0/0)

tcp6 0 0 :::32070 :::* LISTEN 2788/kube-proxy off (0.00/0/0)

tcp6 0 0 :::30088 :::* LISTEN 2788/kube-proxy off (0.00/0/0)

Ingress

Besides exposing a service through NodePort, you can also expose it through an Ingress.

root @ master ➜ ~ kubectl get po,svc --all-namespaces -o wide |grep ingress

ingress-nginx po/default-http-backend-77b86c5478-9f9jv 1/1 Running 0 21d 10.244.1.157 node1

ingress-nginx po/nginx-ingress-controller-5bb76649d8-5cg4g 1/1 Running 0 21d 18.16.200.131 node1

ingress-nginx svc/default-http-backend ClusterIP 10.107.214.146 <none> 80/TCP 350d app=default-http-backend

View the ep

root @ master ➜ ~ kubectl get ep -n ingress-nginx

NAME ENDPOINTS AGE

default-http-backend 10.244.1.157:8080 350d

View which hostname maps to which service in the ingress configuration:

root @ master ➜ ~ kubectl get ingress -n irm-server

NAME HOSTS ADDRESS PORTS AGE

agw-ingress tagw.shitou.local 80 1y

test-flow-ingress tflowserver.shitou.local 80 175d

test-huishi-api thuishi-api.shitou.local 80 355d

test-huishi-server thuishi-server.shitou.local 80 14d

test-irm-ingress tirm.shitou.local 80 21d

test-irmtask-ingress tirmtask.shitou.local 80 1y

test-urule-ingress turule.shitou.local 80 1y

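The output above shows that the ingress controller runs on the node at 18.16.200.131.

The hosts listed above therefore resolve to 18.16.200.131, and the ingress controller forwards each request to its svc.

Check: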

$ ping thuishi-server.shitou.local

Pinging thuishi-server.shitou.local [18.16.200.131] with 32 bytes of data:

Reply from 18.16.200.131: bytes=32 time<1ms TTL=63

$ ping tirm.shitou.local

Pinging tirm.shitou.local [18.16.200.131] with 32 bytes of data:

Reply from 18.16.200.131: bytes=32 time=10ms TTL=63

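Packet capture analysis:

Here tesb.shitou.local resolves to the IP address 18.16.200.131.

10.244.1.225 is the pod address of huishi-server.

10.244.1.1 is the address of another interface (cni0) on 18.16.200.131.

View the network interfaces on 18.16.200.131: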

[root@node1 ~]# ifconfig

cni0: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 1450

inet 10.244.1.1 netmask 255.255.255.0 broadcast 0.0.0.0

inet6 fe80::70ae:ccff:fe27:c166 prefixlen 64 scopeid 0x20<link>

ether 0a:58:0a:f4:01:01 txqueuelen 1000 (Ethernet)

RX packets 268014941 bytes 115322010128 (107.4 GiB)

RX errors 0 dropped 0 overruns 0 frame 0

TX packets 264897557 bytes 222922218246 (207.6 GiB)

TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

docker0: flags=4099<UP,BROADCAST,MULTICAST> mtu 1500

inet 172.17.0.1 netmask 255.255.0.0 broadcast 172.17.255.255

ether 02:42:d0:aa:90:92 txqueuelen 0 (Ethernet)

RX packets 0 bytes 0 (0.0 B)

RX errors 0 dropped 0 overruns 0 frame 0

TX packets 0 bytes 0 (0.0 B)

TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

eth0: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 1500

inet 18.16.200.131 netmask 255.255.255.0 broadcast 18.16.200.255

inet6 fe80::9b8b:60b5:a1d3:590e prefixlen 64 scopeid 0x20<link>

inet6 fe80::9290:78fe:4051:52d0 prefixlen 64 scopeid 0x20<link>

ether 52:54:00:41:df:fa txqueuelen 1000 (Ethernet)

RX packets 320582588 bytes 249052790297 (231.9 GiB)

RX errors 0 dropped 15 overruns 0 frame 0

TX packets 298828981 bytes 130431593188 (121.4 GiB)

TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

flannel.1: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 1450

inet 10.244.1.0 netmask 255.255.255.255 broadcast 0.0.0.0

inet6 fe80::c066:f4ff:fe9e:3dc9 prefixlen 64 scopeid 0x20<link>

ether c2:66:f4:9e:3d:c9 txqueuelen 0 (Ethernet)

RX packets 38098631 bytes 13492636682 (12.5 GiB)

RX errors 0 dropped 0 overruns 0 frame 0

TX packets 30463742 bytes 13901092750 (12.9 GiB)

TX errors 0 dropped 15 overruns 0 carrier 0 collisions 0

lo: flags=73<UP,LOOPBACK,RUNNING> mtu 65536

inet 127.0.0.1 netmask 255.0.0.0

inet6 ::1 prefixlen 128 scopeid 0x10<host>

loop txqueuelen 1 (Local Loopback)

RX packets 6353298 bytes 565363964 (539.1 MiB)

RX errors 0 dropped 0 overruns 0 frame 0

TX packets 6353298 bytes 565363964 (539.1 MiB)

TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0
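The cni0 addresses in the two ifconfig outputs (10.244.0.1 on the master, 10.244.1.1 on node1) suggest that each node owns one /24 slice of the 10.244.0.0/16 pod network, so a pod IP alone tells you which node hosts the pod. The Python sketch below illustrates that lookup. `POD_CIDRS` and `node_for_pod_ip` are hypothetical names: the node1 range is the PodCIDR that `kubectl describe node node1` reports in this post, while the master range is an assumption inferred from its cni0 netmask.

```python
import ipaddress

# Per-node pod subnets. node1's value is the PodCIDR shown by
# `kubectl describe node node1`; the master's is ASSUMED from its
# cni0 address 10.244.0.1 with netmask 255.255.255.0.
POD_CIDRS = {
    "master": "10.244.0.0/24",
    "node1": "10.244.1.0/24",
}


def node_for_pod_ip(pod_ip, pod_cidrs=POD_CIDRS):
    """Return the node whose pod subnet contains pod_ip, or None if unknown."""
    ip = ipaddress.ip_address(pod_ip)
    for node, cidr in pod_cidrs.items():
        if ip in ipaddress.ip_network(cidr):
            return node
    return None


print(node_for_pod_ip("10.244.1.225"))  # the huishi-server endpoint
```

With only these two subnets in the table, an address such as 10.244.12.85 returns None. Filling in the remaining nodes' PodCIDR values would cover it.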

Find the actual IP of a node

root @ master ➜ / kubectl get node -n irm-server -o wide

NAME STATUS ROLES AGE VERSION EXTERNAL-IP OS-IMAGE KERNEL-VERSION CONTAINER-RUNTIME

master Ready master 1y v1.9.0 <none> CentOS Linux 7 (Core) 3.10.0-693.el7.x86_64 docker://17.3.2

node1 Ready <none> 72d v1.9.0 <none> CentOS Linux 7 (Core) 3.10.0-693.el7.x86_64 docker://18.6.2

node11 Ready <none> 288d v1.9.0 <none> CentOS Linux 7 (Core) 3.10.0-693.el7.x86_64 docker://17.3.2

node12 Ready <none> 176d v1.9.0 <none> CentOS Linux 7 (Core) 3.10.0-693.el7.x86_64 docker://17.3.2

node2 Ready <none> 1y v1.9.0 <none> CentOS Linux 7 (Core) 3.10.0-693.el7.x86_64 docker://17.3.2

node4 Ready <none> 1y v1.9.0 <none> CentOS Linux 7 (Core) 3.10.0-693.el7.x86_64 docker://17.3.2

node5 Ready <none> 107d v1.9.0 <none> CentOS Linux 7 (Core) 3.10.0-693.el7.x86_64 docker://17.3.2

node6 Ready <none> 25d v1.9.0 <none> CentOS Linux 7 (Core) 3.10.0-693.el7.x86_64 docker://17.3.2

node7 Ready <none> 25d v1.9.0 <none> CentOS Linux 7 (Core) 3.10.0-693.el7.x86_64 docker://17.3.2

node8 Ready <none> 1y v1.9.0 <none> CentOS Linux 7 (Core) 3.10.0-693.el7.x86_64 docker://18.6.2

node9 Ready <none> 25d v1.9.0 <none> CentOS Linux 7 (Core) 3.10.0-693.el7.x86_64 docker://18.6.2

View node1's IP

root @ master ➜ / kubectl describe node node1

Name: node1

Roles: <none>

Labels: beta.kubernetes.io/arch=amd64

beta.kubernetes.io/os=linux

kubernetes.io/hostname=node1

Annotations: flannel.alpha.coreos.com/backend-data={"VtepMAC":"c2:66:f4:9e:3d:c9"}

flannel.alpha.coreos.com/backend-type=vxlan

flannel.alpha.coreos.com/kube-subnet-manager=true

flannel.alpha.coreos.com/public-ip=18.16.200.131

node.alpha.kubernetes.io/ttl=0

volumes.kubernetes.io/controller-managed-attach-detach=true

Taints: <none>

CreationTimestamp: Tue, 08 Oct 2019 20:16:34 +0800

Conditions:

Type Status LastHeartbeatTime LastTransitionTime Reason Message

---- ------ ----------------- ------------------ ------ -------

OutOfDisk False Fri, 20 Dec 2019 15:42:27 +0800 Tue, 08 Oct 2019 20:16:34 +0800 KubeletHasSufficientDisk kubelet has sufficient disk space available

MemoryPressure False Fri, 20 Dec 2019 15:42:27 +0800 Wed, 27 Nov 2019 14:10:16 +0800 KubeletHasSufficientMemory kubelet has sufficient memory available

DiskPressure False Fri, 20 Dec 2019 15:42:27 +0800 Wed, 27 Nov 2019 14:10:16 +0800 KubeletHasNoDiskPressure kubelet has no disk pressure

Ready True Fri, 20 Dec 2019 15:42:27 +0800 Wed, 27 Nov 2019 14:10:26 +0800 KubeletReady kubelet is posting ready status

Addresses:

InternalIP: 18.16.200.131

Hostname: node1

Capacity:

cpu: 8

memory: 32394276Ki

pods: 110

Allocatable:

cpu: 6500m

memory: 29772836Ki

pods: 110

System Info:

Machine ID: b6a6cf5e392f9fc1f6073ee61021f733

System UUID: 683E599A-45DF-358F-03FE-0FAD8CDFA0C2

Boot ID: 4571df28-481c-4def-8213-b89dd481e0e2

Kernel Version: 3.10.0-693.el7.x86_64

OS Image: CentOS Linux 7 (Core)

Operating System: linux

Architecture: amd64

Container Runtime Version: docker://18.6.2

Kubelet Version: v1.9.0

Kube-Proxy Version: v1.9.0

PodCIDR: 10.244.1.0/24

ExternalID: node1

Non-terminated Pods: (23 in total)

Namespace Name CPU Requests CPU Limits Memory Requests Memory Limits

--------- ---- ------------ ---------- --------------- -------------

common-server test-capital-schedule-6468f8774f-2s6dd 100m (1%) 1 (15%) 1536Mi (5%) 1536Mi (5%)

common-server test-p2p-bms-7db78b7c46-9vvnt 0 (0%) 0 (0%) 0 (0%) 0 (0%)

ingress-nginx default-http-backend-77b86c5478-9f9jv 10m (0%) 10m (0%) 20Mi (0%) 20Mi (0%)

ingress-nginx nginx-ingress-controller-5bb76649d8-5cg4g 0 (0%) 0 (0%) 0 (0%) 0 (0%)

institution-server test-institution-6569774458-t8sk9 0 (0%) 0 (0%) 0 (0%) 0 (0%)

irm-server test-flow-server-b477756f-2s5bc 0 (0%) 0 (0%) 0 (0%) 0 (0%)

irm-server test-huishi-schedule-bc5f5d4d5-z22gq 0 (0%) 0 (0%) 1536Mi (5%) 1536Mi (5%)

irm-server test-huishi-server-6c68d8c769-5lf9z 0 (0%) 0 (0%) 2Gi (7%) 2Gi (7%)

irm-server test-message-service-bbf469fd8-bf847 0 (0%) 0 (0%) 0 (0%) 0 (0%)

irm-server test-order-server-5fcc5575bd-67c4r 0 (0%) 0 (0%) 0 (0%) 0 (0%)

irm-server test-urule-54f4b8f84f-l5xbm 0 (0%) 0 (0%) 0 (0%) 0 (0%)

kube-system debug-agent-h88sf 0 (0%) 0 (0%) 0 (0%) 0 (0%)

kube-system heapster-6d4b8f864c-t6ffq 0 (0%) 0 (0%) 0 (0%) 0 (0%)

kube-system kube-flannel-ds-wssv7 0 (0%) 0 (0%) 0 (0%) 0 (0%)

kube-system kube-proxy-hzgln 0 (0%) 0 (0%) 0 (0%) 0 (0%)

kube-system monitoring-influxdb-5db68cd87f-crxb6 0 (0%) 0 (0%) 0 (0%) 0 (0%)

ops-server ali-log-77465ffcd-jhqnc 0 (0%) 0 (0%) 0 (0%) 0 (0%)

ops-server daohang-7b47c999d5-bmmc7 0 (0%) 0 (0%) 0 (0%) 0 (0%)

ops-server jishiyu-web-6796c68f97-vbpqh 0 (0%) 0 (0%) 0 (0%) 0 (0%)

shitou-market test-shitou-market-79668fcdf5-zrvvt 0 (0%) 0 (0%) 0 (0%) 0 (0%)

transaction-server test-business-transaction-9c6cf9bd6-zhtrt 0 (0%) 0 (0%) 0 (0%) 0 (0%)

transaction-server test-callback-transaction-5d58f89b5-468lt 0 (0%) 0 (0%) 0 (0%) 0 (0%)

transaction-server test-trade-basic-7d7b6bb6c9-q647g 0 (0%) 0 (0%) 0 (0%) 0 (0%)

Allocated resources:

(Total limits may be over 100 percent, i.e., overcommitted.)

CPU Requests CPU Limits Memory Requests Memory Limits

------------ ---------- --------------- -------------

110m (1%) 1010m (15%) 5140Mi (17%) 5140Mi (17%)

Events: <none>

The output shows that the InternalIP is 18.16.200.131.
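Reading `kubectl describe` node by node gets tedious on a cluster of this size. One alternative is to parse the JSON form of the node list, where every node carries its addresses under `status.addresses`. The sketch below assumes you feed it the text produced by `kubectl get nodes -o json`. The `internal_ips` helper and the `SAMPLE` document are hypothetical, with the sample hand-written and trimmed to the fields the function reads.

```python
import json


def internal_ips(nodes_json):
    """Map node name -> InternalIP from `kubectl get nodes -o json` text."""
    result = {}
    for item in json.loads(nodes_json)["items"]:
        name = item["metadata"]["name"]
        for addr in item["status"].get("addresses", []):
            if addr["type"] == "InternalIP":
                result[name] = addr["address"]
    return result


# Hand-written sample (ASSUMPTION: trimmed to the fields used above),
# mirroring the master and node1 addresses seen in this post.
SAMPLE = json.dumps({
    "items": [
        {"metadata": {"name": "master"},
         "status": {"addresses": [
             {"type": "InternalIP", "address": "18.16.200.130"},
             {"type": "Hostname", "address": "master"}]}},
        {"metadata": {"name": "node1"},
         "status": {"addresses": [
             {"type": "InternalIP", "address": "18.16.200.131"},
             {"type": "Hostname", "address": "node1"}]}},
    ]
})

for name, ip in internal_ips(SAMPLE).items():
    print(name, ip)
```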

Summary

NodePort:

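You can also assign a service a nodePort value in the range 30000-32767. The service is then reachable from outside the cluster at the IP of any Kubernetes node plus that nodePort (30007 for huishi-api here). kube-proxy automatically forwards the traffic to the service's pods: round-robin in userspace proxy mode, and random selection among the backends in iptables mode.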
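As a small illustration of the rule above, the following Python sketch builds the external URLs for a NodePort service and rejects ports outside the default 30000-32767 range. `node_port_urls` is a hypothetical helper. The node IPs and the port 30007 are the ones used in the curl calls earlier in this post.

```python
# Default NodePort range, 30000-32767 inclusive.
NODE_PORT_RANGE = range(30000, 32768)


def node_port_urls(node_ips, node_port, path="/"):
    """Build one URL per node for a NodePort service; any node IP works."""
    if node_port not in NODE_PORT_RANGE:
        raise ValueError(f"nodePort {node_port} is outside 30000-32767")
    return [f"http://{ip}:{node_port}{path}" for ip in node_ips]


for url in node_port_urls(["18.16.200.130", "18.16.200.141"], 30007, "/api/test/get"):
    print(url)
```

Every URL in the list reaches the same service, regardless of which node actually runs the pod.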

Ingress:

Access a specific service by domain name.
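Conceptually, the ingress controller picks a backend by looking at the HTTP Host header. The Python sketch below models that decision only and is not how nginx-ingress is implemented. `INGRESS_RULES` and `route` are hypothetical names, and the host-to-service pairs are assumptions: the hosts come from the `kubectl get ingress` output in this post, but that output does not list the backends, so the service names and ports are guessed from the matching svc entries.

```python
# Host -> (service, port). ASSUMED pairing: hosts are taken from
# `kubectl get ingress`, backends guessed from `kubectl get svc`.
INGRESS_RULES = {
    "thuishi-server.shitou.local": ("test-huishi-server", 8080),
    "thuishi-api.shitou.local": ("test-huishi-api", 8080),
    "tirm.shitou.local": ("test-irm", 80),
}

# Fallback for hosts that match no rule (the default-http-backend svc).
DEFAULT_BACKEND = ("default-http-backend", 80)


def route(host_header):
    """Pick a backend service from the Host header, ignoring case and any port."""
    host = host_header.split(":", 1)[0].lower()
    return INGRESS_RULES.get(host, DEFAULT_BACKEND)


print(route("thuishi-server.shitou.local"))
```

This is why all the hostnames can resolve to the same IP (18.16.200.131) and still land on different services.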