原文

1.笔记

#-*- codeing = utf-8 -*-

#@Time : 2020/7/15 22:49

#@Author : HUGBOY

#@File : hello_sqlite3.py

#@Software: PyCharm

'''---------------|Briefing|------------------

sqlite3

——a new way to save data !

------------------------------------'''

import sqlite3

#连接

print("Connect code:")

# conn = sqlite3.connect("sql.database")#创建/打开数据库文件

#

# c = conn.cursor()#获取游标

#

# sql = '''

# create table company

# (id int primary key not null,

# name text not null,

# age int not null,

# address char(58),

# salary real);

# ''' #三个点多行语句

#

# #第一次创建 c.execute(sql)#执行sql语句

#

# conn.commit()#提交保存

#

# print("建表成功!")

#

# conn.close()#关闭数据库

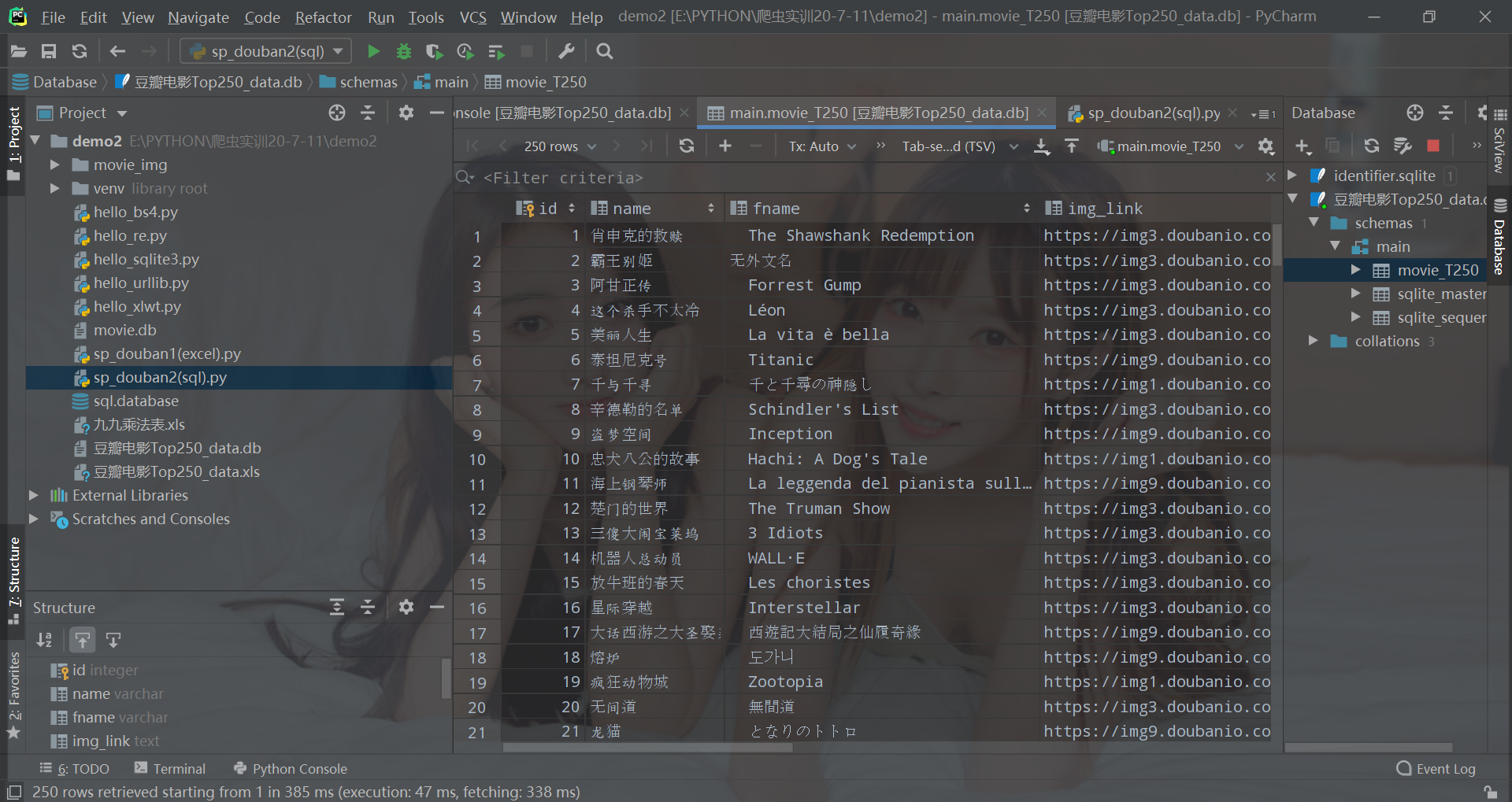

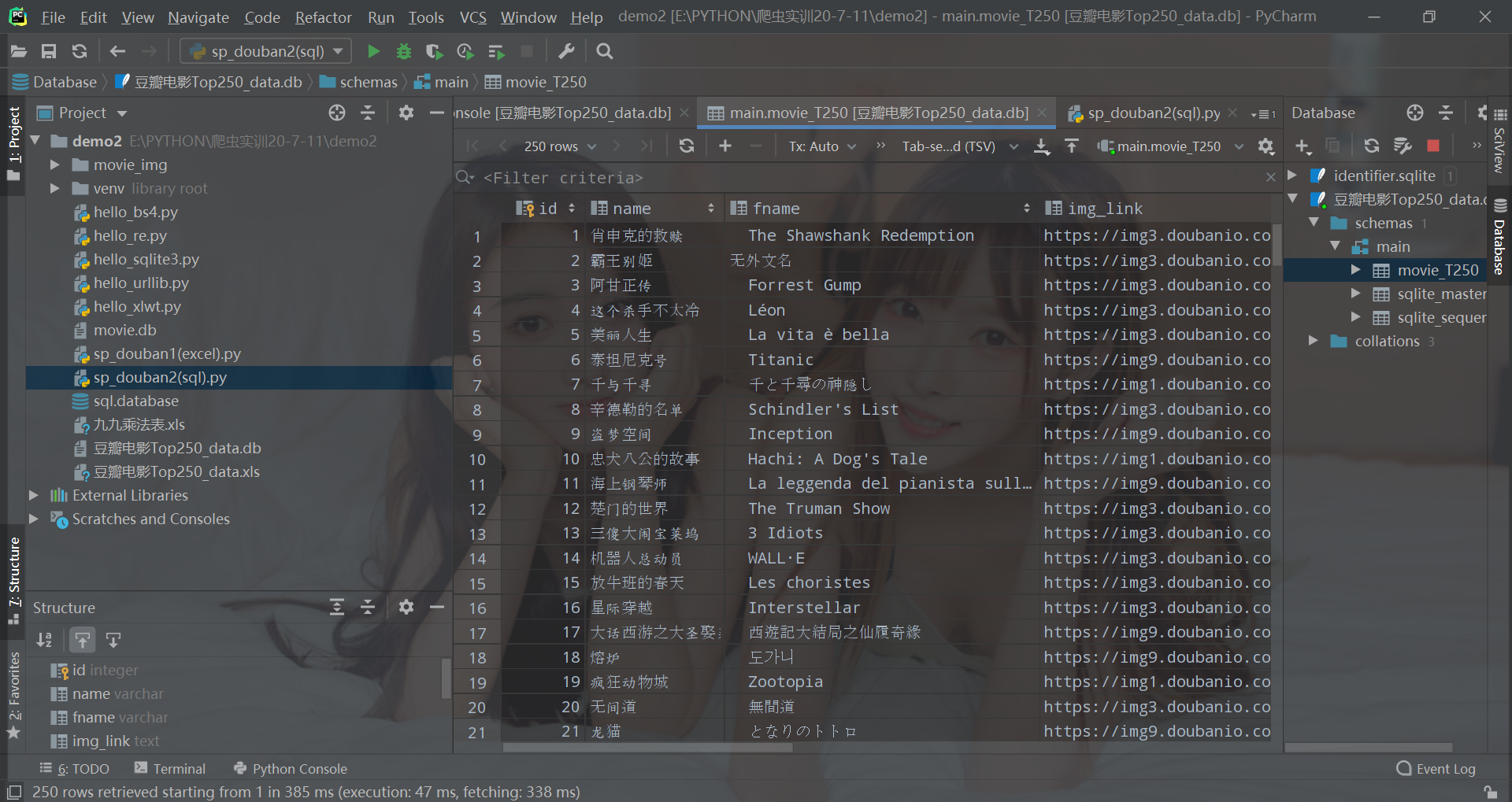

2.实例

效果

原码

#-*- codeing = utf-8 -*-

#@Time : 2020/7/16 12:12

#@Author : HUGBOY

#@File : sp_douban2(sql).py

#@Software: PyCharm

# -*- codeing = utf-8 -*-

# @Time : 2020/7/12 19:11

# @Author : HUGBOY

# @File : sp_douban1(excel).py

# @Software: PyCharm

'''----------------------|简介|------------------

#爬虫

#爬取豆瓣TOP250电影数据

#1.爬取网页

#2.逐一解析数据

#3.保存数据

----------------------------------------------'''

from bs4 import BeautifulSoup # 网页解析、获取数据

import re # 正则表达式

import urllib.request, urllib.error # 指定URL、获取网页数据

import random

import xlwt # 存到excel的操作

import sqlite3 # 存到数据库操作

import os

from urllib.request import urlretrieve#保存电影海报图

def main():

baseurl = "https://movie.douban.com/top250/?start="

datalist = getdata(baseurl)

#savepath = ".\豆瓣电影Top250_data.xls"

#savedata(datalist,savepath)

dbpath = ("豆瓣电影Top250_data.db")

savedata(datalist,dbpath)

# 正则表达式匹配规则

findTitle = re.compile(r'<span class="title">(.*)</span>') # 影片片名

findR = re.compile(r'<span class="inq">(.*)</span>') # 一句话评

findRating = re.compile(r'<span class="rating_num" property="v:average">(.*)</span>') # 影片评分

findPeople = re.compile(r'<span>(d*)人评价</span>') # 影评人数-/d 代表数字

findLink = re.compile(r'<a href="(.*?)">') # 影片链接

findImg = re.compile(r'<img.*src="(.*?)"', re.S) # 影片图片-re.S 允许.中含换行符

findBd = re.compile(r'<p class="">(.*?)</p>', re.S) # 影片简介

def getdata(baseurl):

datalist = []

getimg = 0

for i in range(0, 10): # 调用获取页面信息的函数*10次

url = baseurl + str(i * 25)

html = askURL(url) # 保存获取到的网页原码

# 解析网页

soup = BeautifulSoup(html, "html.parser")

for item in soup.find_all('div', class_="item"):

# print(item) #一部电影的所有信息

data = []

item = str(item)

# 提取影片详细信息

title = re.findall(findTitle, item)

if (len(title) == 2):

ctitle = title[0] # 中文名

data.append(ctitle)

otitle = title[1].replace("/", "") # 外文名-去掉'/'和""

data.append(otitle)

else:

data.append(title[0])

data.append("无外文名")

img = re.findall(findImg, item)[0]

data.append(img)

'''--------------------

爬取图片

--------------------'''

os.makedirs('./movie_img/', exist_ok=True)#创建保存目录

getimg+=1

str_getimg = str(getimg)

#格式 urlretrieve(IMAGE_URL, './img/image1.png')

urlretrieve(img, './movie_img/img'+str_getimg+'.png')

link = re.findall(findLink, item)[0] # re库:正则表达式找指定字符串

data.append(link)

rating = re.findall(findRating, item)[0]

data.append(rating)

people = re.findall(findPeople, item)[0]

data.append(people)

r = re.findall(findR, item)

if len(r) != 0:

r = r[0].replace("。", "")

data.append(r)

else:

data.append("无一句评")

bd = re.findall(findBd, item)[0]

bd = re.sub('<br(s+)?/>(s+)?', " ", bd) # 替换</br>

bd = re.sub('/', " ", bd) # 替换/

data.append(bd.strip()) # 去掉空格

datalist.append(data) # 把一部电影信息存储

#print(datalist)

return datalist

# 得到指定Url网页内容

def askURL(url):

head = {"User-Agent": "Mozilla/5.0 (Windows NT 10.0; Win64; x64; rv:78.0) Gecko/20100101 Firefox/78.0"}

request = urllib.request.Request(url, headers=head)

html = ""

try:

response = urllib.request.urlopen(request)

html = response.read().decode("utf-8")

#print(html) 测试

except Exception as rel:

if hasattr(rel, "code"):

print(rel.code)

if hasattr(rel, "reason"):

print(rel.reason)

return html

def savedata(datalist,dbpath):

print("save data in sql ...")

create_db(dbpath)

conn = sqlite3.connect(dbpath)

c = conn.cursor()

for data in datalist:

for index in range(len(data)):

if index==4 or index==5:#score rated 类型为numeric

continue

data[index]='"'+data[index]+'"'

sql = '''

insert into movie_T250

(

name,fname,img_link,film_link,score,rated,one_cont,instroduction

)

values(%s)'''%",".join(data)

c.execute(sql)

conn.commit()

c.close()

conn.close()

def create_db(dbpath):

sql='''

create table movie_T250

(

id integer primary key autoincrement,

name varchar,

fname varchar,

img_link text,

film_link text,

score numeric,

rated numeric,

one_cont text,

instroduction text

);

'''

conn = sqlite3.connect(dbpath)

c = conn.cursor()

c.execute(sql)

conn.commit()

c.close()

print("create table success !")

if __name__ == "__main__":

main()

print("爬取完成,奥利给!")