@CopyLeft by ICANTH,I Can do ANy THing that I CAN THink!~

Author:WenHui,WuHan University,2012-6-15

PDF version: http://www.docin.com/p1-424285718.html

Ordinary Spinlocks

The most common use of a spinlock is to protect a critical section:

static DEFINE_SPINLOCK(xxx_lock);

unsigned long flags;

spin_lock_irqsave(&xxx_lock, flags);
... critical section here ..
spin_unlock_irqrestore(&xxx_lock, flags);

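One point deserves attention: when a spinlock is used to protect access to a shared variable, the protection holds only if every part of the system that reads or writes that variable does so inside a matched spin_lock / spin_unlock pair on the same lock.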

NOTE! The spin-lock is safe only when you _also_ use the lock itself to do locking across CPU's, which implies that EVERYTHING that touches a shared variable has to agree about the spinlock they want to use.
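The rule can be tried out in user space. The following is a minimal sketch, assuming POSIX threads are available; it uses pthread_spin_lock rather than the kernel API, and the names counter_lock, worker, and run_counter_demo are hypothetical. Every thread that touches counter takes the same lock, so no increment is lost.

```c
#define _POSIX_C_SOURCE 200112L
#include <assert.h>
#include <pthread.h>

#define NTHREADS 4
#define NITERS   100000

static pthread_spinlock_t counter_lock; /* the one lock every accessor agrees on */
static long counter;                    /* shared variable guarded by counter_lock */

static void *worker(void *arg)
{
    (void)arg;
    for (int i = 0; i < NITERS; i++) {
        pthread_spin_lock(&counter_lock);
        counter++;                      /* critical section */
        pthread_spin_unlock(&counter_lock);
    }
    return NULL;
}

/* Starts NTHREADS workers, waits for them, and returns the final counter. */
long run_counter_demo(void)
{
    pthread_t tid[NTHREADS];
    int rc;

    counter = 0;
    rc = pthread_spin_init(&counter_lock, PTHREAD_PROCESS_PRIVATE);
    assert(rc == 0);
    for (int i = 0; i < NTHREADS; i++) {
        rc = pthread_create(&tid[i], NULL, worker, NULL);
        assert(rc == 0);
    }
    for (int i = 0; i < NTHREADS; i++)
        pthread_join(tid[i], NULL);
    pthread_spin_destroy(&counter_lock);
    return counter;
}
```

If even one code path incremented counter without taking counter_lock, the final value could fall short of NTHREADS * NITERS.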

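In Linux 2.6.15.5, the spinlock data structure is as follows:

raw_spinlock_t is a structure containing the slock field only when CONFIG_SMP is configured. slock records whether the lock is free and is what arbitrates concurrent lock requests from multiple CPUs. Without CONFIG_SMP there is a single CPU, concurrent lock requests cannot occur, and raw_lock is an empty structure.

spinlock_t has the break_lock field only when both CONFIG_SMP and CONFIG_PREEMPT are configured. break_lock marks contention on the lock: break_lock = 0 means no other execution path is waiting for the lock, and break_lock = 1 means another process is busy-waiting for it. On an SMP system a CPU may fail to obtain the lock and spin. Because the kernel must ensure that a path holding a spinlock is not preempted, kernel preemption has to be disabled while the lock is held when CONFIG_PREEMPT is configured.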
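The layout described above might be sketched as follows. This is a reconstruction from the description, not a copy of the kernel headers: debugging members are left out, both configuration macros are simply assumed to be set, and spinlock_has_waiter is a hypothetical helper added only for illustration.

```c
#include <assert.h>

/* Assumed configuration for this sketch: SMP kernel with preemption. */
#define CONFIG_SMP 1
#define CONFIG_PREEMPT 1

#ifdef CONFIG_SMP
typedef struct {
    volatile unsigned int slock;   /* lock state word */
} raw_spinlock_t;
#else
typedef struct { } raw_spinlock_t; /* UP: no state needed (GNU C empty struct) */
#endif

typedef struct {
    raw_spinlock_t raw_lock;
#if defined(CONFIG_SMP) && defined(CONFIG_PREEMPT)
    unsigned int break_lock;       /* nonzero: another path is busy-waiting */
#endif
    /* debugging members omitted */
} spinlock_t;

/* Hypothetical helper: 1 if a waiter has been flagged, 0 otherwise. */
static inline int spinlock_has_waiter(const spinlock_t *lock)
{
    assert(lock != 0);
    return lock->break_lock != 0;
}
```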

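| Function | Description |
| --- | --- |
| spin_lock_init(lock) | Initializes a spinlock to the unlocked state |
| spin_lock(lock) | Acquires the spinlock; returns on success, otherwise spins until the lock becomes free |
| spin_unlock(lock) | Releases the spinlock, returning it to the unlocked state |
| spin_is_locked(lock) | Tests whether the spinlock is currently locked |
| spin_unlock_wait(lock) | Spins until the spinlock becomes available |
| spin_trylock(lock) | Tries to acquire the spinlock; returns 0 on failure, otherwise acquires it and returns 1 |
| spin_can_lock(lock) | Tests whether the spinlock is free |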

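The relationship between spin_lock and spin_unlock is as follows:

As can be seen, on a UP system no real lock is needed to guard against other CPUs, so the spin operations merely disable and re-enable kernel preemption.

In Linux 2.6.35, the version analyzed here, the spinlock is implemented as a ticket lock (ticket spinlocks were introduced in 2.6.25). Apart from members used for kernel debugging, the spinlock structure has a single field: struct raw_spinlock rlock.

The ticket spinlock splits the lock word inside rlock into the following two parts:

Next is the next ticket number to hand out, and Owner is the ticket number currently allowed to hold the lock. To lock, a CPU takes the current value of Next as its own ticket and increments rlock.Next. It then compares its ticket with Owner: if they are equal, the lock is acquired; otherwise it spins until rlock.Owner equals its ticket. Unlocking simply increments Owner.

The call relationship of spin_lock and spin_unlock is as follows:

Source Code Analysis of Ordinary Spinlocks

Relationship diagram of the source files

/include/linux/spinlock.h uses CONFIG_SMP to decide which spinlock definitions and operations to pull in: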

/*
 * include/linux/spinlock.h - generic spinlock/rwlock declarations
 *
 * here's the role of the various spinlock/rwlock related include files:
 *
 * on SMP builds:
 *
 *  asm/spinlock_types.h: contains the arch_spinlock_t/arch_rwlock_t and the
 *                        initializers
 *
 *  linux/spinlock_types.h:
 *                        defines the generic type and initializers
 *
 *  asm/spinlock.h:       contains the arch_spin_*()/etc. lowlevel
 *                        implementations, mostly inline assembly code
 *
 *  linux/spinlock_api_smp.h:
 *                        contains the prototypes for the _spin_*() APIs.
 *
 *  linux/spinlock.h:     builds the final spin_*() APIs.
 *
 * on UP builds:
 *
 *  linux/spinlock_types_up.h:
 *                        contains the generic, simplified UP spinlock type.
 *                        (which is an empty structure on non-debug builds)
 *
 *  linux/spinlock_types.h:
 *                        defines the generic type and initializers
 *
 *  linux/spinlock_up.h:
 *                        contains the arch_spin_*()/etc. version of UP
 *                        builds. (which are NOPs on non-debug, non-preempt
 *                        builds)
 *
 *   (included on UP-non-debug builds:)
 *
 *  linux/spinlock_api_up.h:
 *                        builds the _spin_*() APIs.
 *
 *  linux/spinlock.h:     builds the final spin_*() APIs.
 */

/*
 * Pull the arch_spin*() functions/declarations (UP-nondebug doesnt need them):
 */
#ifdef CONFIG_SMP
# include <asm/spinlock.h>
#else
# include <linux/spinlock_up.h>
#endif

typedef struct spinlock {
    union {
        struct raw_spinlock rlock;
        /* members used only for kernel debugging omitted */
    };
} spinlock_t;

static inline void spin_lock(spinlock_t *lock)
{
    raw_spin_lock(&lock->rlock);
}

#define raw_spin_lock(lock)    _raw_spin_lock(lock)

static inline void spin_unlock(spinlock_t *lock)
{
    raw_spin_unlock(&lock->rlock);
}

#define raw_spin_unlock(lock)  _raw_spin_unlock(lock)

UP Architecture

On a UP system, spin_lock is ultimately implemented as:

/include/linux/spinlock_api_up.h

/*
 * In the UP-nondebug case there's no real locking going on, so the
 * only thing we have to do is to keep the preempt counts and irq
 * flags straight, to suppress compiler warnings of unused lock
 * variables, and to add the proper checker annotations:
 */
#define __LOCK(lock) \
  do { preempt_disable(); __acquire(lock); (void)(lock); } while (0)

#define _raw_spin_lock(lock)    __LOCK(lock)

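preempt_disable is an empty function when CONFIG_PREEMPT is not configured; otherwise it disables kernel preemption. __acquire() is used for static checking during kernel compilation. (void)(lock) keeps the compiler from warning that lock is unused.

On a UP system, spin_unlock is ultimately implemented as:

#define __UNLOCK(lock) \
  do { preempt_enable(); __release(lock); (void)(lock); } while (0)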
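To make the UP behaviour concrete, here is a small user-space model. It is only a sketch: preempt_count, model_preempt_disable, model_preempt_enable, UP_LOCK, UP_UNLOCK, and up_spinlock_t are hypothetical stand-ins for the kernel's preemption counter and the __LOCK / __UNLOCK macros, and the checker annotations are dropped because they generate no code.

```c
#include <assert.h>

static int preempt_count;   /* hypothetical stand-in for the kernel's counter */

static void model_preempt_disable(void)
{
    preempt_count++;
}

static void model_preempt_enable(void)
{
    assert(preempt_count > 0);  /* every enable must match a disable */
    preempt_count--;
}

/* Model of __LOCK / __UNLOCK: no lock word is touched at all. */
#define UP_LOCK(lock) \
    do { model_preempt_disable(); (void)(lock); } while (0)
#define UP_UNLOCK(lock) \
    do { model_preempt_enable(); (void)(lock); } while (0)

typedef struct { char unused; } up_spinlock_t;  /* placeholder lock type */

/* Current nesting depth of "preemption disabled" sections. */
int up_preempt_depth(void)
{
    return preempt_count;
}
```

Locking raises the depth to 1 and unlocking returns it to 0; the lock variable itself is never read or written, which mirrors the point that UP builds have no real lock.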

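SMP Architecture: Ticket Spin Lock Implementation

In Linux 2.6.24, a spinlock is represented by a single integer. A value of 1 means the lock is free, and each spin_lock() decrements it by 1, so a value <= 0 means the lock is held, with negative values indicating CPUs that are busy-waiting. Nothing orders the waiters, however, so this scheme is unfair. Starting with Linux 2.6.25, the spinlock is a 16-bit value split into two 8-bit fields, laid out as follows: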

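This mechanism is called "Ticket spinlocks". The Next byte is the ticket number that will be handed to the next lock request, and Owner is the ticket number currently allowed to take the lock. Both are initialized to 0, and lock.Next = lock.Owner means the lock is free. spin_lock performs the following steps:

1. my_ticket = slock.next

2. slock.next++

3. wait until my_ticket = slock.owner

spin_unlock performs the following step:

1. slock.owner++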
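The steps above can be sketched in portable C11. This is an illustrative model, not the kernel's code: it keeps Next and Owner in two separate atomic bytes instead of one 16-bit word, and ticket_lock_t and the four functions are names chosen for this example.

```c
#include <assert.h>
#include <stdatomic.h>

typedef struct {
    atomic_uchar next;   /* next ticket number to hand out */
    atomic_uchar owner;  /* ticket number currently allowed to hold the lock */
} ticket_lock_t;

static void ticket_lock_init(ticket_lock_t *l)
{
    assert(l != 0);
    atomic_init(&l->next, 0);
    atomic_init(&l->owner, 0);
}

static void ticket_lock(ticket_lock_t *l)
{
    /* Steps 1 and 2 as a single atomic fetch-and-increment. */
    unsigned char my_ticket = atomic_fetch_add(&l->next, 1);

    /* Step 3: spin until our ticket is being served. */
    while (atomic_load(&l->owner) != my_ticket)
        ;
}

static void ticket_unlock(ticket_lock_t *l)
{
    /* Serve the next ticket; 8-bit arithmetic wraps from 255 to 0. */
    atomic_fetch_add(&l->owner, 1);
}

/* The lock is free exactly when Next == Owner. */
static int ticket_is_free(ticket_lock_t *l)
{
    return atomic_load(&l->next) == atomic_load(&l->owner);
}
```

Because tickets are served strictly in the order they were taken, waiters acquire the lock first come, first served, which is the fairness property the older scheme lacked.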

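This layout has a limitation: with 8-bit fields, at most 255 CPUs can contend for the lock. The kernel therefore uses conditional compilation on NR_CPUS to provide two versions of __ticket_spin_lock and __ticket_spin_unlock: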

#if (NR_CPUS < 256)
#define TICKET_SHIFT 8
...
#else
#define TICKET_SHIFT 16
...
#endif

SMP Architecture: SPIN LOCK (TICKET_SHIFT 8)

#ifdef CONFIG_INLINE_SPIN_LOCK
#define _raw_spin_lock(lock) __raw_spin_lock(lock)
#endif

/include/linux/spinlock_api_smp.h:

static inline void __raw_spin_lock(raw_spinlock_t *lock)
{
    preempt_disable();
    spin_acquire(&lock->dep_map, 0, 0, _RET_IP_);
    LOCK_CONTENDED(lock, do_raw_spin_trylock, do_raw_spin_lock);
}

__raw_spin_lock first disables kernel preemption and then invokes the LOCK_CONTENDED macro:

#define LOCK_CONTENDED(_lock, try, lock)                    \
do {                                                        \
    if (!try(_lock)) {                                      \
        lock_contended(&(_lock)->dep_map, _RET_IP_);        \
        lock(_lock);                                        \
    }                                                       \
    lock_acquired(&(_lock)->dep_map, _RET_IP_);             \
} while (0)

In other words, _raw_spin_lock first calls do_raw_spin_trylock to attempt the lock and, if that fails, goes on to call do_raw_spin_lock. The actual implementation of the do_raw_spin_xxx functions is platform dependent.
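The try-then-lock pattern can be illustrated with a single-threaded model. Everything here is hypothetical (model_lock_t, model_trylock, model_lock_slow, MODEL_LOCK_CONTENDED); the lockdep bookkeeping is replaced by a plain counter, and the slow path only records that it ran, whereas a real slow path would spin until the holder releases the lock.

```c
#include <assert.h>

typedef struct {
    int held;        /* 1 while the model lock is held */
    int contended;   /* how many times the slow path was taken */
} model_lock_t;

/* Fast path: take the lock only if it is free; 1 on success, 0 on failure. */
static int model_trylock(model_lock_t *l)
{
    assert(l != 0);
    if (l->held)
        return 0;
    l->held = 1;
    return 1;
}

/* Slow path of the model: records the contention event and marks the lock
 * held. A real implementation would busy-wait here until the lock is free. */
static void model_lock_slow(model_lock_t *l)
{
    l->contended++;
    l->held = 1;
}

/* Same shape as LOCK_CONTENDED: try first, fall back only on failure. */
#define MODEL_LOCK_CONTENDED(_lock, try, lock) \
    do {                                        \
        if (!try(_lock))                        \
            lock(_lock);                        \
    } while (0)

static void model_spin_lock(model_lock_t *l)
{
    MODEL_LOCK_CONTENDED(l, model_trylock, model_lock_slow);
}
```

On an uncontended lock only the cheap trylock runs; the slow path is entered, and counted, only when the first attempt fails.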

/include/linux/spinlock.h

static inline void do_raw_spin_lock(raw_spinlock_t *lock) __acquires(lock)
{
    __acquire(lock);
    arch_spin_lock(&lock->raw_lock);
}

static inline int do_raw_spin_trylock(raw_spinlock_t *lock)
{
    return arch_spin_trylock(&(lock)->raw_lock);
}

On x86, do_raw_spin_lock and do_raw_spin_trylock are implemented through the following two functions:

/arch/x86/include/asm/spinlock.h

static __always_inline void arch_spin_lock(arch_spinlock_t *lock)
{
    __ticket_spin_lock(lock);
}

static __always_inline int arch_spin_trylock(arch_spinlock_t *lock)
{
    return __ticket_spin_trylock(lock);
}

058 #if (NR_CPUS < 256)
059 #define TICKET_SHIFT 8
061 static __always_inline void __ticket_spin_lock(arch_spinlock_t *lock)
062 {
063     short inc = 0x0100;
064
065     asm volatile (
066         LOCK_PREFIX "xaddw %w0, %1\n"
067         "1:\t"
068         "cmpb %h0, %b0\n\t"
069         "je 2f\n\t"
070         "rep ; nop\n\t"
071         "movb %1, %b0\n\t"
072         /* don't need lfence here, because loads are in-order */
073         "jmp 1b\n"
074         "2:"
075         : "+Q" (inc), "+m" (lock->slock)
076         :
077         : "memory", "cc");
078 }

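Line 066: LOCK_PREFIX is defined as empty on UP and as the lock prefix on SMP. The prefix makes the xaddw on line 066 an atomic read-modify-write with respect to all other CPUs; it does not make lines 066 through 074 atomic as a whole. xaddw works as follows:

xaddw src, dst ==
    tmp = dst
    dst = dst + src
    src = tmp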

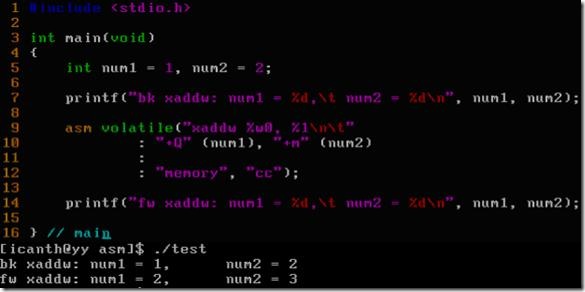

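Experiment verifying the XADDW semantics:

xaddw exchanges and adds %0 and %1 as one 16-bit word: %0 (inc) receives the old value of slock, and %1 (slock) becomes slock + 0x0100, which increments Next in its high byte. xaddw changes the contents of %0 and %1 as follows: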
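The exchange-and-add behaviour can be checked with a plain C model. This sketch is not atomic and is not the kernel's code; xaddw_model and take_ticket are hypothetical names, and the layout (Next in the high byte, Owner in the low byte) follows the description in this article.

```c
#include <assert.h>
#include <stdint.h>

/* Model of "xaddw src, dst": dst receives the sum, src receives the old dst.
 * The real instruction does this atomically when it carries a lock prefix. */
static void xaddw_model(uint16_t *src, uint16_t *dst)
{
    uint16_t tmp;

    assert(src != 0 && dst != 0);
    tmp = *dst;
    *dst = (uint16_t)(*dst + *src);
    *src = tmp;
}

/* Takes a ticket from slock and returns the lock word as it was before the
 * increment: high byte = the caller's ticket, low byte = Owner at that time. */
static uint16_t take_ticket(uint16_t *slock)
{
    uint16_t inc = 0x0100;

    xaddw_model(&inc, slock);
    return inc;
}
```

Starting from slock = 0x0000, the first caller gets back 0x0000 (ticket 0, Owner 0, so it holds the lock) and slock becomes 0x0100. A second caller gets back 0x0100 (ticket 1, Owner 0, so it must wait) and slock becomes 0x0200.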

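Line 068: compares the ticket this CPU took (the high byte of inc) with Owner (the low byte). If they are equal, the CPU holds the lock and leaves the loop.

Lines 070 to 073: if Owner is not yet this CPU's ticket, execute a pause (rep; nop), re-read Owner from slock, and jump back to line 068 to test again.

Why use the LOCK_PREFIX macro instead of writing the lock prefix directly? The goal is to avoid the cost of executing lock when a kernel built with CONFIG_SMP actually runs on a UP machine. The kernel builds an SMP alternatives table in the .smp_locks section that holds a pointer to every lock prefix in the kernel. At run time, when going from SMP to UP, the kernel can walk this table and hot-patch each lock prefix into a nop. The reverse switch from UP to SMP at run time is also possible. For a concrete application, see the reference "Linux 内核 LOCK_PREFIX 的含义" (The meaning of LOCK_PREFIX in the Linux kernel).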

009 /*
010  * Alternative inline assembly for SMP.
011  *
012  * The LOCK_PREFIX macro defined here replaces the LOCK and
013  * LOCK_PREFIX macros used everywhere in the source tree.
014  *
015  * SMP alternatives use the same data structures as the other
016  * alternatives and the X86_FEATURE_UP flag to indicate the case of a
017  * UP system running a SMP kernel. The existing apply_alternatives()
018  * works fine for patching a SMP kernel for UP.
019  *
020  * The SMP alternative tables can be kept after boot and contain both
021  * UP and SMP versions of the instructions to allow switching back to
022  * SMP at runtime, when hotplugging in a new CPU, which is especially
023  * useful in virtualized environments.
024  *
025  * The very common lock prefix is handled as special case in a
026  * separate table which is a pure address list without replacement ptr
027  * and size information. That keeps the table sizes small.
028  */
029
030 #ifdef CONFIG_SMP
031 #define LOCK_PREFIX_HERE \
032     ".section .smp_locks,\"a\"\n" \
033     ".balign 4\n" \
034     ".long 671f - .\n" /* offset */ \
035     ".previous\n" \
036     "671:"
037
038 #define LOCK_PREFIX LOCK_PREFIX_HERE "\n\tlock; "
039
040 #else /* ! CONFIG_SMP */
041 #define LOCK_PREFIX_HERE ""
042 #define LOCK_PREFIX ""
043 #endif

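Line 032, ".section .smp_locks,\"a\"", places what follows in the .smp_locks section; the "a" flag marks the section as allocatable.

Lines 033 and 034, ".balign 4" and ".long 671f - .", align to 4 bytes and store in .smp_locks the offset from the current position to label 671. Label 671 is the address of the lock prefix in the code section, so each table entry is in effect a pointer to a lock instruction.

Line 035, the ".previous" directive, switches back to the previous section, that is, the code section. Line 038 therefore emits the lock prefix in the code section.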
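How such a table of relative offsets could drive the patching is sketched below. This is a user-space model under stated assumptions, not the kernel's patching code: struct image, record_lock, entry_target, and patch_locks are hypothetical, and the replacement byte is a one-byte nop (0x90) because that is what the text above describes.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

#define LOCK_BYTE 0xF0u  /* x86 lock prefix */
#define NOP_BYTE  0x90u  /* x86 one-byte nop */

/* Hypothetical "kernel image": some code bytes plus the .smp_locks table. */
struct image {
    uint8_t text[8];
    int32_t smp_locks[2];
};

/* Record one lock prefix: store (target - entry), like ".long 671f - .". */
static void record_lock(int32_t *entry, uint8_t *target)
{
    *entry = (int32_t)(target - (uint8_t *)entry);
}

/* Turn a table entry back into the address it refers to. */
static uint8_t *entry_target(int32_t *entry)
{
    return (uint8_t *)entry + *entry;
}

/* Overwrite every recorded lock-prefix location with `byte`. */
static void patch_locks(int32_t *table, size_t n, uint8_t byte)
{
    for (size_t i = 0; i < n; i++) {
        uint8_t *p = entry_target(&table[i]);

        assert(*p == LOCK_BYTE || *p == NOP_BYTE);
        *p = byte;
    }
}
```

Calling patch_locks(table, n, NOP_BYTE) models the SMP to UP switch, and calling it again with LOCK_BYTE models the switch back. Storing offsets relative to the entry, rather than absolute addresses, keeps the table valid wherever the image is loaded.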

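LOCK_CONTENDED first tries to take the lock with __ticket_spin_trylock and falls back to __ticket_spin_lock only if that fails. The reasons for not calling __ticket_spin_lock directly are:

trylock does not modify the ticket in lock.slock up front. It performs a double check: 1) it reads slock and tests whether the lock is free; 2) it then executes a LOCK-prefixed atomic operation that checks whether another CPU has changed slock in the meantime. If slock is unchanged, it takes the lock and increments lock.slock.Next.

spin_lock always begins with a LOCK-prefixed atomic operation to claim a ticket, whereas trylock first tests slock.Next == slock.Owner, which reduces how often the LOCK-prefixed second comparison has to run.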

080 static __always_inline int __ticket_spin_trylock(arch_spinlock_t *lock)
081 {
082     int tmp, new;
083
084     asm volatile("movzwl %2, %0\n\t"
085         "cmpb %h0,%b0\n\t"
086         "leal 0x100(%" REG_PTR_MODE "0), %1\n\t"
087         "jne 1f\n\t"
088         LOCK_PREFIX "cmpxchgw %w1,%2\n\t"
089         "1:"
090         "sete %b1\n\t"
091         "movzbl %b1,%0\n\t"
092         : "=&a" (tmp), "=&q" (new), "+m" (lock->slock)
093         :
094         : "memory", "cc");
095
096     return tmp;
097 }

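Line 084 loads the value of lock.slock into tmp.

Line 085 compares tmp.Next with tmp.Owner to test whether the lock is currently free.

Line 086: leal (load effective address) uses the same addressing syntax as movl. For example, "leal 0x10(%eax, %eax, 3), %edx" computes %edx = 0x10 + %eax + %eax * 3. Unlike movl, leal does not read memory; it only computes the address from the registers. Depending on REG_PTR_MODE, line 086 expands to "leal 0x100(%k0), %1" on 32-bit x86 and to "leal 0x100(%q0), %1" on x86_64. Ignoring the "k" or "q" operand modifier, the line is equivalent to:

"%1 = %0 + 0x100", so that new = { tmp.Next + 1, tmp.Owner }.

Line 087: if tmp.Next != tmp.Owner, the lock is not free, so jump to line 089; the sete and movzbl that follow then set tmp to 0, which is returned.

Line 088 atomically executes cmpxchgw to detect whether another CPU has modified lock.slock since it was read. If there is a competitor the attempt fails; otherwise the lock is acquired and Next is incremented, all as one atomic operation. cmpxchg is described as follows:

Compares the accumulator (8-32 bits) with "dest". If equal the "dest" is loaded with "src", otherwise the accumulator is loaded with "dest". (On IA-32, %EAX is the accumulator.)

So "cmpxchgw %w1, %2" is equivalent to:

"tmp == lock.slock ? lock.slock = new : tmp = lock.slock"

If slock has not changed, lock.slock is updated to new, which in effect increments Next in slock.

Line 090 executes sete, which sets the lowest byte of new (%b1) to 1 if the Zero Flag is set and to 0 otherwise. The Zero Flag is set only when cmpxchgw succeeded; it is clear when the cmpb on line 085 found the lock busy or when cmpxchgw failed. sete is described as:

Sets the byte in the operand to 1 if the Zero Flag is set, otherwise sets the operand to 0.

Line 091: movzbl (move with zero-extend, byte to long) copies %b1 into tmp and zeroes the remaining bits, so tmp becomes 0 or 1.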
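The same logic can be expressed with C11 atomics. This is a sketch that mirrors the assembly step by step rather than the kernel's own code; ticket_trylock_model is a hypothetical name, and the 16-bit word is assumed to hold Next in the high byte and Owner in the low byte as described above.

```c
#include <assert.h>
#include <stdatomic.h>
#include <stdint.h>

/* Returns 1 and takes the lock if it is free, otherwise returns 0. */
static int ticket_trylock_model(_Atomic uint16_t *slock)
{
    uint16_t tmp, new_val;

    assert(slock != 0);
    tmp = atomic_load(slock);                    /* line 084: read slock */

    if ((uint8_t)(tmp >> 8) != (uint8_t)tmp)     /* lines 085/087: Next != Owner */
        return 0;                                /* busy: no locked instruction */

    new_val = (uint16_t)(tmp + 0x0100);          /* line 086: Next + 1 */

    /* line 088: store new_val only if slock still equals tmp */
    return atomic_compare_exchange_strong(slock, &tmp, new_val) ? 1 : 0;
}
```

When the lock is busy the function returns after an ordinary load and never issues the locked compare-and-exchange, which is the saving the two-step check is meant to provide.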

SMP Architecture: SPIN UNLOCK (TICKET_SHIFT 8)

/include/linux/spinlock_api_smp.h

#ifdef CONFIG_INLINE_SPIN_UNLOCK
#define _raw_spin_unlock(lock) __raw_spin_unlock(lock)
#endif

static inline void __raw_spin_unlock(raw_spinlock_t *lock)
{
    spin_release(&lock->dep_map, 1, _RET_IP_);
    do_raw_spin_unlock(lock);
    preempt_enable();
}

spin_unlock thus ultimately calls do_raw_spin_unlock to release the spinlock.

/include/linux/spinlock.h

static inline void do_raw_spin_unlock(raw_spinlock_t *lock) __releases(lock)
{
    arch_spin_unlock(&lock->raw_lock);
    __release(lock);
}

On x86 (IA-32), arch_spin_unlock is implemented as follows:

/arch/x86/include/asm/spinlock.h

static __always_inline void arch_spin_unlock(arch_spinlock_t *lock)
{
    __ticket_spin_unlock(lock);
}

058 #if (NR_CPUS < 256)
059 #define TICKET_SHIFT 8
099 static __always_inline void __ticket_spin_unlock(arch_spinlock_t *lock)
100 {
101     asm volatile(UNLOCK_LOCK_PREFIX "incb %0"
102         : "+m" (lock->slock)
103         :
104         : "memory", "cc");
105 }

Line 101 increments the Owner field of lock->slock, which lets the CPU holding the next ticket take the lock.
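One detail worth illustrating is that incb touches only one byte, so Owner wraps from 0xFF to 0x00 without carrying into Next. The following non-atomic model shows this; ticket_unlock_model is a hypothetical name, and the layout (Owner in the low byte, Next in the high byte) follows this article's description.

```c
#include <assert.h>
#include <stdint.h>

/* Model of "incb" on the low byte of slock: Owner + 1, no carry into Next. */
static uint16_t ticket_unlock_model(uint16_t slock)
{
    uint8_t owner = (uint8_t)(slock & 0xFF);
    uint8_t next = (uint8_t)(slock >> 8);
    uint16_t result;

    owner = (uint8_t)(owner + 1);            /* wraps 0xFF -> 0x00 */
    result = (uint16_t)((next << 8) | owner);
    assert((result >> 8) == next);           /* Next is left untouched */
    return result;
}
```

A 16-bit increment of 0x02FF would produce 0x0300 and corrupt Next, whereas the byte-wide increment yields 0x0200.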

#if defined(CONFIG_X86_32) && \
    (defined(CONFIG_X86_OOSTORE) || defined(CONFIG_X86_PPRO_FENCE))
/*
 * On PPro SMP or if we are using OOSTORE, we use a locked operation to unlock
 * (PPro errata 66, 92)
 */
# define UNLOCK_LOCK_PREFIX LOCK_PREFIX
#else
# define UNLOCK_LOCK_PREFIX
#endif

References

Spinlocks

"spinlocks.txt", /Documentation/spinlocks.txt

"Ticket spinlocks", http://lwn.net/Articles/267968/

"Linux x86 spinlock实现之分析" (Analysis of the Linux x86 spinlock implementation), http://blog.csdn.net/david_henry/article/details/5405093

"Linux 内核 LOCK_PREFIX 的含义" (The meaning of LOCK_PREFIX in the Linux kernel), http://blog.csdn.net/ture010love/article/details/7663008

"The Intel 8086 / 8088/ 80186 / 80286 / 80386 / 80486 Instruction Set", http://zsmith.co/intel.html